Camera-Based Indoor Positioning System for the Creation of Digital Shadows of Plant Layouts

Abstract

1. Introduction

2. Related Work

2.1. Previous Work by Other Researchers

2.2. Research Gaps, Shortcomings, and Limitations

3. Proposed System

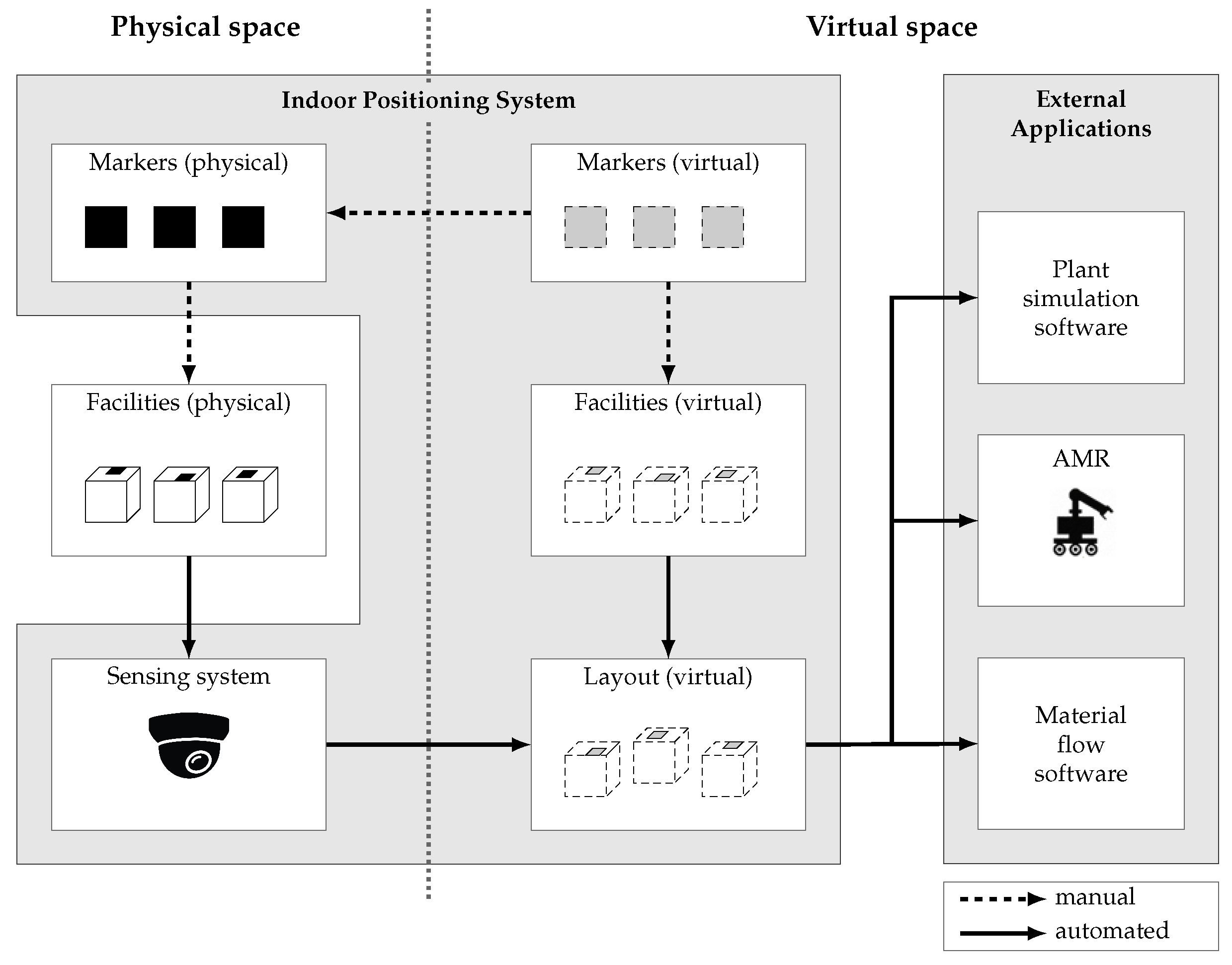

3.1. Indoor Positioning System Architecture

3.2. Sensor System Components

- Measure marks: The markers that are attached to the individual facilities to determine their pose and identity;

- Landmarks: The markers that are attached to fixed, known positions in the plant coordinate system and serve as a reference.
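The two marker roles listed above can be captured in a small data model. The following is a minimal sketch, not the authors' implementation: the names `MarkerRole`, `Marker`, and `split_by_role`, the field names, and the example IDs, coordinates, and facility name are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class MarkerRole(Enum):
    MEASURE_MARK = "measure_mark"  # attached to a facility; pose to be determined
    LANDMARK = "landmark"          # fixed, known position; serves as reference


@dataclass(frozen=True)
class Marker:
    """Hypothetical record for one marker seen by the sensor system."""
    marker_id: int
    role: MarkerRole
    # Known position in the plant coordinate system (landmarks only).
    plant_position: Optional[Tuple[float, float, float]] = None
    # Name of the facility the marker is attached to (measure marks only).
    facility: Optional[str] = None

    def __post_init__(self) -> None:
        # Enforce the role-specific requirements described in the list above.
        if self.role is MarkerRole.LANDMARK and self.plant_position is None:
            raise ValueError("a landmark needs a known plant position")
        if self.role is MarkerRole.MEASURE_MARK and self.facility is None:
            raise ValueError("a measure mark must belong to a facility")


def split_by_role(markers: List[Marker]) -> Tuple[List[Marker], List[Marker]]:
    """Separate reference landmarks from measure marks."""
    landmarks = [m for m in markers if m.role is MarkerRole.LANDMARK]
    measure_marks = [m for m in markers if m.role is MarkerRole.MEASURE_MARK]
    return landmarks, measure_marks


# Illustrative values only; none of these come from the paper.
markers = [
    Marker(0, MarkerRole.LANDMARK, plant_position=(0.0, 0.0, 0.0)),
    Marker(1, MarkerRole.LANDMARK, plant_position=(5.0, 0.0, 0.0)),
    Marker(17, MarkerRole.MEASURE_MARK, facility="workbench_A"),
]
landmarks, measure_marks = split_by_role(markers)
```

Keeping the known plant position on landmarks only mirrors the distinction drawn above: landmarks supply the reference, while the pose of a measure mark is what the system has to determine.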

3.3. Sensor System Overview

3.4. Determination of the Camera Pose

3.5. Determination of the Measure Mark Positions

3.6. Determination of the Measure Mark Orientation

3.7. Determination of the Facilities Poses

4. Materials and Methods

4.1. Calibration

4.2. Test Setup

4.3. Measurement Procedure

4.4. Measurement Results

5. Case Study Validation and Results

5.1. Digital Shadow of a Plant Layout from Different Perspectives

5.2. Use Case Scenario

5.3. Perspective of the Factory Planner

5.4. Perspective of the Material Flow Analyst

5.5. Perspective of the Route Planner

6. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layout Capture Method | Layout Recording Process | Transfer to Simulation Software | Total Time |
|---|---|---|---|
| Manual approach | 2.5 h | 5 min | ≈2.5 h |
| IPS | 6.5 min | 5 min | ≈12 min |

| AMR Setup Method | Map Generation | Drawing the No-Go Areas | Creating Source and Target Poses | Total Time |
|---|---|---|---|---|
| Manual approach | 20 min | 45 min | 10 min | ≈1.25 h |
| IPS | 6.5 min | 0 min | 0 min | ≈6.5 min |
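The totals in the two tables above can be re-derived from the individual steps, which also gives the relative time saving of the IPS over the manual approach. This is a small arithmetic sketch only: the step durations are copied from the tables, while the dictionary keys and the helper names `total_minutes` and `compare` are assumptions introduced here. Note that the exact manual total for layout capture is 155 min, which the table rounds to ≈2.5 h.

```python
# Step durations in minutes, copied from the two tables above.
layout_capture = {
    "Manual approach": {"recording": 150.0, "transfer": 5.0},  # 2.5 h = 150 min
    "IPS": {"recording": 6.5, "transfer": 5.0},
}
amr_setup = {
    "Manual approach": {"map": 20.0, "no_go_areas": 45.0, "poses": 10.0},
    "IPS": {"map": 6.5, "no_go_areas": 0.0, "poses": 0.0},
}


def total_minutes(steps):
    """Sum the durations of all steps of one method."""
    return sum(steps.values())


def compare(table):
    """Return (manual total, IPS total, relative reduction) for one table."""
    manual = total_minutes(table["Manual approach"])
    ips = total_minutes(table["IPS"])
    return manual, ips, 1.0 - ips / manual


for name, table in [("Layout capture", layout_capture), ("AMR setup", amr_setup)]:
    manual, ips, reduction = compare(table)
    print(f"{name}: manual {manual:.1f} min, IPS {ips:.1f} min, "
          f"reduction {reduction:.1%}")
```

Under these figures the IPS cuts the total time by more than 90% in both scenarios.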

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hermann, J.; von Leipzig, K.H.; Hummel, V.; Basson, A.H. Camera-Based Indoor Positioning System for the Creation of Digital Shadows of Plant Layouts. Sensors 2023, 23, 8845. https://doi.org/10.3390/s23218845