Deep Learning Based Fire Risk Detection on Construction Sites

Abstract

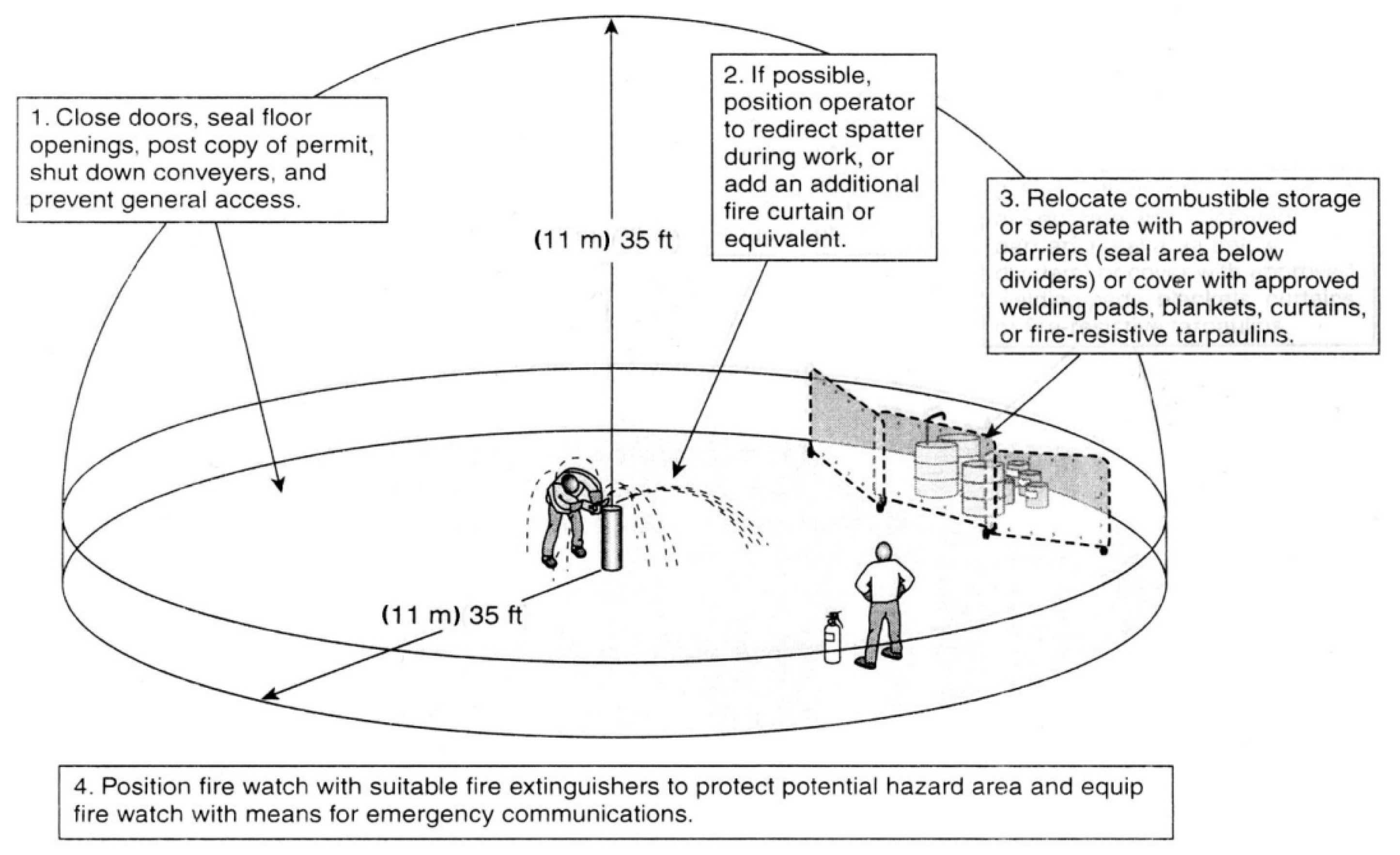

1. Introduction

2. Fire Incidents on Construction Sites in South Korea

3. Object Detection

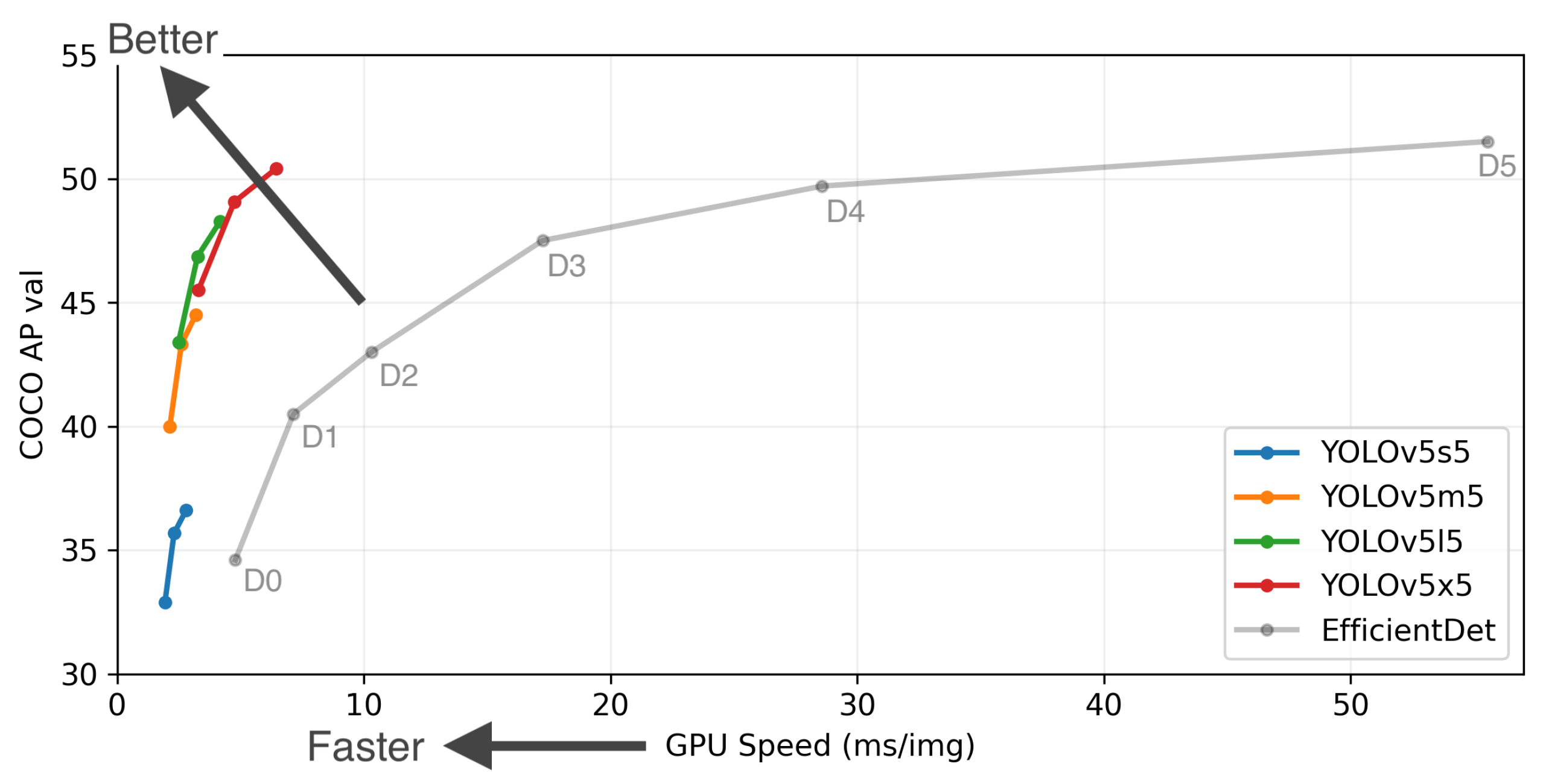

3.1. Yolov5

3.2. EfficientDet

4. Fire Risk Detection by Object Detection

4.1. Dataset Preparation

4.2. Image Labeling Approach

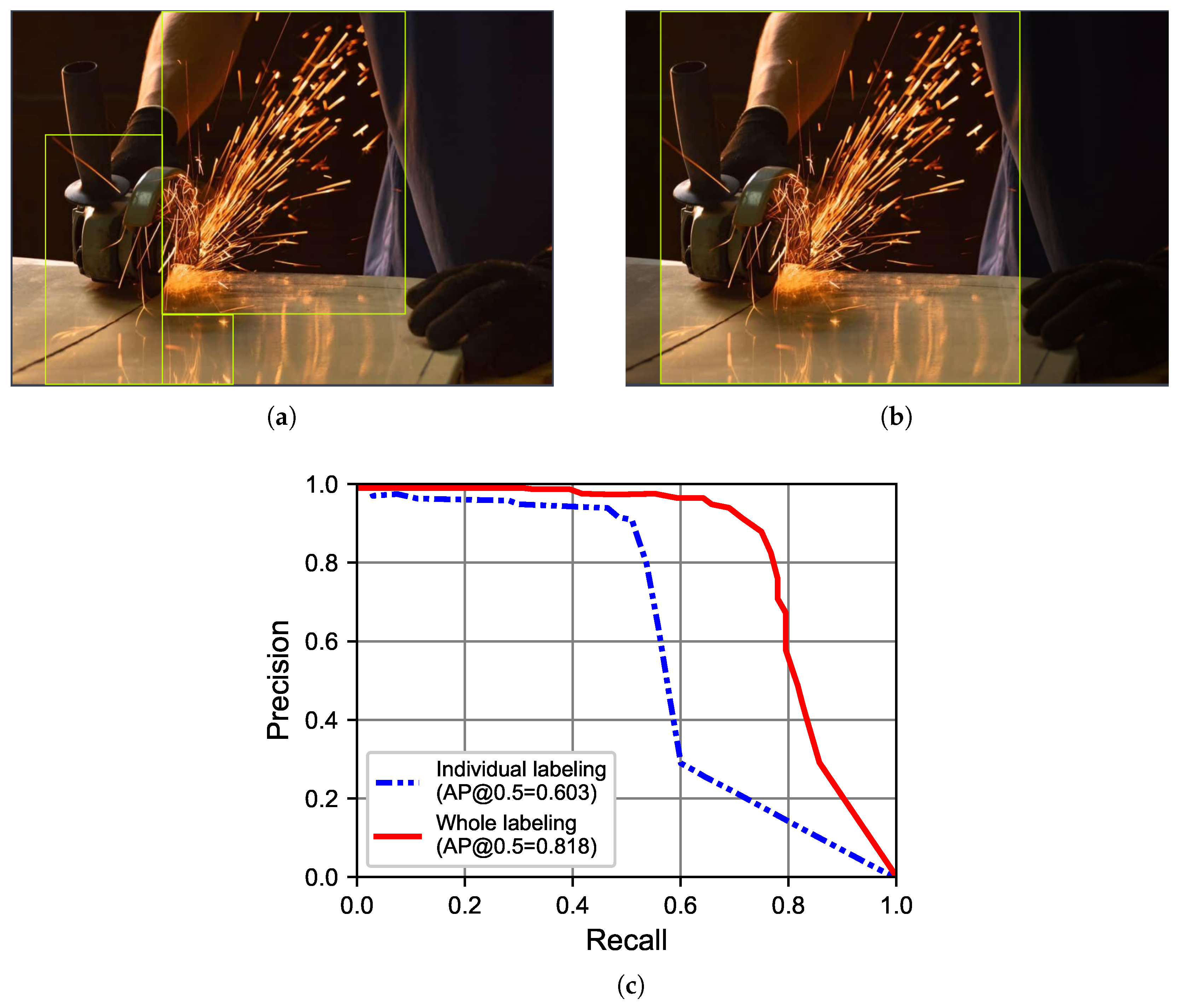

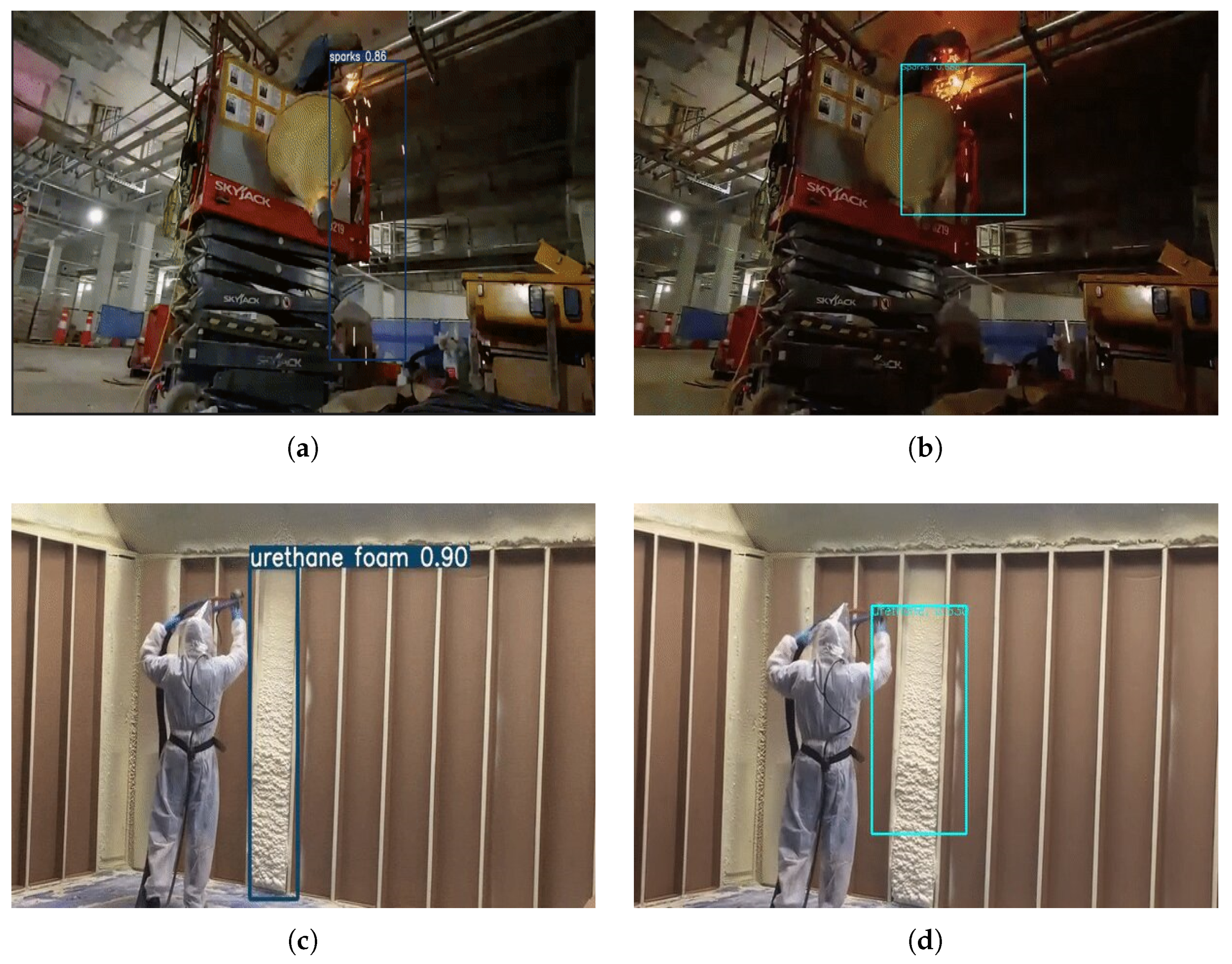

4.2.1. Sparks

4.2.2. Urethane Foam

4.2.3. Styrofoam

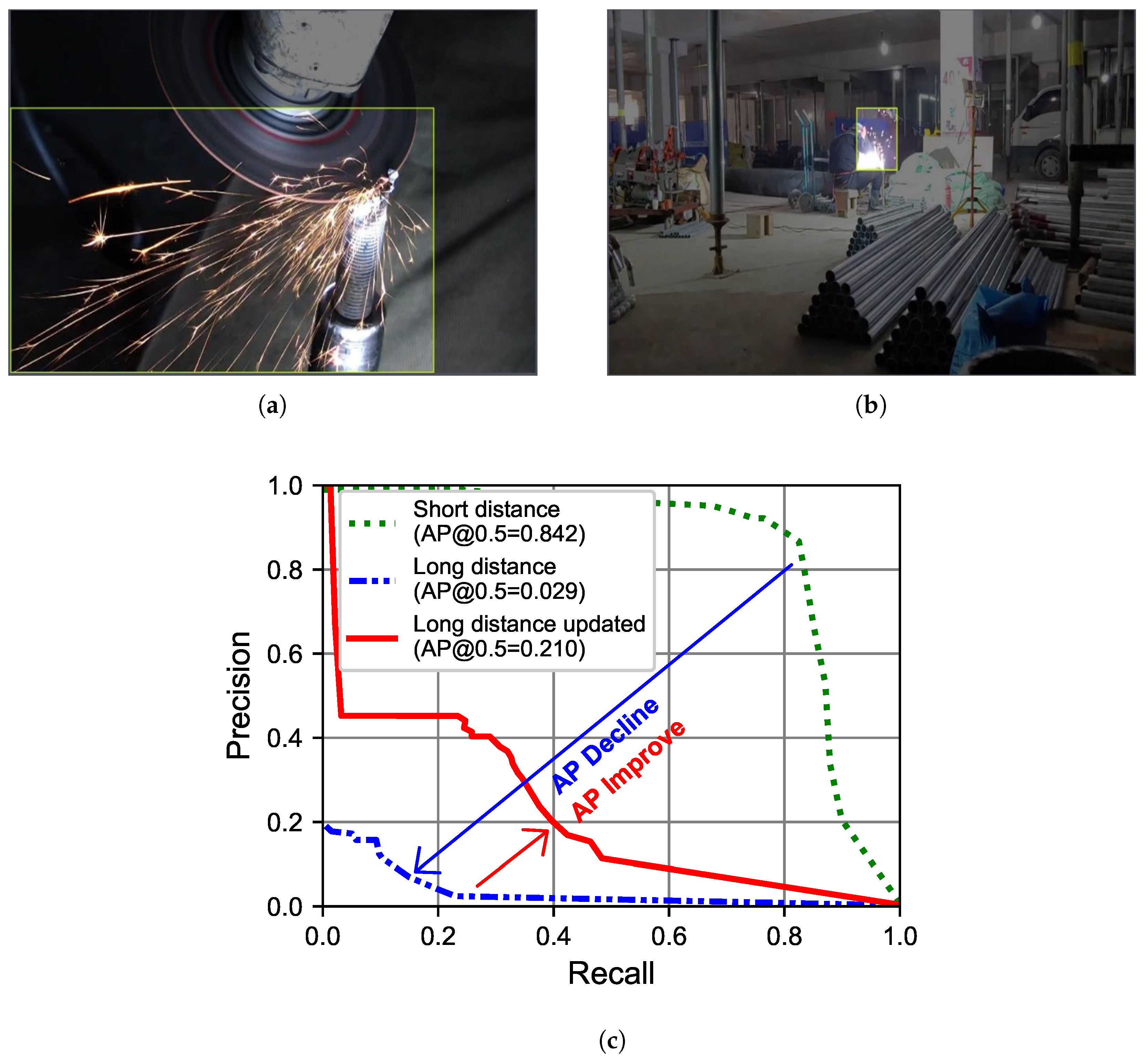

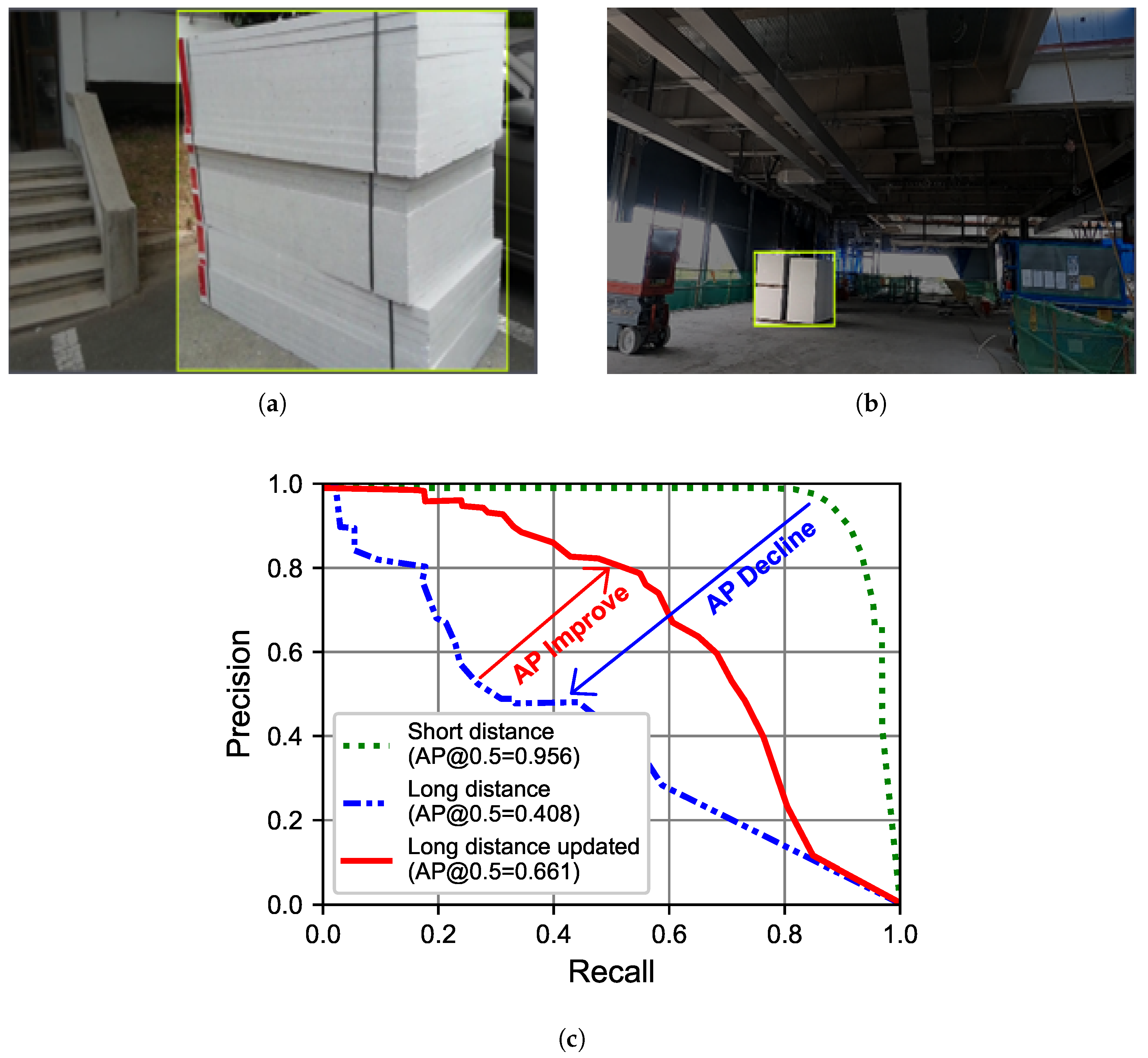

4.3. Long-Distance Object Detection

4.3.1. Sparks

4.3.2. Urethane Foam

4.3.3. Styrofoam

4.4. Performance of Yolov5 and EfficientDet

5. Conclusions

- Improved Labeling for Enhanced Performance: For fire risks such as sparks and urethane foam, the labeling approach that enclosed the entire object(s) in relatively large bounding box(es) yielded a higher mean average precision (mAP). For the given dataset, this labeling approach raised the detection mAP by around 15%.

- Improved Long-Distance Object Detection: Long-distance detection benefited from including images of diverse scenarios taken at varying distances in the dataset. Adding long-distance images notably improved the model's ability to detect fire risks, increasing the detection mAP by around 28% for the given dataset.

- Best Model for Fire Risk Detection: Yolov5 performed slightly better than EfficientDet on the given set of objects (sparks, urethane foam, and Styrofoam). Yolov5 was also found to be easier to train, as it did not require fine-tuning of hyperparameters such as the learning rate, the batch size, and the choice of optimization algorithm.
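The first conclusion concerns labels that enclose the whole object in one relatively large bounding box. The sketch below illustrates that idea only; it is not the authors' labeling procedure. It assumes, hypothetically, that an annotator has drawn several small boxes around fragments of one spark shower, merges them into a single enclosing box, and writes the result in the normalized `class x_center y_center width height` text format used by Yolov5 label files. The pixel coordinates, the image size, and the class index 0 for sparks are all made up for the example.

```python
from typing import Iterable, Tuple

# (x_min, y_min, x_max, y_max) in pixels
Box = Tuple[float, float, float, float]


def enclosing_box(boxes: Iterable[Box]) -> Box:
    """Return the smallest box that contains every input box."""
    boxes = list(boxes)
    if not boxes:
        raise ValueError("need at least one box")
    x_min = min(b[0] for b in boxes)
    y_min = min(b[1] for b in boxes)
    x_max = max(b[2] for b in boxes)
    y_max = max(b[3] for b in boxes)
    return (x_min, y_min, x_max, y_max)


def to_yolo_label(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Format a pixel box as a normalized Yolov5 label line."""
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / img_w   # box centre, as a fraction of image width
    cy = (y_min + y_max) / 2 / img_h   # box centre, as a fraction of image height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# Hypothetical fragment boxes drawn on a 640x480 image.
fragments = [(100, 200, 140, 240), (130, 220, 200, 300), (90, 260, 150, 320)]
merged = enclosing_box(fragments)
label = to_yolo_label(0, merged, img_w=640, img_h=480)
print(merged)
print(label)
```

Whether one large box or several small ones works better is an empirical question for each dataset; the roughly 15% gain quoted above is the paper's result for its own data.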

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- National Fire Protection Association. NFPA 51B: Standard for Fire Prevention During Welding, Cutting, and Other Hot Work; National Fire Protection Association: Quincy, MA, USA, 2009. [Google Scholar]

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. arXiv 2023, arXiv:1905.05055. [Google Scholar] [CrossRef]

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep Learning vs. Traditional Computer Vision. In Proceedings of the 2019 Computer Vision Conference (CVC), Las Vegas, NV, USA, 2–3 May 2020; Volume 943. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M.J. Robust Real-Time Face Detection. Int. J. Comput. Vis. 2004, 57, 137–154. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893, ISSN 1063-6919. [Google Scholar] [CrossRef]

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8, ISSN 1063-6919. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2014, arXiv:1311.2524. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. In Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Volume 8691, pp. 346–361. [Google Scholar]

- Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision-ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Volume 9905, pp. 21–37. [Google Scholar] [CrossRef]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002. [Google Scholar]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159. [Google Scholar]

- Wu, S.; Guo, C.; Yang, J. Using PCA and one-stage detectors for real-time forest fire detection. J. Eng. 2020, 2020, 383–387. [Google Scholar] [CrossRef]

- Nguyen, A.Q.; Nguyen, H.T.; Tran, V.C.; Pham, H.X.; Pestana, J. A Visual Real-time Fire Detection using Single Shot MultiBox Detector for UAV-based Fire Surveillance. In Proceedings of the 2020 IEEE Eighth International Conference on Communications and Electronics (ICCE), Phu Quoc Island, Vietnam, 13–15 January 2021; pp. 338–343. [Google Scholar] [CrossRef]

- Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217. [Google Scholar] [CrossRef]

- Wei, C.; Xu, J.; Li, Q.; Jiang, S. An Intelligent Wildfire Detection Approach through Cameras Based on Deep Learning. Sustainability 2022, 14, 15690. [Google Scholar] [CrossRef]

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332. [Google Scholar] [CrossRef]

- Mukhiddinov, M.; Abdusalomov, A.B.; Cho, J. A Wildfire Smoke Detection System Using Unmanned Aerial Vehicle Images Based on the Optimized YOLOv5. Sensors 2022, 22, 9384. [Google Scholar] [CrossRef]

- Huang, J.; Zhou, J.; Yang, H.; Liu, Y.; Liu, H. A Small-Target Forest Fire Smoke Detection Model Based on Deformable Transformer for End-to-End Object Detection. Forests 2023, 14, 162. [Google Scholar] [CrossRef]

- Wu, H.; Wu, D.; Zhao, J. An intelligent fire detection approach through cameras based on computer vision methods. Process. Saf. Environ. Prot. 2019, 127, 245–256. [Google Scholar] [CrossRef]

- Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625. [Google Scholar] [CrossRef]

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Real-time video fire/smoke detection based on CNN in antifire surveillance systems. J. Real-Time Image Process. 2021, 18, 889–900. [Google Scholar] [CrossRef]

- Pincott, J.; Tien, P.W.; Wei, S.; Kaiser Calautit, J. Development and evaluation of a vision-based transfer learning approach for indoor fire and smoke detection. Build. Serv. Eng. Res. Technol. 2022, 43, 319–332. [Google Scholar] [CrossRef]

- Pincott, J.; Tien, P.W.; Wei, S.; Calautit, J.K. Indoor fire detection utilizing computer vision-based strategies. J. Build. Eng. 2022, 61, 105154. [Google Scholar] [CrossRef]

- Ahn, Y.; Choi, H.; Kim, B.S. Development of early fire detection model for buildings using computer vision-based CCTV. J. Build. Eng. 2023, 65, 105647. [Google Scholar] [CrossRef]

- Dwivedi, U.K.; Wiwatcharakoses, C.; Sekimoto, Y. Realtime Safety Analysis System using Deep Learning for Fire Related Activities in Construction Sites. In Proceedings of the 2022 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Maldives, Maldives, 16–18 November 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Kumar, S.; Gupta, H.; Yadav, D.; Ansari, I.A.; Verma, O.P. YOLOv4 algorithm for the real-time detection of fire and personal protective equipments at construction sites. Multimed. Tools Appl. 2022, 81, 22163–22183. [Google Scholar] [CrossRef]

- Everingham, M.; Eslami, S.M.A.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes Challenge: A Retrospective. Int. J. Comput. Vis. 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; Michael, K.; TaoXie; Fang, J.; imyhxy; et al. ultralytics/yolov5: v7.0 - YOLOv5 SOTA Realtime Instance Segmentation. 2022. Available online: https://zenodo.org/records/7347926 (accessed on 21 January 2023).

- Patel, D.; Patel, F.; Patel, S.; Patel, N.; Shah, D.; Patel, V. Garbage Detection using Advanced Object Detection Techniques. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 526–531. [Google Scholar] [CrossRef]

- Yap, M.H.; Hachiuma, R.; Alavi, A.; Brungel, R.; Cassidy, B.; Goyal, M.; Zhu, H.; Ruckert, J.; Olshansky, M.; Huang, X.; et al. Deep Learning in Diabetic Foot Ulcers Detection: A Comprehensive Evaluation. arXiv 2021, arXiv:2010.03341. [Google Scholar] [CrossRef]

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote. Sens. 2021, 13, 1619. [Google Scholar] [CrossRef]

- Zheng, Z.; Zhao, J.; Li, Y. Research on Detecting Bearing-Cover Defects Based on Improved YOLOv3. IEEE Access 2021, 9, 10304–10315. [Google Scholar] [CrossRef]

- Choinski, M.; Rogowski, M.; Tynecki, P.; Kuijper, D.P.J.; Churski, M.; Bubnicki, J.W. A first step towards automated species recognition from camera trap images of mammals using AI in a European temperate forest. arXiv 2021, arXiv:2103.11052. [Google Scholar]

- Khamlae, P.; Sookhanaphibarn, K.; Choensawat, W. An Application of Deep-Learning Techniques to Face Mask Detection During the COVID-19 Pandemic. In Proceedings of the 2021 IEEE 3rd Global Conference on Life Sciences and Technologies (LifeTech), Nara, Japan, 9–11 March 2021; pp. 298–299. [Google Scholar] [CrossRef]

- Bao, M.; Chala Urgessa, G.; Xing, M.; Han, L.; Chen, R. Toward More Robust and Real-Time Unmanned Aerial Vehicle Detection and Tracking via Cross-Scale Feature Aggregation Based on the Center Keypoint. Remote. Sens. 2021, 13, 1416. [Google Scholar] [CrossRef]

- Moral, P.; García-Martín, Á.; Escudero-Viñolo, M.; Martínez, J.M.; Bescós, J.; Peñuela, J.; Martínez, J.C.; Alvis, G. Towards automatic waste containers management in cities via computer vision: Containers localization and geo-positioning in city maps. Waste Manag. 2022, 152, 59–68. [Google Scholar] [CrossRef] [PubMed]

- Rahman, A.; Lu, Y.; Wang, H. Performance evaluation of deep learning object detectors for weed detection for cotton. Smart Agric. Technol. 2023, 3, 100126. [Google Scholar] [CrossRef]

- Guo, Y.; Aggrey, S.E.; Yang, X.; Oladeinde, A.; Qiao, Y.; Chai, L. Detecting broiler chickens on litter floor with the YOLOv5-CBAM deep learning model. Artif. Intell. Agric. 2023, 9, 36–45. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298. [Google Scholar] [CrossRef]

| Title of Research Article | Year | Object | # of Images | Object Detector (AP): EfficientDet | Object Detector (AP): Yolov5 |
|---|---|---|---|---|---|
| A Forest Fire Detection System Based on Ensemble Learning [21] | 2021 | Forest Fire | 2381 | EfficientDet (0.7570) | Yolov5 (0.7050) |
| Garbage Detection using Advanced Object Detection Techniques [36] | 2021 | Garbage | 500 | EfficientDet-D1 (0.3600) | Yolov5m (0.6130) |
| Deep Learning in Diabetic Foot Ulcers Detection: A Comprehensive Evaluation [37] | 2020 | Ulcers | 2000 | EfficientDet (0.5694) | Yolov5 (0.6294) |
| A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved Yolov5 [38] | 2021 | Apple | 1214 | EfficientDet-D0 (0.8000) | Yolov5s (0.8170) |
| Research on Detecting Bearing-Cover Defects Based on Improved Yolov3 [39] | 2021 | Bearing-Cover | 1995 | EfficientDet-D2 (0.5630) | Yolov5 (0.5670) |
| A first step towards automated species recognition from camera trap images of mammals using AI in a European temperate forest [40] | 2021 | Mammals | 2659 | - | Yolov5 (0.8800) |
| An Application of Deep-Learning Techniques to Face Mask Detection During the COVID-19 Pandemic [41] | 2021 | Face masks | 848 | - | Yolov5 (0.8100) |
| Toward More Robust and Real-Time Unmanned Aerial Vehicle Detection and Tracking via Cross-Scale Feature Aggregation Based on the Center Keypoint [42] | 2021 | Drones | 5700 | - | Yolov5 (0.9690) |
| Towards automatic waste containers management in cities via computer vision: Containers localization and geo-positioning in city maps [43] | 2022 | Waste containers | 2381 | EfficientDet (0.8400) | Yolov5 (0.8900) |
| Performance evaluation of deep learning object detectors for weed detection for cotton [44] | 2022 | Weed | 5187 | EfficientDet-D2 (0.7783) | Yolov5n (0.7864) |
| Detecting broiler chickens on litter floor with the YOLOv5-CBAM deep learning model [45] | 2023 | Chickens | 560 | EfficientDet (0.5960) | Yolov5s (0.9630) |
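As a rough reading aid for the table above, the following sketch tallies the studies that report an AP for both detectors and counts how often the Yolov5 figure is the higher one. The AP values are copied from the table; the dictionary keys are shorthand invented here. The studies use different datasets and model variants, so the tally is only a coarse summary, not a controlled comparison.

```python
# (EfficientDet AP, Yolov5 AP) for the studies that report both detectors.
paired_ap = {
    "[21] forest fire": (0.7570, 0.7050),
    "[36] garbage": (0.3600, 0.6130),
    "[37] ulcers": (0.5694, 0.6294),
    "[38] apple": (0.8000, 0.8170),
    "[39] bearing-cover": (0.5630, 0.5670),
    "[43] waste containers": (0.8400, 0.8900),
    "[44] weed": (0.7783, 0.7864),
    "[45] chickens": (0.5960, 0.9630),
}

# Studies in which the reported Yolov5 AP exceeds the EfficientDet AP.
yolo_wins = [name for name, (eff, yolo) in paired_ap.items() if yolo > eff]

print(f"Yolov5 higher in {len(yolo_wins)} of {len(paired_ap)} paired studies")
for name, (eff, yolo) in paired_ap.items():
    print(f"{name}: difference (Yolov5 - EfficientDet) = {yolo - eff:+.4f}")
```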

| Model | Size (Pixels) | Params (M) | FLOPs (B) | mAP@0.5 (%) |
|---|---|---|---|---|
| Yolov5n | 640 | 1.9 | 4.5 | 45.7 |
| Yolov5s | 640 | 7.2 | 16.5 | 56.8 |
| Yolov5m | 640 | 21.2 | 49.0 | 64.1 |
| Yolov5l | 640 | 46.5 | 109.1 | 67.3 |
| Yolov5x | 640 | 86.7 | 205.7 | 68.9 |

| Object Detection Dataset | Dataset Split Ratios (Training/Validation/Test) | Number of Images: Sparks | Number of Images: Urethane Foam | Number of Images: Styrofoam |
|---|---|---|---|---|
| Image Labeling Dataset | 60%/20%/20% | 1900 | 114 | 1381 |
| Short Distanced Dataset | 60%/20%/20% | 1520 | 1518 | 824 |
| Long Distanced Updated Dataset | 63%/21%/16% | 1850 | 2054 | 1209 |
| Final Dataset | 60%/20%/20% | 2158 | 3316 | 3915 |
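The split ratios in the table above can be reproduced with a simple shuffled partition. The sketch below is one common way to do this and is not taken from the paper: the function name `split_dataset`, the fixed random seed, and the file names are all hypothetical, and the authors may have split their images differently (for example per class or per scene).

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    items: Sequence[str],
    ratios: Tuple[float, float, float] = (0.6, 0.2, 0.2),
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle items and cut them into training, validation and test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # the remainder goes to the test set
    return train, val, test


# Hypothetical image list standing in for a real dataset folder.
image_names = [f"img_{i:04d}.jpg" for i in range(100)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))
```

Passing `ratios=(0.63, 0.21, 0.16)` would give the proportions listed for the long-distance dataset.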

| Model | Sparks AP (%) | Urethane Foam AP (%) | Styrofoam AP (%) | mAP (%) |
|---|---|---|---|---|
| Yolov5n | 83.6 | 87.4 | 91.1 | 87.4 |
| Yolov5s | 87.0 | 89.6 | 92.3 | 89.6 |
| Yolov5m | 87.3 | 90.0 | 92.6 | 90.0 |
| Yolov5l | 86.1 | 91.0 | 92.6 | 89.9 |
| Yolov5x | 86.2 | 90.9 | 92.3 | 89.8 |
| EfficientDet-d0 | 78.0 | 81.3 | 87.6 | 82.3 |
| EfficientDet-d1 | 83.1 | 83.0 | 88.6 | 84.9 |
| EfficientDet-d2 | 85.9 | 86.7 | 89.1 | 87.2 |
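The mAP column in the table above is consistent with the unweighted mean of the three per-class APs. The following sketch recomputes it from the tabulated values and picks the model with the highest reported mAP. The numbers are copied from the table; the variable names are chosen here for illustration.

```python
# model: (sparks AP, urethane foam AP, Styrofoam AP, reported mAP), all in %
results = {
    "Yolov5n": (83.6, 87.4, 91.1, 87.4),
    "Yolov5s": (87.0, 89.6, 92.3, 89.6),
    "Yolov5m": (87.3, 90.0, 92.6, 90.0),
    "Yolov5l": (86.1, 91.0, 92.6, 89.9),
    "Yolov5x": (86.2, 90.9, 92.3, 89.8),
    "EfficientDet-d0": (78.0, 81.3, 87.6, 82.3),
    "EfficientDet-d1": (83.1, 83.0, 88.6, 84.9),
    "EfficientDet-d2": (85.9, 86.7, 89.1, 87.2),
}

for model, (*class_aps, reported) in results.items():
    mean_ap = sum(class_aps) / len(class_aps)  # unweighted mean over classes
    print(f"{model}: recomputed mAP = {mean_ap:.2f}, reported = {reported}")

# Model with the highest reported mAP.
best = max(results, key=lambda m: results[m][3])
print("Highest reported mAP:", best)
```

The recomputed means agree with the reported values to within rounding, and the gap between the best Yolov5 and the best EfficientDet variant is under 3 percentage points, which matches the "slightly better" wording in the conclusions.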

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ann, H.; Koo, K.Y. Deep Learning Based Fire Risk Detection on Construction Sites. Sensors 2023, 23, 9095. https://doi.org/10.3390/s23229095
