An Improved Projector Calibration Method by Phase Mapping Based on Fringe Projection Profilometry

Abstract

1. Introduction

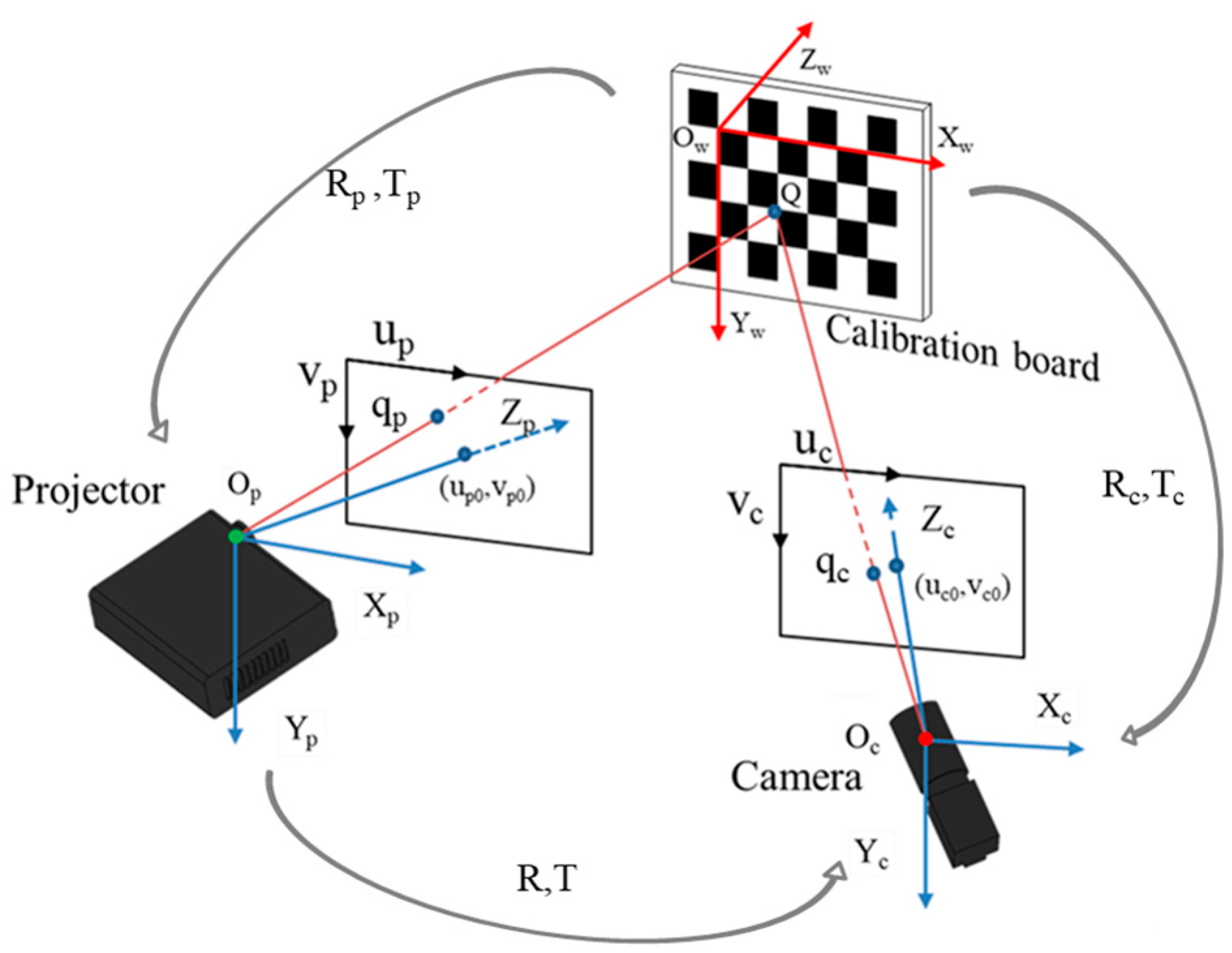

2. Camera and Projector Model of the Measurement System
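
As a minimal sketch of the kind of model this section concerns, assume the common pinhole convention with two radial distortion coefficients (k1, k2; [19] analyses more general distortion models), with the projector treated as an inverse camera described by the same equations, as in [10]. The function names, the parameter layout, and the use of the mean Euclidean distance as the reprojection error are illustrative assumptions, not necessarily the authors' formulation.

```python
import math
from typing import Sequence

Point3 = Sequence[float]
Matrix3 = Sequence[Sequence[float]]


def project_point(X: Point3, R: Matrix3, t: Point3,
                  fx: float, fy: float, cx: float, cy: float,
                  k1: float = 0.0, k2: float = 0.0) -> tuple[float, float]:
    """Project a world point to pixel coordinates with a pinhole model.

    Assumed (illustrative) model: Xc = R @ X + t, normalized coordinates
    x = Xc/Zc, y = Yc/Zc, radial factor 1 + k1*r^2 + k2*r^4, then the
    intrinsics fx, fy, cx, cy (skew ignored). Applies to a camera or,
    as an inverse camera, to a projector.
    """
    # World -> device coordinates.
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        raise ValueError("point lies behind the device")
    # Perspective division onto the normalized image plane.
    x, y = Xc[0] / Xc[2], Xc[1] / Xc[2]
    # Two-term radial distortion.
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2
    # Normalized -> pixel coordinates.
    return fx * d * x + cx, fy * d * y + cy


def mean_reprojection_error(observed, reprojected) -> float:
    """Mean Euclidean distance in pixels between matched 2D points."""
    dists = [math.hypot(u - ur, v - vr)
             for (u, v), (ur, vr) in zip(observed, reprojected)]
    return sum(dists) / len(dists)


if __name__ == "__main__":
    I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(project_point((100.0, 50.0, 0.0), I3, (0.0, 0.0, 1000.0),
                        1000.0, 1000.0, 640.0, 480.0))  # -> (740.0, 530.0)
```

Calibration, whether by a closed-form initialisation or by bundle adjustment, searches for the parameters that minimise reprojection errors of this kind for both devices.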

3. High-Accuracy Projector Calibration Method

3.1. Phase Calculation
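
For orientation, the sketch below shows the standard N-step phase-shifting computation and the multi-frequency temporal unwrapping surveyed in [1] and [20]. It assumes the sinusoidal fringe model I_n = A + B cos(φ + 2πn/N); the number of steps, the fringe frequency ratio, and the function names are assumptions rather than the authors' stated settings.

```python
import math
from typing import Sequence


def wrapped_phase(intensities: Sequence[float]) -> float:
    """Wrapped phase in [-pi, pi] from N >= 3 equally phase-shifted samples.

    Assumes the fringe model I_n = A + B*cos(phi + 2*pi*n/N), n = 0..N-1.
    """
    N = len(intensities)
    if N < 3:
        raise ValueError("need at least three phase-shifted samples")
    # Under this model: sum I_n*sin(d_n) = -(N*B/2)*sin(phi)
    # and sum I_n*cos(d_n) = (N*B/2)*cos(phi), with d_n = 2*pi*n/N.
    s = sum(I * math.sin(2 * math.pi * n / N) for n, I in enumerate(intensities))
    c = sum(I * math.cos(2 * math.pi * n / N) for n, I in enumerate(intensities))
    return math.atan2(-s, c)


def unwrap_with_reference(phi_wrapped: float, phi_ref: float, freq_ratio: float) -> float:
    """Temporal phase unwrapping with an already-absolute reference phase.

    phi_ref is the absolute phase of a lower-frequency pattern and
    freq_ratio = f_high / f_ref; the fringe order k is the integer that
    best aligns the scaled reference with the wrapped high-frequency phase.
    """
    k = round((freq_ratio * phi_ref - phi_wrapped) / (2 * math.pi))
    return phi_wrapped + 2 * math.pi * k


if __name__ == "__main__":
    # Four-step example with a known phase of 1.2 rad.
    samples = [100 + 50 * math.cos(1.2 + 2 * math.pi * n / 4) for n in range(4)]
    print(wrapped_phase(samples))  # close to 1.2
    # Absolute phase 20 rad at a frequency 16 times that of the reference.
    wrapped = math.atan2(math.sin(20.0), math.cos(20.0))
    print(unwrap_with_reference(wrapped, 20.0 / 16, 16))  # close to 20.0
```

In practice the reference is often a unit-frequency pattern whose phase spans a single period and therefore needs no unwrapping; noise in the reference limits how large `freq_ratio` can safely be.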

3.2. Sub-Pixel Coordinate Extraction Based on the Local RANSAC
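
As a hedged illustration of how a local RANSAC fit [21] can yield a sub-pixel projector coordinate, assume that the absolute phase is approximately planar in a small camera window around a feature point: random three-pixel subsets propose planes, the largest consensus set is refitted by least squares, and the fitted phase at the sub-pixel point is converted to a projector column. The planar phase model, the inlier threshold, the fringe period `period_px`, the phase origin, and all function names are hypothetical and may differ from the authors' procedure.

```python
import math
import random
from typing import Sequence

Sample = tuple[float, float, float]  # (du, dv, phase), offsets from the feature point


def _solve3(M, r):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    A = [list(M[i]) + [r[i]] for i in range(3)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda i: abs(A[i][col]))
        if abs(A[piv][col]) < 1e-12:
            raise ValueError("degenerate (e.g. collinear) sample set")
        A[col], A[piv] = A[piv], A[col]
        for i in range(col + 1, 3):
            f = A[i][col] / A[col][col]
            for j in range(col, 4):
                A[i][j] -= f * A[col][j]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (A[i][3] - sum(A[i][j] * x[j] for j in range(i + 1, 3))) / A[i][i]
    return x


def fit_plane(samples: Sequence[Sample]):
    """Least-squares plane phase = a*du + b*dv + c via the normal equations."""
    M = [[0.0] * 3 for _ in range(3)]
    r = [0.0] * 3
    for du, dv, p in samples:
        row = (du, dv, 1.0)
        for i in range(3):
            r[i] += row[i] * p
            for j in range(3):
                M[i][j] += row[i] * row[j]
    return _solve3(M, r)


def ransac_plane(samples, threshold, iterations=200, seed=0):
    """Fit planes to random 3-point subsets, keep the largest consensus
    set, then refit on all of its inliers."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        try:
            a, b, c = fit_plane(rng.sample(samples, 3))
        except ValueError:
            continue  # skip degenerate minimal samples
        inliers = [s for s in samples if abs(a * s[0] + b * s[1] + c - s[2]) < threshold]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 3:
        raise ValueError("no consensus set found")
    return fit_plane(best)


def projector_coordinate(u0, v0, window, period_px, threshold=0.05):
    """Sub-pixel projector coordinate at camera point (u0, v0).

    window: list of (u, v, absolute phase) pixels around the point.
    Assumes phase 0 at projector column 0 and a period of period_px pixels.
    """
    local = [(u - u0, v - v0, p) for u, v, p in window]
    _, _, c = ransac_plane(local, threshold)  # c = fitted phase at (u0, v0)
    return c * period_px / (2 * math.pi)
```

Centering the window on the feature point keeps the normal equations well conditioned and makes the intercept `c` equal to the interpolated phase at that point.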

3.3. Optimized Calibration by BA Algorithm

3.4. Three-Dimensional Reconstruction Based on Phase Mapping

4. Experiment and Discussion

4.1. System Setup

4.2. Calibration Experiment

4.3. Measurement Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Zuo, C.; Feng, S.; Huang, L.; Tao, T.; Yin, W.; Chen, Q. Phase shifting algorithms for fringe projection profilometry: A review. Opt. Lasers Eng. 2018, 109, 23–59.

- [2] Cheng, X.; Liu, X.; Li, Z.; Zhong, K.; Han, L.; He, W.; Gan, W.; Xi, G.; Wang, C.; Shi, Y. High-Accuracy Globally Consistent Surface Reconstruction Using Fringe Projection Profilometry. Sensors 2019, 19, 668.

- [3] Gorthi, S.S.; Rastogi, P. Fringe projection techniques: Whither we are? Opt. Lasers Eng. 2009, 48, 133–140.

- [4] Takeda, M.; Mutoh, K. Fourier transform profilometry for the automatic measurement of 3-D object shapes. Appl. Opt. 1983, 22, 3977–3982.

- [5] Hamzah, R.A.; Kadmin, A.F.; Hamid, M.S.; Ghani, S.F.A.; Ibrahim, H. Improvement of stereo matching algorithm for 3D surface reconstruction. Signal Process. Image Commun. 2018, 65, 165–172.

- [6] An, S.; Yang, H.; Zhou, P.; Xiao, W.; Zhu, J.; Guo, Y. Accurate stereo vision system calibration with chromatic concentric fringe patterns. Appl. Opt. 2021, 60, 10954–10963.

- [7] Wang, Y.; Wang, X.; Wan, Z.; Zhang, J. A Method for Extrinsic Parameter Calibration of Rotating Binocular Stereo Vision Using a Single Feature Point. Sensors 2018, 18, 3666.

- [8] Din, I.; Anwar, H.; Syed, I.; Zafar, H.; Hasan, L. Projector Calibration for Pattern Projection Systems. J. Appl. Res. Technol. 2014, 12, 80–86.

- [9] Gao, W.; Wang, L.; Hu, Z. Flexible method for structured light system calibration. Opt. Eng. 2008, 47, 083602.

- [10] Zhang, S.; Huang, P.S. Novel method for structured light system calibration. Opt. Eng. 2006, 45, 083601.

- [11] Zhang, W.; Li, W.; Yu, L.; Luo, H.; Zhao, H.; Xia, H. Sub-Pixel projector calibration method for fringe projection profilometry. Opt. Express 2017, 25, 19158–19169.

- [12] Rao, L.; Da, F. Local blur analysis and phase error correction method for fringe projection profilometry systems. Appl. Opt. 2018, 57, 4267–4276.

- [13] Wilm, J.; Olesen, O.V.; Larsen, R. Accurate and simple calibration of DLP projector systems. Proc. SPIE Int. Soc. Opt. Eng. 2014, 8979, 46–54.

- [14] Rao, L.; Da, F.; Kong, W.; Huang, H. Flexible calibration method for telecentric fringe projection profilometry systems. Opt. Express 2016, 24, 1222–1237.

- [15] Yu, J.; Zhang, Y.; Cai, Z.; Tang, Q.; Liu, X.; Xi, J.; Peng, X. An improved projector calibration method for structured-light 3D measurement systems. Meas. Sci. Technol. 2021, 32, 075011.

- [16] Wang, J.; Zhang, Z.; Leach, R.K.; Lu, W.; Xu, J. Predistorting Projected Fringes for High-Accuracy 3-D Phase Mapping in Fringe Projection Profilometry. IEEE Trans. Instrum. Meas. 2021, 70, 1–9.

- [17] Cai, Z.; Liu, X.; Li, A.; Tang, Q.; Peng, X.; Gao, B.Z. Phase-3D mapping method developed from back-projection stereovision model for fringe projection profilometry. Opt. Express 2017, 25, 1262–1277.

- [18] Moreno, D.; Taubin, G. Simple, accurate, and robust projector-camera calibration. In Proceedings of the 2nd Joint 3DIM/3DPVT Conference: 3D Imaging, Modeling, Processing, Visualization and Transmission, 3DIMPVT 2012, Zürich, Switzerland, 13–15 October 2012; pp. 464–471.

- [19] Tang, Z.; Von Gioi, R.G.; Monasse, P.; Morel, J.-M. A Precision Analysis of Camera Distortion Models. IEEE Trans. Image Process. 2017, 26, 2694–2704.

- [20] Zuo, C.; Huang, L.; Zhang, M.; Chen, Q.; Asundi, A. Temporal phase unwrapping algorithms for fringe projection profilometry: A comparative review. Opt. Lasers Eng. 2016, 85, 84–103.

- [21] Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

- [22] Yin, Y.; Peng, X.; Li, A.; Liu, X.; Gao, B.Z. Calibration of fringe projection profilometry with bundle adjustment strategy. Opt. Lett. 2012, 37, 542–544.

- [23] Moré, J.J. The Levenberg-Marquardt algorithm: Implementation and theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116.

- [24] Fusiello, A.; Trucco, E.; Verri, A. A compact algorithm for rectification of stereo pairs. Mach. Vis. Appl. 2000, 12, 16–22.

- [25] Huang, B.; Tang, Y.; Ozdemir, S.; Ling, H. A Fast and Flexible Projector-Camera Calibration System. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1049–1063.

| Fitting method | RMSE of polynomial fitting of f() | RMSE of 3D reconstruction |
|---|---|---|
| Linear polynomial | 9.753 pixel | 1.7785 mm |
| Quadratic polynomial | 0.7789 pixel | 0.1554 mm |
| Cubic polynomial | 0.2185 pixel | 0.1209 mm |
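
The table above compares linear, quadratic, and cubic fits of f() by RMSE. A generic sketch of such a degree comparison follows; the synthetic cubic samples and the helper names `polyfit`, `polyval`, and `rmse` are hypothetical and do not reproduce the authors' data or the definition of f().

```python
import math
from typing import Sequence


def polyfit(xs: Sequence[float], ys: Sequence[float], degree: int) -> list[float]:
    """Least-squares coefficients c[0] + c[1]*x + ... + c[degree]*x**degree.

    Solves the normal equations (V^T V) c = V^T y by Gaussian elimination
    with partial pivoting; adequate for low degrees on well-scaled x.
    """
    n = degree + 1
    # Augmented matrix: entry (i, j) = sum x^(i+j), last column = sum y*x^i.
    A = [[sum(x ** (i + j) for x in xs) for j in range(n)]
         + [sum(y * x ** i for x, y in zip(xs, ys))] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for k in range(col, n + 1):
                A[r][k] -= f * A[col][k]
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        coeffs[i] = (A[i][n] - sum(A[i][k] * coeffs[k] for k in range(i + 1, n))) / A[i][i]
    return coeffs


def polyval(coeffs: Sequence[float], x: float) -> float:
    """Evaluate c[0] + c[1]*x + ... at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


def rmse(xs: Sequence[float], ys: Sequence[float], coeffs: Sequence[float]) -> float:
    """Root-mean-square residual of the fitted polynomial."""
    return math.sqrt(sum((polyval(coeffs, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


if __name__ == "__main__":
    # Hypothetical samples drawn from a cubic; not data from the paper.
    xs = [-1.0 + 0.1 * i for i in range(21)]
    ys = [0.5 + 2.0 * x - 0.3 * x ** 2 + 0.05 * x ** 3 for x in xs]
    for degree, name in ((1, "linear"), (2, "quadratic"), (3, "cubic")):
        print(name, rmse(xs, ys, polyfit(xs, ys, degree)))
```

For higher degrees or poorly scaled inputs, an SVD-based least-squares solver (as used by `numpy.polyfit`) is numerically safer than the normal equations used here.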

| Method | Camera reprojection error (pixel) | Projector reprojection error (pixel) | Overall mean error (pixel) |
|---|---|---|---|
| Moreno and Taubin [18] | 0.15 | 2.58 | 1.83 |
| Global homography | 0.15 | 7.45 | 5.27 |
| Huang’s method [25] | 0.26 | 0.17 | 0.22 |
| Proposed | 0.02 | 0.06 | 0.03 |

| Scene | Proposed method: number of points | Proposed method: time cost (s) | SV method: number of points | SV method: time cost (s) |
|---|---|---|---|---|
| Metal block | 145,293 | 0.207 | 142,587 | 0.76 |
| Water sprinkler | 378,727 | 0.235 | 360,769 | 1.99 |
| Metal plate | 1,543,808 | 0.412 | 1,617,944 | 5.08 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Zhang, B.; Yuan, X.; Lin, J.; Jiang, K. An Improved Projector Calibration Method by Phase Mapping Based on Fringe Projection Profilometry. Sensors 2023, 23, 1142. https://doi.org/10.3390/s23031142

Liu Y, Zhang B, Yuan X, Lin J, Jiang K. An Improved Projector Calibration Method by Phase Mapping Based on Fringe Projection Profilometry. Sensors. 2023; 23(3):1142. https://doi.org/10.3390/s23031142

Chicago/Turabian Style: Liu, Yabin, Bingwei Zhang, Xuewu Yuan, Junyi Lin, and Kaiyong Jiang. 2023. "An Improved Projector Calibration Method by Phase Mapping Based on Fringe Projection Profilometry" Sensors 23, no. 3: 1142. https://doi.org/10.3390/s23031142