1. Introduction

With the development of the manufacturing industry, machining processes play an increasingly important role in modern manufacturing. A major problem in machining is tool wear, which leads to poor quality of machined parts and low production efficiency [1,2,3,4]. Studies have revealed that 20% of machine downtime can be attributed to different forms of tool wear [5,6]. Specifically, if the tool wear exceeds the failure criterion and the tool is not replaced in time, the surface quality of the workpiece is directly affected [7,8]. However, replacing the tool too early wastes tooling and reduces productivity [9]. Tool wear can also lead to unexpected downtime and extra costs [10]. Reliable and accurate tool wear estimation can reduce downtime costs by 10–40% [11]. Therefore, tool wear monitoring is of great significance.

Tool wear monitoring approaches are generally divided into direct and indirect methods. The results of direct methods are accurate and intuitive, but the machine tool must be stopped during monitoring, which prolongs processing time and reduces production efficiency [12,13]. In addition, the monitoring results are easily disturbed by cutting fluid and chips, so direct methods are not suitable for the shop floor [12,13]. Indirect methods collect sensor signals, such as force [14,15], vibration [16,17] and acoustic emission [13], extract features from the data and establish a mapping between the monitoring signals and the tool wear condition.

Generally speaking, feature extraction methods fall into two categories: model-based and data-based methods. Model-based methods use a mathematical model of the relationship between tool wear and the machining process to obtain relevant features [18]. Typical models include the Hidden Markov Model [19], Wiener and Gamma processes [20] and Kalman filtering [21]. Although the model-based approach has been successful, it requires extensive expertise. Its performance depends strongly on prior knowledge [22], and it does not make full use of the sensor signals.

To overcome these difficulties, data-based approaches have been proposed as more attractive alternatives. They have two main advantages. First, they do not require extensive prior knowledge. Second, monitoring sensors can conveniently collect real-time data from cutting tools [23]. Deep learning (DL) is a representative example of this approach.

In industrial machining, enormous amounts of data on tool condition are acquired through sensors, and DL, with its strong nonlinear learning ability, is well suited to exploiting them. Xu et al. [24] realized multi-scale feature fusion with a parallel convolutional neural network, in which a channel attention mechanism with residual connections weighted the different feature maps. Liu et al. [25] proposed a new neural network model combining the Transformer model, the self-attention mechanism and long short-term memory (LSTM). Yin et al. [26] combined a one-dimensional convolutional neural network (1D-CNN) with deep generalized canonical correlation analysis (DGCCA) for multi-sensor tool wear diagnosis. Zhou et al. [27] proposed an improved multi-scale edge-labeling graph neural network to improve the accuracy of DL-based tool condition monitoring (TCM) with small samples. Marei et al. [28] proposed a hybrid CNN-LSTM model with transfer learning for cutting tool prognostics. Cai et al. [29] proposed a hybrid information system based on an LSTM network for tool wear prediction. Dong et al. [30] proposed a method for monitoring woodworking tool wear that combines limit arithmetic mean filtering with a backpropagation (BP) neural network optimized by particle swarm optimization (PSO). Phani et al. [31] designed a deep CNN architecture by selecting appropriate hyperparameters and built a CNN model for tool wear classification by selecting appropriate training parameters. Achyuth et al. [32] used a support vector machine (SVM) and a convolutional neural network (CNN) to analyze the audible signals generated during machining and predict changes in tool wear and workpiece hardness. Shi et al. [33] proposed a new framework for fusing multiple stacked sparse autoencoders, which mainly consists of a training model and a feature fusion structure. Jian et al. [34] adopted the short-time Fourier transform (STFT) for data preprocessing and built an optimized ResNet-based model that reduces feature dimensionality layer by layer.

In this paper, we propose a novel ResNet-based one-dimensional network (CaAt-ResNet-1d) for tool wear condition monitoring. It incorporates two kinds of channel attention mechanisms. The dataset of the 2010 PHM Data Challenge (PHM Society Conference Data Challenge, available at https://www.phmsociety.org/competition/phm/10 (accessed on 1 January 2022)) is downsampled and then used for training and testing. The main contributions of this paper are as follows:

CaAt-ResNet-1d combines a ResNet18 built from one-dimensional convolutional neural network (1D CNN) layers with channel attention. Because tool wear data are time series, ResNet18 is constructed from 1D convolutions. The residual connections of ResNet retain the advantages of deep networks while preserving those of shallow networks, which avoids the degradation problem. Given the multi-channel nature of the time series data, channel attention is added to the 1D CNN ResNet18 to improve the model's ability to learn the features of different channels automatically.

The original PHM2010 dataset is downsampled and re-divided. Three groups of models were trained and tested on the re-divided dataset, demonstrating the accuracy and stability of the proposed model.
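The first contribution pairs 1D residual units with channel attention. Section 3 defines the actual blocks; as a rough illustration only, the NumPy sketch below assumes a squeeze-and-excitation style attention (global average pooling over time, a two-layer bottleneck with reduction ratio `r`, and a sigmoid gate) inside a basic residual unit without batch normalization. The function names, the choice of SE-style attention and all weights are hypothetical and are not taken from CaAt-ResNet-1d.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1d_same(x, w):
    """1D convolution (cross-correlation, as in DL frameworks) with zero 'same' padding.
    x: (C_in, L); w: (C_out, C_in, K) with odd K. Returns (C_out, L)."""
    c_out, _, k = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    out = np.zeros((c_out, x.shape[1]))
    for t in range(x.shape[1]):
        # Contract over input channels and kernel taps at time step t
        out[:, t] = np.tensordot(w, xp[:, t:t + k], axes=([1, 2], [0, 1]))
    return out

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """Hypothetical SE-style channel attention. x: (C, L); w1: (C//r, C); w2: (C, C//r)."""
    z = x.mean(axis=1)                # squeeze: average over time -> (C,)
    s = sigmoid(w2 @ relu(w1 @ z))    # excitation: one weight in (0, 1) per channel
    return x * s[:, None], s          # rescale each channel by its weight

def residual_block(x, w_a, w_b, w1, w2):
    """Two 3-tap convolutions, channel attention, then identity shortcut: y = ReLU(F(x) + x)."""
    h = relu(conv1d_same(x, w_a))
    h = conv1d_same(h, w_b)
    h, s = channel_attention(h, w1, w2)
    return relu(h + x), s

# Usage with random weights: 16 feature channels, 256-point segments, reduction ratio 4
C, L, r = 16, 256, 4
x = rng.standard_normal((C, L))
w_a = rng.standard_normal((C, C, 3)) * 0.1
w_b = rng.standard_normal((C, C, 3)) * 0.1
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y, s = residual_block(x, w_a, w_b, w1, w2)
print(y.shape, s.shape)  # (16, 256) (16,)
```

Because the shortcut adds `x` back before the final ReLU, this block needs equal input and output channel counts; ResNet18 handles channel changes with a 1×1 projection on the shortcut, which the sketch omits.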

The rest of the paper is organized as follows. Section 2 introduces the basic structure of CNNs, the residual unit and channel attention. Section 3 presents the novel ResNet-based one-dimensional network (CaAt-ResNet-1d) for tool wear condition monitoring; in particular, channel attention mechanisms are combined with residual connections to achieve tool wear classification. Section 4 presents the experimental setup, results and discussion. Finally, Section 5 presents the conclusions and future work directions.

4. Experiments and Results

4.1. Dataset Description

The dataset used to evaluate the proposed method was originally released for the PHM2010 data challenge. It was acquired on a high-speed CNC machine milling a stainless steel workpiece with a three-flute ball-nose tungsten carbide tool. During milling, a three-component dynamometer mounted between the workpiece and the machine table measures the cutting force in the X, Y and Z directions; a piezoelectric accelerometer mounted on the workpiece collects vibration signals in the X, Y and Z directions; and a Kistler acoustic emission sensor mounted on the machine table records high-frequency stress waves, as shown in Figure 6.

The details of the CNC equipment are given in Table 1, the milling conditions in Table 2 and the signals collected by the sensors on the different channels in Table 3.

The machined surface of the workpiece is a 108 mm square, and each 108 mm milling pass is recorded as one cut. After each cut, the flank wear of the tool is measured with a microscope and recorded as the wear result. Each tool performs 315 cuts.

Six ball-end carbide milling cutters were recorded, labelled C1, C2, C3, C4, C5 and C6; only C1, C4 and C6 are used for model evaluation. For each tool, the three-axis force signals, three-axis vibration signals and acoustic emission signal are collected in each cut, as shown in Table 3. Considering the convenience of measurement in industrial applications, the flank wear width VB at half the depth of cut on the flank face is selected as the criterion of tool blunting, as shown in Figure 7. For carbide tools, a VB above 0.3 mm meets the blunting criterion and the tool must be replaced. The average flank wear value is used as the wear label. The initial wear stage ends at a maximum wear of 0.086 mm, and the middle wear stage lies between 0.086 mm and 0.12 mm. When the wear exceeds 0.12 mm, the tool has reached the severe wear stage and must be replaced immediately. The tool wear curves of C1, C4 and C6 are shown in Figure 8.

As shown in Table 1, the spindle speed is 10,400 RPM, so the tool rotates about 173 revolutions per second. With a sampling rate of 50 kHz, approximately 289 data points are therefore collected per revolution. At this resolution the signal is highly redundant, and processing every point adds computation time without adding information. It is therefore necessary to choose a segment length that is as short as possible while still capturing all of the relevant features. After calculation, the most appropriate segment length was found to be 256 data points. The data of each milling process are analysed separately. Taking C1 as an example, approximately 50 cuts fall in the initial wear stage, about 160 in the middle wear stage and close to 100 in the severe wear stage. If training data were generated by randomly sampling the complete dataset, approximately 16% of the samples would belong to the initial wear category, about 51% to the middle wear category and about 33% to the severe wear category. The training samples would thus be class-imbalanced. To solve this problem, the dataset is redefined using appropriate downsampling operations.
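The figures above follow from simple arithmetic. The exact ratio is 50,000 / (10,400 / 60) ≈ 288.5 points per revolution, which the text rounds to about 289. For the class shares, the sketch assumes that the severe stage takes the remaining 105 of the 315 cuts (the text says "close to 100"), which reproduces the quoted 16%, 51% and 33%.

```python
spindle_rpm = 10_400
fs_hz = 50_000                       # sampling rate, 50 kHz
rev_per_s = spindle_rpm / 60         # revolutions per second
points_per_rev = fs_hz / rev_per_s   # samples collected per revolution

# Cuts per wear stage for C1; the severe count (105 = 315 - 50 - 160) is an assumption
cuts = {"initial": 50, "middle": 160, "severe": 105}
total = sum(cuts.values())           # 315 cuts per tool
shares = {stage: n / total for stage, n in cuts.items()}

print(round(rev_per_s, 1), round(points_per_rev, 1))           # 173.3 288.5
print({stage: round(100 * p) for stage, p in shares.items()})  # {'initial': 16, 'middle': 51, 'severe': 33}
```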

Because a sufficient amount of data is collected in each milling process, the three categories can be downsampled separately. For example, more than 200,000 data points are collected per cut for C1, and 50 cuts fall in the initial wear stage, so more than 10 million data points are gathered during the initial wear stage. To balance the categories, the initial wear stage is downsampled at a rate of 8%, yielding about 3000 samples. Following the same strategy, downsampling rates of 2% and 4% are adopted for the middle and severe wear stages, respectively. The redefined C1 dataset consists of 8954 samples: 2990 in the initial wear stage, 3006 in the middle wear stage and 2958 in the severe wear stage.
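One way to read this step is to cut each stage's signal into non-overlapping 256-point windows and keep a random fraction of them. The NumPy sketch below follows that interpretation, which is an assumption: the text does not say whether windows overlap or exactly what the 8%, 2% and 4% rates are applied to. Under this reading, 8% of the roughly 39,000 windows in 10 million points gives about 3125 samples, in line with the "about 3000" above. `downsample_stage` and `expected_samples` are hypothetical helpers.

```python
import numpy as np

WINDOW = 256  # segment length chosen in the text

def downsample_stage(signal, rate, window=WINDOW, seed=0):
    """Cut a (n_points, n_channels) signal into non-overlapping windows
    and keep a random fraction `rate` of them, without replacement."""
    n_windows = signal.shape[0] // window
    windows = signal[: n_windows * window].reshape(n_windows, window, signal.shape[1])
    n_keep = int(round(rate * n_windows))
    rng = np.random.default_rng(seed)
    keep = np.sort(rng.choice(n_windows, size=n_keep, replace=False))
    return windows[keep]  # (n_keep, window, n_channels)

def expected_samples(n_cuts, points_per_cut, rate, window=WINDOW):
    """Rough sample count for one wear stage under the same assumptions."""
    return int(round(rate * (n_cuts * points_per_cut // window)))

# Initial wear stage of C1: 50 cuts of about 200,000 points each, kept at 8%
print(expected_samples(50, 200_000, 0.08))  # 3125
```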

4.2. Training and Test

After downsampling, the average wear value of each cut is calculated to establish the labels. Initial wear, middle wear and severe wear correspond to labels 1, 2 and 3, respectively.
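Using the stage thresholds of 0.086 mm and 0.12 mm given in Section 4.1, the labelling rule can be written as a small function. This sketch assumes that a wear value exactly equal to a threshold belongs to the lower stage, which the text does not specify; `wear_label` is an illustrative name.

```python
import numpy as np

INITIAL_MAX = 0.086  # mm, upper bound of the initial wear stage
MIDDLE_MAX = 0.12    # mm, above this the tool is in severe wear

def wear_label(vb_mm):
    """Map average flank wear VB (mm) to labels 1 (initial), 2 (middle) and 3 (severe)."""
    vb = np.asarray(vb_mm, dtype=float)
    return np.where(vb <= INITIAL_MAX, 1, np.where(vb <= MIDDLE_MAX, 2, 3))

print(wear_label([0.05, 0.086, 0.10, 0.12, 0.15]))  # [1 1 2 2 3]
```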

Raw data from the seven channels are fed into the CaAt-ResNet-1d model for multi-sensor tool wear diagnosis. To verify the method thoroughly, three sets of experiments are conducted on the C1, C4 and C6 datasets, as shown in Table 4. In the first set, data from the C1 and C4 cutters (17,684 samples in total) are used as the training set to generate model M1+4, which is tested on data from the C6 cutter. In the second set, data from the C1 and C6 cutters (17,190 samples in total) are used as the training set to produce model M1+6, which is tested on data from the C4 cutter. In the third set, data from the C4 and C6 cutters (17,414 samples in total) are used to train model M4+6, which is tested on data from the C1 cutter.
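The three experiments form a leave-one-tool-out scheme: each model is trained on two cutters and tested on the remaining one. A minimal sketch of how the splits and model names could be enumerated (the function name `leave_one_tool_out` is hypothetical):

```python
from itertools import combinations

TOOLS = ("C1", "C4", "C6")

def leave_one_tool_out(tools=TOOLS):
    """Yield (model_name, train_tools, test_tool) for every pair of training tools."""
    for train in combinations(tools, 2):
        test = next(t for t in tools if t not in train)
        name = "M" + "+".join(t[1:] for t in train)  # e.g. ("C1", "C4") -> "M1+4"
        yield name, train, test

for name, train, test in leave_one_tool_out():
    print(name, train, "->", test)
# M1+4 ('C1', 'C4') -> C6
# M1+6 ('C1', 'C6') -> C4
# M4+6 ('C4', 'C6') -> C1
```

Testing on a cutter never seen during training measures how well the model generalizes across tools, rather than across segments of the same tool.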

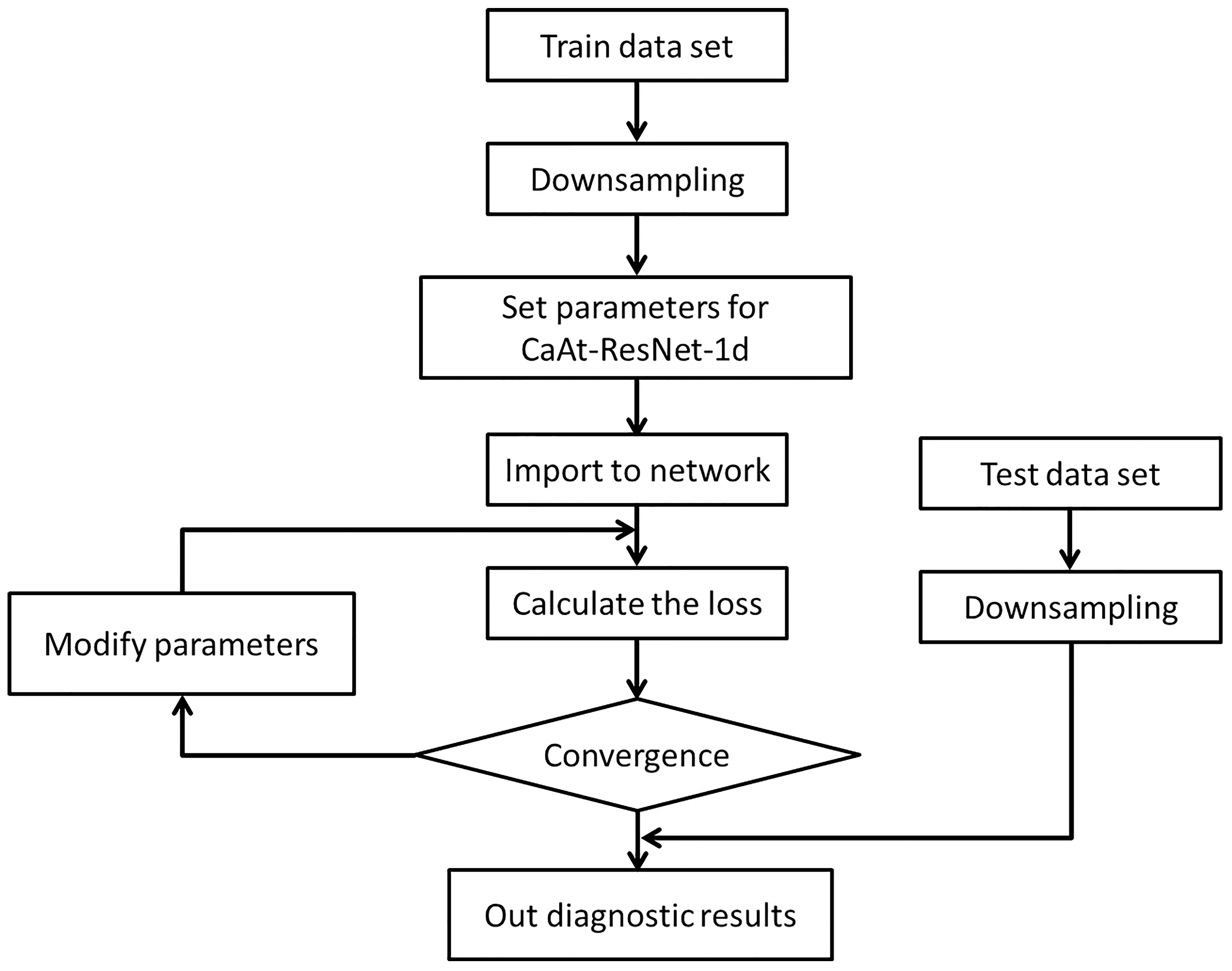

The experiments are run on Ubuntu 18.04 with two NVIDIA GTX 1080 Ti GPUs for parallel training. The general workflow, shown in Figure 9, is as follows:

The collected C1, C4 and C6 datasets are downsampled and divided into new datasets;

The CaAt-ResNet-1d model parameters are initialized with a learning rate of 0.0001; detailed parameters are shown in Table 5;

After the data are input to the model, the loss value is calculated and back-propagated, and the model parameters are updated;

The trained model is tested and the evaluation results are output.

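Binary cross-entropy is used as the loss function, and its mathematical expression is as follows:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i\log\hat{y}_i + (1 - y_i)\log\left(1 - \hat{y}_i\right)\right]$$

where $N$ is the number of samples, $y_i$ is the true label and $\hat{y}_i$ is the predicted probability.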

Accuracy is used as the evaluation index of the experiment to quantify the test results. It is calculated as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $\mathrm{Accuracy}$ represents the overall accuracy of the evaluation, and $TP$, $FN$, $FP$ and $TN$ represent the numbers of true positives, false negatives, false positives and true negatives, respectively.
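For the three wear classes, one common way to apply the binary cross-entropy loss described above is element-wise on one-hot targets; for more than two classes, overall accuracy is usually computed as the fraction of correctly classified samples, which reduces to the binary formula in the two-class case. The sketch below assumes both conventions (the text does not state how labels 1 to 3 are encoded for the loss); `one_hot`, `binary_cross_entropy` and `accuracy` are illustrative helpers, not functions from the authors' code.

```python
import numpy as np

def one_hot(labels, n_classes=3):
    """Map labels 1..n_classes to one-hot rows."""
    labels = np.asarray(labels)
    return np.eye(n_classes)[labels - 1]

def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean element-wise BCE between 0/1 targets and predicted probabilities."""
    p = np.clip(p_pred, eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

def accuracy(labels, p_pred):
    """Fraction of samples whose highest-probability class equals the label."""
    pred = np.argmax(p_pred, axis=1) + 1  # back to labels 1..3
    return float(np.mean(pred == np.asarray(labels)))

labels = [1, 2, 3, 2]
probs = np.array([[0.8, 0.1, 0.1],
                  [0.2, 0.7, 0.1],
                  [0.1, 0.2, 0.7],
                  [0.6, 0.3, 0.1]])
print(accuracy(labels, probs))  # 0.75
print(binary_cross_entropy(one_hot(labels), probs))
```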

4.3. Experiment Results

In this part, we construct the CaAt-ResNet-1d model and evaluate tool condition using real tool data. C1, C4 and C6 are used as the test sets of the three experiments M4+6, M1+6 and M1+4, respectively, and each model is fully trained and tested. To further assess the performance of CaAt-ResNet-1d, it is compared with other popular algorithms: LSTM [38], GRU [39], Gated-Transformer [40] and ResNet18 [35]. Table 6 presents the results of the different algorithms.

The experimental results show that the different models achieve different accuracies. CaAt-ResNet-1d achieves higher accuracy than the other algorithms in all three experiments. On the M1+4 test set, the proposed method improves accuracy by about 4% over LSTM, 4% over GRU, 12% over Gated-Transformer and 5% over ResNet18. On the M1+6 test set, it improves by about 13% over LSTM, 9% over GRU, 20% over Gated-Transformer and 3% over ResNet18. On the M4+6 test set, it improves by about 7% over LSTM, 2% over GRU, 6% over Gated-Transformer and 3% over ResNet18. The highest accuracies of the LSTM, GRU, Gated-Transformer and ResNet18 models are 81.52%, 85.82%, 81.95% and 85.12%, respectively, whereas the highest accuracy of CaAt-ResNet-1d is 89.27%, exceeding all of the other algorithms.
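The highest accuracies quoted above can be compared directly. The snippet below only subtracts those figures, treating the differences as absolute percentage points; it does not reproduce the per-experiment values in Table 6.

```python
# Highest accuracy (%) of each baseline, as quoted in the text
best_acc = {
    "LSTM": 81.52,
    "GRU": 85.82,
    "Gated-Transformer": 81.95,
    "ResNet18": 85.12,
}
proposed = 89.27  # highest accuracy of CaAt-ResNet-1d (%)

# Absolute gap in percentage points, rounded to two decimals
gaps_pp = {model: round(proposed - acc, 2) for model, acc in best_acc.items()}
for model, gap in gaps_pp.items():
    print(f"{model}: +{gap:.2f} pp")
# LSTM: +7.75 pp
# GRU: +3.45 pp
# Gated-Transformer: +7.32 pp
# ResNet18: +4.15 pp
```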

4.4. Discussion

In this study, an improved CaAt-ResNet-1d model is proposed for the PHM2010 dataset, and experimental verification is completed on three downsampled datasets. CaAt-ResNet-1d achieves higher accuracy than LSTM, GRU, Gated-Transformer and ResNet18. Gated-Transformer generally has the lowest accuracy, possibly because the dataset is not large enough: Gated-Transformer, with the Transformer [41] at its core, needs a large number of samples to show its advantages, so it is not well suited to small datasets such as PHM2010. LSTM and GRU are suitable for time series monitoring, but their accuracies are not the highest, possibly because these relatively simple models do not extract sufficiently rich features. Compared with the baseline ResNet18, CaAt-ResNet-1d achieves the highest accuracy. The reasons may be as follows: 1. Residual connections retain the advantages of deep networks while preserving those of shallow networks, avoiding the degradation problem. 2. One-dimensional CNNs and channel attention are well suited to feature extraction from multi-sensor time series data. The accuracy of CaAt-ResNet-1d differs noticeably across the M1+4, M1+6 and M4+6 test sets, and LSTM, GRU, Gated-Transformer and ResNet18 also show non-negligible accuracy gaps across the three test sets, as shown in Table 7. A possible reason is that the experimental datasets do not fully cover the feature space. It is also worth noting that the current results do not yet meet real-time machining requirements. Possible reasons are as follows: 1. The sensor signals are easily affected by disturbances in the milling process, which corrupts the actual characteristics of the data. 2. The dataset does not contain sufficiently rich samples to cover the feature space, so the accuracy falls short of the standard required for real-time detection. At the algorithm level, a more suitable network could be designed to achieve real-time detection; for example, combining CaAt-ResNet-1d with GRU may yield better accuracy.