An Adaptive Kernels Layer for Deep Neural Networks Based on Spectral Analysis for Image Applications

Abstract

1. Introduction

2. Width-Based Layer Design (Inception and Inception-like Approaches)

3. Proposed Method: An Adaptive Kernels Layer

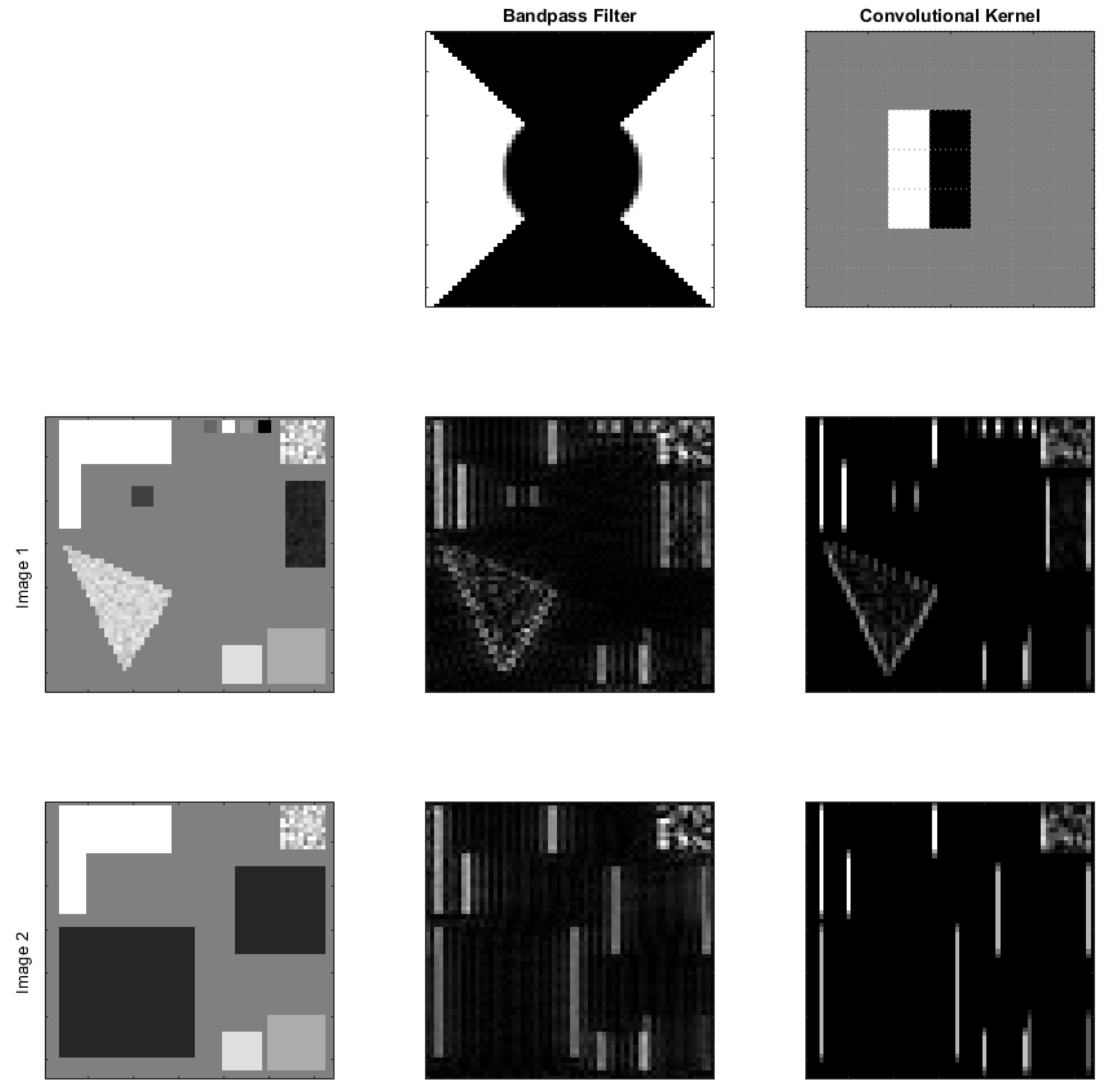

3.1. Examining Spatial–Spectral Features and Convolutional Kernels

3.2. Proposed Layer Formulation

4. Experimental Results

4.1. Image Classification

- Horses or Humans Dataset [27]: consists of about 1000 rendered images of size , divided into two categories: horse or human.

- Cats and Dogs Dataset [28]: this dataset was generated for the Playground Prediction Competition, and is made from 25,000 images of sizes , divided into two categories: cat or dog.

- Food 5K Dataset [29]: consists of 5000 images of size divided into two categories: food or nonfood.

4.2. Object Localization
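Localization quality is commonly scored with intersection over union (IoU), which this article lists under Abbreviations. The sketch below is only an illustration of that metric, not the authors' evaluation code. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`; the `Box` alias and the `iou` function name are hypothetical and do not come from the article.

```python
from typing import Tuple

# Hypothetical box convention (an assumption, not from the article):
# (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(box_a: Box, box_b: Box) -> float:
    """Return the intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; clamp at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Degenerate (zero-area) boxes yield a union of zero; report no overlap.
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (0.0, 0.0, 2.0, 2.0)
    ground_truth = (1.0, 1.0, 3.0, 3.0)
    # Overlap area is 1 and union area is 4 + 4 - 1 = 7.
    print(f"{iou(predicted, ground_truth):.3f}")  # prints 0.143
```

A score of 1 means the predicted box matches the ground truth exactly, and 0 means the two boxes do not overlap.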

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| VHR | Very high resolution |
| CNN | Convolutional neural networks |
| LRF | Local receptive field |
| AKL | Adaptive kernels layer |
| IoU | Intersection over the union |

References

1. Marin, C.; Bovolo, F.; Bruzzone, L. Building change detection in multitemporal very high resolution SAR images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2664–2682.

2. Saha, S.; Bovolo, F.; Bruzzone, L. Building Change Detection in VHR SAR Images via Unsupervised Deep Transcoding. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1917–1929.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1.

4. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

5. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

6. Luan, S.; Chen, C.; Zhang, B.; Han, J.; Liu, J. Gabor convolutional networks. IEEE Trans. Image Process. 2018, 27, 4357–4366.

7. Ioannou, Y.; Robertson, D.; Shotton, J.; Cipolla, R.; Criminisi, A. Training CNNs with low-rank filters for efficient image classification. arXiv 2015, arXiv:1511.06744.

8. Wu, Y.; Bai, Z.; Miao, Q.; Ma, W.; Yang, Y.; Gong, M. A Classified Adversarial Network for Multi-Spectral Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 2098.

9. Hou, B.; Liu, Q.; Wang, H.; Wang, Y. From W-Net to CDGAN: Bitemporal Change Detection via Deep Learning Techniques. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1790–1802.

10. Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised Deep Change Vector Analysis for Multiple-Change Detection in VHR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3677–3693.

11. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

12. Krüger, V.; Sommer, G. Gabor wavelet networks for object representation. In Multi-Image Analysis; Springer: Berlin/Heidelberg, Germany, 2001; pp. 115–128.

13. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

14. Serte, S.; Demirel, H. Gabor wavelet-based deep learning for skin lesion classification. Comput. Biol. Med. 2019, 113, 103423.

15. Ahsan, M.; Based, M.; Haider, J.; Kowalski, M. An intelligent system for automatic fingerprint identification using feature fusion by Gabor filter and deep learning. Comput. Electr. Eng. 2021, 95, 107387.

16. Serre, T.; Wolf, L.; Bileschi, S.; Riesenhuber, M.; Poggio, T. Robust object recognition with cortex-like mechanisms. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 411–426.

17. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

18. Tsai, C.Y.; Chen, C.L. Attention-Gate-Based Model with Inception-like Block for Single-Image Dehazing. Appl. Sci. 2022, 12, 6725.

19. Du, G.; Zhou, P.; Abudurexiti, R.; Mahpirat; Aysa, A.; Ubul, K. High-Performance Siamese Network for Real-Time Tracking. Sensors 2022, 22, 8953.

20. Munir, K.; Frezza, F.; Rizzi, A. Deep Learning Hybrid Techniques for Brain Tumor Segmentation. Sensors 2022, 22, 8201.

21. Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

22. Bao, T.; Fu, C.; Fang, T.; Huo, H. PPCNET: A combined patch-level and pixel-level end-to-end deep network for high-resolution remote sensing image change detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1797–1801.

23. Sariturk, B.; Seker, D.Z. A Residual-Inception U-Net (RIU-Net) Approach and Comparisons with U-Shaped CNN and Transformer Models for Building Segmentation from High-Resolution Satellite Images. Sensors 2022, 22, 7624.

24. Wu, W.; Pan, Y. Adaptive Modular Convolutional Neural Network for Image Recognition. Sensors 2022, 22, 5488.

25. Prabhakar, K.R.; Ramaswamy, A.; Bhambri, S.; Gubbi, J.; Babu, R.V.; Purushothaman, B. Cdnet++: Improved change detection with deep neural network feature correlation. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Moroney, L. Datasets for Machine Learning—Laurence Moroney—The AI Guy. Available online: https://laurencemoroney.com/datasets.html (accessed on 6 January 2023).

28. Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C. Cats and dogs. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3498–3505.

29. Singla, A.; Yuan, L.; Ebrahimi, T. Food/non-food image classification and food categorization using pre-trained GoogLeNet model. In Proceedings of the 2nd International Workshop on Multimedia Assisted Dietary Management, Amsterdam, The Netherlands, 15–19 October 2016; pp. 3–11.

30. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

31. Laaksonen, J.; Oja, E. Classification with learning k-nearest neighbors. In Proceedings of the International Conference on Neural Networks (ICNN’96), Washington, DC, USA, 3–6 June 1996; Volume 3, pp. 1480–1483.

32. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 658–666.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al Shoura, T.; Leung, H.; Balaji, B. An Adaptive Kernels Layer for Deep Neural Networks Based on Spectral Analysis for Image Applications. Sensors 2023, 23, 1527. https://doi.org/10.3390/s23031527
