Altering Fish Behavior by Sensing Swarm Patterns of Fish in an Artificial Aquatic Environment Using an Interactive Robotic Fish

Abstract

1. Introduction

- Designing a futuristic and minimalistic robotic fish with an Ostraciiform tail that can operate in a wide range of water environments.

- Analyzing fish behaviors and fish swarm patterns using the robotic fish and recognizing these patterns using a proposed machine learning algorithm.

- Performing fish-food drop activity based on the identified fish swarm patterns and analyzing the resulting changes in fish behavior.

- Collecting user feedback about the robotic fish and its behavior.

2. Literature Review

3. Design and Methodology

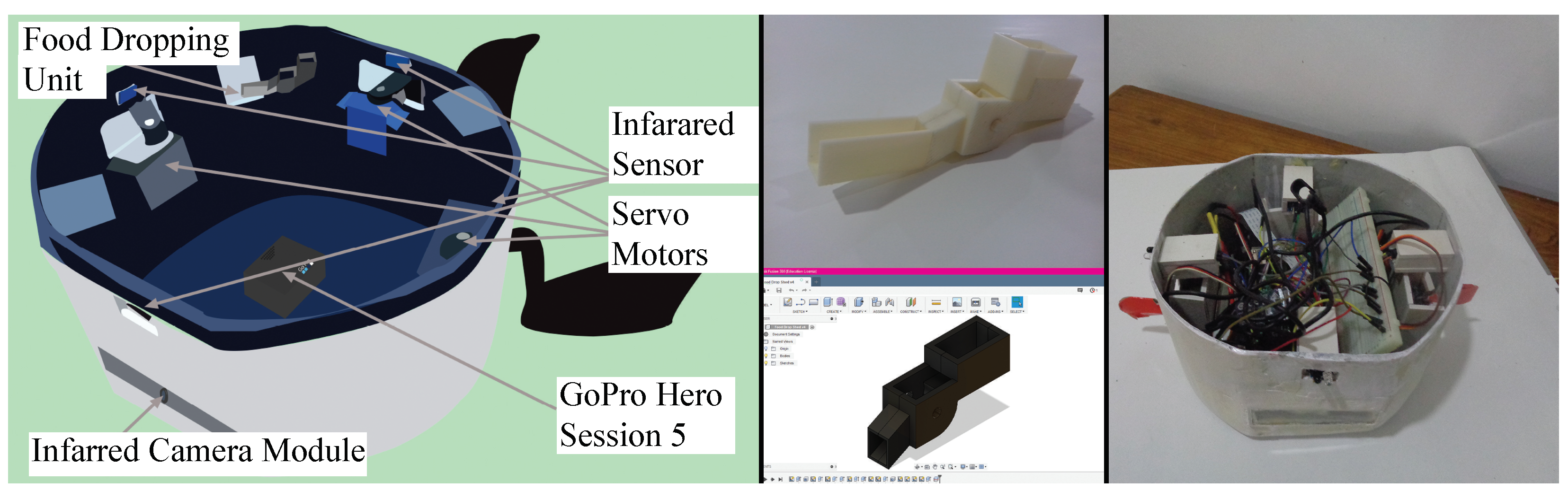

3.1. Design of the Robotic Fish

3.1.1. Rigid Body

3.1.2. Robotic Fish Fins

3.1.3. Internal Structure

3.1.4. Food Dropping Unit

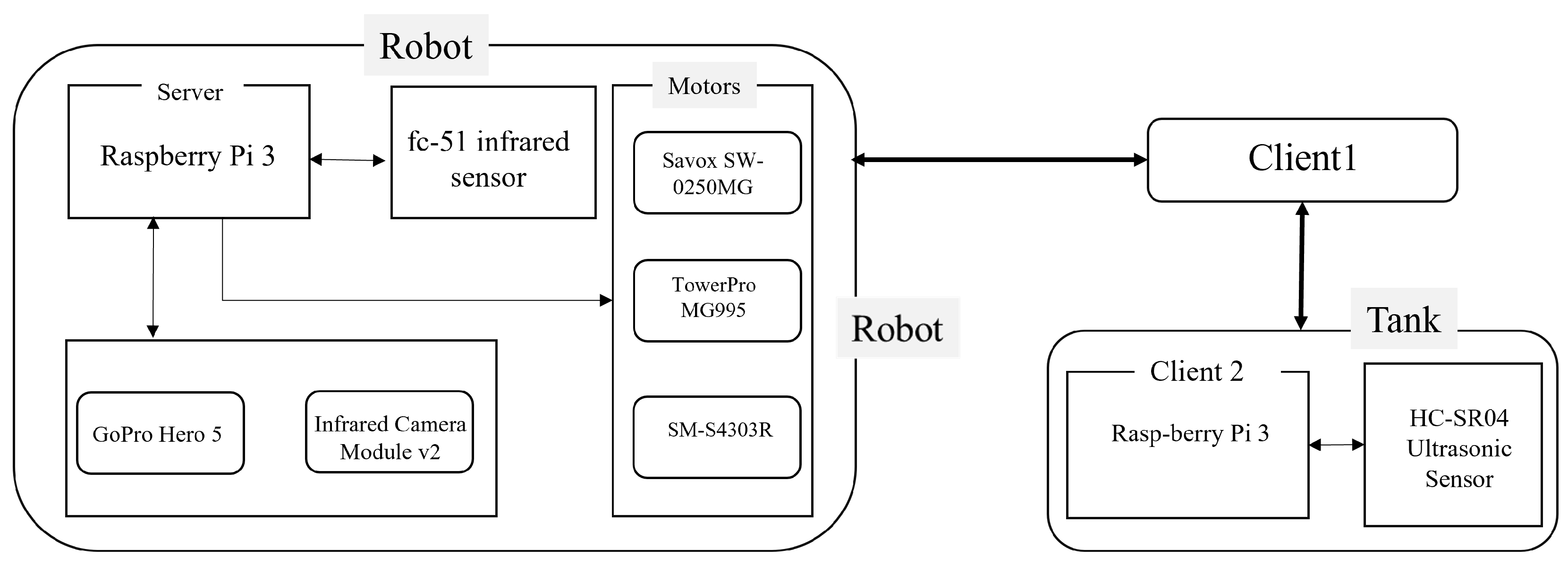

3.2. Hardware Architecture of the System

Internal Structure

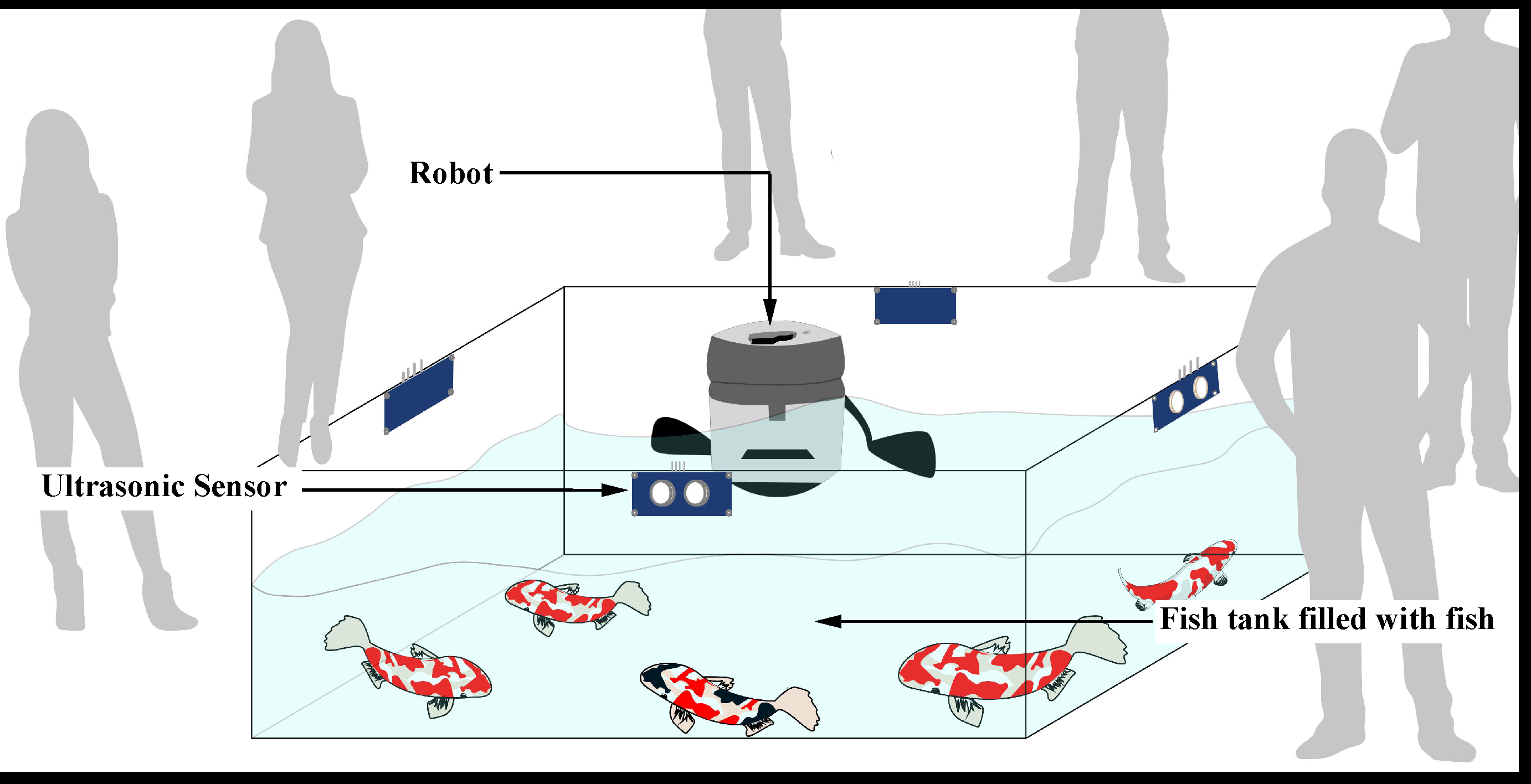

3.3. Experimental Setup

3.4. Software Implementation

3.4.1. Fish Detecting Algorithm

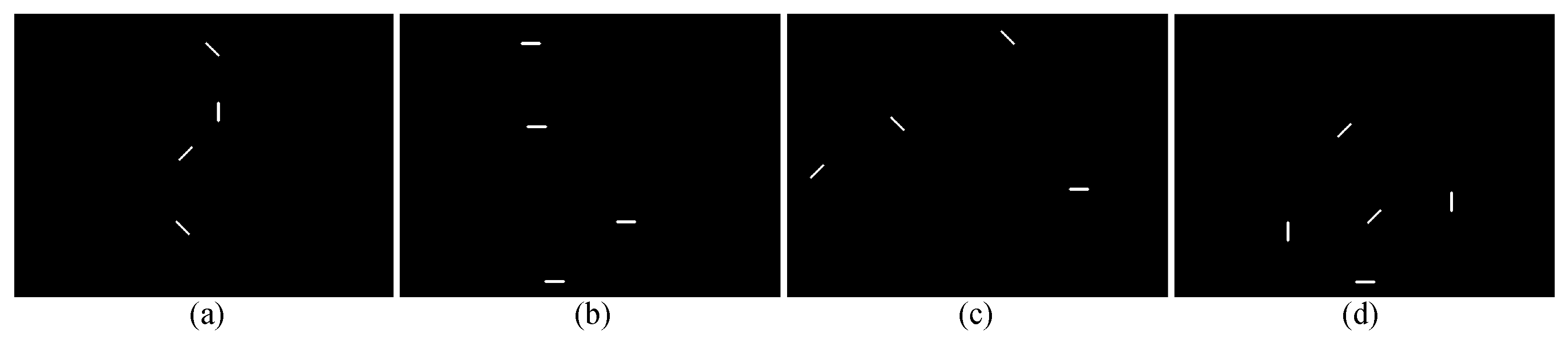

3.4.2. Classify Fish Swarm Patterns

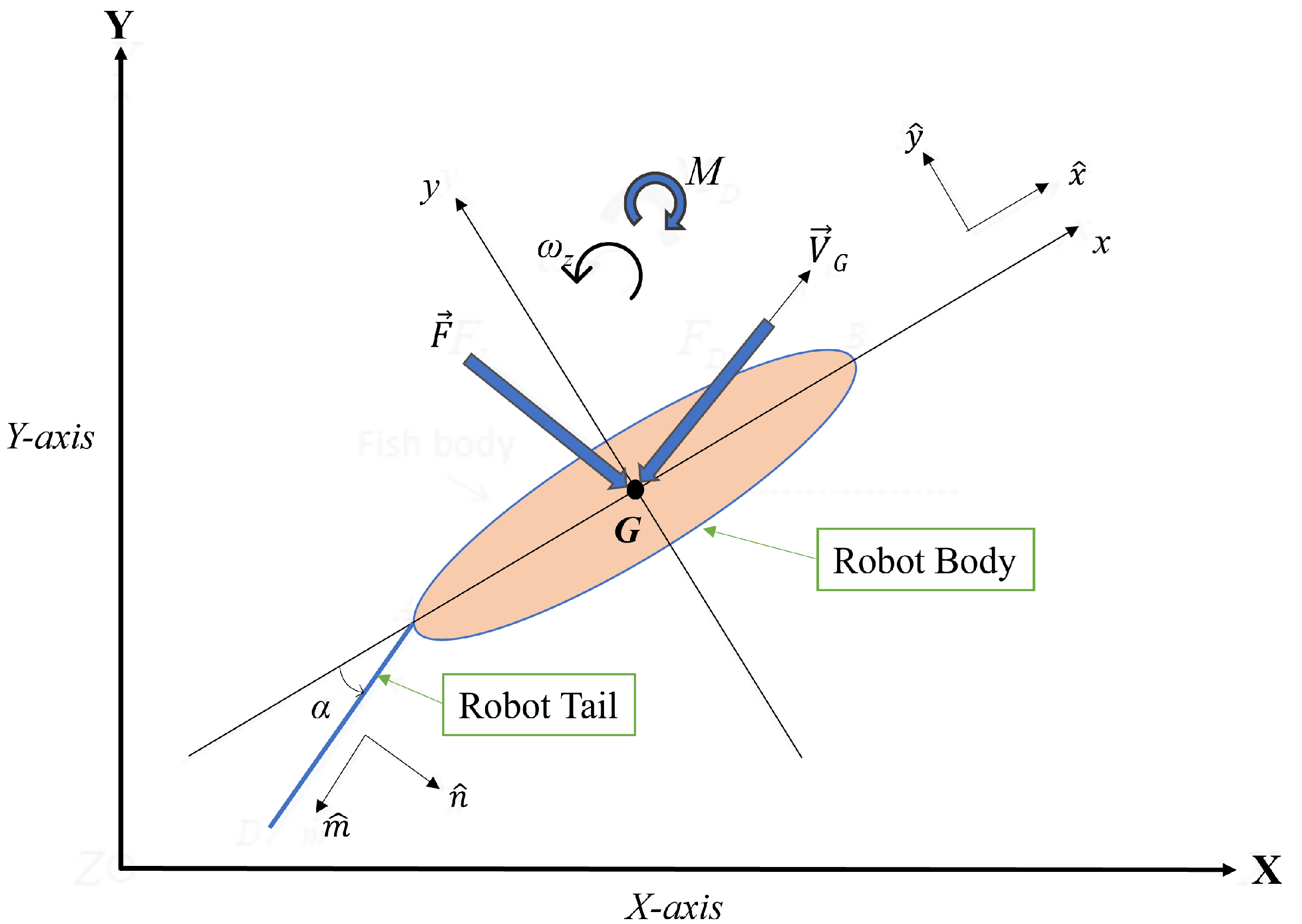

3.5. Dynamic Model of Robotic Fish

3.6. Data Collection

- Part 1: information about the participant (5 questions);

- Part 2: animacy of the robotic fish (3 questions);

- Part 3: design of the robotic fish (3 questions);

- Part 4: the effectiveness of robotic fish behaviors (3 questions);

- Part 5: the overall impression of robotic fish (3 questions).

4. Results and Discussion

4.1. Fish Detection

4.2. Fish Swarm Pattern Recognition

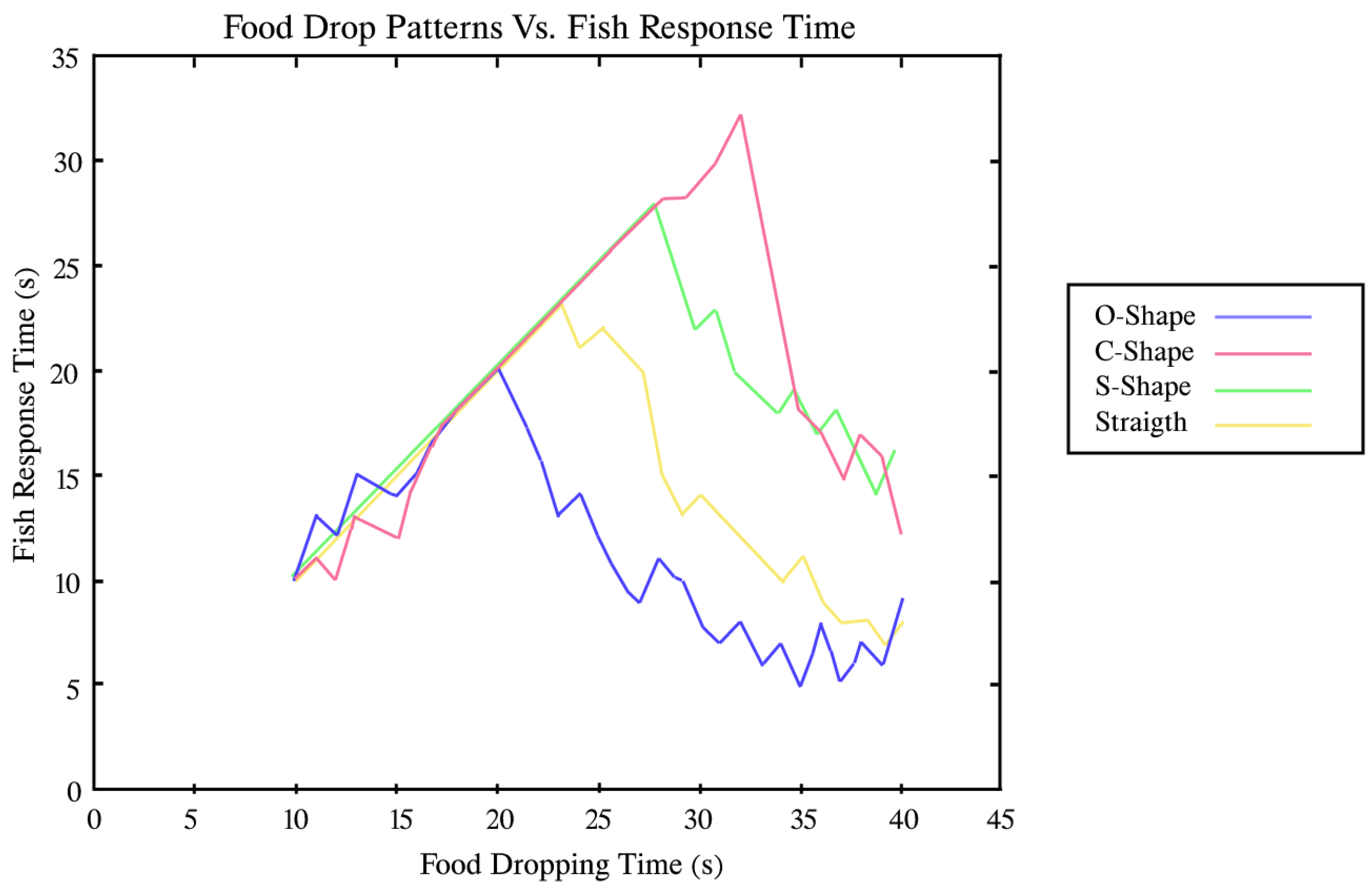

4.3. Food Drop Patterns

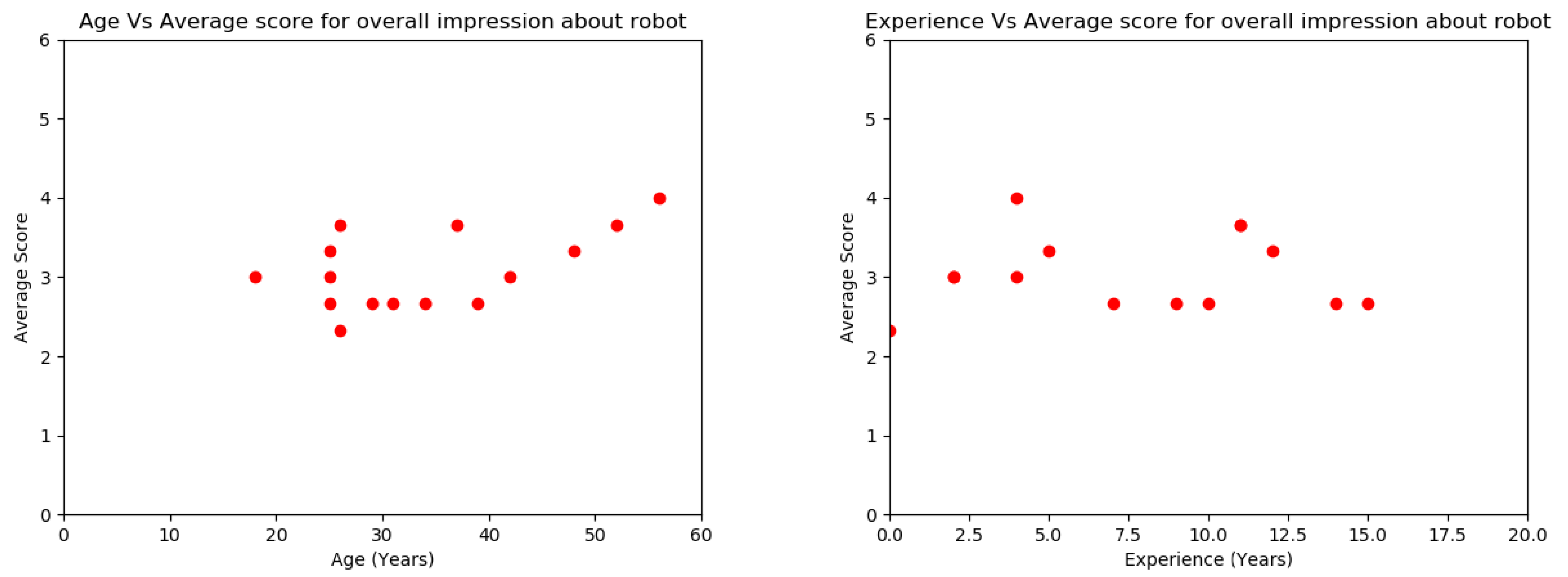

4.4. Data Collected through the Questionnaire

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. DeSchriver, M.M.; Riddick, C.C. Effects of watching aquariums on elders’ stress. Anthrozoös 1990, 4, 44–48.

2. Riddick, C.C. Health, aquariums, and the non-institutionalized elderly. Marriage Fam. Rev. 1985, 8, 163–173.

3. Liu, J.; Hu, H. Biological inspiration: From carangiform fish to multi-joint robotic fish. J. Bionic Eng. 2010, 7, 35–48.

4. Barker, S.B.; Rasmussen, K.G.; Best, A.M. Effect of aquariums on electroconvulsive therapy patients. Anthrozoös 2003, 16, 229–240.

5. Cocker, H. The positive effects of aquarium visits on children’s behaviour: A behavioural observation. Plymouth Stud. Sci. 2012, 5, 165–181.

6. Aleström, P.; D’Angelo, L.; Midtlyng, P.J.; Schorderet, D.F.; Schulte-Merker, S.; Sohm, F.; Warner, S. Zebrafish: Housing and husbandry recommendations. Lab. Anim. 2020, 54, 213–224.

7. Torgersen, T. Ornamental Fish and Aquaria. In The Welfare of Fish; Springer International Publishing: Cham, Switzerland, 2020; pp. 363–373.

8. Deborah, C.; White, M.P.; Pahl, S.; Nichols, W.J.; Depledge, M.H. Marine biota and psychological well-being: A preliminary examination of dose-response effects in an aquarium setting. Environ. Behav. 2016, 48, 1242–1269.

9. Clements, H.; Valentin, S.; Jenkins, N.; Rankin, J.; Baker, J.S.; Gee, N.; Snellgrove, D.; Sloman, K. The effects of interacting with fish in aquariums on human health and well-being: A systematic review. PLoS ONE 2019, 14, e0220524.

10. Foresti, G.L. Visual inspection of sea bottom structures by an autonomous underwater vehicle. IEEE Trans. Syst. Man Cybern. Part B 2001, 31, 691–705.

11. Johannsson, H.; Kaess, M.; Englot, B.; Hover, F.; Leonard, J. Imaging sonar-aided navigation for autonomous underwater harbor surveillance. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 4396–4403.

12. Chutia, S.; Kakoty, N.M.; Deka, D. A review of underwater robotics, navigation, sensing techniques and applications. In Proceedings of the Conference on Advances in Robotics, New Delhi, India, 28 June–2 July 2017; pp. 1–6.

13. Li, Y.; Xu, Y.; Wu, Z.; Ma, L.; Guo, M.; Li, Z.; Li, Y. A comprehensive review on fish-inspired robots. Int. J. Adv. Robot. Syst. 2022, 19.

14. Kidd, A.H.; Kidd, R.M. Benefits, problems, and characteristics of home aquarium owners. Psychol. Rep. 1999, 84, 998–1004.

15. Gee, N.R.; Reed, T.; Whiting, A.; Friedmann, E.; Snellgrove, D.; Sloman, K.A. Observing live fish improves perceptions of mood, relaxation and anxiety, but does not consistently alter heart rate or heart rate variability. Int. J. Environ. Res. Public Health 2019, 16, 3113.

16. Kooijman, S. Social interactions can affect feeding behaviour of fish in tanks. J. Sea Res. 2009, 62, 175–178.

17. Mason, R.; Burdick, J.W. Experiments in carangiform robotic fish locomotion. In Proceedings of the 2000 ICRA, Millennium Conference, IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000; Volume 1, pp. 428–435.

18. Yu, J.; Tan, M.; Wang, S.; Chen, E. Development of a biomimetic robotic fish and its control algorithm. IEEE Trans. Syst. Man Cybern. Part B 2004, 34, 1798–1810.

19. MIT News. MIT’s Robotic Fish Takes First Swim. MIT News, 13 September 1994.

20. Zhao, W.; Yu, J.; Fang, Y.; Wang, L. Development of multi-mode biomimetic robotic fish based on central pattern generator. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 3891–3896.

21. Aureli, M.; Kopman, V.; Porfiri, M. Free-locomotion of underwater vehicles actuated by ionic polymer metal composites. IEEE/ASME Trans. Mechatron. 2009, 15, 603–614.

22. Low, K.; Willy, A. Biomimetic motion planning of an undulating robotic fish fin. J. Vib. Control. 2006, 12, 1337–1359.

23. Kopman, V.; Laut, J.; Acquaviva, F.; Rizzo, A.; Porfiri, M. Dynamic modeling of a robotic fish propelled by a compliant tail. IEEE J. Ocean. Eng. 2014, 40, 209–221.

24. Kickstarter. BIKI: First Bionic Wireless Underwater Fish Drone; Kickstarter: Brooklyn, NY, USA, 2018.

25. Cazenille, L.; Chemtob, Y.; Bonnet, F.; Gribovskiy, A.; Mondada, F.; Bredeche, N.; Halloy, J. Automated calibration of a biomimetic space-dependent model for zebrafish and robot collective behaviour in a structured environment. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Stanford, CA, USA, 26–28 July 2017; pp. 107–118.

26. Zivkovic, Z. Improved adaptive Gaussian mixture model for background subtraction. In Proceedings of the 17th International Conference on Pattern Recognition, Los Alamitos, CA, USA, 26–26 August 2004; Volume 2, pp. 28–31.

27. Yi, Z.; Liangzhong, F. Moving object detection based on running average background and temporal difference. In Proceedings of the 2010 IEEE International Conference on Intelligent Systems and Knowledge Engineering, Hangzhou, China, 15–16 November 2010; pp. 270–272.

28. Sun, X.; Shi, J.; Dong, J.; Wang, X. Fish recognition from low-resolution underwater images. In Proceedings of the 2016 9th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Datong, China, 15–17 October 2016; pp. 471–476.

29. Marini, S.; Fanelli, E.; Sbragaglia, V.; Azzurro, E.; Fernandez, J.D.R.; Aguzzi, J. Tracking fish abundance by underwater image recognition. Sci. Rep. 2018, 8, 13748.

30. Yu, J.; Wu, Z.; Yang, X.; Yang, Y.; Zhang, P. Underwater Target Tracking Control of an Untethered Robotic Fish With a Camera Stabilizer. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 6523–6534.

31. Spampinato, C.; Chen-Burger, Y.H.; Nadarajan, G.; Fisher, R.B. Detecting, Tracking and Counting Fish in Low Quality Unconstrained Underwater Videos. In Proceedings of the VISAPP 2008: Third International Conference on Computer Vision Theory and Applications, Funchal, Portugal, 22–25 January 2008; Volume 2.

32. Morais, E.F.; Campos, M.F.M.; Padua, F.L.; Carceroni, R.L. Particle filter-based predictive tracking for robust fish counting. In Proceedings of the XVIII Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI’05), Natal, Brazil, 9–12 October 2005; pp. 367–374.

33. Katzschmann, R.K.; DelPreto, J.; MacCurdy, R.; Rus, D. Exploration of underwater life with an acoustically controlled soft robotic fish. Sci. Robot. 2018, 3, eaar3449.

34. Chen, Y.; Qiao, J.; Liu, J.; Zhao, R.; An, D.; Wei, Y. Three-dimensional path following control system for net cage inspection using bionic robotic fish. Inf. Process. Agric. 2022, 9, 100–111.

35. Romano, D.; Stefanini, C. Robot-Fish Interaction Helps to Trigger Social Buffering in Neon Tetras: The Potential Role of Social Robotics in Treating Anxiety. Int. J. Soc. Robot. 2022, 14, 963–972.

36. Angani, A.; Lee, J.; Talluri, T.; Lee, J.; Shin, K. Human and Robotic Fish Interaction Controlled Using Hand Gesture Image Processing. Sensors Mater. 2020, 32, 3479–3490.

37. Reichardt, M.; Föhst, T.; Berns, K. An overview on framework design for autonomous robots. IT-Inf. Technol. 2015, 57, 75–84.

38. Wu, Z.; Yu, J.; Tan, M.; Zhang, J. Kinematic comparison of forward and backward swimming and maneuvering in a self-propelled sub-carangiform robotic fish. J. Bionic Eng. 2014, 11, 199–212.

39. Butail, S.; Bartolini, T.; Porfiri, M. Collective response of zebrafish shoals to a free-swimming robotic fish. PLoS ONE 2013, 8, e76123.

40. Wahab, M.; Ahmed, Z.; Islam, M.A.; Haq, M.; Rahmatullah, S. Effects of introduction of common carp, Cyprinus carpio (L.), on the pond ecology and growth of fish in polyculture. Aquac. Res. 1995, 26, 619–628.

41. Piccardi, M. Background subtraction techniques: A review. In Proceedings of the 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No.04CH37583), The Hague, The Netherlands, 10–13 October 2004; Volume 4, pp. 3099–3104.

42. Lighthill, M.J. Large-amplitude elongated-body theory of fish locomotion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1971, 179, 125–138.

43. Wang, J.; McKinley, P.K.; Tan, X. Dynamic modeling of robotic fish with a base-actuated flexible tail. J. Dyn. Syst. Meas. Control. 2015, 137.

44. Novikov, S.P.; Shmel’tser, I. Periodic solutions of Kirchhoff’s equations for the free motion of a rigid body in a fluid and the extended theory of Lyusternik–Shnirel’man–Morse (LSM). I. Funktsional’nyi Analiz i ego Prilozheniya 1981, 15, 54–66.

45. Fossen, T.I.; Fjellstad, O.E. Nonlinear modelling of marine vehicles in 6 degrees of freedom. Math. Model. Syst. 1995, 1, 17–27.

46. Almero, V.J.; Concepcion, R.; Rosales, M.; Vicerra, R.R.; Bandala, A.; Dadios, E. An Aquaculture-Based Binary Classifier for Fish Detection Using Multilayer Artificial Neural Network. In Proceedings of the 2019 IEEE 11th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Laoag, Philippines, 29 November–1 December 2019; pp. 1–5.

47. Rekha, B.S.; Srinivasan, G.N.; Reddy, S.K.; Kakwani, D.; Bhattad, N. Fish Detection and Classification Using Convolutional Neural Networks. In Proceedings of the Computational Vision and Bio-Inspired Computing, Coimbatore, India, 25–26 September 2019; Smys, S., Tavares, J.M.R.S., Balas, V.E., Iliyasu, A.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 1221–1231.

48. Christensen, J.H.; Mogensen, L.V.; Galeazzi, R.; Andersen, J.C. Detection, Localization and Classification of Fish and Fish Species in Poor Conditions using Convolutional Neural Networks. In Proceedings of the 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV), Porto, Portugal, 6–9 November 2018; pp. 1–6.

49. Han, F.; Zhu, J.; Liu, B.; Zhang, B.; Xie, F. Fish Shoals Behavior Detection Based on Convolutional Neural Network and Spatiotemporal Information. IEEE Access 2020, 8, 126907–126926.

50. Li, X.; Tang, Y.; Gao, T. Deep but lightweight neural networks for fish detection. In Proceedings of the OCEANS 2017, Aberdeen, UK, 19–22 June 2017; pp. 1–5.

| Author | Data Size | Training:Testing Ratio | No. of Classes | No. of Layers | Accuracy |
|---|---|---|---|---|---|
| Almero et al. [46] | 369 | 2:1 | 2 | 4 | 79.00% |
| Rekha et al. [47] | 16,000 | 8:2 | 8 | 15 | 90.00% |
| Christensen et al. [48] | 13,124 | N/A | 3 | 6 | 66.7% |
| Han et al. [49] | 600 | 4:1 | 6 | 4 | 82% |

| n = 200 | Detected: Yes | Detected: No |
|---|---|---|
| Fish present: Yes | TP = 121 | FN = 27 |
| Fish present: No | FP = 17 | TN = 35 |

| Measure | Derivation | Value |
|---|---|---|
| Accuracy | ACC = (TP + TN)/(P + N) | 78.00% |
| Sensitivity | TPR = TP/(TP + FN) | 81.75% |
| Specificity | SPC = TN/(FP + TN) | 67.30% |
| Precision | PPV = TP/(TP + FP) | 87.68% |
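The derivations in the table above can be checked directly from the four confusion-matrix counts. The following is a minimal Python sketch, not the authors' code; the function name `binary_metrics` and the dictionary keys are illustrative choices. The counts (TP = 121, FN = 27, FP = 17, TN = 35) are taken from the fish-detection confusion matrix.

```python
def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute the four measures listed in the table from raw counts."""
    total = tp + fn + fp + tn  # P + N in the table's notation
    return {
        "accuracy": (tp + tn) / total,      # ACC = (TP + TN)/(P + N)
        "sensitivity": tp / (tp + fn),      # TPR = TP/(TP + FN)
        "specificity": tn / (fp + tn),      # SPC = TN/(FP + TN)
        "precision": tp / (tp + fp),        # PPV = TP/(TP + FP)
    }


# Counts from the fish-detection confusion matrix (n = 200).
metrics = binary_metrics(tp=121, fn=27, fp=17, tn=35)

for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Recomputing from the counts reproduces the tabulated values to within 0.01 percentage points; the small differences for sensitivity and specificity are consistent with the table truncating rather than rounding the last digit.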

| Actual (rows) / Classified (columns) | Pattern 1 | Pattern 2 | Pattern 3 | Pattern 4 |
|---|---|---|---|---|
| Pattern 1 | 49 | 8 | 15 | 0 |
| Pattern 2 | 2 | 73 | 6 | 13 |
| Pattern 3 | 8 | 7 | 42 | 0 |
| Pattern 4 | 0 | 8 | 1 | 18 |
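For a four-class confusion matrix such as the one above, overall accuracy and per-class recall and precision follow from the diagonal and the row and column sums. The sketch below is illustrative only: it assumes rows are actual patterns and columns are classified patterns, as the table labels suggest, and the helper names are hypothetical rather than taken from the paper.

```python
PATTERNS = ["Pattern 1", "Pattern 2", "Pattern 3", "Pattern 4"]

# Rows: actual pattern; columns: classified pattern (assumed orientation).
CONFUSION = [
    [49, 8, 15, 0],
    [2, 73, 6, 13],
    [8, 7, 42, 0],
    [0, 8, 1, 18],
]


def overall_accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_recall(matrix):
    """Diagonal entry divided by its row sum (all actual samples of that class)."""
    return [matrix[i][i] / sum(matrix[i]) for i in range(len(matrix))]


def per_class_precision(matrix):
    """Diagonal entry divided by its column sum (all samples classified as that class)."""
    return [
        matrix[i][i] / sum(row[i] for row in matrix)
        for i in range(len(matrix))
    ]


accuracy = overall_accuracy(CONFUSION)
recall = per_class_recall(CONFUSION)
precision = per_class_precision(CONFUSION)

print(f"overall accuracy: {accuracy:.1%}")
for name, r, p in zip(PATTERNS, recall, precision):
    print(f"{name}: recall {r:.1%}, precision {p:.1%}")
```

Under that orientation, the off-diagonal cells show where the confusion concentrates, for example actual Pattern 1 classified as Pattern 3 (15 samples) and actual Pattern 2 classified as Pattern 4 (13 samples).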

| Fish Swarm Pattern (rows) / Food Drop Pattern (columns) | S-Shape | C-Shape | O-Shape | Straight |
|---|---|---|---|---|
| Fish Schooling-Following | 26.7% | 23.3% | 20.0% | 30.0% |
| Fish Schooling-Parallel | 11.1% | 55.6% | 11.1% | 22.2% |
| Shoal | 13.6% | 9.1% | 40.9% | 36.4% |
| Fish Schooling-Tornado | 5.9% | 35.3% | 47.1% | 11.8% |
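One way to read the table above is to pick, for each fish swarm pattern, the food-drop pattern with the largest share. The sketch below does only that; it assumes each row is a percentage distribution over the four food-drop patterns for one swarm pattern (the rows sum to roughly 100%), and the names `SHARES` and `dominant_food_pattern` are hypothetical.

```python
FOOD_PATTERNS = ["S-shape", "C-shape", "O-shape", "Straight"]

# Percentages copied from the table; one row per fish swarm pattern.
SHARES = {
    "Fish Schooling-Following": [26.7, 23.3, 20.0, 30.0],
    "Fish Schooling-Parallel": [11.1, 55.6, 11.1, 22.2],
    "Shoal": [13.6, 9.1, 40.9, 36.4],
    "Fish Schooling-Tornado": [5.9, 35.3, 47.1, 11.8],
}


def dominant_food_pattern(row):
    """Return (food pattern name, share) for the largest entry in a row."""
    best = max(range(len(row)), key=lambda i: row[i])
    return FOOD_PATTERNS[best], row[best]


dominant = {swarm: dominant_food_pattern(row) for swarm, row in SHARES.items()}

for swarm, (food, share) in dominant.items():
    print(f"{swarm}: {food} ({share}%)")
```

This is a descriptive summary of the tabulated shares, not a reconstruction of how the robotic fish selects a food-drop pattern.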

| Food Pattern Name | Maximum Fish Response Time (s) |
|---|---|
| Straight pattern | 22 |
| S-shape pattern | 28 |
| C-shape pattern | 32 |
| O-shape pattern | 19 |

| User Feedback | Strongly Agree | Agree | Not Sure | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| Part 2 | 20.0% | 48.9% | 31.1% | 0.0% | 0.0% |
| Part 3 | 51.1% | 37.8% | 11.1% | 0.0% | 0.0% |
| Part 4 | 22.2% | 26.7% | 33.3% | 17.8% | 0.0% |
| Part 5 | 35.6% | 42.2% | 22.2% | 0.0% | 0.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Manawadu, U.A.; De Zoysa, M.; Perera, J.D.H.S.; Hettiarachchi, I.U.; Lambacher, S.G.; Premachandra, C.; De Silva, P.R.S. Altering Fish Behavior by Sensing Swarm Patterns of Fish in an Artificial Aquatic Environment Using an Interactive Robotic Fish. Sensors 2023, 23, 1550. https://doi.org/10.3390/s23031550
