Face Mask Identification Using Spatial and Frequency Features in Depth Image from Time-of-Flight Camera

Abstract

1. Introduction

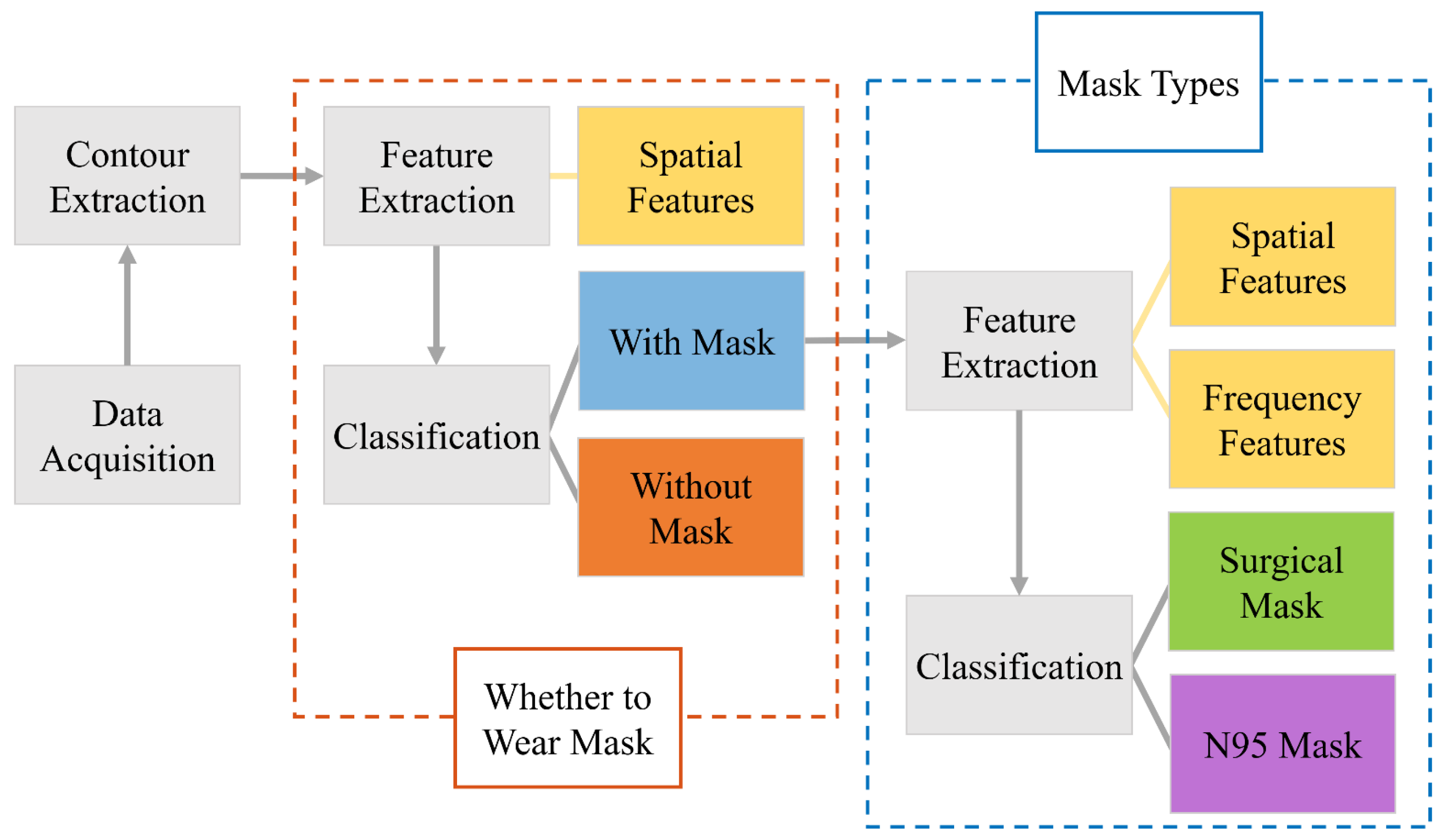

2. Method

2.1. Facial Contour Extraction

2.2. Feature Extraction and Classification of Whether to Wear Mask

2.3. Feature Extraction and Classification of Mask Types

3. Results

4. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Cheng, Y.; Ma, N.; Witt, C.; Rapp, S.; Wild, P.S.; Andreae, M.O.; Pöschl, U.; Su, H. Face Masks Effectively Limit the Probability of SARS-CoV-2 Transmission. Science 2021, 372, 1439–1443.

2. Mbunge, E.; Chitungo, I.; Dzinamarira, T. Unbundling the Significance of Cognitive Robots and Drones Deployed to Tackle COVID-19 Pandemic: A Rapid Review to Unpack Emerging Opportunities to Improve Healthcare in Sub-Saharan Africa. Cogn. Robot. 2021, 1, 205–213.

3. Goar, V.; Sharma, A.; Yadav, N.S.; Chowdhury, S.; Hu, Y.-C. IoT-Based Smart Mask Protection against the Waves of COVID-19. J. Ambient Intell. Hum. Comput. 2022, 1–12.

4. Rahim, M.S.A.; Yakub, F.; Hanapiah, A.R.M.; Rashid, M.Z.A. Development of Low-Cost Thermal Scanner and Mask Detection for Covid-19. In Proceedings of the 2021 60th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Tokyo, Japan, 8–10 September 2021.

5. Hussain, S.; Yu, Y.; Ayoub, M.; Khan, A.; Rehman, R.; Wahid, J.A.; Hou, W. IoT and Deep Learning Based Approach for Rapid Screening and Face Mask Detection for Infection Spread Control of COVID-19. Appl. Sci. 2021, 11, 3495.

6. Zhao, Z.-Q.; Zheng, P.; Xu, S.; Wu, X. Object Detection with Deep Learning: A Review 2019. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

7. Walia, I.S.; Kumar, D.; Sharma, K.; Hemanth, J.D.; Popescu, D.E. An Integrated Approach for Monitoring Social Distancing and Face Mask Detection Using Stacked ResNet-50 and YOLOv5. Electronics 2021, 10, 2996.

8. Yu, J.; Zhang, W. Face Mask Wearing Detection Algorithm Based on Improved YOLO-V4. Sensors 2021, 21, 3263.

9. Talahua, J.S.; Buele, J.; Calvopiña, P.; Varela-Aldás, J. Facial Recognition System for People with and without Face Mask in Times of the COVID-19 Pandemic. Sustainability 2021, 13, 6900.

10. Nagrath, P.; Jain, R.; Madan, A.; Arora, R.; Kataria, P.; Hemanth, J. SSDMNV2: A Real Time DNN-Based Face Mask Detection System Using Single Shot Multibox Detector and MobileNetV2. Sustain. Cities Soc. 2021, 66, 102692.

11. Su, X.; Gao, M.; Ren, J.; Li, Y.; Dong, M.; Liu, X. Face Mask Detection and Classification via Deep Transfer Learning. Multimed. Tools Appl. 2022, 81, 4475–4494.

12. Jiang, X.; Gao, T.; Zhu, Z.; Zhao, Y. Real-Time Face Mask Detection Method Based on YOLOv3. Electronics 2021, 10, 837.

13. Kumar, A.; Kaur, A.; Kumar, M. Face Detection Techniques: A Review. Artif. Intell. Rev. 2019, 52, 927–948.

14. Cao, Z.; Shao, M.; Xu, L.; Mu, S.; Qu, H. MaskHunter: Real-time Object Detection of Face Masks during the COVID-19 Pandemic. IET Image Process. 2020, 14, 4359–4367.

15. Daneshmand, M.; Helmi, A.; Avots, E.; Noroozi, F.; Alisinanoglu, F.; Arslan, H.S.; Gorbova, J.; Haamer, R.E.; Ozcinar, C.; Anbarjafari, G. 3D Scanning: A Comprehensive Survey. arXiv 2018, arXiv:1801.08863.

16. Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation. Sensors 2009, 9, 568–601.

17. Zhang, S. High-Speed 3D Shape Measurement with Structured Light Methods: A Review. Opt. Lasers Eng. 2018, 106, 119–131.

18. He, Y.; Chen, S. Recent Advances in 3D Data Acquisition and Processing by Time-of-Flight Camera. IEEE Access 2019, 7, 12495–12510.

19. Cippitelli, E.; Fioranelli, F.; Gambi, E.; Spinsante, S. Radar and RGB-Depth Sensors for Fall Detection: A Review. IEEE Sensors J. 2017, 17, 3585–3604.

20. Zanuttigh, P.; Marin, G.; Dal, M.C.; Dominio, F.; Minto, L.; Cortelazzo, G.M. Time-of-Flight and Structured Light Depth Cameras: Technology and Applications, 1st ed.; Springer: Cham, Switzerland, 2016; pp. 199–350.

21. Horaud, R.; Hansard, M.; Evangelidis, G.; Ménier, C. An Overview of Depth Cameras and Range Scanners Based on Time-of-Flight Technologies. Mach. Vis. Appl. 2016, 27, 1005–1020.

22. Aggarwal, J.K.; Xia, L. Human Activity Recognition from 3D Data: A Review. Pattern Recognit. Lett. 2014, 48, 70–80.

23. Xu, T.; An, D.; Wang, Z.; Jiang, S.; Meng, C.; Zhang, Y.; Wang, Q.; Pan, Z.; Yue, Y. 3D Joints Estimation of the Human Body in Single-Frame Point Cloud. IEEE Access 2020, 8, 178900–178908.

24. Bae, J.-H.; Jo, H.; Kim, D.-W.; Song, J.-B. Grasping System for Industrial Application Using Point Cloud-Based Clustering. In Proceedings of the 2020 20th International Conference on Control, Automation and Systems (ICCAS), Busan, Republic of Korea, 13 October 2020.

25. Zengeler, N.; Kopinski, T.; Handmann, U. Hand Gesture Recognition in Automotive Human–Machine Interaction Using Depth Cameras. Sensors 2018, 19, 59.

26. Gai, J.; Xiang, L.; Tang, L. Using a Depth Camera for Crop Row Detection and Mapping for Under-Canopy Navigation of Agricultural Robotic Vehicle. Comput. Electron. Agric. 2021, 188, 106301.

27. Eric, N.; Jang, J.-W. Kinect Depth Sensor for Computer Vision Applications in Autonomous Vehicles. In Proceedings of the 2017 Ninth International Conference on Ubiquitous and Future Networks (ICUFN), Milan, Italy, 4–7 July 2017.

28. Procházka, A.; Schätz, M.; Vyšata, O.; Vališ, M. Microsoft Kinect Visual and Depth Sensors for Breathing and Heart Rate Analysis. Sensors 2016, 16, 996.

29. Luna, C.A.; Losada-Gutiérrez, C.; Fuentes-Jiménez, D.; Mazo, M. Fast Heuristic Method to Detect People in Frontal Depth Images. Expert Syst. Appl. 2021, 168, 114483.

30. Das, S.; Sarkar, S.; Das, A.; Das, S.; Chakraborty, P.; Sarkar, J. A Comprehensive Review of Various Categories of Face Masks Resistant to Covid-19. Clin. Epidemiol. Glob. Health 2021, 12, 100835.

| Category | Precision | Recall | F1 Score |
|---|---|---|---|
| Without a mask | 0.906 | 0.992 | 0.947 |
| With a mask | 0.996 | 0.946 | 0.970 |

| Category | Precision | Recall | F1 Score |
|---|---|---|---|
| With a surgical mask | 0.847 | 0.909 | 0.877 |
| With an N95 mask | 0.910 | 0.842 | 0.875 |
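
The F1 scores in the two tables above can be cross-checked against the reported precision and recall. The following is a minimal sketch, assuming F1 is the usual harmonic mean of precision and recall (this section does not state the formula explicitly). The names `f1_score`, `REPORTED_ROWS`, and `recomputed_f1` are illustrative and do not come from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the two tables above.
REPORTED_ROWS = {
    "Without a mask": (0.906, 0.992, 0.947),
    "With a mask": (0.996, 0.946, 0.970),
    "With a surgical mask": (0.847, 0.909, 0.877),
    "With an N95 mask": (0.910, 0.842, 0.875),
}


def recomputed_f1(rows=REPORTED_ROWS) -> dict:
    """Recompute F1 for every row, rounded to three decimals like the tables."""
    return {
        name: round(f1_score(precision, recall), 3)
        for name, (precision, recall, _reported) in rows.items()
    }


if __name__ == "__main__":
    for name, value in recomputed_f1().items():
        reported = REPORTED_ROWS[name][2]
        status = "matches" if value == reported else "differs from"
        print(f"{name}: recomputed F1 = {value:.3f} ({status} reported {reported:.3f})")
```

Under that assumption, each recomputed value agrees with the tabulated F1 score to three decimal places.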

| Work | Method | Data | Distinguished Type | Accuracy | Efficiency |
|---|---|---|---|---|---|
| Ours | Feature-based | Depth | With/Without | 96.9% | 31.55 FPS |
| | | | Mask Type | 87.85% | |
| Cao et al. [14] | YOLOv4-large | RGB | With/Without | 94% | 18 FPS |
| | | | Night Time | 77.9% | |
| Nagrath et al. [10] | SSDMNV2 | RGB | With/Without | 92.64% | 15.71 FPS |
| Jiang et al. [12] | SE-YOLOv3 | RGB | With/Without/Correct Wearing | 73.7% | 15.63 FPS |
| Walia et al. [7] | YOLOv5 | RGB | With/Without | 98% | 32 FPS |
| Su et al. [11] | Transfer Learning and Efficient-Yolov3 | RGB | With/Without | 96.03% | 15 FPS |
| | | | Mask Type | 97.84% | |
| Yu et al. [8] | YOLO-v4 | RGB | With/Without | 98.3% | 54.57 FPS |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, X.; Xu, T.; An, D.; Sun, L.; Wang, Q.; Pan, Z.; Yue, Y. Face Mask Identification Using Spatial and Frequency Features in Depth Image from Time-of-Flight Camera. Sensors 2023, 23, 1596. https://doi.org/10.3390/s23031596
