The Detection of Yarn Roll’s Margin in Complex Background

Abstract

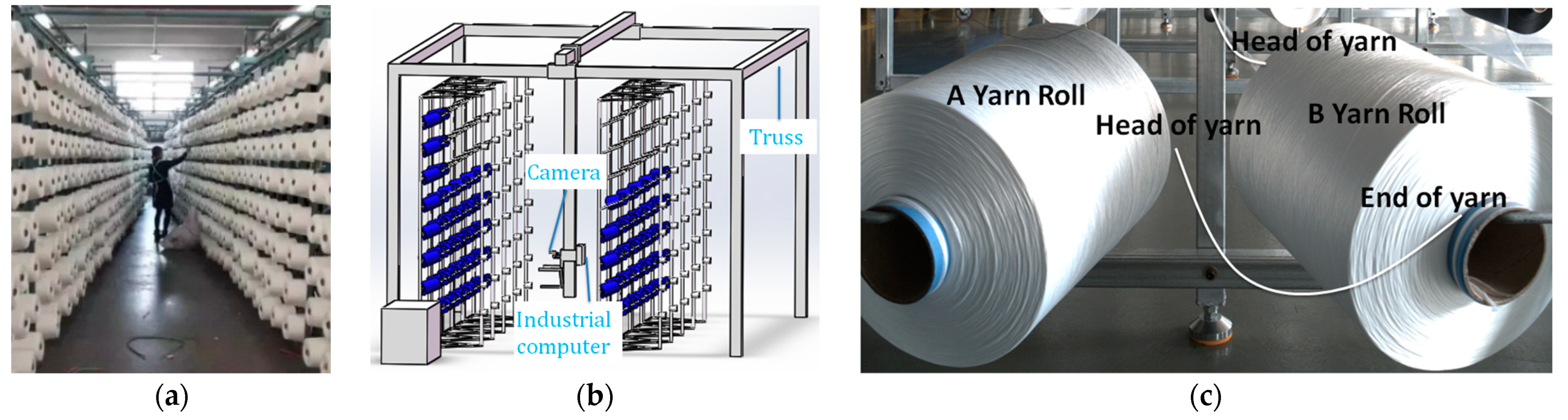

1. Introduction

2. Related Work

- (1) Monocular vision method: Du, C.J., and Sun, D.W. propose an automatic method [18] to estimate the surface area and volume of ham. They extract the ham through image-by-image segmentation and edge detection, but the method cannot handle a complex background. Similarly, Jing, H., Li, D., and Duan, Q. present a method [19] that classifies fish species from color and texture features using a multi-class support vector machine (MSVM). In the present paper, yarn rolls differ in color and texture, so this method cannot be used directly. It also requires a plain background and is not very accurate for volume or class detection.

- (2) Stereo vision method: compared with a monocular camera, a stereo camera can measure an object’s three-dimensional information, so its measurements are more accurate; it is also widely used in industry. However, a stereo camera must be calibrated before use, and the three-dimensional information is recovered through the intrinsic and extrinsic matrices, which makes the process more complicated. Molinier, T., Fofi, D., and Bingham, P.R. use two vision systems to extract complementary 3D fire points [20]. The obtained data are projected into the same reference frame and used to build a global form of the fire front. From the obtained 3D fire points, a three-dimensional surface is rendered and the fire volume is estimated. Sheng, J., Zhao, H., and Bu, P. propose four-direction global matching with a cost volume update scheme [21] to cope with textureless regions and occlusion. Experimental results show that their method is highly efficient.

- (3) Edge detection method: the algorithms commonly used for edge detection have some defects. For example, the Canny algorithm [29,30,31,32,33] uses Gaussian filtering for smoothing and noise reduction, which considers only the similarity of pixels in the spatial domain, so the filtering loses some useful weak edges. In addition, the size of the Gaussian convolution kernel is chosen by hand: if it is too small, image noise cannot be effectively suppressed, and if it is too large, the smoothed image is blurred. The Canny algorithm computes gradients with a 2 × 2 convolution kernel in the horizontal and vertical directions only, which is too small to extract complete edge information, is too sensitive to noise, and easily captures pseudo-edges. The traditional Canny algorithm also relies on human experience to select the high and low thresholds; fixed thresholds cannot take local feature information into account, so they introduce error and lack adaptability.

- (4) Deep learning method: as an important branch of machine vision, deep learning has developed rapidly in recent years and is used more and more widely in industry. Yolo [29,30,31,32] is a type of neural network used to detect targets online. It transforms object detection into a regression problem, so the position in the image, the category of the object, and its confidence are obtained directly from a single inference. Yolo has no explicit region proposal stage; it integrates that process into the network, which reduces the operational complexity of detection and improves the efficiency of the algorithm to a certain extent.
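The kernel-size trade-off of Gaussian smoothing mentioned for the Canny algorithm can be illustrated on a one-dimensional scan line. The following is a minimal NumPy sketch under stated assumptions: the synthetic step edge, the alternating noise pattern, and the two (size, sigma) pairs are hypothetical values chosen for illustration, not settings taken from this paper.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Return a normalized 1-D Gaussian kernel (size must be odd)."""
    half = size // 2
    x = np.arange(-half, half + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def smooth(signal: np.ndarray, size: int, sigma: float) -> np.ndarray:
    """Smooth a 1-D signal, replicating the border samples."""
    half = size // 2
    padded = np.pad(signal, half, mode="edge")
    return np.convolve(padded, gaussian_kernel(size, sigma), mode="valid")


# Hypothetical scan line: a step edge at index 50 and alternating +/-0.1 noise.
n = 100
step = (np.arange(n) >= 50).astype(float)
noise = 0.1 * np.where(np.arange(n) % 2 == 0, 1.0, -1.0)


def residual_noise(size: int, sigma: float) -> float:
    """Peak noise amplitude left after smoothing, measured away from the edge."""
    return float(np.max(np.abs(smooth(noise, size, sigma)[20:40])))


def edge_width(size: int, sigma: float) -> int:
    """Number of samples the smoothed step spends between 5% and 95%."""
    s = smooth(step, size, sigma)
    return int(np.sum((s > 0.05) & (s < 0.95)))


small = (3, 0.5)   # assumed small kernel
large = (15, 3.0)  # assumed large kernel

for name, (size, sigma) in (("small", small), ("large", large)):
    print(name, residual_noise(size, sigma), edge_width(size, sigma))
```

In this toy setting the small kernel leaves more of the noise in place, while the large kernel spreads the edge over more samples, which is the trade-off that makes a hand-picked kernel size problematic.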

3. Yarn Roll’s Margin Detection Method

3.1. Yolo Model

3.2. Contours Extraction and Process

3.2.1. Perspective Transformation

3.2.2. Detecting the Yarn Roll’s Center

- (1) Transform the raw image into a gray image;

- (2) Design circle filters with different diameters according to the features of the circle in the image. Figure 7a,b show two circle filters designed to detect a circle seven pixels in diameter. The bobbin’s diameter is about 60–100 pixels, so we design four filters with diameters of 50 and 30 pixels;

- (3) Slide the filter over the gray image with a fixed step to compute the convolution and obtain the x-gradient image, then obtain the y-gradient image in the same way. The x-gradient and y-gradient images are shown in Figure 8b,c;

- (4) Apply an AND operation to the corresponding pixel values of the x-gradient image and the y-gradient image to obtain the gradient image, which is shown in Figure 8d;

- (5) Apply the “erode” and “dilate” operations to the gradient image to obtain the result image, which is shown in Figure 8e;

- (6) Detect contours in the result image. The largest contour is the yarn roll’s center; it is drawn in the raw and gradient images, which are shown in Figure 8f,g.
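Steps (4) to (6) above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation: the circle filters of steps (2) and (3) are not reproduced, so two hypothetical binary response maps (`x_response`, `y_response`) stand in for the x-gradient and y-gradient images; a 3 × 3 structuring element is assumed for "erode" and "dilate"; and the largest 4-connected region is used as a stand-in for the largest contour.

```python
from collections import deque

import numpy as np


def erode(mask: np.ndarray) -> np.ndarray:
    """3x3 binary erosion: a pixel survives only if its whole neighbourhood is set."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.ones((h, w), dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out &= padded[dy:dy + h, dx:dx + w]
    return out


def dilate(mask: np.ndarray) -> np.ndarray:
    """3x3 binary dilation: a pixel is set if any neighbour is set."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def largest_region(mask: np.ndarray):
    """Return (area, (row, col) centroid) of the largest 4-connected region."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    best_area, best_centroid = 0, None
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or seen[y, x]:
                continue
            queue, pixels = deque([(y, x)]), []
            seen[y, x] = True
            while queue:  # breadth-first flood fill of one region
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) > best_area:
                best_area = len(pixels)
                best_centroid = tuple(float(v) for v in np.mean(pixels, axis=0))
    return best_area, best_centroid


# Hypothetical response maps: a disc (the center) plus spurious responses.
yy, xx = np.mgrid[0:40, 0:40]
disc = (yy - 20) ** 2 + (xx - 20) ** 2 <= 36
x_response = disc.copy()
y_response = disc.copy()
x_response[5, 30] = y_response[5, 30] = True  # isolated speck in both maps
x_response[30, 2:10] = True                   # streak present in the x map only

combined = x_response & y_response            # step (4): AND of the two maps
opened = dilate(erode(combined))              # step (5): erode, then dilate
area, centroid = largest_region(opened)       # step (6): largest region
print(area, centroid)
```

The AND operation discards responses that appear in only one map, and erosion followed by dilation removes the isolated speck that survives the AND, so the largest remaining region is the disc.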

3.2.3. Extracting the Yarn Roll’s Contours

- (1) We transform the RGB image into a gray image, which is shown in Figure 9a;

- (2)

- (3) We convert the gradient image into a binary image, which is shown in Figure 9c;

- (4) We use OpenCV’s “findContours” function to process the binary image and obtain the contour points, which are shown in Figure 9d; the blue points are extracted from the binary image;

- (5)
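The described steps (gray image, gradient image, binary image, contour points) can be sketched as follows. This is a minimal NumPy sketch under several assumptions that are not taken from the text: the gradient image is computed with central differences (the text does not state which operator is used), the threshold of 40 is arbitrary, and `boundary_points` is a simple stand-in for OpenCV's `findContours` that returns unordered boundary pixels rather than ordered contours.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an RGB image to gray with the common BT.601 luminance weights."""
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def gradient_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude from central differences (assumed operator)."""
    gy, gx = np.gradient(gray.astype(float))
    return np.hypot(gx, gy)


def boundary_points(binary: np.ndarray) -> np.ndarray:
    """Return (x, y) of foreground pixels that touch the background (4-neighbourhood)."""
    p = np.pad(binary, 1, mode="constant", constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    ys, xs = np.nonzero(binary & ~interior)
    return np.stack([xs, ys], axis=1)


# Hypothetical test image: a bright disc of radius 20 on a dark background.
yy, xx = np.mgrid[0:64, 0:64]
rgb = np.full((64, 64, 3), 30, dtype=np.uint8)
rgb[(yy - 32) ** 2 + (xx - 32) ** 2 <= 400] = 200

gray = to_gray(rgb)                         # gray image
binary = gradient_magnitude(gray) > 40.0    # gradient image, then binary image
points = boundary_points(binary)            # contour points

# Distance of every contour point from the known disc center.
radii = np.hypot(points[:, 0] - 32, points[:, 1] - 32)
print(len(points), float(radii.mean()))
```

On this synthetic disc the contour points cluster around the true radius, which is the property the later radius estimation relies on.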

3.3. Fusing Diameter Detected by Yolo and Contours

- (1) A weight value is calculated. First, the maximum of the density curve is obtained through the above steps, and the peak of the corresponding histogram is located within ±5 pixels of that maximum. Second, the mean value of the histogram is calculated. Third, the weight value is calculated using Equation (6), where m is the mean value of the histogram and p is the peak value of the histogram.

- (2) The weighted average is calculated using Equation (7), where r is the bobbin’s radius, R is the yarn roll’s radius, and the remaining two terms are the bobbin’s radius detected by Yolo and the bobbin’s radius detected by contours.
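The quantities that feed the weight (the maximum of the density curve, the histogram peak near it, and the histogram mean) can be sketched as below. This is an illustrative sketch under stated assumptions: the function name `density_peak_stats`, the 1-pixel bin width, the Gaussian kernel, and its bandwidth are hypothetical choices, and m is interpreted here as the mean bin count. Equations (6) and (7) are not reproduced, so the weight and the fused radius are deliberately not computed.

```python
import numpy as np


def density_peak_stats(radii, bandwidth: float = 2.0, window: int = 5) -> dict:
    """Locate the density maximum and the histogram peak within +/- `window` bins."""
    radii = np.asarray(radii, dtype=float)
    lo, hi = int(np.floor(radii.min())), int(np.ceil(radii.max()))
    edges = np.arange(lo, hi + 2)               # 1-pixel bins (assumption)
    hist, _ = np.histogram(radii, bins=edges)
    centers = edges[:-1] + 0.5

    # Gaussian kernel density estimate evaluated at the bin centers.
    z = (centers[:, None] - radii[None, :]) / bandwidth
    density = np.exp(-0.5 * z ** 2).sum(axis=1)
    density /= len(radii) * bandwidth * np.sqrt(2.0 * np.pi)

    k = int(np.argmax(density))                 # maximum of the density curve
    a, b = max(0, k - window), min(len(hist), k + window + 1)
    j = a + int(np.argmax(hist[a:b]))           # histogram peak near that maximum
    return {
        "radius": float(centers[j]),            # radius at the histogram peak
        "p": int(hist[j]),                      # peak value of the histogram
        "m": float(hist.mean()),                # mean bin count (assumed meaning of m)
    }


# Hypothetical contour-point radii: a dense cluster near 80 px plus scattered outliers.
samples = np.concatenate([
    np.full(30, 80.2),
    np.linspace(78.0, 82.0, 20),
    np.linspace(40.0, 120.0, 15),
])
stats = density_peak_stats(samples)
print(stats)
```

A peak value p that is large relative to the mean m indicates that the contour points agree on one radius; how that ratio maps to a weight is defined by Equation (6).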

3.4. Calculating the Yarn Roll’s Diameter and Length in Real World

3.5. Kalman Filter

4. Experiments

5. Discussion

- (1) The method is based on a monocular camera, so the measurement error of the yarn roll’s length is large, about 3 cm. Because this enterprise uses only a few types of yarn roll, this error does not affect the application for now. If more types of yarn roll with different diameters and lengths are used, a stereo camera should be considered; its accuracy is higher, but so is its price;

- (2) The detection algorithm should be optimized, especially to improve the detection accuracy when the yarn roll’s margin is small. The existing algorithm still has some defects;

- (3) The resolution of the camera can be increased. A higher resolution improves detection accuracy, but the detection speed decreases correspondingly.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fu, G.; He, Z.; Liu, F. Exploring the development of intelligence and wisdom in textile and garment industry. Light Text. Ind. Technol. 2020, 49, 81–82.

- Wang, J. The Foundation of the Intellectualization of the Textile Accessories and Parts Including On-line Detection of Textile Production Process, Quality Data Mining and Process Parameters Optimization. J. Text. Accessories. 2018. Available online: http://en.cnki.com.cn/Article_en/CJFDTotal-FZQC201805001.htm (accessed on 2 January 2023).

- Pierleoni, P.; Belli, A.; Palma, L.; Palmucci, M.; Sabbatini, L. A Machine Vision System for Manual Assembly Line Monitoring. In Proceedings of the 2020 International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 17–19 June 2020; IEEE: Piscataway, NJ, USA.

- Imae, M.; Iwade, T.; Shintani, Y. Method for monitoring yarn tension in yarn manufacturing process. U.S. Patent 6,014,104, 11 January 2000.

- Catarino, A.; Rocha, A.M.; Monteiro, J. Monitoring knitting process through yarn input tension: New developments. In Proceedings of the IECON 02 2002 28th Annual Conference of the IEEE Industrial Electronics Society, Seville, Spain, 5–8 November 2002; IEEE: Piscataway, NJ, USA, 2002.

- Miao, Y.; Meng, X.; Xia, G.; Wang, Q.; Zhang, H. Research and development of non-contact yarn tension monitoring system. Wool Text. J. 2020, 48, 76–81.

- Chen, Z.; Shi, Y.P.; Ji, S.-P. Improved image threshold segmentation algorithm based on OTSU method. Laser Infrared 2012, 5, 584–588.

- Yang, Y.; Ma, X.; He, Z.; Gao, M. A robust detection method of yarn residue for automatic bobbin management system. In Proceedings of the 2019 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Hong Kong, China, 8–12 July 2019; IEEE: Piscataway, NJ, USA, 2019.

- Hwa, S.; Bade, A.; Hijazi, M. Enhanced Canny edge detection for COVID-19 and pneumonia X-Ray images. IOP Conf. Ser. Mater. Sci. Eng. 2020, 979, 012016.

- Zheng, Z.; Zha, B.; Yuan, H.; Xuchen, Y.; Gao, Y.; Zhang, H. Adaptive Edge Detection Algorithm Based on Improved Grey Prediction Model. IEEE Access 2020, 8, 102165–102176.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic Convolution: Attention over Convolution Kernels. arXiv 2020, arXiv:1912.03458.

- Wu, L.; Shang, Q.; Sun, Y.; Bai, X. A self-adaptive correction method for perspective distortions of image. Front. Comput. Sci. China 2019, 13, 588–598.

- Shi, T.; Kong, J.-Y.; Wang, X.-D.; Liu, Z.; Guo, Z. Improved Sobel algorithm for defect detection of rail surfaces with enhanced efficiency and accuracy. J. Cent. South Univ. 2016, 23, 2867–2875.

- Abolghasemi, V.; Ahmadyfard, A. An edge-based color-aided method for license plate detection. Image Vis. Comput. 2009, 27, 1134–1142.

- Phan, R.; Androutsos, D. Content-based retrieval of logo and trademarks in unconstrained color image databases using Color Edge Gradient Co-occurrence Histograms. Comput. Vis. Image Underst. 2010, 114, 66–84.

- Chan, T.; Zhang, J.; Pu, J.; Huang, H. Neighbor Embedding Based Super-Resolution Algorithm through Edge Detection and Feature Selection. Pattern Recognit. Lett. 2019, 5, 494–502.

- Papari, G.; Petkov, N. Edge and line oriented contour detection: State of the art. Image Vis. Comput. 2011, 29, 79–103.

- Du, C.J.; Sun, D.W. Estimating the surface area and volume of ellipsoidal ham using computer vision. J. Food Eng. 2006, 73, 260–268.

- Jing, H.; Li, D.; Duan, Q.; Han, Y.; Chen, G.; Si, X. Fish species classification by color, texture and multi-class support vector machine using computer vision. Comput. Electron. Agric. 2012, 88, 133–140.

- Molinier, T.; Fofi, D.; Bingham, P.R.; Rossi, L.; Akhloufi, M.; Tison, Y.; Pieri, A. Estimation of fire volume by stereovision. Proc. SPIE Int. Soc. Opt. Eng. 2011, 7877, 78770B.

- Da Silva Vale, R.T.; Ueda, E.K.; Takimoto, R.Y.; de Castro Martins, T. Fish Volume Monitoring Using Stereo Vision for Fish Farms. IFAC-PapersOnLine 2020, 53, 15824–15828.

- Sheng, J.; Zhao, H.; Bu, P. Four-directions Global Matching with Cost Volume Update for Stereovision. Appl. Opt. 2021, 60, 5471–5479.

- Liu, Z. Construction and verification of color fundus image retinal vessels segmentation algorithm under BP neural network. J. Supercomput. 2021, 77, 7171–7183.

- Spiesman, B.J.; Gratton, C.; Hatfield, R.G.; Hsu, W.H.; Jepsen, S.; McCornack, B.; Patel, K.; Wang, G. Assessing the potential for deep learning and computer vision to identify bumble bee species from images. Sci. Rep. 2021, 11, 7580.

- He, Z.; Zeng, Y.; Shao, H.; Hu, H.; Xu, X. Novel motor fault detection scheme based on one-class tensor hyperdisk. Knowledge-Based Syst. 2023, 262, 110259.

- Yan, S.; Shao, H.; Xiao, Y.; Liu, B.; Wan, J. Hybrid robust convolutional autoencoder for unsupervised anomaly detection of machine tools under noises. Robot. Comput. Integr. Manuf. 2023, 79, 102441.

- Yuan, J.; Liu, L.; Yang, Z.; Zhang, Y. Tool wear condition monitoring by combining variational mode decomposition and ensemble learning. Sensors 2020, 20, 6113.

- Duan, J.; Hu, C.; Zhan, X.; Zhou, H.; Liao, G.; Shi, T. MS-SSPCANet: A powerful deep learning framework for tool wear prediction. Rob. Comput. Integr. Manuf. 2022, 78, 102391.

- Tang, S.; Zhai, S.; Tong, G.; Zhong, P.; Shi, J.; Shi, F. Improved Canny operator with morphological fusion for edge detection. Comput. Eng. Des. 2023, 44, 224–231.

- Chen, D.; Cheng, J.-J.; He, H.-Y.; Ma, C.; Yao, L.; Jin, C.-B.; Cao, Y.-S.; Li, J.; Ji, P. Computed tomography reconstruction based on canny edge detection algorithm for acute expansion of epidural hematoma. J. Radiat. Res. Appl. Sci. 2022, 15, 279–284.

- Tian, J.; Zhou, H.-J.; Bao, H.; Chen, J.; Huang, X.-D.; Li, J.-C.; Yang, L.; Li, Y.; Miao, X.-S. Memristive Fast-Canny Operation for Edge Detection. IEEE Trans. Electron Devices 2022, 69, 6043–6048.

- Chen, H.F.; Zhuang, J.L.; Zhu, B.; Li, P.H.; Yang, P.; Liu, B.Y.; Fan, X. Image edge fusion method based on improved Canny operator. J. Xinxiang Coll. 2022, 39, 23–27.

- Yang, S.; Zeng, S.; Liu, Z.; Tang, H.; Fang, Y. Nickel slice edge extraction algorithm based on Canny and bilinear interpolation. J. Fujian Eng. Coll. 2022, 20, 567–572.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. Proc. IEEE 2016, 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Elgammal, A.; Duraiswami, R.; Harwood, D.; Davis, L.S. Background and foreground modeling using nonparametric kernel density estimation for visual surveillance. Proc. IEEE 2002, 90, 1151–1163.

- Liu, C.; Shui, P.; Wei, G.; Li, S. Modified unscented Kalman filter using modified filter gain and variance scale factor for highly maneuvering target tracking. Syst. Eng. Electron. 2014, 25, 380–385.

- Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A Tutorial on Particle Filters for Online Nonlinear/Non-Gaussian Bayesian Tracking. IEEE Trans. Signal Process. IEEE Signal Process. Soc. 2002, 50, 174–188.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Shi, Z.; Shi, W.; Wang, H. The Detection of Yarn Roll’s Margin in Complex Background. Sensors 2023, 23, 1993. https://doi.org/10.3390/s23041993
