A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework

Abstract

:1. Introduction

- For reward function shaping, a potential-based reward shaping is introduced. Two variables, angle and distance, are used as sources of potential energy. The robot is encouraged to move towards the target by continuously adjusting the angle and reducing the distance to the target.

- Navigation tasks usually have only one goal and belong to a sparse reward environment. In this environment, the one-step method makes the agent learn slowly. Replacing the n-step method with a one-step method to obtain more information about the environment is a solution. Meanwhile, the effect of reward value and target value normalization on the stability of the algorithm is also explored.

- To address the drawbacks of exploration in traditional reinforcement learning, an -greedy strategy based on reward value control probability variation is proposed. It can make the agent use exploration rationally in the environment and learn more effectively.

2. Related Work

2.1. Traditional Algorithms

2.2. Reinforcement Learning Algorithms

3. Materials and Methods

3.1. Background

3.2. Problem Definition

3.3. The n-Step Dueling Double DQN with Reward-Based -Greedy (RND3QN)

3.3.1. The n-Step TD

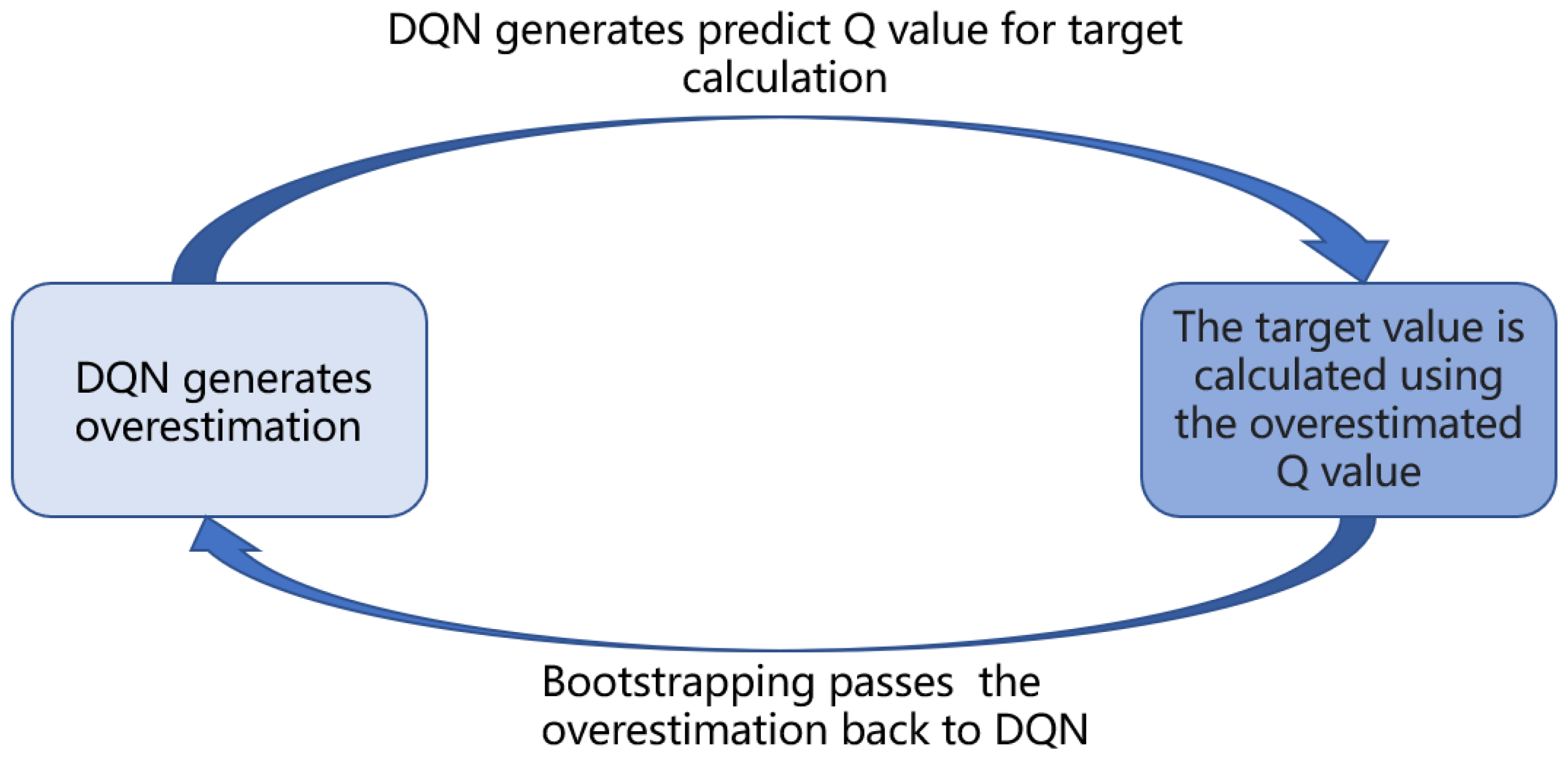

3.3.2. Mitigating Overestimation Using Double DQN

3.3.3. Introduction of Dueling Network to Optimize the Network

3.3.4. Reward-Based -Greedy

| Algorithm 1 reward-based -greedy |

| Input: |

| Initialize total training episodes , total steps , -greedy initial probability , min |

| Initialize reward threshold and reward increment |

| Output: selected actions |

| for e = 1 to E do |

| if epsilon > and then |

| end if |

| random selection of actions with the probability of |

| select the optimal action with a probability of |

| end for |

| Algorithm 2 ND3QN |

| Input:

Initialize a dueling network and a target dueling network Initialize replay memory , batch size , discount factor , learning rate , total training episodes , the max steps of each episode Initialize n-step n, -greedy initial probability |

| Output: Q value for each action |

| for i = 1 to B do |

| Taking a random subscript finish in the interval (n, R.size) |

| begin = finish − n |

| Randomly take state and action in the interval (begin, finish) |

| for t = 1 to n do |

| end for |

| end for |

| for e = 1 to episodes do |

| for t = 1 to S do |

| action a is chosen according to a reward-based -greedy policy |

| get the next state, reward, and done from the environment |

| store transition in replay buffer |

| if then |

| randomly sample n pieces of transitions from the replay memory; |

| obtain the optimal action |

| Calculate the loss with network parameters |

| update target network every C step |

| end if |

| end for |

| end for |

3.3.5. Design of the Reward Function

4. Results

4.1. Experiment Settings

4.2. Simulation Experiments

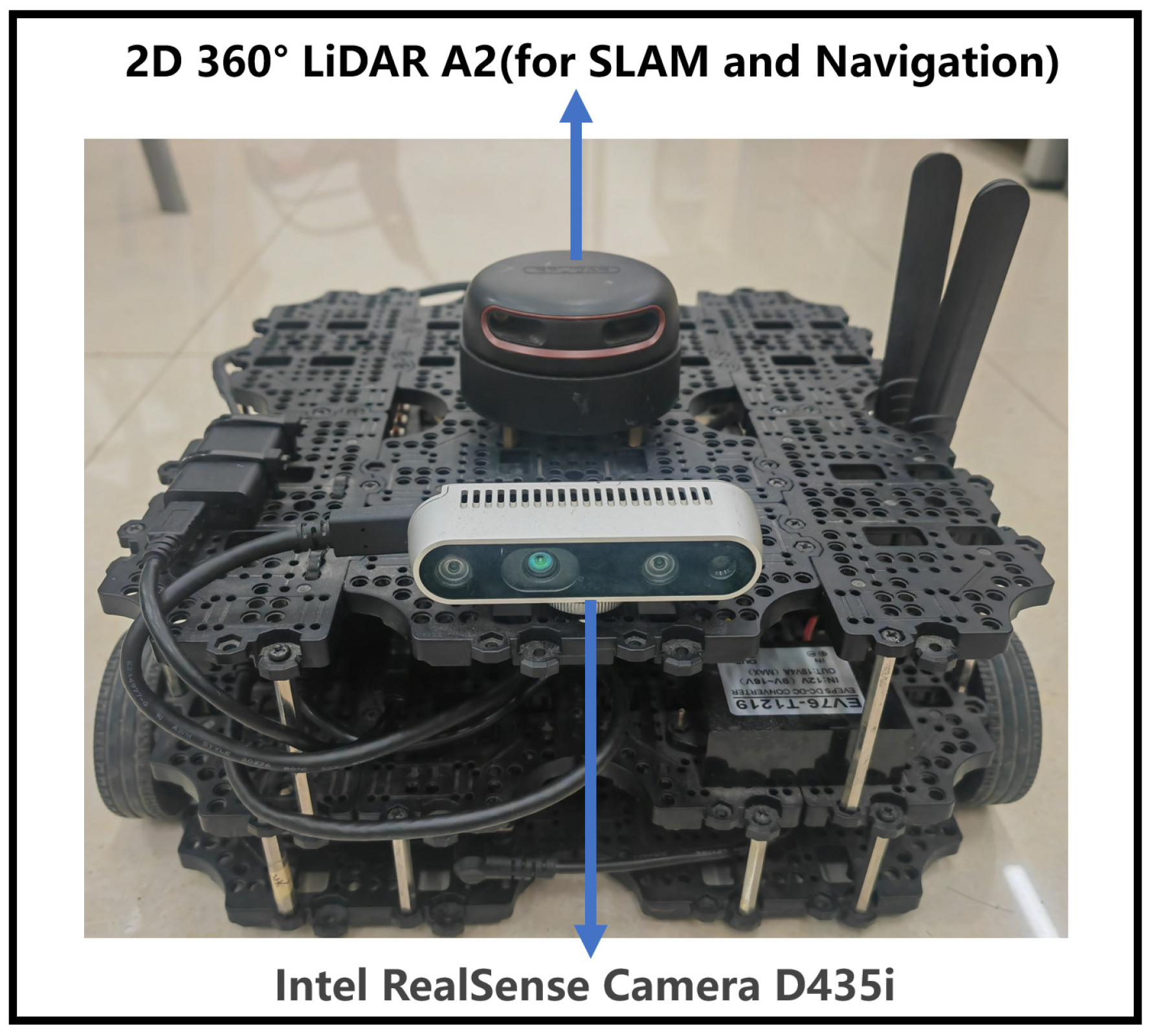

4.3. Real-World Experiments

5. Discussion

6. Conclusions

- Agents need more internal drivers to explore in sparsely rewarded environments. One possible solution is to introduce curiosity as an internal driver. This would increase the agent’s ability to learn, allowing for more exploratory strategic improvements.

- We will explore path planning and obstacle avoidance solutions for robots in continuous action space.

- A better strategy will be designed to optimize the robot’s path trajectory.

- For the sample efficiency aspect, the uncertainty weights of each action will be considered. More rational and efficient sampling methods to reduce memory overhead and improve sampling efficiency will be adopted.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sinisa, M. Evaluation of SLAM Methods and Adaptive Monte Carlo Localization. Doctoral Dissertation, Vienna University of Technology, Vienna, Austria, 2022. [Google Scholar] [CrossRef]

- Gurel, C.S. Real-Time 2D and 3D Slam Using RTAB-MAP, GMAPPING, and CARTOGRAPHER PACKAGES; University of Maryland: College Park, MD, USA, 2018. [Google Scholar] [CrossRef]

- Shi, H.; Shi, L.; Xu, M.; Hwang, K.S. End-to-end navigation strategy with deep reinforcement learning for mobile robots. IEEE Trans. Ind. Inform. 2019, 16, 2393–2402. [Google Scholar] [CrossRef]

- Leiva, F.; Ruiz-del Solar, J. Robust RL-based map-less local planning: Using 2D point clouds as observations. IEEE Robot. Autom. Lett. 2020, 5, 5787–5794. [Google Scholar] [CrossRef]

- Hao, B.; Du, H.; Zhao, J.; Zhang, J.; Wang, Q. A Path-Planning Approach Based on Potential and Dynamic Q-Learning for Mobile Robots in Unknown Environment. Comput. Intell. Neurosci. 2022, 2022, 1–12. [Google Scholar] [CrossRef]

- Park, J.; Lee, J.Y.; Yoo, D.; Kweon, I.S. Distort-and-recover: Color enhancement using deep reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5928–5936. [Google Scholar] [CrossRef]

- Zhao, X.; Xia, L.; Zhang, L.; Ding, Z.; Yin, D.; Tang, J. Deep reinforcement learning for page-wise recommendations. In Proceedings of the 12th ACM Conference on Recommender Systems, Vancouver, BC, Canada, 2–7 October 2018; pp. 95–103. [Google Scholar] [CrossRef]

- Yang, Y.; Li, J.; Peng, L. Multi-robot path planning based on a deep reinforcement learning DQN algorithm. CAAI Trans. Intell. Technol. 2020, 5, 177–183. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef]

- Hasselt, H. Double Q-learning. In Proceedings of the Advances in Neural Information Processing Systems 23: 24th Annual Conference on Neural Information Processing Systems 2010, Vancouver, BC, Canada, 6–9 December 2010. [Google Scholar]

- Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1995–2003. [Google Scholar] [CrossRef]

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar] [CrossRef]

- Dijkstra, E. A Note on Two Problems in Connexion with Graphs. Numer. Math. 1959, 1, 269–271. [Google Scholar] [CrossRef]

- Hart, P.E.; Nilsson, N.J.; Raphael, B. A formal basis for the heuristic determination of minimum cost paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107. [Google Scholar] [CrossRef]

- Stentz, A. Optimal and efficient path planning for partially known environments. In Intelligent Unmanned Ground Vehicles; Springer: Berlin/Heidelberg, Germany, 1997; pp. 203–220. [Google Scholar] [CrossRef]

- Koenig, S.; Likhachev, M.; Furcy, D. Lifelong planning A*. Artif. Intell. 2004, 155, 93–146. [Google Scholar] [CrossRef]

- Koenig, S.; Likhachev, M. D* lite. Aaai/iaai 2002, 15, 476–483. [Google Scholar]

- LaValle, S.M. Rapidly-Exploring Random Trees: A New Tool for Path Planning; Iowa State University: Ames, IA, USA, 1998; pp. 98–111. [Google Scholar]

- Karaman, S.; Walter, M.R.; Perez, A.; Frazzoli, E.; Teller, S. Anytime motion planning using the RRT. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1478–1483. [Google Scholar] [CrossRef]

- Gammell, J.D.; Srinivasa, S.S.; Barfoot, T.D. Informed RRT*: Optimal sampling-based path planning focused via direct sampling of an admissible ellipsoidal heuristic. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 2997–3004. [Google Scholar] [CrossRef]

- Puterman, M.L. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley & Son: Hoboken, NJ, USA, 2014; ISBN 978-1-118-62587-3. [Google Scholar]

- Watkins, C.J.C.H. Learning from Delayed Rewards. Ph.D. Thesis, King’s College, Cambridge, UK, 1989. [Google Scholar]

- Jiang, L.; Huang, H.; Ding, Z. Path planning for intelligent robots based on deep Q-learning with experience replay and heuristic knowledge. IEEE/CAA J. Autom. Sin. 2019, 7, 1179–1189. [Google Scholar] [CrossRef]

- Anschel, O.; Baram, N.; Shimkin, N. Averaged-dqn: Variance reduction and stabilization for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 176–185. [Google Scholar] [CrossRef]

- Riviere, B.; Hönig, W.; Yue, Y.; Chung, S.J. Glas: Global-to-local safe autonomy synthesis for multi-robot motion planning with end-to-end learning. IEEE Robot. Autom. Lett. 2020, 5, 4249–4256. [Google Scholar] [CrossRef]

- Burda, Y.; Edwards, H.; Storkey, A.; Klimov, O. Exploration by random network distillation. arXiv 2018, arXiv:1810.12894. [Google Scholar] [CrossRef]

- Hong, W.; Zhu, M.; Liu, M.; Zhang, W.; Zhou, M.; Yu, Y.; Sun, P. Generative adversarial exploration for reinforcement learning. In Proceedings of the First International Conference on Distributed Artificial Intelligence, Beijing, China, 13–15 October 2019; pp. 1–10. [Google Scholar] [CrossRef]

- Wang, B.; Liu, Z.; Li, Q.; Prorok, A. Mobile robot path planning in dynamic environments through globally guided reinforcement learning. IEEE Robot. Autom. Lett. 2020, 5, 6932–6939. [Google Scholar] [CrossRef]

- Apuroop, K.G.S.; Le, A.V.; Elara, M.R.; Sheu, B.J. Reinforcement learning-based complete area coverage path planning for a modified hTrihex robot. Sensors 2021, 21, 1067. [Google Scholar] [CrossRef] [PubMed]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Lan, Q.; Pan, Y.; Luo, J.; Mahmood, A.R. Memory-efficient Reinforcement Learning with Knowledge Consolidation. arXiv 2022, arXiv:2205.10868. [Google Scholar] [CrossRef]

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2015, arXiv:1511.05952. [Google Scholar]

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Pieter Abbeel, O.; Zaremba, W. Hindsight experience replay. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Zhao, R.; Tresp, V. Energy-based hindsight experience prioritization. In Proceedings of the Conference on Robot Learning, Zurich, Switzerland, 29–31 October 2018; pp. 113–122. [Google Scholar] [CrossRef]

- Doukhi, O.; Lee, D.J. Deep reinforcement learning for end-to-end local motion planning of autonomous aerial robots in unknown outdoor environments: Real-time flight experiments. Sensors 2021, 21, 2534. [Google Scholar] [CrossRef]

- Zeng, J.; Ju, R.; Qin, L.; Hu, Y.; Yin, Q.; Hu, C. Navigation in unknown dynamic environments based on deep reinforcement learning. Sensors 2019, 19, 3837. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kaelbling, L.P.; Littman, M.L.; Cassandra, A.R. Planning and acting in partially observable stochastic domains. Artif. Intell. 1998, 101, 99–134. [Google Scholar] [CrossRef]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971. [Google Scholar] [CrossRef]

- Badia, A.P.; Sprechmann, P.; Vitvitskyi, A.; Guo, D.; Piot, B.; Kapturowski, S.; Tieleman, O.; Arjovsky, M.; Pritzel, A.; Bolt, A.; et al. Never give up: Learning directed exploration strategies. arXiv 2020, arXiv:2002.06038. [Google Scholar] [CrossRef]

- Harutyunyan, A.; Dabney, W.; Mesnard, T.; Gheshlaghi Azar, M.; Piot, B.; Heess, N.; van Hasselt, H.P.; Wayne, G.; Singh, S.; Precup, D.; et al. Hindsight credit assignment. Adv. Neural Inf. Process. Syst. 2019, 32. [Google Scholar] [CrossRef]

| Action Space | State Space |

|---|---|

| 5 | 28 |

| Action | Angular Velocity (rad/s) | Direction |

|---|---|---|

| 0 | −1.5 | left |

| 1 | −0.75 | left front |

| 2 | 0 | front |

| 3 | 0.75 | right front |

| 4 | 1.5 | right |

| Parameters | Value | Description |

|---|---|---|

| 1.0 | The probability of randomly selecting an action in the -greedy strategy. | |

| 0.99 | Initial (not change). | |

| 0.1 | The minimum of . | |

| learning_rate | 0.00025 | Learning rate. |

| episode_step | 6000 | The time step of one episode. |

| discount_factor | 0.99 | Discount factor, indicating the extent to which future events lose their value compared to the moment. |

| batch_size | 64 | Size of a group of training samples. |

| memory_size | 1,000,000 | The size of replay memory. |

| train_start | 64 | Start training if the replay memory size is greater than 64. |

| n_multi_step | 3 | Number of n-step steps. |

| Models | DQN’ | DQN | Double DQN | Dueling DQN | D3QN | ND3QN | RND3QN |

|---|---|---|---|---|---|---|---|

| stage 2 | 0 | 1.1 | 2.7 | 3.3 | 3.4 | 7.8 | 9.3 |

| stage 3 | 0 | 0.8 | 0.9 | 1.2 | 2.0 | 2.8 | 3.3 |

| stage 4 | 0 | 0.1 | 0.1 | 3.4 | 3.8 | 5.7 | 6.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yin, Y.; Chen, Z.; Liu, G.; Guo, J. A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework. Sensors 2023, 23, 2036. https://doi.org/10.3390/s23042036

Yin Y, Chen Z, Liu G, Guo J. A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework. Sensors. 2023; 23(4):2036. https://doi.org/10.3390/s23042036

Chicago/Turabian StyleYin, Yan, Zhiyu Chen, Gang Liu, and Jianwei Guo. 2023. "A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework" Sensors 23, no. 4: 2036. https://doi.org/10.3390/s23042036

APA StyleYin, Y., Chen, Z., Liu, G., & Guo, J. (2023). A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework. Sensors, 23(4), 2036. https://doi.org/10.3390/s23042036