AOEHO: A New Hybrid Data Replication Method in Fog Computing for IoT Application

Abstract

1. Introduction

Motivation and Contributions

- Designing a discrete AOEHO strategy for solving the dynamic data replication problem in a fog computing environment.

- Improving a swarm intelligence technique by hybridizing the Aquila optimizer (AO) with elephant herding optimization (EHO) to solve dynamic data replication problems in the fog computing environment.

- Developing a multi-objective optimization based on the proposed AOEHO that decreases bandwidth consumption and enhances load balancing and cloud throughput. It evaluates data replication using seven criteria: data replication access, distance, costs, availability, SBER, popularity, and least-cost paths computed with the Floyd algorithm.

- Showing experimentally that AOEHO outperforms other algorithms in terms of bandwidth, distance, load balancing, data transmission, and least-cost path.

2. Related Work

3. Suggested System and Discussion

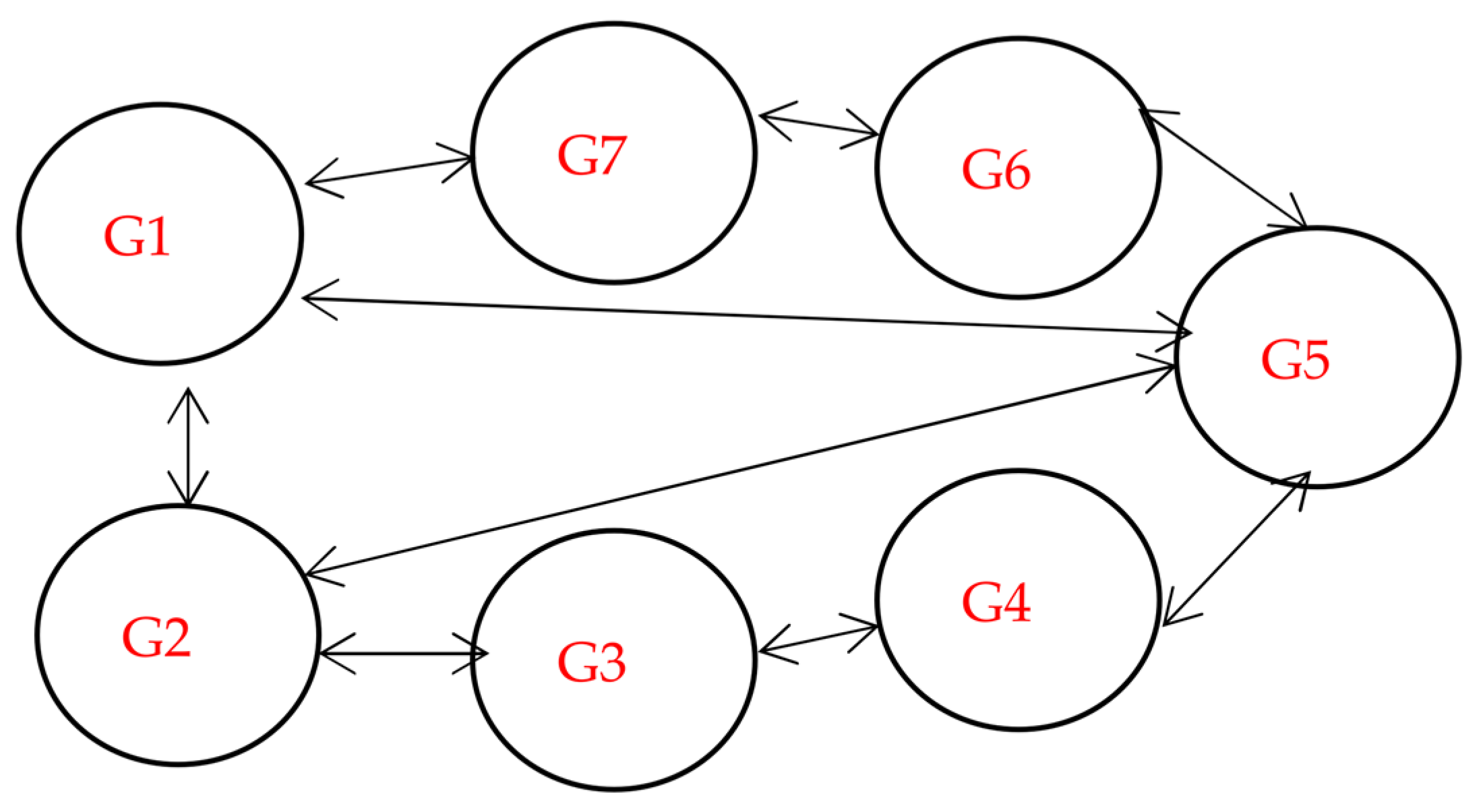

3.1. Proposed System and Structure

3.2. Aquila Optimizer (AO)

- Step 1: Expanded exploration

- Step 2: Narrowed exploration

- Step 3: Expanded exploitation

- Step 4: Narrowed exploitation
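The four steps correspond to the position-update rules of the original Aquila Optimizer. The sketch below shows them for a continuous search space under several assumptions: minimisation, the constant values commonly used with AO (α = δ = 0.1, U = 0.00565, ω = 0.005, r1 = 10, β = 1.5), and hypothetical function names (`levy`, `spiral`, `ao_candidate`). It is not the discrete replica-placement encoding used by AOEHO, and it only produces a candidate position without deciding whether to accept it.

```python
import math
import random

# Constants commonly used with the original Aquila Optimizer (assumed, not from this paper).
ALPHA, DELTA = 0.1, 0.1            # exploitation adjustment parameters
U, OMEGA, R1 = 0.00565, 0.005, 10  # spiral-shape parameters
BETA = 1.5                         # Levy-flight exponent


def levy(dim, rng):
    """Levy-flight step vector generated with Mantegna's algorithm."""
    sigma = (math.gamma(1 + BETA) * math.sin(math.pi * BETA / 2)
             / (math.gamma((1 + BETA) / 2) * BETA * 2 ** ((BETA - 1) / 2))) ** (1 / BETA)
    return [0.01 * rng.gauss(0, 1) * sigma / abs(rng.gauss(0, 1)) ** (1 / BETA)
            for _ in range(dim)]


def spiral(dim):
    """Spiral coordinates (x, y) that shape the Step 2 search around the prey."""
    x, y = [], []
    for d in range(1, dim + 1):
        r = R1 + U * d
        theta = -OMEGA * d + 3 * math.pi / 2
        x.append(r * math.sin(theta))
        y.append(r * math.cos(theta))
    return x, y


def ao_candidate(x, best, mean, pop, t, T, lb, ub, rng):
    """Candidate position for solution x at iteration t (1 <= t <= T, T >= 2).

    best is Xbest(t), mean is XM(t), pop is the current population.
    """
    dim = len(x)
    if t <= (2 / 3) * T:
        if rng.random() <= 0.5:
            # Step 1: expanded exploration (high soar with vertical stoop)
            return [b * (1 - t / T) + (m - b * rng.random()) for b, m in zip(best, mean)]
        # Step 2: narrowed exploration (contour flight with short glide attack)
        xr = rng.choice(pop)
        sx, sy = spiral(dim)
        return [b * lv + r_j + (yj - xj) * rng.random()
                for b, lv, r_j, xj, yj in zip(best, levy(dim, rng), xr, sx, sy)]
    if rng.random() <= 0.5:
        # Step 3: expanded exploitation (low flight with slow descent attack)
        return [(b - m) * ALPHA - rng.random() + ((hi - lo) * rng.random() + lo) * DELTA
                for b, m, lo, hi in zip(best, mean, lb, ub)]
    # Step 4: narrowed exploitation (walk and grab prey)
    qf = t ** ((2 * rng.random() - 1) / (1 - T) ** 2)
    g1 = 2 * rng.random() - 1
    g2 = 2 * (1 - t / T)
    return [qf * b - g1 * xi * rng.random() - g2 * lv + rng.random() * g1
            for b, xi, lv in zip(best, x, levy(dim, rng))]
```

Accepting or rejecting the candidate (the greedy replacement of X(t) and Xbest(t)) is left to the caller, mirroring the IF blocks in Algorithm 1.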

3.3. Elephant Herding Optimization

3.3.1. Clan-Updating Operator

3.3.2. Separating Operator
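The clan-updating and separating operators can be sketched as follows, assuming the formulation of the original EHO (members move toward the clan's best elephant, the best elephant moves to a scaled clan centre, and the worst elephant is re-initialised at random) with its usual defaults α = 0.5 and β = 0.1. The function names are hypothetical, each function handles a single clan, and minimisation is assumed.

```python
import random


def clan_update(clan, fitness, alpha=0.5, beta=0.1, rng=random):
    """Clan-updating operator of EHO for one clan (minimisation).

    Each elephant moves toward the matriarch (best member) by a random
    fraction of alpha; the matriarch itself moves to beta times the clan centre.
    """
    dim = len(clan[0])
    m = min(range(len(clan)), key=lambda i: fitness(clan[i]))  # matriarch index
    centre = [sum(e[j] for e in clan) / len(clan) for j in range(dim)]
    new_clan = []
    for i, e in enumerate(clan):
        if i == m:
            new_clan.append([beta * c for c in centre])
        else:
            new_clan.append([e[j] + alpha * (clan[m][j] - e[j]) * rng.random()
                             for j in range(dim)])
    return new_clan


def separate(clan, fitness, lb, ub, rng=random):
    """Separating operator: the worst elephant (a male leaving the clan)
    is replaced by a uniformly random position inside [lb, ub]."""
    w = max(range(len(clan)), key=lambda i: fitness(clan[i]))
    new_clan = [list(e) for e in clan]
    # Original EHO uses lb + (ub - lb + 1) * rand; the +1 is dropped here to stay in bounds.
    new_clan[w] = [lo + (hi - lo) * rng.random() for lo, hi in zip(lb, ub)]
    return new_clan
```

Applying `clan_update` and then `separate` to every clan gives one EHO generation; how AOEHO partitions the AO population into clans is not specified in this section.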

3.4. Proposed Swarm Intelligence for Data Replication

3.4.1. Cost and Time of Replication

3.4.2. Shortest Paths Problem (SPP) between Nodes Based on the Floyd Algorithm
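A minimal sketch of the Floyd (Floyd–Warshall) all-pairs shortest-path algorithm, assuming the fog topology is given as a hypothetical n × n matrix of non-negative link costs with `math.inf` where no direct link exists. It runs in O(n³) time and keeps a next-hop table so the least-cost route between two nodes can be rebuilt.

```python
import math


def floyd_warshall(cost):
    """All-pairs least-cost paths.

    cost[i][j] is the direct link cost from node i to node j (math.inf if no link).
    Returns (dist, nxt): dist[i][j] is the least path cost, nxt[i][j] the next hop.
    """
    n = len(cost)
    dist = [[0 if i == j else cost[i][j] for j in range(n)] for i in range(n)]
    nxt = [[j if dist[i][j] < math.inf else None for j in range(n)] for i in range(n)]
    for k in range(n):              # allow node k as an intermediate hop
        for i in range(n):
            for j in range(n):
                via_k = dist[i][k] + dist[k][j]
                if via_k < dist[i][j]:
                    dist[i][j] = via_k
                    nxt[i][j] = nxt[i][k]
    return dist, nxt


def path(nxt, src, dst):
    """Rebuild the node sequence from src to dst (empty list if unreachable)."""
    if nxt[src][dst] is None:
        return []
    hops = [src]
    while src != dst:
        src = nxt[src][dst]
        hops.append(src)
    return hops


INF = math.inf
links = [
    [0, 4, INF, 10],
    [4, 0, 3, INF],
    [INF, 3, 0, 2],
    [10, INF, 2, 0],
]
dist, nxt = floyd_warshall(links)
print(dist[0][3], path(nxt, 0, 3))  # 9 [0, 1, 2, 3]
```

In this toy topology the direct link 0→3 costs 10, but the three-hop route through nodes 1 and 2 costs 9, so it is chosen.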

3.4.3. Popularity Degree of the Data File

3.4.4. System-Level Availability
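One common way to express system-level availability, assumed here rather than taken from the paper, treats replica hosts as failing independently: a file is available if at least one replica is reachable, so A = 1 − ∏(1 − p_i), where p_i is the availability of the i-th hosting node. The hypothetical helpers below compute this value and the smallest replication factor that reaches a target availability.

```python
import math


def file_availability(node_avail):
    """Probability that at least one replica of a file is reachable,
    assuming each hosting node is up independently with probability p."""
    return 1 - math.prod(1 - p for p in node_avail)


def min_replicas(p, target):
    """Smallest number of replicas on nodes of availability p that meets target."""
    if not (0 < p <= 1 and 0 < target < 1):
        raise ValueError("need 0 < p <= 1 and 0 < target < 1")
    r = 1
    while file_availability([p] * r) < target:
        r += 1
    return r


print(round(file_availability([0.9, 0.8, 0.7]), 3))  # 0.994
print(min_replicas(0.8, 0.99))                       # 3
```

With nodes of availability 0.8, two replicas give 0.96 and three give 0.992, so three replicas are the minimum for a 0.99 target.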

3.4.5. Placement of New Replicas

3.5. Computational Complexity

Algorithm 1: The Proposed Algorithm AOEHO

Input: Regions, datacenters, data availability, minimum distance between regions, cost, time, SBER, fog nodes, data file popularity, max_iter, population size, and number of IoT tasks.
Output: Optimal selection and placement of data file replicas.

Begin
  Initialize the number of IoT tasks
  Initialize the population
  Initialize the population using the fitness function
  Initialize availability and unavailability probabilities
  Initialize replicas according to costs and time
  Initialize the distance between regions
  Initialize data file popularity
  Initialize data replication costs and time
  Initialize the best data replica placement solution in the DC
  Initialize the least-cost path
  Initialize SBER
  Initialize RF
  Initialize budget
  REPEAT
    Initialization phase:
      Initialize the population X of the AO
      Initialize the parameters of the AO
    WHILE (t < T)
      Calculate the fitness function values
      Determine the best-obtained solution Xbest(t) according to the fitness values
      FOR (i = 1, 2, ..., N)
        Update the mean value of the current solution XM(t)
        Update x, y, G1, G2, Levy(D), etc.
        IF (t ≤ (2/3)·T)
          IF (rand ≤ 0.5)
            Step 1: Expanded exploration: update the current solution using Equation (1) to obtain X1(t + 1)
            IF (Fitness(X1(t + 1)) < Fitness(X(t)))
              X(t) = X1(t + 1)
              IF (Fitness(X1(t + 1)) < Fitness(Xbest(t)))
                Xbest(t) = X1(t + 1)
              ENDIF
            ENDIF
          ELSE
            Step 2: Narrowed exploration: update the current solution using Equation (3) to obtain X2(t + 1)
            IF (Fitness(X2(t + 1)) < Fitness(X(t)))
              X(t) = X2(t + 1)
              IF (Fitness(X2(t + 1)) < Fitness(Xbest(t)))
                Xbest(t) = X2(t + 1)
              ENDIF
            ENDIF
          ENDIF
        ELSE
          IF (rand ≤ 0.5)
            Step 3: Expanded exploitation: update the current solution using Equation (11) to obtain X3(t + 1)
            IF (Fitness(X3(t + 1)) < Fitness(X(t)))
              X(t) = X3(t + 1)
              IF (Fitness(X3(t + 1)) < Fitness(Xbest(t)))
                Xbest(t) = X3(t + 1)
              ENDIF
            ENDIF
          ELSE
            Step 4: Narrowed exploitation: update the current solution using Equation (12) to obtain X4(t + 1)
            IF (Fitness(X4(t + 1)) < Fitness(X(t)))
              X(t) = X4(t + 1)
              IF (Fitness(X4(t + 1)) < Fitness(Xbest(t)))
                Xbest(t) = X4(t + 1)
              ENDIF
            ENDIF
          ENDIF
        ENDIF
      ENDFOR
    ENDWHILE
    Return the best solution Xbest
    Apply Elephant Herding Optimization (EHO) to the AO
    t++
  UNTIL (max_iter is reached)
  Calculate the RF
  Calculate the distance between regions
  Calculate SBER
  Calculate the cost and time
  Return the optimal minimum data replica placement in the region
End
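The control flow of Algorithm 1 can be summarised as a generic loop. This is a sketch with a hypothetical interface: the four step rules and the EHO stage are passed in as callables, a continuous encoding is used instead of the paper's discrete one, and EHO is assumed to run once per outer iteration. The same greedy acceptance rule is applied to all four steps.

```python
import random
from typing import Callable, List

Vector = List[float]
Step = Callable[[Vector, Vector, int, int], Vector]  # (x, best, t, T) -> candidate


def aoeho_loop(pop: List[Vector], fitness: Callable[[Vector], float],
               steps: List[Step], eho: Callable[[List[Vector]], List[Vector]],
               T: int, rng=random) -> Vector:
    """Control flow of Algorithm 1 (minimisation) with hypothetical plug-in steps.

    steps[0..3] stand for Steps 1-4 (expanded/narrowed exploration,
    expanded/narrowed exploitation); eho is applied once per iteration.
    """
    pop = [list(x) for x in pop]
    best = min(pop, key=fitness)
    for t in range(1, T + 1):
        for i, x in enumerate(pop):
            explore = t <= (2 / 3) * T          # first two thirds: exploration
            if rng.random() <= 0.5:
                step = steps[0] if explore else steps[2]
            else:
                step = steps[1] if explore else steps[3]
            cand = step(x, best, t, T)
            # Greedy acceptance: X(t) is replaced only by an improving candidate,
            # and Xbest(t) only if that candidate also beats the current best.
            if fitness(cand) < fitness(x):
                pop[i] = cand
                if fitness(cand) < fitness(best):
                    best = cand
        pop = eho(pop)                          # EHO stage (assumed: once per iteration)
        best = min(pop + [best], key=fitness)
    return best


sphere = lambda p: sum(v * v for v in p)
halve = lambda x, best, t, T: [0.5 * v for v in x]   # toy step rule
print(aoeho_loop([[4.0], [2.0], [-3.0]], sphere, [halve] * 4,
                 lambda p: p, T=3, rng=random.Random(0)))  # [0.25]
```

With a toy step rule that halves every coordinate and an identity EHO stage, the best member [2.0] is halved three times, giving [0.25]. Real step rules would be the AO updates and the EHO stage would apply the clan-updating and separating operators.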

4. Experimental Evaluation

4.1. Configuration Details

4.2. Results and Discussion

4.2.1. Different Scenarios of Data Replica Size

First Scenario of Tasks

Second Scenario of Response Time for Tasks

Third Scenario of Response Time for File

Fourth Scenario of Execution Time

Fifth Scenario of Data Transmission in Nodes

Sixth Scenario of Data Transmission in Tasks

4.3. Performance Evaluation

4.3.1. Degree of Balancing

4.3.2. Data Loss Rate

4.3.3. Load Balancing

4.3.4. Throughput Time

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Calheiros, R.N.; Ranjan, R.; Beloglazov, A.; De Rose, C.A.F.; Buyya, R. CloudSim: A toolkit for modeling and simulation of cloud computing environments and evaluation of resource provisioning algorithms. Softw. Pract. Exp. 2011, 41, 23–50.

2. Long, S.; Zhao, Y. A toolkit for modeling and simulating cloud data storage: An extension to CloudSim. In Proceedings of the 2012 International Conference on Control Engineering and Communication Technology, Washington, DC, USA, 7–9 December 2012.

3. Mansouri, Y.; Buyya, R. To move or not to move: Cost optimization in a dual cloud-based storage architecture. J. Netw. Comput. Appl. 2016, 75, 223–235.

4. Rajeshirke, N.; Sawant, R.; Sawant, S.; Shaikh, H. Load balancing in cloud computing. Int. J. Recent Trends Eng. Res. 2017, 3.

5. Milani, A.S.; Navimipour, N.J. Load balancing mechanisms and techniques in the cloud environments: Systematic literature review and future trends. J. Netw. Comput. Appl. 2016, 71, 86–98.

6. Ghomi, E.J.; Rahmani, A.M.; Qader, N.N. Load-balancing algorithms in cloud computing: A survey. J. Netw. Comput. Appl. 2017, 88, 50–71.

7. Naha, R.K.; Othman, M. Cost-aware service brokering and performance sentient load balancing algorithms in the cloud. J. Netw. Comput. Appl. 2016, 75, 47–57.

8. Ahn, H.-Y.; Lee, K.-H.; Lee, Y.-J. Dynamic erasure coding decision for modern block-oriented distributed storage systems. J. Supercomput. 2016, 72, 1312–1341.

9. Zhu, T.; Feng, D.; Wang, F.; Hua, Y.; Shi, Q.; Xie, Y.; Wan, Y. A congestion-aware and robust multicast protocol in SDN-based datacenter networks. J. Netw. Comput. Appl. 2017, 95, 105–117.

10. Nguyen, B.; Binh, H.; Son, B. Evolutionary algorithms to optimize task scheduling problem for the IoT based bag-of-tasks application in cloud-fog computing environment. Appl. Sci. 2019, 9, 1730.

11. Ghasempour, A. Internet of things in smart grid: Architecture, applications, services, key technologies, and challenges. Inventions 2019, 4, 22.

12. Ranjana, T.R.; Jayalakshmi, D.; Srinivasan, R. On replication strategies for data intensive cloud applications. Int. J. Comput. Sci. Inf. Technol. 2017, 6, 2479–2484.

13. Fu, J.; Liu, Y.; Chao, H.; Bhargava, B.; Zhang, Z. Secure data storage and searching for industrial IoT by integrating fog computing and cloud computing. IEEE Trans. Ind. Inf. 2018, 14, 4519–4528.

14. Lin, B.; Guo, W.; Xiong, N.; Chen, G.; Vasilakos, A.; Zhang, H. A pretreatment workflow scheduling approach for big data applications in multicloud environments. IEEE Trans. Netw. Serv. Manag. 2016, 13, 581–594.

15. Liu, X.-F.; Zhan, Z.-H.; Deng, J.; Li, Y.; Gu, T.; Zhang, J. An energy efficient ant colony system for virtual machine placement in cloud computing. IEEE Trans. Evol. Comput. 2016, 22, 113–128.

16. Kumar, P.J.; Ilango, P. Data replication in current generation computing environment. Int. J. Eng. Trends Technol. 2017, 45, 488–492.

17. Abualigah, L.; Diabat, A. A novel hybrid antlion optimization algorithm for multi-objective task scheduling problems in cloud computing environments. Clust. Comput. 2020, 24, 205–223.

18. Wang, Y.; Guo, C.; Yu, J. Immune scheduling network based method for task scheduling in decentralized fog computing. Wirel. Commun. Mob. Comput. 2018, 2018, 2734219.

19. Yang, M.; Ma, H.; Wei, S.; Zeng, Y.; Chen, Y.; Hu, Y. A multi-objective task scheduling method for fog computing in cyber-physical-social services. IEEE Access 2020, 8, 65085–65095.

20. John, S.; Mirnalinee, T. A novel dynamic data replication strategy to improve access efficiency of cloud storage. Inf. Syst. e-Bus. Manag. 2020, 18, 405–426.

21. Pallewatta, S.; Kostakos, V.; Buyya, R. QoS-aware placement of microservices-based IoT applications in Fog computing environments. Future Gener. Comput. Syst. 2022, 131, 121–136.

22. Milani, B.A.; Navimipour, N.J. A comprehensive review of the data replication techniques in the cloud environments: Major trends and future directions. J. Netw. Comput. Appl. 2016, 64, 229–238.

23. Sahoo, J.; Salahuddin, M.A.; Glitho, R.; Elbiaze, H.; Ajib, W. A survey on replica server placement algorithms for content delivery networks. IEEE Commun. Surv. Tutor. 2017, 19, 1002–1026.

24. Wang, M.; Zhang, Q. Optimized data storage algorithm of IoT based on cloud computing in distributed system. Comput. Commun. 2020, 157, 124–131.

25. Taghizadeh, J.; Arani, M.; Shahidinejad, A. A metaheuristic-based data replica placement approach for data-intensive IoT applications in the fog computing environment. Softw. Pract. Exp. 2021, 52, 482–505.

26. Torabi, E.; Arani, M.; Shahidinejad, A. Data replica placement approaches in fog computing: A review. Clust. Comput. 2022, 25, 3561–3589.

27. Jin, W.; Lim, S.; Woo, S.; Park, C.; Kim, D. Decision-making of IoT device operation based on intelligent-task offloading for improving environmental optimization. Complex Intell. Syst. 2022, 8, 3847–3866.

28. Salem, R.; Abdsalam, M.; Abdelkader, H.; Awad, A. An Artificial Bee Colony Algorithm for Data Replication Optimization in Cloud Environments. IEEE Access 2020, 8, 51841–51852.

29. Awad, A.; Salem, R.; Abdelkader, H.; Abdsalam, M. A Novel Intelligent Approach for Dynamic Data Replication in Cloud Environment. IEEE Access 2021, 9, 40240–40254.

30. Awad, A.; Salem, R.; Abdelkader, H.; Abdsalam, M. A Swarm Intelligence-based Approach for Dynamic Data Replication in a Cloud Environment. Int. J. Intell. Eng. Syst. 2021, 14, 271–284.

31. Sarwar, K.; Yong, S.; Yu, J.; Rehman, S. Efficient privacy-preserving data replication in fog-enabled IoT. Future Gener. Comput. Syst. 2022, 128, 538–551.

32. Chen, D.; Yuan, H.; Hu, S.; Wang, Q.; Wang, C. BOSSA: A Decentralized System for Proofs of Data Retrievability and Replication. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 786–798.

33. Li, C.; Liu, J.; Wang, M.; Luo, Y. Fault-tolerant scheduling and data placement for scientific workflow processing in geo-distributed clouds. J. Syst. Softw. 2022, 187, 111227.

34. Shi, T.; Ma, H.; Chen, G.; Hartmann, S. Cost-Effective Web Application Replication and Deployment in Multi-Cloud Environment. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 1982–1995.

35. Majed, A.; Raji, F.; Miri, A. Replication management in peer-to-peer cloud storage systems. Clust. Comput. 2022, 25, 401–416.

36. Li, C.; Cai, Q.; Youlong, L. Optimal data placement strategy considering capacity limitation and load balancing in geographically distributed cloud. Future Gener. Comput. Syst. 2022, 127, 142–159.

37. Khelifa, A.; Mokadem, R.; Hamrouni, T.; Charrada, F. Data correlation and fuzzy inference system-based data replication in federated cloud systems. Simul. Model. Pract. Theory 2022, 115, 102428.

38. Mohammadi, B.; Navimipour, N. A Fuzzy Logic-Based Method for Replica Placement in the Peer to Peer Cloud Using an Optimization Algorithm. Wirel. Pers. Commun. 2022, 122, 981–1005.

39. Zhao, J.; Gao, Z.; Chen, H. The Simplified Aquila Optimization Algorithm. IEEE Access 2022, 10, 22487–22515.

40. Li, J.; Lei, H.; Alavi, A.H.; Wang, G.G. Elephant Herding Optimization: Variants, Hybrids, and Applications. Mathematics 2020, 8, 1415.

41. Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L.; Alharbi, S.K.; Khalifa, H.A.E.W. Efficient Initialization Methods for Population-Based Metaheuristic Algorithms: A Comparative Study. Arch. Comput. Methods Eng. 2022, 1–61.

42. Forestiero, A.; Pizzuti, C.; Spezzano, G. A single pass algorithm for clustering evolving data streams based on swarm intelligence. Data Min. Knowl. Discov. 2013, 26, 1–26.

43. Gul, F.; Mir, A.; Mir, I.; Mir, S.; Islaam, T.U.; Abualigah, L.; Forestiero, A. A Centralized Strategy for Multi-Agent Exploration. IEEE Access 2022, 10, 126871–126884.

44. Abualigah, L.; Elaziz, M.A.; Khodadadi, N.; Forestiero, A.; Jia, H.; Gandomi, A.H. Aquila Optimizer Based PSO Swarm Intelligence for IoT Task Scheduling Application in Cloud Computing. In Integrating Meta-Heuristics and Machine Learning for Real-World Optimization Problems; Springer: Cham, Switzerland, 2022; pp. 481–497.

| Author | Advantage | Disadvantage |
|---|---|---|
| K. Sarwar et al. [31] | Privacy, secrecy, reliability, authentication | High latency, high bandwidth, load balancing |
| D. Chen et al. [32] | Reliability, secrecy, privacy | High replication cost, high response time |
| C. Li et al. [33] | Load balancing, storage, data transmission time | High response time, high cost |
| T. Shi et al. [34] | High availability, high performance | High cost, high response time |
| A. Majed et al. [35] | Decreased user waiting, load balancing, data transmission time | High cost, high storage cost |
| C. Li et al. [36] | Data transmission time, load balancing, low cost | High response time |
| A. Khelifa et al. [37] | Low response time, data transmission time, load balancing | High cost, high storage cost |
| B. Mohammadi et al. [38] | Low response time, load balancing | High cost, high storage cost |

| Cloud Entity | Ranges |
|---|---|
| Nodes | [1, 120] |
| User | [10, 1000] |
| Regions | [5, 50] |
| Geographical | [10, 64] |
| Bandwidth | [2, 256] Mbps |
| Data sets | [0.1, 64] GB |
| Data file | [10, 1000] |
| Cost of file | [100, 5000] |
| Storage nodes | [8, 512] |
| Transfer rate | [16, 256] MB/s |
| Host | [10, 300] |
| Host processor | [12, 128] |
| Host MIPS | [100, 20,000] |
| Host memory (RAM) | [2, 64] GB |
| Virtual machine | [100, 1000] |
| VM processor | [8, 128] |
| VM MIPS | [200, 20,000] |
| VM memory (RAM) | [2, 64] GB |
| Cloudlet | [1000, 6000] |
| Length of task | [1000, 10,000] MI |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mohamed, A.a.; Abualigah, L.; Alburaikan, A.; Khalifa, H.A.E.-W. AOEHO: A New Hybrid Data Replication Method in Fog Computing for IoT Application. Sensors 2023, 23, 2189. https://doi.org/10.3390/s23042189

Mohamed Aa, Abualigah L, Alburaikan A, Khalifa HAE-W. AOEHO: A New Hybrid Data Replication Method in Fog Computing for IoT Application. Sensors. 2023; 23(4):2189. https://doi.org/10.3390/s23042189

Chicago/Turabian Style: Mohamed, Ahmed awad, Laith Abualigah, Alhanouf Alburaikan, and Hamiden Abd El-Wahed Khalifa. 2023. "AOEHO: A New Hybrid Data Replication Method in Fog Computing for IoT Application" Sensors 23, no. 4: 2189. https://doi.org/10.3390/s23042189

APA Style: Mohamed, A. a., Abualigah, L., Alburaikan, A., & Khalifa, H. A. E.-W. (2023). AOEHO: A New Hybrid Data Replication Method in Fog Computing for IoT Application. Sensors, 23(4), 2189. https://doi.org/10.3390/s23042189