YOLOv4 with Deformable-Embedding-Transformer Feature Extractor for Exact Object Detection in Aerial Imagery

Abstract

:1. Introduction

- A new feature extraction network named a deformable embedding vision transformer (DEViT) is proposed, which has the excellent global feature extraction capability of transformers while using deformable embedding instead of normal embedding to efficiently extract features at different scales. In addition, a fully convolutional feedforward network (FCFN) is used to replace the feedforward neural network (FFN), and the extraction of location information is effectively enhanced by introducing zero padding and convolutional operations.

- The depthwise separable deformable convolution (DSDC) is proposed to reduce computational effort while preserving the deformable convolution’s ability to zero in on regions of interest. It is proposed that the depthwise separable deformable pyramid (DSDP) module extracts multiscale feature maps and prioritizes key features.

- Our proposed DET-YOLO achieved the highest accuracy among existing models, with mean average precision (mAP) values of 0.728 on the DOTA dataset, 0.952 on the RSOD dataset, and 0.945 on the UCAS-AOD dataset.

2. Methodology

2.1. Review of YOLOv4

2.2. DET-YOLO

2.3. Deformable Embedding Vision Transformer

2.3.1. Deformable Embedding

2.3.2. Full Convolution Feedforward Network

2.4. Depthwise Separable Deformable Pyramid

2.4.1. Depthwise Separable Deformable Convolution

2.4.2. Depthwise Separable Deformable Pyramid Module

3. Datasets and Experimental Settings

3.1. Data Description

3.2. Evaluation Metrics

3.3. Implementation Details

4. Experimental Results and Discussion

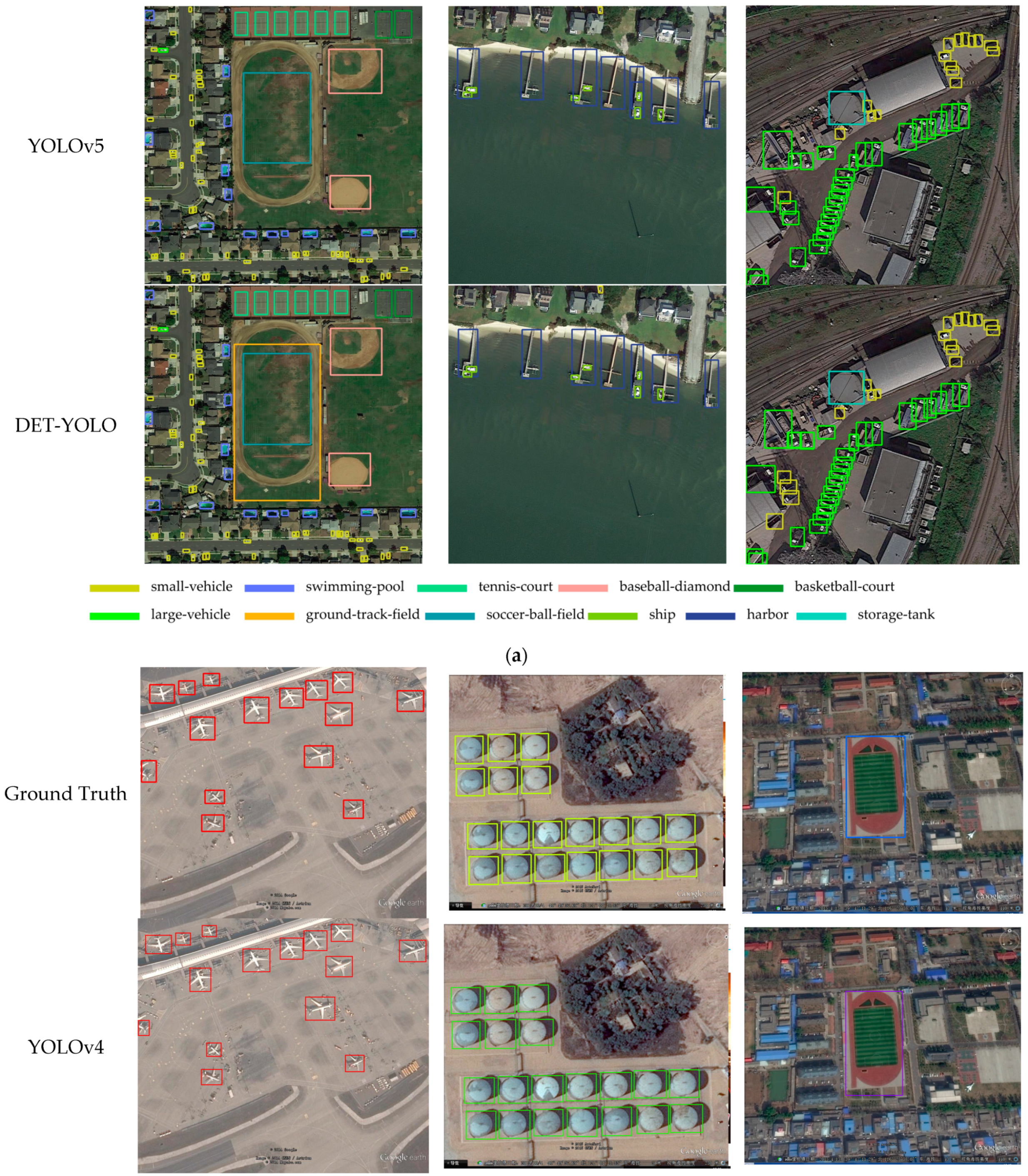

4.1. Contrasting Experiments

4.2. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lee, J.; Moon, S.; Nam, D.W.; Lee, J.; Oh, A.R.; Yoo, W. A Study on the Identification of Warship Type/Class by Measuring Similarity with Virtual Warship. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 21–23 October 2020; pp. 540–542. [Google Scholar]

- Reilly, V.; Idrees, H.; Shah, M. Detection and tracking of large number of targets in wide area surveillance. In Computer Vision—ECCV 2010, Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 186–199. [Google Scholar]

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782. [Google Scholar] [CrossRef]

- Yang, N.; Li, J.; Mo, W.; Luo, W.; Wu, D.; Gao, W.; Sun, C. Water depth retrieval models of East Dongting Lake, China, using GF-1 multi-spectral remote sensing images. Glob. Ecol. Conserv. 2020, 22, e01004. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- He, K.M.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A.J. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—Eccv 2016, Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. [Google Scholar]

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional Single Shot Detector. arXiv 2017, arXiv:1701.06659. [Google Scholar]

- Ma, W.; Wang, X.; Yu, J. A Lightweight Feature Fusion Single Shot Multibox Detector for Garbage Detection. IEEE Access 2020, 8, 188577–188586. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Online, 23–28 August 2020; pp. 213–229. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 9650–9660. [Google Scholar]

- Everingham, M.; van Gool, L.; Williams, C.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338. [Google Scholar] [CrossRef] [Green Version]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Cheng, G.; Lang, C.; Wu, M.; Xie, X.; Yao, X.; Han, J. Feature enhancement network for object detection in optical remote sensing images. J. Remote Sens. 2021, 2021, 9805389. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. Cspnet: A new backbone that can enhance learning capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580. [Google Scholar]

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768. [Google Scholar]

- Misra, D. Mish: A self regularized non-monotonic activation function. arXiv 2019, arXiv:1908.08681. [Google Scholar]

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier Nonlinearities Improve Neural Network Acoustic Models. 2013. Available online: https://www.mendeley.com/catalogue/a4a3dd28-b56b-3e0c-ac53-2817625a2215/ (accessed on 1 June 2021).

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-iou loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000. [Google Scholar]

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and Efficient IOU Loss for Accurate Bounding Box Regression. arXiv 2021, arXiv:2101.08158. [Google Scholar] [CrossRef]

- Loshchilov, I.; Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. arXiv 2021, arXiv:2102.12122. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. arXiv 2021, arXiv:2103.14030. [Google Scholar]

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Pasadena, CA, USA, 13–15 December 2021; pp. 10347–10357. [Google Scholar]

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773. [Google Scholar]

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (gelus). arXiv 2016, arXiv:1606.08415. [Google Scholar]

- Howard, A.; Zhu, M.; Chen, B.; Kalenichenko, D. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Islam, M.; Jia, S.; Bruce, N. How much position information do convolutional neural networks encode. arXiv 2020, arXiv:2001.08248. [Google Scholar]

- Li, Y.; Mao, H.; Girshick, R.; He, K. Exploring plain vision transformer backbones for object detection. arXiv 2022, arXiv:2203.16527. [Google Scholar]

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate Object Localization in Remote Sensing Images Based on Convolutional Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498. [Google Scholar] [CrossRef]

- Zhu, H.; Chen, X.; Dai, W.; Fu, K.; Ye, Q.; Jiao, J. Orientation robust object detection in aerial images using deep convolutional neural network. In Proceedings of the 2015 IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015; pp. 3735–3739. [Google Scholar]

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386. [Google Scholar]

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. Dota: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983. [Google Scholar]

- Xu, D.; Wu, Y. Improved YOLO-V3 with DenseNet for Multi-Scale Remote Sensing Target Detection. Sensors 2020, 20, 4276. [Google Scholar] [CrossRef] [PubMed]

- Xu, D.; Wu, Y. MRFF-YOLO: A Multi-Receptive Fields Fusion Network for Remote Sensing Target Detection. Remote Sens. 2020, 12, 3118. [Google Scholar] [CrossRef]

- Jocher, G.; Nishimura, K.; Mineeva, T. Yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 23 September 2022).

- Prechelt, L. Early stopping—But when? In Neural Networks: Tricks of the Trade, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 53–67. [Google Scholar]

- Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. Swin-Transformer-Enabled YOLOv5 with Attention Mechanism for Small Object Detection on Satellite Images. Remote Sens. 2022, 14, 2861. [Google Scholar] [CrossRef]

- Long, X.; Deng, K.; Wang, G.; Zhang, Y.; Dang, Q.; Gao, Y.; Shen, H.; Ren, J.; Han, S.; Ding, E.; et al. PP-YOLO: An effective and efficient implementation of object detector. arXiv 2020, arXiv:2007.12099. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. Tph-yolov5: Improved yolov5 based on transformer prediction head for object detection on drone- captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 19–25 June 2021; pp. 2778–2788. [Google Scholar]

| Datasets | Below 10 Pixels | 10–50 Pixels | 50–300 Pixels | Above 300 Pixels |

|---|---|---|---|---|

| RSOD | 0% | 36% | 60% | 4% |

| UCAS-AOD | 0% | 32% | 68% | 0% |

| DOTA | 16% | 69% | 14% | 1% |

| UAVDT | 1% | 89% | 10% | 0% |

| Datasets | Class | Image Number | |

|---|---|---|---|

| RSOD | Training Set | Oil Tank | 102 |

| Aircraft | 270 | ||

| Overpass | 140 | ||

| Playground | 140 | ||

| Test Set | Oil Tank | 63 | |

| Aircraft | 176 | ||

| Overpass | 36 | ||

| Playground | 49 | ||

| UCAS-AOD | Training Set | Aircraft | 600 |

| Car | 310 | ||

| Test Set | Aircraft | 400 | |

| Car | 200 | ||

| DOTA | Training Set | - | 14,729 |

| Test Set | - | 5066 | |

| UAVDT | Training Set | - | 23,829 |

| Test Set | - | 16,580 | |

| Methods | p | R | mAP (IOU = 0.5) |

|---|---|---|---|

| Faster R-CNN | 0.710 | 0.594 | 0.631 |

| SSD | 0.696 | 0.522 | 0.587 |

| RetinaNet | 0.714 | 0.585 | 0.622 |

| YOLOv3 | 0.716 | 0.532 | 0.575 |

| YOLOv4 | 0.732 | 0.593 | 0.653 |

| YOLOv5 | 0.742 | 0.607 | 0.659 |

| TPH-YOLOv5 [49] | 0.785 | 0.643 | 0.683 |

| SPH-YOLOv5 [47] | 0.806 * | 0.683 | 0.716 |

| DET-YOLO | 0.748 | 0.668 | 0.728 |

| Methods | p | R | AP | ||||

|---|---|---|---|---|---|---|---|

| Aircraft | Oil Tank | Overpass | Playground | mAP (IOU = 0.5) | |||

| Faster R-CNN | 0.873 | 0.748 | 0.859 | 0.867 | 0.882 | 0.904 | 0.878 |

| SSD | 0.824 | 0.682 | 0.692 | 0.712 | 0.702 | 0.813 | 0.729 |

| RetinaNet | 0.893 | 0.846 | 0.867 | 0.882 | 0.817 | 0.902 | 0.867 |

| YOLOv3 | 0.850 | 0.693 | 0.743 | 0.739 | 0.751 | 0.852 | 0.771 |

| YOLOv4 | 0.903 | 0.735 | 0.855 | 0.858 | 0.862 | 0.914 | 0.872 |

| YOLOv5 | 0.897 | 0.872 | 0.873 | 0.884 | 0.854 | 0.932 | 0.886 |

| DET-YOLO | 0.925 | 0.909 | 0.925 | 0.963 | 0.918 | 1.000 | 0.952 |

| Methods | p | R | AP | ||

|---|---|---|---|---|---|

| Airplane | Car | mAP (IOU = 0.5) | |||

| Faster R-CNN | 0.896 | 0.775 | 0.873 | 0.865 | 0.869 |

| SSD | 0.770 | 0.574 | 0.702 | 0.726 | 0.714 |

| RetinaNet | 0.887 | 0.742 | 0.843 | 0.865 | 0.854 |

| YOLOv3 | 0.772 | 0.692 | 0.757 | 0.756 | 0.757 |

| YOLOv4 | 0.894 | 0.732 | 0.857 | 0.862 | 0.859 |

| YOLOv5 | 0.892 | 0.785 | 0.892 | 0.858 | 0.875 |

| DET-YOLO | 0.962 | 0.863 | 0.997 | 0.892 | 0.945 |

| Methods | p | R | AP | |||

|---|---|---|---|---|---|---|

| Car | Truck | Bus | mAP (IOU = 0.5) | |||

| YOLOv4 | 0.438 | 0.431 | 0.765 | 0.104 | 0.332 | 0.400 |

| YOLOv5 | 0.471 | 0.427 | 0.767 | 0.121 | 0.349 | 0.419 |

| DET-YOLO | 0.464 | 0.432 | 0.777 | 0.131 | 0.365 | 0.424 |

| Methods | Speed (ms per Picture) |

|---|---|

| YOLOv3 | 28.4 ms |

| YOLOv4 | 43.2 ms |

| YOLOv5 | 102.0 ms |

| TPH-YOLOv5 | 123.5 ms |

| DET-YOLO | 62.1 ms |

| Methods | ViT | DE | DEViT | FPN | DSDP | SAT | PAL | mAP0.50 | mAP0.50:0.95 |

|---|---|---|---|---|---|---|---|---|---|

| YOLOv4 | √ | 0.653 | 0.438 | ||||||

| DET-YOLO | √ | √ | 0.650 | 0.433 | |||||

| √ | √ | 0.664 | 0.447 | ||||||

| √ | √ | √ | 0.687 | 0.471 | |||||

| √ | √ | 0.710 | 0.495 | ||||||

| √ | √ | √ | √ | 0.717 | 0.509 | ||||

| √ | √ | 0.720 | 0.514 | ||||||

| √ | √ | 0.728 | 0.515 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Y.; Li, J. YOLOv4 with Deformable-Embedding-Transformer Feature Extractor for Exact Object Detection in Aerial Imagery. Sensors 2023, 23, 2522. https://doi.org/10.3390/s23052522

Wu Y, Li J. YOLOv4 with Deformable-Embedding-Transformer Feature Extractor for Exact Object Detection in Aerial Imagery. Sensors. 2023; 23(5):2522. https://doi.org/10.3390/s23052522

Chicago/Turabian StyleWu, Yiheng, and Jianjun Li. 2023. "YOLOv4 with Deformable-Embedding-Transformer Feature Extractor for Exact Object Detection in Aerial Imagery" Sensors 23, no. 5: 2522. https://doi.org/10.3390/s23052522