Physical inactivity is the fourth leading cause of death worldwide [1]. Indeed, 5.3 million people die yearly from non-communicable diseases attributable to physical inactivity, including breast and colon cancers, type 2 diabetes, and coronary heart disease [2]. The World Health Organization is committed to reducing the prevalence of physical inactivity by 10% by the end of 2025 [3], drawing even more attention to this phenomenon.

The restrictive measures widely adopted to counter the spread of COVID-19 have further reduced the general level of physical activity, mainly because citizens were forced to stay in their homes [4,5]. In addition, lockdowns and other travel-limiting measures have restricted access to gyms, parks, and other places where people can train and work out [6]. On the other hand, home confinement and increased time availability have fostered the use of digital communication technologies [7]. Therefore, even though the pandemic caused a further decrease in physical activity, the adoption of mobile health (mHealth) applications has increased, thereby opening up new perspectives for promoting physical activity.

This trend indicates the potential to reach a large number of individuals with smartphone-based interventions that promote and support physical activity at relatively little cost [7]. Notably, mobile methods allow researchers to remotely collect data on actual app usage in a non-invasive manner and in a fully ecological context [8].

A growing body of evidence indicates that mHealth can positively influence behavior change, resulting in improved health outcomes. However, with specific regard to physical activity, the results are mixed [9]. This may be due to the poor quality of the apps or the limited inclusion of gamification and competition elements [9]. Yet, the potential of gamified mobile apps to promote higher levels of physical activity is well exemplified by the Pokémon GO app, which increased the number of young adults who reach 10,000 steps per day [10].

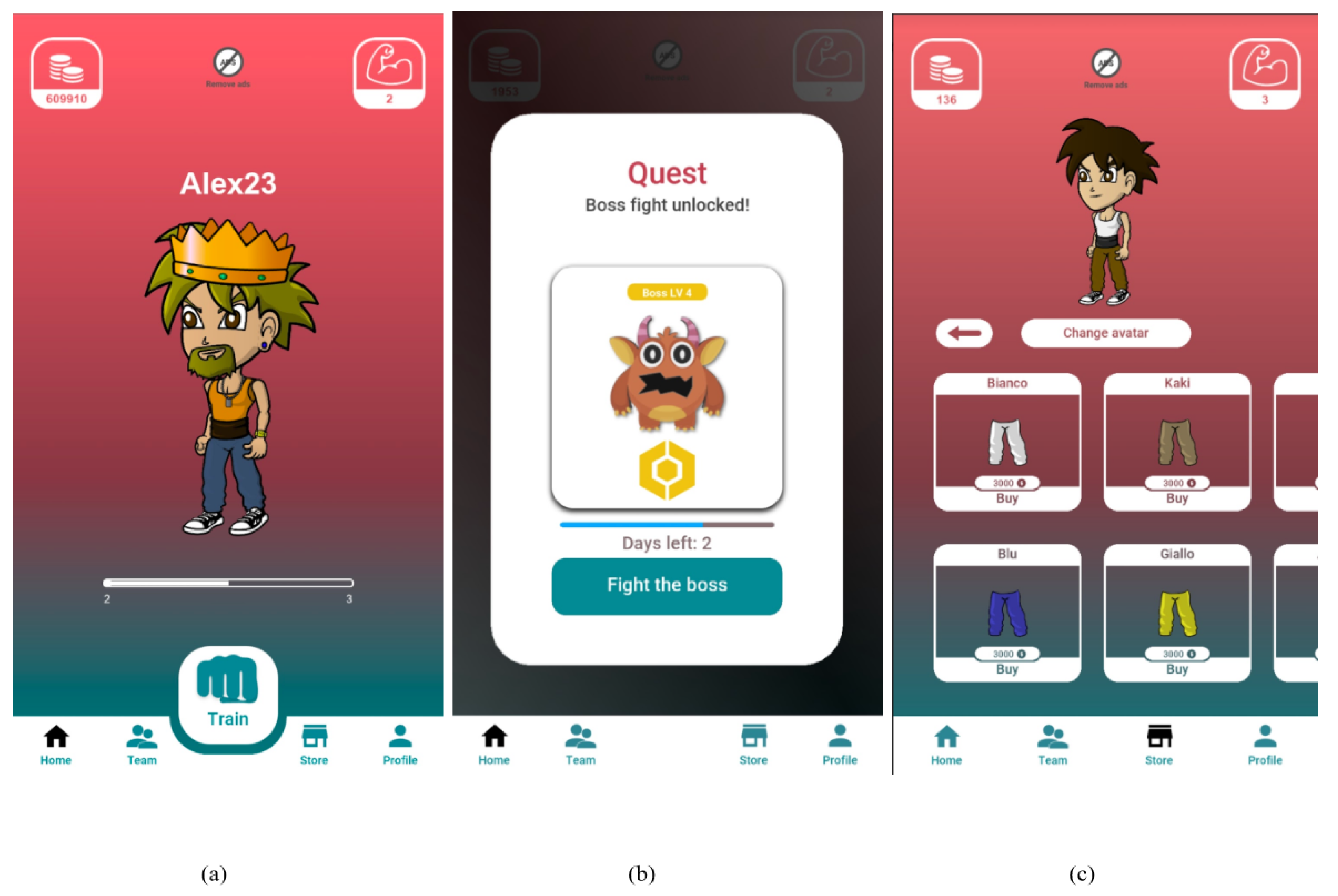

The study presented in this paper has the explicit purpose of helping people become more physically active, leveraging gamification to improve engagement and promote behavior change. Additionally, to address the new challenges posed by the pandemic and the resulting isolation, it exploits mobile methods to achieve a no-contact and fully remote field study. To do this, we purposefully developed a mobile app promoting physical activity, designed to be the experimental platform on which participants complete all the experimental phases. Moreover, to evaluate the effectiveness of game design elements, we developed three app versions that differ in the richness of the gamification features implemented. Participants were randomly divided into three groups, each associated with a different experimental condition running on a dedicated version of the app.

To investigate the effect of different patterns of game elements, we collected data logs about app retention time on the user’s device, behavioral data regarding the number of workout sessions, and user performance. The rest of the paper is organized as follows. The next section presents the related work. Subsequently, the study is described, focusing on the developed app, the experimental method, and the procedure. Finally, the analyses are detailed and the outcomes discussed.

1.1. Gamification to Foster Behavior Change

In recent years, numerous apps have been developed to help users increase physical activity both through daily monitoring (e.g., step counters) and through performance-related push notifications [11]. However, up to 75% of users who install health apps stop using them within two weeks of installation [12].

To overcome these high dropout rates, a number of different strategies have been tested. The presence of social elements, for example, has proven to be an effective motivational lever for gym fanatics, leading many fitness enthusiasts to spend several hours each day in intensive training sessions for the sole purpose of being able to “post” successful selfies on their Instagram accounts [13].

In the same vein, many apps have embedded elements typical of the world of video games, such as badges and levels, managing to increase the engagement of their users [14]. Notably, apps that removed these elements because they considered them superfluous, such as Nike+, which removed badges from its apps in 2016, saw a reduction in involvement and a sense of dissatisfaction among their users [15].

Many researchers have emphasized the importance of investigating the role of gamification in apps designed to promote physical activity [16]. In particular, Johnson and collaborators [17] found that gamified smartphone apps are in fact capable of increasing the level of physical activity.

In the present work, we refer to the definition of gamification proposed by Deterding, who conceptualized it as the “use of game design elements in non-game contexts” [18]. More specifically, by game design elements we mean features that, once introduced in non-playful contexts, can replicate playful dynamics without necessarily turning the application into an actual game. There are many possible game elements, but points, rankings, and badges are the ones most commonly employed [19]. Often these elements are simply added to the application without taking into consideration the user experience or the dynamics they generate, resulting in an ineffective approach known as pointification [20]. In recent years, however, other approaches have been developed that try to incorporate more aspects of the experience, such as the Smart Gamification Model by Kim [21]. Her model emphasizes the importance of applying different mechanics depending on the user’s specific needs and expertise level [21]. More specifically, she breaks the player journey into three stages, each identifying a different type of player. Newbies need onboarding elements to understand and appreciate the new game world into which they are introduced. Regular players are already familiar with the game mechanics and require habit-building elements to master the game. Finally, enthusiasts need elements that recognize their ability after they have reached total mastery of the game.

1.2. Leveraging Mobile Apps to Run Field Experiments

The distinctive feature of field experiments is that they are conducted in natural environments. Their main value thus lies in their high ecological validity, which increases the researcher’s confidence that the phenomenon under investigation occurs naturally and unfolds spontaneously. In this respect, the emerging field of mobile methods is particularly promising because it enables the collection of detailed event logs of naturally occurring behaviors by leveraging mobile communication technologies [8].

Furthermore, mobile apps offer many additional advantages as data collection tools. First, scaling, that is, the ability to reach a large pool of participants with relatively few resources, is a common struggle in field experiments [22]. In this respect, virtually everybody owns a smartphone and/or a tablet [23]. In the United States, 95% of adults aged 18–35 years and 60% of adults aged over 50 years own a smartphone [24]. This wide penetration potentially makes every device both an intervention facilitator and a data collection tool, making it possible to track thousands of people interacting with the platform [22].

A further benefit concerns the control over the randomization and the delivery of the experimental materials. In this regard, mobile apps can automate the random assignment and control material delivery, avoiding human errors and ensuring strict double-blind experiments [22]. According to Helbing and Pournaras [25], mobile methods could become the new gold standard for accurate measures of real-time behavior changes.
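
As an illustration of how an app could automate random assignment without researcher intervention, the following minimal Python sketch allocates participants to three conditions using permuted-block randomization, which keeps group sizes balanced as participants enroll. The condition labels, the function name, and the choice of block randomization are assumptions made for illustration only; they do not describe the procedure used in any of the cited studies.

```python
import random

# Hypothetical labels for three experimental conditions (illustrative only).
CONDITIONS = ("A", "B", "C")


def assign_conditions(participant_ids, conditions=CONDITIONS, seed=None):
    """Assign each participant to a condition using permuted blocks.

    Every consecutive block of len(conditions) participants receives each
    condition exactly once, in a random order, so group sizes never differ
    by more than one.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    assignment = {}
    block = []
    for pid in participant_ids:
        if not block:
            # Start a new block: one slot per condition, in shuffled order.
            block = list(conditions)
            rng.shuffle(block)
        assignment[pid] = block.pop()
    return assignment


if __name__ == "__main__":
    ids = [f"p{i}" for i in range(9)]
    for pid, condition in assign_conditions(ids, seed=42).items():
        print(pid, condition)
```

Because the allocation is computed by the software at enrollment, neither the participant nor the researcher chooses the condition, which is the property that supports blinding in an app-based experiment.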

Still, we have to acknowledge that mobile methods have an important limitation concerning the generalizability of the results to the entire population. Indeed, the sample is inherently biased, because participation in the study usually requires a certain level of expertise and familiarity with these technologies, and the sample therefore fails to represent people with low digital literacy. In the research of Rothschild and colleagues [26], for example, only 6% of contacted women enrolled in the study. The authors acknowledged that the sociodemographic characteristics of the sample could differ from those of the source group.

Despite all these potential benefits, only a few studies have used apps as experimental platforms; that is, mobile apps that are purposefully implemented to collect data, deliver experiment materials, and manage the random assignment across different experimental conditions, without the intervention of the researcher to mediate the interaction between the participant and the app. Typically, apps have been used to run the experimental condition paired with a non-app-based control condition, including, for instance, face-to-face training [22].

Mulcahy and colleagues [27] conducted a field experiment to test whether the level of sustainability of actual consumers could be improved by using game elements (including points, badges, and other rewards). To this end, they developed a custom app in collaboration with a local city government and were able to randomly recruit 601 real consumers. They showed that gamification positively affected consumers’ attitudes and behaviors toward bill savings.

A further study aimed at improving sustainability-related behaviors also employed a gamification app [28]. More specifically, the authors examined users’ experiences with a gamified app designed to promote sustainable energy behaviors. The results indicated that the sustainable behavior of turning off electricity switches could be encouraged through gamification. It should be noted that this study employed the “Reduce Your Juice” app, which was not purposefully developed to be an experimental platform. Indeed, the pre- and post-test phases were carried out on different digital platforms.

Gamification apps have also been employed in longitudinal field studies. For instance, Feng and collaborators [29] analyzed a gamification app to investigate whether the type of game element, commensurate or incommensurate, had an impact on its effectiveness. The term commensurability refers to the extent to which consumers are able to quantify the value of a reward [30]. For example, commensurate game elements are directly associated with consumers’ performances, while incommensurate game elements are not related to their performances. The former were found to be associated with user performances, while the latter were connected to the satisfaction of psychological needs (i.e., autonomy, competence, and relatedness). However, it should be acknowledged that in this case the apps did not differ only in their commensurate or incommensurate game elements; rather, the entire app was different.

The study presented here aimed to overcome the above-mentioned limitations. More specifically, we purposefully developed a mobile app designed to be the experimental platform on which participants complete all the experimental phases. In the present article, we report a field experiment meant to investigate the contribution of gamification features to fostering compliance in a mobile application promoting physical activity.