A Multi-Layered 3D NDT Scan-Matching Method for Robust Localization in Logistics Warehouse Environments

Abstract

1. Introduction

- A complete mathematical description of multi-layered 3D NDT scan-matching is provided;

- The robustness of 3D NDT scan-matching-based localization is improved by identifying the layers that contribute most to the uncertainty of scan-matching against the entire map;

- Accurate localization is achieved in cluttered and dynamic logistics warehouse environments;

- The robustness and accuracy of the proposed method are evaluated against standard 3D NDT scan-matching using the domain randomization technique of Nvidia Isaac Sim, which provides a photorealistic and physically accurate virtual environment.

2. Methods

3. Simulation and Performance Evaluation

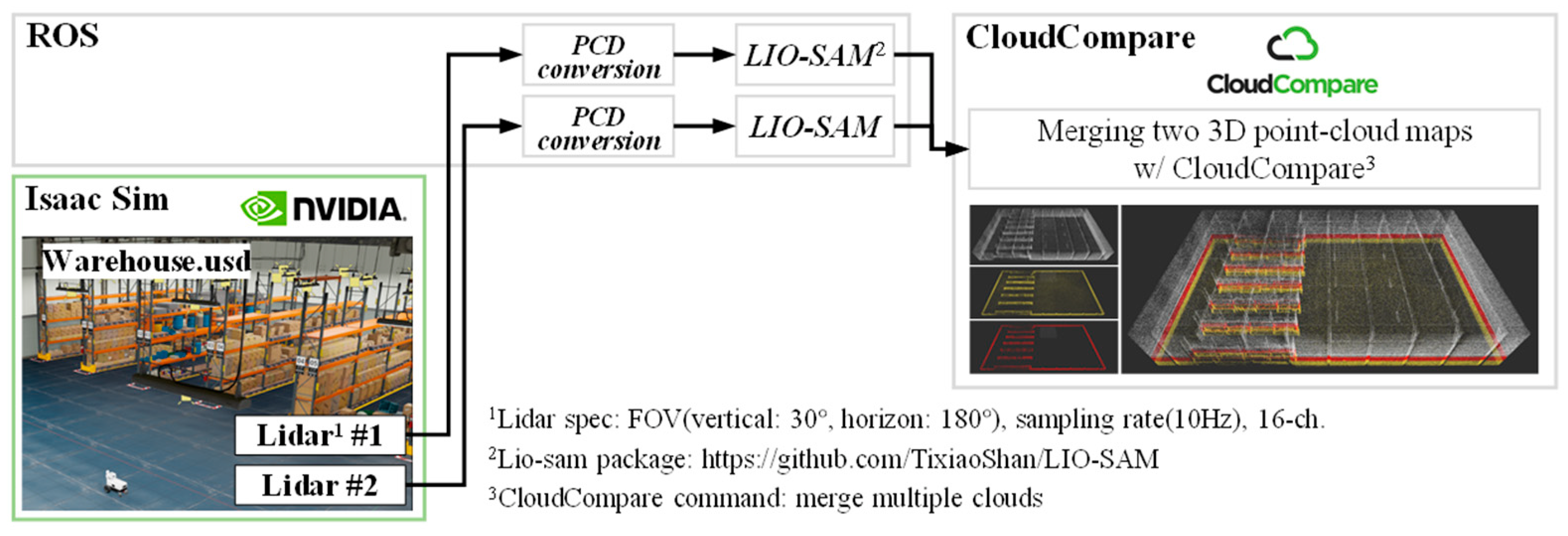

3.1. Simulation Framework of a Multi-Layered 3D NDT Scan-Matching Method

| Algorithm 1 Pseudocode for the covariance intersection of multiple covariance matrices |
|---|
| Inputs: layered 3D point-cloud scan data; leaf size for voxelization |
| Outputs: mean vector of the i-th layer; covariance matrix of the i-th layer |
| Initialization process: |
| 1: Create an instance of the CovarianceIntersection class as fuser; |
| 2: Voxelize the layered 3D point-cloud scan data; |
| 3: Load the sets of mean and covariance arrays from the voxelization; |
| 4: Initialize mean(1) and cov(1) with the mean and covariance of the 1st cell. |
| Covariance intersection process: |
| 1: for all cells j = 2, …, num_cell do |
| 2: current_mean = mean(j), current_cov = cov(j); |
| 3: fused_mean = mean(j − 1), fused_cov = cov(j − 1); |
| 4: mean(j), cov(j) = fuser.fuse(fused_mean, fused_cov, current_mean, current_cov); |
| 5: end for |
| 6: return the final mean and cov as the mean vector and covariance matrix of the i-th layer, respectively. |
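The fusion loop of Algorithm 1 can be sketched in Python with NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. `ci_fuse` and `fuse_layer` are hypothetical names: `ci_fuse` stands in for `fuser.fuse` and does not reproduce the API of the CovarianceIntersection class. The weight ω is chosen to minimize the trace of the fused covariance, which is an assumed criterion (the determinant is another common choice). The search for ω uses golden-section search. Because the matrix inverse is operator-convex, the trace cost is convex in ω, so the search does not get trapped in a local minimum.

```python
import numpy as np


def ci_fuse(mean_a, cov_a, mean_b, cov_b, num_iters=80):
    """Fuse two Gaussian estimates with covariance intersection (CI).

    omega in [0, 1] is chosen to minimize trace(fused_cov) (assumed criterion).
    Returns (fused_mean, fused_cov, omega).
    """
    inv_a = np.linalg.inv(cov_a)
    inv_b = np.linalg.inv(cov_b)

    def fused_info(omega):
        # CI information matrix: omega * A^-1 + (1 - omega) * B^-1
        return omega * inv_a + (1.0 - omega) * inv_b

    def cost(omega):
        return np.trace(np.linalg.inv(fused_info(omega)))

    # Golden-section search on [0, 1]; the cost is convex, so the
    # shrinking bracket always contains the global minimizer.
    lo, hi = 0.0, 1.0
    ratio = (np.sqrt(5.0) - 1.0) / 2.0
    for _ in range(num_iters):
        m1 = hi - ratio * (hi - lo)
        m2 = lo + ratio * (hi - lo)
        if cost(m1) <= cost(m2):
            hi = m2
        else:
            lo = m1
    omega = 0.5 * (lo + hi)

    fused_cov = np.linalg.inv(fused_info(omega))
    fused_mean = fused_cov @ (omega * inv_a @ mean_a + (1.0 - omega) * inv_b @ mean_b)
    return fused_mean, fused_cov, omega


def fuse_layer(cell_means, cell_covs):
    """Sequentially fuse all voxel-cell Gaussians of one layer (the loop of Algorithm 1)."""
    mean = np.asarray(cell_means[0], dtype=float)
    cov = np.asarray(cell_covs[0], dtype=float)
    for current_mean, current_cov in zip(cell_means[1:], cell_covs[1:]):
        # The running fused estimate plays the role of mean(j - 1), cov(j - 1).
        mean, cov, _ = ci_fuse(mean, cov,
                               np.asarray(current_mean, dtype=float),
                               np.asarray(current_cov, dtype=float))
    return mean, cov
```

Calling `fuse_layer` on the per-cell means and covariances of one layer returns that layer's mean vector and covariance matrix. The result of each fusion is carried forward as the running estimate, which mirrors step 4 of the loop.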

3.2. Simulation Environment: Logistics Warehouse

- Racks and Shelving: The primary storage structures in a warehouse, used to store boxes, pallets, and other items;

- Conveyors: Used to move items and packages around the warehouse, including receiving docks, sorting lines, and shipping docks;

- Forklifts: Used to move heavy items and pallets within the warehouse, including stacking and retrieving items from the racks;

- Loading Bays: Areas designated for loading and unloading trucks, trailers, and containers;

- Pick and Pack Stations: Areas designated for picking and packing items for shipment, typically equipped with conveyors, packing tables, and materials handling equipment.

3.3. Comparative Study: Convergence Performance and Scan-Matching Robustness

- Scenario 1: 3D scan-matching-based localization starts without object teleportation. When scan-matching starts, the scene is identical to the original map; Isaac Sim's teleport feature is then used to make boxes appear on the pallet around the mobile robot (MR) and to change their locations at regular time intervals;

- Scenario 2: 3D scan-matching-based localization starts with object teleportation. Unlike Scenario 1, scan-matching starts in a scene that has already been altered by teleportation and therefore differs from the original 3D point-cloud map.
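Both scenarios are scored by a distance error mean in mm and a heading error mean in deg. The section does not define these metrics. The sketch below is one plausible definition, under these assumptions:

- Estimated and ground-truth poses are planar (x, y, yaw) and already paired by timestamp.
- The heading error is signed, since the reported means are negative.

`pose_error_means` is a hypothetical helper.

```python
import numpy as np


def pose_error_means(est, gt):
    """Mean planar distance error [mm] and mean signed heading error [deg].

    est, gt -- (N, 3) arrays of time-synchronized poses: x [m], y [m], yaw [rad]
    """
    est = np.asarray(est, dtype=float)
    gt = np.asarray(gt, dtype=float)
    # Euclidean position error per pose, converted from m to mm.
    dist_mm = 1000.0 * np.linalg.norm(est[:, :2] - gt[:, :2], axis=1)
    # Wrap yaw differences into [-pi, pi] so that 179 deg vs -179 deg gives -2 deg, not 358 deg.
    dyaw = est[:, 2] - gt[:, 2]
    dyaw = np.arctan2(np.sin(dyaw), np.cos(dyaw))
    return float(dist_mm.mean()), float(np.degrees(dyaw).mean())
```

Keeping the heading error signed preserves a systematic bias such as the negative means in the result tables. A mean of absolute values would instead measure typical magnitude.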

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Matching Resolution | Downsampling Rate [%] | Voxel Size [m] | Transformation Epsilon | Max. Iterations | Distance Metric |
|---|---|---|---|---|---|
| 1.0 | 50 | 0.5 × 0.5 × 0.5 | 0.1 | 35 | Euclidean distance |
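The parameter table lists a 50% downsampling rate and a 0.5 m voxel size. The sketch below shows how such parameters could produce the per-cell means and covariances that Algorithm 1 consumes. It is a minimal sketch, not the authors' pipeline, and it rests on these assumptions:

- `voxel_gaussians` is a hypothetical helper.
- The 50% rate is read as "keep half the points at random".
- Voxels with fewer than `min_points` points are skipped, and the threshold of 5 is an assumed value.

```python
import numpy as np


def voxel_gaussians(points, leaf_size=0.5, keep_ratio=0.5, min_points=5, seed=0):
    """Hypothetical helper: downsample, voxelize, and return
    {voxel_index: (mean, covariance)} for every sufficiently populated voxel.

    points     -- (N, 3) array of x, y, z coordinates [m]
    leaf_size  -- voxel edge length [m]
    keep_ratio -- fraction of points kept by random downsampling (assumed reading of 50%)
    min_points -- assumed minimum point count for a usable 3x3 covariance
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    n_keep = int(round(keep_ratio * len(points)))
    sampled = points[rng.choice(len(points), size=n_keep, replace=False)]

    # Integer voxel index of each point along x, y, z.
    keys = np.floor(sampled / leaf_size).astype(np.int64)
    buckets = {}
    for key, point in zip(map(tuple, keys), sampled):
        buckets.setdefault(key, []).append(point)

    cells = {}
    for key, pts in buckets.items():
        if len(pts) < min_points:
            continue  # too few points for a stable covariance estimate
        pts = np.asarray(pts)
        cells[key] = (pts.mean(axis=0), np.cov(pts, rowvar=False))
    return cells
```

The values of the returned dictionary can be passed to a sequential fusion step such as the loop in Algorithm 1.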

| Method | Distance Error Mean [mm], Scenario 1-1 | Distance Error Mean [mm], Scenario 2-1 | Heading Error Mean [deg], Scenario 1-1 | Heading Error Mean [deg], Scenario 2-1 | Computation Time [ms] |
|---|---|---|---|---|---|
| 3D NDT | 124.3 | 124.3 | −0.39 | −0.39 | 202 |
| ML 3D NDT | 98.22 (−21.0%) | 98.2 (−20.9%) | −0.24 (38.5%) | −0.23 (41.0%) | 224 (10.9%) |
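The percentages in the ML 3D NDT row are changes relative to the 3D NDT row. The short check below recomputes three of them. The heading entries are read here as the reduction in error magnitude, so they are computed on absolute values. That reading is our interpretation; the table does not state it.

```python
def relative_change(value, baseline):
    """Relative change of `value` with respect to `baseline`, in percent."""
    return 100.0 * (value - baseline) / baseline


# Distance error mean, Scenario 1-1: ML 3D NDT vs. 3D NDT
print(round(relative_change(98.22, 124.3), 1))  # -> -21.0
# Heading error mean, Scenario 1-1, compared by magnitude
print(round(relative_change(abs(-0.24), abs(-0.39)), 1))  # -> -38.5
# Computation time
print(round(relative_change(224, 202), 1))  # -> 10.9
```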

| Metric | 20 cm, ML-3D NDT | 20 cm, 3D NDT | 40 cm, ML-3D NDT | 40 cm, 3D NDT | 60 cm |
|---|---|---|---|---|---|
| Distance error [mm] | 101.7 (−18.6%) | 125.0 | 103.2 (−18.3%) | 126.3 | divergence |
| Heading error [deg] | −0.205 (−47.4%) | −0.39 | −0.21 (−46.1%) | −0.39 | divergence |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, T.; Jeon, H.; Lee, D. A Multi-Layered 3D NDT Scan-Matching Method for Robust Localization in Logistics Warehouse Environments. Sensors 2023, 23, 2671. https://doi.org/10.3390/s23052671
