Exploring Self-Supervised Vision Transformers for Gait Recognition in the Wild

Abstract

1. Introduction

2. Related Work

2.1. Gait Recognition

2.2. Vision Transformers

3. Method

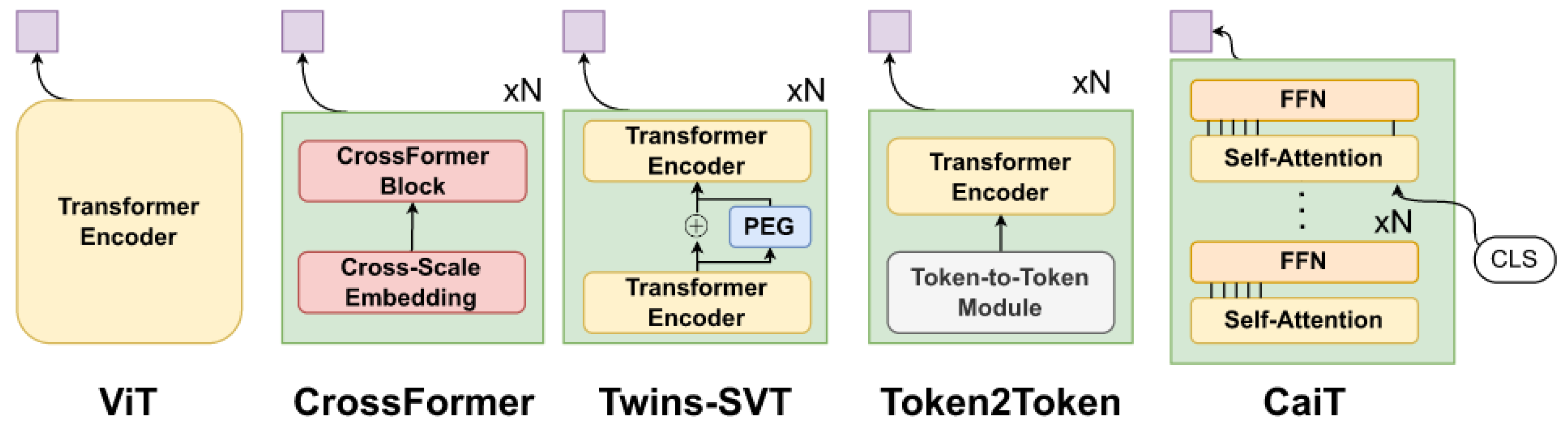

3.1. Architectures Description

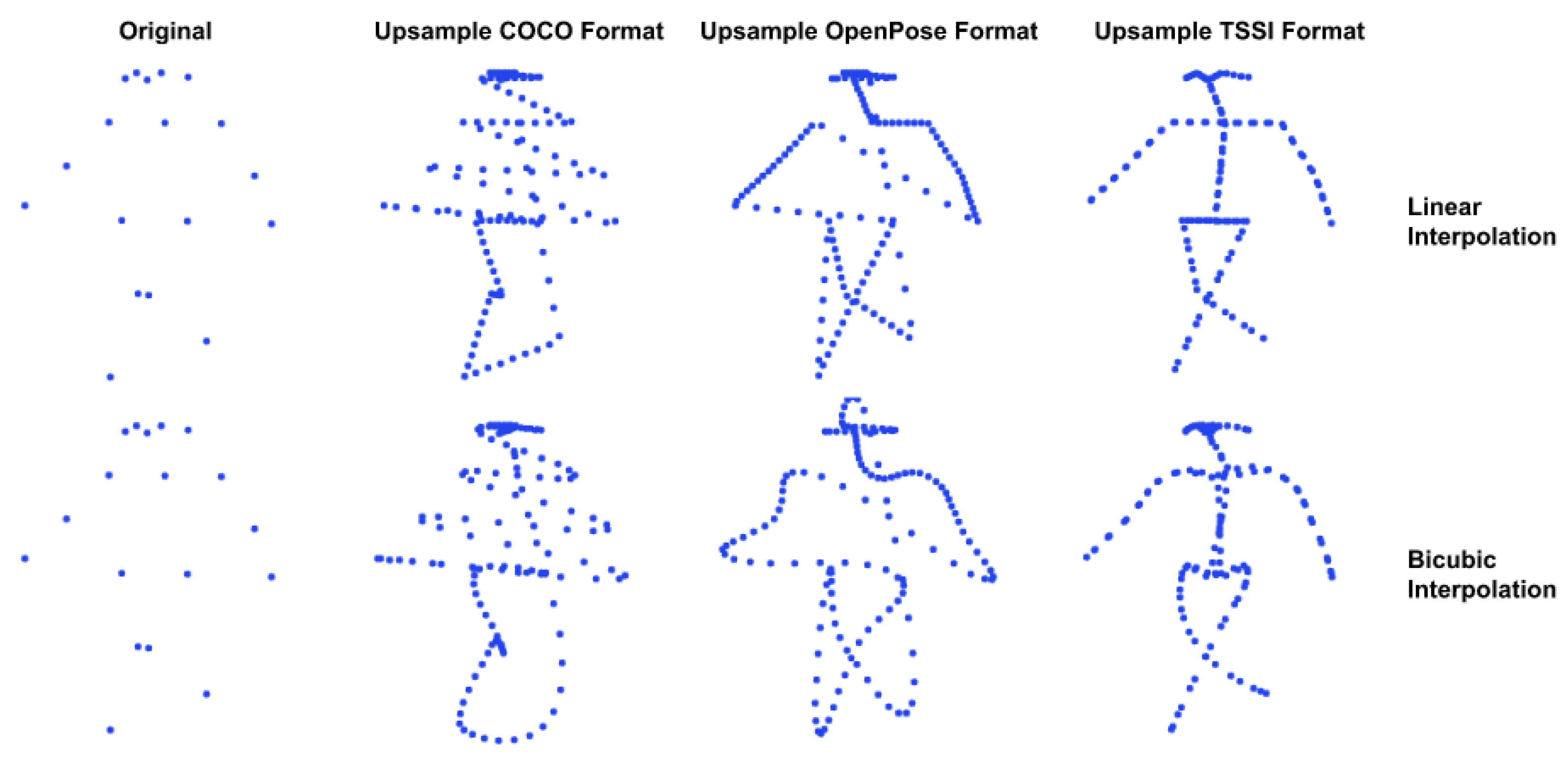

3.2. Data Preprocessing

3.3. Training Details

3.4. Data Augmentation

3.5. Initialization Methods

3.6. Evaluation

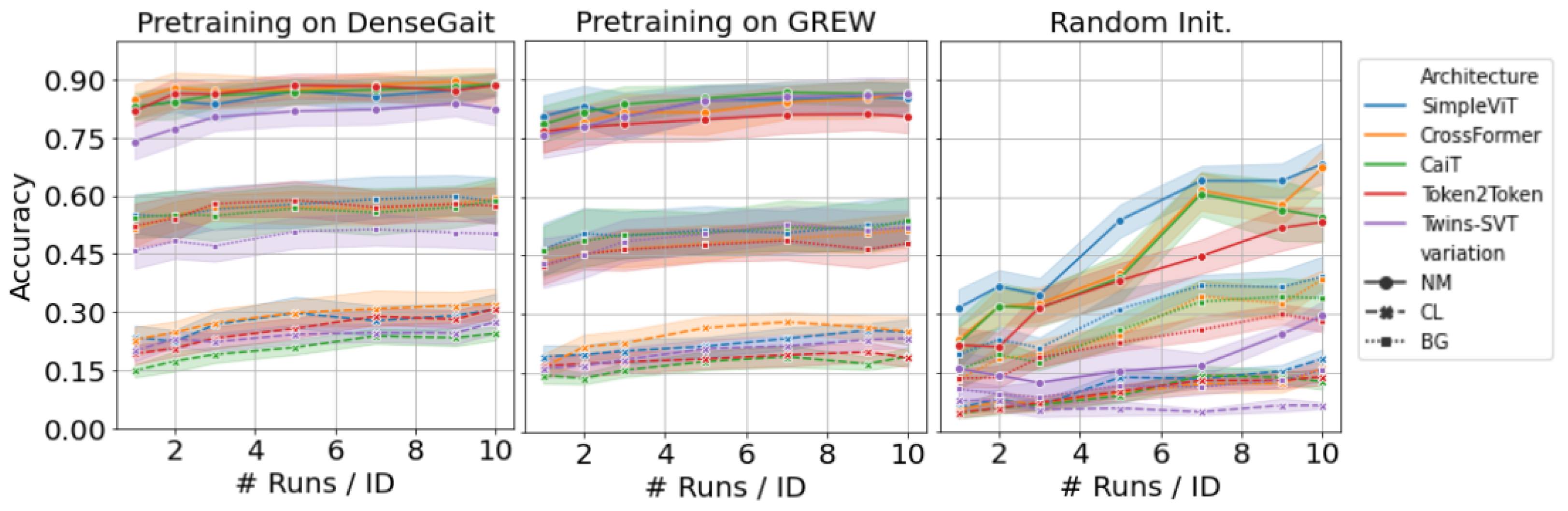

4. Experiments and Results

4.1. Evaluation of CASIA-B

4.2. Evaluation of FVG

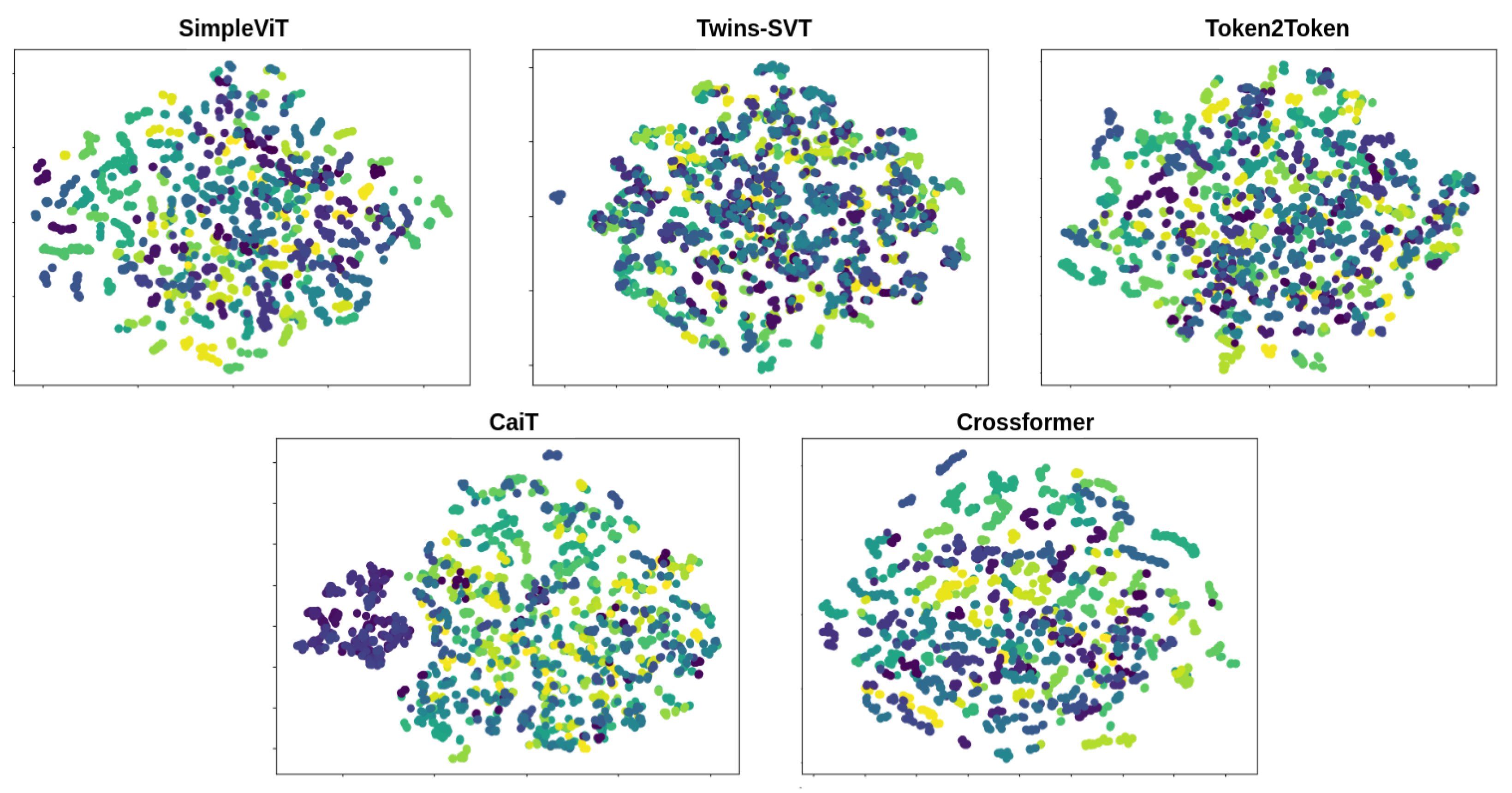

4.3. Spatiotemporal Sensitivity Test

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LN | LayerNorm |
| MSA | multi-head self-attention |
| FFN | feedforward network |
| SS | soft split |
| SSSA | spatially separable self-attention |
| LSA | locally-grouped self-attention |
| GSA | global sub-sampled attention |
| SDA | short-distance attention |
| LDA | long-distance attention |
| TSSI | tree skeleton structure image |
| SupCon | supervised contrastive |
| FVG | front-view gait |
| GREW | gait recognition in the wild |
| ViT | vision transformer |

References

- Kyeong, S.; Kim, S.M.; Jung, S.; Kim, D.H. Gait pattern analysis and clinical subgroup identification: A retrospective observational study. Medicine 2020, 99, e19555.
- Michalak, J.; Troje, N.F.; Fischer, J.; Vollmar, P.; Heidenreich, T.; Schulte, D. Embodiment of Sadness and Depression—Gait Patterns Associated With Dysphoric Mood. Psychosom. Med. 2009, 71, 580–587.
- Willems, T.M.; Witvrouw, E.; De Cock, A.; De Clercq, D. Gait-related risk factors for exercise-related lower-leg pain during shod running. Med. Sci. Sports Exerc. 2007, 39, 330–339.
- Singh, J.P.; Jain, S.; Arora, S.; Singh, U.P. Vision-based gait recognition: A survey. IEEE Access 2018, 6, 70497–70527.
- Makihara, Y.; Nixon, M.S.; Yagi, Y. Gait recognition: Databases, representations, and applications. Comput. Vis. Ref. Guide 2020, 1–13.
- Yu, S.; Tan, D.; Tan, T. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 4, pp. 441–444.
- Zhu, Z.; Guo, X.; Yang, T.; Huang, J.; Deng, J.; Huang, G.; Du, D.; Lu, J.; Zhou, J. Gait Recognition in the Wild: A Benchmark. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021.
- Chao, H.; He, Y.; Zhang, J.; Feng, J. Gaitset: Regarding gait as a set for cross-view gait recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8126–8133.
- Fan, C.; Peng, Y.; Cao, C.; Liu, X.; Hou, S.; Chi, J.; Huang, Y.; Li, Q.; He, Z. Gaitpart: Temporal part-based model for gait recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14225–14233.
- Cosma, A.; Radoi, I.E. WildGait: Learning Gait Representations from Raw Surveillance Streams. Sensors 2021, 21, 8387.
- Catruna, A.; Cosma, A.; Radoi, I.E. From Face to Gait: Weakly-Supervised Learning of Gender Information from Walking Patterns. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–5.
- Cosma, A.; Radoi, E. Learning Gait Representations with Noisy Multi-Task Learning. Sensors 2022, 22, 6803.
- Kirkcaldy, B.D. Individual Differences in Movement; Kirkcaldy, B.D., Ed.; MTP Press Lancaster: England, UK; Boston, MA, USA, 1985; pp. 14, 309.
- Zheng, J.; Liu, X.; Liu, W.; He, L.; Yan, C.; Mei, T. Gait Recognition in the Wild with Dense 3D Representations and A Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–20 June 2022.
- Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. IEEE Trans. Knowl. Data Eng. 2021, 35, 857–876.
- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.
- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 9650–9660.
- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the Thirty-second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.
- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.
- Xu, C.; Makihara, Y.; Liao, R.; Niitsuma, H.; Li, X.; Yagi, Y.; Lu, J. Real-Time Gait-Based Age Estimation and Gender Classification From a Single Image. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 3460–3470.
- Cao, Z.; Hidalgo Martinez, G.; Simon, T.; Wei, S.; Sheikh, Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.
- Li, J.; Wang, C.; Zhu, H.; Mao, Y.; Fang, H.S.; Lu, C. Crowdpose: Efficient crowded scenes pose estimation and a new benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10863–10872.
- Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and Unifying Graph Convolutions for Skeleton-Based Action Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 143–152.
- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
- Touvron, H.; Cord, M.; Sablayrolles, A.; Synnaeve, G.; Jégou, H. Going deeper with image transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 32–42.
- Wang, W.; Yao, L.; Chen, L.; Lin, B.; Cai, D.; He, X.; Liu, W. CrossFormer: A versatile vision transformer hinging on cross-scale attention. arXiv 2021, arXiv:2108.00154.
- Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 9355–9366.
- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.H.; Tay, F.E.; Feng, J.; Yan, S. Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 558–567.
- Zhang, Z.; Tran, L.; Yin, X.; Atoum, Y.; Wan, J.; Wang, N.; Liu, X. Gait Recognition via Disentangled Representation Learning. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.
- Teepe, T.; Khan, A.; Gilg, J.; Herzog, F.; Hörmann, S.; Rigoll, G. Gaitgraph: Graph convolutional network for skeleton-based gait recognition. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2314–2318.
- Fu, Y.; Wei, Y.; Zhou, Y.; Shi, H.; Huang, G.; Wang, X.; Yao, Z.; Huang, T. Horizontal Pyramid Matching for Person Re-Identification. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.
- Song, Y.F.; Zhang, Z.; Shan, C.; Wang, L. Stronger, faster and more explainable: A graph convolutional baseline for skeleton-based action recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1625–1633.
- Li, N.; Zhao, X. A Strong and Robust Skeleton-based Gait Recognition Method with Gait Periodicity Priors. IEEE Trans. Multimed. 2022.
- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.
- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 568–578.
- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.
- Yang, J.; Li, C.; Zhang, P.; Dai, X.; Xiao, B.; Yuan, L.; Gao, J. Focal attention for long-range interactions in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 30008–30022.
- Chen, C.F.; Panda, R.; Fan, Q. Regionvit: Regional-to-local attention for vision transformers. arXiv 2021, arXiv:2106.02689.
- Graham, B.; El-Nouby, A.; Touvron, H.; Stock, P.; Joulin, A.; Jégou, H.; Douze, M. Levit: A vision transformer in convnet’s clothing for faster inference. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 12259–12269.
- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. Vivit: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 6836–6846.
- Bertasius, G.; Wang, H.; Torresani, L. Is space-time attention all you need for video understanding? In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; Volume 2, p. 4.
- Xu, X.; Meng, Q.; Qin, Y.; Guo, J.; Zhao, C.; Zhou, F.; Lei, Z. Searching for alignment in face recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 3065–3073.
- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning (PMLR), Lille, France, 6–11 July 2015; pp. 448–456.
- Yang, Z.; Li, Y.; Yang, J.; Luo, J. Action recognition with spatio–temporal visual attention on skeleton image sequences. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2405–2415.
- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 18661–18673.
- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual, 13–18 July 2020; pp. 1597–1607.
- Smith, L.N. No More Pesky Learning Rate Guessing Games. arXiv 2015, arXiv:1506.01186.
- Wang, J.; Jiao, J.; Liu, Y.H. Self-supervised video representation learning by pace prediction. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2020; pp. 504–521.
- Xu, C.; Makihara, Y.; Ogi, G.; Li, X.; Yagi, Y.; Lu, J. The OU-ISIR Gait Database Comprising the Large Population Dataset with Age and Performance Evaluation of Age Estimation. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–14.
- Zhang, T.; Wu, F.; Katiyar, A.; Weinberger, K.Q.; Artzi, Y. Revisiting Few-sample BERT Fine-tuning. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.
- van der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Resize Type / Architecture | ViT | CrossFormer | TwinsSVT | Token2Token | CaiT |
|---|---|---|---|---|---|
| Linear | 75.80 | 68.40 | 66.86 | 77.71 | 49.63 |
| Deconv | 73.90 | 65.24 | 70.96 | 71.26 | 55.05 |
| Upsample Bilinear | 80.49 | 78.66 | 67.66 | 79.32 | 55.20 |
| Upsample Bicubic | 80.57 | 81.01 | 62.60 | 78.73 | 50.36 |

| Dataset | # IDs | Sequences | Views | Environment | Note |
|---|---|---|---|---|---|
| FVG [29] | 226 | 2857 | 1 | Outdoor | Controlled |
| CASIA-B [6] | 124 | 13,640 | 11 | Indoor | Controlled |
| OU-ISIR [49] | 10,307 | 144,298 | 14 | Indoor | Controlled |
| GREW [7] | 26,000 | 128,000 | - | Outdoor | Realistic |
| UWG [10] | 38,502 * | 38,502 | - | Outdoor | Realistic |
| DenseGait [12] | 217,954 * | 217,954 | - | Outdoor | Realistic |

| Condition | Method | Kind | Pretraining Dataset | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NM | SimpleViT | Rand. Init. | − | 73.39 | 82.26 | 81.45 | 76.61 | 55.65 | 51.61 | 62.10 | 70.97 | 72.58 | 66.94 | 59.68 | 68.48 |
| NM | SimpleViT | ZS | DenseGait | 69.35 | 62.90 | 69.35 | 71.77 | 48.39 | 49.19 | 50.00 | 68.55 | 71.77 | 72.58 | 58.06 | 62.90 |
| NM | SimpleViT | ZS | GREW | 59.68 | 76.61 | 70.16 | 69.35 | 38.71 | 40.32 | 47.58 | 64.52 | 63.71 | 60.48 | 54.03 | 58.65 |
| NM | SimpleViT | FT | DenseGait | 82.26 | 91.94 | 97.58 | 95.16 | 82.26 | 86.29 | 89.52 | 90.32 | 88.71 | 91.13 | 82.26 | 88.86 |
| NM | SimpleViT | FT | GREW | 88.71 | 91.13 | 91.94 | 92.74 | 79.03 | 72.58 | 82.26 | 83.87 | 87.10 | 92.74 | 74.19 | 85.12 |
| NM | CaiT | Rand. Init. | − | 65.32 | 70.97 | 58.87 | 51.61 | 39.52 | 49.19 | 58.06 | 68.55 | 55.65 | 42.74 | 43.55 | 54.91 |
| NM | CaiT | ZS | DenseGait | 76.61 | 67.74 | 70.16 | 60.48 | 48.39 | 61.29 | 65.32 | 64.52 | 75.00 | 75.00 | 61.29 | 65.98 |
| NM | CaiT | ZS | GREW | 70.97 | 66.13 | 67.74 | 62.10 | 39.52 | 37.90 | 37.10 | 50.81 | 54.03 | 65.32 | 54.84 | 55.13 |
| NM | CaiT | FT | DenseGait | 87.90 | 91.94 | 97.58 | 91.94 | 87.10 | 82.26 | 90.32 | 93.55 | 90.32 | 87.10 | 78.23 | 88.93 |
| NM | CaiT | FT | GREW | 85.48 | 91.94 | 92.74 | 93.55 | 76.61 | 81.45 | 88.71 | 85.48 | 82.26 | 86.29 | 84.68 | 86.29 |
| NM | Token2Token | Rand. Init. | − | 50.00 | 58.87 | 58.87 | 58.06 | 51.61 | 53.23 | 58.06 | 54.03 | 61.29 | 54.03 | 31.45 | 53.59 |
| NM | Token2Token | ZS | DenseGait | 61.29 | 79.84 | 73.39 | 74.19 | 54.84 | 43.55 | 54.84 | 74.19 | 71.77 | 83.87 | 79.84 | 68.33 |
| NM | Token2Token | ZS | GREW | 54.03 | 69.35 | 75.00 | 66.94 | 26.61 | 22.58 | 33.06 | 60.48 | 57.26 | 48.39 | 49.19 | 51.17 |
| NM | Token2Token | FT | DenseGait | 80.65 | 90.32 | 93.55 | 93.55 | 90.32 | 85.48 | 91.13 | 92.74 | 89.52 | 90.32 | 76.61 | 88.56 |
| NM | Token2Token | FT | GREW | 70.16 | 83.87 | 91.13 | 90.32 | 78.23 | 76.61 | 82.26 | 83.87 | 80.65 | 80.65 | 67.74 | 80.50 |
| NM | Twins-SVT | Rand. Init. | − | 33.87 | 41.94 | 33.06 | 34.68 | 21.77 | 30.65 | 26.61 | 29.84 | 30.65 | 21.77 | 20.97 | 29.62 |
| NM | Twins-SVT | ZS | DenseGait | 66.94 | 56.45 | 71.77 | 64.52 | 45.16 | 42.74 | 50.81 | 70.16 | 72.58 | 66.94 | 68.55 | 61.51 |
| NM | Twins-SVT | ZS | GREW | 50.81 | 70.97 | 66.13 | 60.48 | 36.29 | 33.87 | 29.03 | 54.03 | 59.68 | 51.61 | 47.58 | 50.95 |
| NM | Twins-SVT | FT | DenseGait | 71.77 | 85.48 | 87.10 | 87.90 | 74.19 | 83.87 | 79.03 | 89.52 | 88.71 | 88.71 | 70.97 | 82.48 |
| NM | Twins-SVT | FT | GREW | 74.19 | 88.71 | 95.16 | 91.94 | 86.29 | 82.26 | 88.71 | 87.10 | 90.32 | 92.74 | 72.58 | 86.36 |
| NM | CrossFormer | Rand. Init. | − | 65.32 | 67.74 | 78.23 | 78.23 | 65.32 | 70.97 | 71.77 | 70.16 | 68.55 | 56.45 | 49.19 | 67.45 |
| NM | CrossFormer | ZS | DenseGait | 87.10 | 91.94 | 95.97 | 91.94 | 70.16 | 60.48 | 72.58 | 86.29 | 89.52 | 83.87 | 75.81 | 82.33 |
| NM | CrossFormer | ZS | GREW | 54.84 | 63.71 | 62.90 | 61.29 | 54.03 | 40.32 | 25.00 | 41.94 | 37.90 | 42.74 | 39.52 | 47.65 |
| NM | CrossFormer | FT | DenseGait | 75.00 | 94.35 | 95.97 | 93.55 | 85.48 | 87.90 | 91.13 | 92.74 | 95.97 | 92.74 | 68.55 | 88.49 |
| NM | CrossFormer | FT | GREW | 75.81 | 88.71 | 95.16 | 91.13 | 92.74 | 85.48 | 88.71 | 88.71 | 89.52 | 86.29 | 69.35 | 86.51 |
| CL | SimpleViT | Rand. Init. | − | 20.97 | 23.39 | 19.35 | 15.32 | 13.71 | 12.90 | 16.94 | 18.55 | 19.35 | 27.42 | 16.13 | 18.55 |
| CL | SimpleViT | ZS | DenseGait | 12.90 | 29.03 | 23.39 | 16.13 | 10.48 | 11.29 | 13.71 | 17.74 | 22.58 | 15.32 | 18.55 | 17.37 |
| CL | SimpleViT | ZS | GREW | 7.26 | 20.97 | 12.10 | 8.06 | 8.06 | 6.45 | 11.29 | 18.55 | 13.71 | 12.90 | 6.45 | 11.44 |
| CL | SimpleViT | FT | DenseGait | 31.45 | 43.55 | 40.32 | 33.06 | 27.42 | 20.16 | 27.42 | 30.65 | 29.84 | 22.58 | 32.26 | 30.79 |
| CL | SimpleViT | FT | GREW | 34.68 | 33.87 | 26.61 | 20.16 | 16.94 | 17.74 | 25.81 | 27.42 | 20.97 | 31.45 | 25.00 | 25.51 |
| CL | CaiT | Rand. Init. | − | 12.90 | 20.16 | 11.29 | 10.48 | 10.48 | 7.26 | 12.10 | 16.13 | 9.68 | 17.74 | 12.10 | 12.76 |
| CL | CaiT | ZS | DenseGait | 6.45 | 19.35 | 11.29 | 11.29 | 4.84 | 11.29 | 20.16 | 12.10 | 15.32 | 12.10 | 10.48 | 12.24 |
| CL | CaiT | ZS | GREW | 8.06 | 20.97 | 8.87 | 13.71 | 6.45 | 9.68 | 10.48 | 14.52 | 9.68 | 12.10 | 7.26 | 11.07 |
| CL | CaiT | FT | DenseGait | 25.00 | 30.65 | 29.84 | 21.77 | 20.16 | 20.97 | 24.19 | 24.19 | 22.58 | 25.00 | 25.00 | 24.49 |
| CL | CaiT | FT | GREW | 18.55 | 16.94 | 21.77 | 16.94 | 13.71 | 18.55 | 25.00 | 20.16 | 19.35 | 26.61 | 14.52 | 19.28 |
| CL | Token2Token | Rand. Init. | − | 8.87 | 17.74 | 17.74 | 12.90 | 9.68 | 15.32 | 16.13 | 19.35 | 16.13 | 11.29 | 8.87 | 14.00 |
| CL | Token2Token | ZS | DenseGait | 8.87 | 25.81 | 15.32 | 16.13 | 4.03 | 11.29 | 14.52 | 18.55 | 20.16 | 15.32 | 16.13 | 15.10 |
| CL | Token2Token | ZS | GREW | 9.68 | 20.16 | 20.97 | 19.35 | 7.26 | 4.84 | 8.87 | 9.68 | 8.87 | 8.06 | 8.06 | 11.44 |
| CL | Token2Token | FT | DenseGait | 21.77 | 33.06 | 31.45 | 29.03 | 29.03 | 36.29 | 30.65 | 31.45 | 30.65 | 35.48 | 31.45 | 30.94 |
| CL | Token2Token | FT | GREW | 16.13 | 16.13 | 22.58 | 20.16 | 18.55 | 20.97 | 24.19 | 21.77 | 17.74 | 19.35 | 10.48 | 18.91 |
| CL | Twins-SVT | Rand. Init. | − | 7.26 | 4.03 | 6.45 | 7.26 | 4.84 | 8.06 | 9.68 | 6.45 | 6.45 | 6.45 | 5.65 | 6.60 |
| CL | Twins-SVT | ZS | DenseGait | 8.87 | 25.81 | 15.32 | 15.32 | 11.29 | 8.87 | 13.71 | 15.32 | 15.32 | 11.29 | 14.52 | 14.15 |
| CL | Twins-SVT | ZS | GREW | 9.68 | 24.19 | 8.06 | 10.48 | 12.10 | 8.87 | 8.87 | 16.13 | 11.29 | 11.29 | 7.26 | 11.66 |
| CL | Twins-SVT | FT | DenseGait | 23.39 | 33.06 | 33.87 | 27.42 | 21.77 | 25.00 | 29.03 | 34.68 | 29.03 | 27.42 | 17.74 | 27.49 |
| CL | Twins-SVT | FT | GREW | 20.16 | 26.61 | 24.19 | 29.84 | 21.77 | 21.77 | 29.03 | 29.84 | 20.16 | 22.58 | 16.94 | 23.90 |
| CL | CrossFormer | Rand. Init. | − | 9.68 | 17.74 | 18.55 | 18.55 | 18.55 | 18.55 | 17.74 | 21.77 | 12.10 | 11.29 | 12.10 | 16.06 |
| CL | CrossFormer | ZS | DenseGait | 16.94 | 29.84 | 23.39 | 20.16 | 11.29 | 8.06 | 16.94 | 20.97 | 15.32 | 19.35 | 18.55 | 18.26 |
| CL | CrossFormer | ZS | GREW | 16.94 | 14.52 | 13.71 | 11.29 | 7.26 | 8.87 | 11.29 | 11.29 | 11.29 | 11.29 | 8.87 | 11.51 |
| CL | CrossFormer | FT | DenseGait | 25.00 | 42.74 | 39.52 | 40.32 | 29.84 | 25.00 | 32.26 | 37.90 | 33.06 | 26.61 | 20.97 | 32.11 |
| CL | CrossFormer | FT | GREW | 20.97 | 29.03 | 39.52 | 23.39 | 23.39 | 22.58 | 25.81 | 31.45 | 24.19 | 26.61 | 15.32 | 25.66 |
| BG | SimpleViT | Rand. Init. | − | 46.77 | 55.65 | 50.00 | 44.35 | 31.45 | 40.32 | 30.65 | 35.48 | 37.90 | 36.29 | 26.61 | 39.59 |
| BG | SimpleViT | ZS | DenseGait | 54.03 | 53.23 | 55.65 | 45.16 | 37.90 | 25.81 | 37.10 | 45.16 | 44.35 | 44.35 | 40.32 | 43.91 |
| BG | SimpleViT | ZS | GREW | 49.19 | 50.81 | 50.81 | 46.77 | 41.13 | 25.81 | 27.42 | 43.55 | 36.29 | 29.84 | 29.03 | 39.15 |
| BG | SimpleViT | FT | DenseGait | 58.87 | 73.39 | 78.23 | 69.35 | 51.61 | 54.84 | 50.81 | 53.23 | 55.65 | 53.23 | 44.35 | 58.51 |
| BG | SimpleViT | FT | GREW | 61.29 | 68.55 | 70.97 | 61.29 | 47.58 | 44.35 | 49.19 | 42.74 | 49.19 | 53.23 | 38.71 | 53.37 |
| BG | CaiT | Rand. Init. | − | 46.77 | 43.55 | 45.97 | 34.68 | 20.97 | 28.23 | 33.06 | 32.26 | 35.48 | 29.03 | 25.00 | 34.09 |
| BG | CaiT | ZS | DenseGait | 60.48 | 55.65 | 58.06 | 41.13 | 35.48 | 28.23 | 37.10 | 47.58 | 49.19 | 50.81 | 42.74 | 46.04 |
| BG | CaiT | ZS | GREW | 55.65 | 45.16 | 55.65 | 37.90 | 35.48 | 20.97 | 20.16 | 31.45 | 40.32 | 29.03 | 32.26 | 36.73 |
| BG | CaiT | FT | DenseGait | 74.19 | 71.77 | 75.81 | 58.87 | 49.19 | 54.03 | 52.42 | 55.65 | 54.03 | 55.65 | 45.97 | 58.87 |
| BG | CaiT | FT | GREW | 62.10 | 66.94 | 73.39 | 58.87 | 53.23 | 48.39 | 51.61 | 42.74 | 49.19 | 50.81 | 38.71 | 54.18 |
| BG | Token2Token | Rand. Init. | − | 28.23 | 33.06 | 37.90 | 30.65 | 32.26 | 22.58 | 29.03 | 24.19 | 28.23 | 24.19 | 20.16 | 28.23 |
| BG | Token2Token | ZS | DenseGait | 45.97 | 61.29 | 66.13 | 52.42 | 35.48 | 25.00 | 35.48 | 47.58 | 53.23 | 55.65 | 53.23 | 48.31 |
| BG | Token2Token | ZS | GREW | 42.74 | 46.77 | 49.19 | 41.94 | 12.10 | 15.32 | 17.74 | 34.68 | 37.90 | 29.84 | 27.42 | 32.33 |
| BG | Token2Token | FT | DenseGait | 66.13 | 70.16 | 70.16 | 61.29 | 57.26 | 52.42 | 55.65 | 56.45 | 46.77 | 51.61 | 44.35 | 57.48 |
| BG | Token2Token | FT | GREW | 50.81 | 55.65 | 59.68 | 50.81 | 41.13 | 37.90 | 47.58 | 50.00 | 48.39 | 53.23 | 34.68 | 48.17 |
| BG | Twins-SVT | Rand. Init. | − | 20.16 | 26.61 | 24.19 | 18.55 | 12.90 | 12.10 | 10.48 | 12.10 | 9.68 | 12.90 | 12.90 | 15.69 |
| BG | Twins-SVT | ZS | DenseGait | 45.97 | 50.00 | 49.19 | 38.71 | 25.00 | 31.45 | 33.87 | 38.71 | 50.81 | 47.58 | 48.39 | 41.79 |
| BG | Twins-SVT | ZS | GREW | 37.90 | 45.16 | 39.52 | 30.65 | 25.81 | 13.71 | 18.55 | 24.19 | 28.23 | 33.87 | 27.42 | 29.55 |
| BG | Twins-SVT | FT | DenseGait | 45.97 | 56.45 | 61.29 | 50.00 | 43.55 | 37.10 | 53.23 | 52.42 | 50.81 | 58.87 | 42.74 | 50.22 |
| BG | Twins-SVT | FT | GREW | 59.68 | 58.06 | 64.52 | 54.84 | 46.77 | 49.19 | 50.81 | 52.42 | 43.55 | 56.45 | 37.90 | 52.20 |
| BG | CrossFormer | Rand. Init. | − | 40.32 | 42.74 | 41.94 | 45.16 | 34.68 | 34.68 | 38.71 | 40.32 | 34.68 | 42.74 | 31.45 | 38.86 |
| BG | CrossFormer | ZS | DenseGait | 75.00 | 70.16 | 70.97 | 62.10 | 44.35 | 39.52 | 39.52 | 51.61 | 56.45 | 45.16 | 50.00 | 54.99 |
| BG | CrossFormer | ZS | GREW | 41.94 | 41.13 | 40.32 | 28.23 | 33.06 | 17.74 | 22.58 | 26.61 | 26.61 | 25.81 | 20.16 | 29.47 |
| BG | CrossFormer | FT | DenseGait | 59.68 | 70.16 | 70.16 | 66.13 | 56.45 | 54.84 | 49.19 | 56.45 | 59.68 | 62.90 | 48.39 | 59.46 |
| BG | CrossFormer | FT | GREW | 58.06 | 62.90 | 63.71 | 50.81 | 51.61 | 48.39 | 46.77 | 41.13 | 50.81 | 52.42 | 40.32 | 51.54 |
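
The Mean column in the table above appears to be the plain arithmetic mean of the eleven per-angle values in each row. As a minimal check, assuming the values are percentages and that the columns span 0 to 180 in steps of 18, the sketch below recomputes the mean for the first NM row (SimpleViT, random initialization). The variable names are illustrative and are not taken from the authors' code.

```python
from statistics import mean

# Column angles of the CASIA-B table: 0, 18, ..., 180 (eleven values).
angles = list(range(0, 181, 18))

# Per-angle values copied from the first NM row
# (SimpleViT, Rand. Init., no pretraining dataset).
per_angle = [73.39, 82.26, 81.45, 76.61, 55.65, 51.61,
             62.10, 70.97, 72.58, 66.94, 59.68]

# One value per angle is expected.
assert len(per_angle) == len(angles)

# Arithmetic mean over all angles, rounded to two decimals as in the table.
row_mean = round(mean(per_angle), 2)
print(row_mean)  # 68.48, matching the Mean entry of that row
```

The same computation can be applied to any other row to cross-check its reported mean.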

FVG

| Architecture | Kind | Pretraining Dataset | WS | CB | CL | CBG | ALL | Mean |
|---|---|---|---|---|---|---|---|---|
| SimpleViT | Rand. Init. | − | 69.33 | 81.82 | 36.32 | 70.51 | 69.33 | 65.46 |
| SimpleViT | ZS | DenseGait | 71.00 | 57.58 | 43.16 | 70.09 | 71.00 | 62.57 |
| SimpleViT | ZS | GREW | 68.67 | 69.70 | 27.78 | 65.81 | 68.67 | 60.13 |
| SimpleViT | FT | DenseGait | 88.33 | 90.91 | 49.57 | 87.18 | 88.33 | 80.86 |
| SimpleViT | FT | GREW | 87.67 | 90.91 | 44.44 | 91.45 | 87.67 | 80.43 |
| CaiT | Rand. Init. | − | 57.67 | 72.73 | 25.21 | 65.81 | 57.67 | 55.82 |
| CaiT | ZS | DenseGait | 75.00 | 72.73 | 43.16 | 80.77 | 75.00 | 69.33 |
| CaiT | ZS | GREW | 66.33 | 54.55 | 32.48 | 68.80 | 66.33 | 57.70 |
| CaiT | FT | DenseGait | 86.00 | 90.91 | 45.73 | 86.32 | 86.00 | 78.99 |
| CaiT | FT | GREW | 82.00 | 78.79 | 45.30 | 82.05 | 82.00 | 74.03 |
| Token2Token | Rand. Init. | − | 66.33 | 81.82 | 32.48 | 72.65 | 66.33 | 63.92 |
| Token2Token | ZS | DenseGait | 73.33 | 63.64 | 43.16 | 81.20 | 73.33 | 66.93 |
| Token2Token | ZS | GREW | 53.00 | 57.58 | 24.79 | 58.55 | 53.00 | 49.38 |
| Token2Token | FT | DenseGait | 87.00 | 93.94 | 49.15 | 88.46 | 87.00 | 81.11 |
| Token2Token | FT | GREW | 82.33 | 78.79 | 34.62 | 84.62 | 82.33 | 72.54 |
| Twins-SVT | Rand. Init. | − | 30.67 | 48.48 | 16.67 | 32.05 | 30.67 | 31.71 |
| Twins-SVT | ZS | DenseGait | 70.33 | 60.61 | 42.74 | 78.21 | 70.33 | 64.44 |
| Twins-SVT | ZS | GREW | 61.00 | 66.67 | 25.21 | 53.85 | 61.00 | 53.55 |
| Twins-SVT | FT | DenseGait | 87.67 | 90.91 | 43.59 | 84.62 | 87.67 | 78.89 |
| Twins-SVT | FT | GREW | 85.33 | 69.70 | 33.76 | 84.62 | 85.33 | 71.75 |
| CrossFormer | Rand. Init. | − | 74.00 | 87.88 | 41.45 | 78.63 | 74.00 | 71.19 |
| CrossFormer | ZS | DenseGait | 77.67 | 72.73 | 45.30 | 79.49 | 77.67 | 70.57 |
| CrossFormer | ZS | GREW | 54.33 | 48.48 | 17.09 | 50.85 | 54.33 | 45.02 |
| CrossFormer | FT | DenseGait | 89.33 | 93.94 | 50.85 | 92.74 | 89.33 | 83.24 |
| CrossFormer | FT | GREW | 87.00 | 90.91 | 47.44 | 85.90 | 87.00 | 79.65 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cosma, A.; Catruna, A.; Radoi, E. Exploring Self-Supervised Vision Transformers for Gait Recognition in the Wild. Sensors 2023, 23, 2680. https://doi.org/10.3390/s23052680
