Double-Center-Based Iris Localization and Segmentation in Cooperative Environment with Visible Illumination

Abstract

1. Introduction

- (1) We propose to localize the iris's inner and outer circles directly on the feature maps, predicting each circle as a center point plus a regressed radius. This addresses the inaccurate and non-robust iris localization seen on visible-light iris images. Whereas existing methods apply heavy post-processing to the predicted mask to obtain the circle parameters, our approach is post-processing-free: the output of the localization module is the final inner-/outer-circle localization result;

- (2) By explicitly analyzing the distribution of the pupil and iris center points, we propose a novel auxiliary sampling strategy that accelerates model training on non-standard iris images, i.e., images that contain irrelevant regions such as the chin, the nose, and the background;

- (3) We design a dedicated end-to-end iris localization and segmentation framework that is simple and effective and achieves excellent performance on multiple benchmarks. The proposed method provides a good foundation for iris localization and segmentation in non-cooperative environments.
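As a rough illustration of the post-processing-free idea in contribution (1), the sketch below reads a circle directly from a center-point heatmap and a radius map: the heatmap peak gives the center, and the radius-map value at that cell gives the radius. The function name `decode_circle`, the `stride` parameter, and the toy maps are assumptions made for illustration and are not taken from the paper; the actual network heads and decoding may differ.

```python
from typing import List, Tuple

Grid = List[List[float]]


def decode_circle(heatmap: Grid, radius_map: Grid, stride: int = 4) -> Tuple[float, float, float]:
    """Return (cx, cy, r) in input-image pixels from one center heatmap.

    Assumption: the heatmap and the radius map share one resolution that is
    `stride` times smaller than the input image, and the radius map stores
    radii in feature-map units.
    """
    best_score = float("-inf")
    best_row, best_col = 0, 0
    # Scan for the highest-scoring cell; it is taken as the circle center.
    for row, scores in enumerate(heatmap):
        for col, score in enumerate(scores):
            if score > best_score:
                best_score, best_row, best_col = score, row, col
    # Read the regressed radius at the center cell and rescale to pixels.
    radius = radius_map[best_row][best_col]
    return float(best_col * stride), float(best_row * stride), float(radius * stride)


# Toy maps (hypothetical values): one heatmap/radius pair per circle.
pupil_heat = [[0.0, 0.1, 0.2, 0.0],
              [0.1, 0.3, 0.9, 0.2],
              [0.0, 0.1, 0.2, 0.0]]
pupil_radius = [[0.0] * 4, [0.0, 0.0, 2.5, 0.0], [0.0] * 4]

iris_heat = [[0.0, 0.2, 0.1, 0.0],
             [0.1, 0.8, 0.3, 0.0],
             [0.0, 0.2, 0.1, 0.0]]
iris_radius = [[0.0] * 4, [0.0, 6.0, 0.0, 0.0], [0.0] * 4]

inner = decode_circle(pupil_heat, pupil_radius)  # inner (pupil) circle
outer = decode_circle(iris_heat, iris_radius)    # outer (iris) circle
```

Using one heatmap per circle keeps the two centers independent, so the inner and outer circles need not be concentric; no mask fitting or contour search is involved in this decoding step.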

2. Related Research

3. Methods

3.1. Localization Module

3.1.1. Double-Center Localization

3.1.2. Radius Regression

3.2. Segmentation Module

3.3. Total Loss

3.4. Backbone

3.5. Concentric Sampling Strategy

3.6. Double Center to Circular Boxes

4. Experiments

4.1. Experimental Environment

4.2. Data Set and Evaluation Protocols

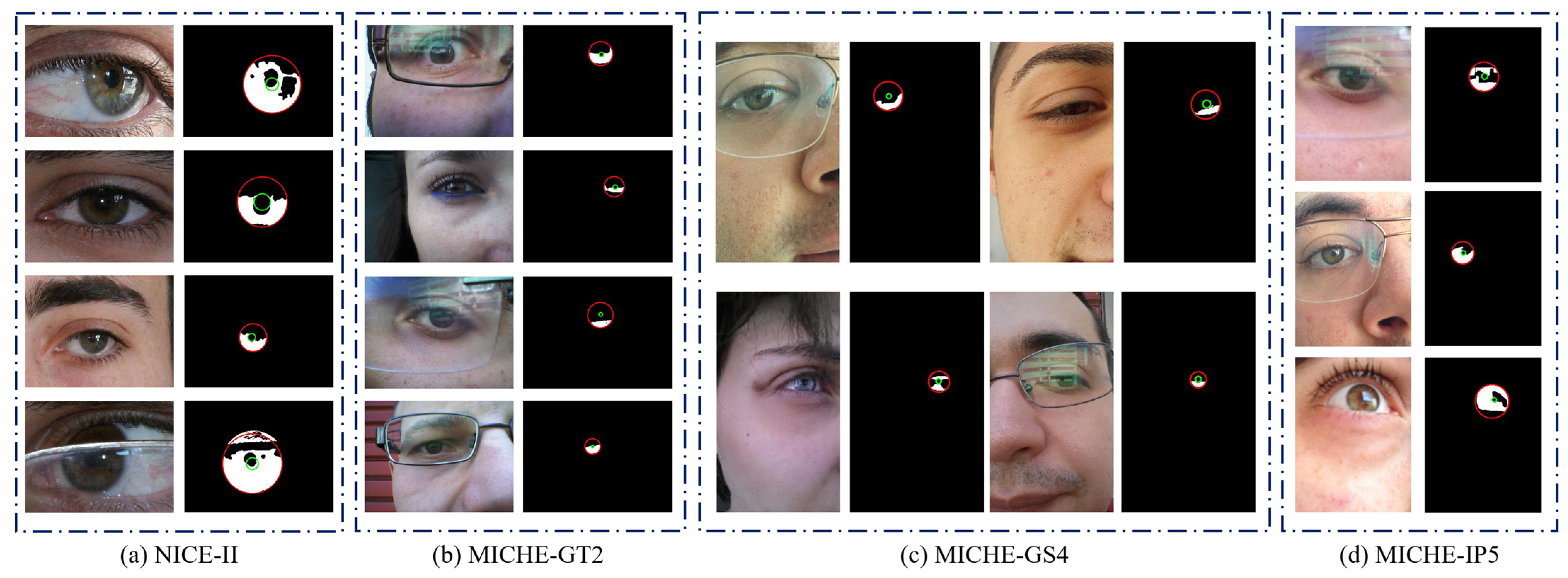

4.2.1. Data Set

4.2.2. Evaluation Protocols

- (1) Localization. We compute the IoU of the inner- and outer-circle boxes, which lies in [0, 1]; the closer the value is to 1, the better the localization. We also compute the Hausdorff distance, as in [7], with the point coordinates normalized so that the normalized Hausdorff distance lies in [0, 1]; the smaller the value, the higher the shape similarity;

- (2) Segmentation. We use two mask-error metrics, computed on the iris mask and on the normalized iris mask, respectively, following [7]. Both lie in [0, 1]; the smaller the value, the better the result. In addition, we use a further metric to evaluate the segmentation performance; it also lies in [0, 1], and the larger the value, the better the segmentation result.
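The two localization measures can be sketched as follows. This is a minimal illustration under stated assumptions, not the evaluation code used in the paper: the circle box is assumed to be the axis-aligned square enclosing a circle, the circle boundary is sampled at 360 points, and the Hausdorff distance is normalized by the image diagonal so that it stays within [0, 1] for circles inside the image. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Circle = Tuple[float, float, float]  # (cx, cy, r) in pixels
Point = Tuple[float, float]


def circle_box_iou(a: Circle, b: Circle) -> float:
    """IoU of the axis-aligned squares that enclose two circles."""
    ax0, ay0, ax1, ay1 = a[0] - a[2], a[1] - a[2], a[0] + a[2], a[1] + a[2]
    bx0, by0, bx1, by1 = b[0] - b[2], b[1] - b[2], b[0] + b[2], b[1] + b[2]
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def circle_points(c: Circle, n: int = 360) -> List[Point]:
    """Sample n evenly spaced points on the circle boundary."""
    return [(c[0] + c[2] * math.cos(2 * math.pi * k / n),
             c[1] + c[2] * math.sin(2 * math.pi * k / n)) for k in range(n)]


def normalized_hausdorff(a: Circle, b: Circle, width: int, height: int) -> float:
    """Symmetric Hausdorff distance between two circles, divided by the
    image diagonal (an assumed normalization)."""
    pa, pb = circle_points(a), circle_points(b)

    def directed(src: List[Point], dst: List[Point]) -> float:
        # Largest distance from a point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(pa, pb), directed(pb, pa)) / math.hypot(width, height)


predicted = (50.0, 50.0, 10.0)
ground_truth = (50.0, 50.0, 12.0)
iou = circle_box_iou(predicted, ground_truth)
hd = normalized_hausdorff(predicted, ground_truth, width=300, height=400)
```

For the concentric toy circles above, the enclosing squares have sides 20 and 24, so the IoU is 400/576, and the boundaries are 2 pixels apart, which gives 2/500 after dividing by the diagonal of a 300 × 400 image.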

4.3. Ablation Study

4.4. Compared with Other Methods and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Boles, W.W.; Boashash, B. A human identification technique using images of the iris and wavelet transform. IEEE Trans. Signal Process. 1998, 46, 1185–1188.

- Jain, A.K.; Chen, H. Matching of dental X-ray images for human identification. Pattern Recognit. 2004, 37, 1519–1532.

- Ma, L.; Tan, T.; Wang, Y.; Zhang, D. Efficient Iris Recognition by Characterizing Key Local Variations. IEEE Trans. Image Process. 2004, 13, 739–750.

- Daugman, J. New Methods in Iris Recognition. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2007, 37, 1167–1175.

- Daugman, J. How iris recognition works. In The Essential Guide to Image Processing; Elsevier: Amsterdam, The Netherlands, 2009; pp. 715–739.

- Wildes, R.P. Iris recognition: An emerging biometric technology. Proc. IEEE 1997, 85, 1348–1363.

- Wang, C.; Muhammad, J.; Wang, Y.; He, Z.; Sun, Z. Towards Complete and Accurate Iris Segmentation Using Deep Multi-Task Attention Network for Non-Cooperative Iris Recognition. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2944–2959.

- Wang, C.; Wang, Y.; Zhang, K.; Muhammad, J.; Lu, T.; Zhang, Q.; Tian, Q.; He, Z.; Sun, Z.; Zhang, Y.; et al. NIR Iris Challenge Evaluation in Non-cooperative Environments: Segmentation and Localization. In Proceedings of the 2021 IEEE International Joint Conference on Biometrics (IJCB), Shenzhen, China, 4–7 August 2021.

- Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. Image understanding for iris biometrics: A survey. Comput. Vis. Image Underst. 2008, 110, 281–307.

- Jan, F.; Usman, I.; Agha, S. Iris localization in frontal eye images for less constrained iris recognition systems. Digit. Signal Process. 2012, 22, 971–986.

- Zhao, Z.; Kumar, A. An Accurate Iris Segmentation Framework under Relaxed Imaging Constraints Using Total Variation Model. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3828–3836.

- Proença, H.; Alexandre, L.A. Iris segmentation methodology for non-cooperative recognition. IEE Proc. Vis. Image Signal Process. 2006, 153, 199.

- Tan, T.; He, Z.; Sun, Z. Efficient and robust segmentation of noisy iris images for non-cooperative iris recognition. Image Vis. Comput. 2010, 28, 223–230.

- Sutra, G.; Garcia-Salicetti, S.; Dorizzi, B. The Viterbi algorithm at different resolutions for enhanced iris segmentation. In Proceedings of the 2012 5th IAPR International Conference on Biometrics (ICB), New Delhi, India, 29 March–1 April 2012; pp. 310–316.

- Liu, N.; Li, H.; Zhang, M.; Liu, J.; Sun, Z.; Tan, T. Accurate iris segmentation in non-cooperative environments using fully convolutional networks. In Proceedings of the 2016 International Conference on Biometrics (ICB), Halmstad, Sweden, 13–16 June 2016; pp. 1–8.

- Othman, N.; Dorizzi, B.; Garcia-Salicetti, S. OSIRIS: An open source iris recognition software. Pattern Recognit. Lett. 2016, 82, 124–131.

- Jalilian, E.; Uhl, A. Iris segmentation using fully convolutional encoder–decoder networks. In Deep Learning for Biometrics; Springer: Berlin/Heidelberg, Germany, 2017; pp. 133–155.

- Bazrafkan, S.; Thavalengal, S.; Corcoran, P. An end to end Deep Neural Network for iris segmentation in unconstrained scenarios. Neural Netw. 2018, 106, 79–95.

- Arsalan, M.; Naqvi, R.A.; Kim, D.S.; Nguyen, P.H.; Owais, M.; Park, K.R. IrisDenseNet: Robust Iris Segmentation Using Densely Connected Fully Convolutional Networks in the Images by Visible Light and Near-Infrared Light Camera Sensors. Sensors 2018, 18, 1501.

- Arsalan, M.; Kim, D.S.; Lee, M.B.; Owais, M.; Park, K.R. FRED-Net: Fully residual encoder–decoder network for accurate iris segmentation. Expert Syst. Appl. 2019, 122, 217–241.

- Hofbauer, H.; Jalilian, E.; Uhl, A. Exploiting superior CNN-based iris segmentation for better recognition accuracy. Pattern Recognit. Lett. 2019, 120, 17–23.

- Zhao, Z.; Kumar, A. A deep learning based unified framework to detect, segment and recognize irises using spatially corresponding features. Pattern Recognit. 2019, 93, 546–557.

- Kerrigan, D.; Trokielewicz, M.; Czajka, A.; Bowyer, K.W. Iris recognition with image segmentation employing retrained off-the-shelf deep neural networks. In Proceedings of the 2019 International Conference on Biometrics (ICB), Crete, Greece, 4–7 June 2019; pp. 1–7.

- Hassaballah, M.; Salem, E.; Ali AM, M.; Mahmoud, M.M. Deep Recurrent Regression with a Heatmap Coupling Module for Facial Landmarks Detection. Cogn. Comput. 2022, 1–15.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6568–6577.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–27 October 2017; pp. 2980–2988.

- Liu, Z.; Zheng, T.; Xu, G.; Yang, Z.; Liu, H.; Cai, D. Training-time-friendly network for real-time object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 7 February 2020; pp. 11685–11692.

- Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep layer aggregation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2403–2412.

- Ma, Y.; Liu, S.; Li, Z.; Sun, J. Iqdet: Instance-wise quality distribution sampling for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1717–1725.

- Proenca, H.; Filipe, S.; Santos, R.; Oliveira, J.; Alexandre, L.A. The UBIRIS.v2: A Database of Visible Wavelength Iris Images Captured On-the-Move and At-a-Distance. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1529–1535.

- De Marsico, M.; Galdi, C.; Nappi, M.; Riccio, D. FIRME: Face and Iris Recognition for Mobile Engagement. Image Vis. Comput. 2014, 32, 1161–1172.

| Data Sets | None | DC-CGR | | | |
|---|---|---|---|---|---|
| NICEII | √ | | 73.99% | 94.06% | −4.11% |
| | | √ | 69.40% | 90.43% | |
| MICHE-GS | √ | | 59.01% | 80.66% | 5.29% |
| | | √ | 64.06% | 86.20% | |
| MICHE-IP | √ | | 58.01% | 82.65% | 4.87% |
| | | √ | 63.07% | 87.34% | |
| MICHE-GT2 | √ | | 54.30% | 79.65% | 4.79% |
| | | √ | 57.47% | 86.06% | |
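The last column of the table above appears to be the mean of the per-column changes between the two rows reported for each data set. This reading is an observation from the reported numbers, not a definition stated in the text; the short check below reproduces the arithmetic, and its variable names are illustrative.

```python
# Per data set: (first-row values, second-row values, reported last column),
# all in percent, copied from the table.
rows = {
    "NICEII": ((73.99, 94.06), (69.40, 90.43), -4.11),
    "MICHE-GS": ((59.01, 80.66), (64.06, 86.20), 5.29),
    "MICHE-IP": ((58.01, 82.65), (63.07, 87.34), 4.87),
    "MICHE-GT2": ((54.30, 79.65), (57.47, 86.06), 4.79),
}


def mean_change(first, second):
    """Average of the column-wise differences (second row minus first row)."""
    return sum(s - f for f, s in zip(first, second)) / len(first)


changes = {name: mean_change(first, second)
           for name, (first, second, _) in rows.items()}

# Largest gap between the recomputed mean change and the reported value.
max_gap = max(abs(changes[name] - reported)
              for name, (_, _, reported) in rows.items())
```

For example, for MICHE-GS the two differences are 5.05 and 5.54 percentage points, whose mean is 5.295, which agrees with the reported 5.29% to within 0.01 percentage points.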

| Methods | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| FCEDN | 0.5564 | 0.9083 | 0.7323 | 0.0298 | 0.0175 | 0.0108 | 0.7246 | 0.2323 |
| IrisDenseNet | 0.5761 | 0.9107 | 0.7434 | 0.0279 | 0.0174 | 0.0103 | 0.7320 | 0.2243 |
| FRED-Net | 0.6373 | 0.9034 | 0.7703 | 0.0238 | 0.0184 | 0.0122 | 0.7276 | 0.2112 |
| IrisParseNet | 0.7026 | 0.9019 | 0.8023 | 0.0151 | 0.0185 | 0.0096 | 0.8751 | 0.1284 |
| NIR-Zhang | 0.7308 | 0.9394 | 0.8351 | 0.0149 | 0.0134 | 0.0072 | 0.9013 | 0.0909 |
| ICSNet (ours) | 0.7399 | 0.9406 | 0.8402 | 0.0135 | 0.0102 | 0.0079 | 0.8915 | 0.0913 |

| Methods | Data Sets | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| FCEDN | GS | 0.2358 | 0.7743 | 0.0469 | 0.0268 | 0.0062 | 0.6067 | 0.3457 |
| | IP | 0.1735 | 0.7651 | 0.0518 | 0.0276 | 0.0046 | 0.6186 | 0.3152 |
| | GT2 | 0.0849 | 0.6488 | 0.1053 | 0.0687 | 0.0100 | 0.5240 | 0.3827 |
| IrisDenseNet | GS | 0.3858 | 0.7733 | 0.0328 | 0.0346 | 0.0055 | 0.6956 | 0.3018 |
| | IP | 0.2317 | 0.7668 | 0.0409 | 0.0409 | 0.0070 | 0.6498 | 0.3013 |
| | GT2 | 0.3998 | 0.7595 | 0.0434 | 0.0415 | 0.0043 | 0.7077 | 0.2882 |
| FRED-Net | GS | 0.5628 | 0.7710 | 0.0306 | 0.0314 | 0.0054 | 0.6388 | 0.2717 |
| | IP | 0.4014 | 0.7799 | 0.0360 | 0.0325 | 0.0052 | 0.6404 | 0.2440 |
| | GT2 | 0.3767 | 0.7532 | 0.0474 | 0.0442 | 0.0048 | 0.7148 | 0.2418 |
| IrisParseNet | GS | 0.5353 | 0.7640 | 0.0650 | 0.0743 | 0.0046 | 0.7362 | 0.2545 |
| | IP | 0.5290 | 0.7071 | 0.0740 | 0.0875 | 0.0052 | 0.6823 | 0.2753 |
| | GT2 | 0.5321 | 0.7771 | 0.0439 | 0.0519 | 0.0041 | 0.7504 | 0.1867 |
| NIR-Zhang | GS | 0.5327 | 0.8058 | 0.0408 | 0.0326 | 0.0051 | 0.6919 | 0.2605 |
| | IP | 0.5369 | 0.8436 | 0.0245 | 0.0224 | 0.0037 | 0.7429 | 0.2178 |
| | GT2 | 0.5930 | 0.8516 | 0.0265 | 0.0260 | 0.0053 | 0.7039 | 0.2118 |
| ICSNet (ours) | GS | 0.6406 | 0.8620 | 0.0132 | 0.0162 | 0.0050 | 0.7001 | 0.2465 |
| | IP | 0.6307 | 0.8734 | 0.0165 | 0.0186 | 0.0036 | 0.7431 | 0.2099 |
| | GT2 | 0.5747 | 0.8606 | 0.0381 | 0.0227 | 0.0061 | 0.6857 | 0.2319 |

| Methods | Parameters | GFLOPs |
|---|---|---|
| FCEDN | 115.18 M | 62.65 |
| IrisDenseNet | 142.95 M | 75.67 |
| IrisParseNet | 125.18 M | 87.03 |
| NIR-Zhang | 95.61 M | 50.12 |
| ICSNet (ours) | 69.53 M | 47.01 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Feng, X. Double-Center-Based Iris Localization and Segmentation in Cooperative Environment with Visible Illumination. Sensors 2023, 23, 2238. https://doi.org/10.3390/s23042238
