Fast and Accurate Gamma Imaging System Calibration Based on Deep Denoising Networks and Self-Adaptive Data Clustering

Abstract

1. Introduction

2. Materials and Methods

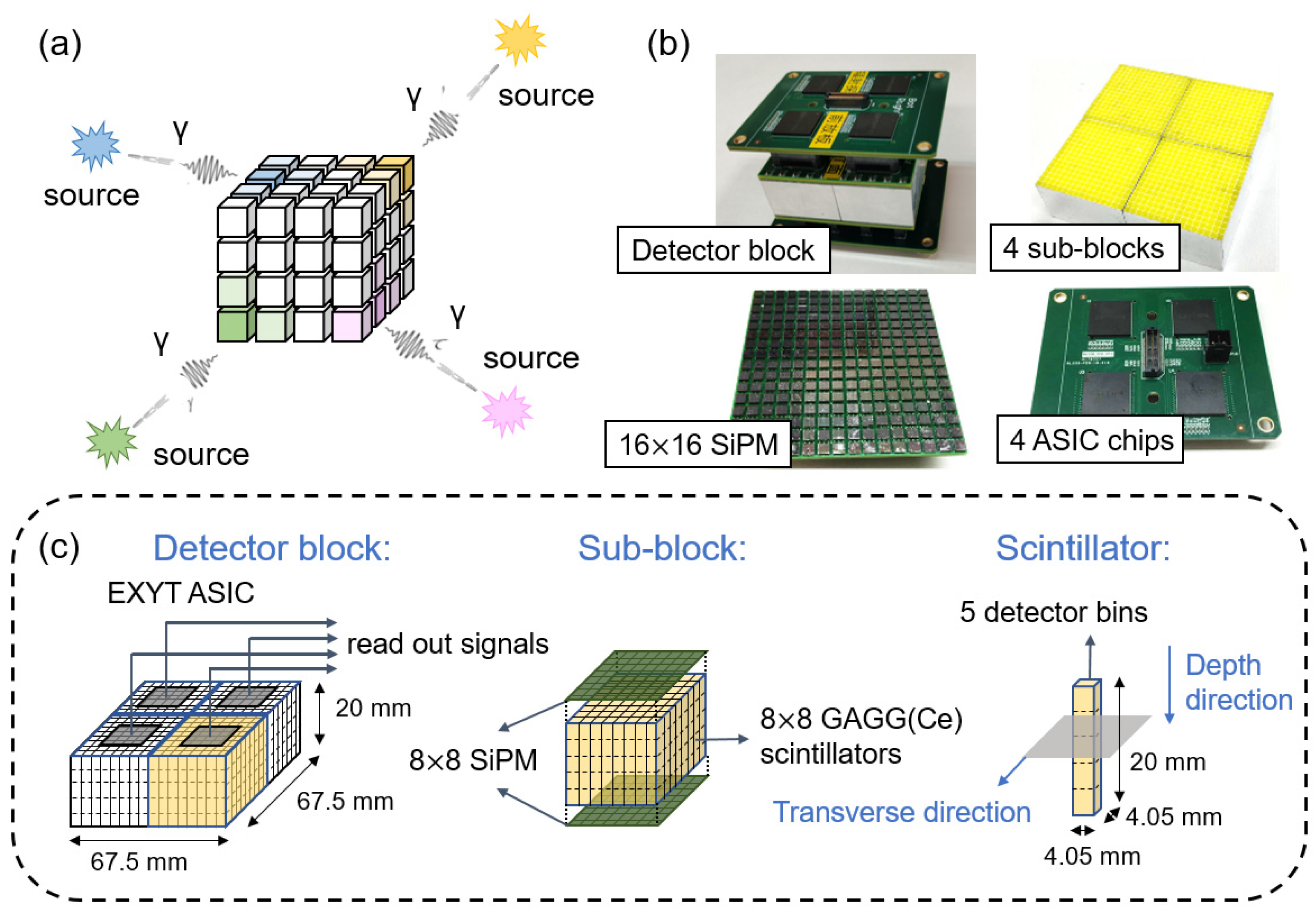

2.1. The 4π-View Gamma Imager

2.2. System Matrix Calibration and Detector Response Function

- (1) Define a 10 × 36 grid in the 2π image FOV, with θ ranging from 0° to 90° and φ ranging from 0° to 350°, both in 10° intervals.

- (2) Place a point source at each grid intersection and measure its projection point by point to generate a coarse-grid SM of size 360 (image domain: 10 × 36) × 1280 (projection domain: 16 × 16 × 5).

- (3) Perform spline interpolation to generate a fine-grid SM with 1° intervals. The resulting fine-grid SM has a size of 32,760 (image domain: 91 × 360) × 1280 (projection domain: 16 × 16 × 5).
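The three calibration steps can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes the coarse SM rows are ordered with θ as the outer index and φ as the inner index, and it assumes a cubic spline (the spline order is not stated above), applied separably along θ and periodically along φ. The function and constant names are hypothetical.

```python
import numpy as np
from scipy.interpolate import CubicSpline

# Angular grids from steps (1) and (3), in degrees
THETA_COARSE = np.arange(0, 91, 10)   # 10 values: 0, 10, ..., 90
PHI_COARSE = np.arange(0, 360, 10)    # 36 values: 0, 10, ..., 350
THETA_FINE = np.arange(0, 91, 1)      # 91 values
PHI_FINE = np.arange(0, 360, 1)       # 360 values


def refine_system_matrix(sm_coarse: np.ndarray) -> np.ndarray:
    """Interpolate a coarse SM of shape (360, n_proj) onto the 1-degree grid.

    Assumes theta-major row ordering. Returns shape (91 * 360, n_proj),
    i.e. (32760, 1280) for the 16 x 16 x 5 projection domain.
    """
    n_proj = sm_coarse.shape[-1]
    sm = sm_coarse.reshape(THETA_COARSE.size, PHI_COARSE.size, n_proj)

    # Along theta: ordinary (not-a-knot) cubic spline -> (91, 36, n_proj)
    sm = CubicSpline(THETA_COARSE, sm, axis=0)(THETA_FINE)

    # Along phi: periodic spline; close the loop with a copy of phi = 0 at 360
    sm = np.concatenate([sm, sm[:, :1, :]], axis=1)              # (91, 37, n_proj)
    phi_closed = np.append(PHI_COARSE, 360)
    sm = CubicSpline(phi_closed, sm, axis=1, bc_type="periodic")(PHI_FINE)

    # Splines can overshoot below zero; detection probabilities cannot be negative
    sm = np.clip(sm, 0.0, None)
    return sm.reshape(THETA_FINE.size * PHI_FINE.size, n_proj)
```

For the full 1280-bin projection domain the fine-grid output is about 335 MB in float64, so processing detector bins in chunks may be preferable.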

2.3. Self-Adaptive, Sensitivity-Dependent Data-Grouping Strategy

2.4. Deep-Learning-Based Denoising

2.4.1. Network Architectures

U-Net Architecture

Res-U-Net Architecture

2.4.2. Dataset Preparation and Network Training

- (1) Using all the events acquired over the full acquisition time of Imager 1, we produced a full-count SM (FC-SM).

- (2) We generated a low-count SM (LC-SM) by randomly selecting 10% of the events from the full list-mode data, representing an SM that could be measured with 10% of the acquisition time.

- (3) We extracted 1280 pairs of full-count and low-count DRFs from the FC-SM and LC-SM and used them as the labels and inputs, respectively, for the deep networks. An individual network was trained for each source energy and each DRF group.

- (4) We repeated the down-sampling steps (2) and (3) 20 times to produce 20 independent LC-SMs and used all of them as training data, so that the deep networks had sufficient input data to avoid overfitting.
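A minimal sketch of steps (1) to (4), assuming each list-mode event has already been tagged with a source-position index and a detector-bin index (the arrays `src_idx` and `det_idx` are hypothetical inputs). Since an individual network is trained per source energy and DRF group, the resulting pairs would be split accordingly before training; that split is omitted here. Each returned row is one DRF and can be reshaped to the (θ, φ) image grid before being fed to a 2D network.

```python
import numpy as np


def histogram_sm(src_idx, det_idx, n_src, n_det):
    """Count events into an SM of shape (n_src, n_det)."""
    flat = src_idx * n_det + det_idx
    return np.bincount(flat, minlength=n_src * n_det).reshape(n_src, n_det).astype(float)


def make_training_pairs(src_idx, det_idx, n_src, n_det,
                        fraction=0.1, n_repeats=20, seed=0):
    """Return (inputs, labels), each of shape (n_repeats * n_det, n_src).

    Row k of each array is one DRF: the response of a single detector bin
    to every source position (one column of the SM).
    """
    rng = np.random.default_rng(seed)
    fc_drfs = histogram_sm(src_idx, det_idx, n_src, n_det).T       # step (1)
    n_events = src_idx.size
    n_keep = int(round(fraction * n_events))

    inputs, labels = [], []
    for _ in range(n_repeats):                                      # step (4)
        # Step (2): keep a random 10% of the list-mode events
        keep = rng.choice(n_events, size=n_keep, replace=False)
        lc_drfs = histogram_sm(src_idx[keep], det_idx[keep], n_src, n_det).T
        # Step (3): low-count DRFs are inputs, full-count DRFs are labels
        inputs.append(lc_drfs)
        labels.append(fc_drfs)
    return np.concatenate(inputs), np.concatenate(labels)
```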

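The denoising networks were then applied to the SM of Imager 2 in the following steps:

- (1) Calculate a DRF-wise scaling factor for each DRF;

- (2) Generate the input DRFs by applying these scaling factors;

- (3) Apply the denoising networks to the scaled input DRFs and obtain the outputs;

- (4) Apply inverse scaling to the outputs to obtain the denoised DRFs;

- (5) Re-organize the denoised DRFs to form a denoised SM of Imager 2.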
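The five steps can be sketched as below. The exact definition of the DRF-wise scaling factor is not given in this text, so the sketch assumes, purely for illustration, that each DRF is scaled to a hypothetical reference total count for its group (`ref_totals`). The group labels (`groups`) and per-group denoisers (`networks`) are likewise hypothetical inputs; any callable that maps an image to an image of the same size can stand in for a trained U-Net or Res-U-Net.

```python
import numpy as np
from typing import Callable, Dict


def denoise_system_matrix(sm: np.ndarray,
                          groups: np.ndarray,
                          networks: Dict[int, Callable[[np.ndarray], np.ndarray]],
                          ref_totals: Dict[int, float],
                          drf_shape=(91, 360)) -> np.ndarray:
    """Denoise an SM of shape (n_src, n_det) one DRF at a time.

    groups[j]     -- DRF group label of detector bin j (hypothetical input)
    networks[g]   -- denoiser for group g, image in -> image out
    ref_totals[g] -- assumed reference count level for group g
    """
    drfs = sm.T.astype(float)                  # one DRF per detector bin
    totals = drfs.sum(axis=1)
    out = np.empty_like(drfs)
    for j, drf in enumerate(drfs):
        g = int(groups[j])
        # Step (1): DRF-wise scaling factor (assumed form: match a reference total)
        s = ref_totals[g] / totals[j] if totals[j] > 0 else 1.0
        # Step (2): scaled input DRF, reshaped to the (theta, phi) image grid
        x = (drf * s).reshape(drf_shape)
        # Step (3): apply the network trained for this group
        y = networks[g](x)
        # Step (4): undo the scaling
        out[j] = np.asarray(y).reshape(-1) / s
    # Step (5): re-organize the denoised DRFs back into an SM
    return out.T
```

With identity denoisers the function returns the input SM unchanged, a convenient check that the scaling and inverse scaling cancel.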

2.4.3. Implementation Details

2.5. Conventional Gaussian-Filtering-Based Denoising Approach
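A Gaussian-filtering baseline of this kind can be sketched with `scipy.ndimage.gaussian_filter`, applied to each DRF on its (θ, φ) grid. The kernel widths below are hypothetical placeholders, not the values used in the study; the φ axis is wrapped because azimuth is periodic, while the θ axis is reflected at its ends (an assumption).

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def gaussian_denoise_drf(drf: np.ndarray, sigma_deg=(2.0, 2.0), grid_deg=1.0) -> np.ndarray:
    """Smooth one DRF sampled on a (theta, phi) grid.

    sigma_deg: hypothetical kernel width in degrees along (theta, phi).
    """
    sigma_px = tuple(s / grid_deg for s in sigma_deg)
    # Per-axis boundary modes: reflect along theta, wrap around along phi
    return gaussian_filter(drf.astype(float), sigma=sigma_px, mode=("reflect", "wrap"))
```

A Gaussian-filtered SM would then be obtained by applying this function to every DRF and re-assembling the results.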

2.6. Performance Evaluation

2.6.1. SSIM between System Matrices
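SSIM between a denoised SM and its full-count reference can be computed with the standard SSIM formula, treating the SM (or each DRF) as a 2D array. The sketch below averages the local SSIM map over a uniform 7 × 7 window, close to (though not identical with) the defaults of `skimage.metrics.structural_similarity`; the window, the constants K1 = 0.01 and K2 = 0.03, and the data-range choice are assumptions, since the study's exact settings are not stated here.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def ssim(x: np.ndarray, y: np.ndarray, data_range=None, win_size=7,
         k1=0.01, k2=0.03) -> float:
    """Mean of the local SSIM map computed with a uniform window."""
    x = x.astype(float)
    y = y.astype(float)
    if data_range is None:
        data_range = max(x.max(), y.max()) - min(x.min(), y.min())
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # Local means, variances and covariance over the window
    mu_x = uniform_filter(x, win_size)
    mu_y = uniform_filter(y, win_size)
    var_x = uniform_filter(x * x, win_size) - mu_x ** 2
    var_y = uniform_filter(y * y, win_size) - mu_y ** 2
    cov_xy = uniform_filter(x * y, win_size) - mu_x * mu_y

    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))
    return float(ssim_map.mean())
```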

2.6.2. Positioning Bias
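One common way to quantify positioning bias is the great-circle angle between the reconstructed source direction and the true direction. The sketch below takes the hottest pixel of a reconstructed (θ, φ) image as the reconstructed direction; that choice, the interpretation of θ as the polar angle from the imager axis, and a grid starting at 0° with 1° spacing are all assumptions, since the study's exact definition is not given here. Measuring the angle on the sphere, rather than differencing θ and φ separately, avoids a spurious bias when φ wraps around 0°/360°.

```python
import numpy as np


def angular_distance_deg(theta1, phi1, theta2, phi2):
    """Great-circle angle between two directions (polar angle theta,
    azimuth phi), all in degrees."""
    t1, p1, t2, p2 = np.radians([theta1, phi1, theta2, phi2])
    cos_d = np.cos(t1) * np.cos(t2) + np.sin(t1) * np.sin(t2) * np.cos(p1 - p2)
    # Clip guards against rounding slightly outside [-1, 1]
    return float(np.degrees(np.arccos(np.clip(cos_d, -1.0, 1.0))))


def positioning_bias_deg(image, true_theta, true_phi, grid_deg=1.0):
    """Angle between the hottest pixel of a (theta, phi) image and the true source."""
    i, j = np.unravel_index(np.argmax(image), image.shape)
    return angular_distance_deg(i * grid_deg, j * grid_deg, true_theta, true_phi)
```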

2.6.3. FWHM Resolution
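FWHM resolution is typically measured on a 1D profile through the reconstructed point source. This sketch assumes a single-peaked profile with zero background and locates the half-maximum crossings by linear interpolation between samples; the profile extraction and any background subtraction or curve fitting used in the study are not reproduced.

```python
import numpy as np


def fwhm(profile, spacing_deg=1.0):
    """FWHM of a single-peaked 1-D profile (zero baseline assumed)."""
    p = np.asarray(profile, dtype=float)
    peak = int(np.argmax(p))
    half = p[peak] / 2.0

    # Walk outwards from the peak until the profile drops to half maximum
    left = peak
    while left > 0 and p[left] > half:
        left -= 1
    right = peak
    while right < p.size - 1 and p[right] > half:
        right += 1
    if p[left] > half or p[right] > half:
        raise ValueError("profile does not drop to half maximum on both sides")

    # Sub-sample crossings: p[left] <= half < p[left + 1], p[right - 1] > half >= p[right]
    x_left = left + (half - p[left]) / (p[left + 1] - p[left])
    x_right = (right - 1) + (p[right - 1] - half) / (p[right - 1] - p[right])
    return (x_right - x_left) * spacing_deg
```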

3. Results

3.1. Intra-Device Evaluation

3.1.1. Denoised SMs

3.1.2. Performance of Reconstructed Images—Positioning Bias

3.1.3. Image Performance—FWHM Resolution

3.2. Inter-Device Evaluation

3.2.1. Imaging Performance—Positioning Bias

3.2.2. Imaging Performance—FWHM Resolution

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Epoch number | U-Net, Group 1 | U-Net, Group 2 | U-Net, Group 3 | Res-U-Net, Group 1 | Res-U-Net, Group 2 | Res-U-Net, Group 3 |
|---|---|---|---|---|---|---|
| 99mTc | 250 | 225 | 150 | 250 | 200 | 135 |
| 137Cs | 125 | 125 | 120 | 120 | 125 | 130 |

| SM | LC-SM | G-DSM | U-DSM | R-DSM |
|---|---|---|---|---|
| SSIM | 0.6484 ± 0.0005 | 0.7433 ± 0.0004 | 0.8490 ± 0.0005 | 0.8542 ± 0.0004 |

| SM | LC-SM | G-DSM | U-DSM | R-DSM |
|---|---|---|---|---|
| SSIM | 0.5208 ± 0.0005 | 0.6146 ± 0.0004 | 0.8641 ± 0.0007 | 0.8542 ± 0.0004 |

| SSIM | LC-SM | G-DSM | U-DSM | R-DSM |
|---|---|---|---|---|
| Group 1 | 0.8984 ± 0.0010 | 0.9450 ± 0.0008 | 0.9861 ± 0.0002 | 0.9928 ± 0.0002 |
| Group 2 | 0.7766 ± 0.0008 | 0.8267 ± 0.0007 | 0.9261 ± 0.0006 | 0.9400 ± 0.0008 |
| Group 3 | 0.6097 ± 0.0006 | 0.7153 ± 0.0006 | 0.8267 ± 0.0006 | 0.8303 ± 0.0005 |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Y.; Lyu, Z.; Lu, W.; Liu, Y.; Ma, T. Fast and Accurate Gamma Imaging System Calibration Based on Deep Denoising Networks and Self-Adaptive Data Clustering. Sensors 2023, 23, 2689. https://doi.org/10.3390/s23052689
