Uncontrolled Two-Step Iterative Calibration Algorithm for Lidar–IMU System

Abstract

1. Introduction

2. Materials and Methods

2.1. Related Work

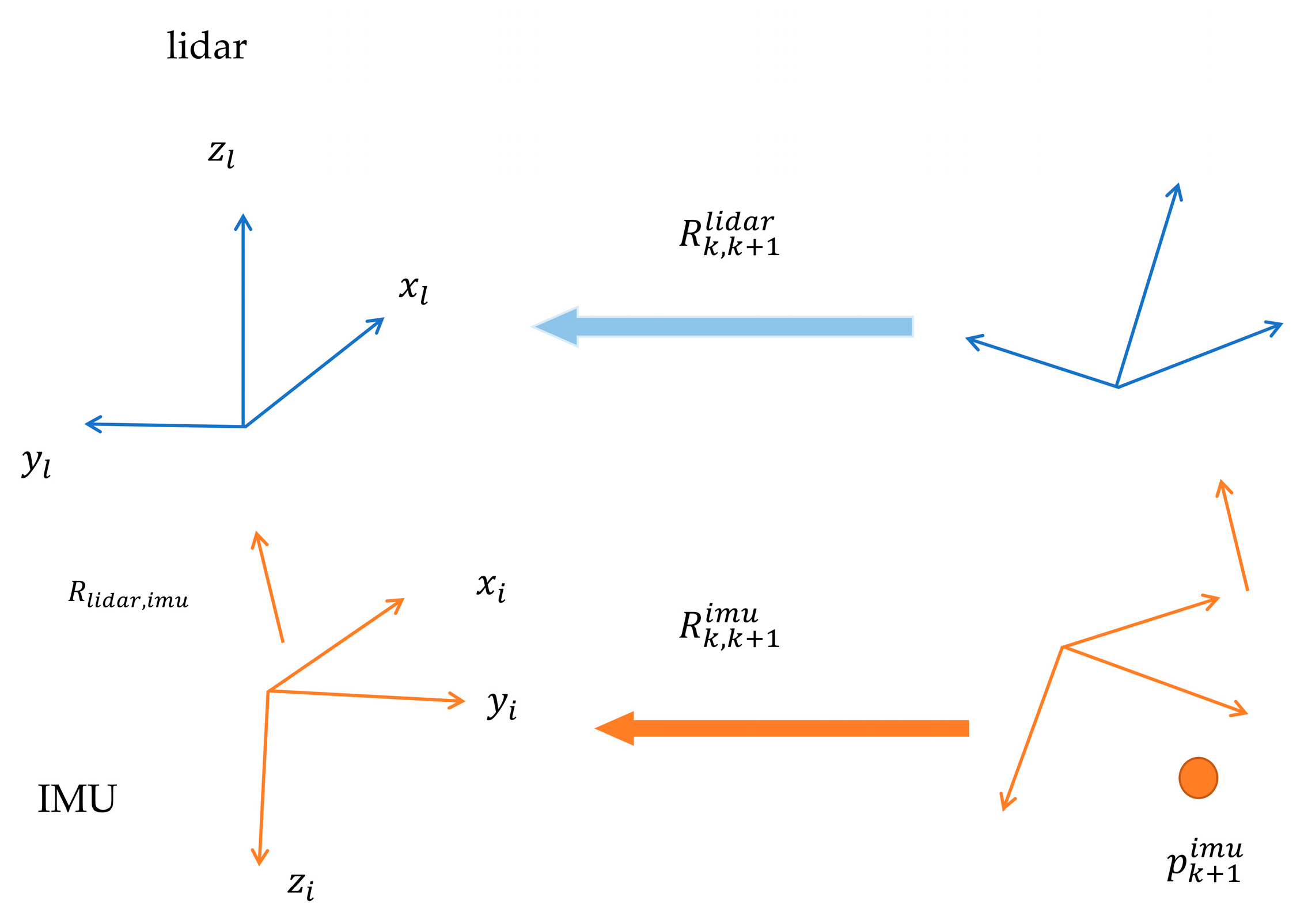

2.1.1. Calibration Principle for Lidar–IMU System

2.1.2. Matching Algorithms for Point Clouds

2.2. Uncontrolled Two-Step Iterative Calibration Algorithm

3. Results

3.1. Experimental Data

3.2. Comparative Analysis of Calibration Accuracy of Different Processing Methods

3.3. Calibration Accuracy of Different Point Cloud Matching Algorithms

3.4. Analysis of the Influence of the Scenario on the Calibration Accuracy

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Duration (s) | Acquisition Platform | Location |
|---|---|---|---|
| Rotation | 58.60 | Handheld | MIT |
| Park | 560.00 | Vehicle-mounted | MIT |
| Campus | 994.00 | Handheld | MIT |
| Walk | 655.00 | Handheld | MIT |
| Cnu | 1199.20 | Backpack | Capital Normal University |

| Name | Method II (°) | LI-Calib (°) |
|---|---|---|
| Park | 11.01 | 18.74 |
| Park | 11.01 | 53.09 |
| Park | 11.01 | 38.05 |
| Average error | 11.01 | 36.63 |

| Method | Rotation (°) | Time (s) |
|---|---|---|
| Method II | 3.41 | 40.2 |
| LI-Init | 4.08 | 47.3 |

| Matching Algorithm | Name | Method I (°) | Method II (°) | Method III (°) | Average Error (°) |
|---|---|---|---|---|---|
| NDT | Rotation | 4.16 | 3.29 | 3.06 | 3.50 |
| NDT | Park | 11.91 | 9.64 | 12.77 | 11.44 |
| NDT | Campus | 2.31 | 1.80 | 1.89 | 2.00 |
| NDT | Walk | 4.97 | 4.19 | 5.65 | 4.94 |
| NDT | Cnu | 16.13 | 3.00 | 9.60 | 9.58 |
| OMP-NDT | Rotation | 4.37 | 3.41 | 3.68 | 3.82 |
| OMP-NDT | Park | 11.70 | 11.01 | 12.03 | 11.58 |
| OMP-NDT | Campus | 1.49 | 1.36 | 0.84 | 1.23 |
| OMP-NDT | Walk | 3.09 | 2.87 | 3.17 | 3.04 |
| OMP-NDT | Cnu | 15.27 | 2.76 | 10.59 | 9.54 |
| ICP | Rotation | 4.98 | 3.55 | 3.40 | 3.98 |
| ICP | Park | 17.24 | 14.23 | 14.29 | 15.25 |
| ICP | Campus | 2.08 | 1.48 | 1.53 | 1.70 |
| ICP | Walk | 12.27 | 8.77 | 6.08 | 9.04 |
| ICP | Cnu | 17.79 | 6.87 | 4.17 | 9.61 |
| GICP | Rotation | 4.26 | 2.39 | 2.45 | 3.03 |
| GICP | Park | 11.16 | 8.11 | 8.16 | 9.14 |
| GICP | Campus | 1.91 | 1.31 | 1.35 | 1.52 |
| GICP | Walk | 8.11 | 6.65 | 4.44 | 6.40 |
| GICP | Cnu | 12.45 | 6.17 | 4.26 | 7.63 |

| Name | Average Angle Change | Average Calibration Error (°) |
|---|---|---|
| Park | 2.23 | 11.85 |
| Walk | 2.30 | 5.86 |
| Rotation | 3.79 | 3.58 |
| Campus | 3.06 | 1.61 |
| Cnu | 0.99 | 9.09 |
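
The Average Calibration Error values in the scenario table appear to be the mean, for each dataset, of the four per-algorithm Average Error values in the matching-algorithm table. The following Python check is a minimal sketch of that arithmetic, not the authors' code. The names `avg_error_by_algorithm`, `reported`, and `scenario_means` are illustrative assumptions, and the numbers are copied from the tables.

```python
from statistics import mean

# Per-dataset "Average Error" (degrees), copied from the matching-algorithm table.
avg_error_by_algorithm = {
    "NDT":     {"Rotation": 3.50, "Park": 11.44, "Campus": 2.00, "Walk": 4.94, "Cnu": 9.58},
    "OMP-NDT": {"Rotation": 3.82, "Park": 11.58, "Campus": 1.23, "Walk": 3.04, "Cnu": 9.54},
    "ICP":     {"Rotation": 3.98, "Park": 15.25, "Campus": 1.70, "Walk": 9.04, "Cnu": 9.61},
    "GICP":    {"Rotation": 3.03, "Park": 9.14,  "Campus": 1.52, "Walk": 6.40, "Cnu": 7.63},
}

# "Average Calibration Error" (degrees), copied from the scenario table.
reported = {"Park": 11.85, "Walk": 5.86, "Rotation": 3.58, "Campus": 1.61, "Cnu": 9.09}


def scenario_means(table):
    """Average each dataset's error over all matching algorithms."""
    datasets = next(iter(table.values())).keys()
    return {d: mean(row[d] for row in table.values()) for d in datasets}


computed = scenario_means(avg_error_by_algorithm)
for name, value in computed.items():
    print(f"{name}: computed {value:.3f}, reported {reported[name]:.2f}")
```

Each computed mean agrees with the reported value to within rounding at two decimal places, which is consistent with that reading of the column.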

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yin, S.; Xie, D.; Fu, Y.; Wang, Z.; Zhong, R. Uncontrolled Two-Step Iterative Calibration Algorithm for Lidar–IMU System. Sensors 2023, 23, 3119. https://doi.org/10.3390/s23063119
