Determination of Munsell Soil Colour Using Smartphones

Abstract

1. Introduction

- Analyse the colour discrepancy between images captured by smartphones and the Nix Pro colour sensor;

- Propose a novel approach to accurately capture soil colour, irrespective of the capturing method for a specific geographic area; and

- Find the most suitable colour model and corresponding distance function by investigating different colour models and colour-matching distance functions.

2. Materials and Methodology

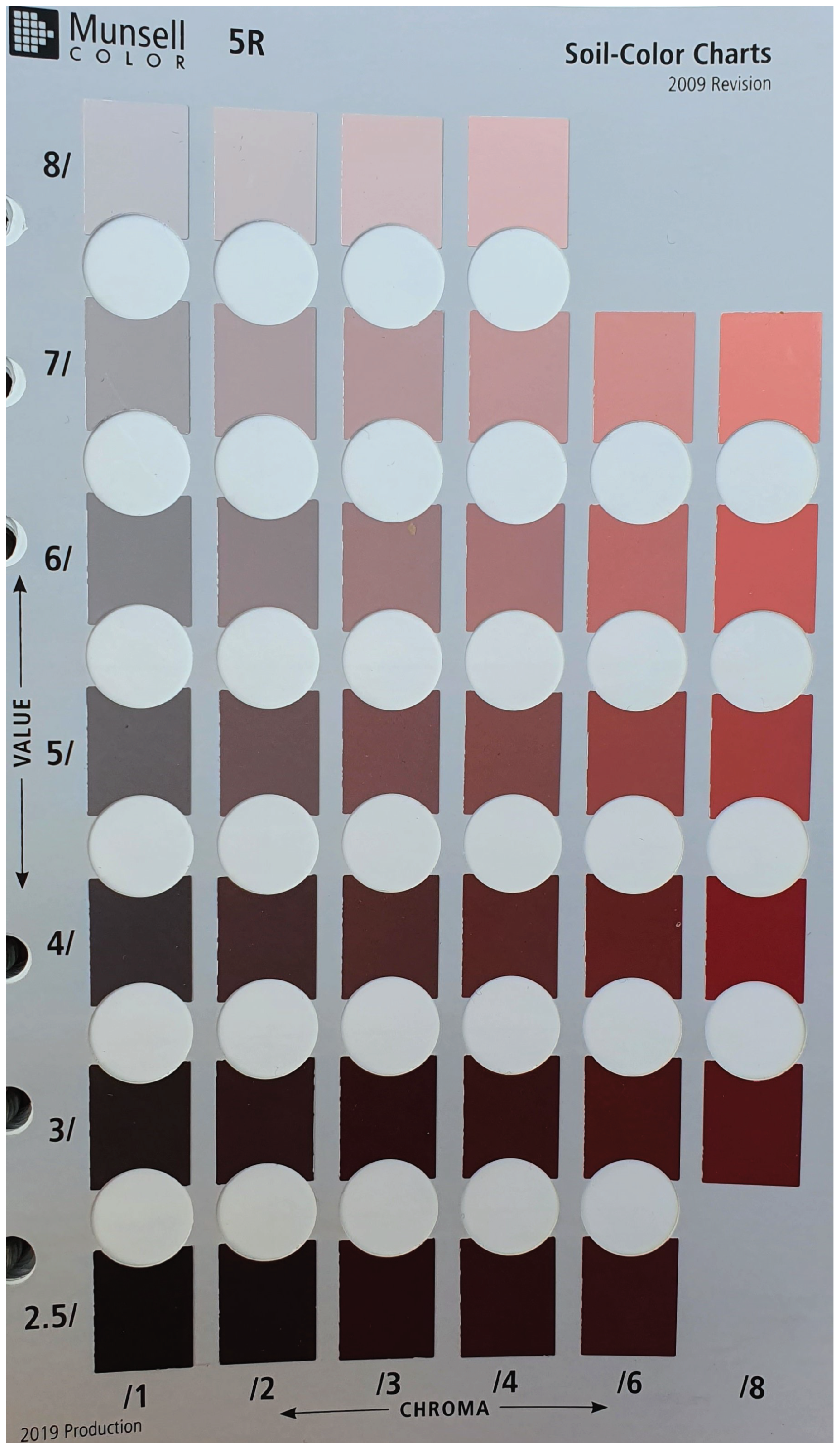

2.1. Munsell Soil Colour Book

2.2. Nix Pro Colour Sensor

- A hue rotation term to mitigate problems in the blue region;

- Compensation for neutral colours;

- Compensation for lightness, chroma, and hue.
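The three corrections listed above appear to be those that distinguish the CIE2000 (CIEDE2000) colour difference from the plain Euclidean CIE1976 distance. The following is a minimal sketch of both functions, written from the published CIEDE2000 formula (see the Sharma et al. and Lindbloom entries in the reference list). The function names and the unit weighting factors `kL`, `kC`, and `kH` are assumptions made for illustration, not the authors' implementation.

```python
import math


def delta_e_cie1976(lab1, lab2):
    """CIE1976 colour difference: Euclidean distance in CIELAB."""
    return math.dist(lab1, lab2)


def delta_e_ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 colour difference between two (L*, a*, b*) triples."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Compensation for neutral colours: rescale a* using the mean chroma.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar**7 / (c_bar**7 + 25**7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360
    h2p = math.degrees(math.atan2(b2, a2p)) % 360

    # Lightness, chroma, and hue differences.
    d_lp = L2 - L1
    d_cp = c2p - c1p
    if c1p * c2p == 0:
        d_hp_deg = 0.0
    else:
        d_hp_deg = h2p - h1p
        if d_hp_deg > 180:
            d_hp_deg -= 360
        elif d_hp_deg < -180:
            d_hp_deg += 360
    d_hp = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp_deg / 2))

    # Mean lightness, chroma, and hue.
    l_bar = (L1 + L2) / 2
    c_bar_p = (c1p + c2p) / 2
    if c1p * c2p == 0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        h_bar = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        h_bar = (h1p + h2p + 360) / 2
    else:
        h_bar = (h1p + h2p - 360) / 2

    # Compensation for lightness (s_l), chroma (s_c), and hue (s_h).
    t = (1
         - 0.17 * math.cos(math.radians(h_bar - 30))
         + 0.24 * math.cos(math.radians(2 * h_bar))
         + 0.32 * math.cos(math.radians(3 * h_bar + 6))
         - 0.20 * math.cos(math.radians(4 * h_bar - 63)))
    s_l = 1 + 0.015 * (l_bar - 50) ** 2 / math.sqrt(20 + (l_bar - 50) ** 2)
    s_c = 1 + 0.045 * c_bar_p
    s_h = 1 + 0.015 * c_bar_p * t

    # Hue rotation term, which is only significant in the blue region.
    d_theta = 30 * math.exp(-(((h_bar - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25**7))
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c

    term_l = d_lp / (kL * s_l)
    term_c = d_cp / (kC * s_c)
    term_h = d_hp / (kH * s_h)
    return math.sqrt(term_l**2 + term_c**2 + term_h**2 + r_t * term_c * term_h)
```

For a pair of saturated blues the two measures can differ noticeably, which is where the hue rotation term has its effect; for identical inputs both return zero.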

2.3. Data Collection and Pre-Processing

2.4. Determining the Closest Munsell Soil Colour and Rank

2.5. Average Colour Difference

2.6. Employ Location-Based Prediction

3. Results

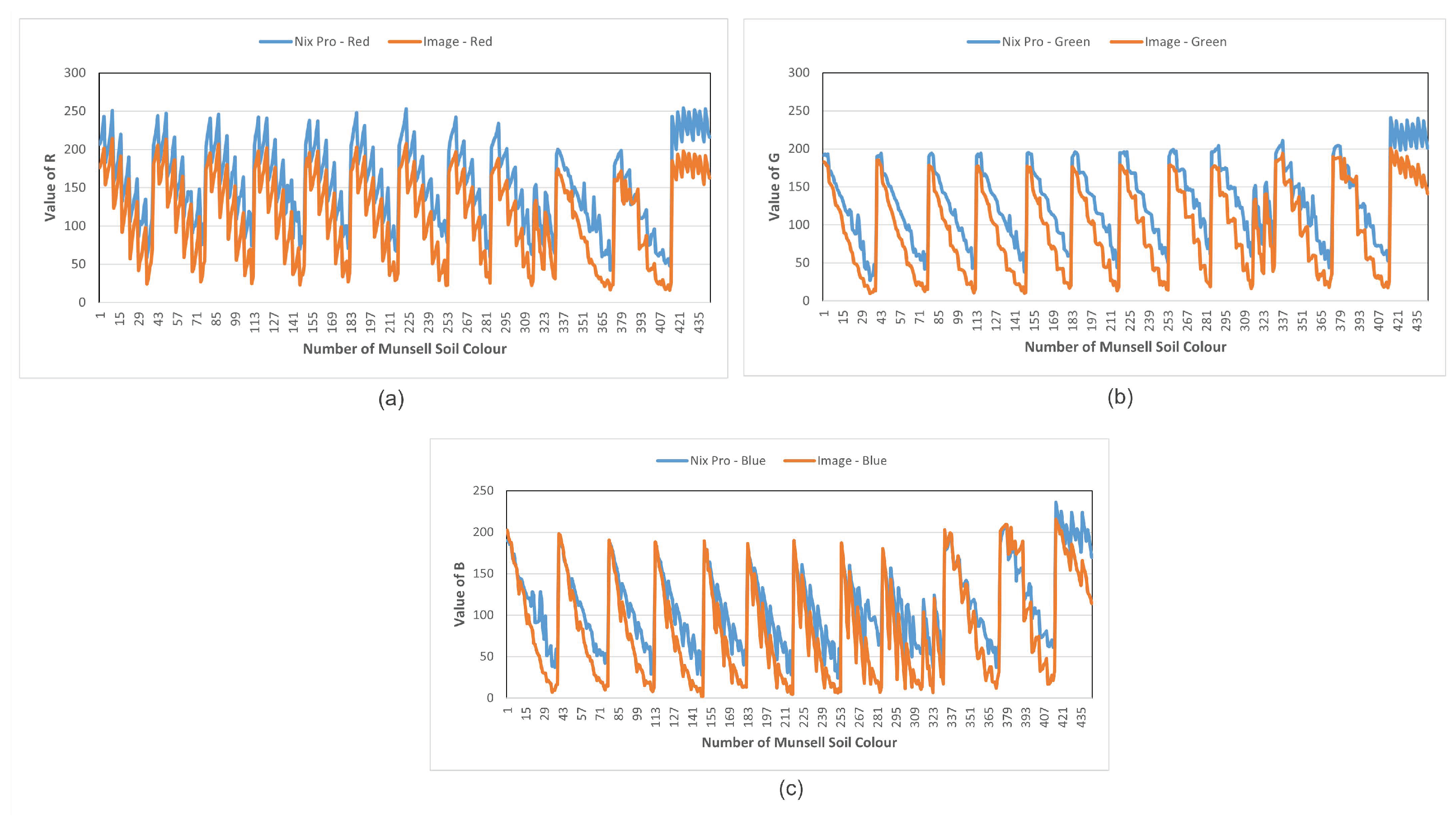

3.1. Better-Performing Colour Model

3.2. Best Time of the Day to Capture Images Using a Smartphone Camera

3.3. Added Weight and Location-Based Prediction

3.4. Distance Function

- Of the RGB and CIELAB colour models, CIELAB performs much better.

- The CIE1976 and CIE2000 distance functions perform similarly for both the Samsung S10 and the Google Pixel 5.

- The best time to capture images to determine Munsell soil colour is between 10 a.m. and 4 p.m. (35,000 lux to 76,000 lux). The prediction rate decreased in the early morning and evening times.

- According to our data and analysis of the Samsung S10 and the Google Pixel 5, the Google Pixel 5 performed better for Munsell soil colour prediction (Figure 8).

- The best prediction rate was achieved when we employed our proposed weight and location-based prediction model. After adding weight and focusing on soil colours in Australia, the exact match was found in the top 5 predictions 66.81% to 69.75% of the time with the Samsung S10 and 71.51% to 73.70% of the time with the Google Pixel 5, depending on the distance function (Table 9). However, we performed our study on sunny days, and the results may vary on cloudy and rainy days. Further research is necessary to achieve similar determinations under different weather conditions.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Owens, P.; Rutledge, E. Morphology. In Encyclopedia of Soils in the Environment; Hillel, D., Ed.; Elsevier: Oxford, UK, 2005.

2. Thompson, J.A.; Pollio, A.R.; Turk, P.J. Comparison of Munsell soil color charts and the GLOBE soil color book. Soil Sci. Soc. Am. J. 2013, 77, 2089–2093.

3. Pendleton, R.L.; Nickerson, D. Soil colors and special Munsell soil color charts. Soil Sci. 1951, 71, 35–44.

4. National Committee on Soil and Terrain (Australia). Australian Soil and Land Survey Field Handbook; Number 1; CSIRO Publishing: Collingwood, VIC, Australia, 2009.

5. Conway, B.R.; Livingstone, M.S. A different point of hue. Proc. Natl. Acad. Sci. USA 2005, 102, 10761–10762.

6. Mancini, M.; Weindorf, D.C.; Monteiro, M.E.C.; de Faria, Á.J.G.; dos Santos Teixeira, A.F.; de Lima, W.; de Lima, F.R.D.; Dijair, T.S.B.; Marques, F.D.; Ribeiro, D.; et al. From sensor data to Munsell color system: Machine learning algorithm applied to tropical soil color classification via Nix™ Pro sensor. Geoderma 2020, 375, 114471.

7. Kirillova, N.P.; Grauer-Gray, J.; Hartemink, A.E.; Sileova, T.; Artemyeva, Z.S.; Burova, E. New perspectives to use Munsell color charts with electronic devices. Comput. Electron. Agric. 2018, 155, 378–385.

8. Kirillova, N.; Sileva, T.; Ul’yanova, T.Y.; Smirnova, I.; Ul’yanova, A.; Burova, E. Color diagnostics of soil horizons (by the example of soils from Moscow region). Eurasian Soil Sci. 2018, 51, 1348–1356.

9. Marqués-Mateu, Á.; Moreno-Ramón, H.; Balasch, S.; Ibáñez-Asensio, S. Quantifying the uncertainty of soil colour measurements with Munsell charts using a modified attribute agreement analysis. Catena 2018, 171, 44–53.

10. Pegalajar, M.C.; Sánchez-Marañón, M.; Baca Ruíz, L.G.; Mansilla, L.; Delgado, M. Artificial neural networks and fuzzy logic for specifying the color of an image using Munsell soil-color charts. In Proceedings of the International Conference on Information Processing and Management of Uncertainty in Knowledge-Based Systems, Cadiz, Spain, 11–15 June 2018; pp. 699–709.

11. Sánchez-Marañón, M.; Huertas, R.; Melgosa, M. Colour variation in standard soil-colour charts. Soil Res. 2005, 43, 827–837.

12. Gómez-Robledo, L.; López-Ruiz, N.; Melgosa, M.; Palma, A.J.; Capitán-Vallvey, L.F.; Sánchez-Marañón, M. Using the mobile phone as Munsell soil-colour sensor: An experiment under controlled illumination conditions. Comput. Electron. Agric. 2013, 99, 200–208.

13. Stiglitz, R.; Mikhailova, E.; Post, C.; Schlautman, M.; Sharp, J. Evaluation of an inexpensive sensor to measure soil color. Comput. Electron. Agric. 2016, 121, 141–148.

14. Hogarty, D.T.; Hogarty, J.P.; Hewitt, A.W. Smartphone use in ophthalmology: What is their place in clinical practice? Surv. Ophthalmol. 2020, 65, 250–262.

15. Wang, N.; Deng, Z.; Wen, L.M.; Ding, Y.; He, G. Understanding the use of smartphone apps for health information among pregnant Chinese women: Mixed methods study. JMIR mHealth uHealth 2019, 7, e12631.

16. Han, P.; Dong, D.; Zhao, X.; Jiao, L.; Lang, Y. A smartphone-based soil color sensor: For soil type classification. Comput. Electron. Agric. 2016, 123, 232–241.

17. Milotta, F.L.; Stanco, F.; Tanasi, D.; Gueli, A.M. Munsell color specification using ARCA (automatic recognition of color for archaeology). J. Comput. Cult. Herit. 2018, 11, 1–15.

18. Milotta, F.L.M.; Quattrocchi, C.; Stanco, F.; Tanasi, D.; Pasquale, S.; Gueli, A.M. ARCA 2.0: Automatic Recognition of Color for Archaeology through a Web-Application. In Proceedings of the 2018 Metrology for Archaeology and Cultural Heritage (MetroArchaeo), Cassino, Italy, 22–24 October 2018; pp. 466–470.

19. Turk, J.K.; Young, R.A. Field conditions and the accuracy of visually determined Munsell soil color. Soil Sci. Soc. Am. J. 2020, 84, 163–169.

20. Kwon, O.; Park, T. Applications of smartphone cameras in agriculture, environment, and food: A review. J. Biosyst. Eng. 2017, 42, 330–338.

21. Aitkenhead, M.; Coull, M.; Gwatkin, R.; Donnelly, D. Automated soil physical parameter assessment using Smartphone and digital camera imagery. J. Imaging 2016, 2, 35.

22. Aitkenhead, M.; Cameron, C.; Gaskin, G.; Choisy, B.; Coull, M.; Black, H. Digital RGB photography and visible-range spectroscopy for soil composition analysis. Geoderma 2018, 313, 265–275.

23. Google Pixel 5 Camera Test: Software Power. 2020. Available online: https://www.dxomark.com/google-pixel-5-camera-review-software-power/ (accessed on 3 March 2023).

24. Updated: Samsung Galaxy S10 5G (Exynos) Camera Test. 2019. Available online: https://www.dxomark.com/samsung-galaxy-s10-5g-camera-review/ (accessed on 3 March 2023).

25. Camera Specifications on the Samsung Galaxy S10. 2022. Available online: https://www.samsung.com/sg/support/mobile-devices/camera-specifications-on-the-galaxy-s10/ (accessed on 3 March 2023).

26. Stiglitz, R.; Mikhailova, E.; Post, C.; Schlautman, M.; Sharp, J. Using an inexpensive color sensor for rapid assessment of soil organic carbon. Geoderma 2017, 286, 98–103.

27. Swetha, R.; Bende, P.; Singh, K.; Gorthi, S.; Biswas, A.; Li, B.; Weindorf, D.C.; Chakraborty, S. Predicting soil texture from smartphone-captured digital images and an application. Geoderma 2020, 376, 114562.

28. Jha, G.; Sihi, D.; Dari, B.; Kaur, H.; Nocco, M.A.; Ulery, A.; Lombard, K. Rapid and inexpensive assessment of soil total iron using Nix Pro color sensor. Agric. Environ. Lett. 2021, 6, e20050.

29. Mukhopadhyay, S.; Chakraborty, S.; Bhadoria, P.; Li, B.; Weindorf, D.C. Assessment of heavy metal and soil organic carbon by portable X-ray fluorescence spectrometry and NixPro™ sensor in landfill soils of India. Geoderma Reg. 2020, 20, e00249.

30. Nix Pro 2-Color Sensor. 2015. Available online: https://www.nixsensor.com/nix-pro/ (accessed on 18 January 2023).

31. Li, Z.; Li, Z.; Zhao, D.; Wen, F.; Jiang, J.; Xu, D. Smartphone-based visualized microarray detection for multiplexed harmful substances in milk. Biosens. Bioelectron. 2017, 87, 874–880.

32. Yu, L.; Shi, Z.; Fang, C.; Zhang, Y.; Liu, Y.; Li, C. Disposable lateral flow-through strip for smartphone-camera to quantitatively detect alkaline phosphatase activity in milk. Biosens. Bioelectron. 2015, 69, 307–315.

33. de Oliveira Krambeck Franco, M.; Suarez, W.T.; Maia, M.V.; dos Santos, V.B. Smartphone application for methanol determination in sugar cane spirits employing digital image-based method. Food Anal. Methods 2017, 10, 2102–2109.

34. San Park, T.; Li, W.; McCracken, K.E.; Yoon, J.Y. Smartphone quantifies Salmonella from paper microfluidics. Lab Chip 2013, 13, 4832–4840.

35. Liang, P.S.; Park, T.S.; Yoon, J.Y. Rapid and reagentless detection of microbial contamination within meat utilizing a smartphone-based biosensor. Sci. Rep. 2014, 4, 1–8.

36. Cruz-Fernández, M.; Luque-Cobija, M.; Cervera, M.; Morales-Rubio, A.; De La Guardia, M. Smartphone determination of fat in cured meat products. Microchem. J. 2017, 132, 8–14.

37. Zhihong, M.; Yuhan, M.; Liang, G.; Chengliang, L. Smartphone-based visual measurement and portable instrumentation for crop seed phenotyping. IFAC-PapersOnLine 2016, 49, 259–264.

38. Machado, B.B.; Orue, J.P.; Arruda, M.S.; Santos, C.V.; Sarath, D.S.; Goncalves, W.N.; Silva, G.G.; Pistori, H.; Roel, A.R.; Rodrigues, J.F., Jr. BioLeaf: A professional mobile application to measure foliar damage caused by insect herbivory. Comput. Electron. Agric. 2016, 129, 44–55.

39. Vesali, F.; Omid, M.; Kaleita, A.; Mobli, H. Development of an android app to estimate chlorophyll content of corn leaves based on contact imaging. Comput. Electron. Agric. 2015, 116, 211–220.

40. Rahman, M.; Blackwell, B.; Banerjee, N.; Saraswat, D. Smartphone-based hierarchical crowdsourcing for weed identification. Comput. Electron. Agric. 2015, 113, 14–23.

41. Wang, Y.; Li, Y.; Bao, X.; Han, J.; Xia, J.; Tian, X.; Ni, L. A smartphone-based colorimetric reader coupled with a remote server for rapid on-site catechols analysis. Talanta 2016, 160, 194–204.

42. Fang, J.; Qiu, X.; Wan, Z.; Zou, Q.; Su, K.; Hu, N.; Wang, P. A sensing smartphone and its portable accessory for on-site rapid biochemical detection of marine toxins. Anal. Methods 2016, 8, 6895–6902.

43. Hussain, I.; Das, M.; Ahamad, K.U.; Nath, P. Water salinity detection using a smartphone. Sens. Actuators B Chem. 2017, 239, 1042–1050.

44. Sumriddetchkajorn, S.; Chaitavon, K.; Intaravanne, Y. Mobile device-based self-referencing colorimeter for monitoring chlorine concentration in water. Sens. Actuators B Chem. 2013, 182, 592–597.

45. Dutta, S.; Sarma, D.; Nath, P. Ground and river water quality monitoring using a smartphone-based pH sensor. AIP Adv. 2015, 5, 057151.

46. Prosdocimi, M.; Burguet, M.; Di Prima, S.; Sofia, G.; Terol, E.; Comino, J.R.; Cerdà, A.; Tarolli, P. Rainfall simulation and Structure-from-Motion photogrammetry for the analysis of soil water erosion in Mediterranean vineyards. Sci. Total Environ. 2017, 574, 204–215.

47. Bloch, L.C.; Hosen, J.D.; Kracht, E.C.; LeFebvre, M.J.; Lopez, C.J.; Woodcock, R.; Keegan, W.F. Is it better to be objectively wrong or subjectively right?: Testing the accuracy and consistency of the Munsell capsure spectrocolorimeter for archaeological applications. Adv. Archaeol. Pract. 2021, 9, 132–144.

48. Hunt, R.W.G.; Pointer, M.R. Measuring Colour; John Wiley & Sons: Hoboken, NJ, USA, 2011.

49. Mokrzycki, W.; Tatol, M. Colour difference ΔE - A survey. Mach. Graph. Vis. 2011, 20, 383–411.

50. Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 2005, 30, 21–30.

51. Lindbloom, B.J. Delta E (CIE 2000). 2017. Available online: http://www.brucelindbloom.com/index.html?EqnDeltaECIE2000.html (accessed on 18 January 2023).

52. Searle, R. The Australian site data collation to support the GlobalSoilMap. In GlobalSoilMap: Basis of the Global Spatial Soil Information System; CRC Press: London, UK, 2014; p. 127.

53. Rossel, R.V.; Minasny, B.; Roudier, P.; Mcbratney, A.B. Colour space models for soil science. Geoderma 2006, 133, 320–337.

| No. | Application Area | Publication |
|---|---|---|
| 1. | Detect harmful substances in milk | [31] |
| 2. | Detect alkaline phosphatase | [32] |
| 3. | Methanol determination in sugar cane spirits | [33] |
| 4. | Quantify Salmonella | [34] |
| 5. | Detect microbial contamination | [35] |
| 6. | Determine fat | [36] |
| 7. | Crop seed phenotyping | [37] |
| 8. | Measure foliar damage | [38] |
| 9. | Estimate chlorophyll | [39] |
| 10. | Identify weed | [40] |
| 11. | Colorimetric reader | [41] |
| 12. | Detect biochemicals | [42] |
| 13. | Measure water salinity | [43] |
| 14. | Chlorine monitor | [44] |
| 15. | Water quality monitor | [45] |
| 16. | Soil type classification | [16] |
| 17. | Soil water erosion | [46] |

| Set | Time | RGB-Euclidean Distance | CIELAB-CIE1976 | CIELAB-CIE2000 | CIELAB-AW-CIE1976 | CIELAB-AW-CIE2000 |
|---|---|---|---|---|---|---|
| Set 2 | 10:00 a.m. | 88.59 | 43.01 | 43.44 | 15.50 | 15.02 |
| Set 3 | 11:00 a.m. | 89.66 | 53.03 | 50.58 | 12.02 | 12.08 |
| Set 4 | 12:00 p.m. | 101.16 | 58.12 | 57.80 | 12.37 | 12.83 |
| Set 5 | 1:00 p.m. | 83.81 | 48.76 | 46.66 | 11.86 | 11.30 |
| Set 7 | 3:00 p.m. | 82.81 | 48.16 | 45.92 | 11.77 | 11.42 |
| AVERAGE | | 89.21 | 50.22 | 48.88 | 12.70 | 12.53 |

| Set | Time | RGB-Euclidean Distance | CIELAB-CIE1976 | CIELAB-CIE2000 | CIELAB-AW-CIE1976 | CIELAB-AW-CIE2000 |
|---|---|---|---|---|---|---|
| Set 1 | 10:00 a.m. | 62.53 | 34.47 | 30.81 | 13.49 | 14.60 |
| Set 2 | 11:00 a.m. | 40.62 | 20.89 | 18.29 | 13.67 | 13.85 |
| Set 3 | 12:00 p.m. | 46.29 | 26.32 | 21.63 | 13.28 | 13.88 |
| Set 4 | 1:00 p.m. | 51.56 | 37.12 | 29.66 | 16.45 | 15.74 |
| Set 5 | 3:00 p.m. | 80.67 | 44.86 | 44.25 | 21.34 | 22.64 |
| AVERAGE | | 56.33 | 32.73 | 28.93 | 15.65 | 16.14 |

| Device | L | A | B |
|---|---|---|---|
| Samsung | 12.89 | 3.14 | 5.06 |
| Google Pixel 5 | 8.06 | 3.02 | 4.49 |
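The table above carries no caption in this copy. One plausible reading, given the section title "Average Colour Difference", is that each cell is the mean absolute difference in one CIELAB channel between smartphone readings and reference readings. Under that assumption, the calculation could be sketched as follows; the function name and the sample values are hypothetical and are not taken from the study.

```python
def mean_channel_difference(phone_labs, reference_labs):
    """Mean absolute difference per CIELAB channel over paired readings.

    Both arguments are equal-length sequences of (L*, a*, b*) triples,
    where the i-th entries describe the same physical colour chip.
    """
    if len(phone_labs) != len(reference_labs) or not phone_labs:
        raise ValueError("need two non-empty sequences of equal length")
    sums = [0.0, 0.0, 0.0]
    for phone, reference in zip(phone_labs, reference_labs):
        for i in range(3):
            sums[i] += abs(phone[i] - reference[i])
    n = len(phone_labs)
    return tuple(total / n for total in sums)


# Hypothetical paired readings (invented values for illustration only).
phone = [(50.0, 10.0, 20.0), (60.0, 12.0, 18.0)]
reference = [(40.0, 8.0, 15.0), (52.0, 10.0, 16.0)]
print(mean_channel_difference(phone, reference))
```

A much larger value in the L column than in the A or B columns, as in the table, would indicate that lightness is the channel most affected by the capture conditions.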

| Set | Time | Location-Based Focused Hue-CIE1976 | Location-Based CIE2000 | Location-Based AW-CIE1976 | Location-Based AW-CIE2000 |
|---|---|---|---|---|---|
| Set 2 | 10:00 a.m. | 28.08 | 33.41 | 9.92 | 9.58 |
| Set 3 | 11:00 a.m. | 35.55 | 38.95 | 7.06 | 7.37 |
| Set 4 | 12:00 p.m. | 39.42 | 44.57 | 8.04 | 8.64 |
| Set 5 | 1:00 p.m. | 30.47 | 33.10 | 6.12 | 6.62 |
| Set 7 | 3:00 p.m. | 29.82 | 32.70 | 6.78 | 7.18 |
| AVERAGE | | 32.76 | 36.55 | 7.58 | 7.88 |

| Set | Time | Location-Based Focused Hue-CIE1976 | Location-Based CIE2000 | Location-Based AW-CIE1976 | Location-Based AW-CIE2000 |
|---|---|---|---|---|---|
| Set 1 | 10:00 a.m. | 17.86 | 17.08 | 5.13 | 5.80 |
| Set 2 | 11:00 a.m. | 13.83 | 13.58 | 5.40 | 5.84 |
| Set 3 | 12:00 p.m. | 13.65 | 11.97 | 5.85 | 6.52 |
| Set 4 | 1:00 p.m. | 25.03 | 20.66 | 7.24 | 6.89 |
| Set 5 | 3:00 p.m. | 28.04 | 30.12 | 8.03 | 9.81 |
| AVERAGE | | 19.68 | 18.68 | 6.33 | 6.97 |

| Set | Time | Weather Condition (lx) | Indirect Sun Light Intensity (lx) | CIELAB-AW-CIE1976 | CIELAB-AW-CIE2000 | Location-Based AW-CIE1976 | Location-Based AW-CIE2000 |
|---|---|---|---|---|---|---|---|
| Set 1 | 9:00 a.m. | 11,982 | 3098 | 24.43 | 21.16 | 19.43 | 15.39 |
| Set 2 | 10:00 a.m. | 35,510 | 2673 | 15.50 | 15.02 | 9.92 | 9.58 |
| Set 3 | 11:00 a.m. | 46,329 | 3432 | 12.02 | 12.08 | 7.06 | 7.37 |
| Set 4 | 12:00 p.m. | 75,952 | 3380 | 12.37 | 12.83 | 8.04 | 8.64 |
| Set 5 | 1:00 p.m. | 74,220 | 3054 | 11.86 | 11.30 | 6.12 | 6.62 |
| Set 6 | 2:00 p.m. | 75,856 | 2993 | 11.09 | 10.73 | 6.05 | 6.73 |
| Set 7 | 3:00 p.m. | 59,037 | 3300 | 11.77 | 11.42 | 6.78 | 7.18 |
| Set 8 | 4:00 p.m. | 38,204 | 3180 | 11.63 | 11.22 | 7.51 | 7.43 |
| Set 9 | 5:00 p.m. | 13,715 | 1675 | 22.51 | 23.68 | 14.41 | 15.12 |
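The effect of illuminance on the colour difference can be checked directly from the table above. The short sketch below copies the "Weather Condition (lx)" and "CIELAB-AW-CIE2000" columns and compares the mean colour difference above and below 35,000 lux, the lower bound of the recommended capture window stated in the findings. Treating 35,000 lux as a hard threshold is our simplification for illustration.

```python
# (time, illuminance in lx, CIELAB-AW-CIE2000 colour difference), copied
# from the "Weather Condition (lx)" and "CIELAB-AW-CIE2000" columns.
ROWS = [
    ("9:00 a.m.", 11982, 21.16),
    ("10:00 a.m.", 35510, 15.02),
    ("11:00 a.m.", 46329, 12.08),
    ("12:00 p.m.", 75952, 12.83),
    ("1:00 p.m.", 74220, 11.30),
    ("2:00 p.m.", 75856, 10.73),
    ("3:00 p.m.", 59037, 11.42),
    ("4:00 p.m.", 38204, 11.22),
    ("5:00 p.m.", 13715, 23.68),
]

# Assumption: use the lower bound of the recommended range as a cut-off.
LUX_THRESHOLD = 35_000


def mean_difference_by_light(rows, threshold=LUX_THRESHOLD):
    """Return (mean difference at or above threshold, mean difference below)."""
    bright = [diff for _, lux, diff in rows if lux >= threshold]
    dim = [diff for _, lux, diff in rows if lux < threshold]
    return sum(bright) / len(bright), sum(dim) / len(dim)


bright_mean, dim_mean = mean_difference_by_light(ROWS)
print(f"mean difference at or above {LUX_THRESHOLD} lx: {bright_mean:.2f}")
print(f"mean difference below {LUX_THRESHOLD} lx: {dim_mean:.2f}")
```

The two sets captured below the threshold (9:00 a.m. and 5:00 p.m.) show roughly twice the colour difference of the remaining seven, which is consistent with the recommendation to capture images between 10 a.m. and 4 p.m.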

| Colour Difference | Samsung S10 | Google Pixel 5 | Samsung S10 Location-Based | Google Pixel 5 Location-Based |
|---|---|---|---|---|
| CIE1976 | 50.22 | 32.73 | 32.76 | 19.68 |
| CIE2000 | 48.88 | 28.93 | 36.55 | 18.68 |
| AW-CIE1976 | 12.70 | 15.65 | 7.58 | 6.33 |
| AW-CIE2000 | 12.53 | 16.14 | 7.88 | 6.97 |

| Colour Difference | Samsung S10 Top 5 Prediction Accuracy (%) | Samsung S10 Location-Based Top 5 Prediction Accuracy (%) | Google Pixel 5 Top 5 Prediction Accuracy (%) | Google Pixel 5 Location-Based Top 5 Prediction Accuracy (%) |
|---|---|---|---|---|
| CIE1976 | 7.18 | 5.71 | 9.07 | 18.99 |
| CIE2000 | 6.32 | 4.96 | 12.19 | 24.54 |
| AW-CIE1976 | 51.74 | 66.81 | 34.13 | 73.70 |
| AW-CIE2000 | 52.19 | 69.75 | 34.40 | 71.51 |
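The percentages in the table above are top 5 prediction accuracies. As a minimal sketch of how such a figure is typically computed (assuming each sample yields a ranked list of candidate Munsell chips and has one known true chip), a sample counts as a hit when its true chip appears among the first k ranked candidates. The function name and the toy labels below are hypothetical.

```python
def top_k_accuracy(ranked_predictions, true_labels, k=5):
    """Percentage of samples whose true label is among the first k predictions.

    ranked_predictions: one list per sample, ordered from best to worst match.
    true_labels: the known label for each sample, in the same order.
    """
    if len(ranked_predictions) != len(true_labels) or not true_labels:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return 100.0 * hits / len(true_labels)


# Toy example with invented labels: 2 of 4 samples are hits at k=2.
predictions = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"], ["j", "k", "l"]]
truths = ["b", "x", "i", "j"]
print(top_k_accuracy(predictions, truths, k=2))
```

Because the metric only asks whether the true chip is somewhere in the shortlist, it rewards methods that narrow the candidate set well even when the single best guess is wrong.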


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nodi, S.S.; Paul, M.; Robinson, N.; Wang, L.; Rehman, S.u. Determination of Munsell Soil Colour Using Smartphones. Sensors 2023, 23, 3181. https://doi.org/10.3390/s23063181
