Unsupervised Depth Completion Guided by Visual Inertial System and Confidence

Abstract

1. Introduction

- (1) We design a tightly coupled network for depth completion and motion residual estimation that supports real-time dense depth prediction for robots in complex dynamic environments with modest computing resources.

- (2) Training our network requires no auxiliary information beyond the RGB images, pose information, and sparse depth maps provided by the visual-inertial system. In particular, it needs no semantic information, optical flow, or ground truth of any kind.

- (3)

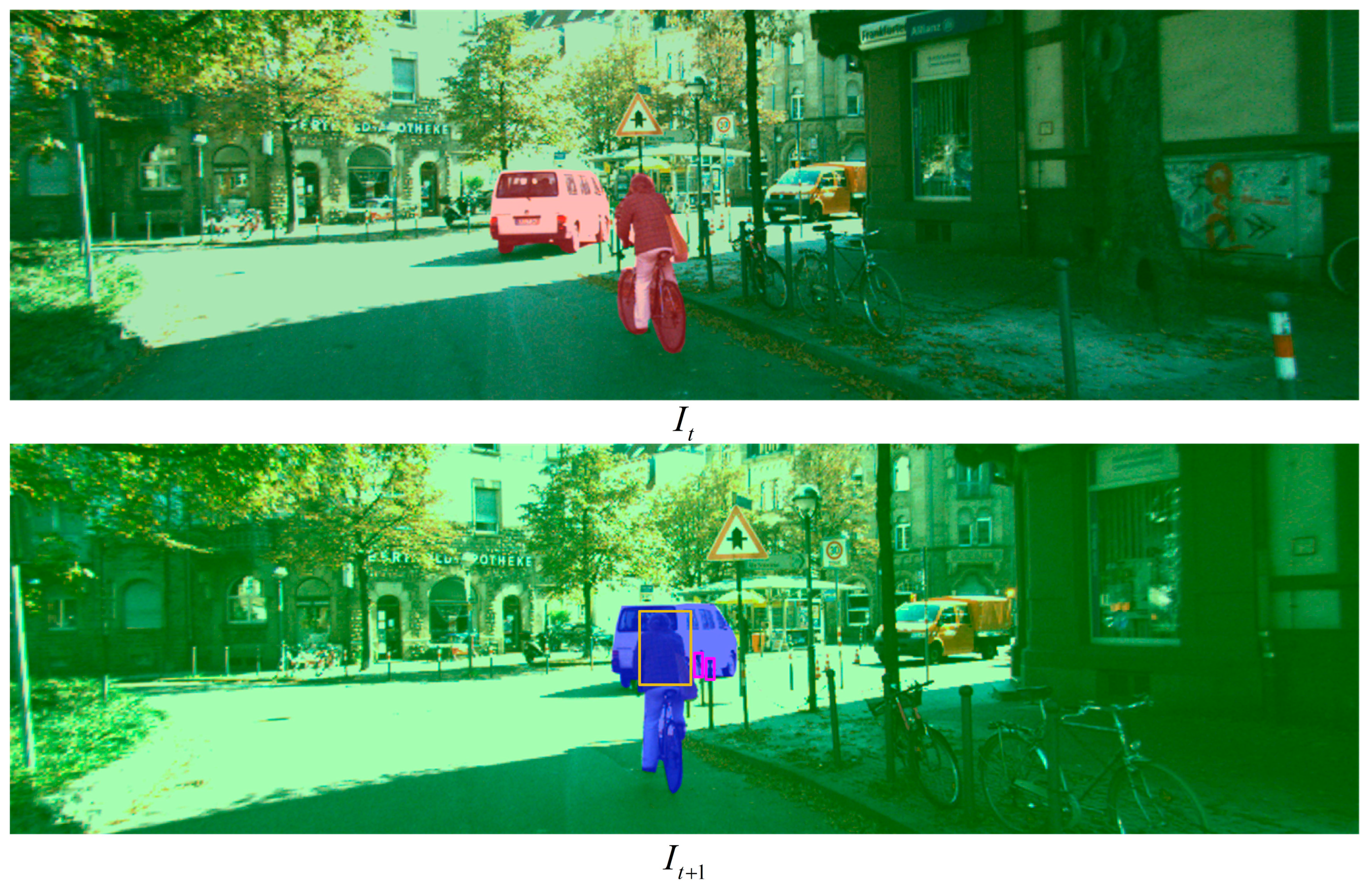

- We propose a method to classify the image into static, dynamic, and occluded regions and design the loss function of each region separately. This method can solve the problem of dynamic object motion and occlusion in our network training, and can improve the precision of network output dense depth.

- (4)

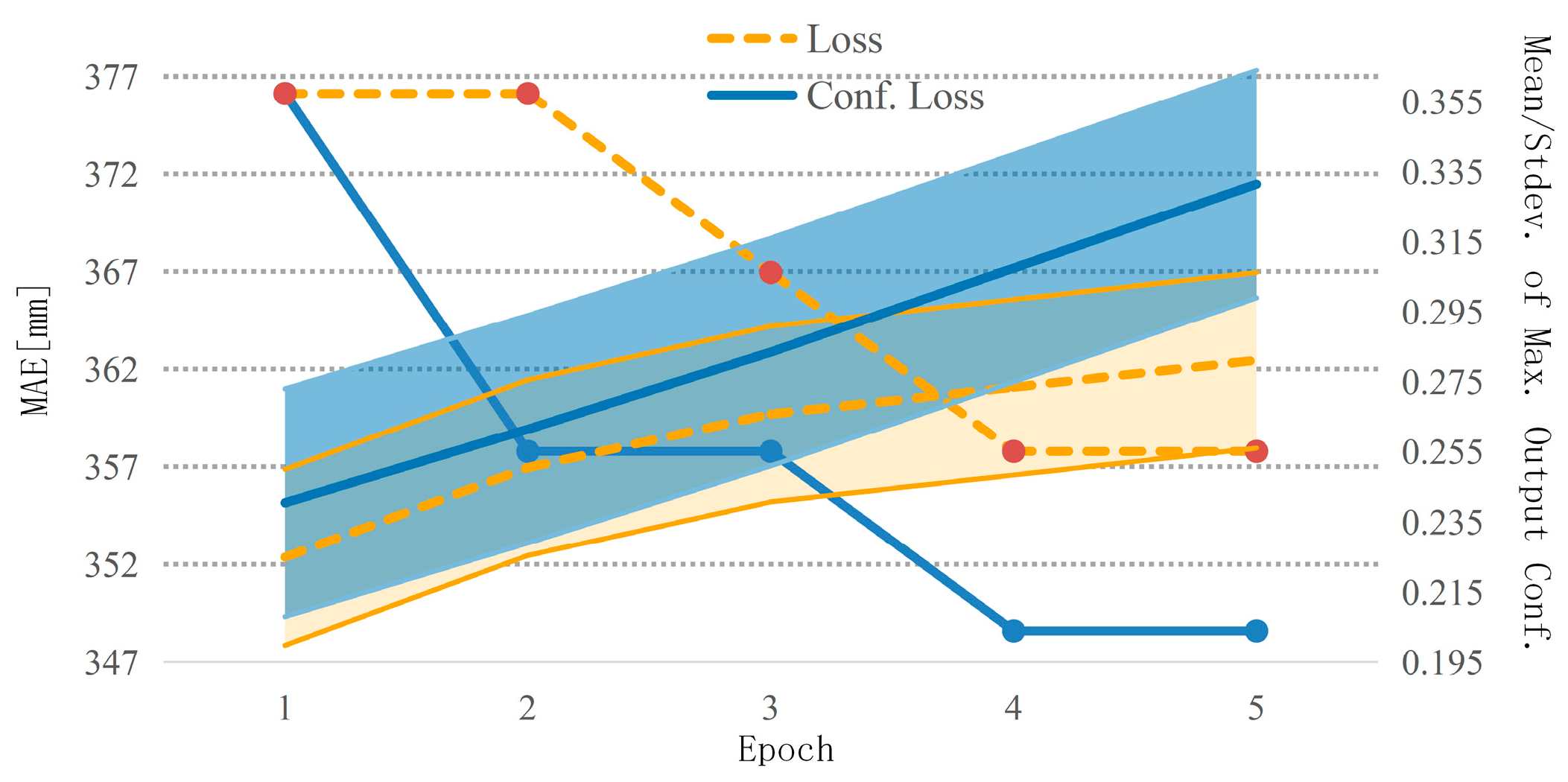

- In the KITTI depth completion dataset, the VIDO dataset, and the generalization in real dynamic scenes, our proposed method exceeds the accuracy achieved by the previous unsupervised depth completion work and requires only a small number of parameters. In addition, we also explicitly show the influence of confidence on the loss function, which can improve the data error until convergence.
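The region-wise loss and the confidence weighting named in contributions (3) and (4) can be illustrated with a small sketch. The loss formulas are not given in this part of the text, so the following is only one plausible reading and not the authors' formulation: a per-pixel error is selected according to the region label, occluded pixels are excluded, and a confidence map weights the remaining pixels. The function name `region_weighted_loss`, the label encoding, and the two input error maps are all hypothetical.

```python
import numpy as np

# Hypothetical label encoding for the three region types.
STATIC, DYNAMIC, OCCLUDED = 0, 1, 2


def region_weighted_loss(err_static, err_dynamic, labels, confidence, eps=1e-8):
    """Confidence-weighted mean of region-specific per-pixel errors.

    err_static  : (H, W) error map assumed valid in static regions
    err_dynamic : (H, W) error map assumed valid in dynamic regions
    labels      : (H, W) integer map with values STATIC, DYNAMIC, or OCCLUDED
    confidence  : (H, W) weights in [0, 1]
    """
    # Pick the error that matches each pixel's region; occluded pixels get 0.
    per_pixel = np.where(labels == STATIC, err_static, 0.0)
    per_pixel = np.where(labels == DYNAMIC, err_dynamic, per_pixel)

    # Occluded pixels carry zero weight, so they do not affect the loss.
    weights = confidence * (labels != OCCLUDED)
    return float((weights * per_pixel).sum() / (weights.sum() + eps))


labels = np.array([[STATIC, DYNAMIC], [OCCLUDED, STATIC]])
err_static = np.array([[1.0, 9.0], [9.0, 3.0]])
err_dynamic = np.array([[9.0, 2.0], [9.0, 9.0]])
confidence = np.array([[1.0, 0.5], [1.0, 1.0]])
loss = region_weighted_loss(err_static, err_dynamic, labels, confidence)
```

Normalizing by the sum of the weights keeps the loss scale comparable between images with different amounts of occlusion; this is a design choice of the sketch, not something stated in the text.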

2. Related Work

2.1. Supervised Depth Completion

2.2. Unsupervised Depth Completion

2.3. Depth and Motion

3. Methodology

3.1. VI-SLAM: Sparse Depth Map Generation

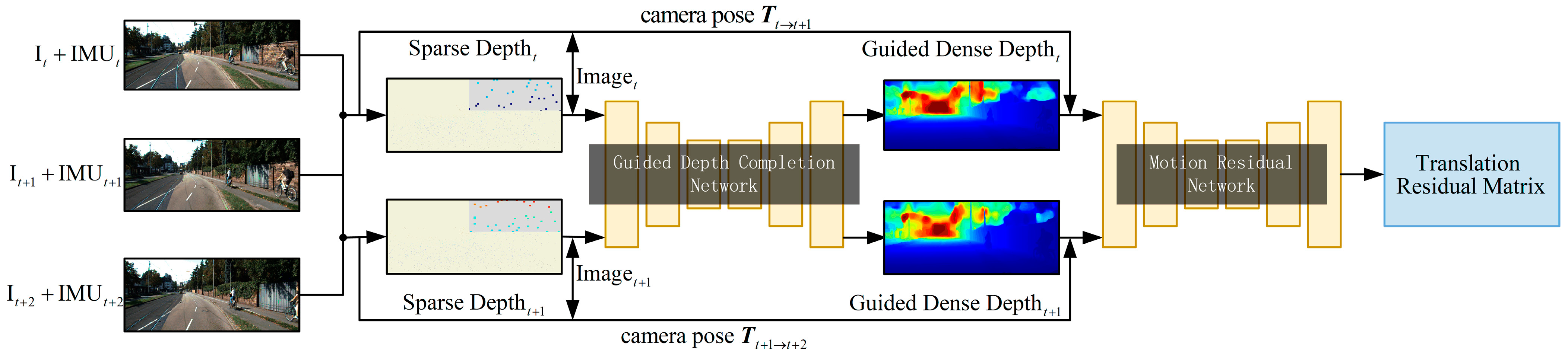

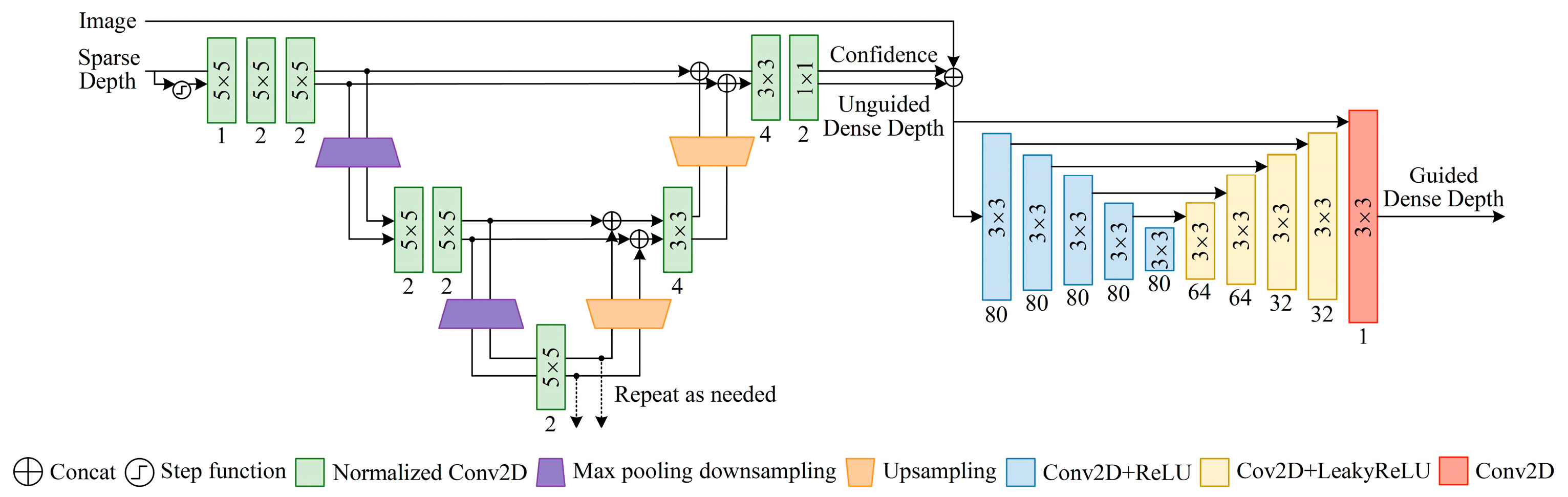

3.2. Depth and Motion Networks

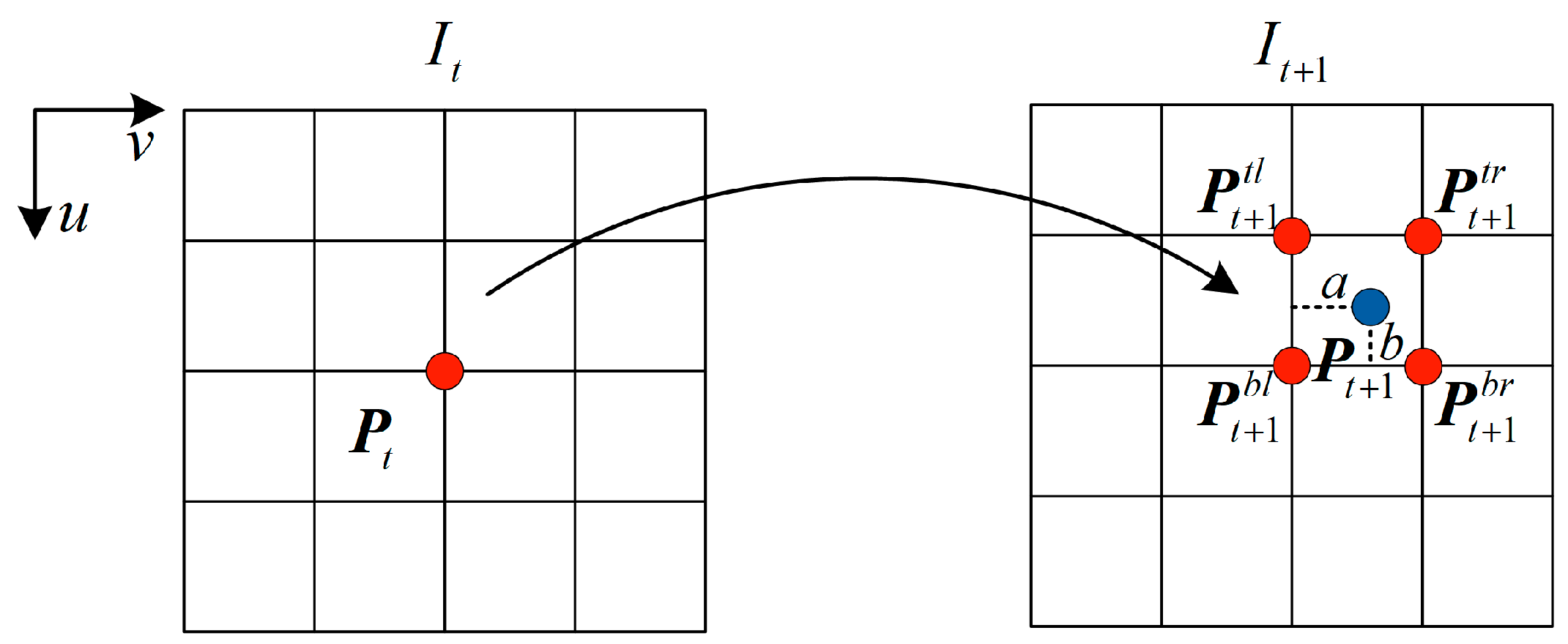

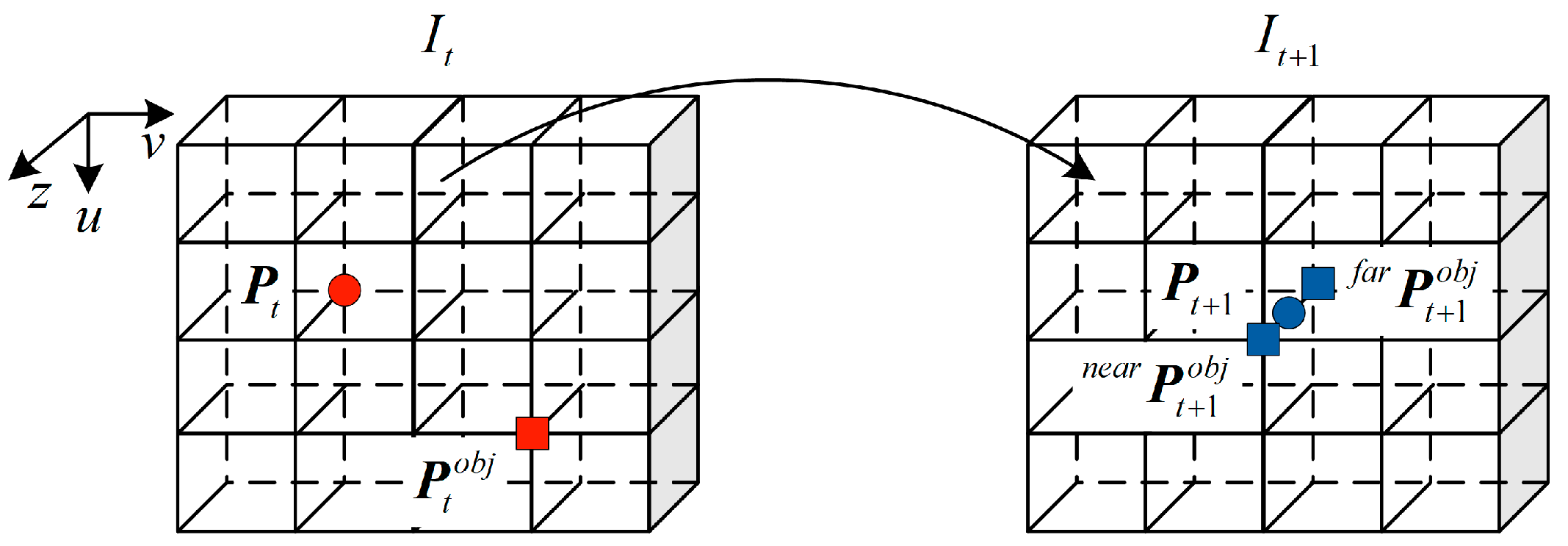

3.3. Losses

4. Experiments and Discussion

4.1. Datasets and Setup

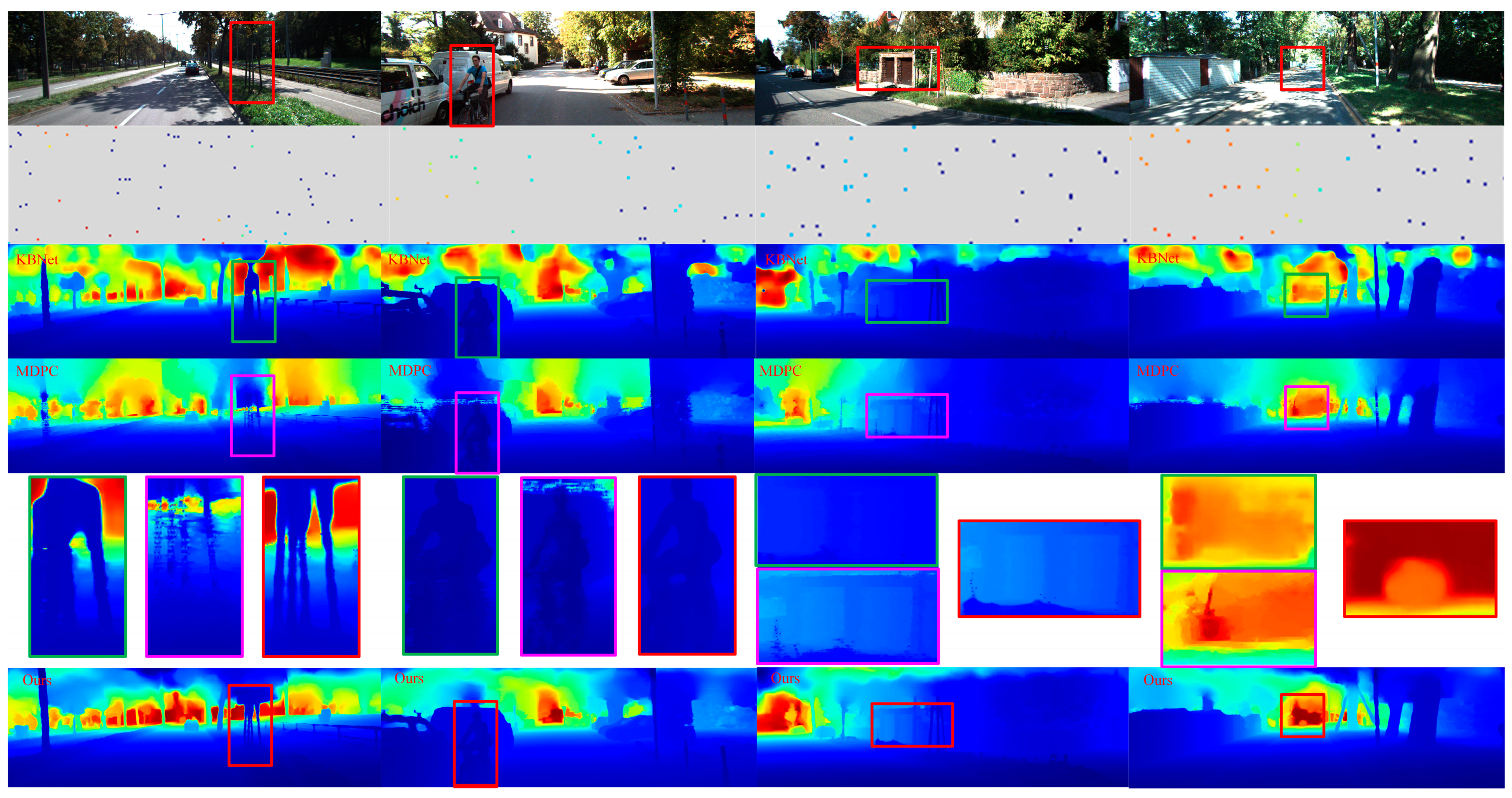

4.2. Comparison of the KITTI Depth Completion Dataset

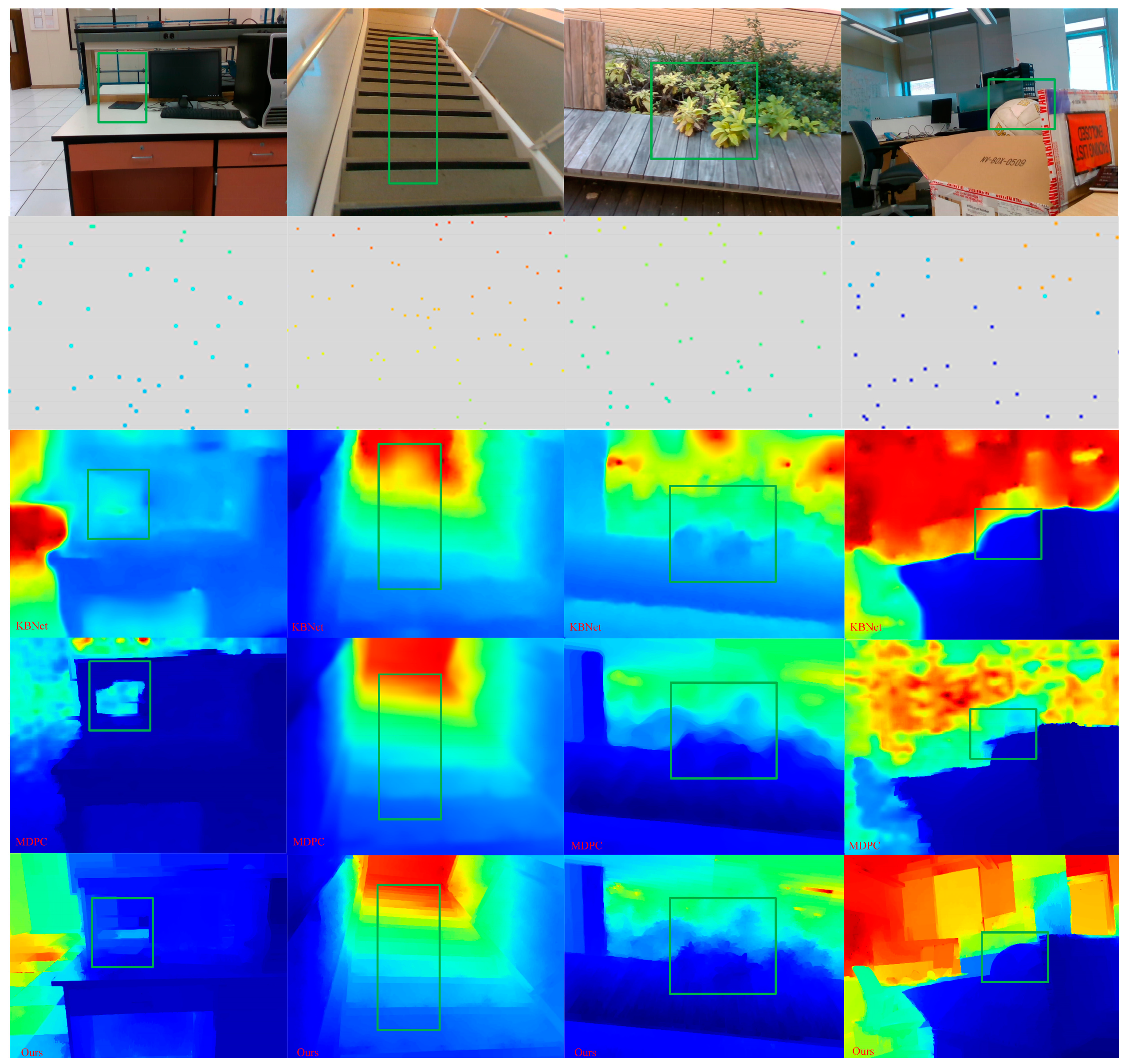

4.3. Comparison of the VOID Dataset

4.4. The Impact of the Proposed Losses

4.5. The Number of Parameters and Runtime Comparison

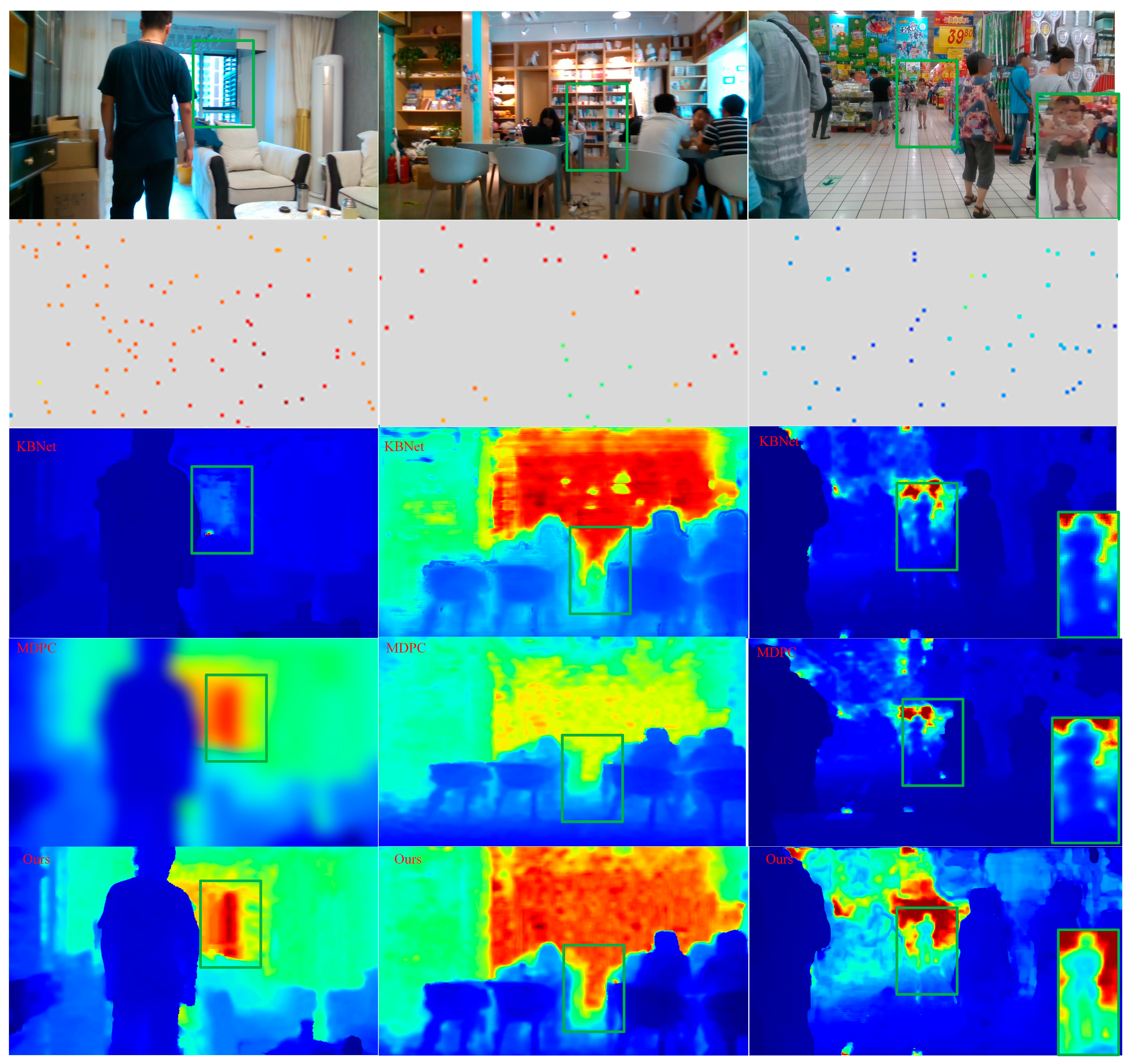

4.6. Generalization Capability on Real Scenes

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Hu, J.; Bao, C.; Ozay, M.; Fan, C.; Gao, Q.; Liu, H.; Lam, T.L. Deep Depth Completion from Extremely Sparse Data: A Survey. arXiv 2022, arXiv:2205.05335. Available online: https://arxiv.org/abs/2205.05335 (accessed on 13 November 2022).

- [2] Zhang, Y.; Wei, P.; Zheng, N. A multi-cue guidance network for depth completion. Neurocomputing 2021, 441, 291–299.

- [3] Sartipi, K.; Do, T.; Ke, T.; Vuong, K.; Roumeliotis, S.I. Deep Depth Estimation from Visual-Inertial SLAM. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 10038–10045.

- [4] Lin, Y.; Cheng, T.; Thong, Q.; Zhou, W.; Yang, H. Dynamic Spatial Propagation Network for Depth Completion. arXiv 2022, arXiv:2202.09769. Available online: https://arxiv.org/abs/2202.09769 (accessed on 25 December 2022).

- [5] Van Gansbeke, W.; Neven, D.; de Brabandere, B.; van Gool, L. Sparse and Noisy LiDAR Completion with RGB Guidance and Uncertainty. In Proceedings of the 2019 16th International Conference on Machine Vision Applications (MVA), Tokyo, Japan, 27–31 May 2019; pp. 1–6.

- [6] Zuo, X.; Merrill, N.; Li, W.; Liu, Y.; Pollefeys, M.; Huang, G. CodeVIO: Visual-Inertial Odometry with Learned Optimizable Dense Depth. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 14382–14388.

- [7] Wang, X.; Zhang, R.; Shen, C.; Kong, T.; Li, L. Dense Contrastive Learning for Self-Supervised Visual Pre-Training. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 3023–3032.

- [8] Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion from Video. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6612–6619.

- [9] Wong, A.; Soatto, S. Unsupervised Depth Completion with Calibrated Backprojection Layers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 12727–12736.

- [10] Zhang, Q.; Chen, X.; Wang, X.; Han, J.; Zhang, Y.; Yue, J. Self-Supervised Depth Completion Based on Multi-Modal Spatio-Temporal Consistency. Remote Sens. 2023, 15, 135.

- [11] Nekrasov, V.; Dharmasiri, T.; Spek, A.; Drummond, T.; Shen, C.; Reid, I. Real-Time Joint Semantic Segmentation and Depth Estimation Using Asymmetric Annotations. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7101–7107.

- [12] Park, J.; Joo, K.; Hu, Z.; Liu, C.; Kweon, I.S. Non-local Spatial Propagation Network for Depth Completion. In 2020 European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2020; pp. 120–136.

- [13] Jaritz, M.; Charette, R.D.; Wirbel, E.; Perrotton, X.; Nashashibi, F. Sparse and Dense Data with CNNs: Depth Completion and Semantic Segmentation. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 52–60.

- [14] Eldesokey, A.; Felsberg, M.; Khan, F.S. Confidence Propagation through CNNs for Guided Sparse Depth Regression. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2423–2436.

- [15] Teixeira, L.; Oswald, M.R.; Pollefeys, M.; Chli, M. Aerial Single-View Depth Completion With Image-Guided Uncertainty Estimation. IEEE Robot. Autom. Lett. 2020, 5, 1055–1062.

- [16] Nazir, D.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. SemAttNet: Toward Attention-Based Semantic Aware Guided Depth Completion. IEEE Access 2022, 10, 120781–120791.

- [17] Jeong, Y.; Park, J.; Cho, D.; Hwang, Y.; Choi, S.B.; Kweon, I.S. Lightweight Depth Completion Network with Local Similarity-Preserving Knowledge Distillation. Sensors 2022, 22, 7388.

- [18] Ma, F.; Cavalheiro, G.V.; Karaman, S. Self-Supervised Sparse-to-Dense: Self-Supervised Depth Completion from LiDAR and Monocular Camera. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3288–3295.

- [19] Yang, Y.; Wong, A.; Soatto, S. Dense Depth Posterior (DDP) From Single Image and Sparse Range. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2019; pp. 3348–3357.

- [20] Wong, A.; Fei, X.; Tsuei, S.; Soatto, S. Unsupervised Depth Completion From Visual Inertial Odometry. IEEE Robot. Autom. Lett. 2020, 5, 1899–1906.

- [21] Moreau, A.; Mancas, M.; Dutoit, T. Unsupervised Depth Prediction from Monocular Sequences: Improving Performances Through Instance Segmentation. In Proceedings of the 2020 17th Conference on Computer and Robot Vision (CRV), Ottawa, ON, Canada, 13–15 May 2020; pp. 54–61.

- [22] Wang, G.; Zhang, C.; Wang, H.; Wang, J.; Wang, Y.; Wang, X. Unsupervised Learning of Depth, Optical Flow and Pose With Occlusion From 3D Geometry. IEEE Trans. Intell. Transp. Syst. 2022, 23, 308–320.

- [23] Lu, Y.; Sarkis, M.; Lu, G. Multi-Task Learning for Single Image Depth Estimation and Segmentation Based on Unsupervised Network. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10788–10794.

- [24] Li, H.; Gordon, A.; Zhao, H.; Casser, V.; Angelova, A. Unsupervised Monocular Depth Learning in Dynamic Scenes. arXiv 2020, arXiv:2010.16404, 1908–1917.

- [25] Zhang, H.; Huo, J.; Sun, W.; Xue, M.; Zhou, J. A Static Feature Point Extraction Algorithm for Visual-Inertial SLAM. In Proceedings of the 2022 Chinese Automation Congress (CAC), Xiamen, China, 25–27 November 2022; pp. 1198–1203.

- [26] Knutsson, H.; Westin, C.-F. Normalized and differential convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 15–17 June 1993; pp. 515–523.

- [27] Westelius, C.-J. Focus of Attention and Gaze Control for Robot Vision. Ph.D. Dissertation, Department of Electrical Engineering, Linköping University, Linköping, Sweden, 1995. No. 379. p. 185.

- [28] Gribbon, K.T.; Bailey, D.G. A novel approach to real-time bilinear interpolation. In Proceedings of the DELTA 2004: Second IEEE International Workshop on Electronic Design, Test and Applications, Perth, WA, Australia, 28–30 January 2004; pp. 126–131.

- [29] Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- [30] Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity Invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

- [31] She, Q.; Feng, F.; Hao, X.; Yang, Q.; Lan, C.; Lomonaco, V.; Shi, X.; Wang, Z.; Guo, Y.; Zhang, Y.; et al. OpenLORIS-Object: A Robotic Vision Dataset and Benchmark for Lifelong Deep Learning. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4767–4773.

- [32] Liu, T.; Agrawal, P.; Chen, A.; Hong, B.; Wong, A. Monitored Distillation for Positive Congruent Depth Completion. In 2022 European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2022; pp. 35–53.

- [33] Liu, L.; Song, X.; Sun, J.; Lyu, X.; Li, L.; Liu, Y.; Zhang, L. MFF-Net: Towards Efficient Monocular Depth Completion With Multi-Modal Feature Fusion. IEEE Robot. Autom. Lett. 2023, 8, 920–927.

| Metric | Units | Definition |
|---|---|---|
| MAE | | |
| RMSE | | |
| iMAE | | |
| iRMSE | | |
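The four metrics can be computed as in the minimal sketch below. It assumes the conventions of the KITTI depth completion benchmark, which are not stated in the table itself: MAE and RMSE are reported in millimetres, iMAE and iRMSE are errors on inverse depth in 1/km, and only pixels with ground truth greater than zero are evaluated. The function name `depth_metrics` and the choice of metres as the input unit are illustrative assumptions.

```python
import numpy as np


def depth_metrics(pred_m, gt_m):
    """Return MAE/RMSE in mm and iMAE/iRMSE in 1/km for depths given in metres.

    Assumes every evaluated prediction is strictly positive, so that the
    inverse depth is well defined.
    """
    valid = gt_m > 0                      # pixels that have ground truth
    pred, gt = pred_m[valid], gt_m[valid]

    err_mm = (pred - gt) * 1000.0         # metres -> millimetres
    inv_err_km = (1.0 / pred - 1.0 / gt) * 1000.0  # 1/m -> 1/km

    return {
        "MAE": float(np.mean(np.abs(err_mm))),
        "RMSE": float(np.sqrt(np.mean(err_mm ** 2))),
        "iMAE": float(np.mean(np.abs(inv_err_km))),
        "iRMSE": float(np.sqrt(np.mean(inv_err_km ** 2))),
    }


pred = np.array([[2.0, 4.0], [1.0, 5.0]])
gt = np.array([[2.0, 5.0], [0.0, 4.0]])   # 0 marks a pixel without ground truth
metrics = depth_metrics(pred, gt)
```

In this toy example three pixels are evaluated, with depth errors of 0, -1, and 1 m, so the MAE is 2000/3 mm.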

| Method | Supervised | MAE | RMSE | iMAE | iRMSE |
|---|---|---|---|---|---|
| Ma [18] | No | 350.32 | 2312.57 | 2.05 | 7.38 |
| Yang [19] | No | 343.46 | 1299.85 | 1.57 | 4.07 |
| Wong [20] | No | 299.41 | 1169.97 | 1.20 | 3.56 |
| Wong [9] | No | 256.76 | 1069.47 | 1.02 | 2.95 |
| Liu [32] | No | 218.60 | 785.06 | 0.92 | 2.11 |
| Eldesokey [14] | Yes | 233.26 | 829.98 | 1.03 | 2.60 |
| Teixeira [15] | Yes | 218.57 | 737.49 | 0.97 | 2.31 |
| Park [12] | Yes | 199.59 | 741.68 | 0.84 | 1.99 |
| Liu [33] | Yes | 210.55 | 719.98 | 0.94 | 2.21 |
| Ours | No | 214.38 | 771.23 | 0.89 | 2.03 |

| Method | Density | MAE | RMSE | iMAE | iRMSE |
|---|---|---|---|---|---|
| Ours | 0.5% | 214.38 | 771.23 | 0.89 | 2.03 |
| Ours | 0.15% | 362.91 | 1358.14 | 1.65 | 3.87 |
| Ours | 0.05% | 420.73 | 1536.49 | 1.90 | 4.35 |
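To make the density column concrete, the sketch below thins a sparse depth map to a target density, taking density to mean the fraction of image pixels that carry a depth value. Both that reading and the random-subsampling procedure are assumptions for illustration; how the lower-density inputs were produced is not described here. The function name `subsample_to_density` is hypothetical.

```python
import numpy as np


def subsample_to_density(sparse_depth, density, rng):
    """Keep a random subset of valid depth points so that roughly
    `density` (a fraction, e.g. 0.005 for 0.5%) of all pixels remain valid."""
    h, w = sparse_depth.shape
    target = int(round(density * h * w))           # desired number of points

    valid_idx = np.flatnonzero(sparse_depth > 0)   # flat indices of valid points
    n_keep = min(target, valid_idx.size)           # cannot keep more than exist
    keep = rng.choice(valid_idx, size=n_keep, replace=False)

    out = np.zeros_like(sparse_depth)
    out.flat[keep] = sparse_depth.flat[keep]       # copy only the kept points
    return out


rng = np.random.default_rng(0)
dense = np.full((100, 100), 5.0)                   # toy map: every pixel valid
thinned = subsample_to_density(dense, 0.005, rng)  # 0.5% of 10,000 pixels
```

If the input already has fewer valid points than the target, all of them are kept, so the function never invents depth values.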

| Method | Supervised | MAE | RMSE | iMAE | iRMSE |
|---|---|---|---|---|---|
| Ma [18] | No | 198.76 | 260.67 | 88.07 | 114.96 |
| Yang [19] | No | 151.86 | 222.36 | 74.59 | 112.36 |
| Wong [20] | No | 73.14 | 146.40 | 42.55 | 93.16 |
| Wong [9] | No | 39.80 | 95.86 | 21.16 | 49.72 |
| Liu [32] | No | 36.42 | 87.78 | 19.18 | 43.83 |
| Park [12] | Yes | 26.74 | 79.12 | 12.70 | 33.88 |
| Ours | No | 30.51 | 83.49 | 14.27 | 36.41 |

| Method | Density | MAE | RMSE | iMAE | iRMSE |
|---|---|---|---|---|---|
| Ours | 0.5% | 30.15 | 83.49 | 14.27 | 36.41 |
| Ours | 0.15% | 65.41 | 153.90 | 40.75 | 94.17 |
| Ours | 0.05% | 76.92 | 174.26 | 51.84 | 105.03 |

| Methods | Losses | Confidence | MAE |
|---|---|---|---|
| Ours | | No | 305.74 |
| Ours | | No | 330.56 |
| Ours | | No | 238.12 |
| Ours | | Yes | 227.99 |
| Ours | | No | 231.05 |
| Ours | | Yes | 223.47 |
| Ours | | No | 226.34 |
| Ours | | Yes | 218.02 |
| Ours | | No | 226.51 |
| Ours | | Yes | 214.38 |
| Ours | | No | 224.78 |
| Ours | | Yes | 217.91 |
| Ours | | No | 230.43 |
| Ours | | Yes | 222.65 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, H.; Huo, J. Unsupervised Depth Completion Guided by Visual Inertial System and Confidence. Sensors 2023, 23, 3430. https://doi.org/10.3390/s23073430