Head-Mounted Projector for Manual Precision Tasks: Performance Assessment

Abstract

1. Introduction

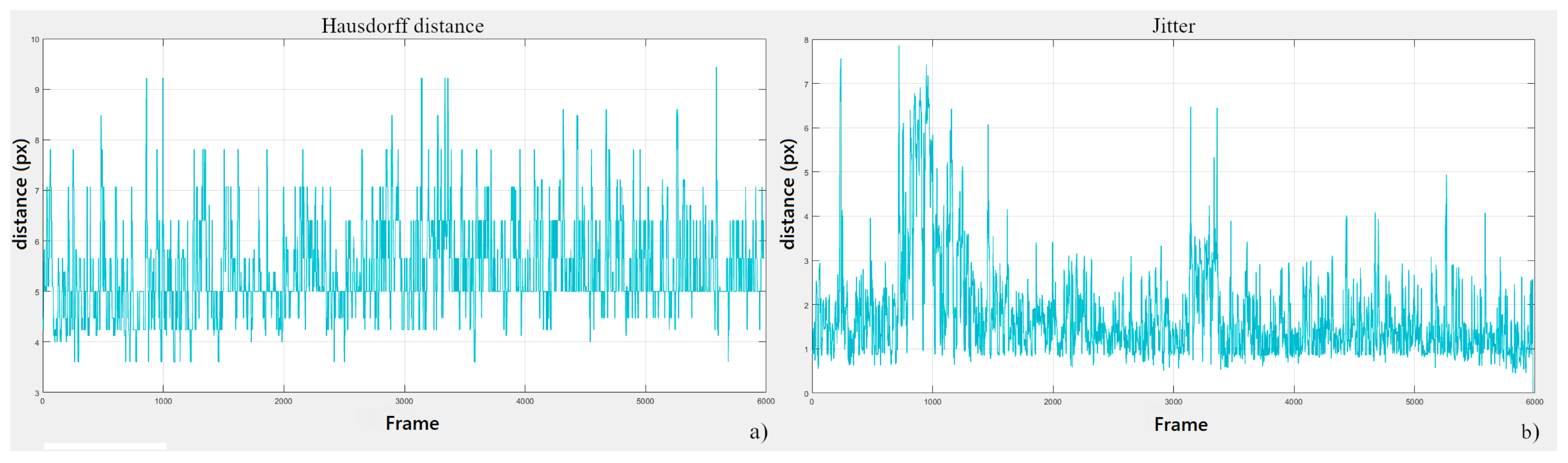

- Measure the temporal registration error of the HMP in terms of motion-to-photon (MTP) latency.

- Measure the static spatial registration error, i.e., the accuracy with which virtual content is placed, by evaluating the distance between the actual position of the virtual content and the position required for perfect alignment under static conditions.

- Measure how these two errors combine when a user wears the device and uses it to augment a tracked structure in real time.
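To make these objectives concrete, the following is a minimal sketch, not the authors' procedure, of how a static registration error and a latency-induced error could be computed and combined. It assumes hypothetical inputs: paired 2D positions (in mm on the projection surface) of projected and desired points, a constant relative speed between the head and the target, and a worst-case additive combination, which by the triangle inequality is an upper bound on the total offset. All function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def static_registration_error(projected: Sequence[Point], desired: Sequence[Point]) -> float:
    """Mean Euclidean distance between where virtual content lands and where it should land."""
    if len(projected) != len(desired) or not projected:
        raise ValueError("need the same, non-zero number of projected and desired points")
    return sum(math.dist(p, d) for p, d in zip(projected, desired)) / len(projected)


def latency_induced_error(speed_mm_per_s: float, mtp_latency_ms: float) -> float:
    """Distance the target moves while a frame is in flight: speed * latency."""
    return speed_mm_per_s * mtp_latency_ms / 1000.0


def worst_case_dynamic_error(static_mm: float, speed_mm_per_s: float, mtp_latency_ms: float) -> float:
    # Hypothetical worst case: the lag points the same way as the static offset,
    # so the magnitudes add (triangle inequality gives this as an upper bound).
    return static_mm + latency_induced_error(speed_mm_per_s, mtp_latency_ms)


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    projected = [(0.0, 0.0), (10.3, 0.0), (0.0, 9.6)]
    desired = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    e_static = static_registration_error(projected, desired)
    print(f"static error: {e_static:.3f} mm")
    print(f"worst case at 100 mm/s and 20 ms: {worst_case_dynamic_error(e_static, 100.0, 20.0):.3f} mm")
```

The sketch makes explicit why both measurements are needed: even a perfectly calibrated device accumulates an offset proportional to head or target speed for as long as each frame takes to reach the surface.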

2. Materials and Methods

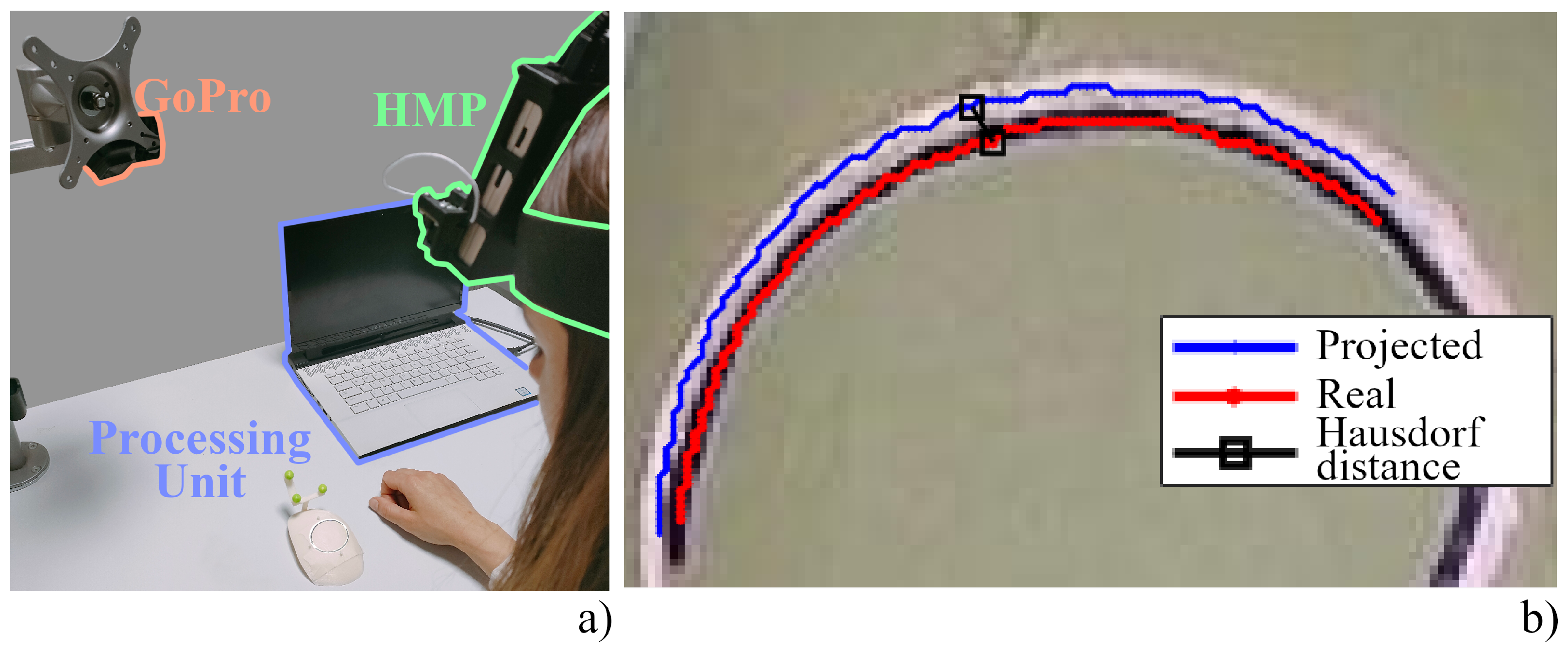

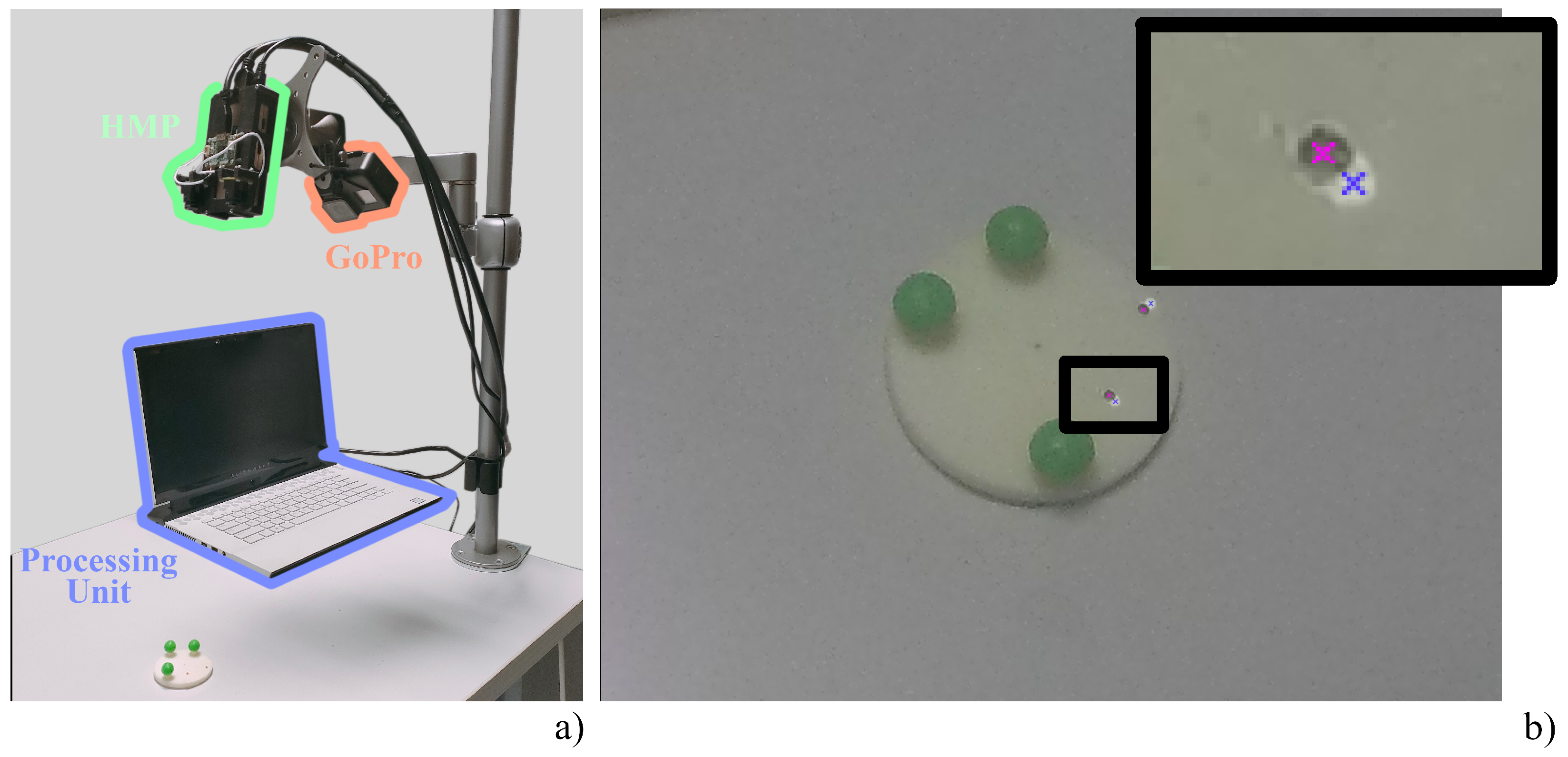

2.1. The Custom-Made Head-Mounted Projector

2.2. Augmented Reality Software Framework

- The software, originally conceived for deploying AR applications on video see-through (VST) and optical see-through (OST) displays, also supports the deployment of AR applications on different types of AR projectors. Its non-distributed architecture also makes it compatible with embedded computing units.

- The software framework is built on Nvidia's Compute Unified Device Architecture (CUDA) to exploit parallel computing on the multi-core GPU.

- The software supports in situ visualization of 3D structures through the open-source VTK library for 3D computer graphics, modeling, and volume rendering.

- The software framework is highly configurable in terms of rendering and tracking capabilities.

- The software features a robust inside-out, video-based tracking algorithm built on the OpenCV 3.4.1 API.
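The list above names the building blocks but not the geometry they compute. As a hedged sketch, assuming the projector is modeled as an inverse pinhole camera without lens distortion (a common projector-camera model, not confirmed here as the framework's own), a point on the tracked structure is mapped to a projector pixel in three steps: the pose estimated by the inside-out tracker takes it into the tracking-camera frame, a fixed camera-to-projector calibration takes it into the projector frame, and the projector intrinsics map it to a pixel. The function names, the 30 mm camera-projector offset, and the intrinsic values are hypothetical placeholders; in an OpenCV-based pipeline the pose would typically come from `cv2.solvePnP`, and `cv2.projectPoints` performs the equivalent projection including distortion.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]
Pose = Tuple[Mat3, Vec3]  # (rotation R, translation t), applied as x' = R x + t
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """3x3 matrix times 3-vector."""
    x, y, z = (m[r][0] * v[0] + m[r][1] * v[1] + m[r][2] * v[2] for r in range(3))
    return (x, y, z)


def rigid_transform(rotation: Mat3, translation: Vec3, point: Vec3) -> Vec3:
    """Apply x' = R x + t."""
    rx = mat_vec(rotation, point)
    return (rx[0] + translation[0], rx[1] + translation[1], rx[2] + translation[2])


def project_pinhole(fx: float, fy: float, cx: float, cy: float, p: Vec3) -> Tuple[float, float]:
    """Ideal pinhole projection (no lens distortion) to pixel coordinates."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the projector")
    return (fx * x / z + cx, fy * y / z + cy)


def projector_pixel_for_model_point(point_model: Vec3, pose_cam: Pose,
                                    extr_proj: Pose, intr_proj: Intrinsics) -> Tuple[float, float]:
    # 1) model frame -> tracking-camera frame, using the pose from the tracker
    p_cam = rigid_transform(*pose_cam, point_model)
    # 2) camera frame -> projector frame, using the fixed extrinsic calibration
    p_proj = rigid_transform(*extr_proj, p_cam)
    # 3) projector frame -> projector pixel, using the projector intrinsics
    return project_pinhole(*intr_proj, p_proj)


if __name__ == "__main__":
    I3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    pose = (I3, (0.0, 0.0, 475.0))       # hypothetical: target 475 mm in front of the camera
    extrinsics = (I3, (-30.0, 0.0, 0.0))  # hypothetical 30 mm camera-projector offset
    intrinsics = (1400.0, 1400.0, 640.0, 360.0)  # hypothetical projector intrinsics
    print(projector_pixel_for_model_point((0.0, 0.0, 0.0), pose, extrinsics, intrinsics))
```

Pure Python is used only to keep the sketch dependency-free; a practical implementation would rely on NumPy or on the OpenCV calls named above.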

2.3. Experimental Protocol

2.3.1. Spatial Registration

2.3.2. Temporal Registration

2.3.3. User Test

3. Results and Discussion

3.1. Tests Results

3.2. Ergonomics Considerations for Future Development

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| HMD | Head-mounted display |
| AR | Augmented reality |
| HMP | Head-mounted projector |
| VST | Video see-through |
| OST | Optical see-through |


| Setting | Test | Resolution (px) | Frame Rate | Angular Resolution | Working Depth |
|---|---|---|---|---|---|
| 1 | Spatial registration | 3000 × 4000 | - | 0.40 arcmin/px | 350–600 mm |
| 2 | Temporal registration | 1280 × 720 | 240 fps | 1.23 arcmin/px | 475 mm |
| 3 | User test | 1280 × 720 | 240 fps | 1.23 arcmin/px | 475 mm |
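As a worked reading of these settings (our own arithmetic, using the small-angle approximation and assuming the target surface is roughly perpendicular to the camera's line of sight), the angular resolution per pixel and the working depth give the size of one camera pixel on the target:

$$
s = d\,\tan\theta_{px} \approx d\,\theta_{px}, \qquad \theta_{px}\,[\mathrm{rad}] = \theta_{px}\,[\mathrm{arcmin}] \cdot \frac{\pi}{10800}
$$

$$
\text{Settings 2 and 3:}\quad s \approx 475\ \mathrm{mm} \times 1.23 \times \frac{\pi}{10800} \approx 0.17\ \mathrm{mm/px}
$$

$$
\text{Setting 1:}\quad s \approx (350 \text{ to } 600)\ \mathrm{mm} \times 0.40 \times \frac{\pi}{10800} \approx 0.041 \text{ to } 0.070\ \mathrm{mm/px}
$$

The higher-resolution Setting 1 therefore resolves spatial offsets roughly three to four times finer than the 240 fps settings used for the dynamic tests.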

| Setting | Test | On-Image Registration Error (px) | Visual-Angle Registration Error (arcmin) | Absolute Registration Error (mm) |
|---|---|---|---|---|
| 1 | Spatial registration | | | |
| 3 | User test | | | |
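The three error columns are linked through the angular resolution and working depth listed for each setting. A minimal sketch of the conversion, assuming a uniform angular resolution across the image and a target plane at the stated depth roughly perpendicular to the line of sight (function names are hypothetical, and the example input is illustrative, not a measured value):

```python
import math

ARCMIN_PER_RAD = 180.0 * 60.0 / math.pi  # about 3437.75 arcmin per radian


def px_to_arcmin(error_px: float, arcmin_per_px: float) -> float:
    """On-image error (px) -> visual-angle error (arcmin), assuming uniform angular resolution."""
    return error_px * arcmin_per_px


def arcmin_to_mm(error_arcmin: float, depth_mm: float) -> float:
    """Visual-angle error (arcmin) -> absolute error (mm) on a plane at depth_mm facing the camera."""
    return depth_mm * math.tan(error_arcmin / ARCMIN_PER_RAD)


if __name__ == "__main__":
    # Hypothetical 2 px error under Setting 2 or 3 (1.23 arcmin/px at 475 mm).
    angle = px_to_arcmin(2.0, 1.23)
    print(f"{angle:.2f} arcmin -> {arcmin_to_mm(angle, 475.0):.3f} mm")
```

The exact `tan` is kept instead of the small-angle form so the same function stays valid for larger angular errors.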

| Setting | Test | Motion-to-Photon Latency (ms) |
|---|---|---|
| 2 | Temporal registration | |
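The measurement procedure is not described in this section. One common approach, sketched here as an assumption rather than the authors' procedure, records both the physical motion and the projected content with a high-speed camera (the settings table lists 240 fps) and counts the frames between the onset of motion and the onset of the corresponding change in the projection. The frame period, about 4.17 ms at 240 fps, then bounds the resolution of each latency sample. Function names and frame indices are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def latency_ms(motion_frame: int, photon_frame: int, fps: float) -> float:
    """Latency of one event from the frame where motion starts and the frame where the projection responds."""
    if photon_frame < motion_frame:
        raise ValueError("projected content cannot respond before the motion starts")
    return (photon_frame - motion_frame) * 1000.0 / fps


def summarize(events: Sequence[Tuple[int, int]], fps: float = 240.0) -> Tuple[float, float, float]:
    """Return (mean latency, standard deviation, frame quantization), all in ms, over repeated events."""
    values: List[float] = [latency_ms(m, p, fps) for m, p in events]
    spread = stdev(values) if len(values) > 1 else 0.0
    return mean(values), spread, 1000.0 / fps


if __name__ == "__main__":
    # Hypothetical (motion onset, photon onset) frame pairs from a 240 fps video.
    print(summarize([(100, 112), (400, 413), (700, 711)]))
```

Averaging many events matters because each individual sample is quantized to whole frames, so a single measurement can be off by up to one frame period.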

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mamone, V.; Ferrari, V.; D’Amato, R.; Condino, S.; Cattari, N.; Cutolo, F. Head-Mounted Projector for Manual Precision Tasks: Performance Assessment. Sensors 2023, 23, 3494. https://doi.org/10.3390/s23073494
