ROS-Based Autonomous Navigation Robot Platform with Stepping Motor

Abstract

1. Introduction

2. Previous Work

3. Proposed Robot Architecture

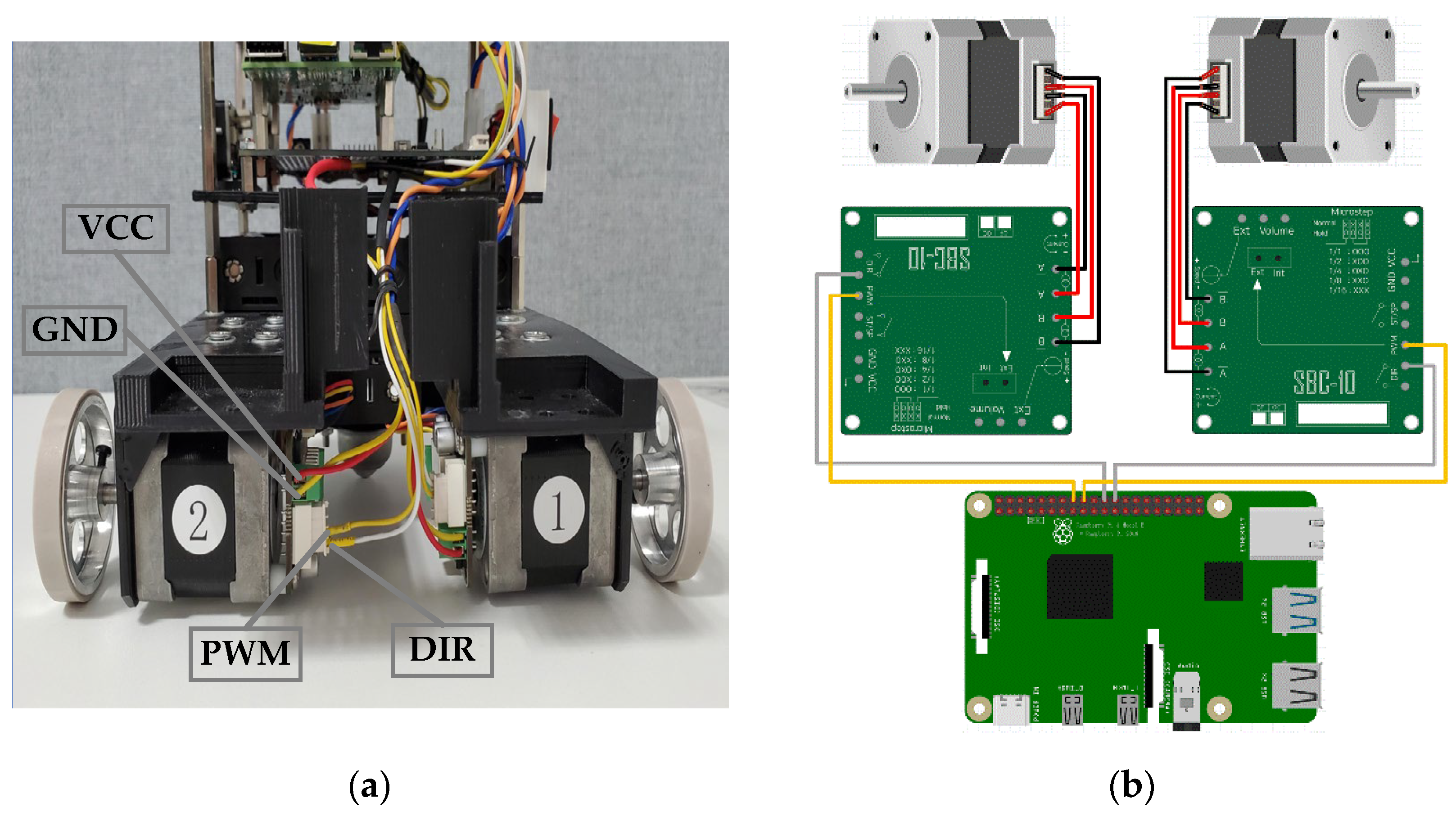

3.1. Hardware

3.2. Software

4. Proposed Stepping Motor Control Algorithm

- Step 1: Initialization and variable declaration. A Raspberry Pi 4B serves as the control board for the stepping motors. After the Raspberry Pi is connected to the stepping motors, the WiringPi interface is initialized, and initial values are assigned to the relevant parameters of the left and right motors and wheels.

- Step 2: Subscribe to the ROS topic /cmd_vel. Obtain the linear velocity (Vx) and angular velocity (Vθ) that the robot needs to achieve.

- Step 3: Calculate the motor velocity and direction. Convert the speed command (/cmd_vel) into the left and right wheel velocities. Use the judgement condition to decide whether each motor rotates clockwise or counterclockwise; a value of 1 represents clockwise rotation.

- Step 4: Calculate the rotation speeds of the left and right motors. Because speed commands arrive at 1 Hz, this study takes the instantaneous rotation speed of each wheel in revolutions per second. The rotation-speed formula shown in Figure 7 converts the wheel speed into the motor rotation speed.

- Step 5: Calculate the number of pulses for the left and right motors. The previous step gives the required rotation speeds of the left and right motors; from these, calculate the number of pulses each motor needs to reach its specified rotation speed.

- Step 6: Determine whether the robot is moving for the first time. For the first move, the robot must calculate and output the coefficients of polynomial equations. If the robot has already started moving, the polynomial equation does not need to be recalculated.

- Step 7: Solve the polynomial regression equation to obtain the fitting curve, that is, a polynomial equation relating the wheel speed (Wv) to the PWM time delay (Md).

  - Step 7.1: Calculate the rotation speed and number of pulses. Unlike Steps 4 and 5, only the speed of one wheel and the number of pulses it requires must be considered in this step. Notably, in the online phase, only the directions of rotation of the left and right wheels are different.

  - Step 7.2: Consider different t values to calculate the time delay (Md). According to the actual motor test, the clock frequency of the Raspberry Pi indirectly affects the pulse frequency. In other words, the generation time of each pulse is not fixed. The delay is therefore calculated for different values of t within a range of 10–100 ms, in intervals of 10 ms. To improve the robustness of the dataset, t is randomly generated ten times within the range, and Md is calculated for each value.

  - Step 7.3: Output the polynomial coefficients (α, β, γ, δ). Build data lists A and B for the wheel speed (Wv) and time delay (Md), respectively, and perform polynomial equation fitting. In this process, Wv and Md are the input and output values, respectively. Obtain the polynomial coefficients.

- Step 8: Calculate the time delay according to the polynomial regression formula.

- Step 9: Drive the stepping motors. Using the calculated parameter values, the Raspberry Pi board generates software PWM to drive the left and right stepping motors simultaneously so that Owlbot reaches the specified speed.
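Steps 2 to 5 can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes the standard differential-drive relations for splitting (Vx, Vθ) between the wheels, and the geometry constants, the microstepping setting, the mirror-image motor mounting, and all function names are hypothetical placeholders.

```python
import math
from dataclasses import dataclass

# Hypothetical geometry and driver settings; replace with measured values.
WHEEL_SPACING_M = 0.20   # spacing between the two wheels
WHEEL_RADIUS_M = 0.05    # wheel radius
STEP_ANGLE_DEG = 1.8     # full-step angle of the motor (assumed)
MICROSTEPPING = 8        # microsteps per full step (assumed)
COMMAND_PERIOD_S = 1.0   # speed commands arrive at 1 Hz


@dataclass
class WheelCommand:
    velocity: float  # signed wheel rim speed in m/s
    direction: int   # 1 = clockwise, 0 = counterclockwise
    rps: float       # motor rotation speed in revolutions per second
    pulses: int      # pulses to emit during one command period


def wheel_command(velocity: float, clockwise_when_forward: bool) -> WheelCommand:
    """Steps 3-5 for one wheel: direction, rotation speed, pulse count."""
    forward = velocity >= 0
    direction = 1 if forward == clockwise_when_forward else 0
    rps = abs(velocity) / (2 * math.pi * WHEEL_RADIUS_M)
    pulses_per_rev = 360.0 / STEP_ANGLE_DEG * MICROSTEPPING
    pulses = round(rps * pulses_per_rev * COMMAND_PERIOD_S)
    return WheelCommand(velocity, direction, rps, pulses)


def cmd_vel_to_wheels(vx: float, vtheta: float):
    """Split a /cmd_vel command into left and right wheel commands."""
    v_left = vx - vtheta * WHEEL_SPACING_M / 2
    v_right = vx + vtheta * WHEEL_SPACING_M / 2
    # Assumption: the motors are mounted as mirror images, so driving
    # straight needs opposite rotation senses on the two sides.
    left = wheel_command(v_left, clockwise_when_forward=False)
    right = wheel_command(v_right, clockwise_when_forward=True)
    return left, right


left, right = cmd_vel_to_wheels(0.09, 0.0)
print(left.pulses, right.pulses, left.direction, right.direction)
```

For a straight-line command both wheels receive the same pulse count and differ only in rotation direction, which matches the remark in Step 7.1.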

| Algorithm 1: Proposed stepping motor control algorithm |

| Input: |

| Output: |

| 1. Initialize the WiringPi interface for the LM and RM |

| 2. Declare the variables of LW and RW: . |

| 3. Declare the variables of LM and RM: , … |

| 4. Measure |

| 5. Set |

| 6. Subscribe to the ROS topic /cmd_vel and obtain |

| 7. |

| 8. for all /cmd_vel(Vx, Vθ) do |

| 9. if then |

| 10. |

| 11. |

| 12. else |

| 13. |

| 14. |

| 15. end if |

| 16. if then |

| 17. |

| 18. if then |

| 19. |

| 20. if then |

| 21. |

| 22. else |

| 23. |

| 24. end if |

| 25. |

| 26. |

| 27. |

| 28. |

| 29. if then |

| 30. Generate array ] |

| 31. for each do |

| 32. |

| 33. |

| 34. In the range from 10 ms to 100 ms, randomly generate value t 10 times |

| 35. |

| 36. Insert Wv into list A, and insert Md into list B |

| 37. end for |

| 38. Create a polynomial regression equation from list A and B |

| 39. Output polynomial coefficients: |

| 40. else |

| 41. |

| 42. |

| 43. Drive the stepping motor |

| 44. |

| 45. end if |

| 46. end for |
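The offline fitting branch (Steps 7.1 to 7.3) and the delay lookup of Step 8 can be sketched as below. Several points are assumptions rather than the paper's method: the four coefficients (α, β, γ, δ) are read as a cubic, the delay model `(period - t) / pulses` and the constant `PULSES_PER_METER` are hypothetical, and the wheel speed is centred and scaled before fitting purely to keep the normal equations well conditioned, so the returned coefficients apply to the scaled variable.

```python
import random

PULSES_PER_METER = 4800.0  # hypothetical pulses per metre of wheel travel
PERIOD_US = 1_000_000      # one 1 Hz command period in microseconds


def build_dataset(speeds, rng, samples=10):
    """Build list A (wheel speed Wv) and list B (delay Md in microseconds)."""
    list_a, list_b = [], []
    for wv in speeds:
        pulses = max(1, round(wv * PULSES_PER_METER))
        for _ in range(samples):
            t_us = rng.uniform(10_000, 100_000)  # t drawn from 10-100 ms
            list_a.append(wv)
            list_b.append((PERIOD_US - t_us) / pulses)  # assumed delay model
    return list_a, list_b


def solve(matrix, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def fit_cubic(xs, ys):
    """Least-squares cubic fit; returns ((alpha, beta, gamma, delta), centre, scale)."""
    centre = (max(xs) + min(xs)) / 2
    scale = (max(xs) - min(xs)) / 2
    us = [(x - centre) / scale for x in xs]  # map speeds onto [-1, 1]
    powers = [3, 2, 1, 0]
    gram = [[sum(u ** (p + q) for u in us) for q in powers] for p in powers]
    rhs = [sum(y * u ** p for u, y in zip(us, ys)) for p in powers]
    return tuple(solve(gram, rhs)), centre, scale


def delay_for(wv, model):
    """Step 8: evaluate the fitted polynomial at a wheel speed (Horner form)."""
    (alpha, beta, gamma, delta), centre, scale = model
    u = (abs(wv) - centre) / scale
    return ((alpha * u + beta) * u + gamma) * u + delta


speeds = [0.03 + 0.01 * i for i in range(7)]
list_a, list_b = build_dataset(speeds, random.Random(0))
model = fit_cubic(list_a, list_b)
print(round(delay_for(0.06, model)))
```

Because the coefficients are computed once and reused, later commands only need the cheap polynomial evaluation in `delay_for`, which mirrors the first-move check in Step 6.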

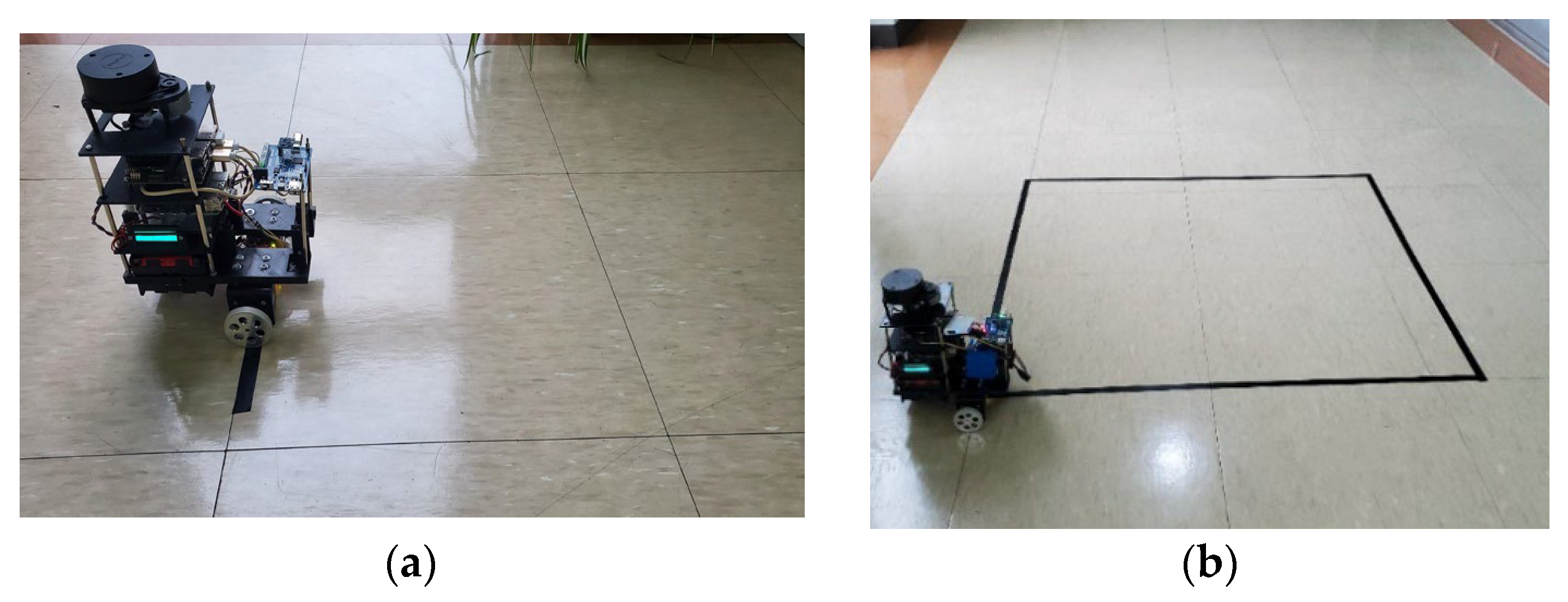
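Step 9 drives both motors at the same time with software-generated pulses. The sketch below shows one way this could look, assuming that the delay is the full period of one pulse and that each motor runs in its own thread. The `write_pin` callback stands in for a GPIO write, and `MotorJob`, the pin numbers, and the function names are hypothetical; on real hardware, `time.sleep` is only approximately accurate at microsecond scale.

```python
import threading
import time
from typing import Callable, NamedTuple

PinWriter = Callable[[int, int], None]  # (pin, level); hypothetical GPIO hook


class MotorJob(NamedTuple):
    step_pin: int    # pin that receives the step pulses
    dir_pin: int     # pin that selects the rotation direction
    direction: int   # 1 = clockwise, 0 = counterclockwise
    pulses: int      # number of pulses to emit
    delay_us: float  # assumed full period of one pulse, in microseconds


def pulse_train(write_pin: PinWriter, job: MotorJob) -> None:
    """Set the direction, then emit the requested number of step pulses."""
    write_pin(job.dir_pin, job.direction)
    half_period_s = job.delay_us / 2 / 1_000_000
    for _ in range(job.pulses):
        write_pin(job.step_pin, 1)
        time.sleep(half_period_s)
        write_pin(job.step_pin, 0)
        time.sleep(half_period_s)


def drive_both(write_pin: PinWriter, left: MotorJob, right: MotorJob) -> None:
    """Run the left and right pulse trains concurrently and wait for both."""
    threads = [
        threading.Thread(target=pulse_train, args=(write_pin, job))
        for job in (left, right)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


# Dry run: record pin writes instead of touching hardware.
log = []
drive_both(lambda pin, level: log.append((pin, level)),
           MotorJob(17, 27, 1, 4, 500.0),
           MotorJob(22, 23, 0, 4, 500.0))
print(len(log))
```

Passing the pin writer as a parameter keeps the timing logic testable off the robot; on the Raspberry Pi it would be replaced by the actual GPIO write call.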

5. Experiments

5.1. Teleoperation Movement and Odometry Evaluation

5.2. Mapping

5.3. Navigation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bahrin, M.A.K.; Othman, M.F.; Azli, N.H.N.; Talib, M.F. Industry 4.0: A Review on Industrial Automation and Robotic. J. Teknol. 2016, 78, 137–143.

- Rubio, F.; Valero, F.; Llopis-Albert, C. A Review of Mobile Robots: Concepts, Methods, Theoretical Framework, and Applications. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419839596.

- Peng, Z.; Wang, J.; Han, Q.-L. Path-Following Control of Autonomous Underwater Vehicles Subject to Velocity and Input Constraints via Neurodynamic Optimization. IEEE Trans. Ind. Electron. 2019, 66, 8724–8732.

- Chen, D.; Li, S.; Wu, Q. A Novel Supertwisting Zeroing Neural Network with Application to Mobile Robot Manipulators. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1776–1787.

- Fumagalli, M.; Naldi, R.; Macchelli, A.; Carloni, R.; Stramigioli, S.; Marconi, L. Modeling and Control of a Flying Robot for Contact Inspection. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 3532–3537.

- Cashmore, M.; Fox, M.; Long, D.; Magazzeni, D.; Ridder, B.; Carrera, A.; Palomeras, N.; Hurtos, N.; Carreras, M. Rosplan: Planning in the Robot Operating System. In Proceedings of the International Conference on Automated Planning and Scheduling, Jerusalem, Israel, 7–11 June 2015; Volume 25, pp. 333–341.

- Joseph, L. Robot Operating System (ROS) for Absolute Beginners; Springer: Berlin/Heidelberg, Germany, 2018.

- Joseph, L.; Cacace, J. Mastering ROS for Robotics Programming: Design, Build, and Simulate Complex Robots Using the Robot Operating System; Packt Publishing, Ltd.: Birmingham, UK, 2018.

- Kam, H.R.; Lee, S.-H.; Park, T.; Kim, C.-H. Rviz: A Toolkit for Real Domain Data Visualization. Telecommun. Syst. 2015, 60, 337–345.

- Rviz—ROS Wiki. Available online: http://wiki.ros.org/rviz (accessed on 30 January 2023).

- Pritsker, A.A.B. Introduction to Simulation and SLAM II; Halsted Press: Ultimo, NSW, Australia, 1984.

- DeSouza, G.N.; Kak, A.C. Vision for Mobile Robot Navigation: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 237–267.

- Gomes, L.; Vale, Z.A.; Corchado, J.M. Multi-Agent Microgrid Management System for Single-Board Computers: A Case Study on Peer-to-Peer Energy Trading. IEEE Access 2020, 8, 64169–64183.

- Upton, E.; Halfacree, G. Raspberry Pi User Guide; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- Sati, V.; Sánchez, S.M.; Shoeibi, N.; Arora, A.; Corchado, J.M. Face Detection and Recognition, Face Emotion Recognition through NVIDIA Jetson Nano. In Proceedings of the Ambient Intelligence–Software and Applications: 11th International Symposium on Ambient Intelligence, L'Aquila, Italy, 7–9 October 2020; pp. 177–185.

- Li, X.; Ge, M.; Dai, X.; Ren, X.; Fritsche, M.; Wickert, J.; Schuh, H. Accuracy and Reliability of Multi-GNSS Real-Time Precise Positioning: GPS, GLONASS, BeiDou, and Galileo. J. Geod. 2015, 89, 607–635.

- Ahmad, N.; Ghazilla, R.A.R.; Khairi, N.M.; Kasi, V. Reviews on Various Inertial Measurement Unit (IMU) Sensor Applications. Int. J. Signal Process. Syst. 2013, 1, 256–262.

- Quigley, M.; Gerkey, B.; Smart, W.D. Programming Robots with ROS: A Practical Introduction to the Robot Operating System; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-Time Loop Closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Brooker, G.M. Mutual Interference of Millimeter-Wave Radar Systems. IEEE Trans. Electromagn. Compat. 2007, 49, 170–181.

- Holtz, J. Advanced PWM and Predictive Control—An Overview. IEEE Trans. Ind. Electron. 2016, 63, 3837–3844.

- Huang, G.; Lee, S. PC-Based PID Speed Control in DC Motor. In Proceedings of the 2008 International Conference on Audio, Language and Image Processing, Shanghai, China, 7–9 July 2008; pp. 400–407.

- Betin, F.; Pinchon, D.; Capolino, G.-A. Fuzzy Logic Applied to Speed Control of a Stepping Motor Drive. IEEE Trans. Ind. Electron. 2000, 47, 610–622.

- MBot—Makeblock. Available online: https://www.makeblock.com/cn/mbot (accessed on 12 March 2023).

- Tiny:Bit Robot. Available online: http://www.yahboom.net/study/Tiny:bit (accessed on 12 March 2023).

- Yahboom. Available online: http://www.yahboom.net/study/G1-T-PI (accessed on 12 March 2023).

- Yahboom. Available online: http://www.yahboom.net/study/JETBOT-mini (accessed on 12 March 2023).

- AI Robot Kits from NVIDIA JetBot Partners. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetbot-ai-robot-kit/ (accessed on 12 March 2023).

- Yahboom. Available online: http://www.yahboom.net/study/Transbot-jetson_nano (accessed on 12 March 2023).

- TurtleBot. Available online: https://www.turtlebot.com/ (accessed on 12 March 2023).

- Leo Rover | Robot Developer Kit|Open-Source: ROS and for Outdoor Use. Available online: https://www.leorover.tech/ (accessed on 12 March 2023).

- SUMMIT-XL Mobile Robot—Indoor & Outdoor|Robotnik®. Available online: https://robotnik.eu/products/mobile-robots/summit-xl-en-2/ (accessed on 12 March 2023).

- Pinto, V.H.; Gonçalves, J.; Costa, P. Model of a DC Motor with Worm Gearbox. In Proceedings of the CONTROLO 2020: Proceedings of the 14th APCA International Conference on Automatic Control and Soft Computing, Bragança, Portugal, 1–3 July 2020; pp. 638–647.

- Hector_slam—ROS Wiki. Available online: http://wiki.ros.org/hector_slam (accessed on 12 March 2023).

- Gmapping—ROS Wiki. Available online: http://wiki.ros.org/gmapping (accessed on 12 March 2023).

- Slam_karto—ROS Wiki. Available online: http://wiki.ros.org/slam_karto (accessed on 12 March 2023).

- Rf2o—ROS Wiki. Available online: http://wiki.ros.org/rf2o (accessed on 12 March 2023).

- Robot_pose_ekf—ROS Wiki. Available online: http://wiki.ros.org/robot_pose_ekf (accessed on 12 March 2023).

- Navigation—ROS Wiki. Available online: http://wiki.ros.org/navigation (accessed on 12 March 2023).

- Duchoň, F.; Babinec, A.; Kajan, M.; Beňo, P.; Florek, M.; Fico, T.; Jurišica, L. Path Planning with Modified A Star Algorithm for a Mobile Robot. Procedia Eng. 2014, 96, 59–69.

- Base_local_planner—ROS Wiki. Available online: http://wiki.ros.org/base_local_planner (accessed on 12 March 2023).

- Amcl—ROS Wiki. Available online: http://wiki.ros.org/amcl (accessed on 12 March 2023).

| Robot | Controller/Driver (Hardware) | Actuators (Hardware) | Sensors (Hardware) | ROS (Software) | Programming Tool (Software) | Main Functions |
|---|---|---|---|---|---|---|
| Mbot [24] | ATmega328 | Plastic DC | UDS, LF, BT | No | Arduino IDE | OA, LP |
| Tiny:bit [25] | Micro:bit board | Plastic DC | UDS, LF, SS, IR | No | Graphical module/Python | OA, LP |
| G1 tank [26] | Raspberry Pi/Expansion board | DC | UDS, TR, LS, Camera | No | C/Python | OA, LP, OR |
| Jetbot Mini [27] | Jetson Nano/Expansion board | Plastic DC | Camera | Yes | Python | OA, LP, OR |
| Jetbot [28] | Jetson Nano/Expansion board | Plastic DC | Camera | Yes | Python | OA, FD, OR |
| Transbot [29] | Jetson Nano/Expansion board | DC with OE | Depth Camera, LiDAR | Yes | Python | SLAM, Navigation |
| Turtlebot 3 [30] | Raspberry Pi/Open CR | DYNAMIXELAX DC with OE | Camera, LiDAR, IMU | Yes | Python | SLAM, Navigation |
| Leo Rover [31] | Raspberry Pi/LeoCore | 4× DC gear motor with OE | Camera, LiDAR, IMU | Yes | Python | SLAM, Navigation |
| Summit-XL [32] | Intel processor/PC | 4× DC gear motor with OE | Camera, LiDAR, IMU | Yes | Python | SLAM, Navigation |
| Owlbot | Jetson Nano/Raspberry Pi | Stepping motor with controller | LiDAR, IMU | Yes | Python | SLAM, Navigation |

| Items | Detail | Number |
|---|---|---|
| LiDAR | RPLIDAR A1 | 1 |
| Single-board computer | Jetson Nano | 1 |
| Single-board computer | Raspberry Pi 4 | 1 |
| Power management board | 5 V and 12 V output | 1 |
| Stepping motors A and B | SBC-103H548-0440 | 2 |
| Motor controller | SBC-10 | 2 |
| USB Wi-Fi adapter | 802.11n | 2 |
| IMU | GY9250 | 1 |
| Polylactide (PLA) base | 3D printed | 4 |
| Wheel | Aluminium | 2 |
| Battery | Li-Po 18650 | 8 |

| Parameter | Value |
|---|---|
| Working voltage | 9–16 V |
| Rated voltage | 12 V |
| Step angle | |
| Holding torque | 0.265 Nm |
| Current | 1.2 A/phase |
| Inductance | 4.3 mH |
| Weight | 280 g |
| Controller | SBC-10 |

| Variables | Parameter Description |
|---|---|
| LM, RM | left and right motors |
| LW, RW | left and right wheels |
| Vx, Vθ | linear and angular velocities of the topic /cmd_vel |
| | velocity of the left and right wheels |
| | direction of the left and right wheels |
| | spacing between the two wheels |
| | wheel radius |
| | step angle of the left and right motors |
| | microstepping of the left and right motors |
| | left and right motor rotation speed (revolutions per second) |
| | number of pulses for the left and right motors |
| | delay of the left and right motors |
| | linear velocity of the wheel in the offline phase |
| | maximum and minimum values of the offline wheel velocity |

| Command | Linear Velocity (m/s) | Runtime (s) | Actual Movement Distance (m) | Distance Error (m) | Left Motor Pulses | Left Motor Delay | Right Motor Pulses | Right Motor Delay |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.09 | 1 | 0.088 | 0.002 | 429 | 2250 | 429 | 2250 |
| 2 | 0.026 | 1 | 0.026 | 0 | 127 | 7800 | 127 | 7800 |
| 3 | 0.068 | 1 | 0.07 | 0.002 | 343 | 2800 | 343 | 2800 |
| 4 | 0.042 | 1 | 0.04 | 0.002 | 195 | 5000 | 195 | 5000 |
| 5 | 0.072 | 1 | 0.07 | 0.002 | 343 | 2800 | 343 | 2800 |
| 6 | 0.084 | 2 | 0.164 | 0.004 | 800 | 2400 | 800 | 2400 |
| 7 | 0.048 | 2 | 0.088 | 0.008 | 430 | 4500 | 430 | 4500 |
| 8 | 0.066 | 2 | 0.136 | 0.004 | 662 | 2900 | 662 | 2900 |
| 9 | 0.062 | 2 | 0.12 | 0.004 | 604 | 3200 | 604 | 3200 |
| 10 | 0.045 | 2 | 0.096 | 0.006 | 468 | 4100 | 468 | 4100 |
| 11 | 0.026 | 3 | 0.09 | 0.012 | 438 | 7874 | 438 | 7874 |
| 12 | 0.049 | 3 | 0.162 | 0.015 | 789 | 3700 | 789 | 3700 |
| 13 | 0.048 | 3 | 0.162 | 0.018 | 789 | 3700 | 789 | 3700 |
| 14 | 0.025 | 3 | 0.084 | 0.009 | 411 | 7200 | 411 | 7200 |
| 15 | 0.049 | 3 | 0.15 | 0.003 | 732 | 4000 | 732 | 4000 |
| 16 | –0.046 | 1 | –0.045 | 0.001 | 220 | 4400 | 220 | 4400 |
| 17 | –0.066 | 1 | –0.068 | 0.002 | 331 | 2900 | 331 | 2900 |
| 18 | –0.078 | 1 | –0.075 | 0.003 | 365 | 2600 | 365 | 2600 |
| 19 | –0.072 | 1 | –0.07 | 0.002 | 349 | 2800 | 349 | 2800 |
| 20 | –0.064 | 1 | –0.066 | 0.002 | 322 | 3000 | 322 | 3000 |
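The distance-error column appears to be the absolute difference between the commanded travel (linear velocity multiplied by runtime) and the measured travel. This reading is an assumption, but it reproduces the tabulated values; the short check below applies it to four rows copied from the table, with a hypothetical helper name.

```python
# (command, linear velocity m/s, runtime s, actual distance m, reported error m)
rows = [
    (1, 0.09, 1, 0.088, 0.002),
    (7, 0.048, 2, 0.088, 0.008),
    (13, 0.048, 3, 0.162, 0.018),
    (16, -0.046, 1, -0.045, 0.001),
]


def distance_error(velocity: float, runtime: float, actual: float) -> float:
    """Absolute gap between commanded travel (v * t) and measured travel."""
    return round(abs(velocity * runtime - actual), 3)


for command, velocity, runtime, actual, reported in rows:
    print(command, distance_error(velocity, runtime, actual), reported)
```

Rounding to three decimals matches the millimetre resolution of the reported distances.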

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, S.; Hwang, S.-H. ROS-Based Autonomous Navigation Robot Platform with Stepping Motor. Sensors 2023, 23, 3648. https://doi.org/10.3390/s23073648
