1. Introduction

Anomaly detection refers to the process of detecting data events that significantly deviate from the norm. Owing to the increasing demand for such applications in domains such as risk management, security, financial surveillance, health, and medicine, a variety of machine-learning anomaly detection methods are being developed [1]. Video data are a common resource for anomaly detection [2,3]. However, three key challenges hamper the deployment of machine-learning video-event anomaly solutions. First, deployment is difficult and incurs high costs. Furthermore, systems can only be installed in specific areas, whereas abnormal events can occur anywhere; hence, blind and dead spots are problematic. Second, troubleshooting and maintenance are difficult and require vast human and material resources. Last, video capture is easily disturbed by external factors, such as haze, weather, luminance, and reflections. Distributed optical fiber acoustic sensing (DAS) can solve these problems because it has many advantages, such as ultra-long detection distances, easy deployment, and resistance to harsh environments [4]. Therefore, it is highly suitable for anomaly detection [5].

DAS utilizes Rayleigh backscattering to measure sound or vibrations along an optical fiber over ultra-long distances [6]. Presently, the application of DAS is being studied in several fields. In pipeline monitoring [7,8,9,10], an optical fiber cable is buried next to the pipeline. In perimeter security [11,12,13], a DAS system is similarly installed at the boundary of important facilities to detect intrusions. Earthquakes [14,15,16,17] and other vibrational events are also monitored in this manner.

Numerous studies have focused on improving the performance of DAS, e.g., by applying new transmitted light schemes and scattering phase demodulation. Performance parameters, e.g., the sensing range and the spatial and sensing resolutions, have significantly improved over the years [18]. To improve performance further, DAS methods are being combined with deep learning techniques for the intelligent and real-time identification of vibrations along the optical fiber [19,20,21,22,23].

However, the integration of deep learning inherits the same challenges faced by other machine learning anomaly detection models [1,24]. For example, the extreme imbalance between normal and abnormal samples in sensory datasets reduces the generalizability of the model. Many studies use artificially simulated abnormal data; however, it is impossible to simulate all DAS abnormalities because there is an abundance of noise in real environments. These challenges make it difficult to apply traditional supervised learning methods directly.

To address these challenges, this paper proposes an unsupervised method that only learns normal data features. Because DAS systems collect multi-channel time series signals, the proposed unsupervised method considers both the space and time dimensions. Thus, the original DAS signal is divided into space-time windows, each covering a given period over several neighboring channels, and these windows become the model’s input. Then, an autoencoder is used to extract normal data features, and a clustering algorithm is used to establish feature centers. During testing, if a feature in the window is sufficiently distant from the center, the window is judged to be abnormal. Notably, the autoencoder’s shallow convolution structure is time efficient. To evaluate the efficacy of the proposed method, experiments were performed on data from a real-world high-speed rail intrusion scenario. Intrusion behaviors, such as wall climbing and wall breaking, are regarded as abnormal. Because the scene contains intense background noise (e.g., from high-speed trains and heavy trucks), identification becomes considerably more difficult. Nevertheless, the experimental results show that our method improves the threat detection rate by 7.6% and reduces the false alarm rate by 0.7% compared to the state-of-the-art supervised network.
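As a minimal sketch of how a multi-channel DAS recording might be cut into space-time windows, the following NumPy function splits a (time, channel) array into blocks. The function name, the non-overlapping tiling, and the default sizes are assumptions made for illustration; the paper does not state whether windows overlap.

```python
import numpy as np

def space_time_windows(signal, period=128, width=16):
    """Split a (time, channels) array into non-overlapping space-time windows.

    Returns an array of shape (n_time, n_space, period, width). Samples that
    do not fill a complete window are dropped (an assumption of this sketch).
    """
    n_time = signal.shape[0] // period
    n_space = signal.shape[1] // width
    trimmed = signal[: n_time * period, : n_space * width]
    # Axis order after reshape: (time block, time offset, channel block, channel offset).
    blocks = trimmed.reshape(n_time, period, n_space, width)
    # Move the two block indices to the front.
    return blocks.transpose(0, 2, 1, 3)

if __name__ == "__main__":
    demo = np.arange(256 * 32, dtype=float).reshape(256, 32)
    print(space_time_windows(demo).shape)
```

Each window keeps its position in time and along the fiber, so a window flagged as abnormal can be mapped back to a channel range.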

3. Experiment

3.1. Evaluation Metrics and Parameters

The threat detection rate (TDR) is the ratio of true-positive intrusion windows detected after window accumulation and is equivalent to the recall rate:

$$\mathrm{TDR} = \frac{TP}{TP + FN}$$

where $TP$ is the number of true positives and $FN$ is the number of false negatives.

The false alarm rate (FAR) is the ratio of false alarms after window accumulation and is equivalent to one minus the precision:

$$\mathrm{FAR} = \frac{FP}{TP + FP}$$

where $FP$ is the number of false positives. The F1 score is used to evaluate the balance between intrusion detection precision and recall; the higher the F1 score, the better:

$$F_1 = \frac{2\,(1 - \mathrm{FAR})\,\mathrm{TDR}}{(1 - \mathrm{FAR}) + \mathrm{TDR}}$$

The F1 score objectively measures overall performance according to the false alarm and threat detection rates. A higher F1 score is obtained only when the threat detection rate is high and the false alarm rate is low. The response time (RT) is the average time between the beginning of a true-positive intrusion signal and the space-time window in which it is predicted.
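The metrics of this subsection can be illustrated with a short computation. The sketch below treats the threat detection rate as recall, the false alarm rate as one minus precision, and the F1 score as their harmonic combination; the function name and the example counts are hypothetical.

```python
def detection_metrics(tp, fn, fp):
    """Return (TDR, FAR, F1) from window-level counts.

    TDR is recall, FAR is one minus precision, and F1 is the harmonic
    mean of precision and recall.
    """
    tdr = tp / (tp + fn)
    far = fp / (tp + fp)
    precision = 1.0 - far
    f1 = 2 * precision * tdr / (precision + tdr)
    return tdr, far, f1

if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper's tables.
    print(detection_metrics(tp=90, fn=10, fp=10))
```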

Several settable parameters can be used to fine-tune model performance. The threshold (TH) determines whether a space-time window is an intrusion window: if the distance of the window's features from the center is greater than this threshold, the window is judged to be an intrusion window. The threshold therefore controls model sensitivity. Unless otherwise specified, the threshold is the value at which the false alarm rate on the validation set is 3%. The scanning period controls the temporal extent of the intercepted space-time window, whereas the channel width controls its spatial extent. Variable m governs the averaging of the largest distances; thus, model sensitivity can also be controlled by adjusting m. Unless otherwise specified, m is set to 64. The window accumulation strategy adds two further parameters that control model sensitivity: the number of windows traversed in the retrograde strategy and the window threshold that triggers a judgment.
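The role of the threshold and of m can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: it assumes that each window yields several feature vectors, that the distance is Euclidean and taken to the nearest cluster center, that m counts how many of the largest distances are averaged, and that the threshold is the validation-score quantile that leaves 3% of normal windows above it. All function names are hypothetical.

```python
import numpy as np

def window_score(features, centers, m=64):
    """Mean of the m largest nearest-center distances for one window.

    features: (n_features, dim) array of latent vectors from one window.
    centers:  (n_centers, dim) array of cluster centers learned on normal data.
    """
    diffs = features[:, None, :] - centers[None, :, :]
    nearest = np.linalg.norm(diffs, axis=2).min(axis=1)
    m = min(m, nearest.size)
    kth = nearest.size - m
    # After partitioning, positions kth and above hold the m largest values.
    largest = np.partition(nearest, kth)[kth:]
    return float(largest.mean())

def threshold_at_far(validation_scores, target_far=0.03):
    """Threshold exceeded by roughly target_far of normal validation windows."""
    return float(np.quantile(validation_scores, 1.0 - target_far))

def is_intrusion(score, threshold):
    """A window is flagged when its score exceeds the threshold."""
    return score > threshold
```

Raising the threshold makes the detector less sensitive, whereas a smaller m lets a few distant features dominate the window score.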

3.2. Experimental Set Up

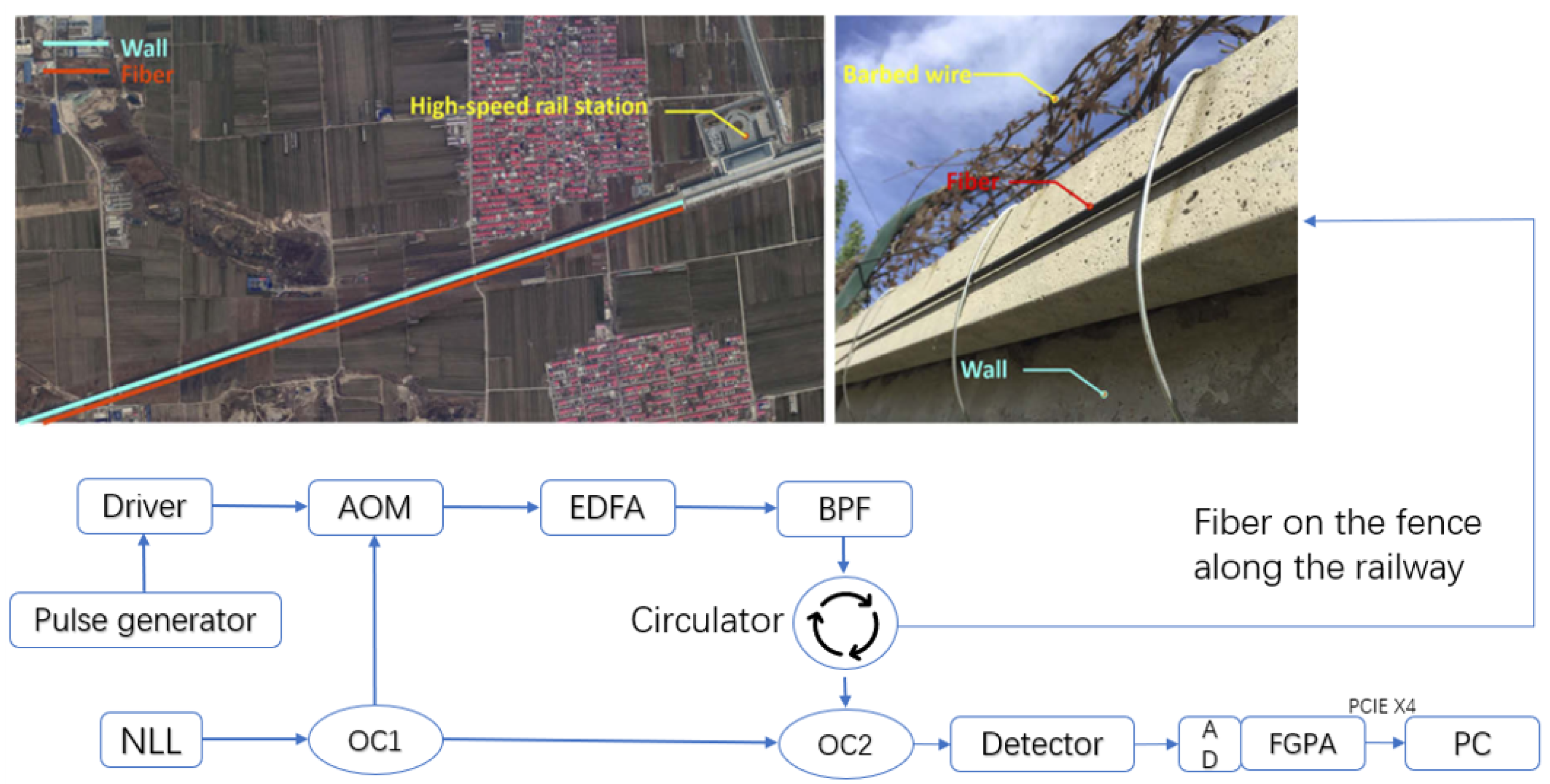

First, the DAS system illustrated in Figure 1 was used to collect vibration signals, in which the DAS spatial resolution was set to 10 m (i.e., the space interval between two channels), and the signal sampling rate was 488 Hz. The DAS system was deployed at a high-speed railway station, and the optical fiber was fixed along the barrier beside the tracks for approximately 40 km.

In a normal environment, 60 min of signal data were randomly and intermittently collected, of which 40 min were randomly selected for the training set. The remaining data were used for validation. The test data were the same as those used in [13], which included approximately 30 min of multiple intrusion behaviors. The number of windows generated is shown in Table 1 (the ratio of abnormal to normal data is about 1:10). To eliminate the bias between the various channels and the effect of excessively large values on the results, all channels were standardized at each moment before being input to the model, and the maximum value of each channel was capped at 3.5 times the standard deviation of the same channel in the training set. Finally, the vibration data were normalized to the [0, 1] range.
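One possible reading of this preprocessing is sketched below. It assumes per-channel standardization with training-set statistics, symmetric clipping at 3.5 standard deviations, and a linear mapping of the clipped range onto [0, 1]; the paper does not spell out these details, and the function names are hypothetical.

```python
import numpy as np

def fit_channel_stats(train):
    """Per-channel mean and standard deviation of a (time, channels) array."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    # Guard against silent channels with zero variance.
    std = np.where(std == 0, 1.0, std)
    return mean, std

def preprocess(signal, mean, std, clip=3.5):
    """Standardize, clip to +/- clip standard deviations, and scale to [0, 1]."""
    z = (signal - mean) / std
    z = np.clip(z, -clip, clip)
    return (z + clip) / (2.0 * clip)
```

Reusing the training-set statistics for validation and test data keeps the three sets on the same scale.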

3.3. Influence of Model Parameters

3.3.1. Influence of Model Depth

The results for different model depths are listed in Table 2, where bold marks the best result for each item. The autoencoder model was used to extract latent features. Each encoder layer had a Conv-ReLU-BatchNorm-Maxpool structure, and each decoder layer had an Interpolate-Conv-ReLU/Sigmoid structure; the convolution kernel size was 3. Each encoder layer corresponded to one decoder layer. Considering the model result and calculation time, autoencoder models with 1–3 layers were compared. The results showed that the autoencoder using only one convolutional layer achieved the best performance, and owing to the shallow structure, the model saved computation time.
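The layer pattern described here can be sketched in PyTorch as follows. Only the Conv-ReLU-BatchNorm-Maxpool and Interpolate-Conv-ReLU/Sigmoid ordering and the kernel size of 3 come from the text; the 2D convolutions, the 16 hidden channels, the pooling factor of 2, nearest-neighbor interpolation, and the use of Sigmoid only on the last decoder layer are assumptions of this sketch, and the class name is hypothetical.

```python
import torch
from torch import nn

class ShallowAutoencoder(nn.Module):
    """Convolutional autoencoder with `depth` encoder/decoder layer pairs."""

    def __init__(self, depth=1, hidden=16):
        super().__init__()
        encoder, decoder = [], []
        in_channels = 1
        for _ in range(depth):
            # Encoder layer: Conv-ReLU-BatchNorm-Maxpool.
            encoder += [
                nn.Conv2d(in_channels, hidden, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.BatchNorm2d(hidden),
                nn.MaxPool2d(2),
            ]
            in_channels = hidden
        for i in range(depth):
            last = i == depth - 1
            # Decoder layer: Interpolate-Conv-ReLU, with Sigmoid on the final layer.
            decoder += [
                nn.Upsample(scale_factor=2, mode="nearest"),
                nn.Conv2d(hidden, 1 if last else hidden, kernel_size=3, padding=1),
                nn.Sigmoid() if last else nn.ReLU(),
            ]
        self.encoder = nn.Sequential(*encoder)
        self.decoder = nn.Sequential(*decoder)

    def forward(self, x):
        latent = self.encoder(x)
        return self.decoder(latent), latent

if __name__ == "__main__":
    model = ShallowAutoencoder(depth=1)
    windows = torch.rand(4, 1, 128, 16)  # (batch, 1, scanning period, channel width)
    reconstruction, latent = model(windows)
    print(reconstruction.shape, latent.shape)
```

In this sketch both window dimensions must be divisible by 2 raised to the depth so that the reconstruction matches the input size.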

3.3.2. Influence of Scanning Period and Channel Width

The scanning period controlled the temporal extent of the space-time window, and the channel width controlled its spatial extent. In the comparison experiments, the scanning period ranged from 128 to 4096 and the channel width from 10 to 35.

Table 3 shows that model detection accuracy improved as the scanning period increased. In particular, the FAR dropped significantly. The response time increased accordingly, because a longer scanning period delayed the model output.

Increasing the channel width improved anomaly detection performance and lowered the FAR, as detailed in Table 4. Additionally, owing to the fixed scanning period, the change in RT was small. When the channel width was too small, the missing spatial relationships led to poor detection. However, when the channel width was much larger, a large amount of useless interference information was extracted and the TDR dropped. An excessively large channel width also impaired the accurate positioning of anomalies. The model reached its peak performance when the channel width approximated the number of channels affected by the abnormal behavior.

3.3.3. Impact of Window Accumulation Strategy

The window accumulation was controlled by two parameters, the number of traversed windows and the window threshold, which balanced model sensitivity and detection accuracy. With a scanning period of 128, the number of traversed windows was varied from 2 to 10 and the window threshold from 1 up to the number of traversed windows to examine their influence on the model’s sensitivity.

As the number of traversed windows increased, model sensitivity increased along with the rate of anomaly detection. In contrast, as the window threshold increased, the anomaly detection rate declined, as shown in Figure 5. The false detections of the model increased with the number of traversed windows and decreased as the window threshold increased, as shown in Figure 6.

Considering the trade-off between TDR and FAR, the F1 scores were compared, as shown in Figure 7.
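A window accumulation rule of this kind can be sketched in a few lines. The sketch assumes that the look-back includes the current window and that an alarm is raised when the number of flagged windows in the look-back reaches the window threshold; the function and parameter names are hypothetical.

```python
def accumulate_windows(flags, n_back, n_trigger):
    """Smooth per-window decisions with a look-back vote.

    flags:     sequence of 0/1 (or bool) single-window decisions in time order.
    n_back:    number of most recent windows inspected, including the current one.
    n_trigger: minimum number of flagged windows needed to raise an alarm.
    """
    alarms = []
    for i in range(len(flags)):
        start = max(0, i - n_back + 1)
        alarms.append(sum(flags[start : i + 1]) >= n_trigger)
    return alarms

if __name__ == "__main__":
    single = [0, 1, 0, 0, 1, 1, 1, 0]
    print(accumulate_windows(single, n_back=3, n_trigger=2))
```

With these settings the isolated flag at the second position raises no alarm, whereas the later run of consecutive flags does. A longer look-back or a lower trigger count makes the rule more sensitive.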

3.3.4. Impact of Maximum Average Distance

Adjusting m can be used to tune model performance, as shown in Table 5. As m increased, the model indicators generally improved: once m reached a reasonable value range, the TDR improved and the FAR decreased. In addition, a larger window width required a larger m.

3.4. Model Performance Comparison

To verify the model’s performance, experiments were conducted on this dataset using a variety of supervised algorithms as baselines. The results are shown in Table 6. The proposed method was trained using normal data only, whereas the supervised methods were trained on labeled anomaly data of the same size; all other configurations were kept identical.

Table 6 shows that the proposed method’s anomaly detection rate was approximately 1% lower than those of ConvLSTM and DenseNet but above those of the other models. Its FAR was only 0.7% higher than that of the model with the lowest FAR, Shiloh18, while its F1 score was higher than those of most supervised models and only 0.6% lower than that of ConvLSTM. The poor performance of traditional machine learning methods (e.g., LR and LightGBM) showed that deep learning methods have certain advantages in processing DAS signal data. Overall, the proposed model outperformed most supervised networks and was second only to ConvLSTM.

Table 7 presents the post-tuning comparison with ConvLSTM using the same channel widths. In addition, directly applying unsupervised methods from other fields (e.g., Chen and Ji) to DAS can lead to a high false alarm rate.

With a similar RT, the TDR of the proposed model was 7.6% higher than that of ConvLSTM, its FAR was 0.7% lower, and its F1 score was 4.5% higher. The experimental results show that unsupervised anomaly detection methods can achieve better performance than state-of-the-art supervised methods.

3.5. Visualization of Results

The model's detection results for one of the test samples, shown in Figure 3, are visualized in Figure 8. Nearly all intrusion signals were detected even with strong noise interference.

The results after window smoothing are shown in Figure 9, and further confirm the model detection performance. Window smoothing also eliminated the false positives caused by single-window identification errors.

Figure 10 shows the results obtained with a scanning period of 1024. The false positives decreased significantly as the scanning period increased.

4. Conclusions

This study developed a label-free anomaly detection method based on DAS that requires only normal-state data for training to detect a variety of anomalous events. Because this method does not require labeled abnormal data, it addresses the general lack of labeled anomaly data and sidesteps the impossibility of defining all types of anomalies for supervised networks. In particular, the proposed lightweight model reduces the number of parameters and the amount of computation required.

The proposed method was validated using a high-speed rail intrusion dataset and was compared with multiple supervised methods under the same experimental configuration. The results show that the threat detection rate of this method reaches 91.5%, which is 5.9% higher than that of the state-of-the-art supervised network, and that the false alarm rate is 7.2%, which is 0.8% lower than that of the supervised network.

In future work, we intend to evaluate the proposed approach in more complex environments (e.g., rain and thunder) and to refine the model accordingly for even better performance.