The Future of Mine Safety: A Comprehensive Review of Anti-Collision Systems Based on Computer Vision in Underground Mines

Abstract

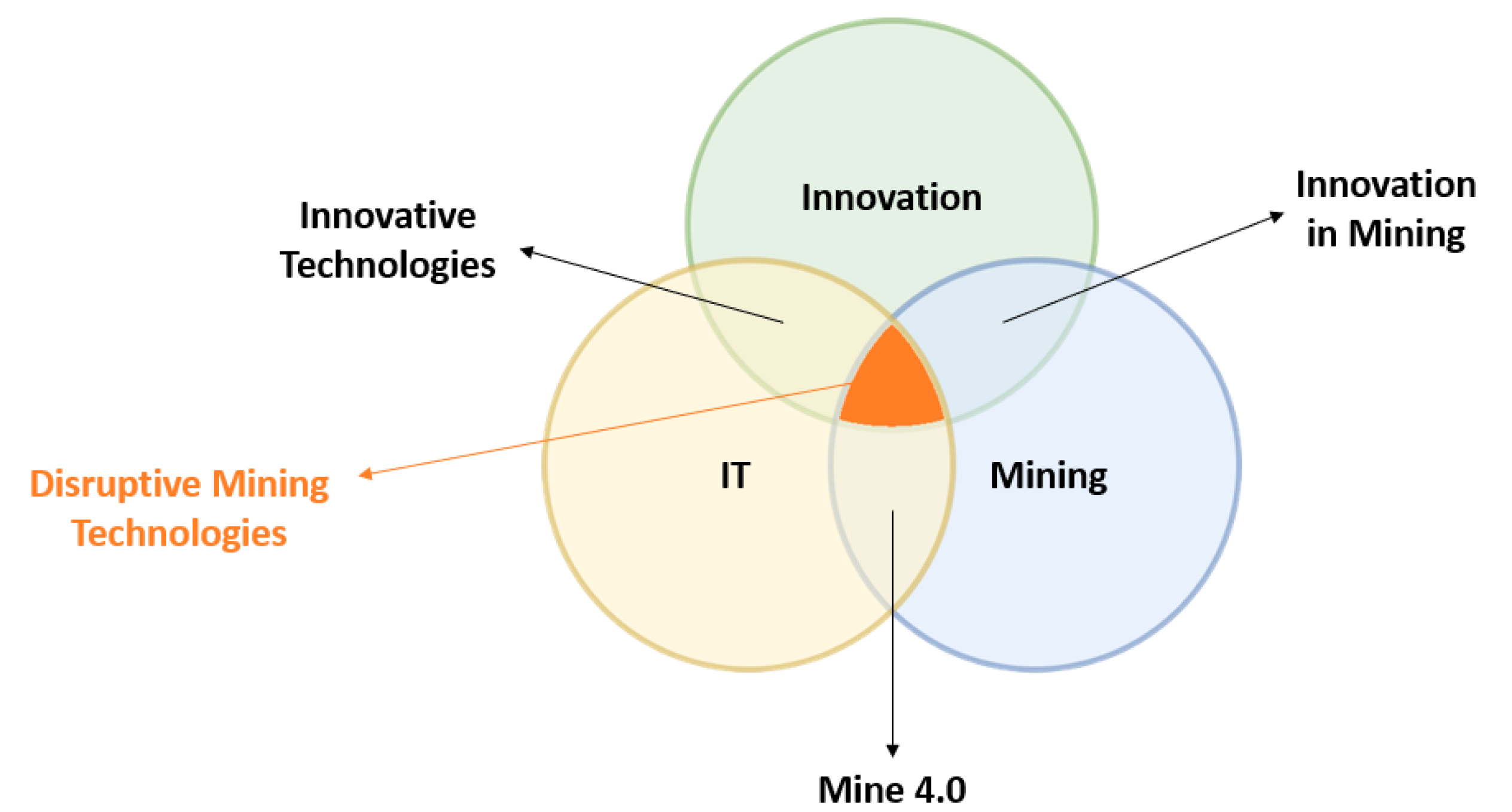

1. Introduction

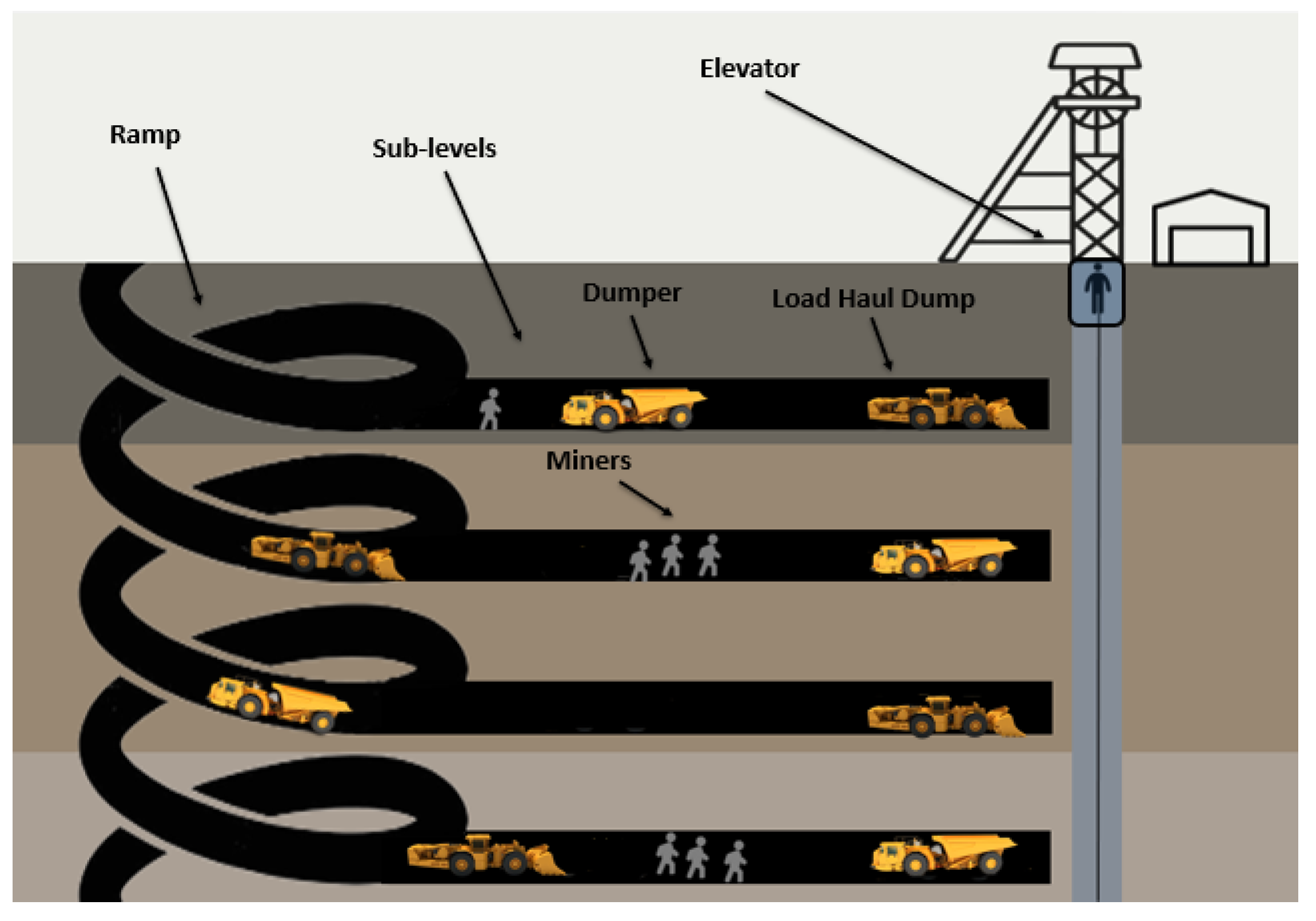

1.1. Safety Challenges in Underground Mines

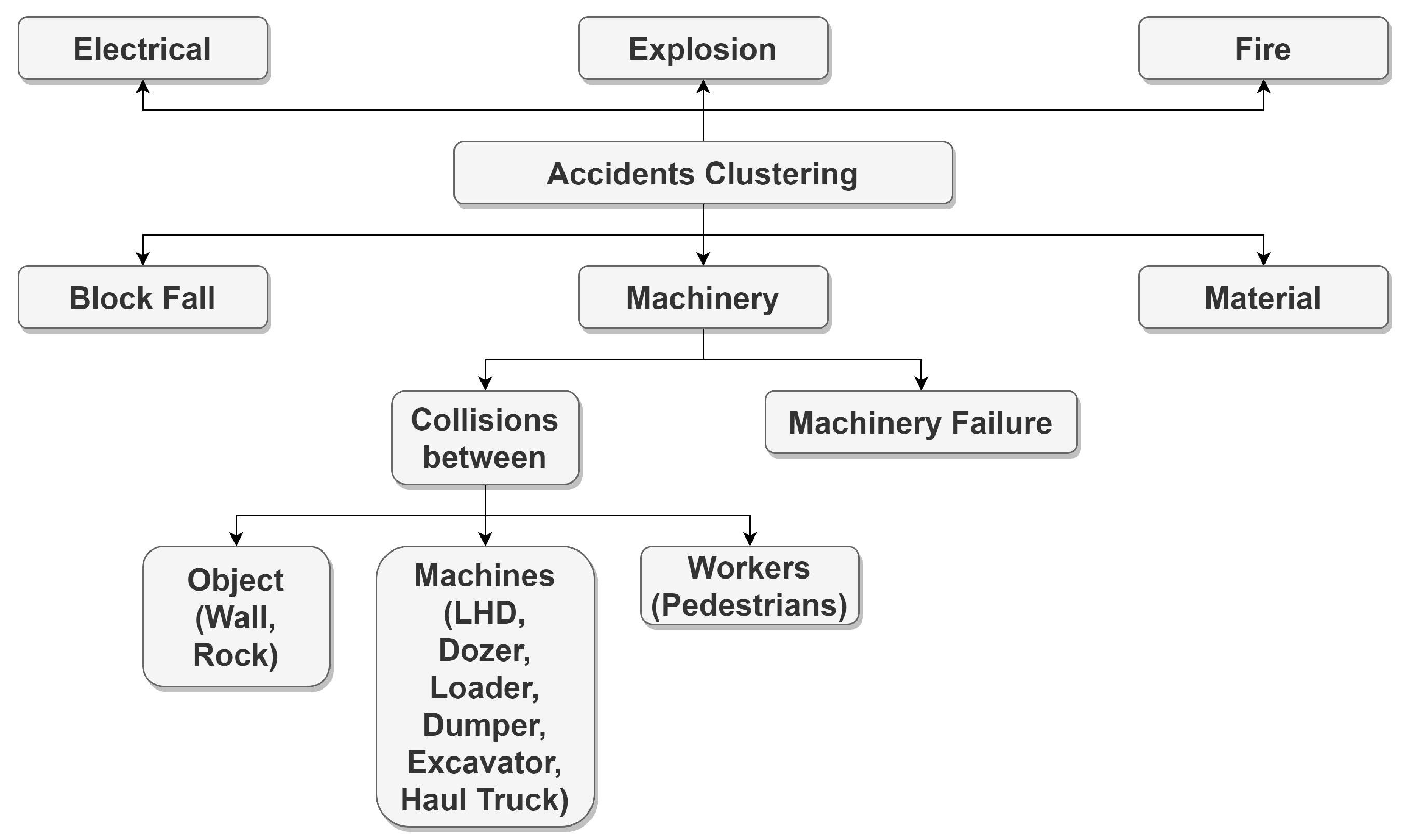

1.2. Taxonomy of Accidents in Underground Mines

- Electrical: caused by electric current.

- Explosions: involving the detonation of manufactured explosives, Airdox, or Cardox and can result in flying debris, concussive forces, fumes, or the ignition or explosion of gas or dust. This category also includes accidents involving exploding gasoline vapors, space heaters, or furnaces.

- Fire: including unplanned fires that are not extinguished within 10 min in underground mines or 30 min in surface mines and surface areas of underground mines.

- Materials: involving lifting, pulling, pushing, or shoveling material and are caused by handling the material itself. The material may be in bags or boxes, or it may be loose, such as sand, coal, rock, or timber.

- Machinery: caused by the action or motion of machinery or by the failure of component parts. This category includes all haulage machines, such as dozers, haul trucks, front-end loaders, load–haul–dumps, dumpers, and excavators. Accidents caused by an energized or moving unit or failure of component parts, as well as collisions between machines and objects or workers, are also included.

- Block Fall: caused by falling material and the result of improper blocking of equipment during repair or inspection. In this case, the accident should be classified as the type of equipment most directly responsible for the resulting accident.

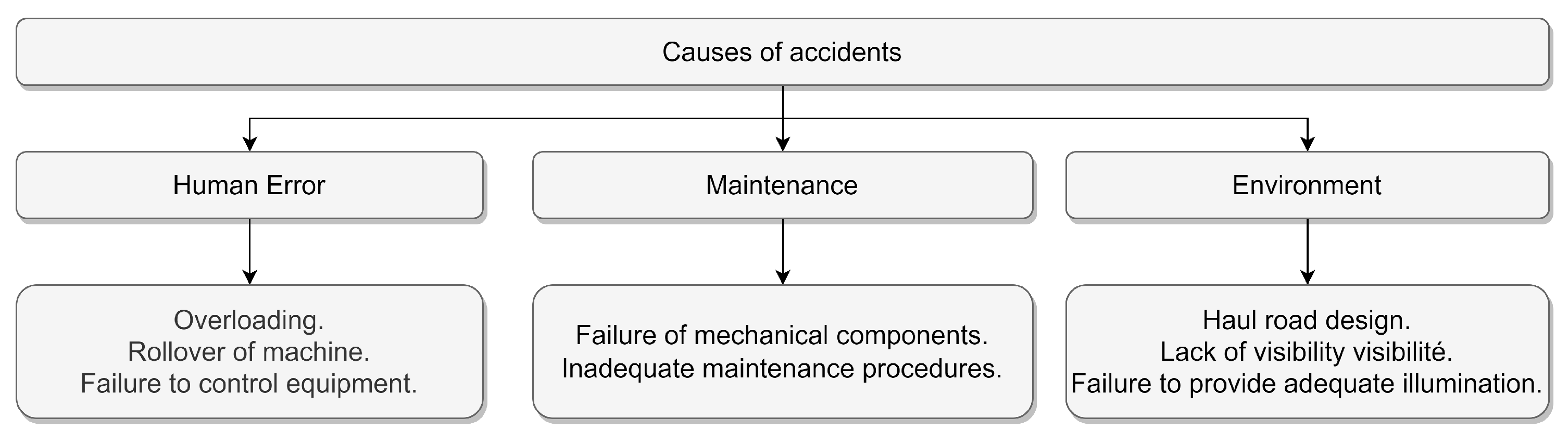

1.3. Typology of Underground Mining Machinery and Its Associated Injuries

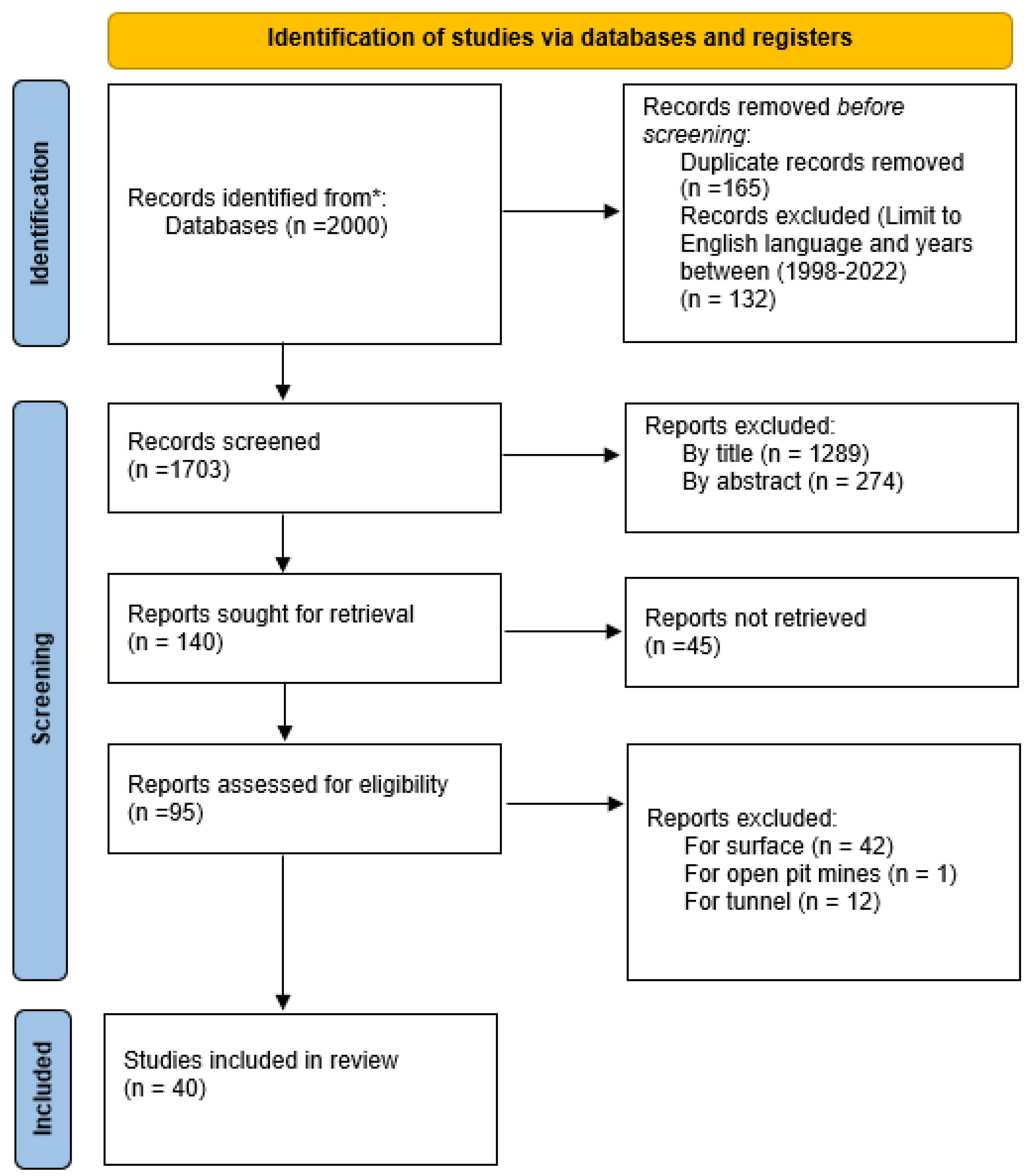

2. Materials and Methods

3. Proposed Solutions for Pedestrian Detection in Underground Mines

3.1. The Application of Computer Vision in Underground Mining Operations

3.2. Sensory Part

3.2.1. RGB Camera

3.2.2. Infrared Camera

| Name | Wavelength |
|---|---|
| NIR (Near infrared) | 0.75–1.4 µm |
| SWIR (Short wavelength infrared) | 1.4–3 µm |
| MWIR (Medium wavelength infrared) | 3–8 µm |
| LWIR (Long wavelength infrared) | 8–15 µm |
| FIR (Far infrared) | 15–1000 µm |
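
The band limits in the table above can be turned into a small lookup, which is handy when checking which band a given thermal camera falls into. The following is a minimal Python sketch; the function name `infrared_band` and the rule for assigning shared edges (for example 1.4 µm) to the longer-wavelength band are assumptions made for illustration, not something the source specifies.

```python
from typing import Optional

# Band edges in micrometres, copied from the wavelength table.
IR_BANDS = [
    ("NIR", 0.75, 1.4),
    ("SWIR", 1.4, 3.0),
    ("MWIR", 3.0, 8.0),
    ("LWIR", 8.0, 15.0),
    ("FIR", 15.0, 1000.0),
]


def infrared_band(wavelength_um: float) -> Optional[str]:
    """Return the infrared band name for a wavelength in micrometres.

    Returns None when the wavelength lies outside 0.75-1000 micrometres.
    """
    for name, lower, upper in IR_BANDS:
        # Assumption: a shared edge belongs to the longer-wavelength band.
        if lower <= wavelength_um < upper:
            return name
    # The upper edge of the last band is treated as part of that band.
    if wavelength_um == IR_BANDS[-1][2]:
        return IR_BANDS[-1][0]
    return None
```

For instance, `infrared_band(10.0)` returns `"LWIR"`, while a visible-light wavelength such as `0.5` returns `None`.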

3.2.3. Stereoscopic Camera

3.2.4. LIDAR (Light Detection and Ranging)

3.3. Data Collection Method

3.3.1. Drones

3.3.2. Unmanned Ground Vehicle

3.3.3. Mobile Machines

3.3.4. Surveillance Cameras

3.4. Algorithmic Part

| Algorithm | Data Type | Purpose |
|---|---|---|
| Yolo (v1, v2, v3, v4 and v5) [102,103,105,113,117] | RGB Images [102], Thermal Images [103,105,113,117] | Pedestrian detection [102,103,104,105,106,107,108,109,110,111,112,113,114,117,118], fall detection of elderly people [118] |
| Tiny (v3, l3, v2) [103,105,113] | Thermal Images [103,105,113] | |
| Fast R-CNN [104,106] | RGB Images [106], Thermal Images [104,106] | |
| HOG [107,110,113] | RGB Images [110], Thermal Images [107,110,113] | |
| SVM [107,108,109,110,113,118] | RGB Images [110,118], Thermal Images [107,108,109,110,113] | |
| ROI [111,112] | Stereoscopic Images [111,112] | |

| Reference | Algorithm | AP (%) | MAP (%) | fps |
|---|---|---|---|---|
| [102] | Yolov3 | 78 | N/A | 22.2 |
| [102] | VggPrioriBoxes-Yolo | 80.5 | N/A | 81.7 |
| [102] | MNPrioriBoxes-Yolo | 80.5 | N/A | 151.9 |
| [103] | Yolov3 | N/A | 80.5 | |
| [103] | TINYv3 | N/A | 66.3 | |
| [105] | TINYv3 | N/A | 73.26 | 55.57 |
| [105] | TINYL3 | N/A | 80.14 | 43.01 |
| [105] | Yolov3 | N/A | 80.48 | 17.88 |
| [105] | Yolov4 | N/A | 86.05 | 15.97 |
| [105] | ResNet50 | N/A | 81 | 19.82 |
| [105] | ResNet50 | N/A | 77.07 | 17.7 |
| [113] | Tiny Yolov2 with ABMS | 87.12 | N/A | 62.8 |
| [113] | Tiny Yolov2 with BMS | 85.37 | N/A | 62.8 |
| [113] | Tiny Yolov2 without preprocessing | 78.4 | 63.8 | |
| [117] | Yolov5s | 90 | N/A | N/A |
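
The fps column above is easier to relate to an on-board warning system when expressed as per-frame latency (1000 / fps, in milliseconds). The Python sketch below converts a few of the reported figures and filters detectors by a minimum frame rate. The row selection, the attribution of the PrioriBoxes variants to [102] (inferred from row grouping), the function names, and the 30 fps threshold are all illustrative assumptions. The cited studies used different hardware and datasets, so any comparison is indicative only.

```python
# (reference, algorithm, fps) copied from the comparison table.
# Only a subset of rows with a reported fps value is listed here.
RESULTS = [
    ("[102]", "Yolov3", 22.2),
    ("[102]", "VggPrioriBoxes-Yolo", 81.7),
    ("[102]", "MNPrioriBoxes-Yolo", 151.9),
    ("[105]", "TINYv3", 55.57),
    ("[105]", "Yolov4", 15.97),
]


def frame_latency_ms(fps: float) -> float:
    """Convert a frame rate into the time spent on one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_frame_rate(results, min_fps: float):
    """Return (reference, algorithm) pairs whose reported fps is at least min_fps."""
    return [(ref, name) for ref, name, fps in results if fps >= min_fps]


if __name__ == "__main__":
    for ref, name, fps in RESULTS:
        print(f"{ref} {name}: {frame_latency_ms(fps):.1f} ms per frame")
    # Hypothetical requirement of 30 fps, chosen only for illustration.
    print(meets_frame_rate(RESULTS, 30.0))
```

As an example, the 22.2 fps reported for Yolov3 in [102] corresponds to roughly 45 ms per frame.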

| Purpose | Sensors | Algorithms |
|---|---|---|
| Pedestrian Detection | | |
| Depth Estimation | | |

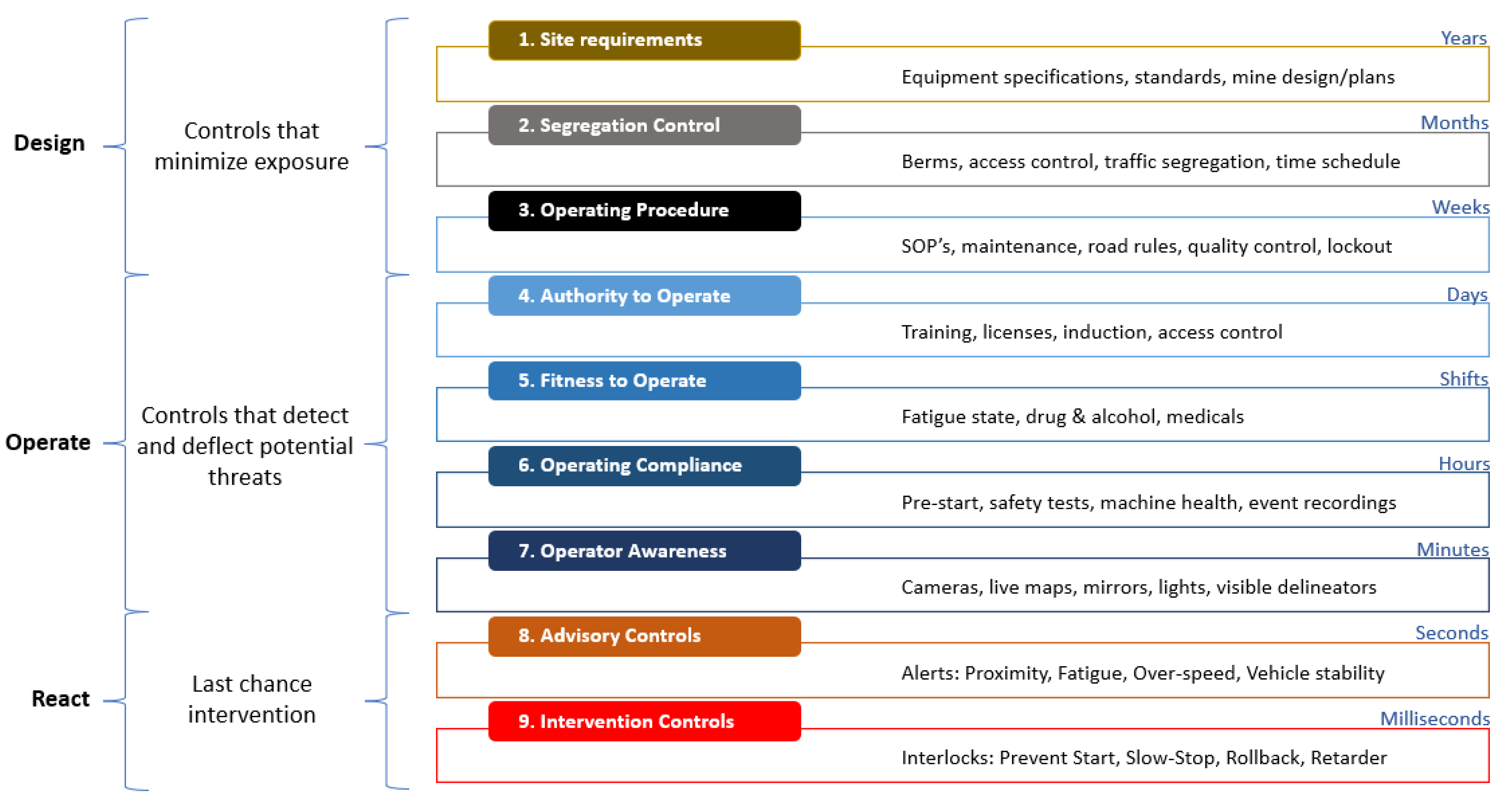

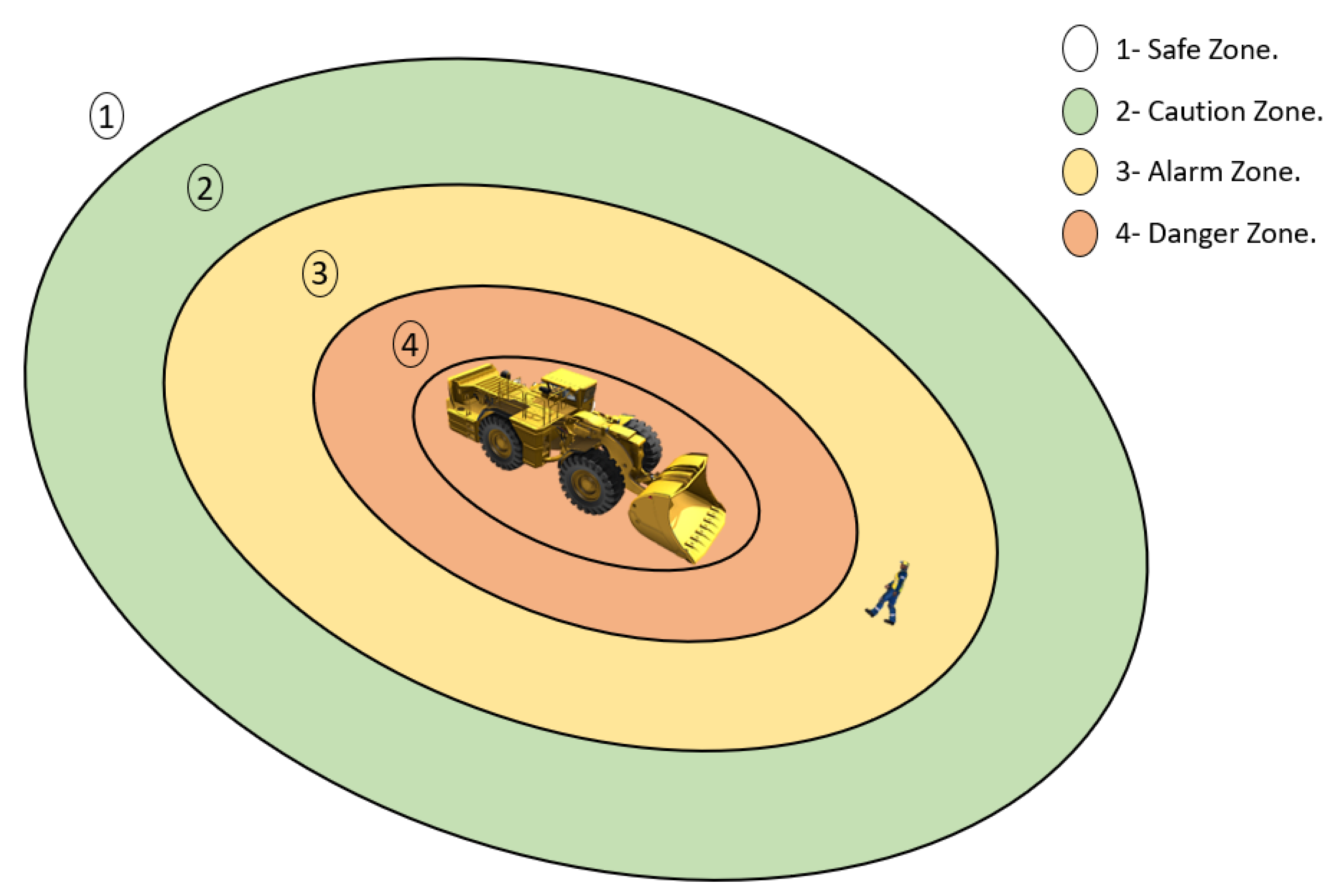

3.5. Industrial Solutions

4. Discussion

4.1. General Discussion

- RGB Cameras + Thermal Cameras + Stereoscopic Cameras;

- Thermal Cameras + Stereoscopic Cameras;

- RGB Cameras + LIDAR Sensor;

- Thermal Cameras + LIDAR Sensor.

- RGB images;

- NIR images;

- LWIR images;

- FIR images.

4.2. Improving Mine Safety Based on Computer Vision

5. Limitations

6. Conclusions

6.1. General Conclusions

6.2. Practical Application

6.3. Current and Future Trend

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MSHA | Mine Safety and Health Administration |
| CPS | Current Population Survey |
| LHD | Load–Haul–Dump |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| GPS | Global Positioning System |
| UWB | Ultra-Wideband Radar |
| RGB | Red Green Blue |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| LIDAR | Light Detection And Ranging |
| RFID | Radio Frequency Identification |
| RADAR | Radio Detection and Ranging |
| IR | Infrared |
| NIR | Near Infrared |
| SWIR | Short Wavelength Infrared |
| MWIR | Medium Wavelength Infrared |
| LWIR | Long Wavelength Infrared |
| FIR | Far Infrared |
| UGV | Unmanned Ground Vehicle |
| UAV | Unmanned Aerial Vehicle |
| YOLO | You Only Look Once |
| HOG | Histogram of Oriented Gradients |
| SVM | Support Vector Machine |
| AP | Average Precision |
| MAP | Mean Average Precision |
| fps | Frames per second |
| CAS | Collision-avoidance systems |
| EMESRT | Earth Moving Equipment Safety Round Table |

References

- Kusi-Sarpong, S.; Bai, C.; Sarkis, J.; Wang, X. Green supply chain practices evaluation in the mining industry using a joint rough sets and fuzzy TOPSIS methodology. Resour. Policy 2015, 46, 86–100.

- Holmberg, K.; Kivikytö-Reponen, P.; Härkisaari, P.; Valtonen, K.; Erdemir, A. Global energy consumption due to friction and wear in the mining industry. Tribol. Int. 2017, 115, 116–139.

- Ranängen, H.; Lindman, Å. A path towards sustainability for the Nordic mining industry. J. Clean. Prod. 2017, 151, 43–52.

- Sanmiquel, L.; Rossell, J.M.; Vintró, C. Study of Spanish mining accidents using data mining techniques. Saf. Sci. 2015, 75, 49–55.

- Permana, H. Risk assessment as a strategy to prevent mine accidents in Indonesian mining. Rev. Min. 2010, 4, 43–50.

- Kizil, M. Virtual Reality Applications in the Australian Minerals Industry; South African Institute of Mining and Metallurgy: Johannesburg, South Africa, 2003; pp. 569–574.

- Kazan, E.; Usmen, M.A. Worker safety and injury severity analysis of earthmoving equipment accidents. J. Saf. Res. 2018, 65, 73–81.

- Squelch, A.P. Virtual reality for mine safety training in South Africa. J. S. Afr. Inst. Min. Metall. 2001, 101, 209–216.

- Kia, K.; Fitch, S.M.; Newsom, S.A.; Kim, J.H. Effect of whole-body vibration exposures on physiological stresses: Mining heavy equipment applications. Appl. Ergon. 2020, 85, 103065.

- Domingues, M.S.Q.; Baptista, A.L.F.; Diogo, M.T. Engineering complex systems applied to risk management in the mining industry. Int. J. Min. Sci. Technol. 2017, 27, 611–616.

- Tichon, J.; Burgess-Limerick, R. A review of virtual reality as a medium for safety related training in mining. J. Health Saf. Res. Pract. 2011, 3, 33–40.

- Lööw, J.; Abrahamsson, L.; Johansson, J. Mining 4.0—the Impact of New Technology from a Work Place Perspective. Mining Metall. Explor. 2019, 36, 701–707.

- Sishi, M.; Telukdarie, A. Implementation of Industry 4.0 technologies in the mining industry—A case study. Int. J. Min. Miner. Eng. 2020, 11, 1–22.

- Sánchez, F.; Hartlieb, P. Innovation in the Mining Industry: Technological Trends and a Case Study of the Challenges of Disruptive Innovation. Mining Met. Explor. 2020, 37, 1385–1399.

- Dong, L.; Sun, D.; Han, G.; Li, X.; Hu, Q.; Shu, L. Velocity-free localization of autonomous driverless vehicles in underground intelligent mines. IEEE Trans. Veh. Technol. 2020, 69, 9292–9303.

- Baïna, K. Society/Industry 5.0—Paradigm Shift Accelerated by COVID-19 Pandemic, beyond Technological Economy and Society. In Proceedings of the BML’21: 2nd International Conference on Big Data, Modelling and Machine Learning, Kenitra, Morocco, 15–16 July 2021.

- Ali, D.; Frimpong, S. Artificial intelligence, machine learning and process automation: Existing knowledge frontier and way forward for mining sector. Artif. Intell. Rev. 2020, 53, 6025–6042.

- Zeshan, H.; Siau, K.; Nah, F. Artificial Intelligence, Machine Learning, and Autonomous Technologies in Mining Industry. J. Database Manag. 2019, 30, 67–79.

- Shahmoradi, J.; Talebi, E.; Roghanchi, P.; Hassanalian, M. A Comprehensive Review of Applications of Drone Technology in the Mining Industry. Drones 2020, 4, 34.

- Patrucco, M.; Pira, E.; Pentimalli, S.; Nebbia, R.; Sorlini, A. Anti-Collision Systems in Tunneling to Improve Effectiveness and Safety in a System-Quality Approach: A Review of the State of the Art. Infrastructures 2021, 6, 42.

- Wang, L.; Li, W.; Zhang, Y.; Wei, C. Pedestrian Detection Based on YOLOv2 with Skip Structure in Underground Coal Mine; IEEE: Piscataway, NJ, USA, 2017.

- Szrek, J.; Zimroz, R.; Wodecki, J.; Michalak, A.; Góralczyk, M.; Worsa-Kozak, M. Application of the Infrared Thermography and Unmanned Ground Vehicle for Rescue Action Support in Underground Mine—The AMICOS Project. Remote Sens. 2021, 13, 69.

- Mansouri, S.S.; Karvelis, P.; Kanellakis, C.; Kominiak, D.; Nikolakopoulos, G. Vision-Based MAV Navigation in Underground Mine Using Convolutional Neural Network; IEEE: Piscataway, NJ, USA, 2019.

- Papachristos, C.; Khattak, S.; Mascarich, F. Autonomous Navigation and Mapping in Underground Mines Using Aerial Robots; IEEE: Piscataway, NJ, USA, 2019.

- Kim, H.; Choi, Y. Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs. Appl. Sci. 2021, 11, 10235.

- Kim, H.; Choi, Y. Comparison of Three Location Estimation Methods of an Autonomous Driving Robot for Underground Mines. Appl. Sci. 2020, 10, 4831.

- Backman, S.; Lindmark, D.; Bodin, K.; Servin, M.; Mörk, J.; Löfgren, L. Continuous Control of an Underground Loader Using Deep Reinforcement Learning. Machines 2021, 9, 216.

- Losch, R.; Grehl, S.; Donner, M.; Buhl, C.; Jung, B. Design of an Autonomous Robot for Mapping, Navigation, and Manipulation in Underground Mines. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Li, X.; Wang, S.; Liu, B.; Chen, W.; Fan, W.; Tian, Z. Improved YOLOv4 network using infrared images for personnel detection in coal mines. J. Electron. Imaging 2022, 31, 013017.

- Wang, W.; Wang, S.; Guo, Y.; Zhao, Y. Obstacle detection method of unmanned electric locomotive in coal mine based on YOLOv3-4L. J. Electron. Imaging 2022, 31, 023032.

- Fengbo, W.; Liu, W.; Wang, S.; Zhang, G. Improved Mine Pedestrian Detection Algorithm Based on YOLOv4-Tiny. In Proceedings of the Third International Symposium on Computer Engineering and Intelligent Communications (ISCEIC 2022), Xi’an, China, 16–18 September 2023.

- Biao, L.; Tian, B.; Qiao, J. Mine Track Obstacle Detection Method Based on Information Fusion. J. Phys. Conf. Ser. 2022, 2229, 012023.

- Zhang, H. Head-mounted display-based intuitive virtual reality training system for the mining industry. Int. J. Min. Sci. Technol. 2017, 27, 717–722.

- Guo, H.; Yu, Y.; Skitmore, M. Visualization technology-based construction safety management: A review. Autom. Constr. 2017, 73, 135–144.

- Melendez Guevara, J.A.; Torres Guerrero, F. Evaluation the role of virtual reality as a safety training tool in the context NOM-026-STPS-2008 BT. In Smart Technology. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Torres Guerrero, F., Lozoya-Santos, J., Gonzalez Mendivil, E., Neira-Tovar, L., Ramírez Flores, P.G., Martin-Gutierrez, J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 213, pp. 3–9.

- Mallett, L.; Unger, R. Virtual reality in mine training. Mining Metall. Explor. 2007, 2, 1–4.

- Laciok, V.; Bernatik, A.; Lesnak, M. Experimental implementation of new technology into the area of teaching occupational safety for Industry 4.0. Int. J. Saf. Secur. Eng. 2020, 10, 403–407.

- Qassimi, S.; Abdelwahed, E.H. Disruptive Innovation in Mining Industry 4.0. In Distributed Sensing and Intelligent Systems. Studies in Distributed Intelligence; Elhoseny, M., Yuan, X., Krit, S., Eds.; Springer: Cham, Switzerland, 2022.

- Groves, W.A.; Kecojevic, V.J.; Komljenovic, D. Analysis of fatalities and injuries involving mining equipment. J. Saf. Res. 2007, 38, 461–470.

- Boniface, R.; Museru, L.; Munthali, V.; Lett, R. Occupational injuries and fatalities in a tanzanite mine: Need to improve workers safety in Tanzania. Pan Afr. Med. J. 2013, 16, 120.

- Amponsah-Tawiah, K.; Jain, A.; Leka, S.; Hollis, D.; Cox, T. Examining psychosocial and physical hazards in the Ghanaian mining industry and their implications for employees’ safety experience. J. Saf. Res. 2013, 45, 75–84.

- Kumar, R.; Ghosh, A.K. The accident analysis of mobile mine machinery in Indian opencast coal mines. Int. J. Inj. Contr. Saf. Promot. 2014, 21, 54–60.

- Mine Safety and Health Administration (MSHA). Available online: https://www.msha.gov/ (accessed on 8 March 2023).

- Ruff, T.; Coleman, P.; Martini, L. Machine-related injuries in the US mining industry and priorities for safety research. Int. J. Inj. Contr. Saf. Promot. 2010, 18, 11–20.

- Kecojevic, V.; Komljenovic, D.; Groves, W.; Radomsky, M. An analysis of equipment-related fatal accidents in U.S. mining operations: 1995–2005. Saf. Sci. 2007, 45, 864–874.

- Mitchell, R.J.; Driscoll, T.R.; Harrison, J.E. Traumatic work-related fatalities involving mining in Australia. Saf. Sci. 1998, 29, 107–123.

- Dhillon, B.S. Mining equipment safety: A review, analysis methods and improvement strategies. Int. J. Mining Reclam. Environ. 2009, 23, 168–179.

- Ural, S.; Demirkol, S. Evaluation of occupational safety and health in surface mines. Saf. Sci. 2008, 46, 1016–1024.

- Stemn, E. Analysis of Injuries in the Ghanaian Mining Industry and Priority Areas for Research. Saf. Health Work. 2019, 10, 151–165.

- Bonsu, J.; Van Dyk, W.; Franzidis, J.P.; Petersen, F.; Isafiade, A. A systemic study of mining accident causality: An analysis of 91 mining accidents from a platinum mine in South Africa. J. S. Afr. Inst. Min. Metall. 2017, 117, 59–66.

- Kecojevic, V.; Radomsky, M. The causes and control of loader- and truck-related fatalities in surface mining operations. Int. J. Inj. Contr. Saf. Promot. 2004, 11, 239–251.

- Md-Nor, Z.; Kecojevic, V.; Komljenovic, D.; Groves, W. Risk assessment for loader- and dozer-related fatal incidents in US mining. Int. J. Inj. Contr. Saf. Promot. 2008, 15, 65–75.

- Dindarloo, S.R.; Pollard, J.P.; Siami-Irdemoos, E. Off-road truck-related accidents in US mines. J. Saf. Res. 2016, 58, 79–87.

- Burgess-Limerick, R. Injuries associated with underground coal mining equipment in Australia. Ergon. Open J. 2011, 4, 62–73.

- Nasarwanji, M.F.; Pollard, J.; Porter, W. An analysis of injuries to front-end loader operators during ingress and egress. Int. J. Ind. Ergon. 2017, 65, 84–92.

- Duarte, J.; Torres Marques, A.; Santos Baptista, J. Occupational Accidents Related to Heavy Machinery: A Systematic Review. Safety 2021, 7, 21.

- Dash, A.K.; Bhattcharjee, R.M.; Paul, P.S.; Tikader, M. Study and analysis of accidents due to wheeled trackless transportation machinery in Indian coal mines: Identification of gap in current investigation system. Procedia Earth Planet. Sci. 2015, 11, 539–547.

- Zhang, M.; Kecojevic, V.; Komljenovic, D. Investigation of haul truck-related fatal accidents in surface mining using fault tree analysis. Saf. Sci. 2014, 65, 106–117.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.; Antes, G.; Atkins, D.; Barbour, V.; Barrowman, N.; Berlin, J.; et al. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6.

- Shamseer, L.; Moher, D.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; Altman, D.G.; Booth, A.; et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: Elaboration and explanation. BMJ 2015, 349, 1–25.

- Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering—EASE’14, London, UK, 12–14 May 2014; ACM: New York, NY, USA, 2014; pp. 1–10.

- Dickens, J.S.; van Wyk, M.A.; Green, J.J. Pedestrian Detection for Underground Mine Vehicles Using Thermal Images; IEEE: Piscataway, NJ, USA, 2011.

- Dickens, J.; Green, J.; Teleka, R.; Sabatta, D. A Global Survey of Systems and Technologies Suitable for Vehicle to Person Collision Avoidance in Underground Rail-Bound Operations; Mine Health and Safety Council: Woodmead, South Africa, 2014.

- BlackFlyS USB 3.0, Flir. Available online: https://www.flir.com/products/blackfly-s-usb3/ (accessed on 8 March 2023).

- Slim Led Light. Available online: https://www.osculati.com/en/ (accessed on 8 March 2023).

- Tau 2, Flir. Available online: https://www.flir.fr/products/tau-2/ (accessed on 8 March 2023).

- Thermal Range. Available online: https://www.ametek-land.fr/pressreleases/blog/2021/june/thermalinfraredrangeblog (accessed on 8 March 2023).

- Dickens, J. Thermal Imagery to Prevent Pedestrian-Vehicle Collisions in Mines; CSIR Centre for Mining Innovation. Automation & Controls, Vector. 2013. Available online: https://www.academia.edu/96375466/Thermal_imagery_to_prevent_pedestrian_vehicle_collisions_in_mines (accessed on 24 April 2023).

- Zed 2i, Stereolabs. Available online: https://www.stereolabs.com/zed-2i/ (accessed on 8 March 2023).

- Lidar Sensor 2d, SICK LMS 111. Available online: https://www.sick.com (accessed on 8 March 2023).

- Lidar Sensor 3d, Velodyne Puck VLP-16. Available online: https://velodynelidar.com (accessed on 8 March 2023).

- Ren, H.; Zhao, Y.; Xiao, W.; Hu, Z. A review of UAV monitoring in mining areas: Current status and future perspectives. Int. J. Coal Sci. Technol. 2019, 6, 320–333.

- Jones, E.; Sofonia, J.; Canales, C.; Hrabar, S.; Kendoul, F. Advances and applications for automated drones in underground mining operations. In Proceedings of the Deep Mining 2019: Ninth International Conference on Deep and High Stress Mining, The Southern African Institute of Mining and Metallurgy, Johannesburg, South Africa, 24–25 June 2019; pp. 323–334.

- Shahmoradi, J.; Mirzaeinia, A.; Roghanchi, P.; Hassanalian, M. Monitoring of Inaccessible Areas in GPS-Denied Underground Mines using a Fully Autonomous Encased Safety Inspection Drone. In Proceedings of the AIAA Scitech 2020 Forum, Orlando, FL, USA, 6–10 January 2020.

- Freire, G.R.; Cota, R.F. Capture of images in inaccessible areas in an underground mine using an unmanned aerial vehicle. In Proceedings of the UMT 2017: Proceedings of the First International Conference on Underground Mining Technology, Australian Centre for Geomechanics, Perth, Australia, 11–13 October 2017.

- Zhai, G.; Zhang, W.; Hu, W.; Ji, A.Z. Coal Mine Rescue Robots Based on Binocular Vision: A Review of the State of the Art. IEEE Access 2020, 8, 130561–130575.

- Green, J.J.; Coetzee, S. Novel Sensors for Underground Robotics; IEEE: Piscataway, NJ, USA, 2012.

- Szrek, J.; Wodecki, J.; Blazej, R.; Zimroz, R. An Inspection Robot for Belt Conveyor Maintenance in Underground Mine—Infrared Thermography for Overheated Idlers Detection. Appl. Sci. 2020, 10, 4984.

- Wei, X.; Zhang, H.; Liu, S.; Lu, Y. Pedestrian Detection in Underground Mines via Parallel Feature Transfer Network; Elsevier Ltd.: Amsterdam, The Netherlands, 2020.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. arXiv 2014, arXiv:1311.2524.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

- Xue, G.; Li, R.; Liu, S.; Wei, J. Research on Underground Coal Mine Map Construction Method Based on LeGO-LOAM Improved Algorithm. Energies 2022, 15, 6256.

- Load Haul Dump, Epiroc. Available online: https://www.epiroc.com/en-za/products/loaders-and-trucks (accessed on 8 March 2023).

- Load Haul Dump, Caterpillar. Available online: https://www.cat.com/en_US/products/new/equipment/underground-hard-rock/underground-mining-load-haul-dump-lhd-loaders.html (accessed on 8 March 2023).

- Zeng, F.; Jacobson, A.; Smith, D.; Boswell, N.; Peynot, T.; Milford, M. Enhancing Underground Visual Place Recognition with Shannon Entropy Saliency. In Proceedings of the Australasian Conference on Robotics and Automation (ACRA 2017), Sydney, Australia, 11–13 December 2017.

- Kazi, N.; Parasar, D. Human Identification Using Thermal Sensing Inside Mines; IEEE Xplore Part Number: CFP21K74-ART; IEEE: Piscataway, NJ, USA, 2021; ISBN 978-0-7381-1327-2.

- Pengfei, X.; Zhou, Z.; Geng, Z. Safety Monitoring Method of Moving Target in Underground Coal Mine Based on Computer Vision Processing. Sci. Rep. 2022, 12, 17899.

- Imam, M.; Baïna, K.; Tabii, Y.; Benzakour, I.; Adlaoui, Y.; Ressami, E.M.; Abdelwahed, E.H. Anti-Collision System for Accident Prevention in Underground Mines using Computer Vision. In Proceedings of the 6th International Conference on Advances in Artificial Intelligence (ICAAI ’22), Birmingham, UK, 21–23 October 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 94–101.

- Yuanjian, J.; Peng, P.; Wang, L.; Wang, J.; Wu, J.; Liu, Y. LIDAR-Based Local Path Planning Method for Reactive Navigation in Underground Mines. Remote Sens. 2023, 15, 309.

- Zhou, X.; Chen, K.; Zhou, Q. Human Tracking by Employing the Scene Information in Underground Coal Mines; IEEE: Piscataway, NJ, USA, 2017.

- Lu, Y.; Huang, Q.X. Object Detection Algorithm in Underground Mine Based on Sparse Representation. Int. J. Adv. Technol. 2018, 9, 210.

- Limei, C.; Jiansheng, Q. A Method for Detecting Miners in Underground Coal Mine Videos; IEEE: Piscataway, NJ, USA, 2009.

- Bai, Z.; Li, Y.; Chen, X.; Yi, T.; Wei, W.; Wozniak, M.; Damasevicius, R. Real-Time Video Stitching for Mine Surveillance Using a Hybrid Image Registration Method. Electronics 2020, 9, 1336.

- Qu, S.; Bao, Z.; Yin, Y.; Yang, X. MineBL: A Battery-Free Localization Scheme with Binocular Camera for Coal Mine. Sensors 2022, 22, 6511.

- Dickens, J.S.; Green, J.J.; van Wyk, M.A. Human Detection For Underground Autonomous Mine Vehicles Using Thermal Imaging. In Proceedings of the 26th International Conference on CAD/CAM, Robotics & Factories of the Future, Kuala Lumpur, Malaysia, 26–28 July 2011.

- Dickens, J.S.; Green, J.J. Segmentation techniques for extracting humans from thermal images. In Proceedings of the 4th Robotics & Mechatronics Conference of South Africa, Pretoria, South Africa, 23–25 November 2011.

- Li, G.; Yang, Y.; Qu, X. Deep Learning Approaches on Pedestrian Detection in Hazy Weather. IEEE Trans. Ind. Electron. 2019, 67, 8889–8899.

- Tumas, P.; Nowosielski, A.; Serackis, A. Pedestrian detection in severe weather conditions. IEEE Access 2020, 8, 62775–62784.

- Xu, Z.; Zhuang, J.; Liu, Q.; Zhou, J.; Peng, S. Benchmarking a large-scale FIR dataset for on-road pedestrian detection. Infrared Phys. Technol. 2019, 96, 199–208.

- Tumas, P.; Serackis, A.; Nowosielski, A. Augmentation of Severe Weather Impact to Far-Infrared Sensor Images to Improve Pedestrian Detection System. Electronics 2021, 10, 934.

- Li, C.; Song, D.; Tong, R.; Tang, M. Illumination-aware faster R-CNN for robust multi-spectral pedestrian detection. Pattern Recognition 2019, 85, 161–171.

- Pawlowski, P.; Dabrowski, A.; Piniarski, K. Pedestrian detection in low resolution night vision images. In 2015 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA); IEEE: Piscataway, NJ, USA, 2015; p. 7365157.

- Kim, D.; Lee, K. Segment-based region of interest generation for pedestrian detection in far-infrared images. Infrared Phys. Technol. 2013, 61, 120–128.

- Xu, F.; Liu, X.; Fujimura, K. Pedestrian detection and tracking with night vision. IEEE Trans. Intell. Transp. Syst. 2005, 6, 63–71.

- Bertozzi, M.; Broggi, A.; Del Rose, M.; Felisa, M.; Rakotomamonjy, A.; Suard, F. A pedestrian detector using histograms of oriented gradients and a support vector machine classifier. In Proceedings of the 2007 IEEE Intelligent Transportation Systems Conference, Seattle, WA, USA, 30 September–3 October 2007; pp. 143–148.

- Bajracharya, M.; Moghaddam, B.; Howard, A.; Matthies, L.H. Detecting personnel around UGVs using stereo vision. In Proceedings of the Unmanned Systems Technology X. International Society for Optics and Photonics, Orlando, FL, USA, 16 April 2008; Volume 6962, p. 696202.

- Bajracharya, M.; Moghaddam, B.; Howard, A.; Brennan, S.; Matthies, L.H. A fast stereo-based system for detecting and tracking pedestrians from a moving vehicle. Int. J. Robot. Res. 2009, 28, 1466–1485.

- Heo, D.; Lee, E.; Ko, B.C. Pedestrian detection at night using deep neural networks and saliency maps. Electron. Imaging 2018, 2018, 060403-1.

- Chen, Y.; Shin, H. Pedestrian detection at night in infrared images using an attention-guided encoder-decoder convolutional neural network. Appl. Sci. 2020, 10, 809.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Available online: https://www.flir.eu/oem/adas/adas-dataset-form/ (accessed on 8 March 2023).

- Rivera Velazquez, J.M.; Khoudour, L.; Saint Pierre, G.; Duthon, P.; Liandrat, S.; Bernardin, F.; Fiss, S.; Ivanov, I.; Peleg, R. Analysis of thermal Imaging Performance under Extreme Foggy Conditions: Applications to Autonomous Driving. J. Imaging 2022, 8, 306.

- Youssfi Alaoui, A.; Tabii, Y.; Oulad Haj Thami, R.; Daoudi, M.; Berretti, S.; Pala, P. Fall Detection of Elderly People Using the Manifold of Positive Semidefinite Matrices. J. Imaging 2021, 7, 109.

- EMESRT Vehicle Interaction. Available online: https://emesrt.org/vehicle-interaction/ (accessed on 8 March 2023).

- The EMESRT 9-Layer Control Effectiveness Model. Available online: https://emesrt.org/stream/emesrt-journey-steps-v2/2-should-we-change/the-emesrt-nine-layer-control-effectiveness-model/ (accessed on 8 March 2023).

- Qian, M.; Zhao, K.; Li, B.; Gong, H.; Seneviratne, A. Survey of Collision Avoidance Systems for Underground Mines: Sensing Protocols. Sensors 2022, 22, 7400.

- Singh, M.; Kumar, M.; Malhotra, J. Energy efficient cognitive body area network (CBAN) using lookup table and energy harvesting. J. Intell. Fuzzy Syst. 2018, 35, 1253–1265.

- Zhang, F.; Tian, J.; Wang, J.; Liu, G.; Liu, Y. ECViST: Mine Intelligent Monitoring Based on Edge Computing and Vision Swin Transformer-YOLOv5. Energies 2022, 15, 9015.

- Bing, Z.; Wang, X.; Dong, Z.; Dong, L.; He, T. A novel edge computing architecture for intelligent coal mining system. Wireless Netw. 2022, 1–10.

- Sakshi, G.; Snigdh, I. Multi-Sensor Fusion in Autonomous Heavy Vehicles. In Autonomous and Connected Heavy Vehicle Technology; Elsevier: Amsterdam, The Netherlands, 2022; pp. 375–389.

- Russell, B.; Dellaert, F.; Camurri, M.; Fallon, M. Learning Inertial Odometry for Dynamic Legged Robot State Estimation. In Proceedings of the 5th Conference on Robot Learning, London, UK, 8–11 January 2022.

- Fang, J.; Fan, C.; Wang, F.; Bai, D. Augmented Reality Platform for the Unmanned Mining Process in Underground Mines. Mining Metall. Explor. 2022, 39, 385–395.

| Country | Period | Fatal Accidents (%) | Causes |
|---|---|---|---|
| USA | 1995–2005 | 31.6% | Haulage equipment |
| Australia | 1982–1984 | 29% | Vehicles |
| United Kingdom | 1983–1993 | 41% | Haulage equipment |
| Turkey | 2004 | 16% | Haulage equipment |
| Ghana | 2008–2017 | 48.3% | Mobile equipment |

| Type of accident | Dumper | Load Haul Dump (LHD) |
|---|---|---|
| Collision with pedestrian | X | |
| Collision with machine | X | X |
| (Front/Reversal) Run over | X | |
| Fall from machine | X | |
| Rollover | | |
| Caught between | X | |
| Country | India | Australia |

| Type of Accident | Frequency of Accidents |

|---|---|

| Reversal run over | 51 |

| Front run over | 68 |

| Lost control | 30 |

| Collision | 51 |

| Other | 46 |

| Type of Accident | Frequency of Accidents |

|---|---|

| Caught between | 45 |

| Collision with machine | 87 |

| Fall from machine | 27 |
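The raw counts in the two frequency tables above are easier to compare when expressed as shares of each table's total. The following is a minimal sketch of that arithmetic; the counts are copied from the tables, while the dictionary and function names are illustrative and do not come from the paper.

```python
# Counts copied from the two frequency tables above.
# Names (first_table, second_table, shares) are hypothetical.
first_table = {
    "Reversal run over": 51,
    "Front run over": 68,
    "Lost control": 30,
    "Collision": 51,
    "Other": 46,
}
second_table = {
    "Caught between": 45,
    "Collision with machine": 87,
    "Fall from machine": 27,
}


def shares(counts):
    """Return each category's percentage of the table total, rounded to 0.1."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


for table in (first_table, second_table):
    print(f"total = {sum(table.values())}")
    for name, pct in shares(table).items():
        print(f"  {name}: {pct}%")
```

Under this reading, front run-overs account for roughly 27.6% of the 246 accidents in the first table, and collisions with machines for roughly 54.7% of the 159 accidents in the second.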

| Date | In the initial phase, only papers published between 2000 and 2022 were taken into consideration. The year 2000 was selected after a sensitivity analysis of the number of publications identified using the designated keywords. |

| Paper type | We limited our consideration to research papers. |

| Language | Our evaluation was restricted exclusively to papers written in English. |

| Algorithm | Data Type | Purpose |

|---|---|---|

| YOLO (v2, v3, v4, and v5) [21,22,29,30,31,32] | RGB Images [21,22,30,31,32], Thermal Images [22,29] | Pedestrian detection [21,22,29,30,31,32], detection of electric locomotives and falling stones [30] |

| HOG [22] | RGB Images [22], Thermal Images [22] | Pedestrian detection [22] |

| SVM [90,100] | RGB Images [90,100], Thermal Images [100] | Enhancing underground visual place recognition [90], pedestrian segmentation [100] |

| Image Segmentation and Thresholding [78,100,101] | RGB Images [78], Thermal Images [78,100,101] | Overhead boulder detection [78], pedestrian detection [100,101] |

| Navigation and Mapping [23,24,25,26,27,28] | RGB Images [23,25,27], Stereoscopic Images [24,28], Thermal Images [24], Images from LIDAR [23,24,25,26,27,28] | Anti-collision [23], exploration path planning solutions [24], road sign recognition [25], location estimation method [26], trajectory controller [27] |

| Reference | Algorithm | AP (%) | mAP (%) | fps |

|---|---|---|---|---|

| [21] | YOLOv2 | N/A | 54 | 43 |

| | YWSSv1 | N/A | 54.4 | 43 |

| | YWSS2 | N/A | 66.3 | 5 |

| [29] | YOLOv4 | 95.47 | N/A | 44.3 |

| | SPAD | 91.63 | N/A | 35.9 |

| | Faster-RCNN | 88.2 | N/A | 25.1 |

| | SSD | 86.2 | N/A | 38.7 |

| | Improved YOLOv4 | 69.25 | N/A | 48.2 |

| [30] | A: Darknet53 YOLOv3 | 93.1 | N/A | 31 |

| | B: Model A + a fourth feature scale | 96.1 | N/A | 26 |

| | C: Model B + Darknet-37 | 97.5 | N/A | 38 |

| | D: Model C + DIoU + Focal | 98.2 | N/A | 38 |
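The fps column in the table above can also be read as a per-frame processing time, which is often the more convenient figure when reasoning about how quickly a warning can be raised. The following is a minimal sketch of that conversion for a few of the reported rows; the fps values are copied from the table, while the list and function names are illustrative and the choice of rows is arbitrary.

```python
# (algorithm, frames per second) pairs copied from the table above.
# Names (reported_fps, frame_time_ms) are hypothetical.
reported_fps = [
    ("YOLOv2", 43),
    ("YWSS2", 5),
    ("YOLOv4", 44.3),
    ("Faster-RCNN", 25.1),
]


def frame_time_ms(fps):
    """Convert a throughput in frames per second to milliseconds per frame."""
    return 1000.0 / fps


for name, fps in reported_fps:
    print(f"{name}: {fps} fps -> {frame_time_ms(fps):.1f} ms per frame")
```

For instance, 43 fps corresponds to about 23.3 ms per frame, whereas 5 fps corresponds to 200 ms per frame. These figures describe the detector alone as reported in the cited works and say nothing about camera, transmission, or alerting delays.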

| Company | Product | Type of Solution | Environment | Technology | Comments | Link |

|---|---|---|---|---|---|---|

| DotNetix | SAFEYE | Object detection (signs, pedestrians, mobile machines) | Surface and underground | Stereoscopic cameras, display warning system, multicamera controller (mini PC) | DotNetix has created a 3D camera capable of determining the distance to pedestrians (up to 25 m) and machines (up to 50 m). | https://www.dotnetix.co.za/safeye (accessed on 8 March 2023) |

| | EDGEYE | Void and berm detection | | | EDGEYE provides a berm and void detection system for industrial machines using a 3D camera and machine learning. | https://www.dotnetix.co.za/edgeye (accessed on 8 March 2023) |

| Blaxtair | Blaxtair | Prevents collisions between industrial machinery and pedestrians | Surface and underground | Stereoscopic camera | When a pedestrian is in danger, the Blaxtair Origin gives the driver a visual and audible warning, using AI algorithms supported by a large, distinctive learning database. | https://blaxtair.com/en/products/blaxtair-pedestrian-obstacle-detection-camera (accessed on 8 March 2023) |

| | Blaxtair Origin | Pedestrian detection | | Monoscopic camera | | https://blaxtair.com/en/products/blaxtair-origin-pedestrian-detection-camera (accessed on 8 March 2023) |

| | Blaxtair Omega | Prevents collisions between industrial machinery and pedestrians | | Robust stereoscopic camera (−40 °C to +75 °C) | The Omega 3D industrial camera is a robust tool that computes the disparity map and gives the user access to metadata. | https://blaxtair.com/en/products/omega-smart-3d-vision-for-robust-automation (accessed on 8 March 2023) |

| AXORA | Radar and video anti-collision system | Collision avoidance | Surface and underground | LIDAR | AXORA provides a LIDAR system that helps vehicles avoid collision in mines using rebounding laser beams to alert operators about obstacles. Additionally, this solution offers a 3D environment map that can be utilized to enhance visibility and exert control over the stability of the ceiling. | https://www.axora.com/marketplace/radar-and-video-anti-collision-system/ (accessed on 8 March 2023) |

| Sick | Over height and narrowness detection in hard rock shafts | Collision-avoidance system | Underground | 2D LIDAR | Compact 2D LIDAR sensors are used to avoid collisions with low roofs and in cramped locations. | https://www.sick.com/cn/en/industries/mining/underground-mining/vehicles-for-mining/over-height-and-narrowness-detection-in-hardrock-shafts/c/p661478 (accessed on 8 March 2023) |

| | Identification of mine vehicles in areas with poor visibility | | | LIDAR | The sensors detect when vehicles are dangerously close, even if dust concentrations are high. They also detect whether a vehicle is moving or stationary. They pass the information on to a traffic control system, which warns incoming traffic with a traffic light system if necessary. | https://www.sick.com/cn/en/industries/mining/underground-mining/vehicles-for-mining/identification-of-mine-vehicles-in-areas-with-poor-visibility/c/p674800 (accessed on 8 March 2023) |

| Torsa | Collision avoidance for shovels | Collision avoidance | Surface and underground | LIDAR 3D | This system is based on LIDAR 3D technology and analyzes the interaction between vehicles and the shovel itself with 0.01 cm of precision, guaranteeing safety in the loading operation by informing the operator of the machinery about the type, position, and distance of the different vehicles and obstacles around the shovel. | https://torsaglobal.com/en/solution/collision-avoidance-shovels/ (accessed on 8 March 2023) |

| | Collision-avoidance system for haul trucks, auxiliary, and light vehicles | | | | This CAS for trucks and auxiliary vehicles includes LIDAR 3D technology, which is able to analyse its environment with a very high level of precision and definition. The system is designed to protect the vehicle operator at all times, proactively and predictively assessing potential risk situations and alerting the operator to them. | https://torsaglobal.com/en/solution/collision-avoidance-mining/ (accessed on 8 March 2023) |

| | Collision avoidance for drillers and rigs | | | | The system has been conceived to identify any object (mining equipment, rocks, personnel, etc.) that could cause a hazardous situation in the driller’s operating area, and it has a powerful communications interface that allows its safety to be monitored remotely while the rig is operated remotely and autonomously. | https://torsaglobal.com/en/solution/collision-avoidance-drillers/ (accessed on 8 March 2023) |

| | Collision-avoidance system for underground mining operations | | | Time of Flight (TOF) | This CAS can act on the equipment (Level 9) in underground mining operations to prevent run-overs or collisions. Its primary targets are scoops, auxiliary and light vehicles, and operational staff. | https://torsaglobal.com/en/solution/collision-avoidance-underground/ (accessed on 8 March 2023) |

| Wabtec | Collision-management system | Collision-avoidance system | Surface | Camera, RFID, GPS | Wabtec’s Collision Awareness solution is a reporting system developed specifically for the mining industry. In order to reduce the risk of collisions between actors and components of the mine, it gives 360-degree situational awareness of things close to a heavy vehicle throughout stationary, slow-speed, and high-speed operations. | https://www.wabteccorp.com/mining/digital-mine/environmental-health-safety-ehs (accessed on 8 March 2023) |

| Waytronic Security | Collision avoidance | Collision-avoidance system | Manufacturing | Ultrasonic sensors, camera | Pedestrian and forklift collision avoidance | http://www.wt-safe.com/factorycoll_1.html?device=c&kyw=proximity%20detection%20system (accessed on 8 March 2023) |

| LSM technologies | RadarEye | Collision-avoidance system | Mining | Radar, camera | Radar sensor with a detection range of 2–20 m and a virtually 360-degree view | https://www.lsm.com.au/item.cfm?category_id=2869&site_id=3 (accessed on 8 March 2023) |

| Matrix Design Group | IntelliZone | Collision-avoidance system | Underground, coal mine | Magnetic field with optional Lidar/Radar/camera integration | Machine-specific straight-line and angled zones | https://www.matrixteam.com/wp-content/uploads/2018/08/IntelliZone-8_18.pdf (accessed on 8 March 2023) |

| Preco Electronics | PreView | Collision-avoidance system | Surface and underground mine | Radar, camera | Various product lines | https://preco.com/product-manuals/ (accessed on 8 March 2023) |

| Caterpillar | MineStar Detect | Collision-avoidance system | Surface and underground mine | Camera, radar, GNSS | | https://www.westrac.com.au/en/technology/minestar/minestar-detect (accessed on 8 March 2023) |

| GE Mining | CAS | Collision-avoidance system | Surface and underground mine | Surface—GPS tracking, RF unit and camera; underground—VLF magnetic and WiFi | Real-time data, data communication network | https://www.ge.com/digital/sites/default/files/downloadassets/GE-Digital-Mine-Collision-Avoidance-System-datasheet.pdf (accessed on 8 March 2023) |

| Jannatec | SmartView | Collision-avoidance system | Mining | Multi-camera, WiFi Bluetooth (for communication) | Text voice and video communication | https://www.jannatec.com/ensosmartview (accessed on 8 March 2023) |

| Schauenburg Systems | SCAS surface PDS | Collision-avoidance system | Surface mine | GPS, GSM, RFID, camera | Uses time of flight with an accuracy of <1 m | http://schauenburg.co.za/product/scas-surface-proximity-detection-system/ (accessed on 8 March 2023) |

| | SCAS underground PDS | | Underground mine | Cameras | Tagless, artificial intelligence | http://schauenburg.co.za/mimacs/ (accessed on 8 March 2023) |

| Joy Global, P&H | HawkEye camera system | Collision-avoidance system | Mining | Fisheye cameras with infrared filters | Digital video recorder (DVR)—100 to 200 h video | https://mining.komatsu/en-au/technology/proximity-detection/hawkeye-camera-system (accessed on 8 March 2023) |

| Intec Video Systems | Car Vision | Collision-avoidance system | Industrial | Camera | Vehicle safety monitoring cameras | http://www.intecvideo.com/products.html (accessed on 8 March 2023) |

| | PreView | | Industrial | Radar, camera | Low power 5.8 GHz radar signal | |

| Ifm Efector | O3M 3D Smart Sensor | Collision-avoidance system | Outdoor | Optical technology | PMD-based 3D imaging information | http://eval.ifm-electronic.com/ifmza/web/mobile-3d-app-02-Kollisionsvorhersage.htm (accessed on 8 March 2023) |

| Motion Metrics | ShovelMetrics | Collision-avoidance system | Mining and construction | Radar, thermal imaging | Interfaces with a centralised data analysis platform | https://www.motionmetrics.com/shovel-metrics/ (accessed on 8 March 2023) |

| 3D Laser Mapping | SiteMonitor | Collision-avoidance system | Mining | Laser sensor | Accuracy of 10 mm at ranges of up to 6000 m | https://www.mining-technology.com/contractors/exploration/3d-laser-mapping/ (accessed on 8 March 2023) |

| Hitachi Mining | SkyAngle | Collision-avoidance system | Mining | Camera | Bird’s-eye view | https://www.mining.com/web/hitachi-introduces-skyangle-advanced-peripheral-vision-support-system-at-minexpo-international/ (accessed on 8 March 2023) |

| Guardvant | ProxGuard CAS | Collision-avoidance system | Mining | Radar, camera, and GPS | Light vehicles and heavy equipment | https://www.mining-technology.com/contractors/health-and-safety/guardvant/pressreleases/pressguardvant-proxguard-collision-avoidance/ (accessed on 8 March 2023) |

| Safety Vision | Vision system | Collision-avoidance system | Diverse range of uses | Camera | | http://www.safetyvision.com/products (accessed on 8 March 2023) |

| ECCO | Vision system | Collision-avoidance system | Diverse range of uses | Camera | | https://www.eccoesg.com/us/en/products/camera-systems (accessed on 8 March 2023) |

| Flir | Vision system | Collision-avoidance system | Wide range of applications | Thermal camera | | https://www.flir.com.au/applications/camera-cores-components/ (accessed on 8 March 2023) |

| Nautitech | Vision system | Collision-avoidance system | Harsh environment | Thermal camera | Harsh environment | https://nautitech.com.au/wp-content/uploads/2019/05/Nautitech-Camera-Brochure-2019.pdf (accessed on 8 March 2023) |

| Company | Product | Type of Solution | Environment | Technology | Comments | Link |

|---|---|---|---|---|---|---|

| DotNetix | NEXUS | Monitoring operator fatigue | Surface and underground | | Using advanced sensors and algorithms, this system monitors the operator and determines from facial features whether the operator is distracted or tired. | https://www.dotnetix.co.za/fatigue-monitoring (accessed on 8 March 2023) |

| Torsa | Human vibration exposure monitoring | Miner safety | Surface and underground | Vibration sensor | TORSA’s vibration monitoring system measures and evaluates the vibrations to which vehicle operators in the mining operation are exposed, in order to reduce the number of injuries that could result from their daily activity. | https://torsaglobal.com/en/solution/human-vibration-exposure-system/ (accessed on 8 March 2023) |

| Mining3 | SmartCap | Monitoring operator fatigue | | | SmartCap is a wearable device that monitors the tiredness of drivers and heavy vehicle operators. The life app gives operators the ability to control their own level of awareness. Through the user-friendly in-cab life display, the app gives the driver immediate visual and aural alerts. | https://www.mining3.com/solutions/smartcap/ (accessed on 8 March 2023) |

| Hexagon | MineProtect operator alertness system | Fatigue and distraction management solution | Surface and underground | | An integrated fatigue and distraction management solution, the HxGN MineProtect operator alertness system helps operators of heavy and light vehicles maintain the level of attention necessary for long hours and monotonous tasks. | https://hexagon.com/products/hxgn-mineprotect-operator-alertness-system (accessed on 8 March 2023) |

| Caterpillar | Cat Detect | Fatigue and distraction management | Surface and underground | | Any operation is susceptible to distraction and exhaustion, especially if the activities are monotonous. Cat Detect provides fatigue and distraction management, a solution that helps operators create a culture where safety comes first and provides peace of mind. | https://www.westrac.com.au/technology/minestar/minestar-detect (accessed on 8 March 2023) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Imam, M.; Baïna, K.; Tabii, Y.; Ressami, E.M.; Adlaoui, Y.; Benzakour, I.; Abdelwahed, E.h. The Future of Mine Safety: A Comprehensive Review of Anti-Collision Systems Based on Computer Vision in Underground Mines. Sensors 2023, 23, 4294. https://doi.org/10.3390/s23094294
