A Review on the State of the Art in Copter Drones and Flight Control Systems

Abstract

1. Introduction

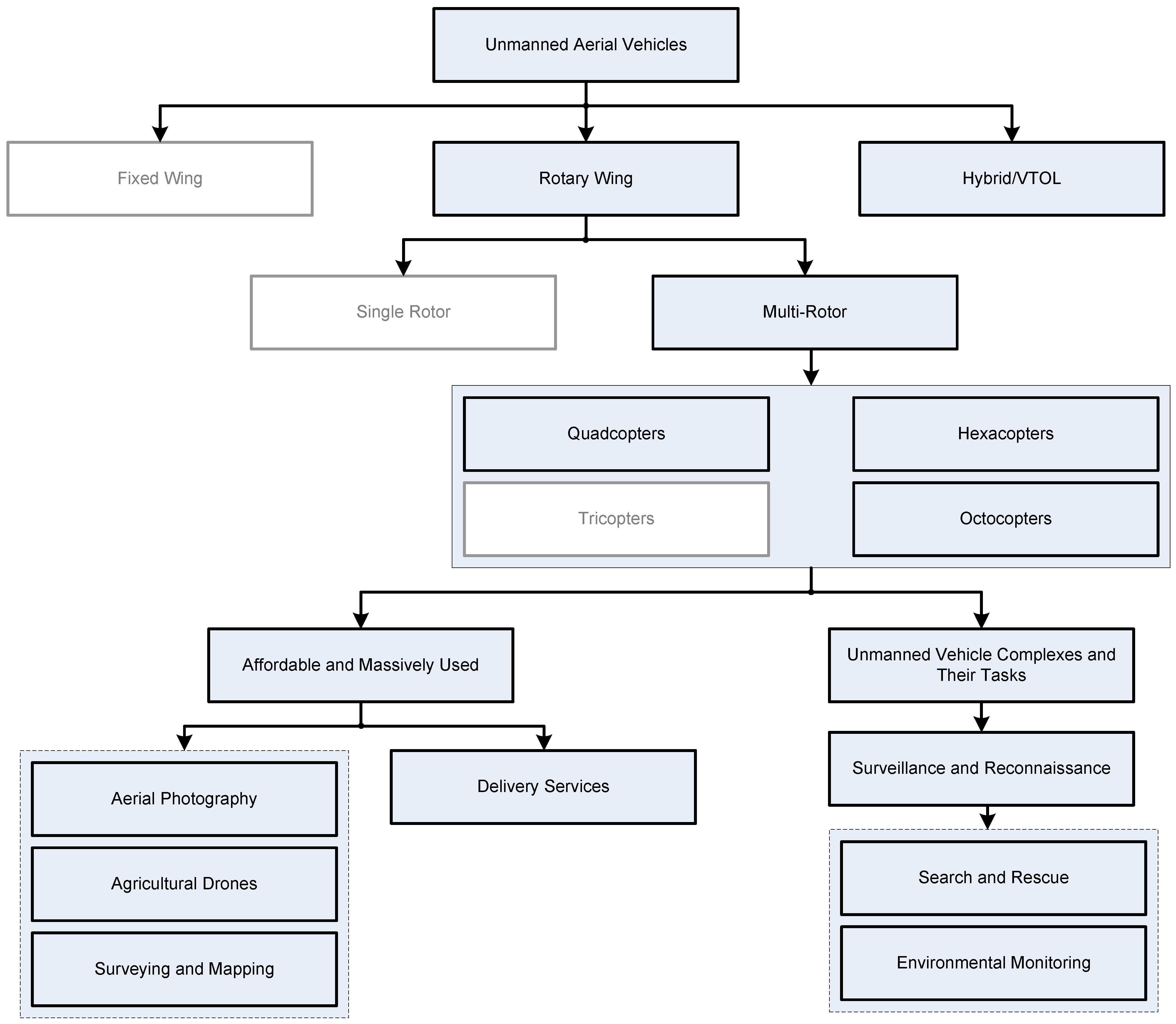

2. Types of Unmanned Aerial Vehicles

2.1. Classifications of Unmanned Aerial Vehicles

- Scope of tasks (purpose);

- Power system (drive type);

- Aircraft type (design);

- Flight duration;

- Control system type;

- Mass;

- Wing type;

- Flight height;

- Base type;

- Type of fuel tank;

- Radius of action;

- Maximum flight speed;

- The number of engines;

- Take-off/landing type;

- The time of receiving the collected information.

- Commercial UAVs used for profit, in particular, in agriculture, video recording, geological research, etc.;

- Military UAVs designed for military operations, reconnaissance, support, communication tasks, etc.;

- Civilian UAVs used for civilian purposes, such as search and rescue, environmental monitoring, scientific research, etc.

- Electric UAVs: powered by an onboard electric power source;

- Hybrid UAVs: combine electric power with a fuel-burning engine;

- Fuel UAVs: driven by an internal combustion engine.

- (1) Aircraft (fixed-wing), including the following:

- Monoplanes—one-wing construction;

- Biplanes—use two wings—upper and lower;

- Triplanes—use three wings located one above the other;

- Wings—delta-shaped construction.

- (2) Multirotor UAVs, which include the following:

- Quadcopters, four rotors;

- Hexacopters, six rotors;

- Octocopters, eight rotors.

- (3) Tailsitters: a combination of fixed wings and multirotors that uses the advantages of both designs.

- (4) VTOL (vertical take-off and landing): UAVs that can take off and land vertically and then operate in horizontal flight mode.

- (5) Balloons and airships: lighter-than-air vehicles that stay aloft through buoyancy and may carry a gas cylinder for lifting.

- Short-endurance: flight duration of up to one hour;

- Medium-endurance: flight duration from one to several hours;

- Long-endurance: flight duration of more than several hours (up to several dozen hours).

- Flight mass;

- Flight duration;

- Flight endurance;

- Flight altitude;

- Areas of use.

2.2. Multicopters

2.3. UAV Complex

- Mission planning;

- Setting flight parameters;

- Data monitoring from sensors and cameras;

- Decision making in accordance with the received data.

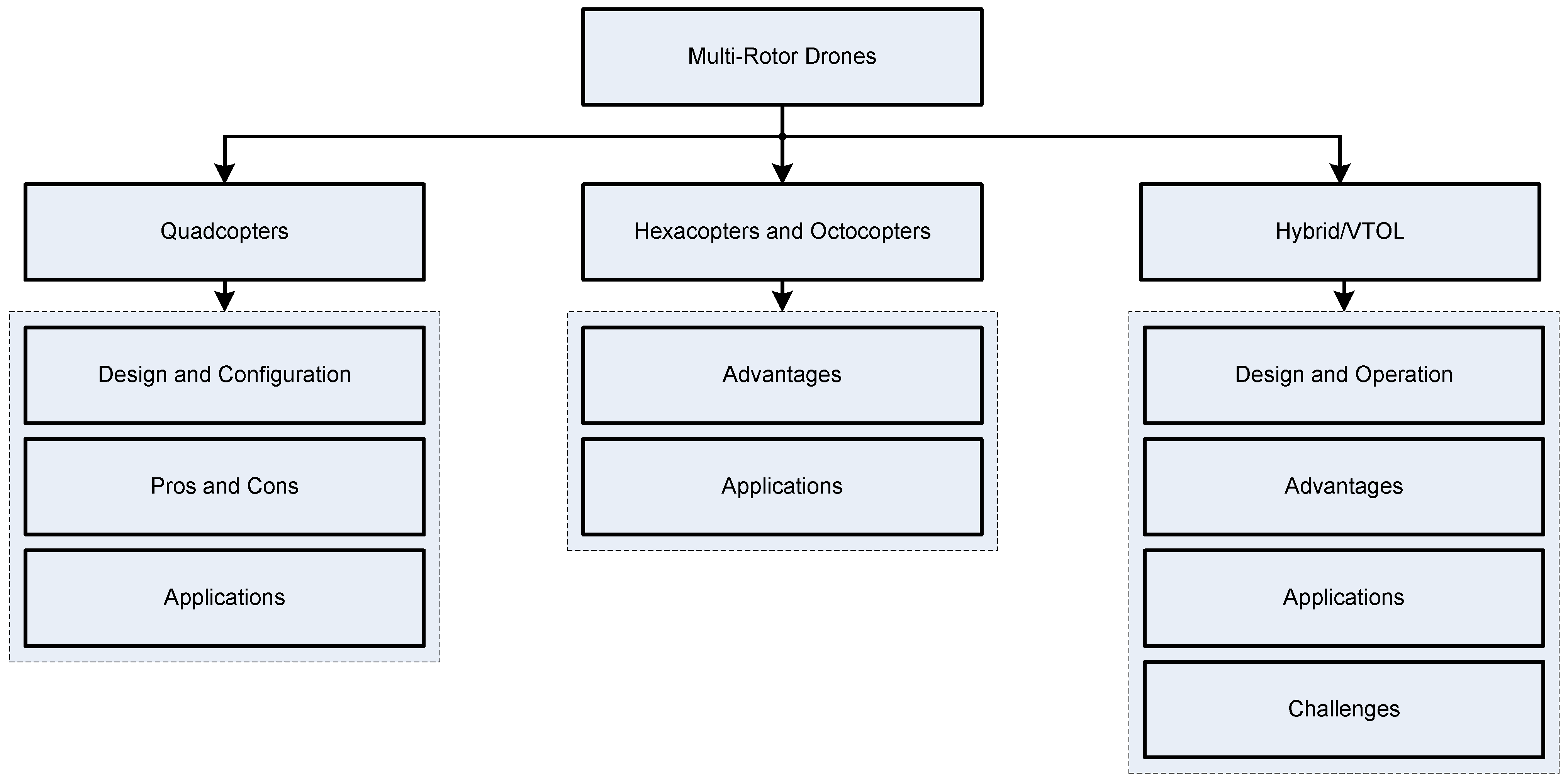

3. Types of Multirotor Drones

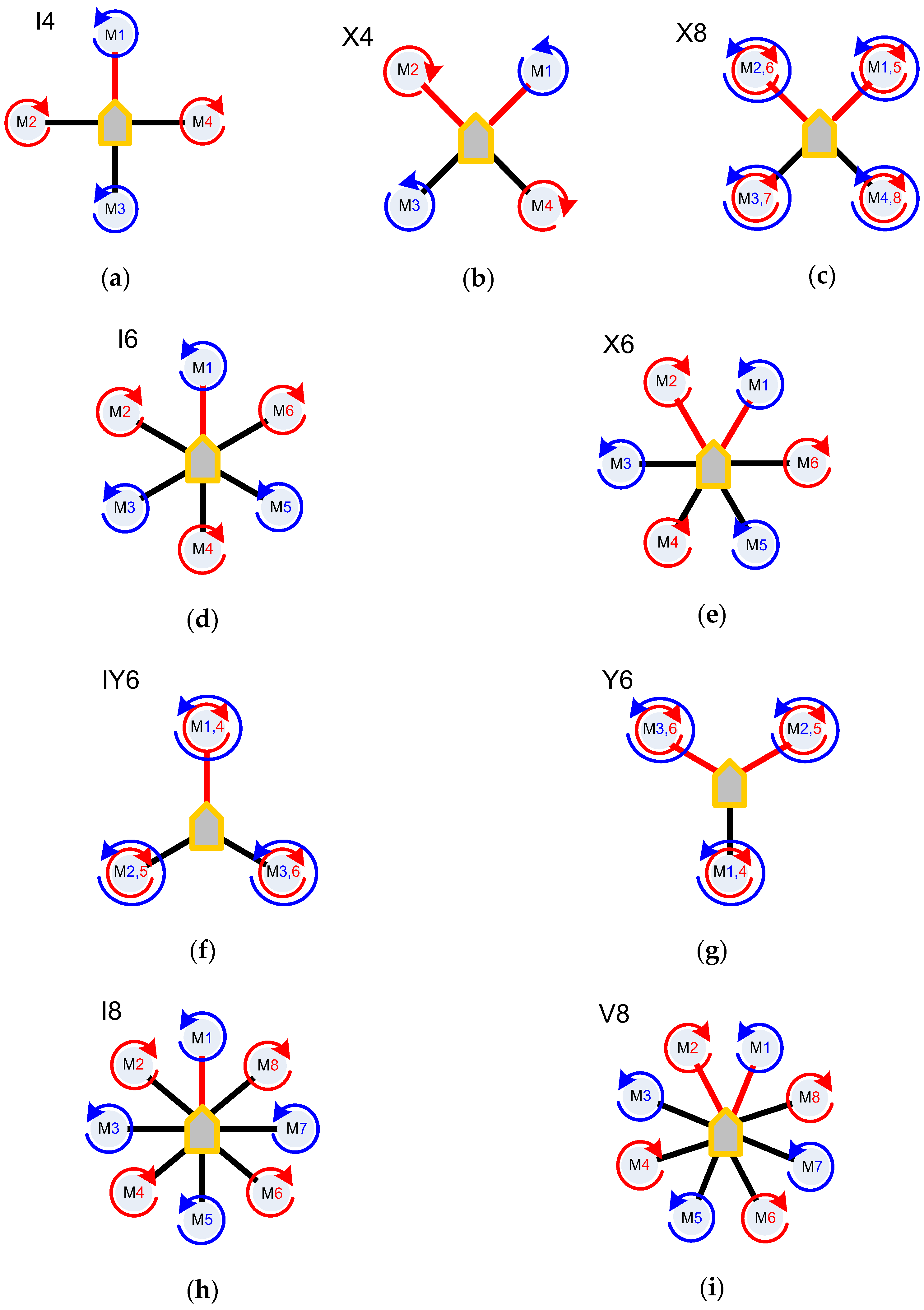

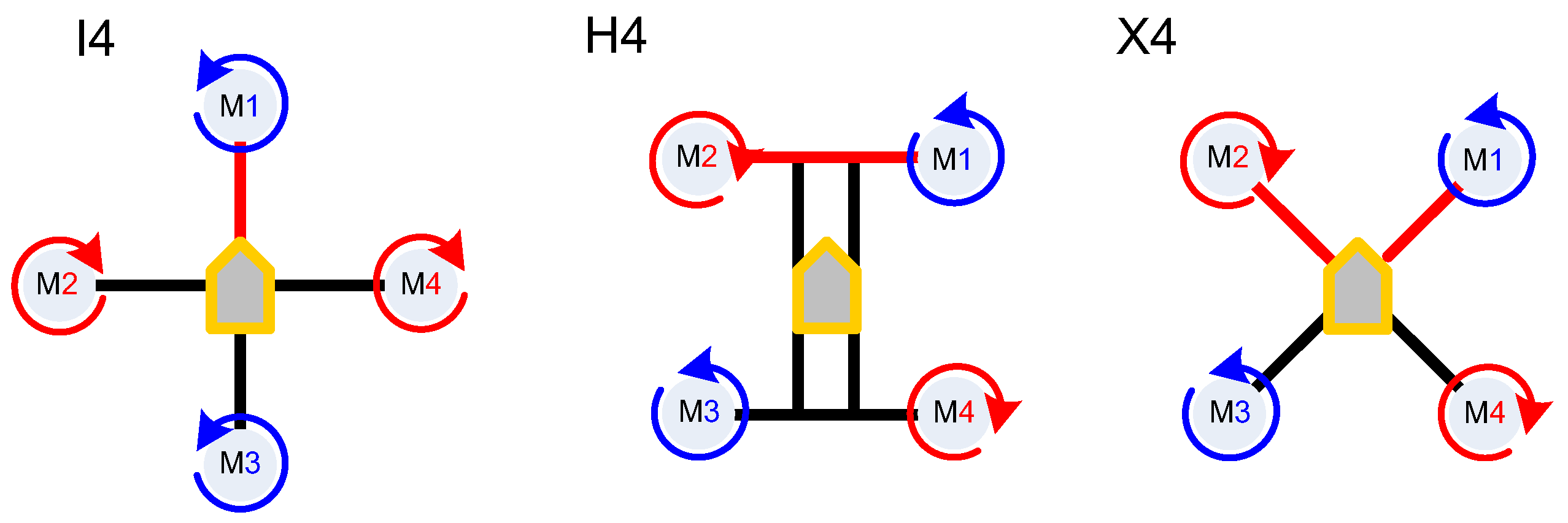

3.1. Quadcopters

3.2. Hexacopters and Octocopters

3.3. Fixed-Wing VTOL Drones

3.4. Comparison of Reviewed Drone Types

4. Applications of Copter Drones

4.1. Aerial Photography and Videography

4.2. Surveillance and Security

4.3. Agriculture

4.4. Search and Rescue

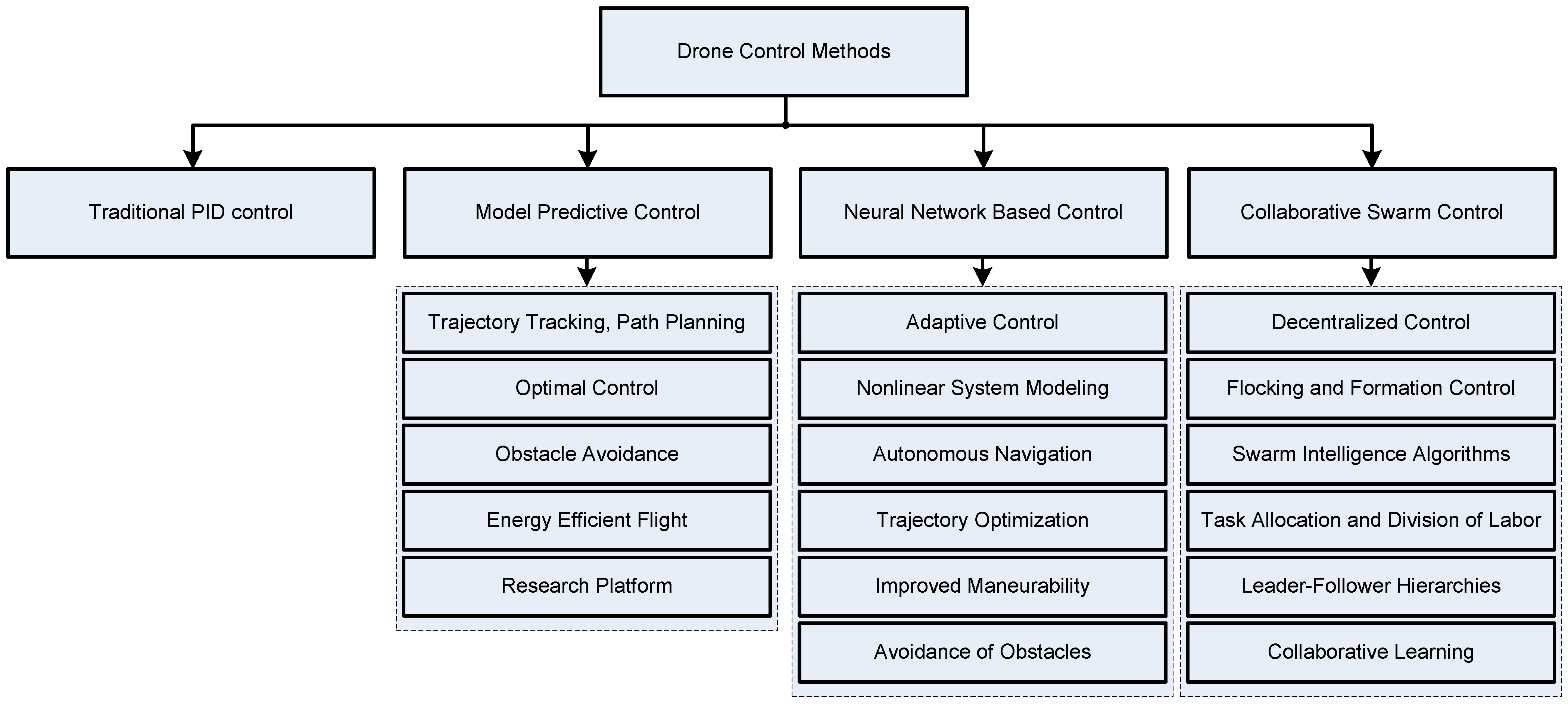

5. Drone Control Methods and Systems

5.1. Typical Set of UAV Electronic Components

- Flight controller;

- Battery (accumulator);

- Brushless motors and ESCs (electronic speed controllers);

- Radio transmitters;

- Location and navigation sensors: GPS, air speed, and others;

- Video system (analog or digital): transmitter, receiver, camera, antenna.

- Stabilization of the device in the air using sensors such as a gyroscope, accelerometer, compass (they are usually located on the flight controller board);

- Altitude maintenance using a barometric altimeter (the barometer is usually built into the flight controller) or using a GPS sensor;

- Airspeed measurement using a differential pressure sensor (Pitot tube), or ground-speed measurement using a GPS sensor;

- Automatic flight to predetermined points (mission planner);

- Transmission of current flight parameters to the control panel;

- Ensuring flight safety (return to the take-off point in case of signal loss, automatic landing, automatic take-off);

- Stopping in front of an obstacle (for multicopters) or flying around obstacles (for airplanes) if sensors are available;

- Connection of additional peripherals: OSD (on-screen display), servo drives, LED indication, relay, and others.
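Two of the measurements in the list above reduce to short formulas. The sketch below is offered under stated assumptions, not as any flight controller's actual code. It converts a barometer reading to altitude with the international barometric formula and a Pitot differential pressure to airspeed with Bernoulli's relation. The function names, the ISA sea-level constants, and the incompressible-flow simplification are assumptions made for illustration.

```python
import math

# Assumed reference values (International Standard Atmosphere at sea level).
SEA_LEVEL_PRESSURE_PA = 101325.0
AIR_DENSITY_KG_M3 = 1.225


def barometric_altitude_m(pressure_pa, reference_pa=SEA_LEVEL_PRESSURE_PA):
    """Altitude above the reference pressure level (troposphere only).

    Uses the international barometric formula:
    h = 44330 * (1 - (p / p0) ** (1 / 5.255)).
    """
    return 44330.0 * (1.0 - (pressure_pa / reference_pa) ** (1.0 / 5.255))


def pitot_airspeed_m_s(differential_pa, air_density=AIR_DENSITY_KG_M3):
    """Airspeed from Pitot differential pressure, assuming incompressible flow.

    Bernoulli: dp = 0.5 * rho * v**2, so v = sqrt(2 * dp / rho).
    Negative readings (sensor noise near zero) are clamped to zero.
    """
    return math.sqrt(2.0 * max(differential_pa, 0.0) / air_density)


if __name__ == "__main__":
    print(f"altitude: {barometric_altitude_m(89874.6):.1f} m")
    print(f"airspeed: {pitot_airspeed_m_s(61.25):.1f} m/s")
```

In practice the reference pressure is usually captured at arming time, so the result is height above the take-off point rather than above sea level.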

- UART (RX/TX)—universal asynchronous receiver–transmitter;

- USART (RX/TX)—universal synchronous–asynchronous receiver–transmitter;

- I2C (DA/CL or SDA/SCL)—inter-integrated circuit;

- SBUS: Futaba serial bus protocol that carries RC receiver channel data over a single wire;

- CANBUS (RX/TX)—controller area network bus;

- VTX—video transmitter.

5.2. PID Controllers

5.3. Model Predictive Control (MPC)

5.4. Neural-Network-Based Control

5.5. Collaborative Swarm Control Strategies

- Decentralized control, assuming distributed decision-making authority among individual drones, allowing them to make local decisions based on local information while coordinating with neighboring drones [175]. Each drone operates autonomously, reacting to its environment and communicating with nearby drones to achieve collective objectives without central coordination;

- Flocking and formation control, aiming to maintain desired spatial arrangements among drones, such as maintaining a formation shape or flying in a coordinated flock [176]. Drones adjust their positions and velocities based on local interactions with neighboring drones, following simple rules inspired by natural flocking behaviors observed in birds and insects;

- Swarm intelligence algorithms, such as ant colony optimization, particle swarm optimization, and artificial bee colony optimization, can be adapted for drone swarm control [177]. These algorithms enable drones to collectively explore, search, or optimize objectives in a distributed manner by sharing information and iteratively updating their behaviors;

- Task allocation and division of labor, which assign specific tasks or roles to individual drones based on their capabilities, resources, and proximity to the task [178]. Drones collaborate to divide complex tasks into smaller subtasks and allocate them among the swarm members, optimizing resource utilization and task efficiency;

- Leader–follower hierarchies, which establish hierarchical relationships among drones, with designated leaders guiding the behavior of follower drones [179]. Leaders may provide high-level commands or waypoints for followers to follow, while followers adjust their positions and velocities to maintain the formation relative to the leader;

- Collaborative learning and adaptation mechanisms, which enable drone swarms to learn from collective experiences, share knowledge, and adapt their behaviors over time [180]. Drones may employ machine learning algorithms, reinforcement learning, or evolutionary algorithms to improve performance, optimize strategies, and adapt to evolving mission scenarios.
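As a minimal sketch of the leader–follower idea in the list above, the following code has each follower steer toward a fixed offset from the leader's position using a proportional velocity command. The `Drone` class, the gain, the speed cap, and the 2D point-mass model are all assumptions chosen for illustration; the cited works may use quite different formulations.

```python
from dataclasses import dataclass


@dataclass
class Drone:
    x: float
    y: float


def follower_velocity(leader, follower, offset, gain=0.8, max_speed=5.0):
    """Velocity command that steers a follower toward (leader position + offset)."""
    target_x = leader.x + offset[0]
    target_y = leader.y + offset[1]
    vx = gain * (target_x - follower.x)
    vy = gain * (target_y - follower.y)
    speed = (vx * vx + vy * vy) ** 0.5
    if speed > max_speed:
        # Scale the command down so its magnitude equals max_speed.
        scale = max_speed / speed
        vx, vy = vx * scale, vy * scale
    return vx, vy


def step(leader, followers, offsets, dt=0.1):
    """Advance every follower by one time step of length dt (point-mass model)."""
    for drone, offset in zip(followers, offsets):
        vx, vy = follower_velocity(leader, drone, offset)
        drone.x += vx * dt
        drone.y += vy * dt


if __name__ == "__main__":
    leader = Drone(10.0, 0.0)
    followers = [Drone(0.0, 0.0), Drone(0.0, 5.0)]
    offsets = [(-2.0, 1.0), (-2.0, -1.0)]  # hypothetical V-formation slots
    for _ in range(300):
        step(leader, followers, offsets)
    print(followers)
```

Each follower needs only the leader's position and its own slot offset, which is why this scheme is simple to implement but sensitive to loss of the leader.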

6. Sensors and Perception

6.1. Inertial Measurement Unit (IMU)

6.2. Global Positioning System and Global Navigation Satellite System (GPS and GNSS)

- GPS (Global Positioning System), which is the most common and best known GNSS. It consists of a satellite constellation that provides signals to determine the exact geographical position;

- GLONASS (Global Navigation Satellite System), which is the Russian alternative to the American GPS. It consists of a constellation of Russian satellites that provide navigation signals;

- Galileo, which is the European Union’s navigation system. It provides independent signals for navigation and positioning;

- BeiDou, which is the Chinese navigation system. It provides global coverage, with enhanced services in China and neighboring regions;

- NavIC (Navigation with Indian Constellation), which is the Indian navigation system developed by the Indian Space Research Organization (ISRO).

6.3. Computer Vision

6.4. Environmental Information (SLAM)

The main components of SLAM for drones include the following:

- Cameras used to obtain visual data about the environment;

- Laser rangefinders (LiDARs), which can be used to measure distances and create accurate three-dimensional maps of the environment;

- Depth cameras that measure distances to objects and allow one to estimate the depth of objects in the image;

- Inertial sensors providing information on the acceleration and rotation of the drone;

- Data processing systems and SLAM algorithms that analyze input data and use them to determine the position and create a map.

- Autonomous flight. SLAM allows the drone to navigate in an unknown environment, simultaneously creating a map and determining its position;

- Obstacle avoidance. The drone can detect objects in its path and steer around them, which helps prevent collisions;

- Stabilization and accurate position maintenance. SLAM helps to keep the drone stable in the air even when there is no access to the GNSS signal;

- Environmental mapping. The system creates accurate three-dimensional maps of the environment that can be used for further analysis or navigation;

- Recognition and tracking of objects. The technology allows the drone to recognize and track the movement of objects around it.

6.5. Short-Range Radio Navigation Systems (VOR, DME)

- Equipment size and weight. VOR and DME require large, heavy antennas and equipment that are difficult and inconvenient to install on a drone;

- Frequency bands. VOR operates in the VHF band and DME in the UHF band, requiring large antennas and powerful transmitters;

- Licensing and regulation. The use of VOR and DME requires special permits and licenses from regulatory authorities;

- Intentional range limitations. VOR and DME are for aviation use and have a limited range that varies from airport to airport;

- Low compatibility with drones. The use of VOR and DME in multirotor drones may cause electromagnetic interference and affect the normal operation of other electronic components.

6.6. Object Detection and Tracking

- Cameras and visual systems. Cameras, especially those with high resolution and high frame rates, are the primary source of visual input. They allow the drone to see its surroundings;

- Depth sensors, such as LiDAR or depth cameras, which provide additional information about distances to objects. This can be useful in recognizing and avoiding obstacles;

- Artificial intelligence (AI) and machine learning. These methods are used to train object recognition models. They can detect and classify objects in images;

- Tracking algorithms, which allow the drone to estimate the path of moving objects and follow them accurately over time;

- Hybrid systems. Some solutions use a combination of cameras, LiDAR, and other sensors to obtain a more complete picture of the environment.
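One common way to link detections across frames, shown here only as a hedged sketch, is to match each existing track to the new detection with the highest bounding-box overlap (intersection over union, IoU). The box format `(x_min, y_min, x_max, y_max)`, the function names, and the 0.3 threshold are assumptions for illustration; production trackers typically add motion models and appearance features.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, threshold=0.3):
    """Greedily match track ids to detection indices by descending IoU.

    tracks: dict mapping track id -> last known box.
    detections: list of boxes from the current frame.
    Returns a dict mapping track id -> index into detections.
    """
    pairs = sorted(
        (
            (iou(box, det), track_id, det_idx)
            for track_id, box in tracks.items()
            for det_idx, det in enumerate(detections)
        ),
        reverse=True,
    )
    matches, used_tracks, used_dets = {}, set(), set()
    for score, track_id, det_idx in pairs:
        if score < threshold:
            break  # remaining pairs overlap even less
        if track_id in used_tracks or det_idx in used_dets:
            continue
        matches[track_id] = det_idx
        used_tracks.add(track_id)
        used_dets.add(det_idx)
    return matches


if __name__ == "__main__":
    tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
    detections = [(21, 21, 31, 31), (1, 1, 11, 11)]
    print(associate(tracks, detections))
```

Unmatched detections would start new tracks and unmatched tracks would eventually be dropped; both steps are omitted here for brevity.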

7. UAV Software

- Ardupilot + Mission Planner;

- Betaflight;

- INAV;

- QGroundControl + PX4.

7.1. Ardupilot and Mission Planner

- Multi-platform. Ardupilot supports various operating systems, including Linux, Windows, and macOS;

- Versatility. It supports a wide range of different types of drones, including quadcopters, airplanes, helicopters, gliders, and more;

- Automated missions. Ardupilot provides the ability to create and execute automated missions, including point, line, circular trajectories, and more;

- Different flight modes, including Loiter (maintain position), RTL (return to launch), Guided (piloting using points on the map), and others;

- Open-source, allowing the development community to adapt and modify the software.

- Parameter control. It allows users to configure various autopilot parameters such as PID controllers, speed limits, geofences, and more;

- Mission creation, which provides tools to create automated missions with waypoints, actions, and conditions;

- Monitoring and diagnostics—a visual interface for monitoring data from the autopilot, including telemetry, logs, graphs, and more;

- Three-dimensional modeling, which allows one to display a three-dimensional model of the terrain and flight path;

- Integration with Google Earth, providing the ability to import and export mission data to Google Earth.

7.2. Betaflight

- Focus on FPV. Betaflight specializes in the most popular types of UAVs for FPV, including quadcopters;

- High speed and accuracy of control. The software is designed with the needs of racers and pilots in mind, who perform complex maneuvers and stunts;

- A wide range of PID settings allows one to fine-tune the control parameters of the flight platform for optimal performance and stability;

- Various flight modes, including Acro, Angle and Horizon;

- Automatic modes, including stabilization modes, GPS Rescue (return toward the launch point), and others;

- Monitoring of the state of the flight platform. Betaflight provides tools for displaying and analyzing data from the flight platform, including telemetry, graphs, and more;

- Support for various hardware platforms. This software is compatible with many types of flight platform controllers;

- Open-source code that allows community developers to make changes and extend the capabilities of the software.
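The PID settings mentioned in the list above tune a feedback loop of roughly the following shape. This is a generic textbook sketch, not Betaflight's implementation: the class name, the integral clamp used as simple anti-windup, and the gains in the usage example are all assumptions made for illustration.

```python
class PID:
    """Textbook PID controller with output clamping and a bounded integral term."""

    def __init__(self, kp, ki, kd, output_limit, integral_limit=None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral_limit = output_limit if integral_limit is None else integral_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the control output for one time step of length dt (seconds)."""
        error = setpoint - measurement
        # Accumulate the integral and bound it to limit windup.
        self.integral += error * dt
        self.integral = max(-self.integral_limit, min(self.integral_limit, self.integral))
        # No derivative on the first call, when no previous error exists.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, output))


if __name__ == "__main__":
    # Toy plant: the controlled value changes at a rate equal to the command.
    pid = PID(kp=1.0, ki=0.5, kd=0.0, output_limit=10.0)
    value, dt = 0.0, 0.01
    for _ in range(2000):
        value += pid.update(1.0, value, dt) * dt
    print(f"value after 20 s: {value:.4f}")
```

Raising `kp` speeds up the response at the cost of overshoot, `ki` removes steady-state error, and `kd` damps oscillation; firmware tuning screens expose these same three gains per axis.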

7.3. INAV (Intelligent Navigation)

- Focus on airplanes and winged drones. INAV specializes in the control and navigation of aerodynamic UAVs, where aerodynamic control is essential;

- Stability and navigation. The software provides the ability to automatically control flight stability and navigation, including modes that allow one to maintain a stable position and perform automatic tasks;

- Automated missions. INAV enables the planning and execution of automated missions, including point missions, trajectories, and path tracking;

- Automatic take-off and automatic landing;

- Support for GPS and other sensors. INAV interacts with various sensors, including a GPS, compasses, and others for precise navigation and orientation;

- Open-source code. As open-source software, INAV allows the development community to make changes and develop additional functionality.

7.4. QGroundControl + PX4

- Multi-platform. The software supports various operating systems, including Windows, macOS, and Linux;

- Open-source code. QGroundControl allows users to modify and adapt it to their own needs;

- Configuration and control. QGroundControl allows users to configure and control flight platform parameters, including flight modes, altitude, speed, pitch angles, and more;

- Missions and waypoints. Automatic missions can be created and executed, including point and line trajectories, among others;

- Monitoring and debugging. QGroundControl provides various tools for monitoring the state of the vehicle, including displaying telemetry, logs, graphs, etc.

- Versatility. PX4 is a versatile autopilot that can be used for various types of UAVs, including multicopters, gliders, helicopters, and more;

- Flight platform control algorithms. PX4 provides a wide range of control algorithms, allowing users to customize the parameters of the flight platform;

- Mission support and navigation. PX4 provides the ability to create and execute automatic missions using different navigation algorithms;

- Development environment (DevKit). It provides tools for developing and testing additional autopilot software;

- Open-source code. PX4 is based on open-source code, allowing users to adapt and modify it to their needs.

7.5. Comparison of Ardupilot and PX4

- Versatility. Both platforms can be used to control various types of UAVs, including quadcopters, airplanes, helicopters, gliders, and more;

- Open-source code. Both Ardupilot and PX4 are based on open-source code, allowing the development community to make changes and extend the capabilities of the software.

- Functionality. Ardupilot provides a rich set of features, including multiple flight modes, automated missions, automated search and rescue, GPS support, remote control, and more;

- Community of developers. Ardupilot has a large, active community of developers and users who contribute to the continuous improvement and support of the platform.

- Architecture and algorithms. PX4 uses different control and navigation algorithms that allow for high accuracy and reliability;

- Documentation and support. PX4 has detailed documentation and an active user community that helps beginners and advanced users to learn and use the platform.

8. Challenges and Future Directions

8.1. Current Challenges

8.2. Emerging Technologies

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Aabid, A.; Parveez, B.; Parveen, N.; Khan, S.A.; Zayan, J.M.; Shabbir, O. Reviews on design and development of unmanned aerial vehicle (drone) for different applications. J. Mech. Eng. Res. Dev. 2022, 45, 53–69.

- Liew, C.F.; DeLatte, D.; Takeishi, N.; Yairi, T. Recent developments in aerial robotics: A survey and prototypes overview. arXiv 2017, arXiv:1711.10085.

- Vohra, D.S.; Garg, P.K.; Ghosh, S.K. Usage of UAVs/Drones Based on their Categorisation: A Review. J. Aerosp. Sci. Technol. 2022, 74, 90–101.

- Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330.

- Fan, B.; Li, Y.; Zhang, R.; Fu, Q. Review on the technological development and application of UAV systems. Chin. J. Electron. 2020, 29, 199–207.

- Gupta, A.; Afrin, T.; Scully, E.; Yodo, N. Advances of UAVs toward future transportation: The state-of-the-art, challenges, and opportunities. Future Transp. 2021, 1, 326–350.

- Lee, D.; Hess, D.J.; Heldeweg, M.A. Safety and privacy regulations for unmanned aerial vehicles: A multiple comparative analysis. Technol. Soc. 2022, 71, 102079.

- Jeelani, I.; Gheisari, M. Safety challenges of UAV integration in construction: Conceptual analysis and future research roadmap. Saf. Sci. 2021, 144, 105473.

- Pogaku, A.C.; Do, D.T.; Lee, B.M.; Nguyen, N.D. UAV-assisted RIS for future wireless communications: A survey on optimization and performance analysis. IEEE Access 2022, 10, 16320–16336.

- Alnagar, S.I.; Salhab, A.M.; Zummo, S.A. Unmanned aerial vehicle relay system: Performance evaluation and 3D location optimization. IEEE Access 2020, 8, 67635–67645.

- Budiyono, A.; Higashino, S.I. A review of the latest innovations in UAV technology. J. Instrum. Autom. Syst. 2023, 10, 7–16.

- Bolick, M.M.; Mikhailova, E.A.; Post, C.J. Teaching Innovation in STEM Education Using an Unmanned Aerial Vehicle (UAV). Educ. Sci. 2022, 12, 224.

- Alzahrani, B.; Oubbati, O.S.; Barnawi, A.; Atiquzzaman, M.; Alghazzawi, D. UAV assistance paradigm: State-of-the-art in applications and challenges. J. Netw. Comput. Appl. 2020, 166, 102706.

- Alghamdi, Y.; Munir, A.; La, H.M. Architecture, classification, and applications of contemporary unmanned aerial vehicles. IEEE Consum. Electron. Mag. 2021, 10, 9–20.

- PS, R.; Jeyan, M.L. Mini Unmanned Aerial Systems (UAV)—A Review of the Parameters for Classification of a Mini UAV. Int. J. Aviat. Aeronaut. Aerosp. 2020, 7, 5.

- Lee, C.; Kim, S.; Chu, B. A survey: Flight mechanism and mechanical structure of the UAV. Int. J. Precis. Eng. Manuf. 2021, 2, 719–743.

- Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intell. Serv. Robot. 2023, 16, 109–137.

- Bendea, H.; Boccardo, P.; Dequal, S.; Giulio Tonolo, F.; Marenchino, D.; Piras, M. Low Cost UAV for Post-Disaster Assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37 Pt B, 1373–1379.

- Mitka, E.; Mouroutsos, S.G. Classification of drones. Am. J. Eng. Res. 2017, 6, 36–41.

- Sivakumar, M.; Tyj, N.M. A literature survey of unmanned aerial vehicle usage for civil applications. J. Aerosp. Technol. Manag. 2021, 13, e4021.

- Magnussen, Ø.; Hovland, G.; Ottestad, M. Multicopter UAV design optimization. In Proceedings of the 2014 IEEE/ASME 10th International Conference on Mechatronic and Embedded Systems and Applications (MESA), Senigallia, Italy, 10–12 September 2014; pp. 1–6.

- Çabuk, N.; Yıldırım, Ş. Design, modelling and control of an eight-rotors UAV with asymmetric configuration for use in remote sensing systems. J. Aviat. 2021, 5, 72–81.

- Yang, H.; Lee, Y.; Jeon, S.Y.; Lee, D. Multi-rotor drone tutorial: Systems, mechanics, control and state estimation. Intell. Serv. Robot. 2017, 10, 79–93.

- Yildirim, Ş.; İşci, M. Performance analysis of multi rotor drone systems with changeable rotors. Int. Rev. Appl. Sci. Eng. 2023, 14, 45–57.

- Menon, U.K.; Ponmalar, M.; Sreeja, S. Altitude and Attitude Control of X-Configuration Quadrotor Design. In Proceedings of the 2023 International Conference on Control, Communication and Computing (ICCC), Thiruvananthapuram, India, 19–21 May 2023; pp. 1–6.

- Ahmed, M.F.; Zafar, M.N.; Mohanta, J.C. Modeling and analysis of quadcopter F450 frame. In Proceedings of the 2020 International Conference on Contemporary Computing and Applications (IC3A), Lucknow, India, 5–7 February 2020; pp. 196–201.

- Turkoglu, K.; Najafi, S. Conceptual Study and Prototype Design of a Subsonic Transport UAV with VTOL Capabilities. In Proceedings of the 53rd AIAA Aerospace Sciences Meeting, Kissimmee, FL, USA, 5–9 January 2015; p. 1906.

- Driessens, S.; Pounds, P. The triangular quadrotor: A more efficient quadrotor configuration. IEEE Trans. Robot. 2015, 31, 1517–1526.

- Retha, E.A.A. Novel Concepts in Multi-rotor VTOL UAV Dynamics and Stability. In Advanced UAV Aerodynamics, Flight Stability and Control: Novel Concepts, Theory and Applications; Wiley Online Library: Hoboken, NJ, USA, 2017; pp. 667–694.

- Santhosh, L.N.; Chandrashekar, T.K. Anti-collision pentacopter for NDT applications. In Proceedings of the 2015 IEEE International Transportation Electrification Conference (ITEC), Chennai, India, 27–29 August 2015; pp. 1–13.

- Magsino, E.R.; Obias, K.; Samarista, J.P.; Say, M.F.; Tan, J.A. A redundant flight recovery system implementation during an octocopter failure. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 1825–1828.

- Yoon, H.J.; Cichella, V.; Hovakimyan, N. Robust adaptive control allocation for an octocopter under actuator faults. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, San Diego, CA, USA, 4–8 January 2016; p. 0635.

- Sethi, N.; Ahlawat, S. Low-fidelity design optimization and development of a VTOL swarm UAV with an open-source framework. Array 2022, 14, 100183.

- Chu, P.H.; Huang, Y.T.; Pi, C.H.; Cheng, S. Autonomous Landing System of a VTOL UAV on an Upward Docking Station Using Visual Servoing. IFAC-PapersOnLine 2022, 55, 108–113.

- Huang, H.; Savkin, A.V. Deployment of heterogeneous UAV base stations for optimal quality of coverage. IEEE Internet Things J. 2022, 9, 16429–16437.

- Zhang, Q.; Saad, W.; Bennis, M.; Lu, X.; Debbah, M.; Zuo, W. Predictive deployment of UAV base stations in wireless networks: Machine learning meets contract theory. IEEE Trans. Wirel. Commun. 2020, 20, 637–652.

- Chang, S.Y.; Park, K.; Kim, J.; Kim, J. Securing UAV Flying Base Station for Mobile Networking: A Review. Future Internet 2023, 15, 176.

- Weiss, H.; Patel, A.; Romano, M.; Apodoca, B.; Kuevor, P.; Atkins, E.; Stirling, L. Methods for evaluation of human-in-the-loop inspection of a space station mockup using a quadcopter. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–12.

- Giles, K.B.; Davis, D.T.; Jones, K.D.; Jones, M.J. Expanding domains for multi-vehicle unmanned systems. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 1400–1409.

- Mofid, M.; Tabana, S.; El-Sayed, M.; Hussein, O. Navigation of Swarm Quadcopters in GPS-Denied Environment. In Proceedings of the International Undergraduate Research Conference, The Military Technical College, Cairo, Egypt, 5–8 September 2022; Volume 6, pp. 1–5.

- Pavan Kumar, V.; Venkateswara Rao, B.; Jagadeesh Harsha, G.; John Saida, M.D.; Mohana Rao, A.B.V. Arduino-Based Unmanned Vehicle to Provide Assistance Under Emergency Conditions. In Recent Trends in Product Design and Intelligent Manufacturing Systems: Select Proceedings of IPDIMS 2021; Springer Nature: Singapore, 2022; pp. 163–169.

- Zahed, M.J.H.; Fields, T. Evaluation of pilot and quadcopter performance from open-loop mission-oriented flight testing. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2021, 235, 1817–1830.

- Bashi, O.I.D.; Hasan, W.Z.W.; Azis, N.; Shafie, S.; Wagatsuma, H. Unmanned Aerial Vehicle Quadcopter: A Review. J. Comput. Theor. Nanosci. 2017, 14, 5663–5675.

- Guerrero, J.E.; Pacioselli, C.; Pralits, J.O. Preliminary design of a small-size flapping UAV. I, Aerodynamic Performance and Static Longitudinal Stability, to be Submitted. In Proceedings of the XXI AIMETA Conference Book, Torino, Italy, 17–20 September 2013; pp. 1–10.

- Gupte, S.; Mohandas, P.I.T.; Conrad, J.M. A survey of quadrotor unmanned aerial vehicles. In Proceedings of the IEEE Southeastcon, Orlando, FL, USA, 15–18 March 2012; pp. 1–6.

- Partovi, A.R.; Kevin, A.Z.Y.; Lin, H.; Chen, B.M.; Cai, G. Development of a cross style quadrotor. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, St. Paul, MN, USA, 13–16 August 2012.

- Cabahug, J.; Eslamiat, H. Failure Detection in Quadcopter UAVs Using K-Means Clustering. Sensors 2022, 22, 6037.

- Thanh, H.L.N.N.; Huynh, T.T.; Vu, M.T.; Mung, N.X.; Phi, N.N.; Hong, S.K.; Vu, T.N.L. Quadcopter UAVs Extended States/Disturbance Observer-Based Nonlinear Robust Backstepping Control. Sensors 2022, 22, 5082.

- Barbeau, M.; Garcia-Alfaro, J.; Kranakis, E.; Santos, F. GPS-Free, Error Tolerant Path Planning for Swarms of Micro Aerial Vehicles with Quality Amplification. Sensors 2021, 21, 4731.

- Ukaegbu, U.F.; Tartibu, L.K.; Okwu, M.O.; Olayode, I.O. Development of a Light-Weight Unmanned Aerial Vehicle for Precision Agriculture. Sensors 2021, 21, 4417.

- Veyna, U.; Garcia-Nieto, S.; Simarro, R.; Salcedo, J.V. Quadcopters Testing Platform for Educational Environments. Sensors 2021, 21, 4134.

- Harkare, O.; Maan, R. Design and Control of a Quadcopter. Int. J. Eng. Tech. Res. 2021, 10, 5.

- Rauf, M.N.; Khan, R.A.; Shah, S.I.A.; Naqvi, M.A. Design and analysis of stability and control for a small unmanned aerial vehicle. Int. J. Dyn. Control 2023, 1–16.

- Zargarbashi, F.; Talaeizadeh, A.; Nejat Pishkenari, H.; Alasty, A. Quadcopter Stability: The Effects of CoM, Dihedral Angle and Its Uncertainty. Iran. J. Sci. Technol. Trans. Mech. Eng. 2023, 1–9.

- Haddadi, S.J.; Zarafshan, P. Design and fabrication of an autonomous Octorotor flying robot. In Proceedings of the 3rd IEEE RSI International Conference on Robotics and Mechatronics (ICROM), Tehran, Iran, 7–9 October 2015; pp. 702–710.

- Stamate, M.-A.; Pupăză, C.; Nicolescu, F.-A.; Moldoveanu, C.-E. Improvement of Hexacopter UAVs Attitude Parameters Employing Control and Decision Support Systems. Sensors 2023, 23, 1446.

- Idrissi, M.; Hussain, A.; Barua, B.; Osman, A.; Abozariba, R.; Aneiba, A.; Asyhari, T. Evaluating the Forest Ecosystem through a Semi-Autonomous Quadruped Robot and a Hexacopter UAV. Sensors 2022, 22, 5497.

- Suprapto, B.Y.; Heryanto, A.; Suprijono, H.; Muliadi, J.; Kusumoputro, B. Design and Development of Heavy-lift Hexacopter for Heavy Payload. In Proceedings of the International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 7–8 October 2017; pp. 242–247.

- Setiono, F.Y.; Candrasaputra, A.; Prasetyo, T.B.; Santoso, K.L.B. Designing and Implementation of Autonomous Hexacopter as Unmanned Aerial Vehicle. In Proceedings of the 8th International Conference on Information Technology and Electrical Engineering (ICITEE), Yogyakarta, Indonesia, 5–6 October 2016; pp. 1–5.

- Abarca, M.; Saito, C.; Angulo, A.; Paredes, J.A.; Cuellar, F. Design and Development of an Hexacopter for Air Quality Monitoring at High Altitudes. In Proceedings of the 13th IEEE Conference on Automation Science and Engineering (CASE), Xi’an, China, 20–23 August 2017; pp. 1457–1462.

- Ragbir, P.; Kaduwela, A.; Passovoy, D.; Amin, P.; Ye, S.; Wallis, C.; Alaimo, C.; Young, T.; Kong, Z. UAV-Based Wildland Fire Air Toxics Data Collection and Analysis. Sensors 2023, 23, 3561.

- Barzilov, A.; Kazemeini, M. Unmanned Aerial System Integrated Sensor for Remote Gamma and Neutron Monitoring. Sensors 2020, 20, 5529.

- Raja, V.; Solaiappan, S.K.; Rajendran, P.; Madasamy, S.K.; Jung, S. Conceptual design and multi-disciplinary computational investigations of multirotor unmanned aerial vehicle for environmental applications. Appl. Sci. 2021, 1, 8364.

- Raheel, F.; Mehmood, H.; Kadri, M.B. Top-Down Design Approach for the Customization and Development of Multi-rotors Using ROS. In Unmanned Aerial Vehicles Applications: Challenges and Trends; Springer International Publishing: Cham, Switzerland, 2023; pp. 43–83.

- Stamate, M.A.; Nicolescu, A.F.; Pupăză, C. Study regarding flight autonomy estimation for hexacopter drones in various equipment configurations. Proc. Manuf. Syst. 2020, 15, 81–90.

- Panigrahi, S.; Krishna, Y.S.S.; Thondiyath, A. Design, Analysis, and Testing of a Hybrid VTOL Tilt-Rotor UAV for Increased Endurance. Sensors 2021, 21, 5987.

- Tang, H.; Zhang, D.; Gan, Z. Control System for Vertical Take-Off and Landing Vehicle’s Adaptive Landing Based on Multi-Sensor Data Fusion. Sensors 2020, 20, 4411.

- Li, B.; Zhou, W.; Sun, J.; Wen, C.-Y.; Chen, C.-K. Development of Model Predictive Controller for a Tail-Sitter VTOL UAV in Hover Flight. Sensors 2018, 18, 2859.

- Zhou, Y.; Zhao, H.; Liu, Y. An evaluative review of the VTOL technologies for unmanned and manned aerial vehicles. Comput. Commun. 2020, 149, 356–369.

- Ducard, G.J.; Allenspach, M. Review of designs and flight control techniques of hybrid and convertible VTOL UAVs. Aerosp. Sci. Technol. 2021, 18, 107035.

- Simon, M.; Copăcean, L.; Popescu, C.; Cojocariu, L. 3D Mapping of a village with a wingtraone VTOL tailsiter drone using pix4d mapper. Res. J. Agric. Sci. 2021, 53, 228.

- Baube, C.A.; Downs, C.; Goodstein, M.; Labrador, D.; Liebergall, E.; Molloy, O. Fli-Bi UAV: A Unique Surveying VTOL for Overhead Intelligence. In Proceedings of the AIAA SCITECH 2024 Forum, Orlando, FL, USA, 8–12 January 2024; p. 0455.

- Wu, H.; Wang, Z.; Ren, B.; Wang, L.; Zhang, J.; Zhu, J.; He, Z. Design and experiment of a high payload fixed wing VTOL UAV system for emergency response. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 1715–1722.

- Qi, H.; Cao, S.J.; Wu, J.Y.; Peng, Y.M.; Nie, H.; Wei, X.H. Research on the Effect Characteristics of Free-Tail Layout Parameters on Tail-Sitter VTOL UAVs. Agriculture 2024, 14, 472.

- Dileep, M.R.; Navaneeth, A.V.; Ullagaddi, S.; Danti, A. A study and analysis on various types of agricultural drones and its applications. In Proceedings of the 2020 Fifth International Conference on Research in Computational Intelligence and Communication Networks (ICRCICN), Bangalore, India, 26–27 November 2020; pp. 181–185.

- Caballero, A.; Paneque, J.L.; Martinez-de-Dios, J.R.; Maza, I.; Ollero, A. Multi-UAV Systems for Inspection of Industrial and Public Infrastructures. In Infrastructure Robotics: Methodologies, Robotic Systems and Applications; Wiley Online Library: Hoboken, NJ, USA, 2024; pp. 285–303.

- Congress, S.S.C.; Puppala, A.J.; Khan, M.A.; Biswas, N.; Kumar, P. Application of unmanned aerial technologies for inspecting pavement and bridge infrastructure assets conditions. Transp. Res. Rec. 2020, 03611981221105273.

- Goyal, R.; Cohen, A. Advanced air mobility: Opportunities and challenges deploying eVTOLs for air ambulance service. Appl. Sci. 2022, 12, 1183.

- Streßer, M.; Carrasco, R.; Horstmann, J. Video-Based Estimation of Surface Currents Using a Low-Cost Quadcopter. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2027–2031.

- Efaz, E.T.; Mowlee, M.M.; Jabin, J.; Khan, I.; Islam, M.R. Modeling of a high-speed and cost-effective FPV quadcopter for surveillance. In Proceedings of the 2020 23rd International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 19–21 December 2020; pp. 1–6.

- Stankov, U.; Vasiljević, Ð.; Jovanović, V.; Kranjac, M.; Vujičić, M.D.; Morar, C.; Bucur, L. Shared Aerial Drone Videos—Prospects and Problems for Volunteered Geographic Information Research. Open Geosci. 2019, 11, 462–470. [Google Scholar] [CrossRef]

- Liao, Y.H.; Juang, J.G. Real-time UAV trash monitoring system. Appl. Sci. 2022, 12, 1838. [Google Scholar] [CrossRef]

- Priandana, K.; Hazim, M.; Kusumoputro, B. Development of autonomous UAV quadcopters using pixhawk controller and its flight data acquisition. In Proceedings of the 2020 International Conference on Computer Science and Its Application in Agriculture (ICOSICA), Bogor, Indonesia, 16–17 September 2020; pp. 1–6. [Google Scholar]

- Saha, H.; Basu, S.; Auddy, S.; Dey, R.; Nandy, A.; Pal, D.; Roy, N.; Jasu, S.; Saha, A.; Chattopadhyay, S.; et al. A low cost fully autonomous GPS (Global Positioning System) based quad copter for disaster management. In Proceedings of the 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; pp. 654–660. [Google Scholar]

- Sun, S.; Cioffi, G.; De Visser, C.; Scaramuzza, D. Autonomous quadrotor flight despite rotor failure with onboard vision sensors: Frames vs. events. IEEE Robot. Autom. Lett. 2021, 6, 580–587. [Google Scholar] [CrossRef]

- Lavin, E.P.; Jayewardene, I.; Massih, M. Utilising time lapse and drone imagery to audit construction and certification of works for a coastal revetment at Port Kembla. In Australasian Coasts & Ports 2021: Te Oranga Takutai, Adapt and Thrive: Te Oranga Takutai, Adapt and Thrive; New Zealand Coastal Society: Christchurch, New Zealand, 2022; pp. 767–772. [Google Scholar]

- Zhang, W.; Jordan, G.; Sharp, A. The Potential of Using Drone Affiliated Technologies for Various Types of Traffic and Driver Behavior Studies. In Proceedings of the International Conference on Transportation and Development 2020, Seattle, WA, USA, 26–29 May 2020; American Society of Civil Engineers: Reston, VA, USA, 2020; pp. 221–233. [Google Scholar]

- Sehrawat, A.; Choudhury, T.A.; Raj, G. Surveillance drone for disaster management and military security. In Proceedings of the 2017 International Conference on Computing, Communication and Automation (ICCCA), Greater Noida, India, 5–6 May 2017; pp. 470–475. [Google Scholar] [CrossRef]

- Hassan, F.; Usman, M.R.; Hamid, S.; Usman, M.A.; Politis, C.; Satrya, G.B. Solar Powered Autonomous Hex-Copter for Surveillance, Security and Monitoring. In Proceedings of the 2021 IEEE Asia Pacific Conference on Wireless and Mobile (APWiMob), Bandung, Indonesia, 8–10 April 2021; pp. 188–194. [Google Scholar] [CrossRef]

- Ahmed, F.; Narayan, Y.S. Design and Development of Quad copter for Surveillance. Int. J. Eng. Res. 2016, 5, 312–318. [Google Scholar]

- Mojib, E.B.S.; Haque, A.B.; Raihan, M.N.; Rahman, M.; Alam, F.B. A novel approach for border security; surveillance drone with live intrusion monitoring. In Proceedings of the 2019 IEEE International Conference on Robotics, Automation, Artificial-Intelligence and Internet-of-Things (RAAICON), Dhaka, Bangladesh, 29 November–1 December 2019; pp. 65–68. [Google Scholar]

- Zaporozhets, A. Overview of quadrocopters for energy and ecological monitoring. In Systems, Decision and Control in Energy I; Springer International Publishing: Cham, Switzerland, 2020; pp. 15–36. [Google Scholar]

- Husman, M.A.; Albattah, W.; Abidin, Z.Z.; Mustafah, Y.M.; Kadir, K.; Habib, S.; Islam, M.; Khan, S. Unmanned aerial vehicles for crowd monitoring and analysis. Electronics 2021, 10, 2974. [Google Scholar] [CrossRef]

- Prabu, B.; Malathy, R.; Taj, M.G.; Madhan, N. Drone networks and monitoring systems in smart cities. In AI-Centric Smart City Ecosystems; CRC Press: Boca Raton, FL, USA, 2022; pp. 123–148. [Google Scholar]

- Magsino, E.R.; Chua, J.R.B.; Chua, L.S.; De Guzman, C.M.; Gepaya, J.V.L. A rapid screening algorithm using a quadrotor for crack detection on bridges. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 1829–1833. [Google Scholar]

- Damanik, J.A.I.; Sitanggang, I.M.D.; Hutabarat, F.S.; Knight, G.P.B.; Sagala, A. Quadcopter Unmanned Aerial Vehicle (UAV) Design for Search and Rescue (SAR). In Proceedings of the 2022 IEEE International Conference of Computer Science and Information Technology (ICOSNIKOM), Laguboti, North Sumatra, Indonesia, 19–21 October 2022; pp. 01–06. [Google Scholar]

- Hari Ghanessh, A.; Suvendran, L.; Krishna Raj, A.; Dharmalingam, L.; Raj, K. Autonomous Drone Swarm for Surveillance. In Proceedings of the Greetings from Rector of Bandung Islamic University Prof. Dr. H. Edi Setiadi, SH, MH, Bandung, Indonesia, 3–7 September 2023. [Google Scholar]

- Kabra, T.S.; Kardile, A.V.; Deeksha, M.G.; Mane, D.B.; Bhosale, P.R.; Belekar, A.M. Design, development & optimization of a quad-copter for agricultural applications. Int. Res. J. Eng. Technol. 2017, 4, 1632–1636. [Google Scholar]

- Rakesh, D.; Kumar, N.A.; Sivaguru, M.; Keerthivaasan, K.V.R.; Janaki, B.R.; Raffik, R. Role of UAVs in innovating agriculture with future applications: A review. In Proceedings of the 2021 International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India, 8–9 October 2021; pp. 1–6. [Google Scholar]

- Norasma, C.Y.N.; Fadzilah, M.A.; Roslin, N.A.; Zanariah, Z.W.N.; Tarmidi, Z.; Candra, F.S. Unmanned aerial vehicle applications in agriculture. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 506, p. 012063. [Google Scholar]

- Rahman, M.F.F.; Fan, S.; Zhang, Y.; Chen, L. A comparative study on application of unmanned aerial vehicle systems in agriculture. Agriculture 2021, 1, 22. [Google Scholar] [CrossRef]

- Mogili, U.R.; Deepak, B.B.V.L. Review on application of drone systems in precision agriculture. Procedia Comput. Sci. 2018, 133, 502–509. [Google Scholar] [CrossRef]

- Nikitha, M.V.; Sunil, M.P.; Hariprasad, S.A. Autonomous quad copter for agricultural land surveillance. Int. J. Adv. Res. Eng. Technol. 2021, 12, 892–901. [Google Scholar] [CrossRef]

- Tendolkar, A.; Choraria, A.; Pai, M.M.; Girisha, S.; Dsouza, G.; Adithya, K.S. Modified crop health monitoring and pesticide spraying system using NDVI and Semantic Segmentation: An AGROCOPTER based approach. In Proceedings of the 2021 IEEE International Conference on Autonomous Systems (ICAS), Montreal, QC, Canada, 11–13 August 2021; pp. 1–5. [Google Scholar]

- Ghazali, M.H.M.; Azmin, A.; Rahiman, W. Drone implementation in precision agriculture—A survey. Int. J. Emerg. Technol. Adv. Eng. 2022, 12, 67–77. [Google Scholar] [CrossRef]

- Rokhmana, C.A. The potential of UAV-based remote sensing for supporting precision agriculture in Indonesia. Procedia Environ. Sci. 2015, 24, 245–253. [Google Scholar] [CrossRef]

- Saleem, S.R.; Zaman, Q.U.; Schumann, A.W.; Naqvi, S.M.Z.A. Variable rate technologies: Development, adaptation, and opportunities in agriculture. In Precision Agriculture; Academic Press: New York, NY, USA, 2023; pp. 103–122. [Google Scholar]

- Chávez, J.L.; Torres-Rua, A.F.; Woldt, W.E.; Zhang, H.; Robertson, C.C.; Marek, G.W.; Wang, D.; Heeren, D.M.; Taghvaeian, S.; Neale, C.M. A decade of unmanned aerial systems in irrigated agriculture in the Western US. Appl. Eng. Agric. 2020, 36, 423–436. [Google Scholar] [CrossRef]

- Xu, B.; Wang, W.; Falzon, G.; Kwan, P.; Guo, L.; Sun, Z.; Li, C. Livestock classification and counting in quadcopter aerial images using Mask R-CNN. Int. J. Remote Sens. 2020, 41, 8121–8142. [Google Scholar] [CrossRef]

- Kumar, C.; Mubvumba, P.; Huang, Y.; Dhillon, J.; Reddy, K. Multi-stage corn yield prediction using high-resolution UAV multispectral data and machine learning models. Agronomy 2023, 13, 1277. [Google Scholar] [CrossRef]

- Gao, D.; Sun, Q.; Hu, B.; Zhang, S. A framework for agricultural pest and disease monitoring based on internet-of-things and unmanned aerial vehicles. Sensors 2020, 20, 1487. [Google Scholar] [CrossRef] [PubMed]

- Ganeshkumar, C.; David, A.; Sankar, J.G.; Saginala, M. Application of drone Technology in Agriculture: A predictive forecasting of Pest and disease incidence. In Applying Drone Technologies and Robotics for Agricultural Sustainability; IGI Global: Hershey, PA, USA, 2023; pp. 50–81. [Google Scholar]

- Yaqot, M.; Menezes, B.C. Unmanned aerial vehicle (UAV) in precision agriculture: Business information technology towards farming as a service. In Proceedings of the 2021 1st International Conference on Emerging Smart Technologies and Applications (eSmarTA), Sana’a, Yemen, 10–12 August 2021; pp. 1–7. [Google Scholar]

- Ouafiq, E.M.; Saadane, R.; Chehri, A. Data management and integration of low power consumption embedded devices IoT for transforming smart agriculture into actionable knowledge. Agriculture 2022, 12, 329. [Google Scholar] [CrossRef]

- Naidoo, Y.; Stopforth, R.; Bright, G. Development of an UAV for search & rescue applications. In Proceedings of the IEEE Africon’11, Victoria Falls, Zambia, 13–15 September 2011; pp. 1–6. [Google Scholar]

- Waharte, S.; Trigoni, N. Supporting search and rescue operations with UAVs. In Proceedings of the 2010 International Conference on Emerging Security Technologies, Canterbury, UK, 6–7 September 2010; pp. 142–147. [Google Scholar]

- Ryan, A.; Hedrick, J.K. A mode-switching path planner for UAV-assisted search and rescue. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 15 December 2005; pp. 1471–1476. [Google Scholar]

- Silvagni, M.; Tonoli, A.; Zenerino, E.; Chiaberge, M. Multipurpose UAV for search and rescue operations in mountain avalanche events. Geomat. Nat. Hazards Risk 2017, 8, 18–33. [Google Scholar] [CrossRef]

- Mishra, B.; Garg, D.; Narang, P.; Mishra, V. Drone-surveillance for search and rescue in natural disaster. Comput. Commun. 2020, 156, 1–10. [Google Scholar] [CrossRef]

- Ajith, V.S.; Jolly, K.G. Unmanned aerial systems in search and rescue applications with their path planning: A review. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; Volume 2115, p. 012020. [Google Scholar]

- Abhijith, M.S.; Jose, A.; Bhuvanendran, C.; Thomas, D.; George, D.E. Farm-Copter: Computer Vision Based Precision Agriculture. In Proceedings of the 2020 4th International Conference on Computer, Communication and Signal Processing (ICCCSP), Chennai, India, 28–29 September 2020; pp. 1–6. [Google Scholar]

- Dong, J.; Ota, K.; Dong, M. UAV-based real-time survivor detection system in post-disaster search and rescue operations. IEEE J. Miniaturization Air Space Syst. 2021, 2, 209–219. [Google Scholar] [CrossRef]

- Budiharto, W.; Irwansyah, E.; Suroso, J.S.; Chowanda, A.; Ngarianto, H.; Gunawan, A.A.S. Mapping and 3D modelling using quadrotor drone and GIS software. J. Big Data 2021, 8, 48. [Google Scholar] [CrossRef]

- Chatziparaschis, D.; Lagoudakis, M.G.; Partsinevelos, P. Aerial and ground robot collaboration for autonomous mapping in search and rescue missions. Drones 2020, 4, 79. [Google Scholar] [CrossRef]

- Albanese, A.; Sciancalepore, V.; Costa-Pérez, X. SARDO: An automated search-and-rescue drone-based solution for victims localization. IEEE Trans. Mob. Comput. 2021, 21, 3312–3325. [Google Scholar] [CrossRef]

- Papyan, N.; Kulhandjian, M.; Kulhandjian, H.; Aslanyan, L. AI-Based Drone Assisted Human Rescue in Disaster Environments: Challenges and Opportunities. Pattern Recognit. Image Anal. 2024, 34, 169–186. [Google Scholar] [CrossRef]

- Cicek, M.; Pasli, S.; Imamoglu, M.; Yadigaroglu, M.; Beser, M.F.; Gunduz, A. Simulation-based drone assisted search operations in a river. Wilderness Environ. Med. 2022, 3, 311–317. [Google Scholar] [CrossRef] [PubMed]

- Gaur, S.; Kumar, J.S. UAV based Human Detection for Search and Rescue Operations in Flood. In Proceedings of the 2023 10th IEEE Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON), Gautam Buddha Nagar, India, 1–3 December 2023; Volume 10, pp. 1038–1043. [Google Scholar]

- Rabajczyk, A.; Zboina, J.; Zielecka, M.; Fellner, R. Monitoring of selected CBRN threats in the air in industrial areas with the use of unmanned aerial vehicles. Atmosphere 2020, 1, 1373. [Google Scholar] [CrossRef]

- Charalampidou, S.; Lygouras, E.; Dokas, I.; Gasteratos, A.; Zacharopoulou, A. A sociotechnical approach to UAV safety for search and rescue missions. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 1416–1424. [Google Scholar]

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned aerial vehicles for search and rescue: A survey. Remote Sens. 2023, 15, 3266. [Google Scholar] [CrossRef]

- Tuśnio, N.; Wróblewski, W. The efficiency of drones usage for safety and rescue operations in an open area: A case from Poland. Sustainability 2021, 14, 327. [Google Scholar] [CrossRef]

- Ashish, M.; Muraleedharan, A.; Shruthi, C.M.; Bhavani, R.R.; Akshay, N. Autonomous Payload Delivery using Hybrid VTOL UAVs for Community Emergency Response. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020; pp. 1–6. [Google Scholar]

- Giacomossi, L.; Maximo, M.R.; Sundelius, N.; Funk, P.; Brancalion, J.F.; Sohlberg, R. Cooperative search and rescue with drone swarm. In International Congress and Workshop on Industrial AI; Springer Nature: Cham, Switzerland, 2023; pp. 381–393. [Google Scholar]

- Arnold, R.; Osinski, M.; Reddy, C.; Lowey, A. Reinforcement learning for collaborative search and rescue using unmanned aircraft system swarms. In Proceedings of the 2022 IEEE International Symposium on Technologies for Homeland Security (HST), Boston, MA, USA, 14–15 November 2022; pp. 1–6. [Google Scholar]

- Cao, Y.; Qi, F.; Jing, Y.; Zhu, M.; Lei, T.; Li, Z.; Xia, J.; Wang, J.; Lu, G. Mission chain driven unmanned aerial vehicle swarms cooperation for the search and rescue of outdoor injured human targets. Drones 2022, 6, 138. [Google Scholar] [CrossRef]

- Mamchur, D.; Peksa, J.; Kolodinskis, A.; Zigunovs, M. The Use of Terrestrial and Maritime Autonomous Vehicles in Nonintrusive Object Inspection. Sensors 2022, 22, 7914. [Google Scholar] [CrossRef] [PubMed]

- Horyna, J.; Baca, T.; Walter, V.; Albani, D.; Hert, D.; Ferrante, E.; Saska, M. Decentralized swarms of unmanned aerial vehicles for search and rescue operations without explicit communication. Auton. Robot. 2023, 47, 77–93. [Google Scholar] [CrossRef]

- D’Urso, M.G.; Manzari, V.; Lucidi, S.; Cuzzocrea, F. Rescue Management and Assessment of Structural Damage by Uav in Post-Seismic Emergency. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 5, 61–70. [Google Scholar] [CrossRef]

- Daud, S.M.S.M.; Yusof, M.Y.P.M.; Heo, C.C.; Khoo, L.S.; Singh, M.K.C.; Mahmood, M.S.; Nawawi, H. Applications of drone in disaster management: A scoping review. Sci. Justice 2022, 62, 30–42. [Google Scholar] [CrossRef]

- NC, A.V.; Yadav, A.R.; Mehta, D.; Belani, J.; Chauhan, R.R. A guide to novice for proper selection of the components of drone for specific applications. Mater. Today Proc. 2022, 65, 3617–3622. [Google Scholar]

- Zhafri, Z.M.; Effendi, M.S.M.; Rosli, M.F. A review on sustainable design and optimum assembly process: A case study on a drone. In AIP Conference Proceedings; AIP Publishing: Woodbury, NY, USA, 2018; Volume 2030. [Google Scholar]

- Hristozov, S.; Zlateva, P. Concept model for drone selection in specific disaster conditions. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 245–249. [Google Scholar] [CrossRef]

- MohamedZain, A.O.; Chua, H.; Yap, K.; Uthayasurian, P.; Jiehan, T. Novel Drone Design Using an Optimization Software with 3D Model, Simulation, and Fabrication in Drone Systems Research. Drones 2022, 6, 97. [Google Scholar] [CrossRef]

- Mac, T.T.; Copot, C.; Duc, T.T.; De Keyser, R. AR.Drone UAV control parameters tuning based on particle swarm optimization algorithm. In Proceedings of the 2016 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 19–21 May 2016; pp. 1–6. [Google Scholar]

- Alaimo, A.; Artale, V.; Milazzo, C.L.R.; Ricciardello, A. PID controller applied to hexacopter flight. J. Intell. Robot. Syst. 2014, 73, 261–270. [Google Scholar] [CrossRef]

- Elajrami, M.; Satla, Z.; Bendine, K. Drone Control using the Coupling of the PID Controller and Genetic Algorithm. Commun.-Sci. Lett. Univ. Zilina 2021, 23, C75–C82. [Google Scholar] [CrossRef]

- Zouaoui, S.; Mohamed, E.; Kouider, B. Easy tracking of UAV using PID controller. Period. Polytech. Transp. Eng. 2019, 47, 171–177. [Google Scholar] [CrossRef]

- Oh, J.W.; Seol, J.W.; Gong, Y.H.; Han, S.J.; Lee, S.D. Drone Hovering using PID Control. J. Korea Inst. Electron. Commun. Sci. 2018, 13, 1269–1274. [Google Scholar]

- Yuan, Q.; Zhan, J.; Li, X. Outdoor flocking of quadcopter drones with decentralized model predictive control. ISA Trans. 2017, 71, 84–92. [Google Scholar] [CrossRef]

- Song, Y.; Scaramuzza, D. Policy search for model predictive control with application to agile drone flight. IEEE Trans. Robot. 2022, 38, 2114–2130. [Google Scholar] [CrossRef]

- Michel, N.; Bertrand, S.; Olaru, S.; Valmorbida, G.; Dumur, D. Design and flight experiments of a tube-based model predictive controller for the AR. Drone 2.0 quadrotor. IFAC-PapersOnLine 2019, 52, 112–117. [Google Scholar] [CrossRef]

- Dentler, J.; Kannan, S.; Mendez, M.A.O.; Voos, H. A real-time model predictive position control with collision avoidance for commercial low-cost quadrotors. In Proceedings of the 2016 IEEE Conference on Control Applications (CCA), Buenos Aires, Argentina, 19–22 September 2016; pp. 519–525. [Google Scholar]

- Hernandez, A.; Murcia, H.; Copot, C.; De Keyser, R. Model predictive path-following control of an AR. Drone quadrotor. In Proceedings of the XVI Latin American Control Conference, The International Federation of Automatic Control, Cancun, Mexico, 14–17 October 2014; pp. 618–623. [Google Scholar]

- Saccani, D.; Fagiano, L. Autonomous uav navigation in an unknown environment via multi-trajectory model predictive control. In Proceedings of the 2021 European Control Conference (ECC), Delft, The Netherlands, 29 June–2 July 2021; pp. 1577–1582. [Google Scholar]

- Patel, S.; Sarabakha, A.; Kircali, D.; Kayacan, E. An intelligent hybrid artificial neural network-based approach for control of aerial robots. J. Intell. Robot. Syst. 2020, 97, 387–398. [Google Scholar] [CrossRef]

- Jiang, F.; Pourpanah, F.; Hao, Q. Design, implementation, and evaluation of a neural-network-based quadcopter UAV system. IEEE Trans. Ind. Electron. 2019, 67, 2076–2085. [Google Scholar] [CrossRef]

- Amer, K.; Samy, M.; Shaker, M.; ElHelw, M. Deep convolutional neural network based autonomous drone navigation. In Proceedings of the Thirteenth International Conference on Machine Vision, Rome, Italy, 2–6 November 2020; Volume 11605, pp. 16–24. [Google Scholar]

- Ferede, R.; de Croon, G.; De Wagter, C.; Izzo, D. End-to-end neural network based optimal quadcopter control. Robot. Auton. Syst. 2024, 172, 104588. [Google Scholar] [CrossRef]

- Jafari, M.; Xu, H. Intelligent control for unmanned aerial systems with system uncertainties and disturbances using artificial neural network. Drones 2018, 2, 30. [Google Scholar] [CrossRef]

- Luo, C.; Du, Z.; Yu, L. Neural Network Control Design for an Unmanned Aerial Vehicle with a Suspended Payload. Electronics 2019, 8, 931. [Google Scholar] [CrossRef]

- Nguyen, N.P.; Mung, N.X.; Thanh, H.L.N.N.; Huynh, T.T.; Lam, N.T.; Hong, S.K. Adaptive sliding mode control for attitude and altitude system of a quadcopter UAV via neural network. IEEE Access 2021, 9, 40076–40085. [Google Scholar] [CrossRef]

- Patel, N.; Purwar, S. Adaptive neural control of quadcopter with unknown nonlinearities. In Proceedings of the 2020 IEEE International Conference on Computing, Power and Communication Technologies (GUCON), Greater Noida, India, 2–4 October 2020; pp. 718–723. [Google Scholar]

- Pairan, M.F.; Shamsudin, S.S.; Zulkafli, M.F. Neural network based system identification for quadcopter dynamic modelling: A review. J. Adv. Mech. Eng. Appl. 2020, 1, 20–33. [Google Scholar]

- Krajewski, R.; Hoss, M.; Meister, A.; Thomsen, F.; Bock, J.; Eckstein, L. Using drones as reference sensors for neural-networks-based modeling of automotive perception errors. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 708–715. [Google Scholar]

- Deshpande, A.M.; Kumar, R.; Minai, A.A.; Kumar, M. Developmental reinforcement learning of control policy of a quadcopter UAV with thrust vectoring rotors. In Proceedings of the Dynamic Systems and Control Conference, Virtual, 5–7 October 2020; American Society of Mechanical Engineers: New York, NY, USA, 2020; Volume 84287, p. V002T36A011. [Google Scholar]

- Nguyen, N.P.; Mung, N.X.; Thanh Ha, L.N.N.; Huynh, T.T.; Hong, S.K. Finite-time attitude fault tolerant control of quadcopter system via neural networks. Mathematics 2020, 8, 1541. [Google Scholar] [CrossRef]

- Raiesdana, S. Control of quadrotor trajectory tracking with sliding mode control optimized by neural networks. Proc. Inst. Mech. Eng. Part I J. Syst. Control. Eng. 2020, 234, 1101–1119. [Google Scholar] [CrossRef]

- Muthusamy, P.K.; Garratt, M.; Pota, H.; Muthusamy, R. Real-time adaptive intelligent control system for quadcopter unmanned aerial vehicles with payload uncertainties. IEEE Trans. Ind. Electron. 2021, 69, 1641–1653. [Google Scholar] [CrossRef]

- Liu, M.; Ji, R.; Ge, S.S.; Fellow, IEEE. Adaptive neural control for a tilting quadcopter with finite-time convergence. Neural Comput. Appl. 2021, 33, 15987–16004. [Google Scholar] [CrossRef]

- Back, S.; Cho, G.; Oh, J.; Tran, X.T.; Oh, H. Autonomous UAV trail navigation with obstacle avoidance using deep neural networks. J. Intell. Robot. Syst. 2020, 100, 1195–1211. [Google Scholar] [CrossRef]

- Wang, J.; Liu, J.; Li, Y.; Chen, C.P.; Liu, Z.; Li, F. Prescribed time fuzzy adaptive consensus control for multiagent systems with dead-zone input and sensor faults. IEEE Trans. Autom. Sci. Eng. 2023, 1–12. [Google Scholar] [CrossRef]

- Wang, J.; Li, Y.; Wu, Y.; Liu, Z.; Chen, K.; Chen, C.P. Fixed-time formation control for uncertain nonlinear multi-agent systems with time-varying actuator failures. IEEE Trans. Fuzzy Syst. 2024, 32, 1965–1977. [Google Scholar] [CrossRef]

- Batra, S.; Huang, Z.; Petrenko, A.; Kumar, T.; Molchanov, A.; Sukhatme, G.S. Decentralized control of quadrotor swarms with end-to-end deep reinforcement learning. In Proceedings of the Conference on Robot Learning, London, UK, 8–11 November 2021; pp. 576–586. [Google Scholar]

- Zhang, P.; Chen, G.; Li, Y.; Dong, W. Agile formation control of drone flocking enhanced with active vision-based relative localization. IEEE Robot. Autom. Lett. 2022, 7, 6359–6366. [Google Scholar] [CrossRef]

- Tang, J.; Duan, H.; Lao, S. Swarm intelligence algorithms for multiple unmanned aerial vehicles collaboration: A comprehensive review. Artif. Intell. Rev. 2023, 56, 4295–4327. [Google Scholar] [CrossRef]

- Husheng, W.U.; Hao, L.; Renbin, X.I.A.O. A blockchain bee colony double inhibition labor division algorithm for spatio-temporal coupling task with application to UAV swarm task allocation. J. Syst. Eng. Electron. 2021, 32, 1180–1199. [Google Scholar] [CrossRef]

- Dey, S.; Xu, H. Intelligent distributed swarm control for large-scale multi-uav systems: A hierarchical learning approach. Electronics 2022, 12, 89. [Google Scholar] [CrossRef]

- Alam, M.M.; Moh, S. Q-learning-based routing inspired by adaptive flocking control for collaborative unmanned aerial vehicle swarms. Veh. Commun. 2023, 40, 100572. [Google Scholar] [CrossRef]

- Neumann, P.P.; Bartholmai, M. Real-time wind estimation on a micro unmanned aerial vehicle using its inertial measurement unit. Sens. Actuators A Phys. 2015, 235, 300–310. [Google Scholar] [CrossRef]

- Feng, Z.; Guan, N.; Lv, M.; Liu, W.; Deng, Q.; Liu, X.; Yi, W. An efficient uav hijacking detection method using onboard inertial measurement unit. ACM Trans. Embed. Comput. Syst. (TECS) 2018, 17, 96. [Google Scholar] [CrossRef]

- Hoang, M.L.; Carratù, M.; Paciello, V.; Pietrosanto, A. Noise attenuation on IMU measurement for drone balance by sensor fusion. In Proceedings of the 2021 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Glasgow, UK, 17–20 May 2021; pp. 1–6. [Google Scholar]

- Chen, P.; Dang, Y.; Liang, R.; Zhu, W.; He, X. Real-time object tracking on a drone with multi-inertial sensing data. IEEE Trans. Intell. Transp. Syst. 2017, 19, 131–139. [Google Scholar] [CrossRef]

- Sa, I.; Kamel, M.; Burri, M.; Bloesch, M.; Khanna, R.; Popović, M.; Nieto, J.; Siegwart, R. Build your own visual-inertial drone: A cost-effective and open-source autonomous drone. IEEE Robot. Autom. Mag. 2017, 25, 89–103. [Google Scholar] [CrossRef]

- Daponte, P.; De Vito, L.; Mazzilli, G.; Picariello, F.; Rapuano, S.; Riccio, M. Metrology for drone and drone for metrology: Measurement systems on small civilian drones. In Proceedings of the 2015 IEEE Metrology for Aerospace (MetroAeroSpace), Benevento, Italy, 4–5 June 2015; pp. 306–311. [Google Scholar]

- Vanhie-Van Gerwen, J.; Geebelen, K.; Wan, J.; Joseph, W.; Hoebeke, J.; De Poorter, E. Indoor drone positioning: Accuracy and cost trade-off for sensor fusion. IEEE Trans. Veh. Technol. 2021, 71, 961–974. [Google Scholar] [CrossRef]

- Ouyang, W.; Wu, Y. A trident quaternion framework for inertial-based navigation part I: Rigid motion representation and computation. IEEE Trans. Aerosp. Electron. Syst. 2021, 58, 2409–2420. [Google Scholar] [CrossRef]

- De Alteriis, G.; Bottino, V.; Conte, C.; Rufino, G.; Moriello, R.S.L. Accurate attitude inizialization procedure based on MEMS IMU and magnetometer integration. In Proceedings of the 2021 IEEE 8th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Naples, Italy, 23–25 June 2021; pp. 1–6. [Google Scholar]

- D’Amato, E.; Nardi, V.A.; Notaro, I.; Scordamaglia, V. A particle filtering approach for fault detection and isolation of UAV IMU sensors: Design, implementation and sensitivity analysis. Sensors 2021, 21, 3066. [Google Scholar] [CrossRef]

- Di, J.; Kang, Y.; Ji, H.; Wang, X.; Chen, S.; Liao, F.; Li, K. Low-level control with actuator dynamics for multirotor UAVs. Robot. Intell. Autom. 2023, 43, 290–300. [Google Scholar] [CrossRef]

- Clausen, P.; Skaloud, J. On the calibration aspects of MEMS-IMUs used in micro UAVs for sensor orientation. In Proceedings of the 2020 IEEE/ION Position, Location and Navigation Symposium (PLANS), Portland, OR, USA, 20–23 April 2020; pp. 1457–1466. [Google Scholar]

- Dill, E.; Gutierrez, J.; Young, S.; Moore, A.; Scholz, A.; Bates, E.; Schmitt, K.; Doughty, J. A Predictive GNSS Performance Monitor for Autonomous Air Vehicles in Urban Environments. In Proceedings of the 34th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2021), St. Louis, MO, USA, 20–24 September 2021; pp. 125–137. [Google Scholar]

- Verma, M.K.; Yadav, M. Navigating the Agricultural Fields: Affordable GNSS and IMU-based System and Data Fusion for Automatic Agricultural Vehicle’s Navigation. In Proceedings of the 6th International Conference on VLSI, Communication and Signal Processing, Prayagraj, India, 12–14 October 2023; pp. 1–8. [Google Scholar]
