Accessibility of Motion Capture as a Tool for Sports Performance Enhancement for Beginner and Intermediate Cricket Players

Abstract

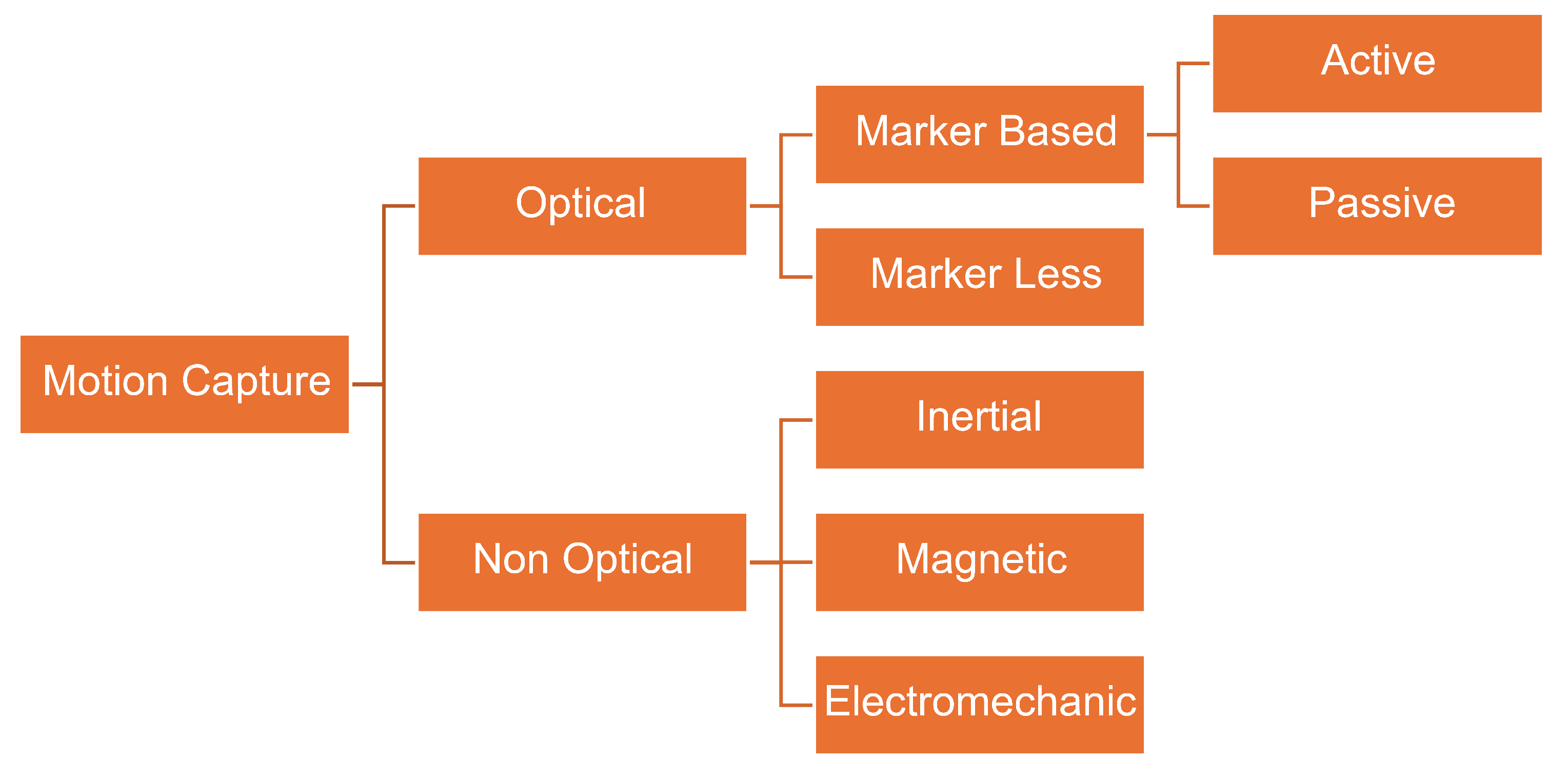

1. Introduction

2. MoCap in Medicine

3. MoCap in Sports

4. Other Fields

5. Cricket-Related Literature

5.1. Cricket Bowling

5.2. Cricket Batting

6. Cost-Effective MoCap Systems

7. Summary of the Literature

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Noiumkar, S.; Tirakoat, S. Use of optical motion capture in sports science: A case study of golf swing. In Proceedings of the 2013 International Conference on Informatics and Creative Multimedia, Kuala Lumpur, Malaysia, 4–6 September 2013; pp. 310–313.

2. Daria, B.; Martina, C.; Alessandro, P.; Fabio, S.; Valentina, V.; Zennaro, I. Integrating mocap system and immersive reality for efficient human-centred workstation design. IFAC-PapersOnLine 2018, 51, 188–193.

3. Moeslund, T.B.; Hilton, A.; Krüger, V. A survey of advances in vision-based human motion capture and analysis. Comput. Vis. Image Underst. 2006, 104, 90–126.

4. Mathis, A.; Schneider, S.; Lauer, J.; Mathis, M.W. A primer on motion capture with deep learning: Principles, pitfalls, and perspectives. Neuron 2020, 108, 44–65.

5. Guerra-Filho, G. Optical Motion Capture: Theory and Implementation. Res. Ital. Netw. Approx. 2005, 12, 61–90.

6. Menolotto, M.; Komaris, D.S.; Tedesco, S.; O’Flynn, B.; Walsh, M. Motion capture technology in industrial applications: A systematic review. Sensors 2020, 20, 5687.

7. Cloete, T.; Scheffer, C. Benchmarking of a full-body inertial motion capture system for clinical gait analysis. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 4579–4582.

8. Pfister, A.; West, A.M.; Bronner, S.; Noah, J.A. Comparative abilities of Microsoft Kinect and Vicon 3D motion capture for gait analysis. J. Med. Eng. Technol. 2014, 38, 274–280.

9. Raglio, A.; Panigazzi, M.; Colombo, R.; Tramontano, M.; Iosa, M.; Mastrogiacomo, S.; Baiardi, P.; Molteni, D.; Baldissarro, E.; Imbriani, C.; et al. Hand rehabilitation with sonification techniques in the subacute stage of stroke. Sci. Rep. 2021, 11, 7237.

10. Nikmaram, N.; Scholz, D.S.; Großbach, M.; Schmidt, S.B.; Spogis, J.; Belardinelli, P.; Müller-Dahlhaus, F.; Remy, J.; Ziemann, U.; Rollnik, J.D.; et al. Musical sonification of arm movements in stroke rehabilitation yields limited benefits. Front. Neurosci. 2019, 13, 1378.

11. Brückner, H.P.; Krüger, B.; Blume, H. Reliable orientation estimation for mobile motion capturing in medical rehabilitation sessions based on inertial measurement units. Microelectron. J. 2014, 45, 1603–1611.

12. Khandalavala, K.; Shimon, T.; Flores, L.; Armijo, P.R.; Oleynikov, D. Emerging surgical robotic technology: A progression toward microbots. Ann. Laparosc. Endosc. Surg. 2019, 5.

13. Bouget, D.; Allan, M.; Stoyanov, D.; Jannin, P. Vision-based and marker-less surgical tool detection and tracking: A review of the literature. Med. Image Anal. 2017, 35, 633–654.

14. Laribi, M.A.; Riviere, T.; Arsicault, M.; Zeghloul, S. A design of slave surgical robot based on motion capture. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 600–605.

15. DiMaio, S.; Hanuschik, M.; Kreaden, U. The da Vinci Surgical System. In Surgical Robotics: Systems Applications and Visions; Rosen, J., Hannaford, B., Satava, R.M., Eds.; Springer: New York, NY, USA, 2011; pp. 199–217.

16. Marescaux, J.; Rubino, F. The ZEUS robotic system: Experimental and clinical applications. Surg. Clin. 2003, 83, 1305–1315.

17. Yuminaka, Y.; Mori, T.; Watanabe, K.; Hasegawa, M.; Shirakura, K. Non-contact vital sensing systems using a motion capture device: Medical and healthcare applications. Key Eng. Mater. 2016, 698, 171–176.

18. Charbonnier, C.; Chagué, S.; Kevelham, B.; Preissmann, D.; Kolo, F.C.; Rime, O.; Lädermann, A. ArthroPlanner: A surgical planning solution for acromioplasty. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 2009–2019.

19. Karaliotas, C. When simulation in surgical training meets virtual reality. Hell. J. Surg. 2011, 83, 303–316.

20. Gavrilova, M.L.; Ahmed, F.; Bari, A.H.; Liu, R.; Liu, T.; Maret, Y.; Sieu, B.K.; Sudhakar, T. Multi-modal motion-capture-based biometric systems for emergency response and patient rehabilitation. In Research Anthology on Rehabilitation Practices and Therapy; IGI Global: Hershey, PA, USA, 2021; pp. 653–678.

21. Khoury, A.R. Motion capture for telemedicine: A review of Nintendo Wii, Microsoft Kinect, and PlayStation Move. J. Int. Soc. Telemed. eHealth 2018, 6, e14-1.

22. Armitano-Lago, C.; Willoughby, D.; Kiefer, A.W. A SWOT analysis of portable and low-cost markerless motion capture systems to assess lower-limb musculoskeletal kinematics in sport. Front. Sport. Act. Living 2022, 3, 809898.

23. Ortega, B.P.; Olmedo, J.M.J. Application of motion capture technology for sport performance analysis. Retos Nuevas Tendencias Educ. Física Deporte Recreación 2017, 32, 241–247.

24. Rana, M.; Mittal, V. Wearable sensors for real-time kinematics analysis in sports: A review. IEEE Sens. J. 2020, 21, 1187–1207.

25. Lee, C.J.; Lee, J.K. Inertial motion capture-based wearable systems for estimation of joint kinetics: A systematic review. Sensors 2022, 22, 2507.

26. Johnson, W.R.; Mian, A.; Donnelly, C.J.; Lloyd, D.; Alderson, J. Predicting athlete ground reaction forces and moments from motion capture. Med. Biol. Eng. Comput. 2018, 56, 1781–1792.

27. Godfrey, A.; Stuart, S.; Kenny, I.C.; Comyns, T.M. Methodological Considerations in Sports Science, Technology and Engineering. Front. Sport. Act. Living 2023, 5, 1294412.

28. Boddy, K.J.; Marsh, J.A.; Caravan, A.; Lindley, K.E.; Scheffey, J.O.; O’Connell, M.E. Exploring wearable sensors as an alternative to marker-based motion capture in the pitching delivery. PeerJ 2019, 7, e6365.

29. Punchihewa, N.G.; Yamako, G.; Fukao, Y.; Chosa, E. Identification of key events in baseball hitting using inertial measurement units. J. Biomech. 2019, 87, 157–160.

30. Wang, J.; Wang, Z.; Gao, F.; Zhao, H.; Qiu, S.; Li, J. Swimming stroke phase segmentation based on wearable motion capture technique. IEEE Trans. Instrum. Meas. 2020, 69, 8526–8538.

31. Hachaj, T.; Piekarczyk, M.; Ogiela, M.R. Human actions analysis: Templates generation, matching and visualization applied to motion capture of highly-skilled karate athletes. Sensors 2017, 17, 2590.

32. Szczęsna, A.; Błaszczyszyn, M.; Pawlyta, M. Optical motion capture dataset of selected techniques in beginner and advanced Kyokushin karate athletes. Sci. Data 2021, 8, 13.

33. Bregler, C. Motion capture technology for entertainment [in the spotlight]. IEEE Signal Process. Mag. 2007, 24, 158–160.

34. Pilati, F.; Faccio, M.; Gamberi, M.; Regattieri, A. Learning manual assembly through real-time motion capture for operator training with augmented reality. Procedia Manuf. 2020, 45, 189–195.

35. Bortolini, M.; Gamberi, M.; Pilati, F.; Regattieri, A. Automatic assessment of the ergonomic risk for manual manufacturing and assembly activities through optical motion capture technology. Procedia CIRP 2018, 72, 81–86.

36. Guo, W.J.; Chen, Y.R.; Chen, S.Q.; Yang, X.L.; Qin, L.J.; Liu, J.G. Design of Human Motion Capture System Based on Computer Vision. In Proceedings of the 5th International Conference on Advanced Design and Manufacturing Engineering, Shenzhen, China, 19–20 September 2015; pp. 1891–1894.

37. Yang, S.X.; Christiansen, M.S.; Larsen, P.K.; Alkjær, T.; Moeslund, T.B.; Simonsen, E.B.; Lynnerup, N. Markerless motion capture systems for tracking of persons in forensic biomechanics: An overview. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2014, 2, 46–65.

38. Tirakoat, S. Optimized motion capture system for full body human motion capturing case study of educational institution and small animation production. In Proceedings of the 2011 Workshop on Digital Media and Digital Content Management, Hangzhou, China, 15–16 May 2011; pp. 117–120.

39. Han, S.; Achar, M.; Lee, S.; Peña-Mora, F. Empirical assessment of a RGB-D sensor on motion capture and action recognition for construction worker monitoring. Vis. Eng. 2013, 1, 1–13.

40. Starbuck, R.; Seo, J.; Han, S.; Lee, S. A stereo vision-based approach to marker-less motion capture for on-site kinematic modeling of construction worker tasks. In Proceedings of the Computing in Civil and Building Engineering 2014, Orlando, FL, USA, 23–25 June 2014; pp. 1094–1101.

41. Seo, J.; Alwasel, A.; Lee, S.; Abdel-Rahman, E.M.; Haas, C. A comparative study of in-field motion capture approaches for body kinematics measurement in construction. Robotica 2019, 37, 928–946.

42. Baker, T. The History of Motion Capture within the Entertainment Industry. Ph.D. Thesis, Metropolia Ammattikorkeakoulu, Helsinki, Finland, 2020.

43. Patoli, M.Z.; Gkion, M.; Newbury, P.; White, M. Real time online motion capture for entertainment applications. In Proceedings of the 2010 Third IEEE International Conference on Digital Game and Intelligent Toy Enhanced Learning, Kaohsiung, Taiwan, 12–16 April 2010; pp. 139–145.

44. Kammerlander, R.K.; Pereira, A.; Alexanderson, S. Using virtual reality to support acting in motion capture with differently scaled characters. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisbon, Portugal, 27 March–1 April 2021; pp. 402–410.

45. Knyaz, V. Scalable photogrammetric motion capture system “mosca”: Development and application. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 43–49.

46. Chan, J.C.; Leung, H.; Tang, J.K.; Komura, T. A virtual reality dance training system using motion capture technology. IEEE Trans. Learn. Technol. 2010, 4, 187–195.

47. Kim, H.Y.; Lee, J.S.; Choi, H.L.; Han, J.H. Autonomous formation flight of multiple flapping-wing flying vehicles using motion capture system. Aerosp. Sci. Technol. 2014, 39, 596–604.

48. Wu, X.D.; Liu, H.; Mo, S.; Wu, Z.; Li, Y. Research of Maintainability Evaluation for Civil Aircrafts Based on the Motion Capture System. Appl. Mech. Mater. 2012, 198, 1062–1066.

49. Phunsa, S.; Potisarn, N.; Tirakoat, S. Edutainment–Thai Art of Self-Defense and Boxing by Motion Capture Technique. In Proceedings of the 2009 International Conference on Computer Modeling and Simulation, Cambridge, UK, 25–27 March 2009; pp. 152–155.

50. Parks, M.; Chien, J.H.; Siu, K.C. Development of a mobile motion capture (MO2CA) system for future military application. Mil. Med. 2019, 184, 65–71.

51. Aquila, I.; Sacco, M.A.; Aquila, G.; Raffaele, R.; Manca, A.; Capoccia, G.; Cordasco, F.; Ricci, P. The reconstruction of the dynamic of a murder using 3D motion capture and 3D model buildings: The investigation of a dubious forensic case. J. Forensic Sci. 2019, 64, 1540–1543.

52. Alonso, S.; López, D.; Puente, A.; Romero, A.; Álvarez, I.M.; Manero, B. Evaluation of a motion capture and virtual reality classroom for secondary school teacher training. In Proceedings of the 29th International Conference on Computers in Education, Virtual, 22–26 November 2021; pp. 327–332.

53. Yokokohji, Y.; Kitaoka, Y.; Yoshikawa, T. Motion capture from demonstrator’s viewpoint and its application to robot teaching. J. Robot. Syst. 2005, 22, 87–97.

54. Marshall, R.N.; Ferdinands, R. The biomechanics of the elbow in cricket bowling. Int. Sport. J. 2005, 6, 1–6.

55. Wells, D.; Alderson, J.; Camomilla, V.; Donnelly, C.; Elliott, B.; Cereatti, A. Elbow joint kinematics during cricket bowling using magneto-inertial sensors: A feasibility study. J. Sport. Sci. 2019, 37, 515–524.

56. Wixted, A.; Portus, M.; Spratford, W.; James, D. Detection of throwing in cricket using wearable sensors. Sport. Technol. 2011, 4, 134–140.

57. Kumar, A.; Espinosa, H.G.; Worsey, M.; Thiel, D.V. Spin rate measurements in cricket bowling using magnetometers. Proceedings 2020, 49, 11.

58. Spratford, W.; Whiteside, D.; Elliott, B.; Portus, M.; Brown, N.; Alderson, J. Does performance level affect initial ball flight kinematics in finger and wrist-spin cricket bowlers? J. Sport. Sci. 2018, 36, 651–659.

59. Harnett, K.; Plint, B.; Chan, K.Y.; Clark, B.; Netto, K.; Davey, P.; Müller, S.; Rosalie, S. Validating an inertial measurement unit for cricket fast bowling: A first step in assessing the feasibility of diagnosing back injury risk in cricket fast bowlers during a tele-sport-and-exercise medicine consultation. PeerJ 2022, 10, e13228.

60. Ferdinands, R.E.; Sinclair, P.J.; Stuelcken, M.C.; Greene, A. Rear leg kinematics and kinetics in cricket fast bowling. Sport. Technol. 2014, 7, 52–61.

61. Peploe, C.; King, M.; Harland, A. The effects of different delivery methods on the movement kinematics of elite cricket batsmen in repeated front foot drives. Procedia Eng. 2014, 72, 220–225.

62. Moodley, T.; van der Haar, D.; Noorbhai, H. Automated recognition of the cricket batting backlift technique in video footage using deep learning architectures. Sci. Rep. 2022, 12, 1895.

63. Peploe, C. The Kinematics of Batting against Fast Bowling in Cricket. Ph.D. Thesis, Loughborough University, Loughborough, UK, 2016.

64. McErlain-Naylor, S.; Peploe, C.; Felton, P.; King, M. Hitting for Six: Cricket Power Hitting Biomechanics. 2022. Available online: https://www.stuartmcnaylor.com/publication/cricket_BASES/McErlain-Naylor_et_al_2022.pdf (accessed on 9 November 2023).

65. Peploe, C.; McErlain-Naylor, S.A.; Harland, A.; King, M. Relationships between technique and bat speed, post-impact ball speed, and carry distance during a range hitting task in cricket. Hum. Mov. Sci. 2019, 63, 34–44.

66. Callaghan, S.J.; Lockie, R.G.; Jeffriess, M.D. The acceleration kinematics of cricket-specific starts when completing a quick single. Sport. Technol. 2014, 7, 39–51.

67. Sarkar, A.K. Bat Swing Analysis in Cricket. Ph.D. Thesis, Griffith School of Engineering, Nathan, QLD, Australia, 2013.

68. Siddiqui, H.U.R.; Younas, F.; Rustam, F.; Flores, E.S.; Ballester, J.B.; Diez, I.d.l.T.; Dudley, S.; Ashraf, I. Enhancing Cricket Performance Analysis with Human Pose Estimation and Machine Learning. Sensors 2023, 23, 6839.

69. Stuelcken, M.; Portus, M.; Mason, B. Cricket: Off-side front foot drives in men’s high performance Cricket. Sport. Biomech. 2005, 4, 17–35.

70. Dhawan, A.; Cummins, A.; Spratford, W.; Dessing, J.C.; Craig, C. Development of a novel immersive interactive virtual reality cricket simulator for cricket batting. In Proceedings of the 10th International Symposium on Computer Science in Sports (ISCSS), Loughborough, UK, 9–11 September 2015; pp. 203–210.

71. Curtis, K.M.; Kelly, M.; Craven, M.P. Cricket batting technique analyser/trainer using fuzzy logic. In Proceedings of the 2009 16th International Conference on Digital Signal Processing, Santorini, Greece, 5–7 July 2009; pp. 1–6.

72. Kelly, M.; Curtis, K.; Craven, M. Fuzzy recognition of cricket batting strokes based on sequences of body and bat postures. In Proceedings of the IEEE SoutheastCon, Ocho Rios, Jamaica, 4–6 April 2003; pp. 140–147.

73. Callaghan, J.S.; Jeffriess, D.M.; Mackie, L.S.; Jalilvand, F.; Lockie, G.R. The impact of a rolling start on the sprint velocity and acceleration kinematics of a quick single in regional first grade cricketers. Int. J. Perform. Anal. Sport 2015, 15, 794–808.

74. Thewlis, D.; Bishop, C.; Daniell, N.; Paul, G. Next-generation low-cost motion capture systems can provide comparable spatial accuracy to high-end systems. J. Appl. Biomech. 2013, 29, 112–117.

75. Regazzoni, D.; De Vecchi, G.; Rizzi, C. RGB cams vs RGB-D sensors: Low cost motion capture technologies performances and limitations. J. Manuf. Syst. 2014, 33, 719–728.

76. Chatzitofis, A.; Zarpalas, D.; Daras, P.; Kollias, S. DeMoCap: Low-cost marker-based motion capture. Int. J. Comput. Vis. 2021, 129, 3338–3366.

77. Sgrò, F.; Nicolosi, S.; Schembri, R.; Pavone, M.; Lipoma, M. Assessing vertical jump developmental levels in childhood using a low-cost motion capture approach. Percept. Mot. Skills 2015, 120, 642–658.

78. Robert-Lachaine, X.; Mecheri, H.; Muller, A.; Larue, C.; Plamondon, A. Validation of a low-cost inertial motion capture system for whole-body motion analysis. J. Biomech. 2020, 99, 109520.

79. Lee, Y.; Yoo, H. Low-cost 3D motion capture system using passive optical markers and monocular vision. Optik 2017, 130, 1397–1407.

80. Choe, N.; Zhao, H.; Qiu, S.; So, Y. A sensor-to-segment calibration method for motion capture system based on low cost MIMU. Measurement 2019, 131, 490–500.

81. Patrizi, A.; Pennestrì, E.; Valentini, P.P. Comparison between low-cost marker-less and high-end marker-based motion capture systems for the computer-aided assessment of working ergonomics. Ergonomics 2016, 59, 155–162.

82. Sama, M.; Pacella, V.; Farella, E.; Benini, L.; Riccó, B. 3dID: A low-power, low-cost hand motion capture device. In Proceedings of the Design Automation & Test in Europe Conference, Munich, Germany, 6–10 March 2006; Volume 2, p. 6.

83. Raghavendra, P.; Sachin, M.; Srinivas, P.; Talasila, V. Design and development of a real-time, low-cost IMU based human motion capture system. In Proceedings of the Computing and Network Sustainability: Proceedings of IRSCNS 2016, Goa, India, 1–2 July 2016; pp. 155–165.

84. Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A convolutional network for real-time 6-DOF camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2938–2946.

85. Gladh, S.; Danelljan, M.; Khan, F.S.; Felsberg, M. Deep motion features for visual tracking. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 1243–1248.

86. Fang, H.S.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.L.; Lu, C. AlphaPose: Whole-body regional multi-person pose estimation and tracking in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.

87. Mosna, P. Integrated Approaches Supported by Novel Technologies in Functional Assessment and Rehabilitation. Ph.D. Thesis, Università Degli Studi di Brescia, Brescia, Italy, 2021.

88. Li, J.; Su, W.; Wang, Z. Simple pose: Rethinking and improving a bottom-up approach for multi-person pose estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11354–11361.

89. Achilles, F.; Ichim, A.E.; Coskun, H.; Tombari, F.; Noachtar, S.; Navab, N. Patient MoCap: Human pose estimation under blanket occlusion for hospital monitoring applications. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016: 19th International Conference, Athens, Greece, 17–21 October 2016; pp. 491–499.

90. Kiely, N.; Pickering Rodriguez, L.; Watsford, M.; Reddin, T.; Hardy, S.; Duffield, R. The influence of technique and physical capacity on ball release speed in cricket fast-bowling. J. Sport. Sci. 2021, 39, 2361–2369.

91. Kishita, Y.; Ueda, H.; Kashino, M. Temporally coupled coordination of eye and body movements in baseball batting for a wide range of ball speeds. Front. Sport. Act. Living 2020, 2, 64.

92. Jayaraj, L.; Wood, J.; Gibson, M. Improving the immersion in virtual reality with real-time avatar and haptic feedback in a cricket simulation. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 310–314.

93. Gao, L.; Zhang, G.; Yu, B.; Qiao, Z.; Wang, J. Wearable human motion posture capture and medical health monitoring based on wireless sensor networks. Measurement 2020, 166, 108252.

94. Haque, M.R.; Imtiaz, M.H.; Kwak, S.T.; Sazonov, E.; Chang, Y.H.; Shen, X. A Lightweight Exoskeleton-Based Portable Gait Data Collection System. Sensors 2021, 21, 781.

95. Kim, H.g.; Lee, J.w.; Jang, J.; Han, C.; Park, S. Mechanical design of an exoskeleton for load-carrying augmentation. In Proceedings of the IEEE ISR 2013, Seoul, Republic of Korea, 24–26 October 2013; pp. 1–5.

96. Lafayette, T.B.d.G.; Kunst, V.H.d.L.; Melo, P.V.d.S.; Guedes, P.d.O.; Teixeira, J.M.X.N.; Vasconcelos, C.R.d.; Teichrieb, V.; Da Gama, A.E.F. Validation of angle estimation based on body tracking data from RGB-D and RGB cameras for biomechanical assessment. Sensors 2022, 23, 3.

97. Palani, P.; Panigrahi, S.; Jammi, S.A.; Thondiyath, A. Real-time joint angle estimation using MediaPipe framework and inertial sensors. In Proceedings of the 2022 IEEE 22nd International Conference on Bioinformatics and Bioengineering (BIBE), Taichung, Taiwan, 7–9 November 2022; pp. 128–133.

| Method | Accuracy | Setup Complexity | Cost | Environmental Needs | Prior Usage (Refs.) |
|---|---|---|---|---|---|
| Optical (Marker-based) | Very accurate; captures detailed movements. | Complex; requires many cameras and careful marker placement. | Expensive; requires many cameras and substantial computing power. | Controlled lighting and an unobstructed view of the markers. | [5,18,26,44,45,46,51,60,66,70,73,76,89,90,91] |
| Optical (Marker-less) | Very accurate; quality depends on the software. | Medium to complex; depends on the software. | Medium to expensive; requires advanced software. | Clear view of the person; sensitive to the surroundings. | [1,20,22,35,76,92] |
| Inertial | Quite accurate; may drift and lose accuracy over time. | Easy to medium; no external cameras needed. | Medium; requires sensors and processors. | Very flexible; works almost anywhere but needs initial calibration. | [2,7,11,28,31,59,76,80,93] |
| Magnetic | Fairly accurate; can be disrupted by nearby metal. | Medium; involves placing magnetic sensors. | Medium to expensive; requires specialized equipment. | Metal must be kept out of the capture area. | [71,80] |
| Mechanical | Quite accurate; measures joint movement directly. | Medium; involves wearing a suit fitted with sensors. | Medium; suits and sensors can be pricey. | Suit must fit well; can restrict movement. | [94,95] |
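The inertial row above notes that accuracy can degrade over time. A major cause is drift: orientation is obtained by integrating the gyroscope's angular rate, so any small constant bias is integrated too and the angle error grows with time. The sketch below is a minimal illustration under hypothetical assumptions (a stationary sensor, a constant 0.5 deg/s gyroscope bias, a noise-free accelerometer tilt reference, 100 Hz sampling). It uses a simple complementary filter, which is only one of several sensor-fusion approaches (Kalman-type filters are also common) and is not claimed to be the method of any system cited here; `simulate_tilt` and its parameters are illustrative names.

```python
def simulate_tilt(duration_s=60.0, dt=0.01, gyro_bias_dps=0.5, alpha=0.98):
    """Illustrative sketch: drift of a biased gyroscope on a stationary sensor.

    Hypothetical assumptions: the true tilt is 0 deg throughout, the gyroscope
    has a constant bias of gyro_bias_dps (deg/s), and the accelerometer gives a
    noise-free 0 deg tilt reference. Returns (gyro_only_deg, fused_deg).
    """
    steps = int(round(duration_s / dt))
    true_angle = 0.0
    gyro_only = 0.0  # integrating the gyro alone: error grows linearly with time
    fused = 0.0      # complementary filter: gyro short-term, accelerometer long-term
    for _ in range(steps):
        measured_rate = 0.0 + gyro_bias_dps  # true rate is 0; the reading carries the bias
        accel_angle = true_angle             # gravity-based tilt reference
        gyro_only += measured_rate * dt
        fused = alpha * (fused + measured_rate * dt) + (1.0 - alpha) * accel_angle
    return gyro_only, fused


if __name__ == "__main__":
    drift, fused = simulate_tilt()
    print(f"gyro only after 60 s: {drift:.2f} deg")  # 30.00 deg
    print(f"complementary filter: {fused:.3f} deg")  # 0.245 deg
```

With these assumed values, pure integration drifts by 30° after one minute, whereas the filtered estimate settles at alpha × bias × dt / (1 − alpha) ≈ 0.245°. The small residual offset is one reason inertial systems typically still need a calibration step to estimate and remove sensor bias.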

| Sport | Marker-Based Optical | Marker-Less Optical | Inertial & Magnetic | Mechanical |
|---|---|---|---|---|
| Golf | ⊗ | | | |
| Baseball | ⊗ | | | |
| Gymnastics | ⊗ | ⊗ | | |
| Diving | ⊗ | ⊗ | | |
| Basketball | ⊗ | ⊗ | | |
| Football (Soccer/American) | ⊗ | ⊗ | | |
| Athletics | ⊗ | ⊗ | | |
| Cycling | ⊗ | ⊗ | | |
| Skiing | ⊗ | ⊗ | | |
| Rowing | ⊗ | | | |
| Canoeing | ⊗ | | | |
| Tennis | ⊗ | | | |
| Mixed Martial Arts | ⊗ | ⊗ | ⊗ | |
| Boxing | ⊗ | ⊗ | ⊗ | |
| Cricket | ⊗ | ⊗ | ⊗ | |
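Cricket appears in the table with marker-based, marker-less, and inertial systems. Whatever the capture method, a common downstream step in bowling analysis is deriving elbow angles from estimated shoulder, elbow, and wrist positions; under ICC regulations, elbow extension between the moment the upper arm reaches horizontal and ball release may not exceed 15°. The sketch below assumes 3D joint-centre coordinates are already available from some MoCap system. The functions `joint_angle_deg`, `elbow_extension_deg`, and the planar test helper `arm` are hypothetical illustrations, and this simplified geometry is not a substitute for validated laboratory testing protocols.

```python
import math
from typing import Sequence, Tuple

Point3 = Sequence[float]
Frame = Tuple[Point3, Point3, Point3]  # (shoulder, elbow, wrist) joint centres


def joint_angle_deg(proximal: Point3, joint: Point3, distal: Point3) -> float:
    """Included angle at `joint`, in degrees; 180 means the segments are aligned."""
    u = [p - j for p, j in zip(proximal, joint)]  # joint -> proximal segment vector
    v = [d - j for d, j in zip(distal, joint)]    # joint -> distal segment vector
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("joint positions must not coincide")
    cos_theta = max(-1.0, min(1.0, dot / norm))  # clamp rounding error before acos
    return math.degrees(math.acos(cos_theta))


def elbow_extension_deg(frame_uah: Frame, frame_release: Frame) -> float:
    """Elbow extension from upper-arm-horizontal (UAH) to ball release, in degrees.

    Flexion is taken as 180 minus the included elbow angle, so a positive
    result means the arm straightened between the two frames.
    """
    flexion_uah = 180.0 - joint_angle_deg(*frame_uah)
    flexion_release = 180.0 - joint_angle_deg(*frame_release)
    return flexion_uah - flexion_release


def arm(flexion_deg: float) -> Frame:
    """Hypothetical planar test arm: elbow at the origin, shoulder one unit behind."""
    a = math.radians(flexion_deg)
    return ((-1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (math.cos(a), math.sin(a), 0.0))


if __name__ == "__main__":
    # Hypothetical frames: 20 deg of flexion at UAH, 8 deg at ball release.
    print(f"elbow extension: {elbow_extension_deg(arm(20.0), arm(8.0)):.1f} deg")  # 12.0 deg
```

The same angle function applies to marker trajectories, pose-estimation keypoints, or segment positions reconstructed from inertial sensors. What differs between methods is how accurately the joint centres themselves are estimated.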

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maduwantha, K.; Jayaweerage, I.; Kumarasinghe, C.; Lakpriya, N.; Madushan, T.; Tharanga, D.; Wijethunga, M.; Induranga, A.; Gunawardana, N.; Weerakkody, P.; et al. Accessibility of Motion Capture as a Tool for Sports Performance Enhancement for Beginner and Intermediate Cricket Players. Sensors 2024, 24, 3386. https://doi.org/10.3390/s24113386
