When Trustworthiness Meets Face: Facial Design for Social Robots

Abstract

1. Introduction

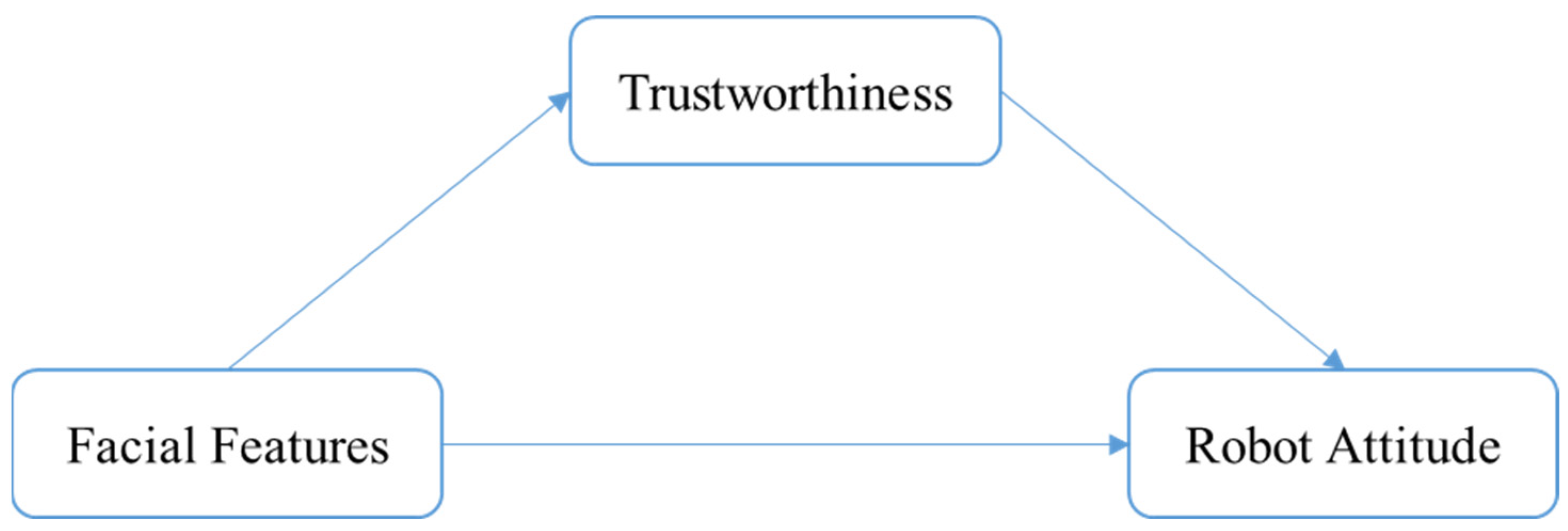

2. Literature Review

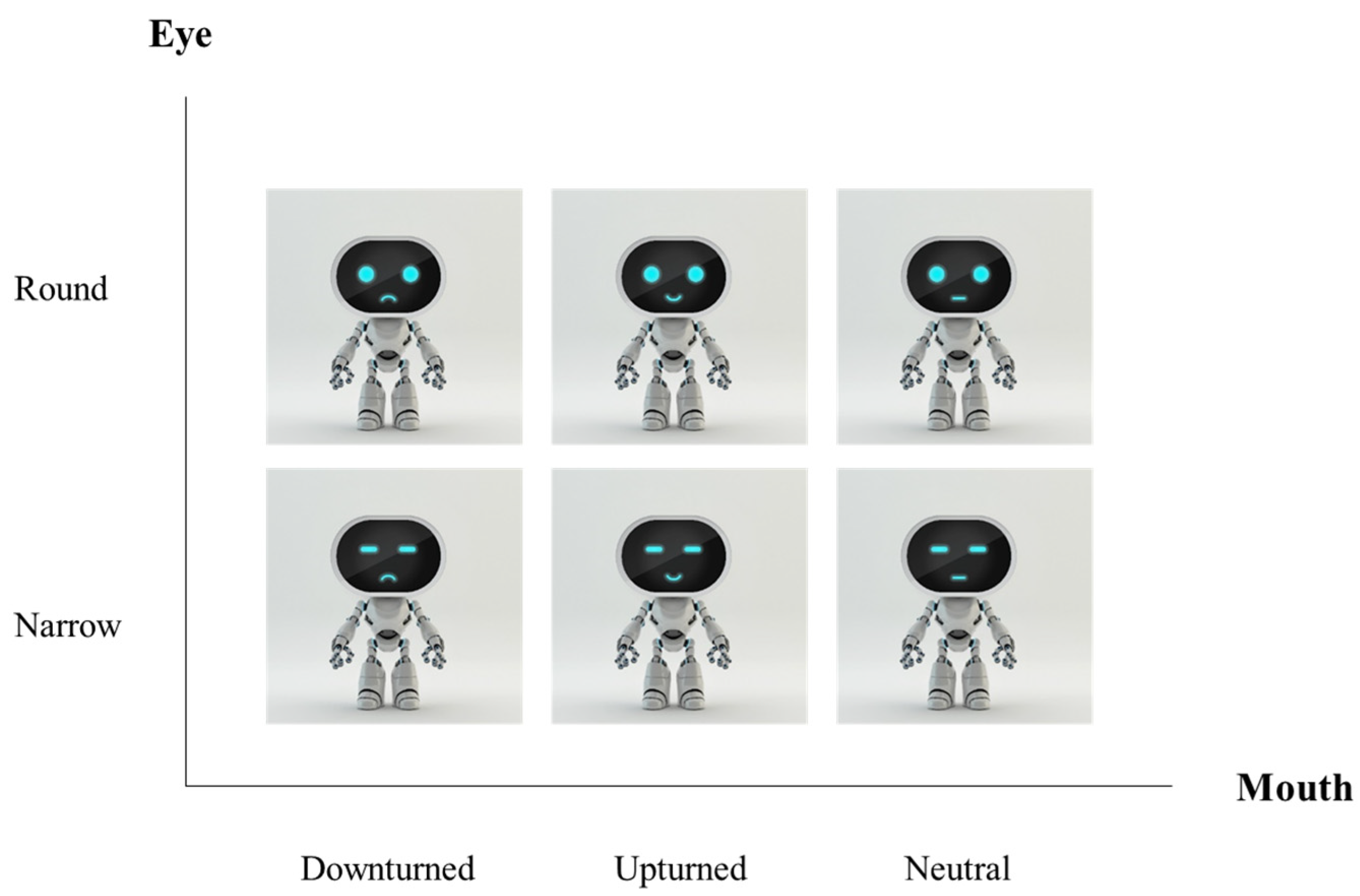

3. Methodology

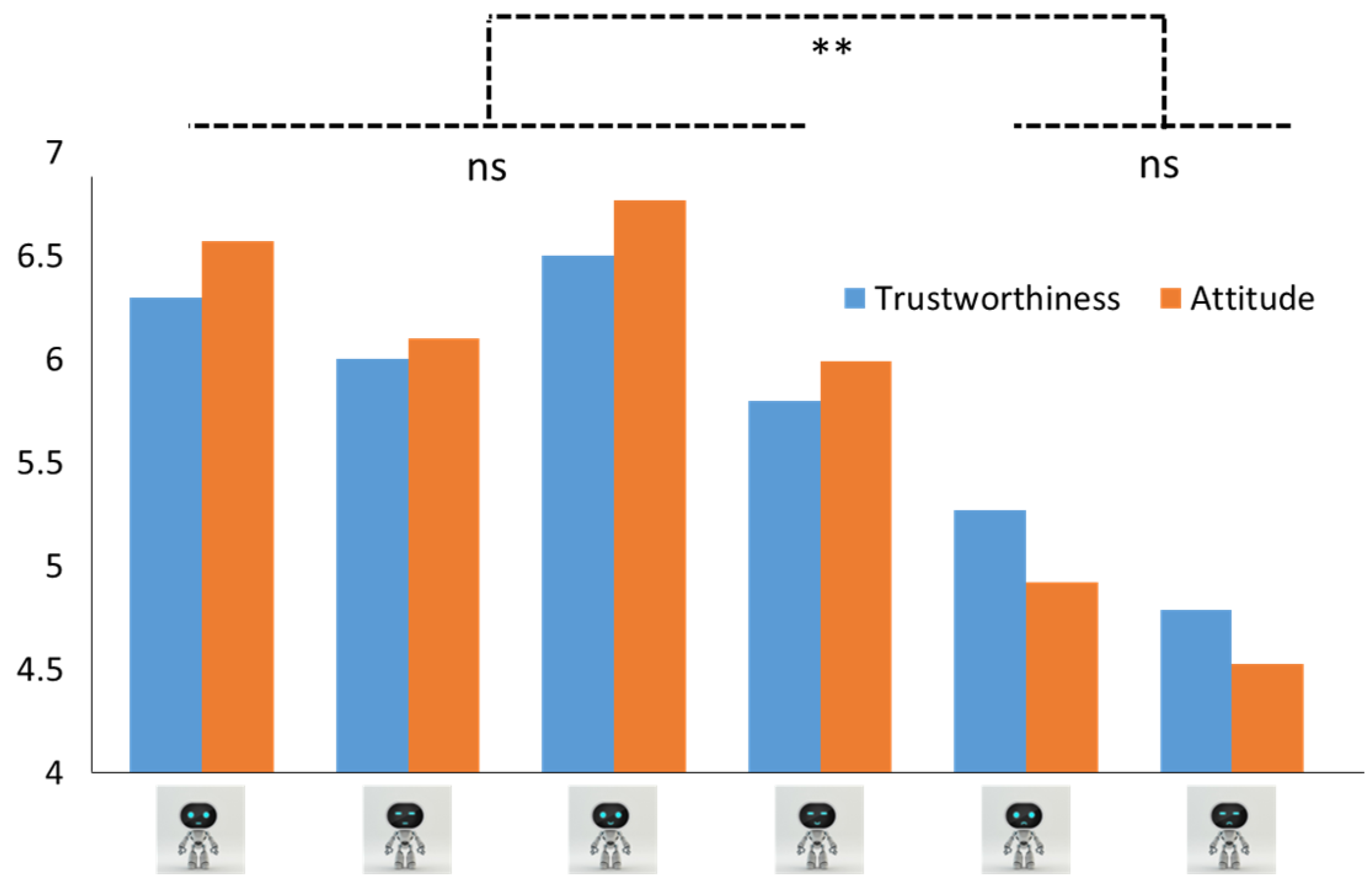

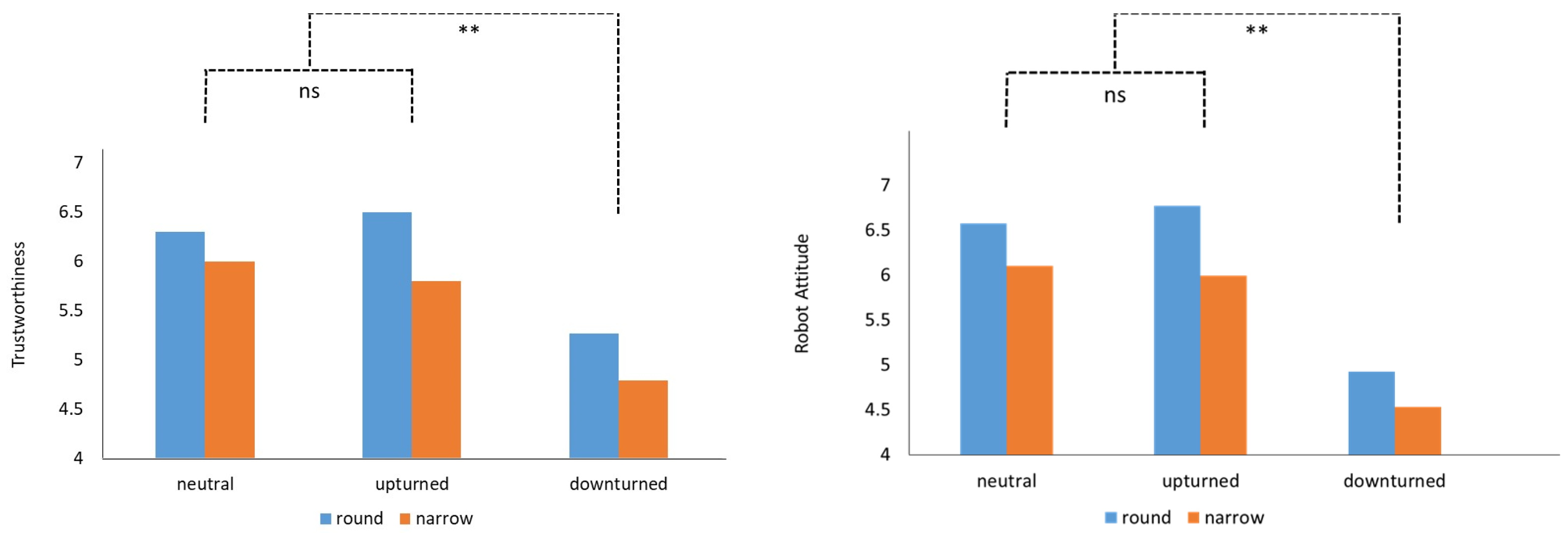

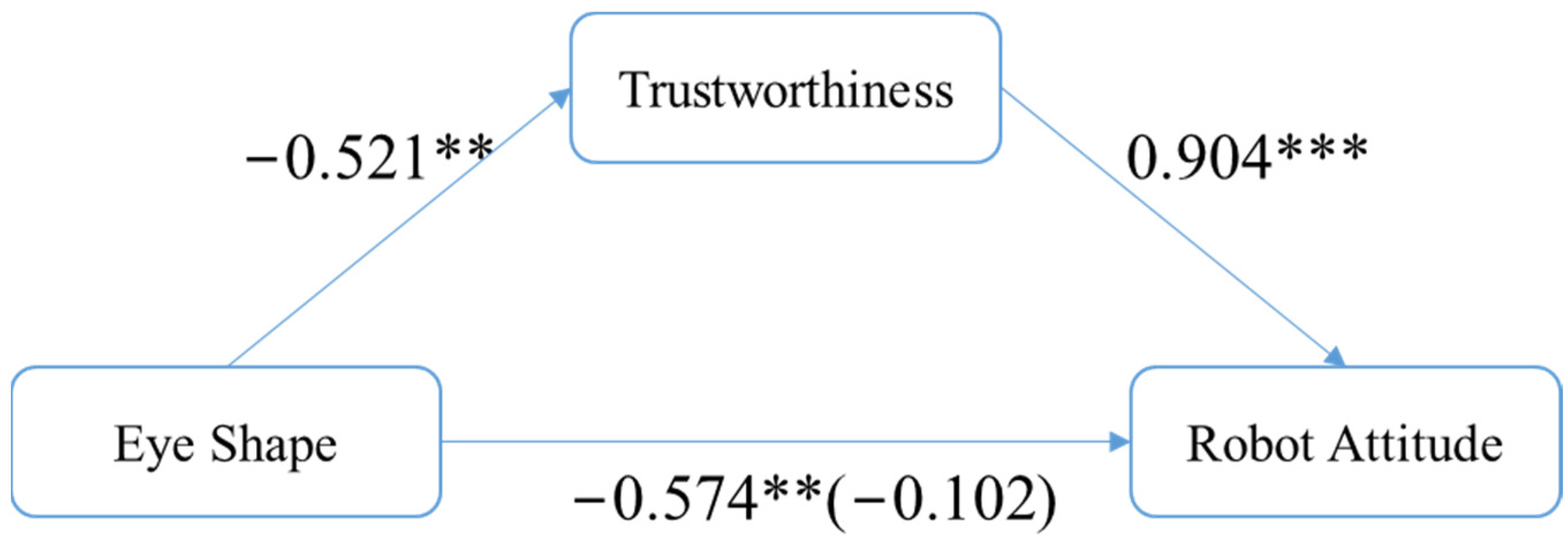

4. Analysis and Results

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, Y.; Luximon, Y. When Trustworthiness Meets Face: Facial Design for Social Robots. Sensors 2024, 24, 4215. https://doi.org/10.3390/s24134215
