Online Scene Semantic Understanding Based on Sparsely Correlated Network for AR

Abstract

1. Introduction

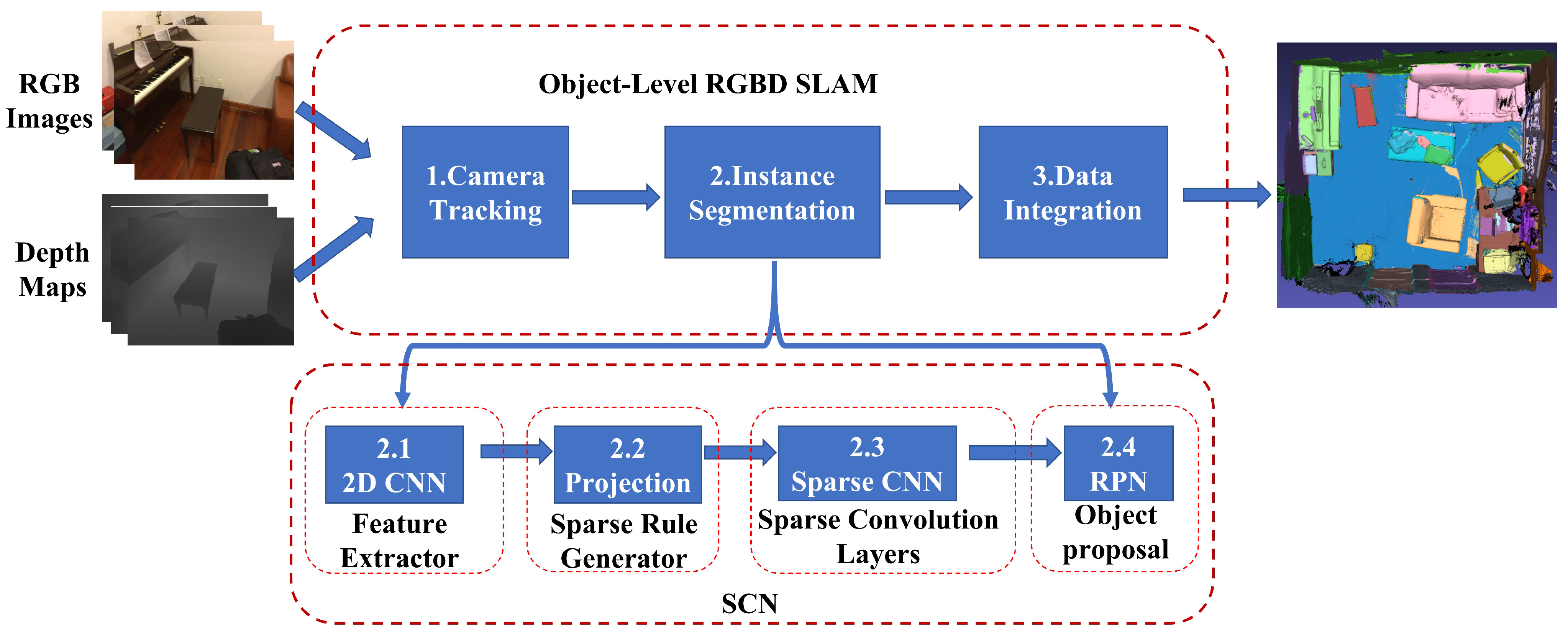

- We introduce SCN, a novel neural network architecture that uses sparse convolution for online instance segmentation. Combining this instance segmentation network with an RGB-D SLAM system yields object-level scene understanding.

- We identify projective associations between contexts and use hash tables to organize the sparse data that lack such associations. We also design a sparse convolution architecture that processes only the data without context relevance, which allows the system to meet the real-time requirement.

- We devise a sparse rule for sparse convolution and develop a grouping strategy for dense data by leveraging spatial topological structures and temporal correlation properties.
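The hash-table organization and the sparse rule named in the contributions above can be illustrated with a generic submanifold-style sparse convolution. This is a minimal sketch under stated assumptions, not the authors' SCN: the function names (`build_hash_table`, `build_rulebook`, `sparse_conv`), the 3 × 3 × 3 kernel, and the toy voxel data are all hypothetical, and the rule here uses only spatial neighbourhoods, without the temporal correlation the paper describes.

```python
from itertools import product

import numpy as np


def build_hash_table(coords):
    """Map each active voxel coordinate (x, y, z) to its row index."""
    return {tuple(int(v) for v in c): i for i, c in enumerate(coords)}


def build_rulebook(coords, table, kernel_size=3):
    """For every kernel offset, collect (input_row, output_row) pairs.

    Outputs are restricted to voxels that are already active, so the
    set of active sites does not grow from layer to layer.
    """
    r = kernel_size // 2
    rules = {}
    for offset in product(range(-r, r + 1), repeat=3):
        pairs = []
        for out_idx, c in enumerate(coords):
            neighbour = tuple(int(c[k]) + offset[k] for k in range(3))
            in_idx = table.get(neighbour)  # hash lookup, no dense grid
            if in_idx is not None:
                pairs.append((in_idx, out_idx))
        if pairs:
            rules[offset] = np.asarray(pairs, dtype=np.int64)
    return rules


def sparse_conv(features, rules, weights, num_out):
    """Gather inputs per offset, apply that offset's weight, scatter-add."""
    out = np.zeros((features.shape[0], num_out), dtype=features.dtype)
    for offset, pairs in rules.items():
        contrib = features[pairs[:, 0]] @ weights[offset]
        np.add.at(out, pairs[:, 1], contrib)
    return out


# Toy data (hypothetical): two adjacent voxels and one isolated voxel.
coords = np.array([[0, 0, 0], [1, 0, 0], [5, 5, 5]])
features = np.ones((3, 1))
table = build_hash_table(coords)
rules = build_rulebook(coords, table)
# All-ones 1x1 weights, so each output counts its active neighbours.
weights = {offset: np.ones((1, 1)) for offset in rules}
out = sparse_conv(features, rules, weights, num_out=1)
print(out[:, 0].tolist())
```

With all-ones weights, each output value is the number of active voxels inside the kernel window, so the work done scales with the number of active sites rather than with the volume of the grid.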

2. Related Work

3. Method

3.1. Camera Tracking

3.2. Instance Segmentation

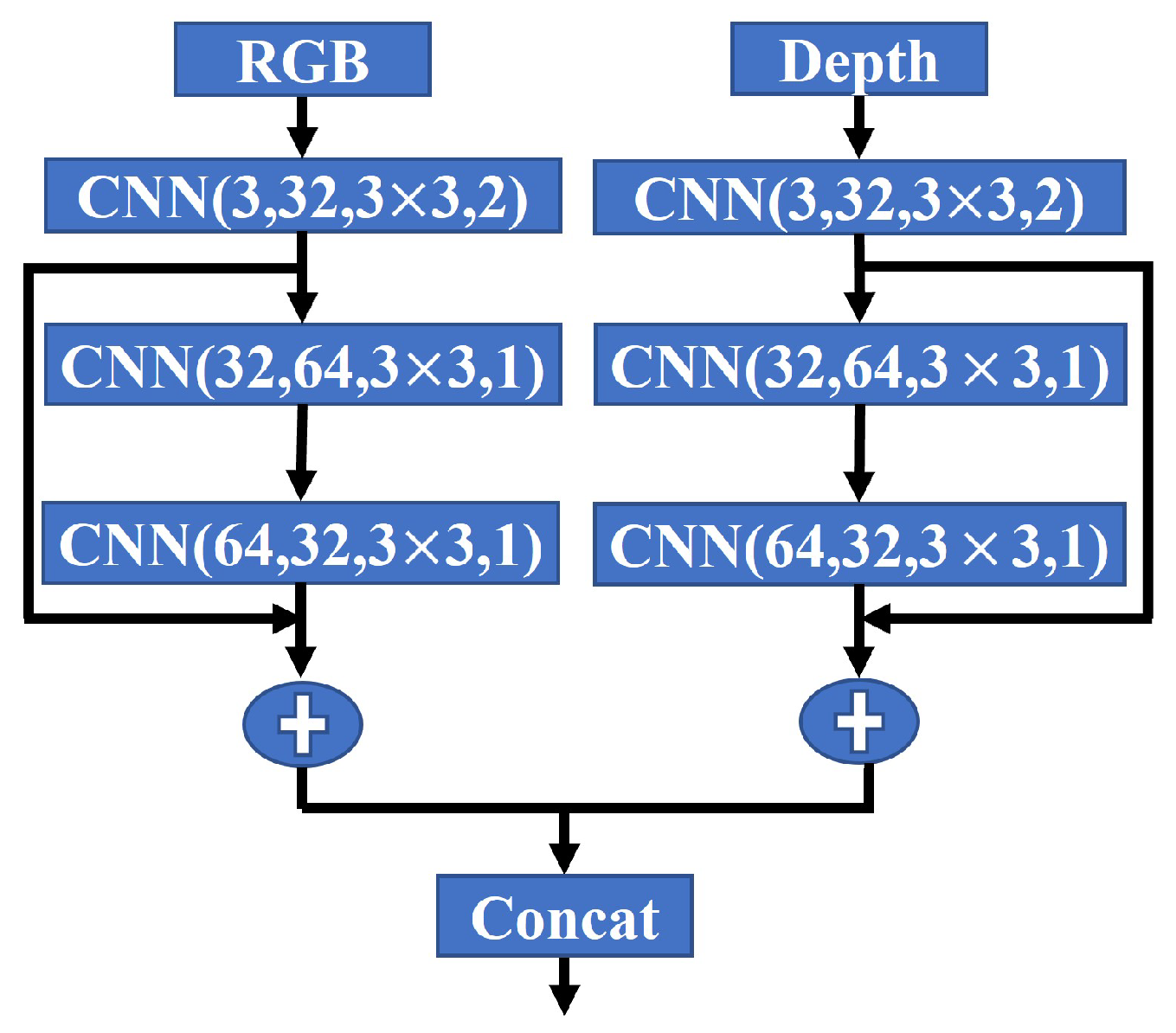

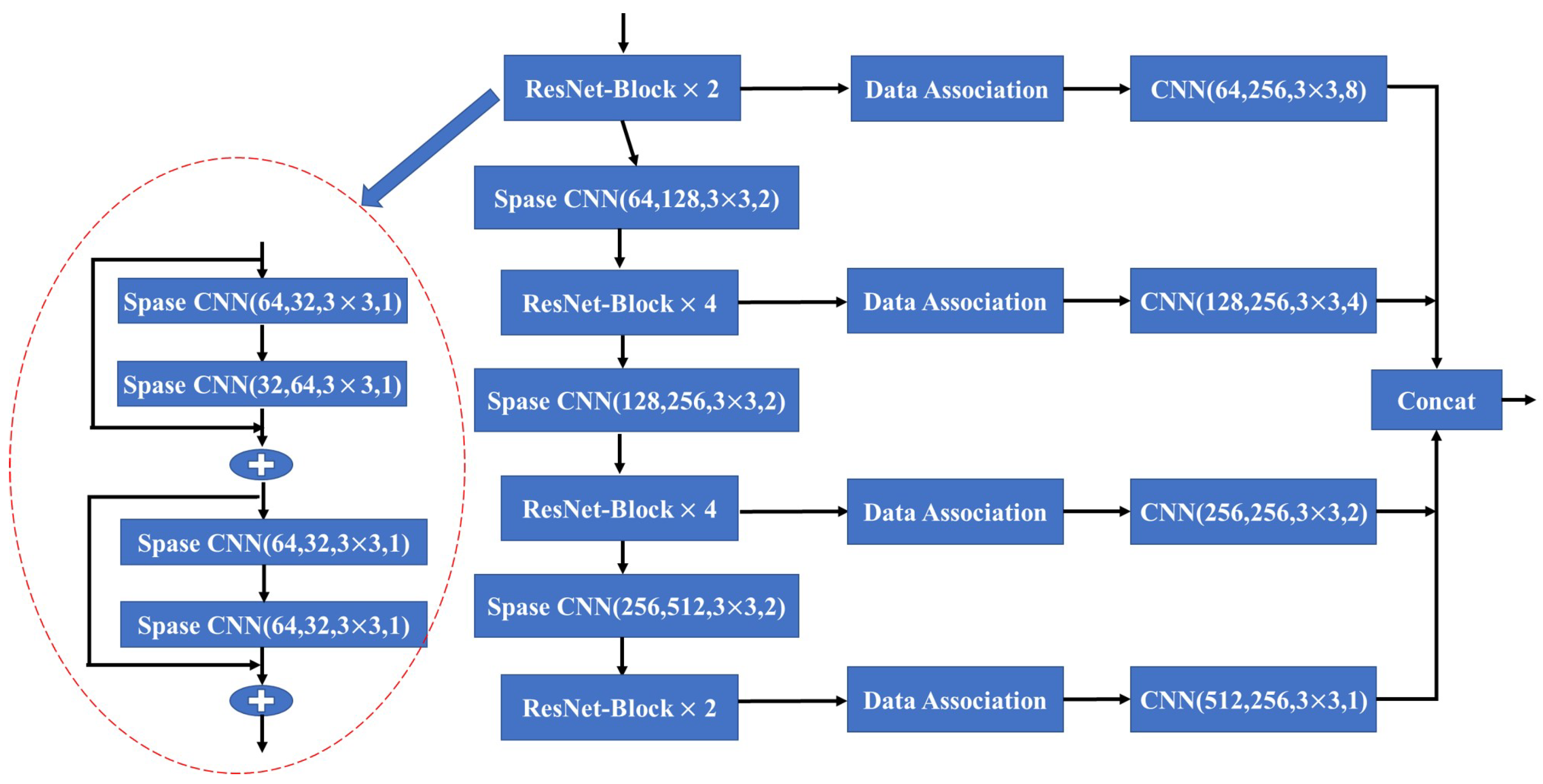

3.2.1. Feature Extractor

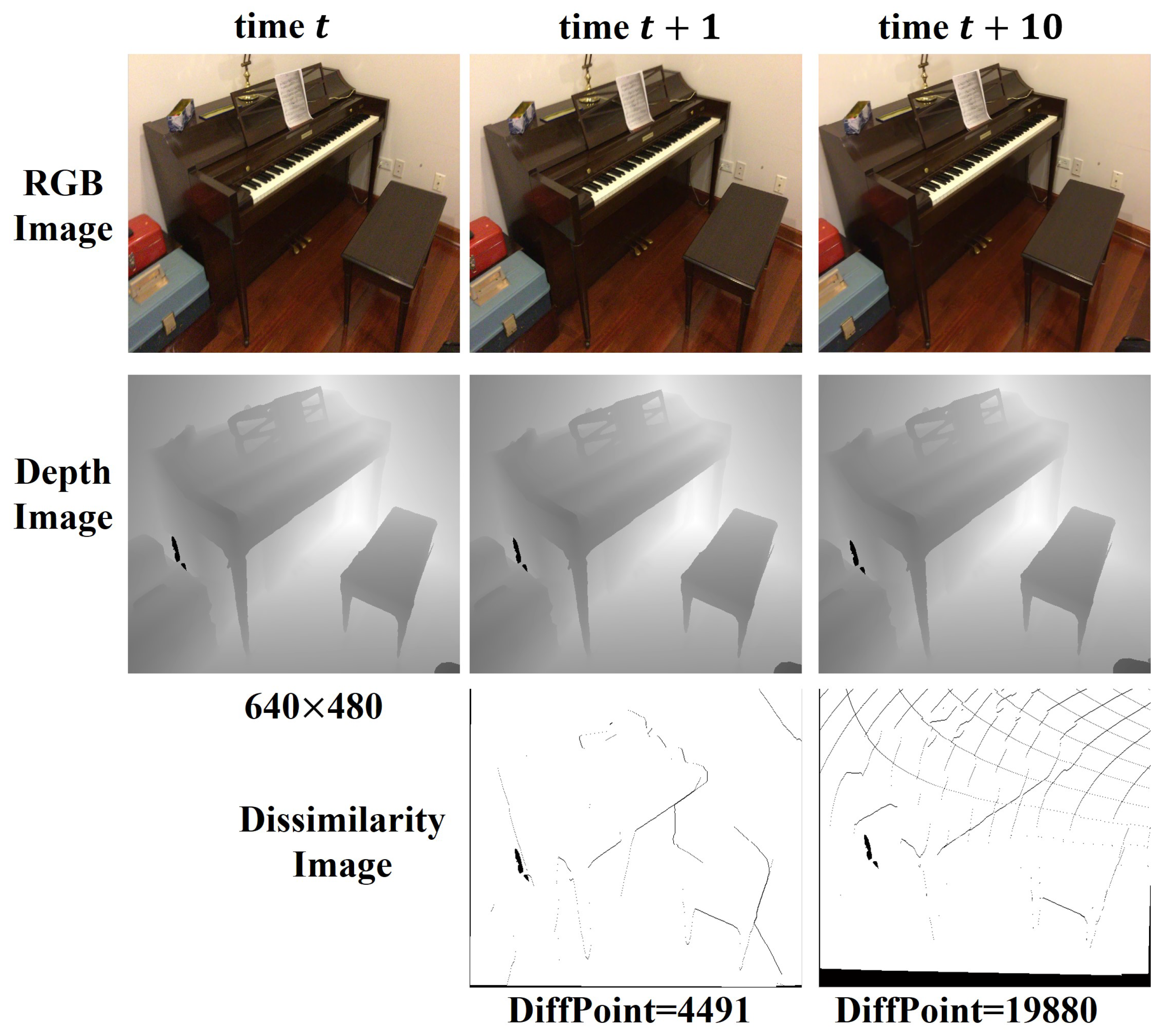

3.2.2. Sparse Rule Generator

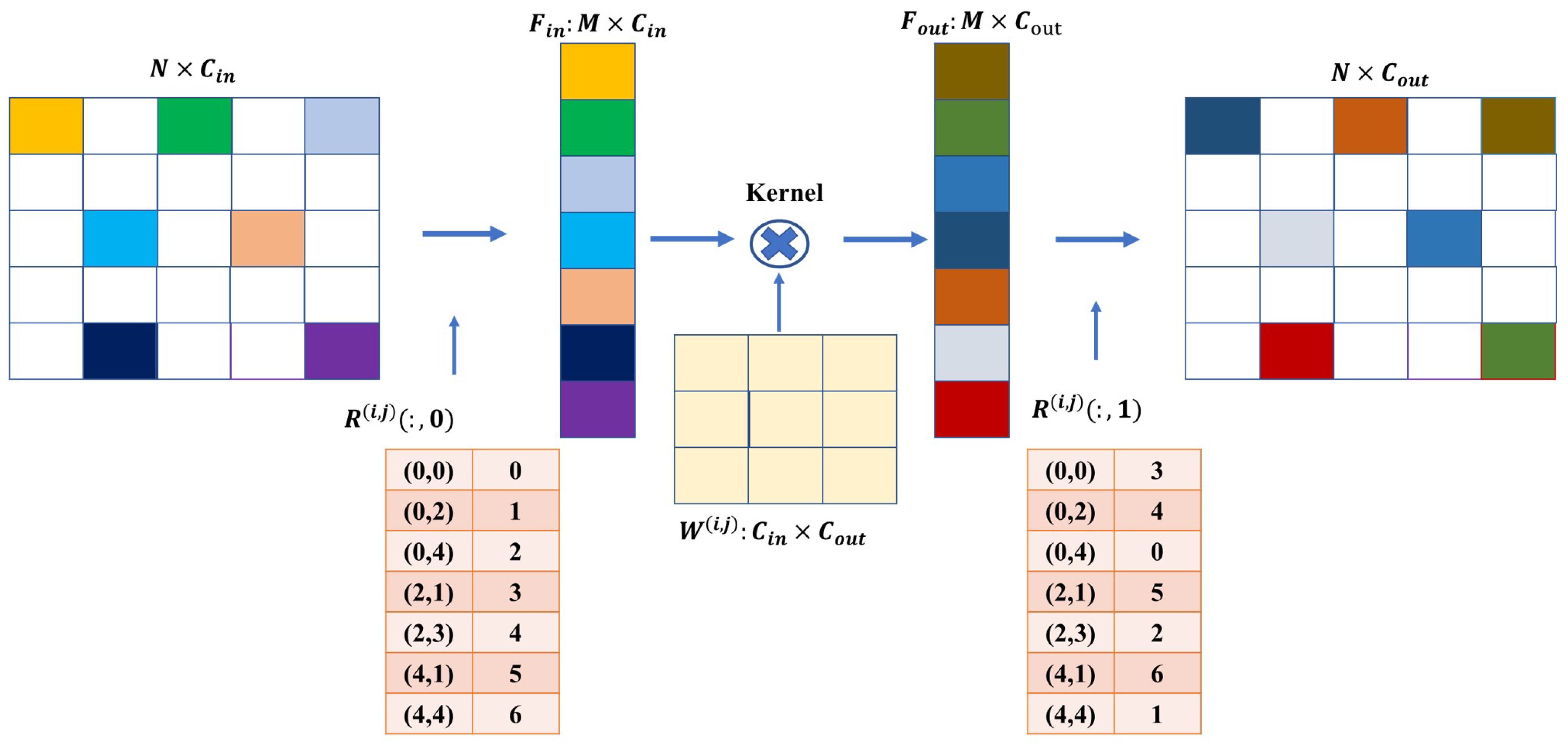

3.2.3. Sparse Convolution Layers

3.2.4. Object Proposal

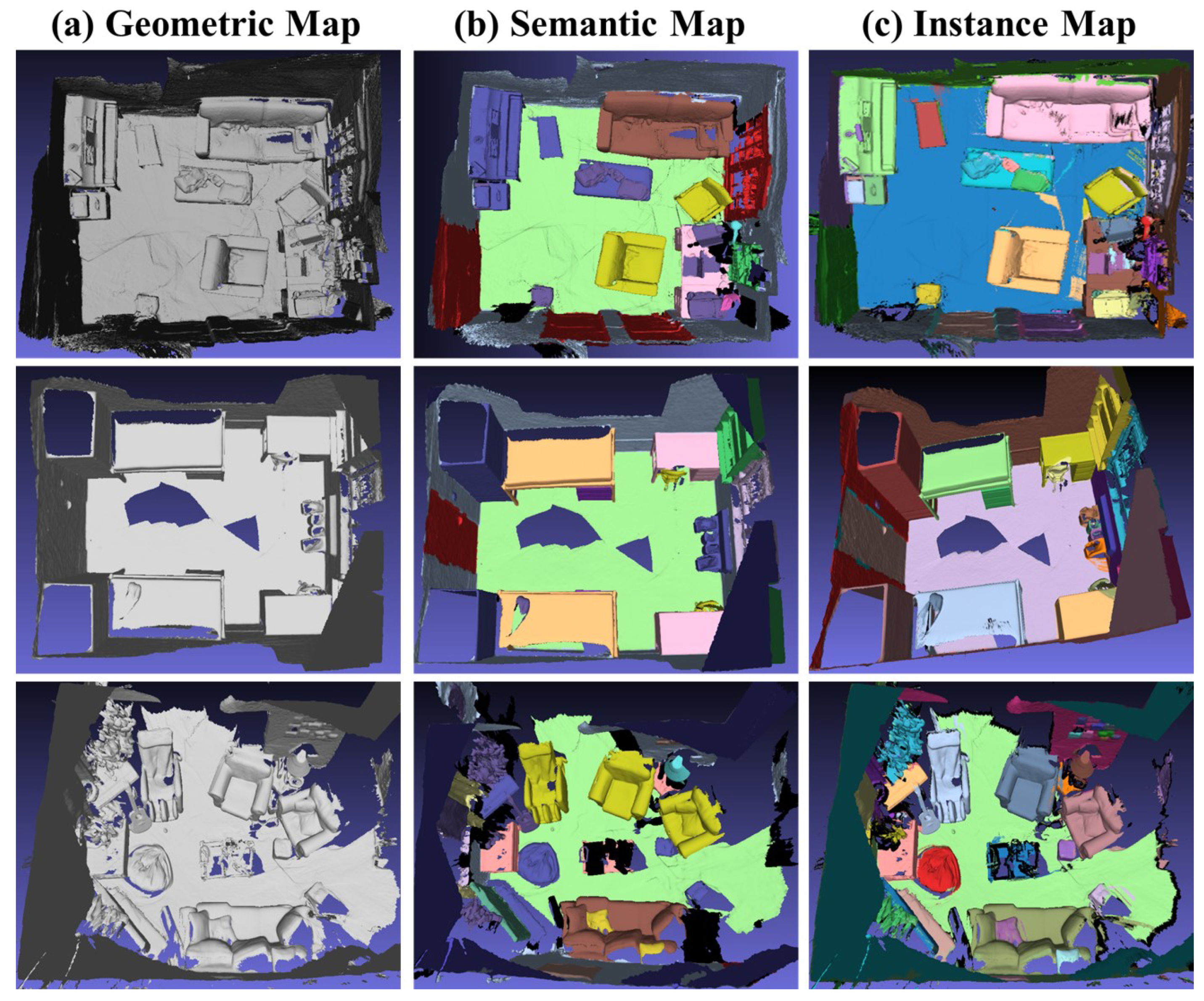

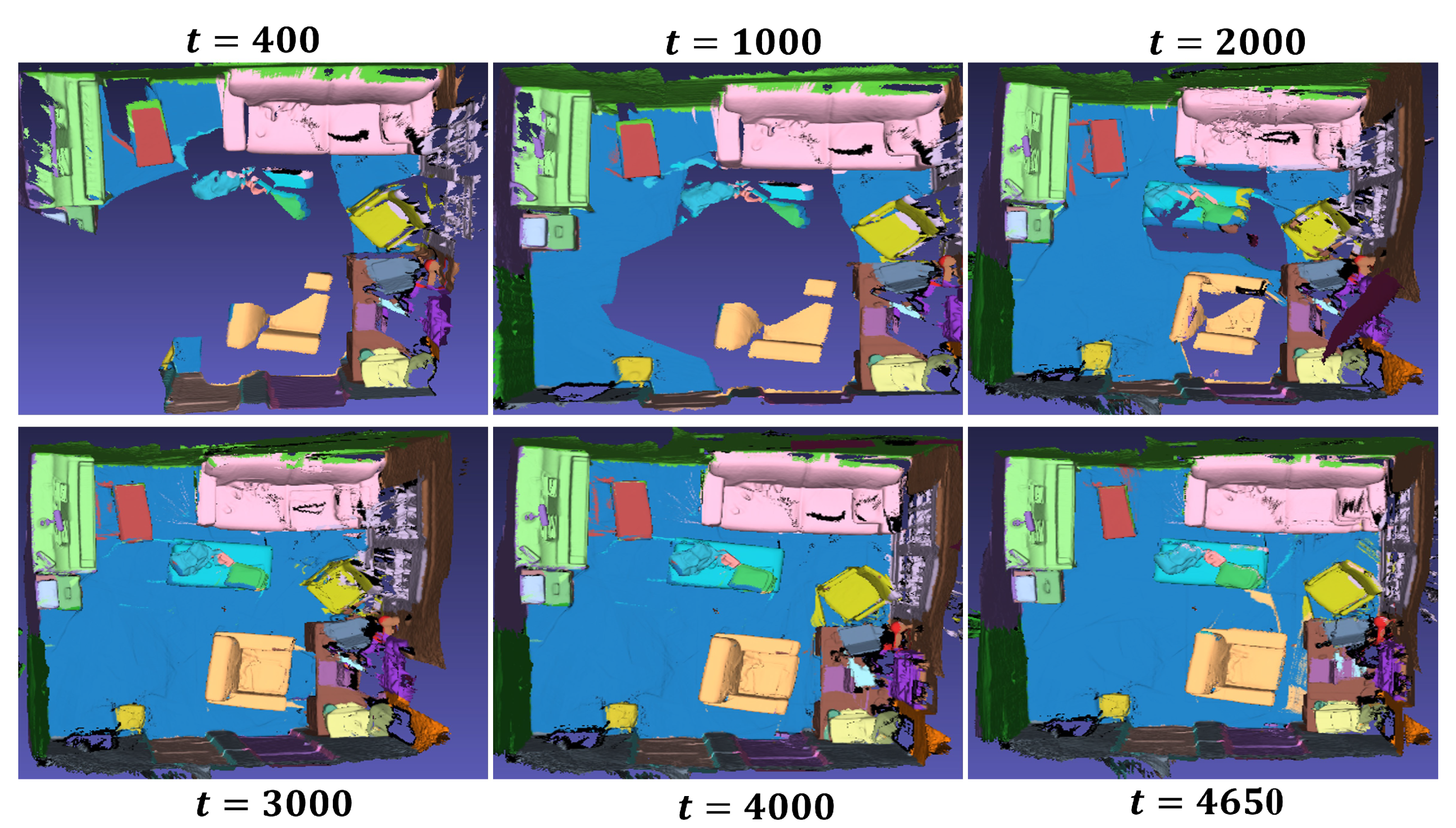

3.3. Data Integration

4. Evaluation

4.1. Dataset

4.2. Network Details

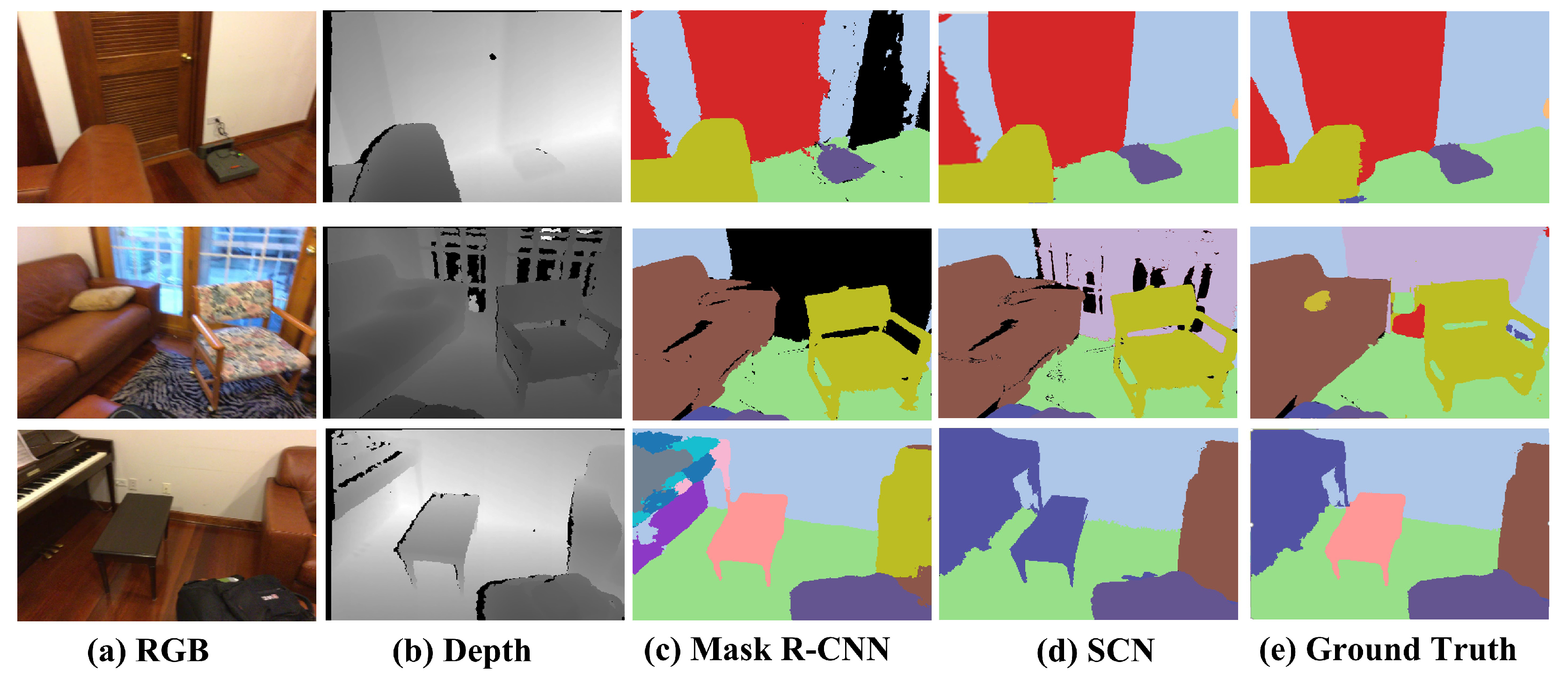

4.3. Quantitative and Qualitative Results

4.4. Run-Time Analysis

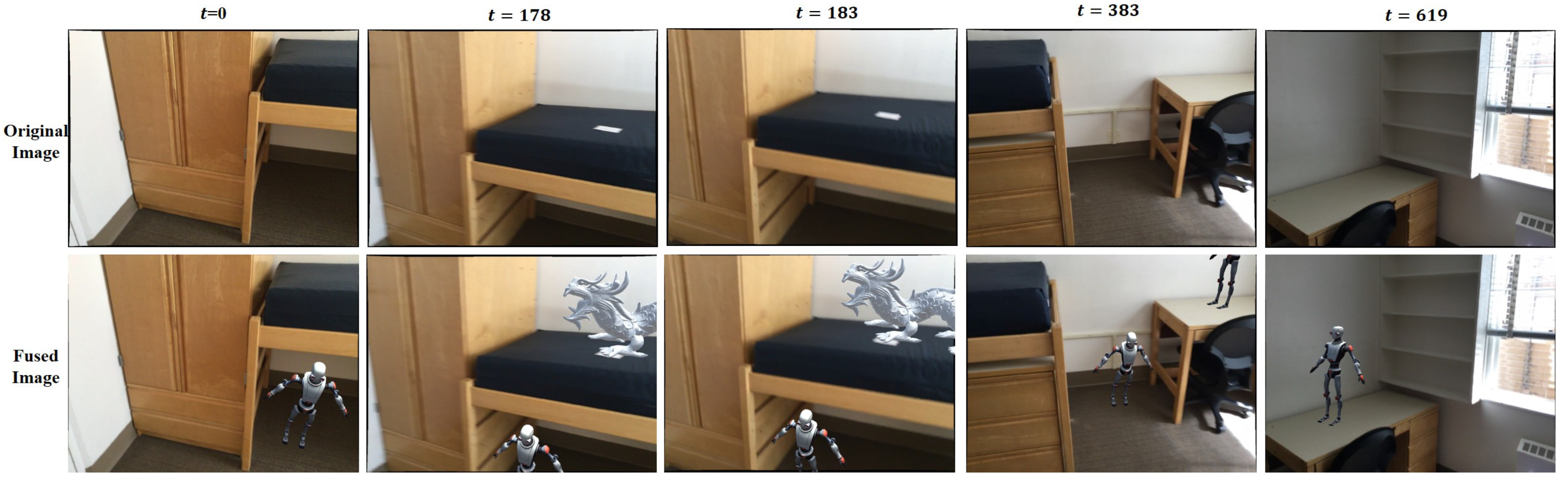

4.5. AR Application

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, M.; Ye, Z.-M.; Shi, J.-C.; Yang, Y.-L. Scene-Context-Aware Indoor Object Selection and Movement in VR. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 235–244.

2. Zhang, A.; Zhao, Y.; Wang, S.; Wei, J. An improved augmented-reality method of inserting virtual objects into the scene with transparent objects. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; pp. 38–46.

3. Seichter, D.; Köhler, M.; Lewandowski, B.; Wengefeld, T.; Gross, H.-M. Efficient RGB-D Semantic Segmentation for Indoor Scene Analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13525–13531.

4. Zhou, W.; Yang, E.; Lei, J.; Wan, J.; Yu, L. PGDENet: Progressive Guided Fusion and Depth Enhancement Network for RGB-D Indoor Scene Parsing. IEEE Trans. Multimed. 2023, 25, 3483–3494.

5. Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Fitzgibbon, A. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

6. Dai, A.; Nießner, M.; Zollhöfer, M.; Izadi, S.; Theobalt, C. Bundlefusion: Real-time globally consistent 3D reconstruction using on-the-fly surface reintegration. ACM Trans. Graph. (TOG) 2017, 36, 1.

7. Lin, W.; Zheng, C.; Yong, J.-H.; Xu, F. OcclusionFusion: Occlusion-aware Motion Estimation for Real-time Dynamic 3D Reconstruction. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–22 June 2022; pp. 1726–1735.

8. Fan, Y.; Zhang, Q.; Tang, Y.; Liu, S.; Han, H. Blitz-SLAM: A semantic SLAM in dynamic environments. Pattern Recognit. 2022, 121, 108225, ISSN 0031-3203.

9. Wu, W.; Guo, L.; Gao, H.; You, Z.; Liu, Y.; Chen, Z. YOLO-SLAM: A semantic SLAM system towards dynamic environment with geometric constraint. Neural Comput. Appl. 2022, 34, 6011–6026.

10. Dai, A.; Diller, C.; Niessner, M. SG-NN: Sparse Generative Neural Networks for Self-Supervised Scene Completion of RGB-D Scans. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 846–855.

11. Nießner, M.; Zollhöfer, M.; Izadi, S.; Stamminger, M. Real-time 3D reconstruction at scale using voxel hashing. ACM Trans. Graph. (TOG) 2013, 32, 1–11.

12. Whelan, T.; Leutenegger, S.; Salas-Moreno, R.F.; Glocker, B.; Davison, A.J. ElasticFusion: Dense SLAM without a Pose Graph. In Robotics: Science and Systems; 2015; Available online: https://roboticsproceedings.org/rss11/p01.pdf (accessed on 17 June 2024).

13. Fontán, A.; Civera, J.; Triebel, R. Information-Driven Direct RGB-D Odometry. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4928–4936.

14. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

15. Gu, W.; Bai, S.; Kong, L. A review on 2D instance segmentation based on deep neural networks. Image Vis. Comput. 2022, 120, 104401, ISSN 0262-8856.

16. Fathi, A.; Wojna, Z.; Rathod, V.; Wang, P.; Song, H.O.; Guadarrama, S.; Murphy, K.P. Semantic instance segmentation via deep metric learning. arXiv 2017, arXiv:1703.10277.

17. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-Time Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9156–9165.

18. Hazirbas, C.; Ma, L.; Domokos, C.; Cremers, D. FuseNet: Incorporating Depth into Semantic Segmentation via Fusion-Based CNN Architecture; Springer: Cham, Switzerland, 2016.

19. Zhou, W.; Yuan, J.; Lei, J.; Luo, T. TSNet: Three-Stream Self-Attention Network for RGB-D Indoor Semantic Segmentation. IEEE Intell. Syst. 2021, 36, 73–78.

20. Shen, X.; Stamos, I. Frustum VoxNet for 3D object detection from RGB-D or Depth images. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass, CO, USA, 1–5 March 2020; pp. 1687–1695.

21. Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

22. Han, X.; Dong, Z.; Yang, B. A point-based deep learning network for semantic segmentation of MLS point clouds. ISPRS J. Photogramm. Remote Sens. 2021, 175, 199–214, ISSN 0924-2716.

23. Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

24. Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6410–6419.

25. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

26. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

27. Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Luo, P. Sparse R-CNN: End-to-End Object Detection with Learnable Proposals. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 14449–14458.

28. Jiang, L.; Zhao, H.; Shi, S.; Liu, S.; Fu, C.-W.; Jia, J. PointGroup: Dual-Set Point Grouping for 3D Instance Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4866–4875.

29. Zhong, M.; Chen, X.; Chen, X.; Zeng, G.; Wang, Y. Maskgroup: Hierarchical point grouping and masking for 3d instance segmentation. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

30. McCormac, J.; Handa, A.; Davison, A.; Leutenegger, S. SemanticFusion: Dense 3D semantic mapping with convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 4628–4635.

31. Xiang, Y.; Fox, D. DA-RNN: Semantic mapping with data associated recurrent neural networks. arXiv 2017, arXiv:1703.03098.

32. Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.J.; Davison, A.J. SLAM++: Simultaneous Localisation and Mapping at the Level of Objects. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1352–1359.

33. Tateno, K.; Tombari, F.; Navab, N. When 2.5D is not enough: Simultaneous reconstruction, segmentation and recognition on dense SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2295–2302.

34. Runz, M.; Buffier, M.; Agapito, L. Maskfusion: Real-time recognition, tracking and reconstruction of multiple moving objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 10–20.

35. McCormac, J.; Clark, R.; Bloesch, M.; Davison, A.; Leutenegger, S. Fusion++: Volumetric object-level slam. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 32–41.

36. Xu, B.; Li, W.; Tzoumanikas, D.; Bloesch, M.; Davison, A.; Leutenegger, S. MID-Fusion: Octree-based Object-Level Multi-Instance Dynamic SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5231–5237.

37. Narita, G.; Seno, T.; Ishikawa, T.; Kaji, Y. Panopticfusion: Online volumetric semantic mapping at the level of stuff and things. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4205–4212.

38. Li, G.; Fan, H.; Jiang, G.; Jiang, D.; Liu, Y.; Tao, B.; Yun, J. RGBD-SLAM Based on Object Detection with Two-Stream YOLOv4-MobileNetv3 in Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2024, 25, 2847–2857.

39. Wang, C.; Oi, Y.; Yang, S. Recurrent R-CNN: Online Instance Mapping with context correlation. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 21–26 July 2020; pp. 776–777.

40. Li, X.; Guivant, J.; Kwok, N.; Xu, Y.; Li, R.; Wu, H. Three-dimensional backbone network for 3D object detection in traffic scenes. arXiv 2019, arXiv:1901.08373.

41. Dai, A.; Chang, X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-Annotated 3D Reconstructions of Indoor Scenes. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2432–2443.

42. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. ECCV 2012, 7576, 746–760.

| Method | Online | TSDF Volume | Surfels | Large-Scale | Dense Labeling | Object-Level |
|---|---|---|---|---|---|---|
| SemanticFusion [30] | √ | √ | √ | √ | | |
| DA-RNN [31] | √ | √ | √ | | | |
| SLAM++ [32] | √ | √ | | | | |
| Tateno et al. [33] | √ | √ | √ | | | |
| MaskFusion [34] | √ | √ | √ | | | |
| Fusion++ [35] | √ | √ | √ | | | |
| MID-Fusion [36] | √ | √ | √ | √ | | |
| PanopticFusion [37] | √ | √ | √ | √ | √ | |
| Li et al. [38] | √ | √ | √ | | | |

| Method | Input | Baseline | mAP (%) |
|---|---|---|---|
| Mask R-CNN [26] | RGB + Depth | VGG | 56.9 |
| Mask R-CNN [26] | RGB + Depth | ResNet-101 | 62.5 |
| SCN | RGB + Depth | VGG | 63.4 |
| SCN | RGB + Depth | ResNet-101 | 71.2 |

| Category | Mask R-CNN [26] | PanopticFusion [37] | Sparse R-CNN [27] | PointGroup [28] | Mask-Group [29] | SCN |
|---|---|---|---|---|---|---|
| bathtub | 0.333 | 0.667 | 1.000 | 1.000 | 1.000 | 1.000 |
| bed | 0.002 | 0.712 | 0.538 | 0.765 | 0.822 | 0.841 |
| bookshelf | 0.000 | 0.595 | 0.282 | 0.624 | 0.764 | 0.752 |
| cabinet | 0.053 | 0.259 | 0.468 | 0.505 | 0.616 | 0.620 |
| chair | 0.002 | 0.550 | 0.790 | 0.797 | 0.815 | 0.772 |
| counter | 0.002 | 0.000 | 0.173 | 0.116 | 0.139 | 0.163 |
| curtain | 0.021 | 0.613 | 0.345 | 0.696 | 0.694 | 0.685 |
| desk | 0.000 | 0.175 | 0.429 | 0.384 | 0.597 | 0.629 |
| door | 0.045 | 0.250 | 0.413 | 0.441 | 0.459 | 0.462 |
| otherfurniture | 0.024 | 0.434 | 0.484 | 0.559 | 0.566 | 0.495 |
| picture | 0.238 | 0.437 | 0.176 | 0.476 | 0.599 | 0.424 |
| refrigerator | 0.065 | 0.411 | 0.595 | 0.596 | 0.600 | 0.637 |
| shower curtain | 0.000 | 0.857 | 0.591 | 1.000 | 0.516 | 0.480 |
| sink | 0.014 | 0.485 | 0.522 | 0.666 | 0.715 | 0.692 |
| sofa | 0.107 | 0.591 | 0.668 | 0.756 | 0.819 | 0.820 |
| table | 0.020 | 0.267 | 0.476 | 0.556 | 0.635 | 0.661 |
| toilet | 0.110 | 0.944 | 0.986 | 0.997 | 1.000 | 0.960 |
| window | 0.006 | 0.359 | 0.327 | 0.513 | 0.603 | 0.721 |
| mAP | 0.058 | 0.478 | 0.515 | 0.636 | 0.664 | 0.656 |
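The mAP row of the per-category table appears to be the unweighted mean of the 18 per-category AP values. The sketch below checks this for the SCN and Mask-Group columns using the figures from the table; the names `scn_ap`, `maskgroup_ap`, and `mean_ap` are illustrative and do not come from the paper.

```python
# Per-category AP values copied from the SCN column of the table.
scn_ap = {
    "bathtub": 1.000, "bed": 0.841, "bookshelf": 0.752, "cabinet": 0.620,
    "chair": 0.772, "counter": 0.163, "curtain": 0.685, "desk": 0.629,
    "door": 0.462, "otherfurniture": 0.495, "picture": 0.424,
    "refrigerator": 0.637, "shower curtain": 0.480, "sink": 0.692,
    "sofa": 0.820, "table": 0.661, "toilet": 0.960, "window": 0.721,
}

# Per-category AP values copied from the Mask-Group column, same order.
maskgroup_ap = dict(zip(scn_ap, [
    1.000, 0.822, 0.764, 0.616, 0.815, 0.139, 0.694, 0.597, 0.459,
    0.566, 0.599, 0.600, 0.516, 0.715, 0.819, 0.635, 1.000, 0.603,
]))


def mean_ap(ap_by_category):
    """Unweighted mean of the per-category AP values."""
    return sum(ap_by_category.values()) / len(ap_by_category)


scn_map = round(mean_ap(scn_ap), 3)
maskgroup_map = round(mean_ap(maskgroup_ap), 3)
print(scn_map, maskgroup_map)
```

Both rounded means match the reported mAP entries (0.656 for SCN and 0.664 for Mask-Group), which supports reading the last row as a plain average in which every category carries equal weight.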

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Q.; Song, J.; Du, C.; Wang, C. Online Scene Semantic Understanding Based on Sparsely Correlated Network for AR. Sensors 2024, 24, 4756. https://doi.org/10.3390/s24144756
