Peer-to-Peer Ultra-Wideband Localization for Hands-Free Control of a Human-Guided Smart Stroller

Abstract

1. Introduction

1.1. Human Tracking Methods

1.2. Human-Following Robot

1.3. Human-Guided Robot

- This study reports, based on questionnaires, the positions of the stroller relative to the human that users prefer in a smart stroller scenario.

- This study introduces a model of human-guided, hands-free robot control based on a UWB localization system, in which the robot moves ahead of the human at one of the preferred relative positions.

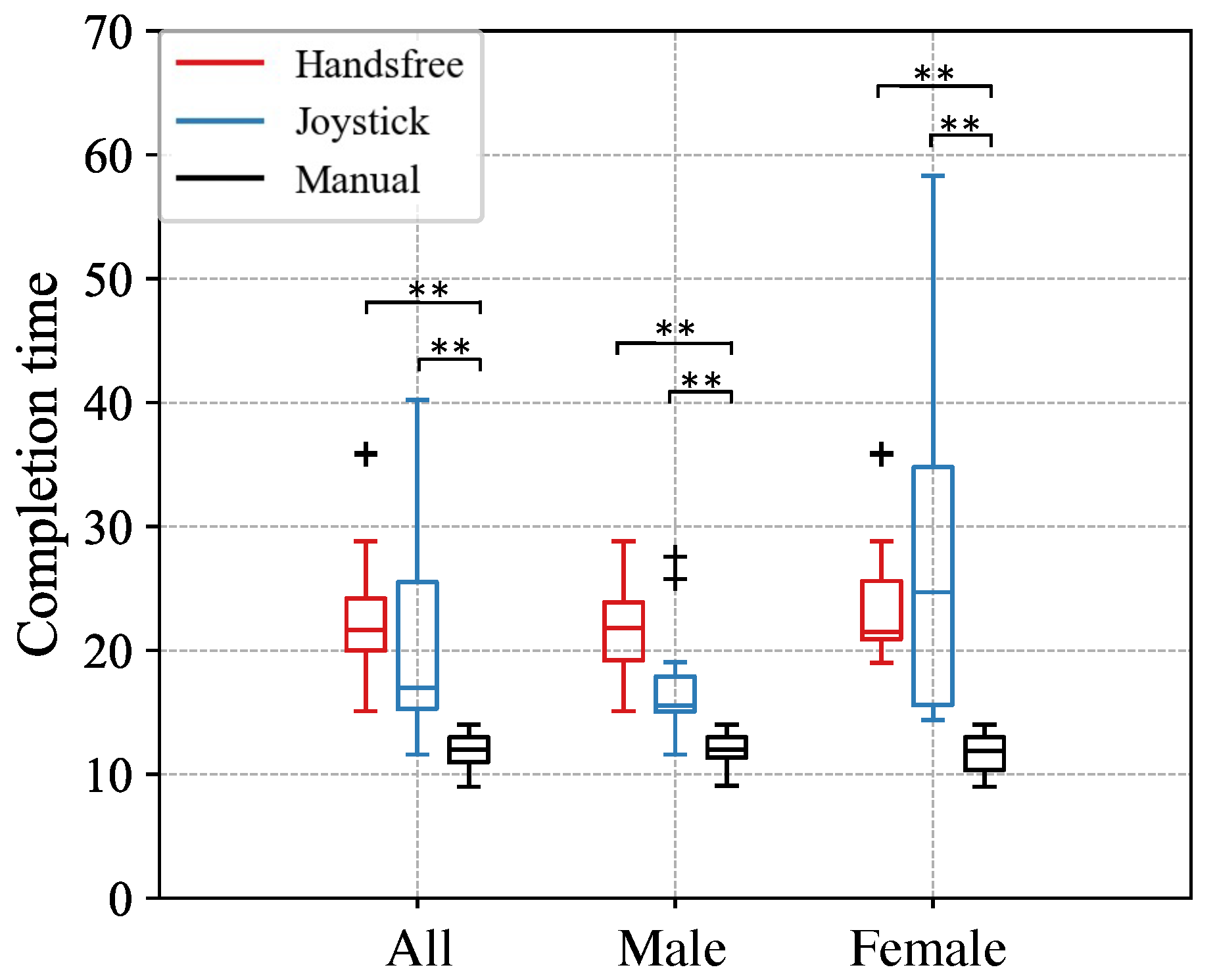

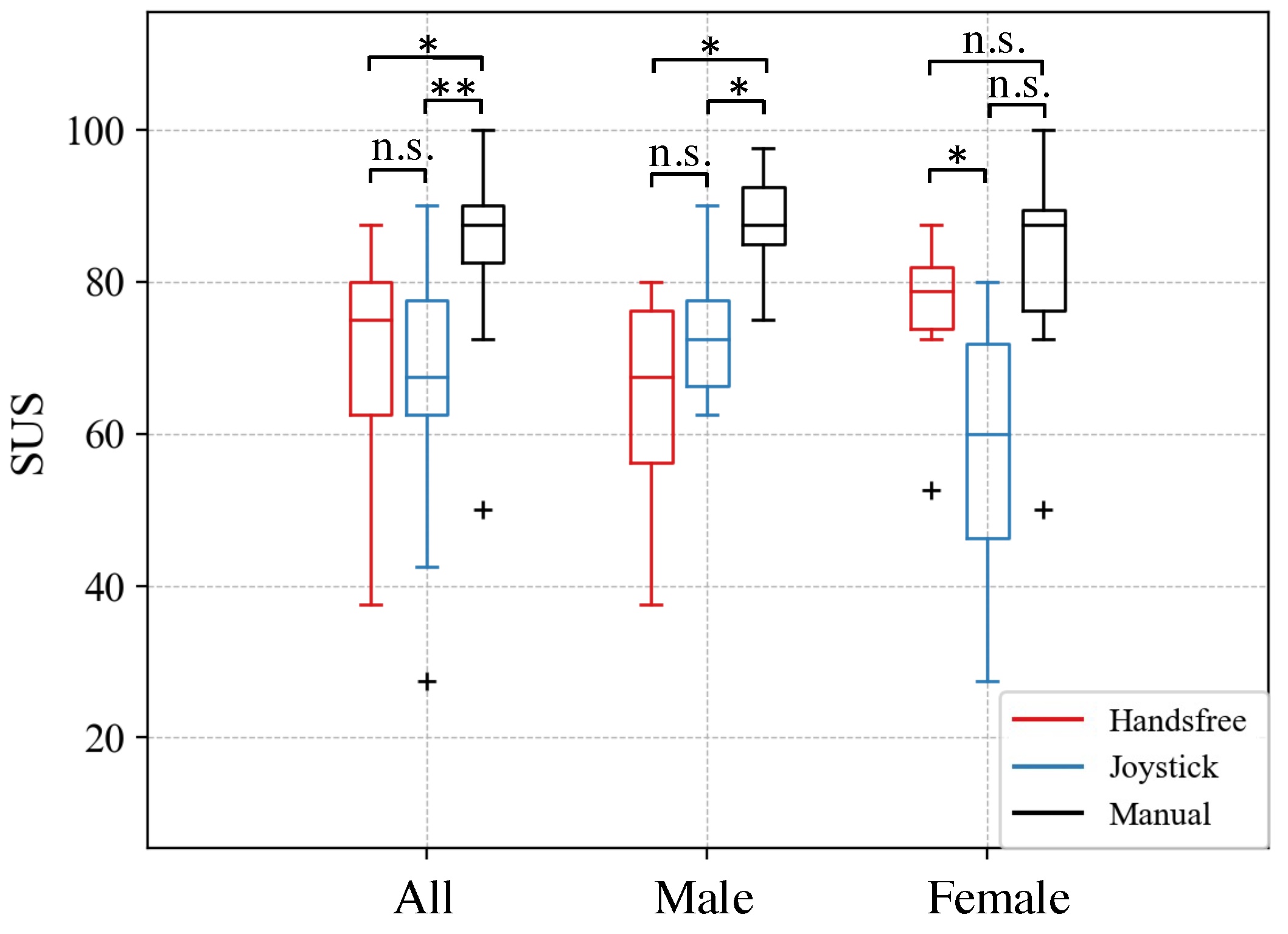

- This study presents quantitative and qualitative evaluations of hands-free control of a smart stroller, compared with joystick control and manual operation of the stroller. The results show comparable performance between the hands-free interface and the joystick in controlling the stroller. The results also reveal gender differences in the preferred control interface, which, to the best of our knowledge, are reported here for the first time in the field of human-guided robot control.
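To make the hands-free model more concrete, the sketch below shows one way a single peer-to-peer UWB reading could be turned into an error relative to a preferred human-stroller placement. It assumes that the UWB module on the stroller reports the range and angle of arrival (AOA) of a tag carried by the human, expressed in a stroller frame with x forward and y to the left. `PreferredPosition`, `human_position_from_uwb`, `tracking_error`, and the example 1.0 m distance are hypothetical illustrations, not the authors' controller or the sensor vendor's API.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferredPosition:
    """Hypothetical preferred placement of the human in the stroller frame
    (x forward, y to the left)."""
    distance_m: float   # desired stroller-to-human range
    bearing_rad: float  # desired bearing of the human; math.pi = directly behind


def human_position_from_uwb(range_m: float, aoa_rad: float) -> tuple[float, float]:
    """Convert one range/AOA reading of the human's tag into stroller-frame x, y."""
    return range_m * math.cos(aoa_rad), range_m * math.sin(aoa_rad)


def tracking_error(range_m: float, aoa_rad: float,
                   pref: PreferredPosition) -> tuple[float, float]:
    """Return (range error, bearing error) relative to the preferred position."""
    range_err = range_m - pref.distance_m  # > 0: human is farther than preferred
    # Wrap the angle difference into [-pi, pi] so that readings just either
    # side of "directly behind" (+pi / -pi) give a small error, not ~2*pi.
    diff = aoa_rad - pref.bearing_rad
    bearing_err = math.atan2(math.sin(diff), math.cos(diff))
    return range_err, bearing_err


# Example: human preferred 1.0 m directly behind the stroller (hypothetical values).
pref = PreferredPosition(distance_m=1.0, bearing_rad=math.pi)
r_err, b_err = tracking_error(1.2, -3.0, pref)
```

Wrapping the bearing error with `atan2` is a deliberate choice: when the preferred position is directly behind the stroller, raw AOA readings jump between about +π and -π, and a naive subtraction would report an error of nearly 2π for a human who is in fact almost exactly where they should be.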

2. Methodology

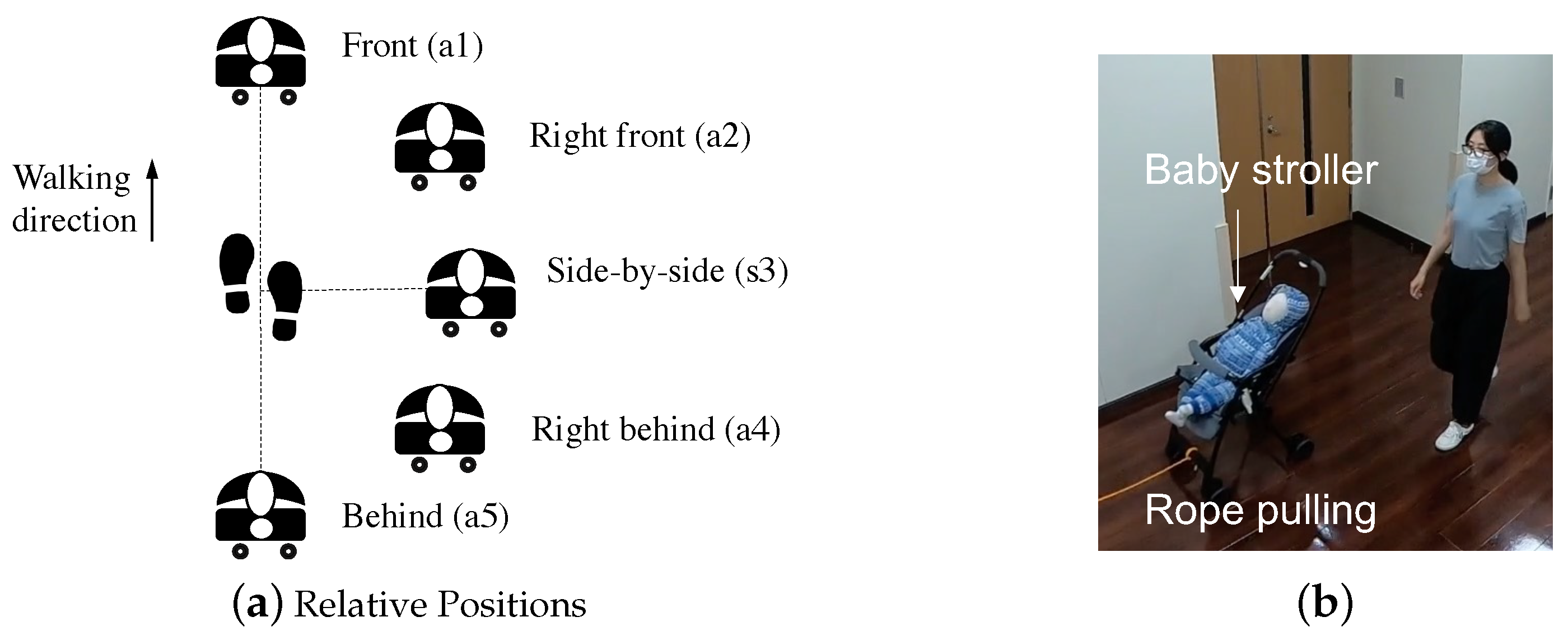

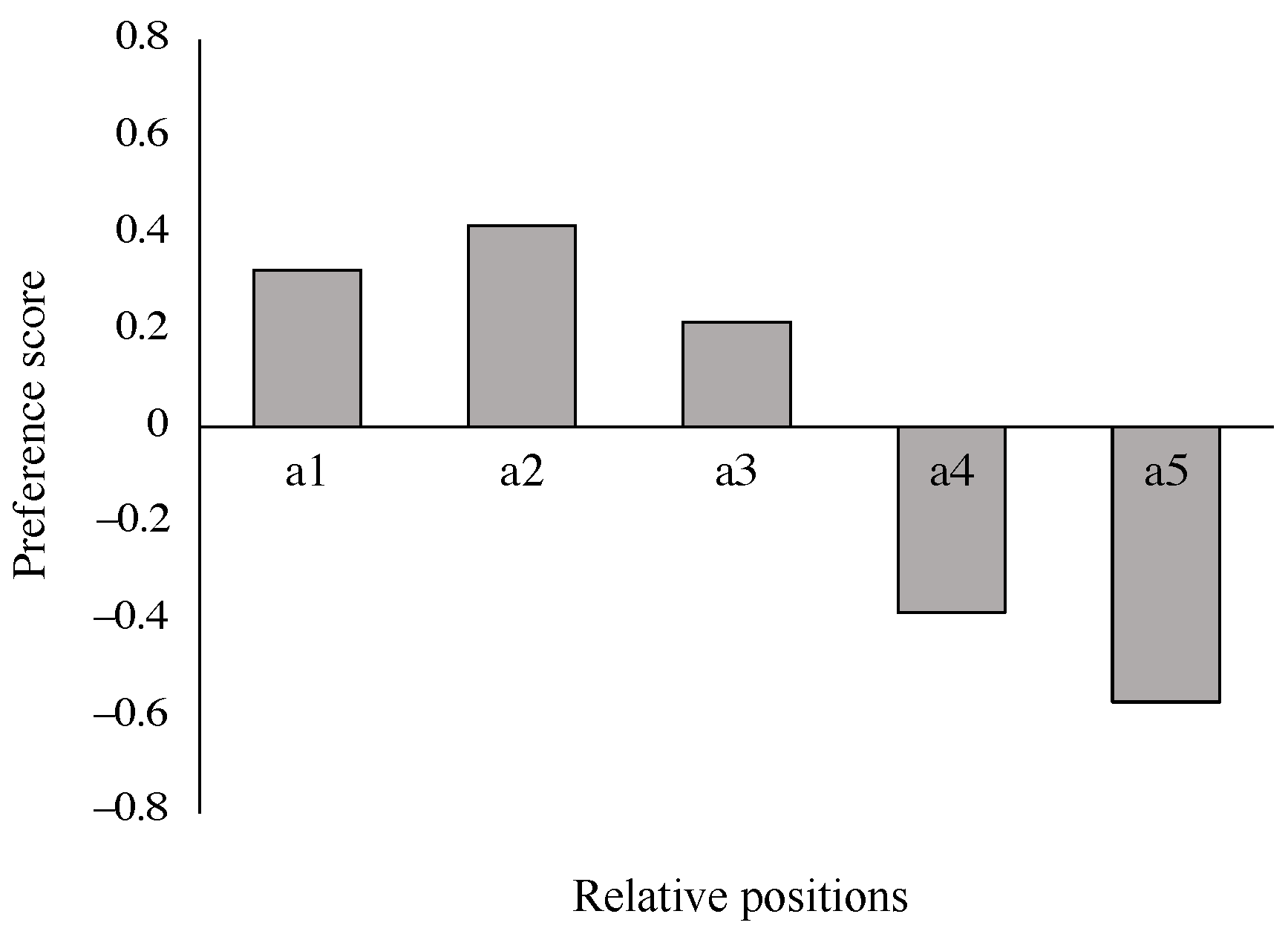

2.1. Preferred Relative Positions between the Human and the Stroller

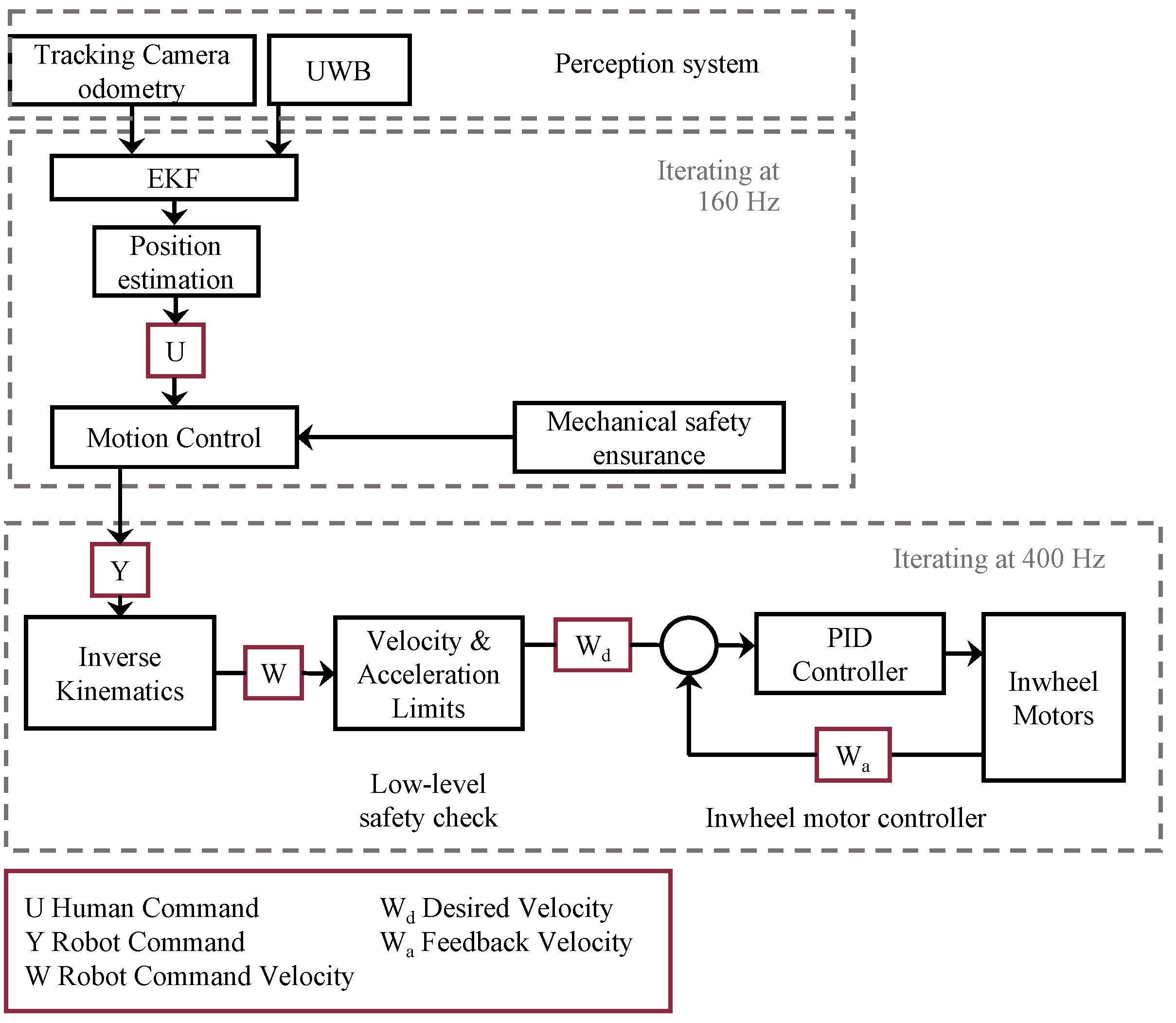

2.2. Human State Estimation

2.3. Control Method

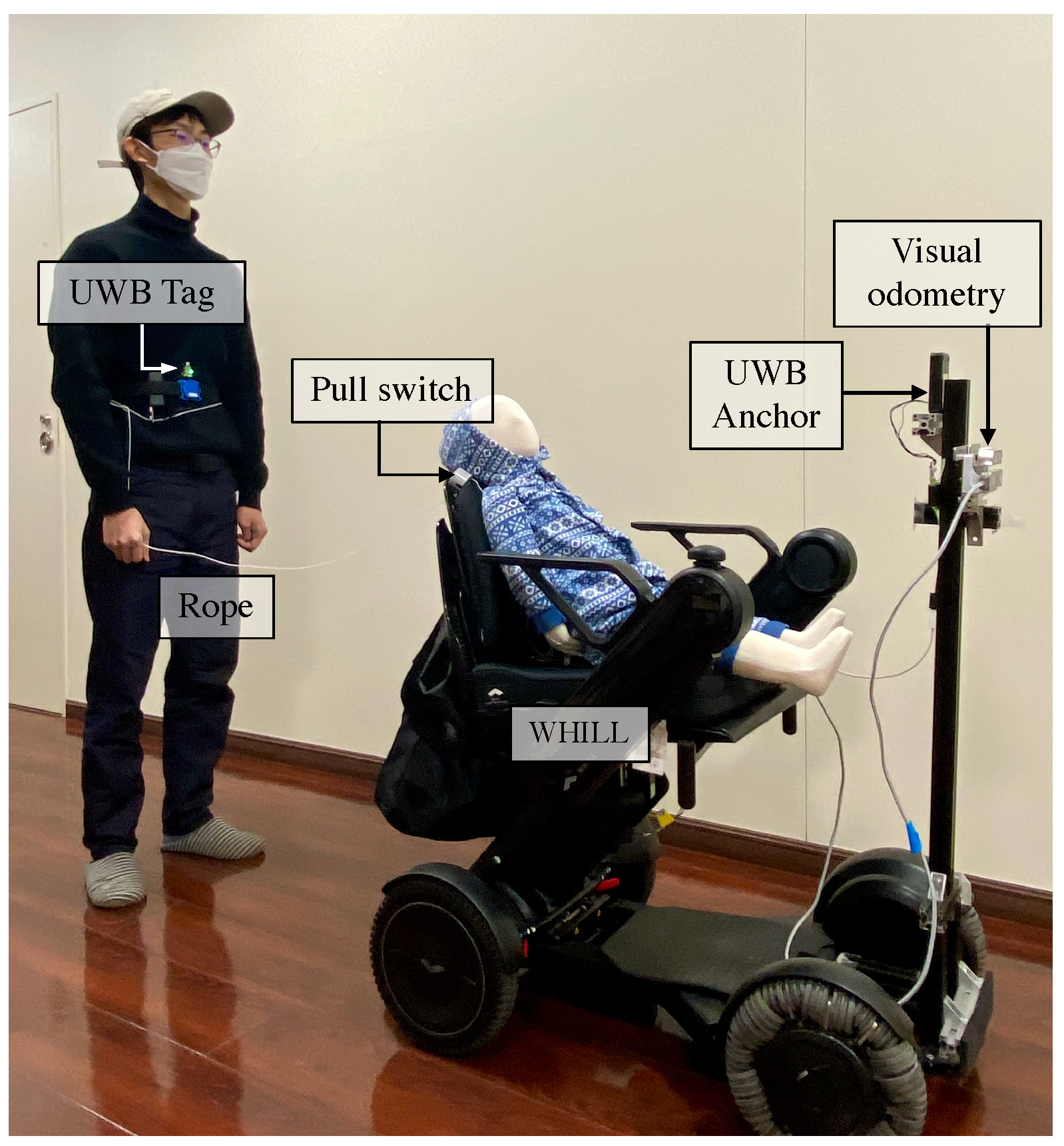

3. Prototype Overview

4. Experiments

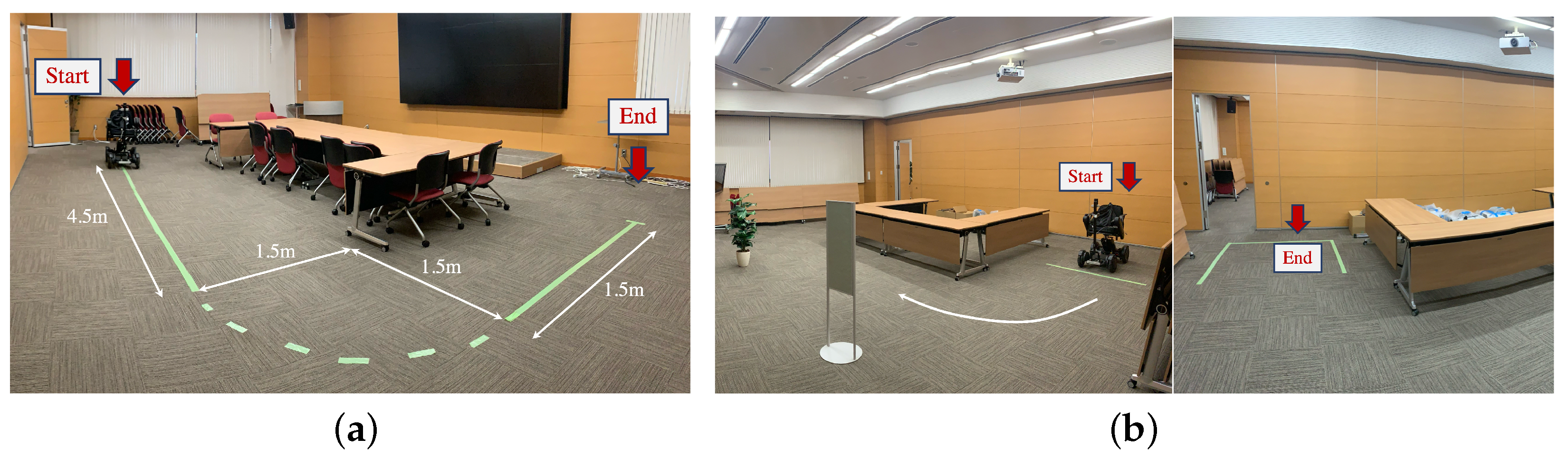

4.1. Experiment 1: Path-Following Test

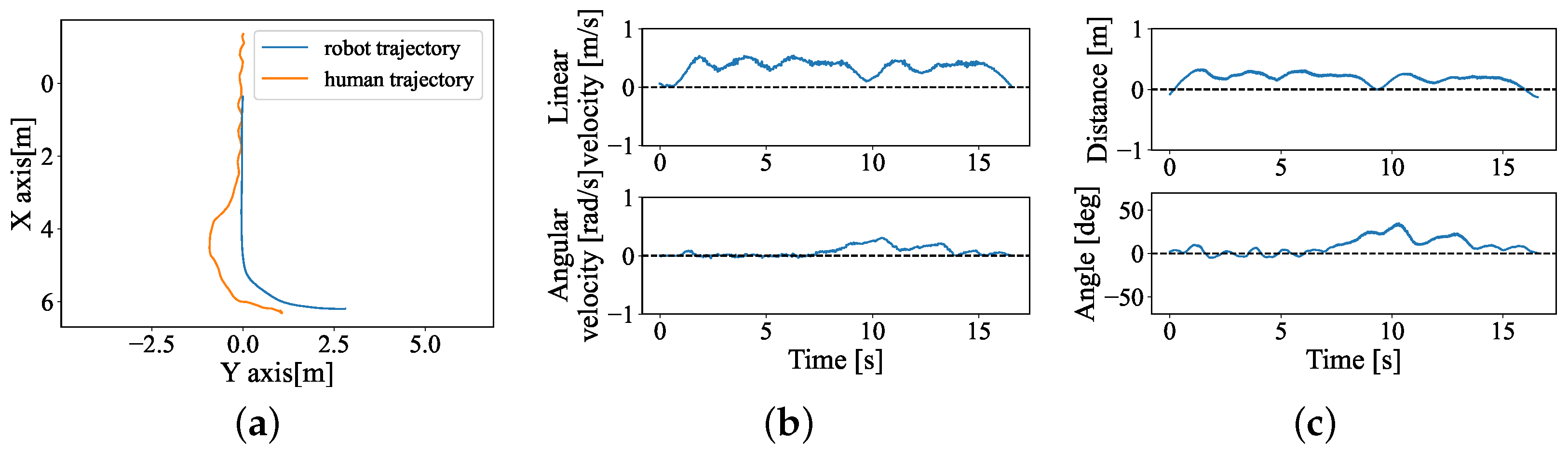

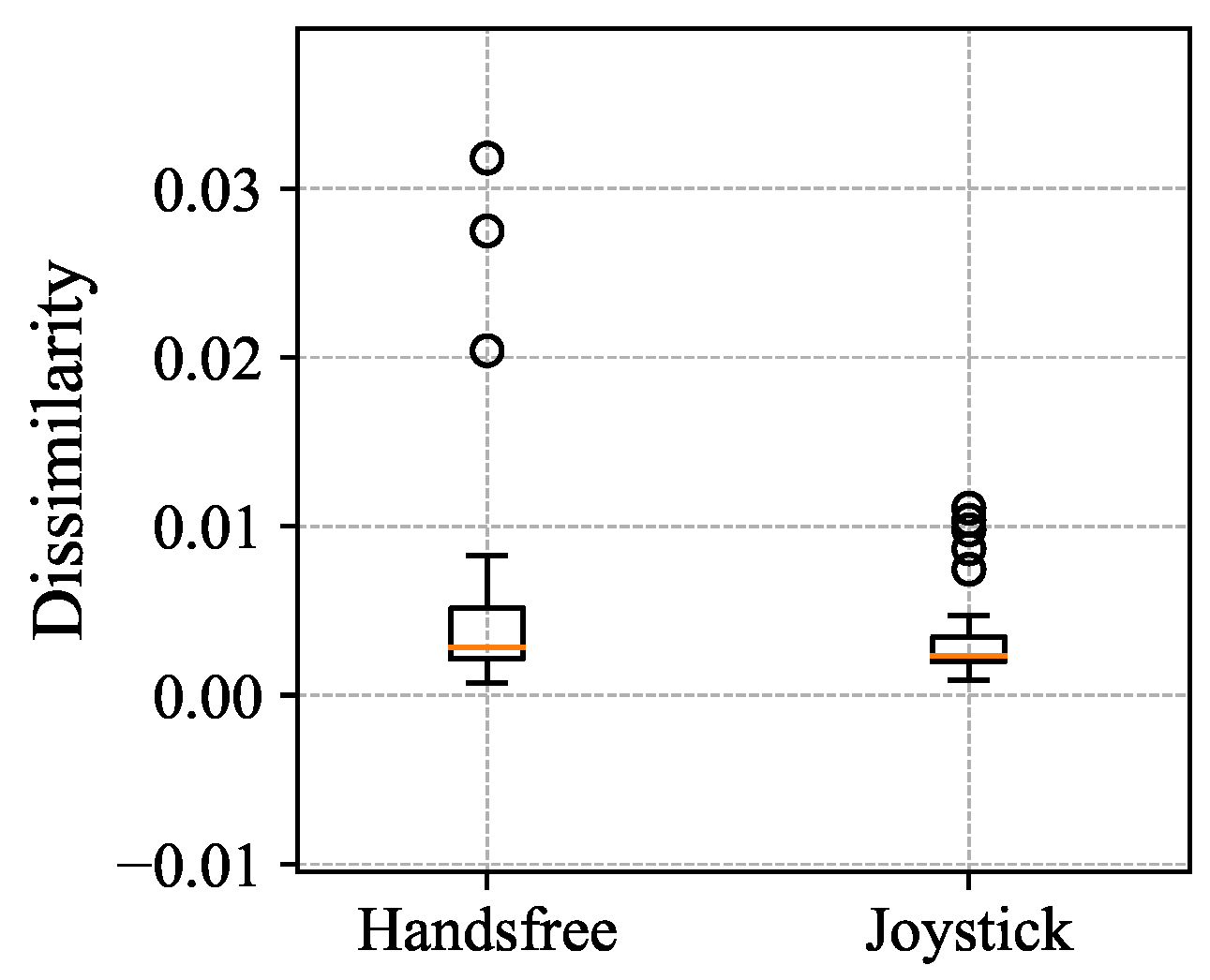

- Procrustes Distance: The Procrustes distance is a measure of dissimilarity between shapes based on Procrustes analysis, which finds the best shape-preserving Euclidean transformation between two shapes. In this work, we compare the robot trajectory with the ground-truth trajectory: using Procrustes analysis, the two trajectories are optimally superimposed by translation, rotation, and uniform scaling so as to minimize the Procrustes distance between the transformed matrices $X$ and $Y$. The Procrustes distance (PD) is calculated by
  $$\mathrm{PD} = \sum_{i=1}^{n}\sum_{j=1}^{k}\left(x_{ij} - y_{ij}\right)^{2}$$
  where $x_{ij}$ and $y_{ij}$ are the $j$-th coordinates of the $i$-th point in shapes $X$ and $Y$, respectively; $n$ is the number of points on the trajectory; and $k$ is the number of spatial dimensions. The Procrustes distances between the experimental robot trajectories and the ground-truth trajectories are calculated using the SciPy Python library [39]. The returned scalar lies within [0, 1], with higher values indicating less similarity.
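The comparison can be outlined with `scipy.spatial.procrustes`, which standardizes both inputs (centering them and scaling them to unit Frobenius norm), aligns the second to the first, and returns the disparity: the sum of squared differences between corresponding points of the standardized, aligned trajectories. The trajectories below are synthetic placeholders, not experimental data, and the exact preprocessing used in the paper (resampling, point matching) is not reproduced. Note that SciPy's orthogonal alignment may also include a reflection, not only a rotation.

```python
import numpy as np
from scipy.spatial import procrustes

# Synthetic ground-truth trajectory: n = 50 points in k = 2 dimensions.
t = np.linspace(0.0, 2.0 * np.pi, 50)
ground_truth = np.column_stack([np.cos(t), np.sin(2.0 * t)])

# A "robot" trajectory that is only rotated, uniformly scaled, and translated.
theta = 0.3
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta), np.cos(theta)]])
robot = 1.5 * ground_truth @ rot.T + np.array([0.4, -0.2])

# Pure similarity transform: the disparity should be (numerically) zero.
_, _, pd_same = procrustes(ground_truth, robot)

# Add measurement-like noise: the disparity becomes small but positive.
rng = np.random.default_rng(0)
noisy = robot + rng.normal(scale=0.05, size=robot.shape)
gt_std, noisy_fit, pd_noisy = procrustes(ground_truth, noisy)

# The same value computed directly as a double sum over points i and dimensions j.
manual_pd = np.sum(np.square(gt_std - noisy_fit))
```

Because both inputs are normalized before alignment, the disparity does not depend on the absolute size of the trajectories, which is why it stays within [0, 1].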

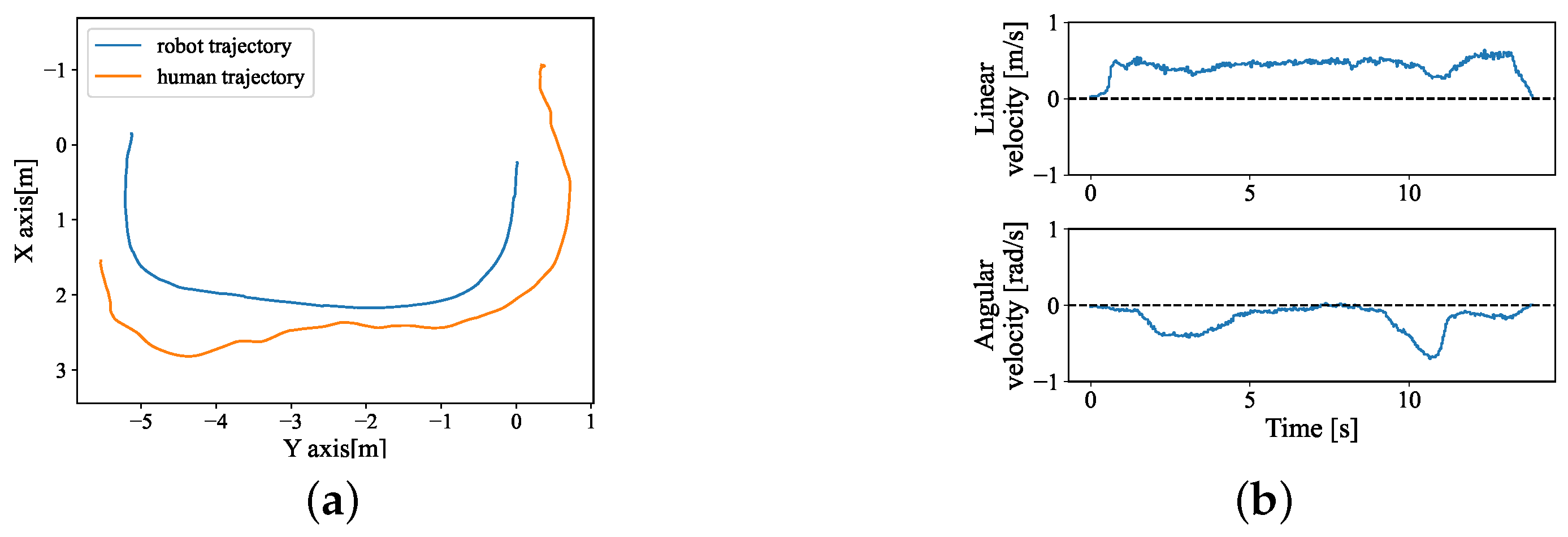

4.2. Experiment 2: Simulated Real-Life Scenario

4.3. User Evaluation

5. Results and Analysis

5.1. Path-Following Results: Experiment 1

5.2. Completion Time Results: Experiment 2

5.3. User Evaluation Results: Experiments 1 and 2

6. Discussion

6.1. Controllability

6.2. System Usability

6.3. Task Load

6.4. Safety Assurance

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UWB | Ultra-wideband |
| AOA | Angle of arrival |
| LRF | Laser range finder |
| IMU | Inertial measurement unit |
| FOV | Field of view |
| RFID | Radio frequency identification |
| BLE | Bluetooth Low Energy |
| LOS | Line of sight |
| NLOS | Non-line of sight |
| HRI | Human–robot interaction |
| EKF | Extended Kalman filter |
| SUS | System Usability Scale |
| NASA-TLX | NASA Task Load Index |

References

- Smartbe Intelligent Stroller. Available online: https://www.indiegogo.com/projects/smartbe-intelligent-stroller#/ (accessed on 28 May 2024).

- Raihan, M.J.; Hasan, M.T.; Nahid, A.A. Smart human following baby stroller using computer vision. Khulna Univ. Stud. 2022, 8–18.

- Zhang, C.; He, Z.; He, X.; Shen, W.; Dong, L. The modularization design and autonomous motion control of a new baby stroller. Front. Hum. Neurosci. 2022, 16, 1000382.

- Wang, M.; Su, D.; Shi, L.; Liu, Y.; Miro, J.V. Real-time 3D human tracking for mobile robots with multisensors. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5081–5087.

- Mi, W.; Wang, X.; Ren, P.; Hou, C. A system for an anticipative front human following robot. In Proceedings of the International Conference on Artificial Intelligence and Robotics and the International Conference on Automation, Control and Robotics Engineering, Kitakyushu, Japan, 13–15 July 2016; pp. 1–6.

- Islam, M.J.; Hong, J.; Sattar, J. Person-following by autonomous robots: A categorical overview. Int. J. Robot. Res. 2019, 38, 1581–1618.

- Jin, D.; Fang, Z.; Zeng, J. A robust autonomous following method for mobile robots in dynamic environments. IEEE Access 2020, 8, 150311–150325.

- Germa, T.; Lerasle, F.; Ouadah, N.; Cadenat, V. Vision and RFID data fusion for tracking people in crowds by a mobile robot. Comput. Vis. Image Underst. 2010, 114, 641–651.

- Liu, R.; Huskić, G.; Zell, A. On tracking dynamic objects with long range passive UHF RFID using a mobile robot. Int. J. Distrib. Sens. Netw. 2015, 11, 781380.

- Geetha, V.; Salvi, S.; Saini, G.; Yadav, N.; Singh Tomar, R.P. Follow me: A human following robot using wi-fi received signal strength indicator. In ICT Systems and Sustainability: Proceedings of ICT4SD 2020; Springer: Singapore, 2021; Volume 1, pp. 585–593.

- Altaf Khattak, S.B.; Fawad; Nasralla, M.M.; Esmail, M.A.; Mostafa, H.; Jia, M. WLAN RSS-based fingerprinting for indoor localization: A machine learning inspired bag-of-features approach. Sensors 2022, 22, 5236.

- Khattak, S.B.A.; Jia, M.; Marey, M.; Nasralla, M.M.; Guo, Q.; Gu, X. A novel single anchor localization method for wireless sensors in 5G satellite-terrestrial network. Alex. Eng. J. 2022, 61, 5595–5606.

- Magsino, E.R.; Sim, J.K.; Tagabuhin, R.R.; Tirados, J.J.S. Indoor Localization of a Multi-story Residential Household using Multiple WiFi Signals. In Proceedings of the 2021 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT), Zallaq, Bahrain, 29–30 September 2021; pp. 365–370.

- Bai, L.; Ciravegna, F.; Bond, R.; Mulvenna, M. A low cost indoor positioning system using bluetooth low energy. IEEE Access 2020, 8, 136858–136871.

- Yu, Y.; Zhang, Y.; Chen, L.; Chen, R. Intelligent fusion structure for Wi-Fi/BLE/QR/MEMS sensor-based indoor localization. Remote Sens. 2023, 15, 1202.

- Pradeep, B.V.; Rahul, E.; Bhavani, R.R. Follow me robot using bluetooth-based position estimation. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 584–589.

- Kunhoth, J.; Karkar, A.; Al-Maadeed, S.; Al-Ali, A. Indoor positioning and wayfinding systems: A survey. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1–41.

- Feng, T.; Yu, Y.; Wu, L.; Bai, Y.; Xiao, Z.; Lu, Z. A human-tracking robot using ultra wideband technology. IEEE Access 2018, 6, 42541–42550.

- Hepp, B.; Nägeli, T.; Hilliges, O. Omni-directional person tracking on a flying robot using occlusion-robust ultra-wideband signals. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 189–194.

- Jiménez, A.R.; Seco, F. Comparing Decawave and Bespoon UWB location systems: Indoor/outdoor performance analysis. In Proceedings of the 2016 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Alcala de Henares, Spain, 4–7 October 2016; pp. 1–8.

- Elsanhoury, M.; Mäkelä, P.; Koljonen, J.; Välisuo, P.; Shamsuzzoha, A.; Mantere, T.; Elmusrati, M.; Kuusniemi, H. Precision positioning for smart logistics using ultra-wideband technology-based indoor navigation: A review. IEEE Access 2022, 10, 44413–44445.

- Wang, Z.; Yang, Z.; Dong, T. A review of wearable technologies for elderly care that can accurately track indoor position, recognize physical activities and monitor vital signs in real time. Sensors 2017, 17, 341.

- Alarifi, A.; Al-Salman, A.; Alsaleh, M.; Alnafessah, A.; Al-Hadhrami, S.; Al-Ammar, M.A.; Al-Khalifa, H.S. Ultra wideband indoor positioning technologies: Analysis and recent advances. Sensors 2016, 16, 707.

- List of UWB-Enabled Mobile Devices. Available online: https://en.wikipedia.org/wiki/List_of_UWB-enabled_mobile_devices (accessed on 21 July 2024).

- Hu, J.S.; Wang, J.J.; Ho, D.M. Design of sensing system and anticipative behavior for human following of mobile robots. IEEE Trans. Ind. Electron. 2013, 61, 1916–1927.

- Ferrer, G.; Sanfeliu, A. Anticipative kinodynamic planning: Multi-objective robot navigation in urban and dynamic environments. Auton. Robot. 2019, 43, 1473–1488.

- Repiso, E.; Garrell, A.; Sanfeliu, A. Adaptive side-by-side social robot navigation to approach and interact with people. Int. J. Soc. Robot. 2020, 12, 909–930.

- Karunarathne, D.; Morales, Y.; Kanda, T.; Ishiguro, H. Model of side-by-side walking without the robot knowing the goal. Int. J. Soc. Robot. 2018, 10, 401–420.

- Jung, E.J.; Yi, B.J.; Yuta, S. Control algorithms for a mobile robot tracking a human in front. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 2411–2416.

- Cifuentes, C.A.; Frizera, A.; Carelli, R.; Bastos, T. Human–robot interaction based on wearable IMU sensor and laser range finder. Robot. Auton. Syst. 2014, 62, 1425–1439.

- Yan, Q.; Huang, J.; Yang, Z.; Hasegawa, Y.; Fukuda, T. Human-following control of cane-type walking-aid robot within fixed relative posture. IEEE/ASME Trans. Mechatron. 2021, 27, 537–548.

- Nikdel, P.; Shrestha, R.; Vaughan, R. The hands-free push-cart: Autonomous following in front by predicting user trajectory around obstacles. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 4548–4554.

- Conte, D.; Furukawa, T. Autonomous Bayesian escorting of a human integrating intention and obstacle avoidance. J. Field Robot. 2022, 39, 679–693.

- Leica, P.; Roberti, F.; Monllor, M.; Toibero, J.M.; Carelli, R. Control of bidirectional physical human–robot interaction based on the human intention. Intell. Serv. Robot. 2017, 10, 31–40.

- Young, J.E.; Kamiyama, Y.; Reichenbach, J.; Igarashi, T.; Sharlin, E. How to walk a robot: A dog-leash human-robot interface. In Proceedings of the 2011 RO-MAN, Atlanta, GA, USA, 31 July–3 August 2011; pp. 376–382.

- Scheidegger, W.M.; De Mello, R.C.; Sierra, S.D.; Jimenez, M.F.; Múnera, M.C.; Cifuentes, C.A.; Frizera-Neto, A. A novel multimodal cognitive interaction for walker-assisted rehabilitation therapies. In Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019; pp. 905–910.

- Honig, S.S.; Oron-Gilad, T.; Zaichyk, H.; Sarne-Fleischmann, V.; Olatunji, S.; Edan, Y. Toward socially aware person-following robots. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 936–954.

- Arechavaleta, G.; Laumond, J.P.; Hicheur, H.; Berthoz, A. On the nonholonomic nature of human locomotion. Auton. Robot. 2008, 25, 25–35.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

- Brooke, J. SUS—A quick and dirty usability scale. In Usability Evaluation In Industry; CRC Press: Boca Raton, FL, USA, 1996; Volume 189, pp. 4–7.

- Hart, S.G. NASA-task load index (NASA-TLX); 20 years later. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Sage Publications: Los Angeles, CA, USA, 2006; Volume 50, pp. 904–908.

- Mathew, J.; Masson, G.; Danion, F. Sex differences in visuomotor tracking. Sci. Rep. 2020, 10, 11863.

- Kitson, A.; Riecke, B.E.; Hashemian, A.M.; Neustaedter, C. NaviChair: Evaluating an embodied interface using a pointing task to navigate virtual reality. In Proceedings of the 3rd ACM Symposium on Spatial User Interaction, Los Angeles, CA, USA, 8–9 August 2015; pp. 123–126.

- Chang, W.T. The effects of age, gender, and control device in a virtual reality driving simulation. Symmetry 2020, 12, 995.

- Cherney, I.D. Mom, let me play more computer games: They improve my mental rotation skills. Sex Roles 2008, 59, 776–786.

- Nenna, F.; Gamberini, L. The influence of gaming experience, gender and other individual factors on robot teleoperations in vr. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Sapporo, Japan, 7–10 March 2022; pp. 945–949.

| Component | Details |
|---|---|
| Platform | Model C2 (WHILL) |
| UWB sensor | LinkTrack AOA (Nooploop) |
| Visual odometry | T265 (RealSense) |
| Microcomputer | Jetson Nano (Nvidia Corporation) |
| Canopy switch | WS5201HP (Panasonic) |

| Group | Human-Guided (Hands-Free) | Joystick | Manual |
|---|---|---|---|
| All | 69.43 (14.79) | 65.96 (16.47) | 84.81 (13.17) |
| Male | 64.29 (15.66) | 73.21 (9.43) | 87.85 (7.96) |
| Female | 75.42 (12.29) | 57.50 (19.81) | 81.25 (17.66) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Chen, Y.; Hassan, M.; Suzuki, K. Peer-to-Peer Ultra-Wideband Localization for Hands-Free Control of a Human-Guided Smart Stroller. Sensors 2024, 24, 4828. https://doi.org/10.3390/s24154828
