Simplification of Mobility Tests and Data Processing to Increase Applicability of Wearable Sensors as Diagnostic Tools for Parkinson’s Disease

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Clinical Evaluations

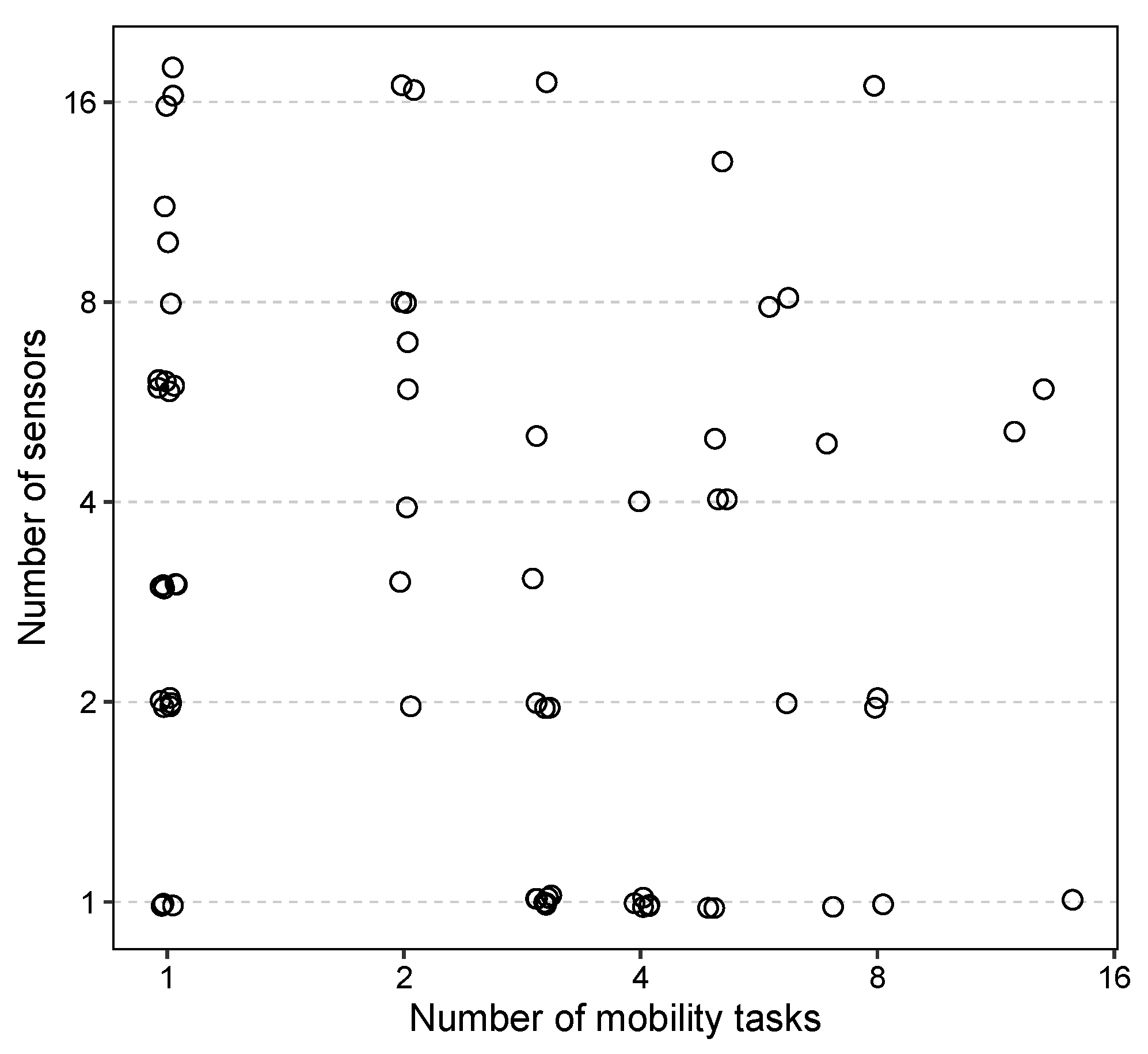

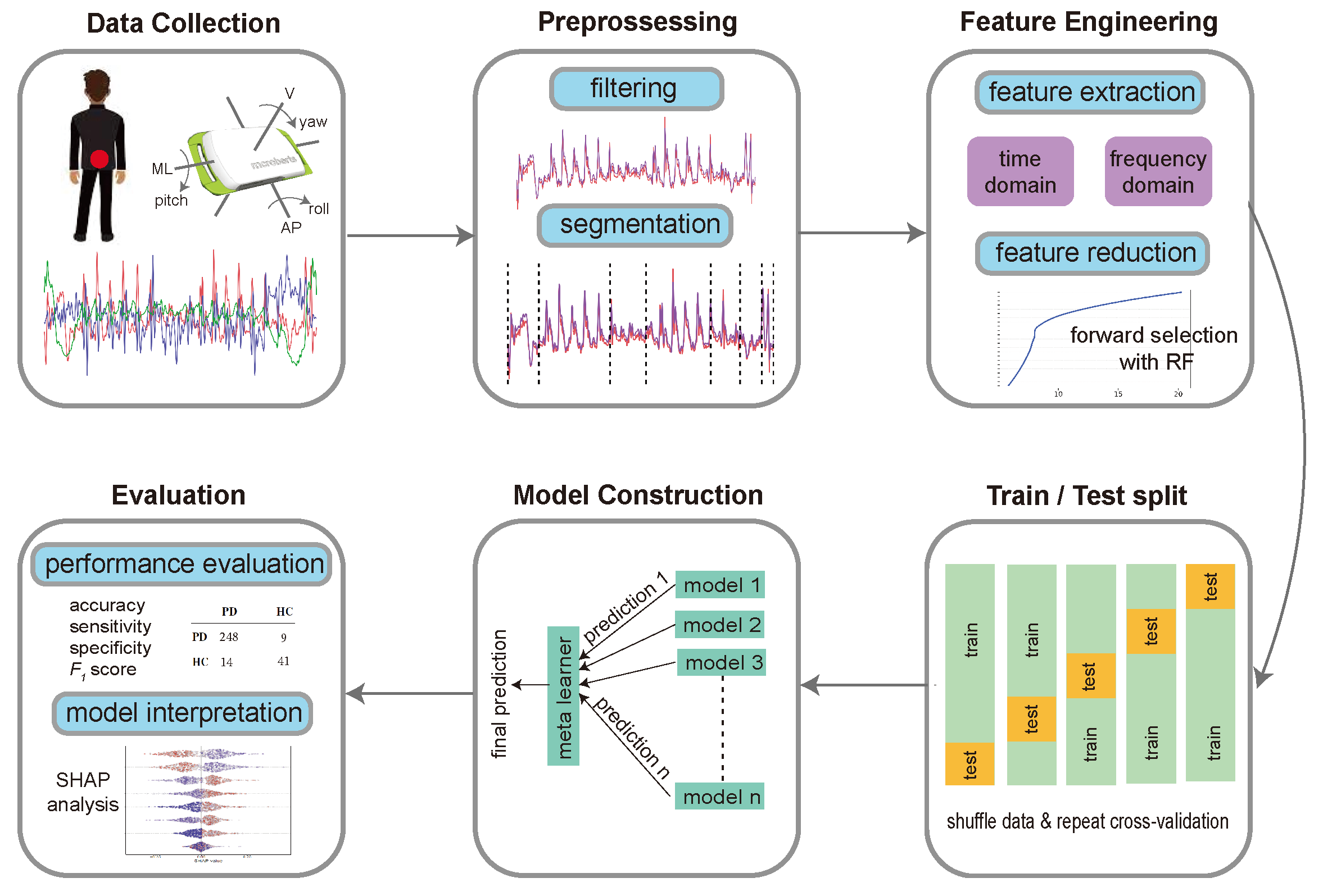

2.3. Assessment of Mobility

2.4. Device and Data Collection

2.5. Data Extraction

2.6. Segmentation

2.7. Feature Engineering

2.8. Feature Selection

2.9. Machine Learning Model

2.10. Group Feature Importance

2.11. Statistical Analysis

3. Results

3.1. Classification of PD versus Control

3.2. Strategies for Simplifying Mobility Testing and Its Associated Workload

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The montreal cognitive assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699. [Google Scholar] [CrossRef] [PubMed]

- Goetz, C.G. The unified Parkinson’s disease rating scale (UPDRS): Status and recommendations. Mov. Disord. 2003, 18, 738–750. [Google Scholar] [CrossRef]

- Goetz, C.G.; Poewe, W.; Rascol, O.; Sampaio, C.; Stebbins, G.T.; Counsell, C.; Giladi, N.; Holloway, R.G.; Moore, C.G.; Wenning, G.K.; et al. Movement Disorder Society Task Force report on the Hoehn and Yahr staging scale: Status and recommendations. Mov. Disord. 2004, 19, 1020–1028. [Google Scholar] [CrossRef]

- von Coelln, R.; Dawe, R.J.; Leurgans, S.E.; Curran, T.A.; Truty, T.; Yu, L.; Barnes, L.L.; Shulman, J.M.; Shulman, L.M.; Bennett, D.A.; et al. Quantitative mobility metrics from a wearable sensor predict incident parkinsonism in older adults. Park. Relat. Disord. 2019, 65, 190–196. [Google Scholar] [CrossRef] [PubMed]

- Dawe, R.J.; Leurgans, S.E.; Yang, J.; Bennett, J.M.; Hausdorff, J.M.; Lim, A.S.; Gaiteri, C.; Bennett, D.A.; Buchman, A.S. Association between quantitative gait and balance measures and total daily physical activity in community-dwelling older adults. J. Gerontol. Biol. Sci. Med. Sci. 2018, 73, 636–642. [Google Scholar] [CrossRef] [PubMed]

- Bennett, D.A.; Buchman, A.S.; Boyle, P.A.; Barnes, L.L.; Wilson, R.S.; Schneider, J.A. Religious orders study and Rush memory and aging project. J. Alzheimer’S Dis. 2018, 64, S161–S189. [Google Scholar] [CrossRef]

- Koop, M.M.; Ozinga, S.J.; Rosenfeldt, A.B.; Alberts, J.L. Quantifying turning behavior and gait in Parkinson’s disease using mobile technology. IBRO Rep. 2018, 5, 10–16. [Google Scholar] [CrossRef]

- Burden, R.L.; Faires, J. Numerical Analysis, 9th ed.; Brooks/Cole: Pacific Grove, CA, USA, 2015. [Google Scholar]

- Salarian, A.; Zampieri, C.; Horak, F.B.; Carlson-Kuhta, P.; Nutt, J.G.; Aminian, K. Analyzing 180 degrees turns using an inertial system reveals early signs of progression of Parkinson’s disease. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 224–227. [Google Scholar]

- Butterworth, S. On the theory of filter amplifiers. Exp. Wirel. Wirel. Eng. 1930, 7, 536–541. [Google Scholar]

- Lomb, N.R. Least-squares frequency analysis of unequally spaced data. Astrophys. Space Sci. 1976, 39, 447–462. [Google Scholar] [CrossRef]

- Scargle, J.D. Studies in astronomical time series analysis. II. Statistical aspects of spectral analysis of unevenly spaced data. Astrophys. J. 1982, 263, 835–853. [Google Scholar] [CrossRef]

- Aich, S.; Youn, J.; Chakraborty, S.; Pradhan, P.M.; Park, J.H.; Park, S.; Park, J. A supervised machine learning approach to detect the on/off state in Parkinson’s disease using wearable based gait signals. Diagnostics 2020, 10, 421. [Google Scholar] [CrossRef]

- Dehkordi, M.B.; Zaraki, A.; Setchi, R. Feature extraction and feature selection in smartphone-based activity recognition. Procedia Comput. Sci. 2020, 176, 2655–2664. [Google Scholar] [CrossRef]

- Mehta, A.; Vaddadi, S.K.; Sharma, V.; Kala, P. A Phase-Wise Analysis of Machine Learning Based Human Activity Recognition using Inertial Sensors. In Proceedings of the 2020 IEEE 17th India Council International Conference (INDICON), New Delhi, India, 10–13 December 2020; pp. 1–7. [Google Scholar] [CrossRef]

- Weiss, A.; Herman, T.; Plotnik, M.; Brozgol, M.; Giladi, N.; Hausdorff, J.M. An instrumented timed up and go: The added value of an accelerometer for identifying fall risk in idiopathic fallers. Physiol. Meas. 2011, 32, 2003. [Google Scholar] [CrossRef]

- Akaike, H. Information Theory and an Extension of the Maximum Likelihood Principle. In Selected Papers of Hirotugu Akaike; Springer: New York, NY, USA, 1998; pp. 199–213. [Google Scholar] [CrossRef]

- Buchman, A.S.; Dawe, R.J.; Leurgans, S.E.; Curran, T.A.; Truty, T.; Yu, L.; Barnes, L.L.; Hausdorff, J.M.; Bennett, D.A. Different combinations of mobility metrics derived from a wearable sensor are associated with distinct health outcomes in older adults. J. Gerontol. Biol. Sci. Med. Sci. 2020, 75, 1176. [Google Scholar] [CrossRef]

- Poole, V.N.; Dawe, R.J.; Lamar, M.; Esterman, M.; Barnes, L.; Leurgans, S.E.; Bennett, D.A.; Hausdorff, J.M.; Buchman, A.S. Dividing attention during the Timed Up and Go enhances associations of several subtask performances with MCI and cognition. PLoS ONE 2022, 17, e0269398. [Google Scholar] [CrossRef]

- der Laan, M.J.; Polley, E.C.; Hubbard, A.E. Super learner. Stat. Appl. Genet. Mol. Biol. 2007, 6, 25. [Google Scholar] [CrossRef] [PubMed]

- Nelder, J.A.; Wedderburn, R.W.M. Generalized Linear Models. J. R. Stat. Soc. Ser. A 1972, 135, 370–384. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Gregorutti, B.; Michel, B.; Saint-Pierre, P. Grouped variable importance with random forests and application to multiple functional data analysis. Comput. Stat. Data Anal. 2015, 90, 15–35. [Google Scholar] [CrossRef]

- Canty, A.; Ripley, B.D. boot: Bootstrap R (S-Plus) Functions. R Package Version 1.3-30. 2024. Available online: https://cran.r-project.org/web/packages/boot/index.html (accessed on 17 June 2024).

- Davison, A.C.; Hinkley, D.V. Bootstrap Methods and Their Applications; Cambridge University Press: Cambridge, UK, 1997. [Google Scholar]

- Adler, C.H.; Beach, T.G.; Hentz, J.G.; Shill, H.A.; Caviness, J.N.; Driver-Dunckley, E.; Sabbagh, M.N.; Sue, L.I.; Jacobson, S.A.; Belden, C.M.; et al. Low clinical diagnostic accuracy of early vs advanced Parkinson disease: Clinicopathologic study. Neurology 2014, 83, 406–412. [Google Scholar] [CrossRef]

- Das, R. A comparison of multiple classification methods for diagnosis of Parkinson disease. Expert Syst. Appl. 2010, 37, 1568–1572. [Google Scholar] [CrossRef]

- Muniz, A.M.S.; Liu, H.; Lyons, K.E.; Pahwa, R.; Liu, W.; Nobre, F.F.; Nadal, J. Comparison among probabilistic neural network, support vector machine and logistic regression for evaluating the effect of subthalamic stimulation in Parkinson disease on ground reaction force during gait. J. Biomech. 2010, 43, 720–726. [Google Scholar] [CrossRef] [PubMed]

- Tien, I.; Glaser, S.D.; Aminoff, M.J. Characterization of gait abnormalities in Parkinson’s disease using a wireless inertial sensor system. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010, 2010, 3353–3356. [Google Scholar] [CrossRef] [PubMed]

- Ferreira, M.I.A.S.N.; Barbieri, F.A.; Moreno, V.C.; Penedo, T.; Tavares, J.M.R.S. Machine learning models for Parkinson’s disease detection and stage classification based on spatial-temporal gait parameters. Gait Posture 2022, 98, 49–55. [Google Scholar] [CrossRef] [PubMed]

- Rehman, R.Z.U.; Buckley, C.; Micó-Amigo, M.E.; Kirk, C.; Dunne-Willows, M.; Mazzà, C.; Shi, J.Q.; Alcock, L.; Rochester, L.; Din, S.D. Accelerometry-based digital gait characteristics for classification of Parkinson’s disease: What counts? IEEE Open J. Eng. Med. Biol. 2020, 1, 65–73. [Google Scholar] [CrossRef]

- Moon, S.; Song, H.J.; Sharma, V.D.; Lyons, K.E.; Pahwa, R.; Akinwuntan, A.E.; Devos, H. Classification of Parkinson’s disease and essential tremor based on balance and gait characteristics from wearable motion sensors via machine learning techniques: A data-driven approach. J. Neuroeng. Rehabil. 2020, 17, 1–8. [Google Scholar] [CrossRef] [PubMed]

- Kleanthous, N.; Hussain, A.J.; Khan, W.; Liatsis, P. A new machine learning based approach to predict freezing of gait. Pattern Recognit. Lett. 2020, 140, 119–126. [Google Scholar] [CrossRef]

- Mirelman, A.; Frank, M.B.O.; Melamed, M.; Granovsky, L.; Nieuwboer, A.; Rochester, L.; Din, S.D.; Avanzino, L.; Pelosin, E.; Bloem, B.R.; et al. Detecting sensitive mobility features for Parkinson’s disease stages via machine learning. Mov. Disord. 2021, 36, 2144–2155. [Google Scholar] [CrossRef] [PubMed]

- Kabir, M.F.; Ludwig, S. Enhancing the performance of classification using super learning. Data-Enabled Discov. Appl. 2019, 3, 5. [Google Scholar] [CrossRef]

- Demonceau, M.; Donneau, A.F.; Croisier, J.L.; Skawiniak, E.; Boutaayamou, M.; Maquet, D.; Garraux, G. Contribution of a trunk accelerometer system to the characterization of gait in patients with mild-to-moderate Parkinson’s disease. IEEE J. Biomed. Health Inform. 2015, 19, 1803–1808. [Google Scholar] [CrossRef]

- Van Uem, J.M.T.; Walgaard, S.; Ainsworth, E.; Hasmann, S.E.; Heger, T.; Nussbaum, S.; Hobert, M.A.; Micó-Amigo, E.M.; Van Lummel, R.C.; Berg, D.; et al. Quantitative Timed-Up-and-Go Parameters in Relation to Cognitive Parameters and Health-Related Quality of Life in Mild-to-Moderate Parkinson’s Disease. PLoS ONE 2016, 11, e0151997. [Google Scholar] [CrossRef]

- Shawen, N.; O’Brien, M.; Venkatesan, S.; Lonini, L.; Simuni, T.; Hamilton, J.; Ghaffari, R.; Rogers, J.; Jayaraman, A. Role of data measurement characteristics in the accurate detection of Parkinson’s disease symptoms using wearable sensors. J. Neuroeng. Rehabil. 2020, 17, 52. [Google Scholar] [CrossRef]

- Strobl, C.; Boulesteix, A.L.; Kneib, T.; Augustin, T.; Zeileis, A. Conditional variable importance for random forests. BMC Bioinform. 2008, 9, 307. [Google Scholar] [CrossRef]

- Gregorutti, B.; Michel, B.; Saint-Pierre, P. Correlation and variable importance in random forests. Stat. Comput. 2017, 27, 659–678. [Google Scholar] [CrossRef]

- Cooley, J.W.; Tukey, J.W. An algorithm for the machine calculation of complex fourier series. Math. Comput. 1965, 19, 297–301. [Google Scholar] [CrossRef]

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431. [Google Scholar] [CrossRef]

- Altin, C.; Er, O. Comparison of different time and frequency domain feature extraction methods on elbow gesture’s EMG. Eur. J. Interdiscip. Stud. 2016, 5, 35. [Google Scholar] [CrossRef]

- Sinderby, C.; Lindstrom, L.; Grassino, A.E. Automatic assessment of electromyogram quality. J. Appl. Physiol. 1995, 79, 1803–1815. [Google Scholar] [CrossRef]

- Pepa, L.; Ciabattoni, L.; Verdini, F.; Capecci, M.; Ceravolo, M. Smartphone based fuzzy logic freezing of gait detection in Parkinson’s disease. In Proceedings of the 2014 IEEE/ASME 10th International Conference on Mechatronic and Embedded Systems and Applications (MESA), Ancona, Italy, 10–12 September 2014; pp. 1–6. [Google Scholar] [CrossRef]

- Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Proceedings of the International Conference on Pervasive Computing, Vienna, Austria, 21–23 April 2004; pp. 1–17. [Google Scholar] [CrossRef]

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Fractal analysis features for weak and single-channel upper-limb EMG signals. Expert Syst. Appl. 2012, 39, 11156–11163. [Google Scholar] [CrossRef]

- Hasni, H.; Yahya, N.; Asirvadam, V.S.; Jatoi, M.A. Analysis of electromyogram (EMG) for detection of neuromuscular disorders. In Proceedings of the 2018 International Conference on Intelligent and Advanced System (ICIAS), Kuala Lumpur, Malaysia, 13–14 August 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Sukumar, N.; Taran, S.; Bajaj, V. Physical actions classification of surface EMG signals using VMD. In Proceedings of the 2018 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 3–5 April 2018; pp. 0705–0709. [Google Scholar] [CrossRef]

- Kaiser, J. On a simple algorithm to calculate the ‘energy’ of a signal. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Albuquerque, NM, USA, 3–6 April 1990; Volume 1, pp. 381–384. [Google Scholar] [CrossRef]

- Penzel, T.; Kantelhardt, J.; Grote, L.; Peter, J.; Bunde, A. Comparison of detrended fluctuation analysis and spectral analysis for heart rate variability in sleep and sleep apnea. IEEE Trans. Biomed. Eng. 2003, 50, 1143–1151. [Google Scholar] [CrossRef]

- Higuchi, T. Approach to an irregular time series on the basis of the fractal theory. Phys. D Nonlinear Phenom. 1988, 31, 277–283. [Google Scholar] [CrossRef]

- Katz, M.J. Fractals and the analysis of waveforms. Comput. Biol. Med. 1988, 18, 145–156. [Google Scholar] [CrossRef] [PubMed]

- Gneiting, T.; Ševčíková, H.; Percival, D.B. Estimators of fractal dimension: Assessing the roughness of time series and spatial data. Stat. Sci. 2012, 27, 247–277. [Google Scholar] [CrossRef]

- Quiroz, J.C.; Banerjee, A.; Dascalu, S.M.; Lau, S.L. Feature selection for activity recognition from smartphone accelerometer data. Intell. Autom. Soft Comput. 2017, 1–9. [Google Scholar] [CrossRef]

- Ayman, A.; Attalah, O.; Shaban, H. An efficient human activity recognition framework based on wearable IMU wrist sensors. In Proceedings of the 2019 IEEE International Conference on Imaging Systems and Techniques (IST), Abu Dhabi, United Arab Emirates, 9–10 December 2019; pp. 1–5. [Google Scholar] [CrossRef]

- Batool, M.; Jalal, A.; Kim, K. Sensors technologies for human activity analysis based on SVM optimized by PSO algorithm. In Proceedings of the 2019 International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 27–29 August 2019; pp. 145–150. [Google Scholar] [CrossRef]

- Castiglia, S.F.; Trabassi, D.; Conte, C.; Ranavolo, A.; Coppola, G.; Sebastianelli, G.; Abagnale, C.; Barone, F.; Bighiani, F.; De Icco, R.; et al. Multiscale entropy algorithms to analyze complexity and variability of trunk accelerations time series in subjects with Parkinson’s disease. Sensors 2023, 23, 4983. [Google Scholar] [CrossRef] [PubMed]

- Venables, W.; Ripley, B. Modern Applied Statistics with S; Springer: New York, NY, USA, 2002. [Google Scholar]

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley: New York, NY, USA, 1990. [Google Scholar]

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 4768–4777. [Google Scholar]

| Feature | Controls (n = 50) | All PD (n = 262) | Mild PD (n = 185) |
|---|---|---|---|
| Age (years, mean ± SD) | 64.1 ± 9.8 | 66.9 ± 9.3 | 65.4 ± 9.1 |
| Gender (% male) | 38.0 | 62.0 | 58.4 |
| Height (cm, mean ± SD) | 168.1 ± 10.9 | 172.2 ± 10.4 | 172.0 ± 10.3 |
| UPDRS (total, mean ± SD) | – | 35.5 ± 17.1 | 29.4 ± 13.4 |
| UPDRS (motor, part III, mean ± SD) | – | 22.2 ± 11.8 | 18.5 ± 9.7 |
| Disease duration (years, mean ± SD) | – | 7.8 ± 6.5 | 6.6 ± 5.6 |
| H&Y (mean ± SD) | – | 2.2 ± 0.62 | 1.9 ± 0.26 |
| H&Y stage 1 (n) | – | 12 | 12 |
| H&Y stage 1.5 (n) | – | 4 | 4 |
| H&Y stage 2 (n) | – | 169 | 169 |
| H&Y stage 2.5 (n) | – | 35 | – |
| H&Y stage 3 (n) | – | 25 | – |
| H&Y stage 4 (n) | – | 17 | – |

| (a) Controls vs. All PD | Predicted Controls | Predicted PD | (b) Controls vs. Mild PD (H&Y ≤ 2) | Predicted Controls | Predicted PD |
|---|---|---|---|---|---|
| Actual Controls | 41 | 9 | Actual Controls | 36 | 14 |
| Actual PD | 14 | 248 | Actual PD | 11 | 174 |

| Controls vs. PD | All PD | Mild PD |
|---|---|---|
| Number of PD participants | 262 | 185 |
| Accuracy (CI) [%] | 92.6 (88.8, 94.9) | 89.4 (84.3, 92.3) |
| AUC-ROC (CI) | 0.88 (0.83, 0.94) | 0.83 (0.77, 0.90) |
| Sensitivity (CI) | 0.95 (0.91, 0.97) | 0.94 (0.90, 0.97) |
| Specificity (CI) | 0.82 (0.69, 0.91) | 0.72 (0.58, 0.83) |
| F1 score (CI) | 0.96 (0.93, 0.97) | 0.93 (0.90, 0.96) |
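The point estimates in the performance table can be recomputed from the confusion-matrix counts. The following is a minimal sketch, not the authors' analysis code. It assumes that the rows of each confusion matrix are the true classes and the columns are the predicted classes, an orientation inferred here because it is the only one consistent with the reported 262 and 185 PD participants. The class name `ConfusionMatrix` and the helper `summarize` are hypothetical names introduced for illustration. The AUC-ROC and the confidence intervals cannot be recovered from the counts alone and are not reproduced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts for a binary classifier with PD as the positive class."""
    tn: int  # controls classified as controls
    fp: int  # controls classified as PD
    fn: int  # PD classified as controls
    tp: int  # PD classified as PD

    @property
    def accuracy(self) -> float:
        total = self.tn + self.fp + self.fn + self.tp
        return (self.tp + self.tn) / total

    @property
    def sensitivity(self) -> float:
        # Fraction of PD participants correctly classified as PD.
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Fraction of controls correctly classified as controls.
        return self.tn / (self.tn + self.fp)

    @property
    def f1(self) -> float:
        # Harmonic mean of precision and sensitivity.
        return 2 * self.tp / (2 * self.tp + self.fp + self.fn)


def summarize(cm: ConfusionMatrix) -> dict:
    """Round the metrics the same way the table reports them."""
    return {
        "accuracy_pct": round(100 * cm.accuracy, 1),
        "sensitivity": round(cm.sensitivity, 2),
        "specificity": round(cm.specificity, 2),
        "f1": round(cm.f1, 2),
    }


# Counts copied from the two confusion matrices (assumed row = true class).
all_pd = ConfusionMatrix(tn=41, fp=9, fn=14, tp=248)
mild_pd = ConfusionMatrix(tn=36, fp=14, fn=11, tp=174)

if __name__ == "__main__":
    print("All PD: ", summarize(all_pd))
    print("Mild PD:", summarize(mild_pd))
```

Under this assumption the recomputed accuracy, sensitivity, specificity, and F1 score agree with the tabulated point estimates for both comparisons. The lower specificity in the mild PD comparison follows from the larger number of controls classified as PD (14 versus 9 of the same 50 controls).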

| Feature | Controls: False Positive | Controls: True Negative | p-value | PD: False Negative | PD: True Positive | p-value |
|---|---|---|---|---|---|---|
| All PD | | | | | | |
| UPDRS_PIII | – | – | – | 15.8 ± 8.3 | 22.5 ± 11.8 | 0.006 |
| MoCA | – | – | – | 28.6 ± 2.5 | 27.3 ± 3.0 | 0.075 |
| CIRS-G | – | – | – | 4.7 ± 4.3 | 4.9 ± 3.4 | 0.88 |
| Age | 70.9 ± 7.3 | 62.6 ± 7.3 | 0.006 | 65.9 ± 6.8 | 66.9 ± 9.3 | 0.30 |
| Sex (% male) | 11.1 | 36.0 | 0.10 | 50.0 | 63.3 | 0.33 |
| Medication state (% ON) | – | – | – | 85.7 | 70.6 | 0.44 |
| H&Y (n) | – | – | – | | | 0.47 |
| H&Y 1 | | | | 1 | 11 | |
| H&Y 1.5 | | | | 1 | 3 | |
| H&Y 2 | | | | 10 | 159 | |
| H&Y 2.5 | | | | 1 | 34 | |
| H&Y 3 | | | | 1 | 24 | |
| H&Y 4 | | | | 0 | 17 | |
| Mild PD | | | | | | |
| UPDRS_PIII | – | – | – | 13.7 ± 7.4 | 18.7 ± 9.6 | 0.03 |
| MoCA | – | – | – | 28.1 ± 1.9 | 27.5 ± 2.8 | 0.36 |
| CIRS-G | – | – | – | 4.9 ± 4.4 | 4.4 ± 3.1 | 0.72 |
| Age | 71.5 ± 6.3 | 61.3 ± 6.3 | 4.0 × 10⁻⁵ | 65.2 ± 9.1 | 69.2 ± 7.5 | 0.12 |
| Sex (% male) | 42.9 | 36.1 | 0.71 | 45.5 | 60.9 | 0.32 |
| Medication state (% ON) | – | – | – | 88.2 | 71.3 | 0.36 |
| H&Y (n) | – | – | – | | | 0.38 |
| H&Y 1 | | | | 1 | 11 | |
| H&Y 1.5 | | | | 1 | 3 | |
| H&Y 2 | | | | 9 | 160 | |

| Comparison | With Segmented Tasks and No Kinesiological Features | With Unsegmented Tasks and No Kinesiological Features | With Segmented Tasks and Kinesiological Features |
|---|---|---|---|
| All PD vs. controls | 92.6 | 89.4 (−3.2) | 87.8 (−4.8) |
| Mild PD (H&Y ≤ 2) vs. controls | 89.4 | 84.3 (−5.1) | 88.9 (−0.5) |

| Comparison | All Tasks | TUG-Only | cogTUG-Only | TUG-Duration | cogTUG-Duration |
|---|---|---|---|---|---|
| All PD vs. controls | 92.6 | 90.1 (−2.5) | 89.4 (−3.2) | 83.3 (−9.3) | 84.0 (−8.6) |
| Mild PD (H&Y ≤ 2) vs. controls | 89.4 | 77.9 (−11.7) | 87.2 (−2.4) | 78.7 (−10.7) | 77.0 (−12.4) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khalil, R.M.; Shulman, L.M.; Gruber-Baldini, A.L.; Shakya, S.; Fenderson, R.; Van Hoven, M.; Hausdorff, J.M.; von Coelln, R.; Cummings, M.P. Simplification of Mobility Tests and Data Processing to Increase Applicability of Wearable Sensors as Diagnostic Tools for Parkinson’s Disease. Sensors 2024, 24, 4983. https://doi.org/10.3390/s24154983
