Intelligent Cockpits for Connected Vehicles: Taxonomy, Architecture, Interaction Technologies, and Future Directions

Abstract

1. Introduction

- A new full-process human–machine interaction framework is explored. This systematic framework encompasses multimodal perception, cognitive decision-making, active interaction, and evolutionary evaluation. It guides a user-centered, positive-feedback-driven, iterative cycle of human–machine interaction to address the complexity and uncertainty of interactions in the original cockpit’s mixed state. The framework aims to improve the efficiency and effectiveness of interaction, ensuring that drivers receive accurate and timely information in complex environments and thereby improving the overall driving experience and safety.

- A quantitative evaluation of user experience in intelligent cockpits is described. This study highlights that such evaluation primarily focuses on passengers’ subjective experience and on objective data analysis based on ergonomics. This helps form a user-centered feedback mechanism and an automatically evolving system.

- Deep insights into the future of intelligent cockpits are provided. Based on the current development status and challenges of cockpits, this study proposes integrating emerging technologies (such as digital twins, large language models, and knowledge graphs) as future research directions that help intelligent cockpits better understand user needs. For example, a knowledge graph of human–machine interactions can be constructed to mine sparse associative data and infer driver intentions. Combined with large language models, it can help the cockpit better understand drivers’ needs, predict human–machine interaction intentions precisely, and improve the accuracy of intelligent service recommendations and other downstream tasks.
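To make the knowledge-graph idea above concrete, the following minimal sketch stores interaction knowledge as (context, relation, intention) triples and ranks candidate driver intentions by how many currently observed context facts point to each one. The triples, the `suggests` relation, and the simple counting score are hypothetical placeholders. A practical system would mine weighted associations from interaction logs, and the hand-off of the ranked intentions to a large language model is not shown.

```python
from collections import defaultdict

# Hypothetical interaction knowledge graph: (context fact, relation, intention).
TRIPLES = [
    ("cabin_temp_high", "suggests", "lower_ac_temperature"),
    ("driver_sweating", "suggests", "lower_ac_temperature"),
    ("long_drive", "suggests", "find_rest_area"),
    ("frequent_yawning", "suggests", "find_rest_area"),
    ("frequent_yawning", "suggests", "play_upbeat_music"),
    ("low_fuel", "suggests", "navigate_to_station"),
]


def build_index(triples):
    """Map each context fact to the set of intentions it suggests."""
    index = defaultdict(set)
    for head, relation, tail in triples:
        if relation == "suggests":
            index[head].add(tail)
    return index


def rank_intentions(observed_facts, index):
    """Score intentions by how many observed facts link to them.

    Returns (intention, score) pairs, highest score first; ties are broken
    alphabetically so the ranking is deterministic.
    """
    scores = defaultdict(int)
    for fact in observed_facts:
        for intention in index.get(fact, ()):
            scores[intention] += 1
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    idx = build_index(TRIPLES)
    print(rank_intentions({"long_drive", "frequent_yawning"}, idx))
```

Here the co-occurrence of a long drive and frequent yawning ranks `find_rest_area` above `play_upbeat_music`, because two observed facts point to it.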

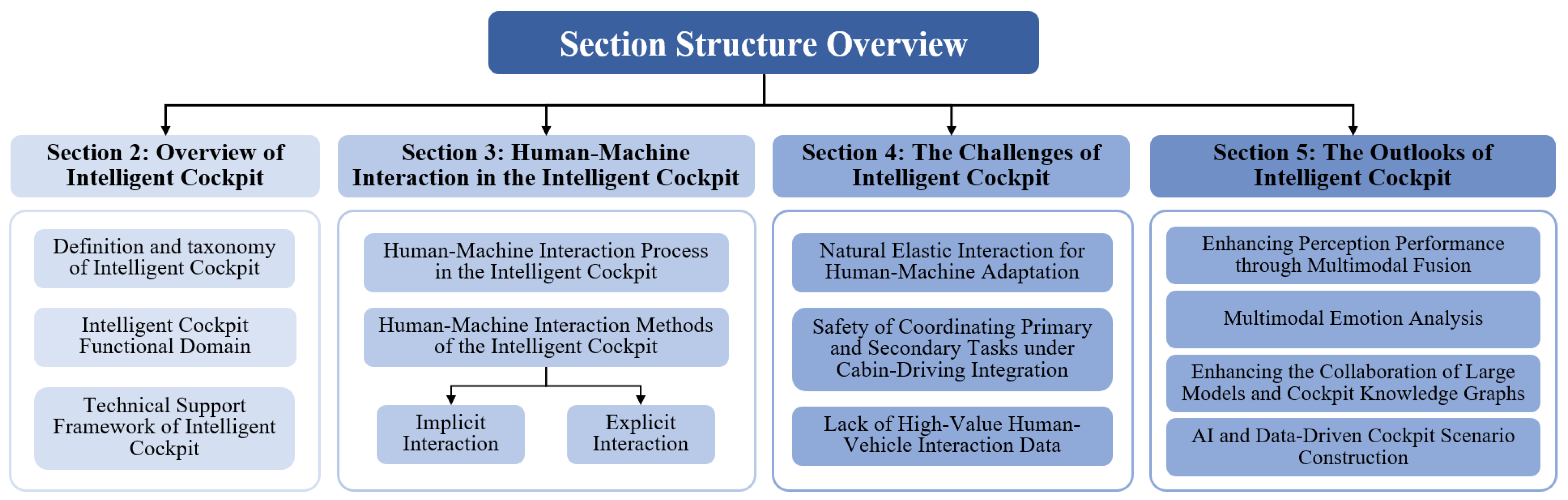

2. Overview of Intelligent Cockpits

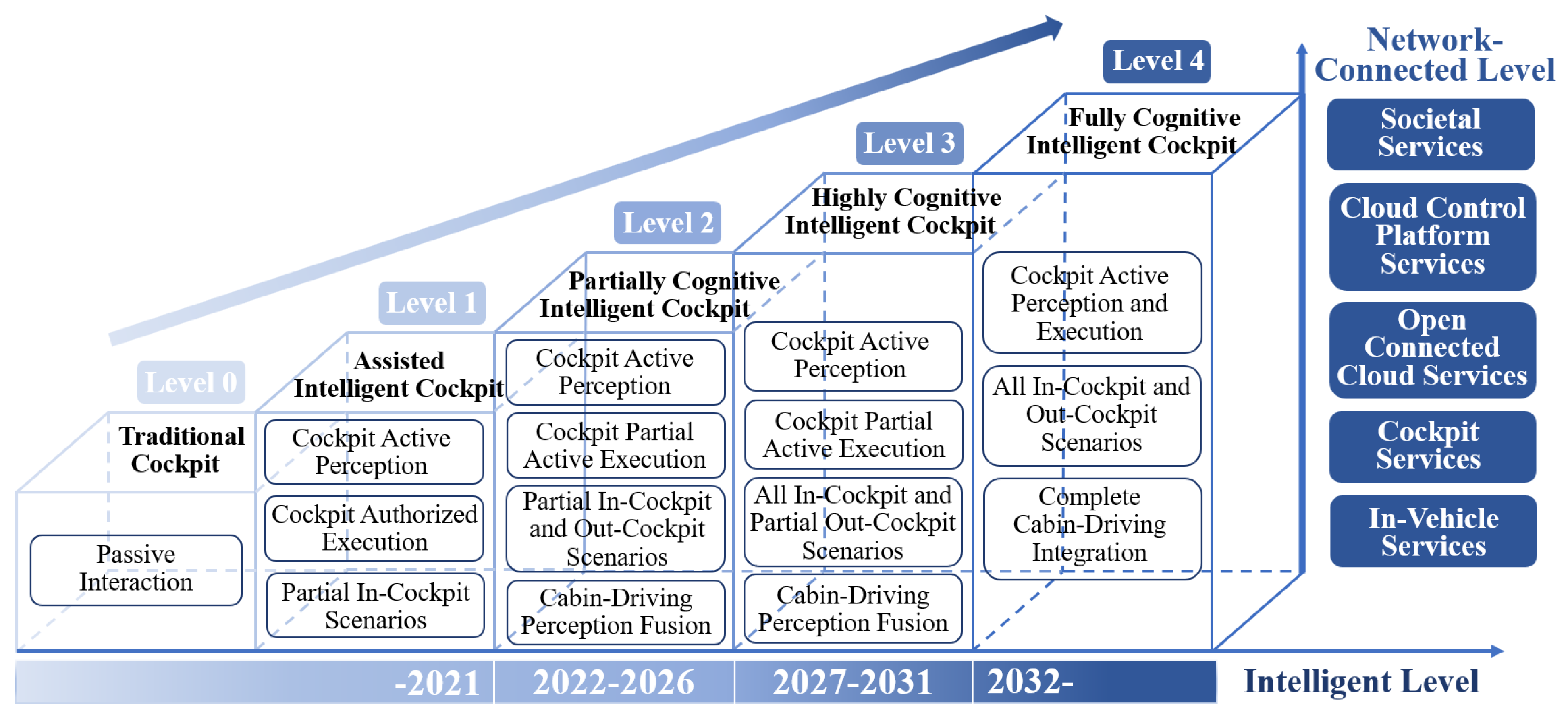

2.1. Definition and Taxonomy of Intelligent Cockpits

- (1) Level 0 (Traditional Cockpit): Tasks are executed in in-cockpit scenarios, with the cockpit passively responding to the needs of the driver and passengers. It offers vehicle system services, such as navigation, applications, and phone functions, to meet the occupants’ requirements.

- (2) Level 1 (Assisted Intelligent Cockpit): Tasks are executed in in-cockpit scenarios, and the cockpit can engage actively because it is able to perceive the needs of the driver and passengers. Active execution requires driver authorization and consists of providing cabin services directly to the occupants. Examples include actively adjusting the air-conditioning temperature and airflow and handling related queries and actions to enhance comfort and convenience.

- (3) Level 2 (Partially Cognitive Intelligent Cockpit): Task execution spans in-cockpit scenarios and selected external scenarios. In certain in-cockpit situations, the cockpit can actively sense the needs of the driver and passengers. Tasks can be partially executed in an active manner, and for some external scenarios, the cockpit’s perception is linked to the autonomous driving system. It also supports open connected cloud services. Examples include adaptive adjustment of air conditioning and seating based on driving conditions and personal preferences, health-monitoring reminders, and push notifications from cloud services.

- (4) Level 3 (Highly Cognitive Intelligent Cockpit): Task execution spans in-cockpit scenarios and selected external scenarios. In all in-cockpit scenarios, the cockpit can actively perceive the driver and passengers. Tasks can be partially executed autonomously, with perception integrated with the autonomous driving system in most external scenarios. It also supports enhanced cloud control platform services; for instance, building on Level 2 capabilities, it provides cloud control services.

- (5) Level 4 (Fully Cognitive Intelligent Cockpit): Task execution covers all in-cockpit and external scenarios. In every in-cockpit scenario, the cockpit can actively perceive the driver and passengers. Tasks can be executed fully autonomously, with seamless integration with the autonomous driving system in perception, decision-making, planning, and control. It also supports continuous upgrades to cloud control and social-level connected services, including comprehensive cabin-driving integration and proactive cognitive interaction.
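The five levels can be read as a ladder of capability questions: does the cockpit perceive actively, does it reach external scenarios, does it perceive in all cabin scenarios, and is execution fully autonomous everywhere. The sketch below encodes that reading. The enum names, the four boolean criteria, and the `classify_level` helper are illustrative assumptions that simplify the taxonomy (for example, they ignore the cloud-service dimension); they are not an official grading procedure.

```python
from enum import IntEnum


class CockpitLevel(IntEnum):
    """Intelligent cockpit levels as described in Section 2.1."""
    L0_TRADITIONAL = 0
    L1_ASSISTED = 1
    L2_PARTIALLY_COGNITIVE = 2
    L3_HIGHLY_COGNITIVE = 3
    L4_FULLY_COGNITIVE = 4


# One-line summaries paraphrasing the level definitions above.
SUMMARIES = {
    CockpitLevel.L0_TRADITIONAL: "passive response to occupant requests",
    CockpitLevel.L1_ASSISTED: "active in-cabin services with driver authorization",
    CockpitLevel.L2_PARTIALLY_COGNITIVE: "active sensing in certain cabin scenarios, open cloud services",
    CockpitLevel.L3_HIGHLY_COGNITIVE: "active perception in all cabin scenarios, cloud control services",
    CockpitLevel.L4_FULLY_COGNITIVE: "fully autonomous execution in all scenarios, social-level services",
}


def classify_level(
    active_perception: bool,
    external_scenarios: bool,
    all_cabin_perception: bool,
    fully_autonomous_everywhere: bool,
) -> CockpitLevel:
    """Walk up the levels and stop at the first unmet criterion (hypothetical rules)."""
    if not active_perception:
        return CockpitLevel.L0_TRADITIONAL
    if not external_scenarios:
        return CockpitLevel.L1_ASSISTED
    if not all_cabin_perception:
        return CockpitLevel.L2_PARTIALLY_COGNITIVE
    if not fully_autonomous_everywhere:
        return CockpitLevel.L3_HIGHLY_COGNITIVE
    return CockpitLevel.L4_FULLY_COGNITIVE


if __name__ == "__main__":
    level = classify_level(True, True, False, False)
    print(level.name, "-", SUMMARIES[level])
```

Because `CockpitLevel` is an `IntEnum`, levels can also be compared directly (for example, `level >= CockpitLevel.L2_PARTIALLY_COGNITIVE` to test for external-scenario support).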

2.2. Intelligent Cockpit Functional Domain

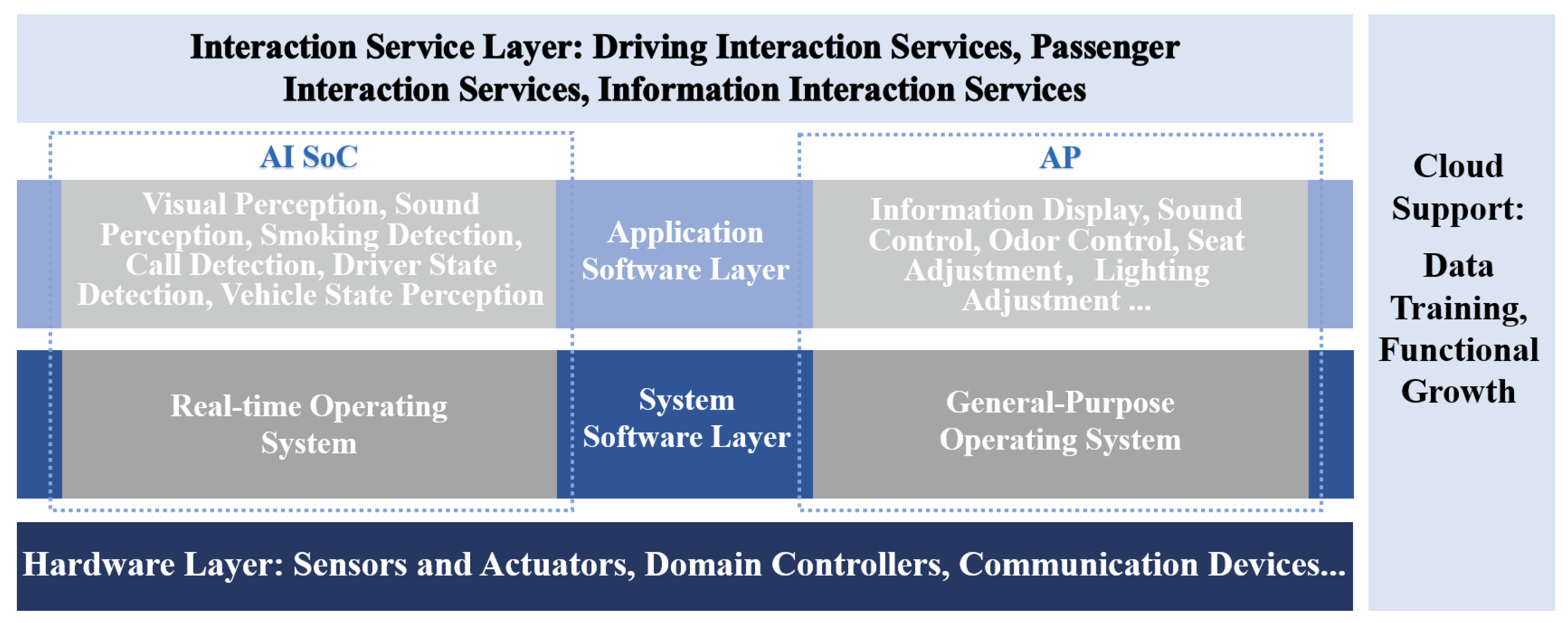

2.3. Technical Support Framework of Intelligent Cockpit

- Visual sensors. These are installed both inside and outside the cockpit to capture comprehensive visual information. Inside, they record details like the facial expressions and body postures of drivers and passengers, as well as other in-cockpit scenarios. Outside, they monitor environmental conditions such as weather and the behavior of other traffic participants.

- Auditory sensors. These encompass microphones and other devices that are specifically designed to capture auditory information from within the cockpit, such as conversations and ambient noises.

- Haptic sensors. These are integrated into components like the steering wheel, seats, interactive screens, and buttons. They are used to capture information such as pressure exerted by the fingers and bodies of the driver and passengers.

- Olfactory sensors. These are designed to capture olfactory information, such as the various smells within the cockpit.

- Physiological sensors. These capture physiological information about the driver, including electromyography signals, electroencephalography signals, electrocardiography signals, and skin conductance signals.

- Visual actuators. These include displays, instrument panels, and head-up displays, all integral to providing visual information directly to the occupants.

- Auditory actuators. These encompass multi-zone speakers and sound processors that enhance the auditory experience within the cockpit by delivering clear and customized audio outputs.

- Haptic actuators. These comprise haptic feedback devices, temperature control systems, and seat angle control mechanisms, all designed to enhance physical comfort and feedback within the cockpit.

- Olfactory actuators. These include fragrance sprayers and air freshening devices, designed to manage and enhance the olfactory environment within the cockpit.
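The sensor and actuator categories above can be organized as a registry indexed by modality. In the sketch below, the `Modality` enum, the two dictionaries, and the helper functions are hypothetical, and the device names are shorthand for the examples given in the text. Organizing the framework this way makes one structural point easy to check: in this framework every modality except the physiological one has both an input (sensor) and an output (actuator) channel, so physiological signals inform decisions that must be expressed through another modality.

```python
from enum import Enum


class Modality(Enum):
    VISUAL = "visual"
    AUDITORY = "auditory"
    HAPTIC = "haptic"
    OLFACTORY = "olfactory"
    PHYSIOLOGICAL = "physiological"


# Input side: device examples paraphrased from the sensor descriptions.
SENSORS = {
    Modality.VISUAL: ["in-cabin camera", "exterior camera"],
    Modality.AUDITORY: ["microphone"],
    Modality.HAPTIC: ["steering-wheel pressure sensor", "seat pressure sensor", "touch screen", "button"],
    Modality.OLFACTORY: ["odor sensor"],
    Modality.PHYSIOLOGICAL: ["EMG", "EEG", "ECG", "skin conductance"],
}

# Output side: device examples paraphrased from the actuator descriptions.
ACTUATORS = {
    Modality.VISUAL: ["display", "instrument panel", "head-up display"],
    Modality.AUDITORY: ["multi-zone speakers", "sound processor"],
    Modality.HAPTIC: ["haptic feedback device", "temperature control", "seat angle control"],
    Modality.OLFACTORY: ["fragrance sprayer", "air freshener"],
}


def closed_loop_modalities():
    """Modalities with both a sensor and an actuator channel."""
    return [m for m in Modality if SENSORS.get(m) and ACTUATORS.get(m)]


def sense_only_modalities():
    """Modalities that can be sensed but not directly actuated."""
    return [m for m in Modality if SENSORS.get(m) and not ACTUATORS.get(m)]


if __name__ == "__main__":
    print("closed loop:", [m.value for m in closed_loop_modalities()])
    print("sense only:", [m.value for m in sense_only_modalities()])
```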

3. Human–Machine Interaction in Intelligent Cockpits

3.1. The Human–Machine Interaction Process in Intelligent Cockpits
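The full-process framework summarized in the Introduction links multimodal perception, cognitive decision-making, active interaction, and evolutionary evaluation into an iterative, feedback-driven cycle. The minimal sketch below walks one hypothetical task, learning an occupant’s preferred cabin temperature, through those four stages. The stage functions, the 1 °C tolerance, and the exponential-moving-average update are illustrative assumptions, not the framework’s actual algorithms.

```python
from dataclasses import dataclass


@dataclass
class PreferenceModel:
    """Hypothetical learned preference: the occupant's preferred cabin temperature."""
    preferred_temp_c: float = 22.0
    learning_rate: float = 0.3


def perceive(sensor_reading: dict) -> float:
    """Perception stage, reduced here to a single cabin-temperature reading."""
    return sensor_reading["cabin_temp_c"]


def decide(cabin_temp_c: float, model: PreferenceModel, tolerance_c: float = 1.0):
    """Decision stage: propose a setpoint only if the cabin is outside tolerance."""
    if abs(cabin_temp_c - model.preferred_temp_c) > tolerance_c:
        return model.preferred_temp_c
    return None


def evaluate(model: PreferenceModel, proposed_c: float, user_setpoint_c: float) -> float:
    """Evaluation stage: move the stored preference toward what the user chose.

    Returns the signed error between the user's choice and the proposal,
    which could also serve as a simple user-experience metric.
    """
    error = user_setpoint_c - proposed_c
    model.preferred_temp_c += model.learning_rate * error
    return error


def interaction_cycle(model: PreferenceModel, sensor_reading: dict, user_setpoint_c: float):
    """One pass of perceive -> decide -> act -> evaluate; None if no action was needed."""
    temp = perceive(sensor_reading)
    proposal = decide(temp, model)
    if proposal is None:
        return None
    # Active interaction stage: here the proposal would be sent to the HVAC system.
    return evaluate(model, proposal, user_setpoint_c)


if __name__ == "__main__":
    model = PreferenceModel()
    for step in range(3):
        err = interaction_cycle(model, {"cabin_temp_c": 28.0}, user_setpoint_c=20.0)
        print(step, err, round(model.preferred_temp_c, 3))
```

Each time the occupant overrides a proposal, the evaluation stage shifts the stored preference toward the occupant’s choice, so later proposals need smaller corrections. This is the user-centered feedback loop in its simplest form.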

3.2. Human–Machine Interaction Methods of the Intelligent Cockpits

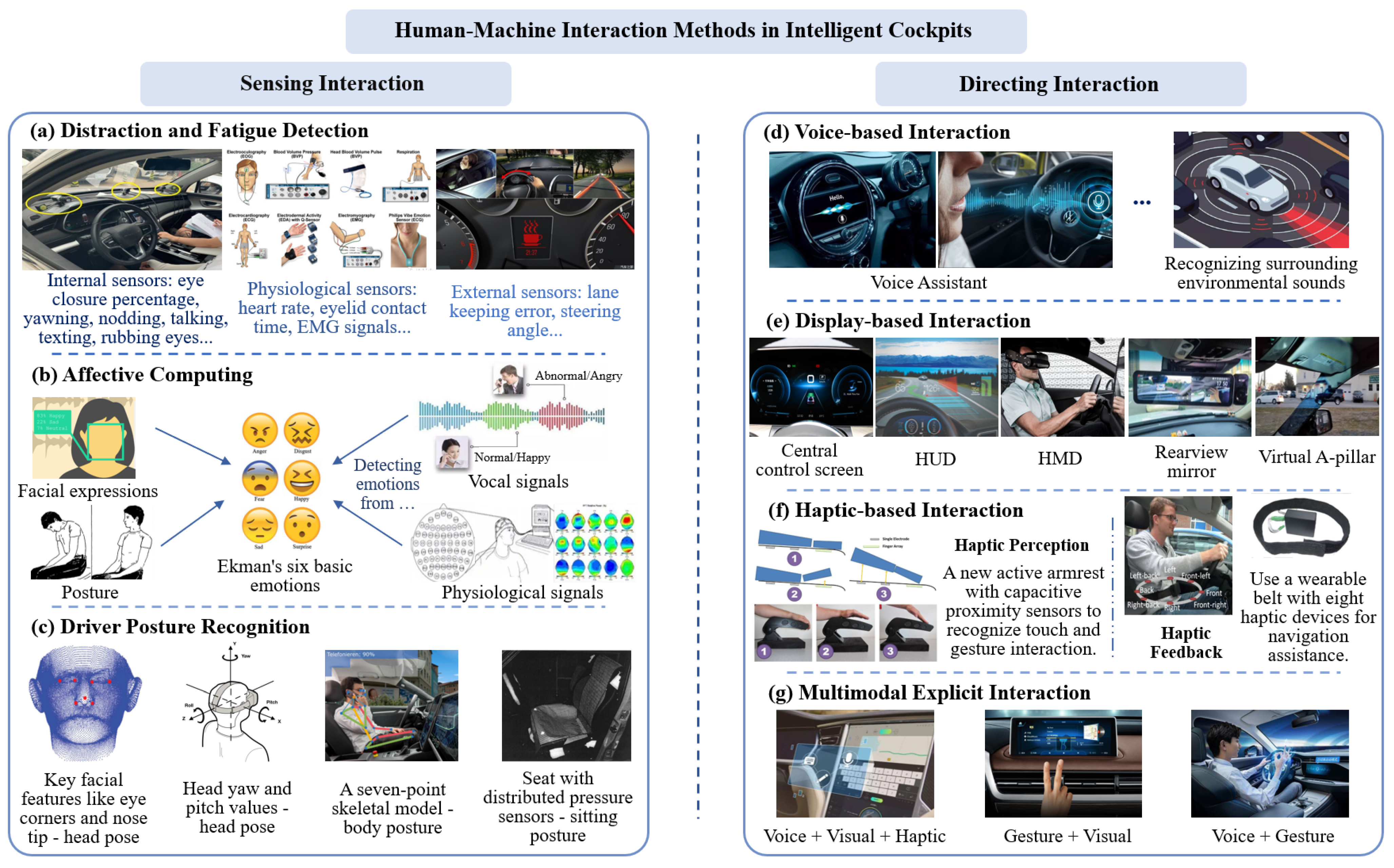

3.2.1. Sensing Interaction

- (1) Driver Fatigue and Distraction Detection

| Category | Data Source | Works | Methodology |
|---|---|---|---|
| Distraction and Fatigue Detection | External Sensors | [104,105] | Detect driver distraction from lane position and lane-keeping errors. |
| | | [106] | Differentiate between normal and distracted driving using binary logistic regression and Fisher discriminant analysis. |
| | | [107] | Use steering-wheel sensors to calculate steering error and indirectly determine whether the driver is distracted. |
| | In-vehicle Visual Sensors | [108] | Classify distraction behaviors using a weighted ensemble of CNN classifiers combined with genetic algorithms. |
| | | [109] | Use convolutional neural networks (CNNs) that adapt effectively to changes in brightness and shadows. |
| | | [110] | Use support vector machines (SVMs) to detect eye closure and thereby detect distraction and related behaviors. |
| | Physiological Sensors | [111] | Use an SVM to classify EEG signals recorded during driving into four categories. |
| | | [112] | Use in-ear EEG instead of traditional scalp electrodes. |
| | | [113,114,115] | Use ECG to detect heart-rate-related driver states, such as emotions. |
| | | [116] | Use EOG sensors to record eye movements, such as eyelid contact time, to detect fatigue and distraction. |
| | | [117,118,119] | Use EMG to detect changes in muscle signals that indicate fatigue. |
| Affective Computing | RGB or IR Cameras | [120,121,122], [123,124,125], [49,126] | Collect facial data with RGB or IR cameras, then classify it using k-nearest neighbors (k-NN), SVMs, and deep neural networks. |
| | Physiological Sensors | [127,128], [129,130] | Acquire physiological signals with sensors, reduce dimensionality with PCA and LDA, then classify with SVMs and naive Bayes. |
| | Voice Signals | [131] | Use voice features (such as pitch, energy, and intensity) for emotion recognition. |
| | | [14] | Integrate global acoustic and local spectral features of the voice signal to capture both overall and instantaneous changes in emotion. |
| | Driver Behavior | [132] | Distinguish driver emotions by detecting steering-wheel grip strength. |
| | | [133] | Detect driver frustration from posture using an SVM. |
| | Multimodal Data | [134] | Integrate gestures and voice for emotion analysis. |
| | | [135] | Use convolutional techniques with drivers’ facial expressions and cognitive-process features as inputs for emotion detection. |
| | | [136] | Classify emotions for each modality with a Bayesian classifier, then integrate the results at the feature and decision levels. |
| | | [137] | Use facial video and driving behavior as inputs with a multi-task training approach. |
| Driver Posture Recognition | Visual Sensors (RGB Camera) | [138] | Use template matching for head pose estimation and tracking. |
| | | [139] | Use a quantized pose classifier to detect discrete head yaw and pitch. |
| | | [75] | Estimate head pose by tracking key facial features such as the eye corners and the tip of the nose. |
| | Visual Sensors (Multiple Cameras) | [140] | Adopt a multi-camera strategy to overcome the limitations of a single-perspective monocular camera. |
| | Visual Sensors (IR Camera) | [141] | Estimate head pose from infrared images using deep learning methods. |
| | Visual Sensors (Structured Light) | [142] | Use a graph-based algorithm to fit a 7-point skeleton model to the driver’s body from depth frames and infer posture. |
| | Visual Sensors (Time of Flight) | [143] | Estimate the position and orientation of the driver’s limbs from visual information using the iterative closest point algorithm. |
| | Haptic Sensors | [78] | Measure the driver’s posture using optical motion capture and a seat equipped with distributed pressure sensors. |
| | | [144] | Combine pressure maps with IMU measurements to robustly identify body postures. |
| | | [145] | Accurately estimate the 3D position of the occupant’s head using a capacitive proximity sensor array and non-parametric neural networks. |
| | | [146] | Estimate head pose based on ultrasonic technology. |
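As a concrete instance of the lane-position approach listed for [104,105] in the table above, the sketch below computes the standard deviation of lateral lane position (SDLP), a widely used lane-keeping metric, over fixed windows and flags windows whose SDLP exceeds a threshold. Whether the cited works use exactly this metric is not stated here; the window length, step, and 0.25 m threshold are hypothetical values chosen only for illustration.

```python
import statistics


def sdlp(lane_positions_m):
    """Standard deviation of lateral lane position (SDLP), in meters."""
    return statistics.stdev(lane_positions_m)


def windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def flag_distraction(lane_positions_m, window=5, step=5, threshold_m=0.25):
    """Return one flag per window: True if its SDLP exceeds `threshold_m`.

    `window`, `step`, and `threshold_m` are hypothetical illustration values;
    a deployed system would calibrate them for road type, speed, and sampling rate.
    """
    return [sdlp(w) > threshold_m for w in windows(lane_positions_m, window, step)]


if __name__ == "__main__":
    steady = [0.0, 0.05, -0.05, 0.02, -0.02]  # lateral offset from lane center (m)
    weaving = [0.0, 0.4, -0.4, 0.5, -0.3]
    print(flag_distraction(steady + weaving))
```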

- (2) Affective Computing
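The table above includes an approach that classifies emotions per modality with a Bayesian classifier and then integrates the results at the feature and decision levels ([136]). A minimal sketch of the decision-level step only, assuming each modality’s classifier already outputs a posterior over the same emotion labels and that the modalities are conditionally independent given the emotion, is the naive-Bayes product rule: the fused posterior is proportional to the prior raised to the power (1 − n) times the product of the n per-modality posteriors. The labels and probabilities in the usage example are hypothetical, and feature-level fusion is not shown.

```python
def normalize(scores):
    """Rescale non-negative scores so they sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must have a positive sum")
    return {label: s / total for label, s in scores.items()}


def fuse_decisions(modality_posteriors, prior):
    """Decision-level fusion with the naive-Bayes product rule.

    Under conditional independence of modalities given the emotion e:
        P(e | x_1..x_n) is proportional to P(e)**(1 - n) * prod_i P(e | x_i)
    `prior` must assign a strictly positive probability to every label.
    """
    n = len(modality_posteriors)
    fused = {}
    for label, p in prior.items():
        if p <= 0:
            raise ValueError("prior probabilities must be strictly positive")
        score = p ** (1 - n)
        for posterior in modality_posteriors:
            score *= posterior[label]
        fused[label] = score
    return normalize(fused)


if __name__ == "__main__":
    face = {"neutral": 0.2, "happy": 0.2, "angry": 0.6}   # hypothetical classifier outputs
    voice = {"neutral": 0.3, "happy": 0.1, "angry": 0.6}
    prior = {label: 1 / 3 for label in face}
    fused = fuse_decisions([face, voice], prior)
    print(max(fused, key=fused.get), fused)
```

With a uniform prior the prior term cancels, and agreement between modalities sharpens the fused posterior (here "angry" rises from 0.6 in each modality to about 0.82).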

- (3) In-vehicle Posture Recognition
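Several haptic approaches in the table above infer posture from a seat with distributed pressure sensors ([78,144]). One simple quantity such a pressure map supports is the center of pressure. The sketch below computes it and maps its lateral offset to a coarse lean label. The map layout (column 0 under the occupant’s left side), the `margin` value, and the three labels are hypothetical assumptions; the cited works use richer models, for example fusing pressure maps with IMU data.

```python
def center_of_pressure(pressure_map):
    """Return the (row, col) center of pressure of a 2-D seat pressure map."""
    total = sum(sum(row) for row in pressure_map)
    if total == 0:
        raise ValueError("no load on the pressure map (seat unoccupied?)")
    row_c = sum(i * v for i, row in enumerate(pressure_map) for v in row) / total
    col_c = sum(j * v for row in pressure_map for j, v in enumerate(row)) / total
    return row_c, col_c


def classify_lean(pressure_map, margin=0.15):
    """Coarse lateral posture label from the center-of-pressure offset.

    Assumes column 0 lies under the occupant's left side; `margin` is a
    hypothetical fraction of the seat width still treated as upright.
    """
    cols = len(pressure_map[0])
    _, col_c = center_of_pressure(pressure_map)
    offset = (col_c - (cols - 1) / 2) / cols  # normalized lateral offset
    if offset < -margin:
        return "leaning left"
    if offset > margin:
        return "leaning right"
    return "upright"


if __name__ == "__main__":
    upright = [[1, 2, 2, 1], [1, 3, 3, 1], [1, 2, 2, 1]]
    left = [[4, 2, 0, 0], [5, 3, 1, 0], [4, 2, 0, 0]]
    print(classify_lean(upright), classify_lean(left))
```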

3.2.2. Directing Interaction

- (1) Voice-based Interaction

- (2) Display-based Interaction

- (3) Haptic-based Interaction

- (4) Multimodal Directing Interaction

4. The Challenges of Intelligent Cockpits

4.1. Natural Elastic Interactions for Human–Machine Adaptation

4.2. Safety of Coordinating Primary and Secondary Tasks in Cabin-Driving Integration

4.3. Lack of High-Value Human–Vehicle Interaction Data

5. The Outlook of Intelligent Cockpits

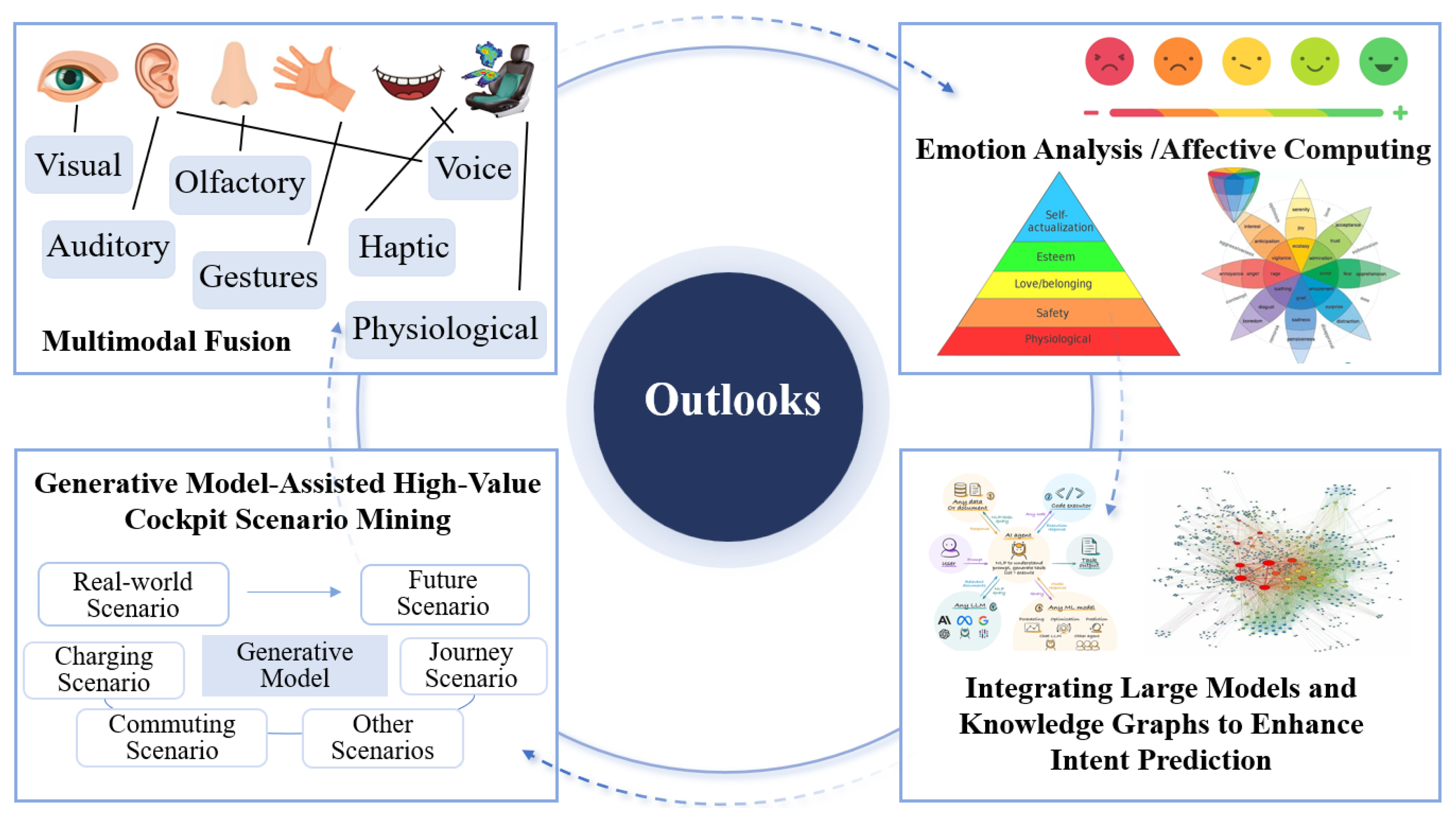

5.1. Enhancing Perception Performance through Multimodal Fusion

5.2. Improving Understanding Capabilities through Multimodal Emotion Analysis

5.3. Enhancing the Prediction of Interaction Intention through the Collaboration of Large Models and Knowledge Graphs

5.4. Boosting Function Satisfaction and Iteration Speed through AI and Data-Driven Cockpit Scenario Construction

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, W.; Cao, D.; Tan, R.; Shi, T.; Gao, Z.; Ma, J.; Guo, G.; Hu, H.; Feng, J.; Wang, L. Intelligent Cockpit for Intelligent Connected Vehicles: Definition, Taxonomy, Technology and Evaluation. IEEE Trans. Intell. Veh. 2023, 9, 3140–3153.

- SAE International/ISO. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; Standard; On-Road Automated Driving (ORAD) Committee: Warrendale, PA, USA, 2021.

- China SAE. White Paper on Automotive Intelligent Cockpit Grading and Comprehensive Evaluation; Report; China Society of Automotive Engineers: Beijing, China, 2023.

- You, Z.; Ma, N.; Wang, Y.; Jiang, Y. Cognitive Mechanism and Evaluation Method of Human-Machine Interaction in Intelligent Vehicle Cockpit; Scientific Research Publishing, Inc.: Irvine, CA, USA, 2023; p. 196.

- Sohu. The True Era of Intelligence! Intelligent Cockpits Become Tangible, and Autonomous Vehicles Are about to Hit the Roads. 2021. Available online: https://www.sohu.com/a/465281271_120699990 (accessed on 12 July 2024).

- Mobility, N. Technology Changes Life: Experience the Weltmeister EX5-Z Intelligent Cockpit. 2020. Available online: https://www.xchuxing.com/article-53204-1.html (accessed on 12 July 2024).

- ZCOOL. 2019 Intelligent Cockpit Design Innovation. 2019. Available online: https://www.zcool.com.cn/article/ZOTEzMzMy.html (accessed on 12 July 2024).

- Lauber, F.; Follmann, A.; Butz, A. What you see is what you touch: Visualizing touch screen interaction in the head-up display. In Proceedings of the 2014 Conference on Designing Interactive Systems, Vancouver, BC, Canada, 21–25 June 2014; pp. 171–180.

- Horn, N. “Hey BMW, Now We’re Talking!” BMWs Are about to Get a Personality with the Company’s Intelligent Personal Assistant. 2018. Available online: https://bit.ly/3o3LMPv (accessed on 2 March 2024).

- Li, W.; Wu, Y.; Zeng, G.; Ren, F.; Tang, M.; Xiao, H.; Liu, Y.; Guo, G. Multi-modal user experience evaluation on in-vehicle HMI systems using eye-tracking, facial expression, and finger-tracking for the smart cockpit. Int. J. Veh. Perform. 2022, 8, 429–449.

- Manjakkal, L.; Yin, L.; Nathan, A.; Wang, J.; Dahiya, R. Energy autonomous sweat-based wearable systems. Adv. Mater. 2021, 33, 2100899.

- Biondi, F.; Alvarez, I.; Jeong, K.A. Human–vehicle cooperation in automated driving: A multidisciplinary review and appraisal. Int. J. Hum.-Comput. Interact. 2019, 35, 932–946.

- Li, W.; Wu, L.; Wang, C.; Xue, J.; Hu, W.; Li, S.; Guo, G.; Cao, D. Intelligent cockpit for intelligent vehicle in metaverse: A case study of empathetic auditory regulation of human emotion. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 2173–2187.

- Li, W.; Xue, J.; Tan, R.; Wang, C.; Deng, Z.; Li, S.; Guo, G.; Cao, D. Global-local-feature-fused driver speech emotion detection for intelligent cockpit in automated driving. IEEE Trans. Intell. Veh. 2023, 8, 2684–2697.

- Gen, L. The Mystery of the World’s Most Expensive Traffic Light Solved: It’s Actually Baidu Apollo’s Flip Side. 2018. Available online: https://baijiahao.baidu.com/s?id=1611649048971895110 (accessed on 11 July 2024).

- China SAE. Research Report on the Current Status and Development Trends of Human-Machine Interaction in Intelligent Vehicles; Report; China Society of Automotive Engineers: Beijing, China, 2018.

- Baidu, Hunan University. White Paper on Design Trends of Human-Machine Interaction in Intelligent Vehicles; Report; Baidu-Hunan University Joint Innovation Laboratory for Intelligent Design and Interaction Experience: Changsha, Hunan, China, 2018.

- Will, J. The 8 Coolest Car Tech Innovations from CES. 2018. Available online: https://www.mensjournal.com/gear/the-8-coolest-car-tech-innovations-from-ces-2018 (accessed on 10 July 2024).

- Li, W.; Li, G.; Tan, R.; Wang, C.; Sun, Z.; Li, Y.; Guo, G.; Cao, D.; Li, K. Review and Perspectives on Human Emotion for Connected Automated Vehicles. Automot. Innov. 2024, 7, 4–41.

- Murali, P.K.; Kaboli, M.; Dahiya, R. Intelligent in-vehicle interaction technologies. Adv. Intell. Syst. 2022, 4, 2100122.

- Tan, Z.; Dai, N.; Su, Y.; Zhang, R.; Li, Y.; Wu, D.; Li, S. Human–machine interaction in intelligent and connected vehicles: A review of status quo, issues, and opportunities. IEEE Trans. Intell. Transp. Syst. 2021, 23, 13954–13975.

- Jiancheng, Y. Human-Machine Integration: Toyota Innovatively Explores a Third Path to Autonomous Driving. 2017. Available online: https://www.leiphone.com/news/201710/82E3lc9HuDuNTxK7.html (accessed on 10 July 2024).

- HYUNDAI. United States: Hyundai Motor Company introduces a Health + Mobility Concept for Wellness in Mobility. 2017. Available online: https://go.gale.com/ps/i.do?id=GALE%7CA476710655&sid=sitemap&v=2.1&it=r&p=HRCA&sw=w&userGroupName=anon%7Ebe52aaf3&aty=open-web-entry (accessed on 10 July 2024).

- Lin, Y.D.; Chu, E.T.H.; Chang, E.; Lai, Y.C. Smoothed graphic user interaction on smartphones with motion prediction. IEEE Trans. Syst. Man Cybern. Syst. 2017, 50, 1429–1441.

- Roche, F.; Brandenburg, S. Should the urgency of visual-tactile takeover requests match the criticality of takeover situations? IEEE Trans. Intell. Veh. 2019, 5, 306–313.

- Liu, H.; Sun, F.; Fang, B.; Guo, D. Cross-modal zero-shot-learning for tactile object recognition. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 2466–2474.

- Hasenjäger, M.; Heckmann, M.; Wersing, H. A survey of personalization for advanced driver assistance systems. IEEE Trans. Intell. Veh. 2019, 5, 335–344.

- Wang, F.Y. Metavehicles in the metaverse: Moving to a new phase for intelligent vehicles and smart mobility. IEEE Trans. Intell. Veh. 2022, 7, 1–5.

- Wang, F.Y.; Li, Y.; Zhang, W.; Bennett, G.; Chen, N. Digital twin and parallel intelligence based on location and transportation: A vision for new synergy between the IEEE CRFID and ITSS in cyberphysical social systems [society news]. IEEE Intell. Transp. Syst. Mag. 2021, 13, 249–252.

- Ponos, M.; Lazic, N.; Bjelica, M.; Andjelic, T.; Manic, M. One solution for integrating graphics in vehicle digital cockpit. In Proceedings of the 2021 29th Telecommunications Forum (TELFOR), Belgrade, Serbia, 23–24 November 2021; pp. 1–3.

- Xia, B.; Qian, G.; Sun, Y.; Wu, X.; Lu, Z.; Hu, M. The Implementation of Automotive Ethernet Based General Inter-Process Communication of Smart Cockpit; Technical Report; SAE Technical Paper: Warrendale, PA, USA, 2022.

- Zhang, J.; Pu, J.; Xue, J.; Yang, M.; Xu, X.; Wang, X.; Wang, F.Y. HiVeGPT: Human-machine-augmented intelligent vehicles with generative pre-trained transformer. IEEE Trans. Intell. Veh. 2023, 8, 2027–2033.

- Yahui, W. The Future is Here: Revolution in Intelligent Cockpit Human-Machine Interaction Technology and Innovation in User Experience. 2018. Available online: https://zhuanlan.zhihu.com/p/41871439?app=zhihulite (accessed on 10 July 2024).

- Mobility, N. Haptic Technology Quietly Makes Its Way into Vehicles. 2020. Available online: https://www.xchuxing.com/article-52180-1.html (accessed on 10 July 2024).

- SinoVioce. Lingyun Intelligent Voice Integrated Machine: Smart Voice Empowers Multiple Scenarios. 2021. Available online: https://www.sinovoice.com/news/products/2021/1101/1023.html (accessed on 10 July 2024).

- Bhann. Intelligent Cockpit Human-Machine Interaction: The HUD. 2022. Available online: https://zhuanlan.zhihu.com/p/513310042 (accessed on 10 July 2024).

- Duzhi. Creating an “Intelligent Comfort Cockpit”: Tianmei ET5 Features Patented Sleep Seats. 2020. Available online: http://www.qichequan.net/news/pinglun/2020/35708.html (accessed on 10 July 2024).

- AG, C. Intelligent Voice Assistant: Continental Group Develops Vehicle-Adaptive Voice-Controlled Digital Companion. 2019. Available online: https://auto.jgvogel.cn/c/2019-07-19/653573.shtml (accessed on 10 July 2024).

- James. Introduction to Multi-Sensor Fusion in Autonomous Vehicles (Part I). 2020. Available online: https://zhuanlan.zhihu.com/p/340101914 (accessed on 10 July 2024).

- handoop. Application of Knowledge Graphs in Big Data. 2019. Available online: https://blog.csdn.net/DF_XIAO/article/details/102480115 (accessed on 10 July 2024).

- Tencent. Tencent Smart Mobility Tech Open Day: Building a “Vehicle-Cloud Integration” Data-Driven Application Framework to Make Vehicles Smarter. 2023. Available online: https://www.c114.com.cn/cloud/4049/a1229335.html (accessed on 10 July 2024).

- Chen, C.; Ji, Z.; Sun, Y.; Bezerianos, A.; Thakor, N.; Wang, H. Self-attentive channel-connectivity capsule network for EEG-based driving fatigue detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 3152–3162.

- Lyu, H.; Yue, J.; Zhang, W.; Cheng, T.; Yin, Y.; Yang, X.; Gao, X.; Hao, Z.; Li, J. Fatigue Detection for Ship OOWs Based on Input Data Features, From the Perspective of Comparison With Vehicle Drivers: A Review. IEEE Sensors J. 2023, 23, 15239–15252.

- Akrout, B.; Mahdi, W. A novel approach for driver fatigue detection based on visual characteristics analysis. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 527–552.

- Shajari, A.; Asadi, H.; Glaser, S.; Arogbonlo, A.; Mohamed, S.; Kooijman, L.; Abu Alqumsan, A.; Nahavandi, S. Detection of driving distractions and their impacts. J. Adv. Transp. 2023, 2023, 2118553.

- Mou, L.; Chang, J.; Zhou, C.; Zhao, Y.; Ma, N.; Yin, B.; Jain, R.; Gao, W. Multimodal driver distraction detection using dual-channel network of CNN and Transformer. Expert Syst. Appl. 2023, 234, 121066.

- Wang, A.; Wang, J.; Shi, W.; He, D. Cognitive Workload Estimation in Conditionally Automated Vehicles Using Transformer Networks Based on Physiological Signals. Transp. Res. Rec. 2024.

- Wei, W.; Fu, X.; Zhong, S.; Ge, H. Driver’s mental workload classification using physiological, traffic flow and environmental factors. Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 151–169.

- Jain, D.K.; Dutta, A.K.; Verdú, E.; Alsubai, S.; Sait, A.R.W. An automated hyperparameter tuned deep learning model enabled facial emotion recognition for autonomous vehicle drivers. Image Vis. Comput. 2023, 133, 104659.

- Park, J.; Zahabi, M. A review of human performance models for prediction of driver behavior and interactions with in-vehicle technology. Hum. Factors 2024, 66, 1249–1275.

- Yang, J.; Xing, S.; Chen, Y.; Qiu, R.; Hua, C.; Dong, D. An evaluation model for the comfort of vehicle intelligent cockpits based on passenger experience. Sustainability 2022, 14, 6827.

- Zhang, T.; Ren, J. Research on Seat Static Comfort Evaluation Based on Objective Interface Pressure. SAE Int. J. Commer. Veh. 2023, 16, 341–348.

- Gao, Y.; Fischer, T.; Paternoster, S.; Kaiser, R.; Paternoster, F.K. Evaluating lower body driving posture regarding gas pedal control and emergency braking: A pilot study. Int. J. Ind. Ergon. 2022, 91, 103357.

- Huang, Q.; Jin, X.; Gao, M.; Guo, M.; Sun, X.; Wei, Y. Influence of lumbar support on tractor seat comfort based on body pressure distribution. PLoS ONE 2023, 18, e0282682.

- Cardoso, M.; McKinnon, C.; Viggiani, D.; Johnson, M.J.; Callaghan, J.P.; Albert, W.J. Biomechanical investigation of prolonged driving in an ergonomically designed truck seat prototype. Ergonomics 2018, 61, 367–380.

- Hirao, A.; Naito, S.; Yamazaki, N. Pressure sensitivity of buttock and thigh as a key factor for understanding of sitting comfort. Appl. Sci. 2022, 12, 7363.

- Wolf, P.; Rausch, J.; Hennes, N.; Potthast, W. The effects of joint angle variability and different driving load scenarios on maximum muscle activity–A driving posture simulation study. Int. J. Ind. Ergon. 2021, 84, 103161.

- Wolf, P.; Hennes, N.; Rausch, J.; Potthast, W. The effects of stature, age, gender, and posture preferences on preferred joint angles after real driving. Appl. Ergon. 2022, 100, 103671.

- Gao, F.; Zong, S.; Han, Z.W.; Xiao, Y.; Gao, Z.H. Musculoskeletal computational analysis on muscle mechanical characteristics of drivers’ lumbar vertebras and legs in different sitting postures. Rev. Assoc. Medica Bras. 2020, 66, 637–642.

- Lecocq, M.; Lantoine, P.; Bougard, C.; Allègre, J.M.; Bauvineau, L.; Bourdin, C.; Marqueste, T.; Dousset, E. Neuromuscular fatigue profiles depends on seat feature during long duration driving on a static simulator. Appl. Ergon. 2020, 87, 103118.

- Tang, Z.; Liu, Z.; Tang, Y.; Dou, J.; Xu, C.; Wang, L. Model construction and analysis of ride comfort for high-speed railway seat cushions. Work 2021, 68, S223–S229.

- Li, M.; Gao, Z.; Gao, F.; Zhang, T.; Mei, X.; Yang, F. Quantitative evaluation of vehicle seat driving comfort during short and long term driving. IEEE Access 2020, 8, 111420–111432.

- Xiao, J.; Yu, S.; Chen, D.; Yu, M.; Xie, N.; Wang, H.; Sun, Y. DHM-driven quantitative assessment model of activity posture in space-restricted accommodation cabin. Multimed. Tools Appl. 2024, 83, 42063–42101.

- Jeon, M.; FakhrHosseini, M.; Vasey, E.; Nees, M.A. Blueprint of the auditory interactions in automated vehicles: Report on the workshop and tutorial. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, Oldenburg, Germany, 24–27 September 2017; pp. 178–182.

- Harrington, K.; Large, D.R.; Burnett, G.; Georgiou, O. Exploring the use of mid-air ultrasonic feedback to enhance automotive user interfaces. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada, 23–25 September 2018; pp. 11–20.

- Sterkenburg, J.; Landry, S.; Jeon, M. Design and evaluation of auditory-supported air gesture controls in vehicles. J. Multimodal User Interfaces 2019, 13, 55–70.

- Tippey, K.G.; Sivaraj, E.; Ardoin, W.J.; Roady, T.; Ferris, T.K. Texting while driving using Google Glass: Investigating the combined effect of heads-up display and hands-free input on driving safety and performance. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications Sage CA: Los Angeles, CA, USA, September 2014; pp. 2023–2027.

- Alves, P.R.; Gonçalves, J.; Rossetti, R.J.; Oliveira, E.C.; Olaverri-Monreal, C. Forward collision warning systems using heads-up displays: Testing usability of two new metaphors. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium Workshops (IV Workshops), Gold Coast, QLD, Australia, 23 June 2013; pp. 1–6.

- Sun, X.; Chen, H.; Shi, J.; Guo, W.; Li, J. From HMI to HRI: Human-vehicle interaction design for smart cockpit. In Proceedings of the Human-Computer Interaction. Interaction in Context: 20th International Conference, HCI International 2018, Las Vegas, NV, USA, 15–20 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 440–454.

- Account, V.O. Five Interaction Technologies Behind Intelligent Cockpits: Auditory, Physiological Sensing, and Vehicle Status. 2022. Available online: https://nev.ofweek.com/2022-03/ART-77015-11000-30554279.html (accessed on 10 July 2024).

- Sohu. Tesla Activates In-Car Cameras to Monitor Driver Status. 2021. Available online: https://www.sohu.com/a/469479073_121014217?_trans_=000019_wzwza (accessed on 10 July 2024).

- Zhihu. Affective Computing. 2018. Available online: https://zhuanlan.zhihu.com/p/45595156 (accessed on 10 July 2024).

- CSDN. Introduction to Affective Computing. 2022. Available online: https://blog.csdn.net/EtchTime/article/details/124755542 (accessed on 10 July 2024).

- CSDN. DEAP: A Database for Emotion Analysis Using Physiological Signals. 2020. Available online: https://blog.csdn.net/zyb228/article/details/108722769 (accessed on 10 July 2024).

- Martin, S.; Tawari, A.; Murphy-Chutorian, E.; Cheng, S.Y.; Trivedi, M. On the design and evaluation of robust head pose for visual user interfaces: Algorithms, databases, and comparisons. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Portsmouth, NH, USA, 17–19 October 2012; pp. 149–154.

- Cheng, Y. Pitch-Yaw-Roll. 2019. Available online: https://blog.csdn.net/chengyq116/article/details/89195271 (accessed on 10 July 2024).

- Fairy. The New System Analyzes Camera Data in Real Time, Detecting Not Only the Facial Features of Occupants But also Recognizing Their Postures. 2021. Available online: https://www.d1ev.com/news/jishu/155290 (accessed on 10 July 2024).

- Andreoni, G.; Santambrogio, G.C.; Rabuffetti, M.; Pedotti, A. Method for the analysis of posture and interface pressure of car drivers. Appl. Ergon. 2002, 33, 511–522.

- Design, H. How Are the Trends in Automotive Human-Machine Interaction Technology? How is Automotive Human-Machine Interaction Evolving? 2020. Available online: https://www.faceui.com/hmi/detail/204.html (accessed on 10 July 2024).

- Man, I. Desay SV—Wenzhong Intelligent Car Machine Launched, Enjoy a New Intelligent Driving Experience with Full Voice Interaction—Smart World: Smart Technology Aggregation Platform—Leading Future Intelligent Life. 2018. Available online: http://www.znjchina.com/kx/16640.html (accessed on 10 July 2024).

- Microone. Autonomous Vehicle Driving Technology. Car Assistant and Traffic Monitoring System Vector Concept. 2021. Available online: https://fr.freepik.com/vecteurs-premium/technologie-conduite-autonome-vehicule-assistant-voiture-concept-vecteur-systeme-surveillance-du-trafic_4148950.htm (accessed on 10 July 2024).

- News, P. Paying Tribute to the Era of Strength: Test Driving Experience of FAW Hongqi “Shuang 9” in Jiangcheng. 2021. Available online: https://ishare.ifeng.com/c/s/v002k3QE7JXV4W3BKzB4aPLG69sk1--qw2643thWnjJ5ZSiY__ (accessed on 10 July 2024).

- Cocoecar. How Many Automotive HUDs Do You Know of? 2018. Available online: https://www.cocoecar.com/223.htm (accessed on 10 July 2024).

- Jason. Audi VR Car: Put on VR Glasses and Start Driving, Would You Dare to Drive? 2016. Available online: https://vr.poppur.com/vrnews/1972.html (accessed on 10 July 2024).

- Zi, J. Traditional Rearview Mirrors Replaced? Electronic Rearview Mirrors Officially Approved. 2023. Available online: https://iphoneyun.com/newsinfo/4895242.html (accessed on 10 July 2024).

- Chaudhry, A. A 14-Year-Old Found a Potential Way to Fix Those Car Pillar Blind Spots. 2019. Available online: https://www.theverge.com/2019/11/5/20949952/car-blind-spots-pillar-windshield-fix-webcam-kia-hyundai-gassler (accessed on 6 April 2024).

- Braun, A.; Neumann, S.; Schmidt, S.; Wichert, R.; Kuijper, A. Towards interactive car interiors: The active armrest. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction, Helsinki, Finland, 26–30 October 2014; pp. 911–914.

- Asif, A.; Boll, S.; Heuten, W. Right or Left: Tactile Display for Route Guidance of Drivers. It-Inf. Technol. 2012, 54, 188–198.

- SinoVioce. Lingyun Vehicle Input Method: Voice + Handwriting Input for Safer In-Vehicle Typing. 2019. Available online: https://shop.aicloud.com/news/products/2019/0814/737.html (accessed on 10 July 2024).

- Pursuer. Application of Gesture Interaction in the Automotive Field. 2018. Available online: https://zhuanlan.zhihu.com/p/42464185 (accessed on 10 July 2024).

- Latitude, A.; Account, L.O. New Driving Behavior Model: Changan Oshan X7 Geeker Edition Launched, Ushering in the Era of Automotive Facial Intelligence Control. 2021. Available online: https://chejiahao.autohome.com.cn/info/8329747 (accessed on 10 July 2024).

- Lee, J.D.; Young, K.L.; Regan, M.A. Defining driver distraction. Driv. Distraction Theory Eff. Mitig. 2008, 13, 31–40.

- Soultana, A.; Benabbou, F.; Sael, N.; Ouahabi, S. A Systematic Literature Review of Driver Inattention Monitoring Systems for Smart Car. Int. J. Interact. Mob. Technol. 2022, 16.

- Sun, M.; Zhou, R.; Jiao, C. Analysis of HAZMAT truck driver fatigue and distracted driving with warning-based data and association rules mining. J. Traffic Transp. Eng. (Engl. Ed.) 2023, 10, 132–142.

- Ranney, T.A.; Garrott, W.R.; Goodman, M.J. NHTSA Driver Distraction Research: Past, Present, and Future; Technical Report; SAE Technical Paper: Warrendale, PA, USA, 2001.

- Klauer, S.G.; Dingus, T.A.; Neale, V.L.; Sudweeks, J.D.; Ramsey, D.J. The Impact of Driver Inattention on Near-Crash/Crash Risk: An Analysis Using the 100-Car Naturalistic Driving Study Data; Technical Report; Virginia Tech Transportation Institute: Blacksburg, VA, USA, 2006.

- Dingus, T.A.; Guo, F.; Lee, S.; Antin, J.F.; Perez, M.; Buchanan-King, M.; Hankey, J. Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proc. Natl. Acad. Sci. USA 2016, 113, 2636–2641.

- Sullman, M.J.; Prat, F.; Tasci, D.K. A roadside study of observable driver distractions. Traffic Inj. Prev. 2015, 16, 552–557.

- Xie, Z.; Li, L.; Xu, X. Real-time driving distraction recognition through a wrist-mounted accelerometer. Hum. Factors 2022, 64, 1412–1428.

- Papatheocharous, E.; Kaiser, C.; Moser, J.; Stocker, A. Monitoring distracted driving behaviours with smartphones: An extended systematic literature review. Sensors 2023, 23, 7505.

- Sajid Hasan, A.; Jalayer, M.; Heitmann, E.; Weiss, J. Distracted driving crashes: A review on data collection, analysis, and crash prevention methods. Transp. Res. Rec. 2022, 2676, 423–434.

- Michelaraki, E.; Katrakazas, C.; Kaiser, S.; Brijs, T.; Yannis, G. Real-time monitoring of driver distraction: State-of-the-art and future insights. Accid. Anal. Prev. 2023, 192, 107241.

- Kashevnik, A.; Shchedrin, R.; Kaiser, C.; Stocker, A. Driver distraction detection methods: A literature review and framework. IEEE Access 2021, 9, 60063–60076.

- Greenberg, J.; Tijerina, L.; Curry, R.; Artz, B.; Cathey, L.; Kochhar, D.; Kozak, K.; Blommer, M.; Grant, P. Driver Distraction: Evaluation with Event Detection Paradigm. Transp. Res. Rec. 2003, 1843, 1–9.

- Chai, C.; Lu, J.; Jiang, X.; Shi, X.; Zeng, Z. An automated machine learning (AutoML) method for driving distraction detection based on lane-keeping performance. arXiv 2021, arXiv:2103.08311.

- Zhang, W.; Zhang, H. Research on Distracted Driving Identification of Truck Drivers Based on Simulated Driving Experiment. IOP Conf. Ser. Earth Environ. Sci. 2021, 638, 012039.

- Nakayama, O.; Futami, T.; Nakamura, T.; Boer, E.R. Development of a steering entropy method for evaluating driver workload. SAE Trans. 1999, 1686–1695.

- Eraqi, H.M.; Abouelnaga, Y.; Saad, M.H.; Moustafa, M.N. Driver distraction identification with an ensemble of convolutional neural networks. J. Adv. Transp. 2019, 2019.

- Tran, D.; Manh Do, H.; Sheng, W.; Bai, H.; Chowdhary, G. Real-time detection of distracted driving based on deep learning. IET Intell. Transp. Syst. 2018, 12, 1210–1219.

- Craye, C.; Rashwan, A.; Kamel, M.S.; Karray, F. A multi-modal driver fatigue and distraction assessment system. Int. J. Intell. Transp. Syst. Res. 2016, 14, 173–194.

- Yeo, M.V.; Li, X.; Shen, K.; Wilder-Smith, E.P. Can SVM be used for automatic EEG detection of drowsiness during car driving? Saf. Sci. 2009, 47, 115–124.

- Hwang, T.; Kim, M.; Hong, S.; Park, K.S. Driver drowsiness detection using the in-ear EEG. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 4646–4649.

- Hartley, L.R.; Arnold, P.K.; Smythe, G.; Hansen, J. Indicators of fatigue in truck drivers. Appl. Ergon. 1994, 25, 143–156.

- Jiao, Y.; Zhang, C.; Chen, X.; Fu, L.; Jiang, C.; Wen, C. Driver Fatigue Detection Using Measures of Heart Rate Variability and Electrodermal Activity. IEEE Trans. Intell. Transp. Syst. 2023, 25, 5510–5524.

- Aminosharieh Najafi, T.; Affanni, A.; Rinaldo, R.; Zontone, P. Driver attention assessment using physiological measures from EEG, ECG, and EDA signals. Sensors 2023, 23, 2039.

- Thorslund, B. Electrooculogram Analysis and Development of a System for Defining Stages of Drowsiness; Statens Väg-Och Transportforskningsinstitut: Linköping, Sweden, 2004.

- Fu, R.; Wang, H. Detection of driving fatigue by using noncontact EMG and ECG signals measurement system. Int. J. Neural Syst. 2014, 24, 1450006.

- Balasubramanian, V.; Adalarasu, K. EMG-based analysis of change in muscle activity during simulated driving. J. Bodyw. Mov. Ther. 2007, 11, 151–158.

- Khushaba, R.N.; Kodagoda, S.; Lal, S.; Dissanayake, G. Driver drowsiness classification using fuzzy wavelet-packet-based feature-extraction algorithm. IEEE Trans. Biomed. Eng. 2010, 58, 121–131.

- Ali, M.; Mosa, A.H.; Al Machot, F.; Kyamakya, K. EEG-based emotion recognition approach for e-healthcare applications. In Proceedings of the 2016 Eighth International Conference on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, 5–8 July 2016; pp. 946–950.

- Moriyama, T.; Abdelaziz, K.; Shimomura, N. Face analysis of aggressive moods in automobile driving using mutual subspace method. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 2898–2901.

- Subudhiray, S.; Palo, H.K.; Das, N. K-nearest neighbor based facial emotion recognition using effective features. IAES Int. J. Artif. Intell. 2023, 12, 57.

- Gao, H.; Yüce, A.; Thiran, J.P. Detecting emotional stress from facial expressions for driving safety. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 5961–5965.

- Ma, Z.; Mahmoud, M.; Robinson, P.; Dias, E.; Skrypchuk, L. Automatic detection of a driver’s complex mental states. In Proceedings of the Computational Science and Its Applications–ICCSA 2017: 17th International Conference, Trieste, Italy, 3–6 July 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 678–691.

- Shtino, V.B.; Muça, M. Comparative Study of K-NN, Naive Bayes and SVM for Face Expression Classification Techniques. Balk. J. Interdiscip. Res. 2023, 9, 23–32.

- Cruz, A.C.; Rinaldi, A. Video summarization for expression analysis of motor vehicle operators. In Proceedings of the Universal Access in Human–Computer Interaction. Design and Development Approaches and Methods: 11th International Conference, UAHCI 2017, Held as Part of HCI International 2017, Vancouver, BC, Canada, 9–14 July 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 313–323.

- Rebolledo-Mendez, G.; Reyes, A.; Paszkowicz, S.; Domingo, M.C.; Skrypchuk, L. Developing a body sensor network to detect emotions during driving. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1850–1854.

- Singh, R.R.; Conjeti, S.; Banerjee, R. Biosignal based on-road stress monitoring for automotive drivers. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012; pp. 1–5.

- Healey, J.A.; Picard, R.W. Detecting stress during real-world driving tasks using physiological sensors. IEEE Trans. Intell. Transp. Syst. 2005, 6, 156–166.

- Wang, J.S.; Lin, C.W.; Yang, Y.T.C. A k-nearest-neighbor classifier with heart rate variability feature-based transformation algorithm for driving stress recognition. Neurocomputing 2013, 116, 136–143.

- Hoch, S.; Althoff, F.; McGlaun, G.; Rigoll, G. Bimodal fusion of emotional data in an automotive environment. In Proceedings of the 2005 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP ’05), Philadelphia, PA, USA, 23 March 2005; Volume 2, pp. 1085–1088.

- Oehl, M.; Siebert, F.W.; Tews, T.K.; Höger, R.; Pfister, H.R. Improving human-machine interaction–a non invasive approach to detect emotions in car drivers. In Proceedings of the Human-Computer Interaction. Towards Mobile and Intelligent Interaction Environments: 14th International Conference, HCI International 2011, Orlando, FL, USA, 9–14 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 577–585.

- Taib, R.; Tederry, J.; Itzstein, B. Quantifying driver frustration to improve road safety. In Proceedings of the CHI ’14 Extended Abstracts on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; CHI EA ’14. pp. 1777–1782.

- Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.P. Tensor fusion network for multimodal sentiment analysis. arXiv 2017, arXiv:1707.07250.

- Li, W.; Zeng, G.; Zhang, J.; Xu, Y.; Xing, Y.; Zhou, R.; Guo, G.; Shen, Y.; Cao, D.; Wang, F.Y. CogEmoNet: A cognitive-feature-augmented driver emotion recognition model for smart cockpit. IEEE Trans. Comput. Soc. Syst. 2021, 9, 667–678.

- Caridakis, G.; Castellano, G.; Kessous, L.; Raouzaiou, A.; Malatesta, L.; Asteriadis, S.; Karpouzis, K. Multimodal emotion recognition from expressive faces, body gestures and speech. In Proceedings of the Artificial Intelligence and Innovations 2007: From Theory to Applications: Proceedings of the 4th IFIP International Conference on Artificial Intelligence Applications and Innovations (AIAI 2007) 4, Paphos, Cyprus, 19–21 September 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 375–388.

- Hu, C.; Gu, S.; Yang, M.; Han, G.; Lai, C.S.; Gao, M.; Yang, Z.; Ma, G. MDEmoNet: A Multimodal Driver Emotion Recognition Network for Smart Cockpit. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2024; pp. 1–6.

- Guo, Z.; Liu, H.; Wang, Q.; Yang, J. A fast algorithm face detection and head pose estimation for driver assistant system. In Proceedings of the 2006 8th International Conference on Signal Processing, Guilin, China, 16–20 November 2006; Volume 3.

- Wu, J.; Trivedi, M.M. A two-stage head pose estimation framework and evaluation. Pattern Recognit. 2008, 41, 1138–1158.

- Tawari, A.; Martin, S.; Trivedi, M.M. Continuous head movement estimator for driver assistance: Issues, algorithms, and on-road evaluations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 818–830.

- Firintepe, A.; Selim, M.; Pagani, A.; Stricker, D. The more, the merrier? A study on in-car IR-based head pose estimation. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1060–1065.

- Kondyli, A.; Sisiopiku, V.P.; Zhao, L.; Barmpoutis, A. Computer assisted analysis of drivers’ body activity using a range camera. IEEE Intell. Transp. Syst. Mag. 2015, 7, 18–28.

- Demirdjian, D.; Varri, C. Driver pose estimation with 3D Time-of-Flight sensor. In Proceedings of the 2009 IEEE Workshop on Computational Intelligence in Vehicles and Vehicular Systems, Nashville, TN, USA, 30 March–2 April 2009; pp. 16–22.

- Vergnano, A.; Leali, F. A methodology for out of position occupant identification from pressure sensors embedded in a vehicle seat. Hum.-Intell. Syst. Integr. 2020, 2, 35–44.

- Ziraknejad, N.; Lawrence, P.D.; Romilly, D.P. Vehicle occupant head position quantification using an array of capacitive proximity sensors. IEEE Trans. Veh. Technol. 2014, 64, 2274–2287.

- Pullano, S.A.; Fiorillo, A.S.; La Gatta, A.; Lamonaca, F.; Carni, D.L. Comprehensive system for the evaluation of the attention level of a driver. In Proceedings of the 2016 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Benevento, Italy, 15–18 May 2016; pp. 1–5.

- Alam, L.; Hoque, M.M. Real-time distraction detection based on driver’s visual features. In Proceedings of the 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh, 7–9 February 2019; pp. 1–6.

- Nambi, A.U.; Bannur, S.; Mehta, I.; Kalra, H.; Virmani, A.; Padmanabhan, V.N.; Bhandari, R.; Raman, B. HAMS: Driver and driving monitoring using a smartphone. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 840–842.

- Shang, Y.; Yang, M.; Cui, J.; Cui, L.; Huang, Z.; Li, X. Driver emotion and fatigue state detection based on time series fusion. Electronics 2022, 12, 26.

- Xiao, H.; Li, W.; Zeng, G.; Wu, Y.; Xue, J.; Zhang, J.; Li, C.; Guo, G. On-road driver emotion recognition using facial expression. Appl. Sci. 2022, 12, 807.

- Azadani, M.N.; Boukerche, A. Driving behavior analysis guidelines for intelligent transportation systems. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6027–6045.

- Ekman, P.; Friesen, W.V.; O’sullivan, M.; Chan, A.; Diacoyanni-Tarlatzis, I.; Heider, K.; Krause, R.; LeCompte, W.A.; Pitcairn, T.; Ricci-Bitti, P.E.; et al. Universals and cultural differences in the judgments of facial expressions of emotion. J. Personal. Soc. Psychol. 1987, 53, 712.

- Ekman, P.E.; Davidson, R.J. The Nature of Emotion: Fundamental Questions; Oxford University Press: New York, NY, USA, 1994.

- Mercedes-Benz Group. Mercedes-Benz Takes in-Car Voice Control to a New Level with ChatGPT. 2023. Available online: https://group.mercedes-benz.com/innovation/digitalisation/connectivity/car-voice-control-with-chatgpt.html (accessed on 31 March 2024).

- Szabó, D. Robot-Wearable Conversation Hand-off for AI Navigation Assistant. Master’s Thesis, University of Oulu, Oulu, Finland, 2024.

- Liang, S.; Yu, L. Voice search behavior under human–vehicle interaction context: An exploratory study. Library Hi Tech 2023.

- Zhou, X.; Zheng, Y. Research on Personality Traits of In-Vehicle Intelligent Voice Assistants to Enhance Driving Experience. In Proceedings of the International Conference on Human-Computer Interaction, Lleida, Spain, 4–6 September 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 236–244.

- Lee, K.M.; Moon, Y.; Park, I.; Lee, J.G. Voice orientation of conversational interfaces in vehicles. Behav. Inf. Technol. 2024, 43, 433–444.

- Pinnoji, A.; Ujwala, M.; Sathvik, D.; Reddy, Y.S.; Nivas, M.L. Internet Based Human Vehicle Interface. J. Surv. Fish. Sci. 2023, 10, 2762–2766.

- Lang, J.; Jouen, F.; Tijus, C.; Uzan, G. Design of a Virtual Assistant: Collect of User’s Needs for Connected and Automated Vehicles. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 157–170.

- Rosekind, M.R.; Gander, P.H.; Gregory, K.B.; Smith, R.M.; Miller, D.L.; Oyung, R.; Webbon, L.L.; Johnson, J.M. Managing fatigue in operational settings 1: Physiological considerations and counter-measures. Hosp. Top. 1997, 75, 23–30.

- Large, D.R.; Burnett, G.; Antrobus, V.; Skrypchuk, L. Driven to discussion: Engaging drivers in conversation with a digital assistant as a countermeasure to passive task-related fatigue. IET Intell. Transp. Syst. 2018, 12, 420–426.

- Wong, P.N.; Brumby, D.P.; Babu, H.V.R.; Kobayashi, K. Voices in self-driving cars should be assertive to more quickly grab a distracted driver’s attention. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; pp. 165–176.

- Ji, W.; Liu, R.; Lee, S. Do drivers prefer female voice for guidance? An interaction design about information type and speaker gender for autonomous driving car. In Proceedings of the HCI in Mobility, Transport, and Automotive Systems: First International Conference, MobiTAS 2019, Held as Part of the 21st HCI International Conference, HCII 2019, Orlando, FL, USA, 26–31 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 208–224.

- Politis, I.; Langdon, P.; Adebayo, D.; Bradley, M.; Clarkson, P.J.; Skrypchuk, L.; Mouzakitis, A.; Eriksson, A.; Brown, J.W.; Revell, K.; et al. An evaluation of inclusive dialogue-based interfaces for the takeover of control in autonomous cars. In Proceedings of the 23rd International Conference on Intelligent User Interfaces, Tokyo, Japan, 7–11 March 2018; pp. 601–606.

- Meucci, F.; Pierucci, L.; Del Re, E.; Lastrucci, L.; Desii, P. A real-time siren detector to improve safety of guide in traffic environment. In Proceedings of the 2008 16th European Signal Processing Conference, Lausanne, Switzerland, 25–29 August 2008; pp. 1–5.

- Tran, V.T.; Tsai, W.H. Acoustic-based emergency vehicle detection using convolutional neural networks. IEEE Access 2020, 8, 75702–75713.

- Park, H.; Kim, K.H. Efficient information representation method for driver-centered AR-HUD system. In Proceedings of the Design, User Experience, and Usability. User Experience in Novel Technological Environments: Second International Conference, DUXU 2013, Held as Part of HCI International 2013, Las Vegas, NV, USA, 21–26 July 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 393–400.

- Park, H.S.; Park, M.W.; Won, K.H.; Kim, K.H.; Jung, S.K. In-vehicle AR-HUD system to provide driving-safety information. ETRI J. 2013, 35, 1038–1047.

- Gabbard, J.L.; Fitch, G.M.; Kim, H. Behind the glass: Driver challenges and opportunities for AR automotive applications. Proc. IEEE 2014, 102, 124–136.

- An, Z.; Xu, X.; Yang, J.; Liu, Y.; Yan, Y. A real-time three-dimensional tracking and registration method in the AR-HUD system. IEEE Access 2018, 6, 43749–43757.

- Tasaki, T.; Moriya, A.; Hotta, A.; Sasaki, T.; Okumura, H. Depth perception control by hiding displayed images based on car vibration for monocular head-up display. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 323–324.

- Jiang, J.; Huang, Z.; Qian, W.; Zhang, Y.; Liu, Y. Registration technology of augmented reality in oral medicine: A review. IEEE Access 2019, 7, 53566–53584.

- Gabbard, J.L.; Swan, J.E.; Hix, D.; Kim, S.J.; Fitch, G. Active text drawing styles for outdoor augmented reality: A user-based study and design implications. In Proceedings of the 2007 IEEE Virtual Reality Conference, Charlotte, NC, USA, 10–14 March 2007; pp. 35–42.

- Broy, N.; Guo, M.; Schneegass, S.; Pfleging, B.; Alt, F. Introducing novel technologies in the car: Conducting a real-world study to test 3D dashboards. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Nottingham, UK, 1–3 September 2015; pp. 179–186.

- Malcolm, L.; Herriotts, P. I can see clearly now: Developing a camera-based automotive rear-view mirror using a human-centred philosophy. Ergon. Des. 2024, 32, 14–18.

- Pan, J.; Appia, V.; Villarreal, J.; Weaver, L.; Kwon, D.K. Rear-stitched view panorama: A low-power embedded implementation for smart rear-view mirrors on vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 20–29.

- Mellon, T.R.P. Using Digital Human Modeling to Evaluate and Improve Car Pillar Design: A Proof of Concept and Design of Experiments. Master’s Thesis, Oregon State University, Corvallis, OR, USA, 2021.

- Srinivasan, S. Early Design Evaluation of See-Through Automotive A-pillar Concepts Using Digital Human Modeling and Mixed Reality Techniques. Master’s Thesis, Oregon State University, Corvallis, OR, USA, 2022.

- Srinivasan, S.; Demirel, H.O. Quantifying vision obscuration of a-pillar concept variants using digital human modeling. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, St. Louis, MO, USA, 14–17 August 2022; American Society of Mechanical Engineers: New York, NY, USA, 2022; Volume 86212, p. V002T02A049.

- Kaboli, M.; Long, A.; Cheng, G. Humanoids learn touch modalities identification via multi-modal robotic skin and robust tactile descriptors. Adv. Robot. 2015, 29, 1411–1425.

- Kaboli, M.; Mittendorfer, P.; Hügel, V.; Cheng, G. Humanoids learn object properties from robust tactile feature descriptors via multi-modal artificial skin. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; pp. 187–192.

- Kaboli, M.; De La Rosa T, A.; Walker, R.; Cheng, G. In-hand object recognition via texture properties with robotic hands, artificial skin, and novel tactile descriptors. In Proceedings of the 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), Seoul, Republic of Korea, 3–5 November 2015; pp. 1155–1160.

- Kaboli, M.; Cheng, G. Dexterous hands learn to re-use the past experience to discriminate in-hand objects from the surface texture. In Proceedings of the 33rd Annual Conference of the Robotics Society of Japan (RSJ 2015), Tokyo, Japan, 5 September 2015.

- Kaboli, M.; Yao, K.; Feng, D.; Cheng, G. Tactile-based active object discrimination and target object search in an unknown workspace. Auton. Robot. 2019, 43, 123–152.

- Kaboli, M.; Feng, D.; Yao, K.; Lanillos, P.; Cheng, G. A tactile-based framework for active object learning and discrimination using multimodal robotic skin. IEEE Robot. Autom. Lett. 2017, 2, 2143–2150.

- Hirokawa, M.; Uesugi, N.; Furugori, S.; Kitagawa, T.; Suzuki, K. Effect of haptic assistance on learning vehicle reverse parking skills. IEEE Trans. Haptics 2014, 7, 334–344.

- Katzourakis, D.I.; de Winter, J.C.; Alirezaei, M.; Corno, M.; Happee, R. Road-departure prevention in an emergency obstacle avoidance situation. IEEE Trans. Syst. Man, Cybern. Syst. 2013, 44, 621–629.

- Adell, E.; Várhelyi, A.; Hjälmdahl, M. Auditory and haptic systems for in-car speed management–A comparative real life study. Transp. Res. Part F Traffic Psychol. Behav. 2008, 11, 445–458.

- Hwang, J.; Chung, K.; Hyun, J.; Ryu, J.; Cho, K. Development and evaluation of an in-vehicle haptic navigation system. In Proceedings of the Information Technology Convergence, Secure and Trust Computing, and Data Management: ITCS 2012 & STA 2012, Gwangju, Republic of Korea, 6–8 September 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 47–53.

- Pieraccini, R.; Dayanidhi, K.; Bloom, J.; Dahan, J.G.; Phillips, M.; Goodman, B.R.; Prasad, K.V. A multimodal conversational interface for a concept vehicle. New Sch. Psychol. Bull. 2003, 1, 9–24.

- Pfleging, B.; Schneegass, S.; Schmidt, A. Multimodal interaction in the car: Combining speech and gestures on the steering wheel. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Portsmouth, NH, USA, 17–19 October 2012; pp. 155–162.

- Braun, M.; Broy, N.; Pfleging, B.; Alt, F. Visualizing natural language interaction for conversational in-vehicle information systems to minimize driver distraction. J. Multimodal User Interfaces 2019, 13, 71–88.

- Jung, J.; Lee, S.; Hong, J.; Youn, E.; Lee, G. Voice+Tactile: Augmenting in-vehicle voice user interface with tactile touchpad interaction. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI 2020), Honolulu, HI, USA, 25–30 April 2020.

- Lee, S.H.; Yoon, S.O. User interface for in-vehicle systems with on-wheel finger spreading gestures and head-up displays. J. Comput. Des. Eng. 2020, 7, 700–721.

- Ma, J.; Ding, Y. The Impact of In-Vehicle Voice Interaction System on Driving Safety. J. Phys. Conf. Ser. 2021, 1802, 042083.

- Lenstrand, M. Human-Centered Design of AI-driven Voice Assistants for Autonomous Vehicle Interactions. J. Bioinform. Artif. Intell. 2023, 3, 37–55.

- Mahmood, A.; Wang, J.; Yao, B.; Wang, D.; Huang, C.M. LLM-Powered Conversational Voice Assistants: Interaction Patterns, Opportunities, Challenges, and Design Guidelines. arXiv 2023, arXiv:2309.13879.

- Abbott, K. Voice Enabling Web Applications: VoiceXML and Beyond; Springer: Berlin/Heidelberg, Germany, 2002.

- Yankelovich, N.; Levow, G.A.; Marx, M. Designing SpeechActs: Issues in speech user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 7–11 May 1995; pp. 369–376.

- Begany, G.M.; Sa, N.; Yuan, X. Factors affecting user perception of a spoken language vs. textual search interface: A content analysis. Interact. Comput. 2016, 28, 170–180.

- Corbett, E.; Weber, A. What can I say? addressing user experience challenges of a mobile voice user interface for accessibility. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 72–82.

- Kim, J.; Ryu, J.H.; Han, T.M. Multimodal interface based on novel HMI UI/UX for in-vehicle infotainment system. ETRI J. 2015, 37, 793–803.

- Zhang, R.; Qin, H.; Li, J.T.; Chen, H.B. Influence of Position and Interface for Central Control Screen on Driving Performance of Electric Vehicle. In Proceedings of the HCI in Mobility, Transport, and Automotive Systems. Automated Driving and In-Vehicle Experience Design: Second International Conference, MobiTAS 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 445–452.

- Hock, P.; Benedikter, S.; Gugenheimer, J.; Rukzio, E. CarVR: Enabling in-car virtual reality entertainment. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 4034–4044.

- Hjorth, B. EEG analysis based on time domain properties. Electroencephalogr. Clin. Neurophysiol. 1970, 29, 306–310.

- Gaffary, Y.; Lécuyer, A. The use of haptic and tactile information in the car to improve driving safety: A review of current technologies. Front. ICT 2018, 5, 5.

- Nukarinen, T.; Raisamo, R.; Farooq, A.; Evreinov, G.; Surakka, V. Effects of directional haptic and non-speech audio cues in a cognitively demanding navigation task. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational, Helsinki, Finland, 26–30 October 2014; pp. 61–64.

- Mohebbi, R.; Gray, R.; Tan, H.Z. Driver Reaction Time to Tactile and Auditory Rear-End Collision Warnings While Talking on a Cell Phone. Hum. Factors 2009, 51, 102–110.

- Haas, E.C.; van Erp, J.B. Multimodal warnings to enhance risk communication and safety. Saf. Sci. 2014, 61, 29–35.

- Thorslund, B.; Peters, B.; Herbert, N.; Holmqvist, K.; Lidestam, B.; Black, A.; Lyxell, B. Hearing loss and a supportive tactile signal in a navigation system: Effects on driving behavior and eye movements. J. Eye Mov. Res. 2013, 6, 5.

- Hancock, P.A.; Mercado, J.E.; Merlo, J.; Van Erp, J.B. Improving target detection in visual search through the augmenting multi-sensory cues. Ergonomics 2013, 56, 729–738.

- Design, H. What Does an Automotive Human-Machine Interaction System Mean? What Functions Does It Include? 2020. Available online: https://www.faceui.com/hmi/detail/205.html (accessed on 10 July 2024).

- Peng, L. BMW Natural Interaction System Debuts at MWC, Enabling Interaction with the Vehicle’s Surrounding Environment. 2019. Available online: https://cn.technode.com/post/2019-02-27/bmw-mwc/ (accessed on 10 July 2024).

- Müller, A.L.; Fernandes-Estrela, N.; Hetfleisch, R.; Zecha, L.; Abendroth, B. Effects of non-driving related tasks on mental workload and take-over times during conditional automated driving. Eur. Transp. Res. Rev. 2021, 13, 16.

- Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. GLM: General language model pretraining with autoregressive blank infilling. arXiv 2021, arXiv:2103.10360.

- Wang, C.; Liu, X.; Chen, Z.; Hong, H.; Tang, J.; Song, D. DeepStruct: Pretraining of language models for structure prediction. arXiv 2022, arXiv:2205.10475.

- Liu, M.; Zhao, Z.; Qi, B. Research on Intelligent Cabin Design of Camper Vehicle Based on Kano Model and Generative AI. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 156–168.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. In Proceedings of the 37th International Conference on Neural Information Processing Systems (NIPS ’23), New Orleans, LA, USA, 10–16 December 2023; Curran Associates Inc.: Red Hook, NY, USA, 2024. Article 1516. pp. 34892–34916.

| Level | Main Features | Human–Machine Interaction | Scenario Expansion | Connected Services | Expected Date |
|---|---|---|---|---|---|
| Level 0 Traditional Cockpit | Tasks are executed in in-cockpit scenarios; the cockpit passively responds to driver and passenger needs; it provides in-vehicle service capabilities. | Passive Interaction | Partial In-Cockpit Scenarios | In-Vehicle Infotainment System | – |
| Level 1 Assisted Intelligent Cockpit | Tasks are executed in in-cockpit scenarios; the cockpit actively perceives the driver and occupants in some scenarios; task execution requires driver authorization; it provides driver- and passenger-oriented cabin service capabilities. | Cockpit Authorized Interaction | Partial In-Cockpit Scenarios | Cabin Services | 2021 |
| Level 2 Partially Cognitive Intelligent Cockpit | Tasks are executed in some in-cockpit and out-cockpit scenarios; the cockpit actively perceives the driver and occupants in some scenarios; task execution is partially active; it provides open connected cloud service capabilities. | Partially Active Interaction | Partial In-Cockpit and Out-Cockpit Scenarios | Open Connected Cloud Services | 2022 to 2026 |
| Level 3 Highly Cognitive Intelligent Cockpit | Tasks are executed in all in-cockpit and some out-cockpit scenarios; the cockpit actively perceives the driver and occupants in all in-cockpit scenarios; some tasks are executed actively; it provides cloud control platform service capabilities. | Partially Active Interaction | All In-Cockpit and Partial Out-Cockpit Scenarios | Cloud Control Platform Services | 2027 to 2031 |
| Level 4 Fully Cognitive Intelligent Cockpit | Tasks are executed in all in-cockpit and out-cockpit scenarios; the cockpit actively perceives the driver and occupants in all in-cockpit scenarios; all tasks can be executed actively; it provides societal-level service capabilities. | Fully Active Interaction | All In-Cockpit and Out-Cockpit Scenarios | Societal-Level Services | 2032 onward |

| Category | Effectiveness | Works | Main Contents |
|---|---|---|---|
| Voice-based Interaction | Voice Assistant | [9] | BMW’s intelligent personal assistant. |
| | | [154] | Mercedes-Benz’s MBUX voice assistant combined with ChatGPT. |
| | | [155,156,157,158,159,160] | Voice assistants (VAs) aid in navigation, entertainment, communication, and V2V communication. |
| | Alertness | [161] | Engaging in conversation improves driver alertness more effectively. |
| | | [162] | A Wizard-of-Oz study validated that brief, intermittent VA conversations improve alertness more effectively. |
| | | [163] | Simulator experiments demonstrated that a VA with a confident tone of voice better captures the driver’s attention. |
| | | [164] | Female voices are perceived as more trustworthy, acceptable, and pleasant. |
| | Takeover Efficiency | [165] | Evaluated the impact of voice interfaces on driver takeover response time using a driving simulator. |
| | External Sound Recognition | [166] | Proposed a signal-processing-based pitch detection algorithm to identify emergency vehicle sirens in real time. |
| | | [167] | Detect emergency vehicle sirens using convolutional neural networks. |
| Display-based Interaction | HUD and HMD Display | [168,169] | Use stereo cameras to capture forward images and detect vehicles and pedestrians in real time with a support vector machine (SVM). |
| | | [170] | Achieving registration requires recognizing the poses of the vehicle and the driver’s head and eyes, as well as external targets. |
| | | [171] | Established a real-time registration method for 3D tracking of virtual images against the environment using image feature information. |
| | | [172] | Improve depth perception accuracy by hiding HUD images during vehicle vibrations and exploiting the continuity of human vision. |
| | | [173] | Comprehensively reviewed registration methods for AR systems. |
| | | [174] | Adaptively adjust AR HUD brightness and color based on ambient light. |
| | 3D Display | [175] | Explored autostereoscopic 3D vehicle dashboards, where quasi-3D displays show better performance. |
| | Rearview Mirror | [176] | Developed a user-centered, camera-based interior rearview mirror that switches between a traditional glass mirror and a display screen. |
| | | [177] | Proposed a rear-stitched panoramic view system using four rear cameras. |
| | A-Pillar | [178,179,180] | Improve A-pillar visibility with simple, regular cutouts. |
| | | [86] | Project real-time images onto the A-pillar to show the driver the external environment blocked by the A-pillar. |
| Haptic-based Interaction | Haptic Descriptor | [181,182,183] | Use haptic descriptors to assist machine learning in classification. |
| | | [182,184] | Use haptic descriptors and machine learning to classify actions and gestures. |
| | | [185,186] | Use methods such as active learning and active recognition to improve the accuracy of object recognition from haptic information. |
| | Sensor Perception | [87] | Adopted a new active armrest with capacitive proximity sensors to enable touch and gesture recognition and interaction. |
| | Haptic Warning Feedback | [187] | Provide haptic assistance through steering wheel torque during reversing training to prevent collisions while parking. |
| | | [188] | Evaluate haptic feedback for lane departure using a driving simulator. |
| | | [189] | Assess the impact of haptic feedback on the accelerator pedal on speeding behavior through speed records and driver interviews. |
| | Haptic Information Feedback | [88] | Use a wearable belt with eight haptic devices for navigation assistance. |
| | | [190] | Embed a 5 × 5 haptic matrix in the seat to convey navigation directions. |
| Multimodal Directing Interaction | Voice + Visual + Haptic | [191] | Combine voice with visual or haptic feedback to establish a more natural interactive dialogue system. |
| | Voice + Gesture | [192] | Combine voice and gestures to control in-vehicle functions. |
| | Voice + Visual | [193] | Enhance voice interactions by combining voice and visual feedback with visualized dialogues. |
| | Haptic + Voice | [194] | Enhance the voice user interface (VUI) with multi-touch input and high-resolution haptic output. |
| | Gesture + Visual | [195] | Combine gestures with visual modes (such as the HUD) to control menus. |
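Among the works listed above, [166] recognizes emergency vehicle sirens in real time with a signal-processing pitch detector. The Python sketch below illustrates one common way to build such a detector under stated assumptions; it is not the algorithm of [166]. The pitch of each frame is estimated by autocorrelation, and a signal is flagged as a siren when most frames are periodic within an assumed siren band and the pitch track sweeps over time. The sample rate, frame length, 500–1800 Hz band, voicing and sweep thresholds, and the function names `estimate_pitch` and `is_siren` are hypothetical choices made for illustration only.

```python
import math
from typing import List, Optional, Sequence

# All constants below are illustrative assumptions, not values from [166].
FS = 16_000                   # sample rate (Hz)
FRAME = 1024                  # samples per analysis frame (64 ms at 16 kHz)
SIREN_BAND = (500.0, 1800.0)  # assumed range of siren fundamentals (Hz)


def estimate_pitch(frame: Sequence[float], fs: int = FS,
                   fmin: float = SIREN_BAND[0], fmax: float = SIREN_BAND[1],
                   voicing: float = 0.5) -> Optional[float]:
    """Autocorrelation pitch estimate in [fmin, fmax]; None if not periodic."""
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]
    energy = sum(s * s for s in x)  # equals r[0]
    if energy == 0.0:
        return None
    lag_min = max(2, math.ceil(fs / fmax))
    lag_max = min(n - 2, int(fs / fmin))
    # r[k] = sum_i x[i] * x[i + k], computed one lag beyond each end of the
    # search range so every candidate lag has two neighbours.
    r = {k: sum(x[i] * x[i + k] for i in range(n - k))
         for k in range(lag_min - 1, lag_max + 2)}
    peaks = [k for k in range(lag_min, lag_max + 1)
             if r[k - 1] < r[k] >= r[k + 1]]
    if not peaks:
        return None
    top = max(r[k] for k in peaks)
    if top / energy < voicing:  # weak periodicity: treat frame as unvoiced
        return None
    # Shortest-lag peak that is nearly as strong as the strongest one; this
    # avoids octave-down errors (locking onto twice the true period).
    best = next(k for k in peaks if r[k] >= 0.9 * top)
    # Parabolic interpolation around the peak for sub-sample lag resolution.
    a, b, c = r[best - 1], r[best], r[best + 1]
    denom = a - 2.0 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0.0 else 0.0
    shift = max(-0.5, min(0.5, shift))
    return fs / (best + shift)


def is_siren(signal: Sequence[float], fs: int = FS, frame: int = FRAME,
             min_voiced: float = 0.8, min_sweep_hz: float = 200.0) -> bool:
    """Flag a sweeping siren: mostly in-band periodic frames whose pitch varies."""
    pitches: List[Optional[float]] = [
        estimate_pitch(signal[i:i + frame], fs)
        for i in range(0, len(signal) - frame + 1, frame)
    ]
    voiced = [p for p in pitches if p is not None]
    if not pitches or len(voiced) / len(pitches) < min_voiced:
        return False
    # Assumed criterion: a steady tone has a flat pitch track; a siren sweeps.
    return max(voiced) - min(voiced) >= min_sweep_hz
```

For example, a one-second tone sweeping linearly from 700 Hz to 1400 Hz is flagged, whereas a steady 1000 Hz tone is not, because its pitch track is flat. Requiring a pitch sweep rather than a single in-band pitch is one simple way to separate sweeping sirens from steady tonal sounds; a deployed system would also have to cope with road noise, Doppler shift, and overlapping sound sources.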

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, F.; Ge, X.; Li, J.; Fan, Y.; Li, Y.; Zhao, R. Intelligent Cockpits for Connected Vehicles: Taxonomy, Architecture, Interaction Technologies, and Future Directions. Sensors 2024, 24, 5172. https://doi.org/10.3390/s24165172
