A Survey on Multi-Sensor Fusion Perimeter Intrusion Detection in High-Speed Railways

Abstract

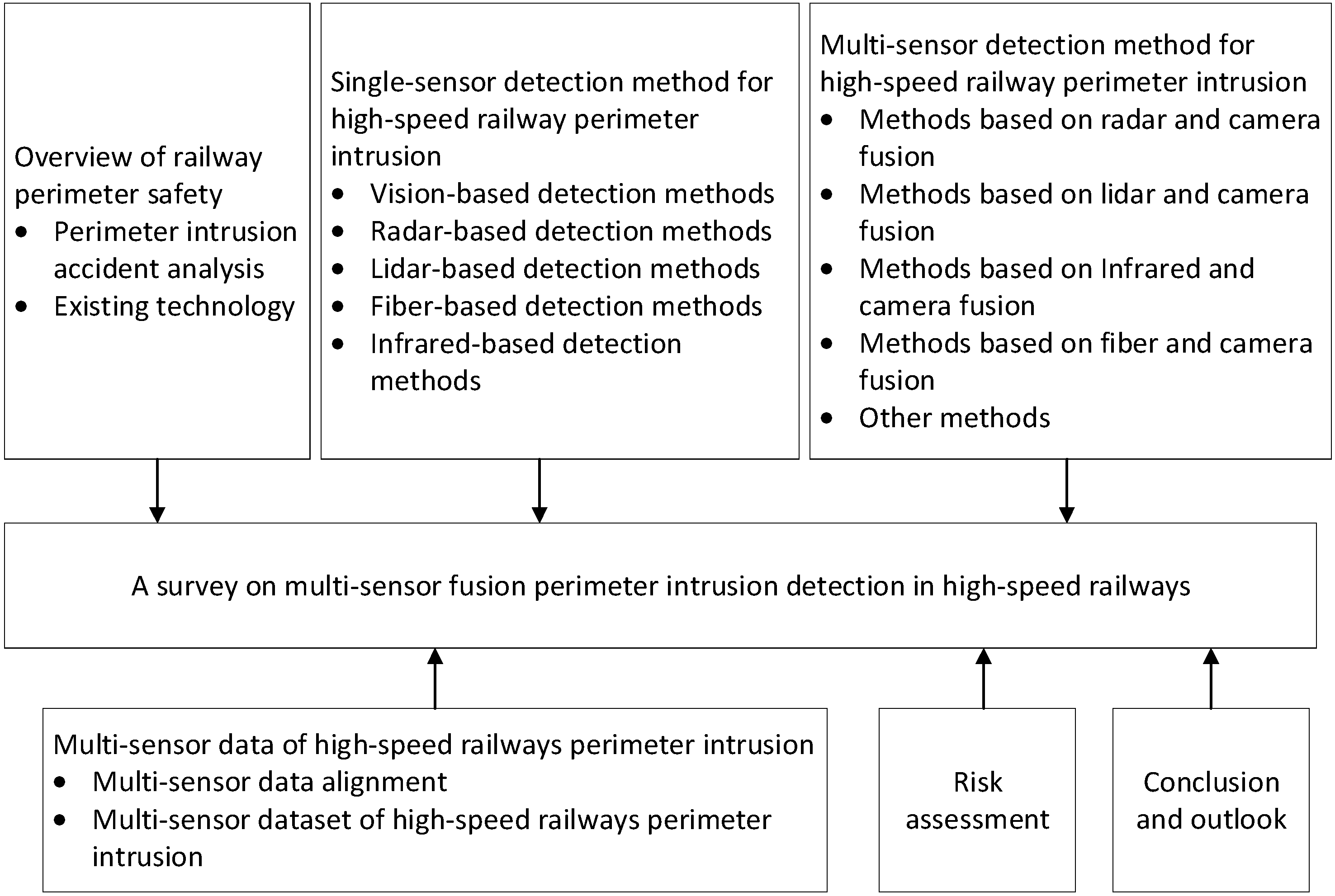

1. Outline

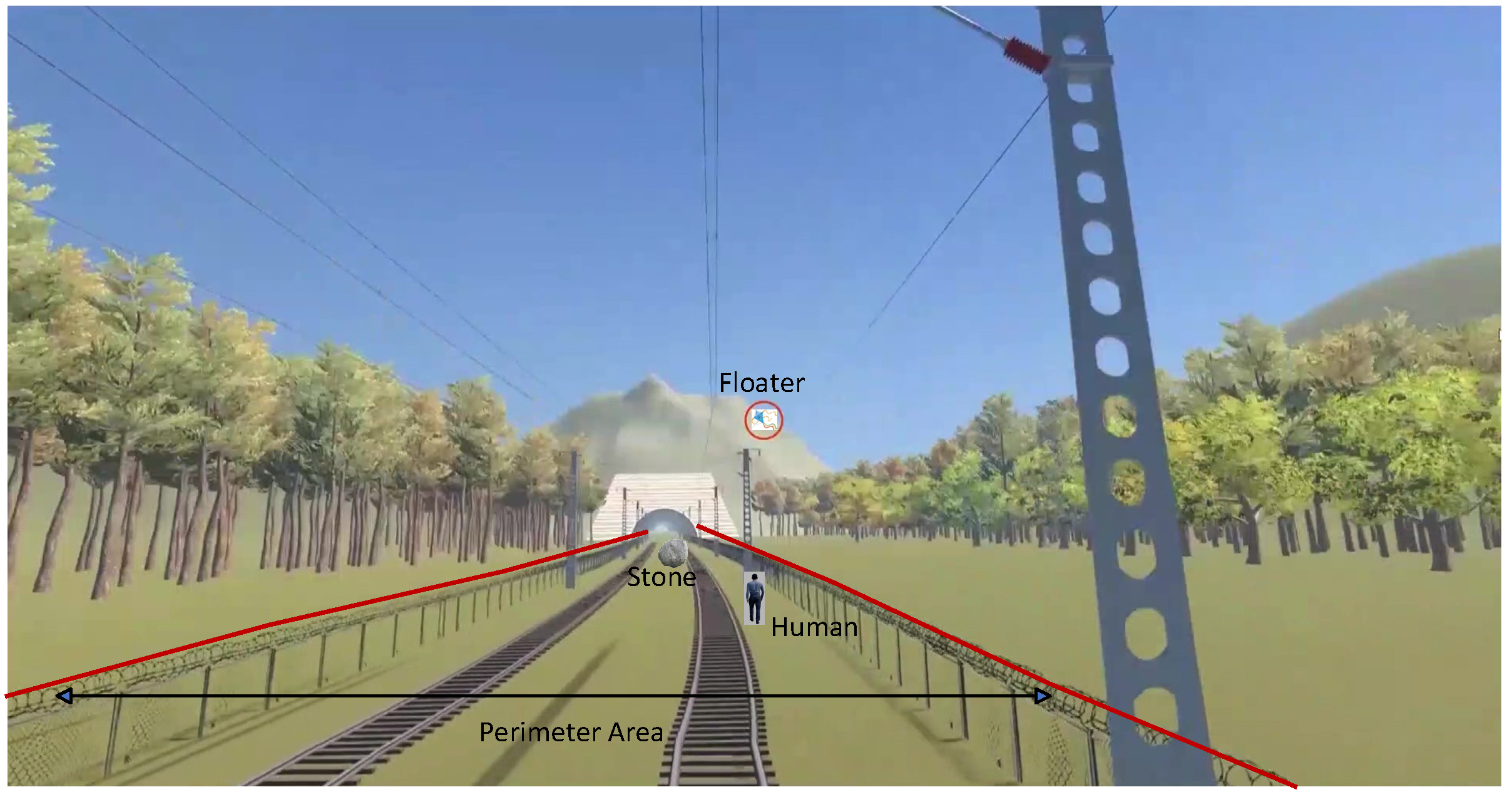

2. Overview of High-Speed Rail Perimeter Intrusion Security Issues

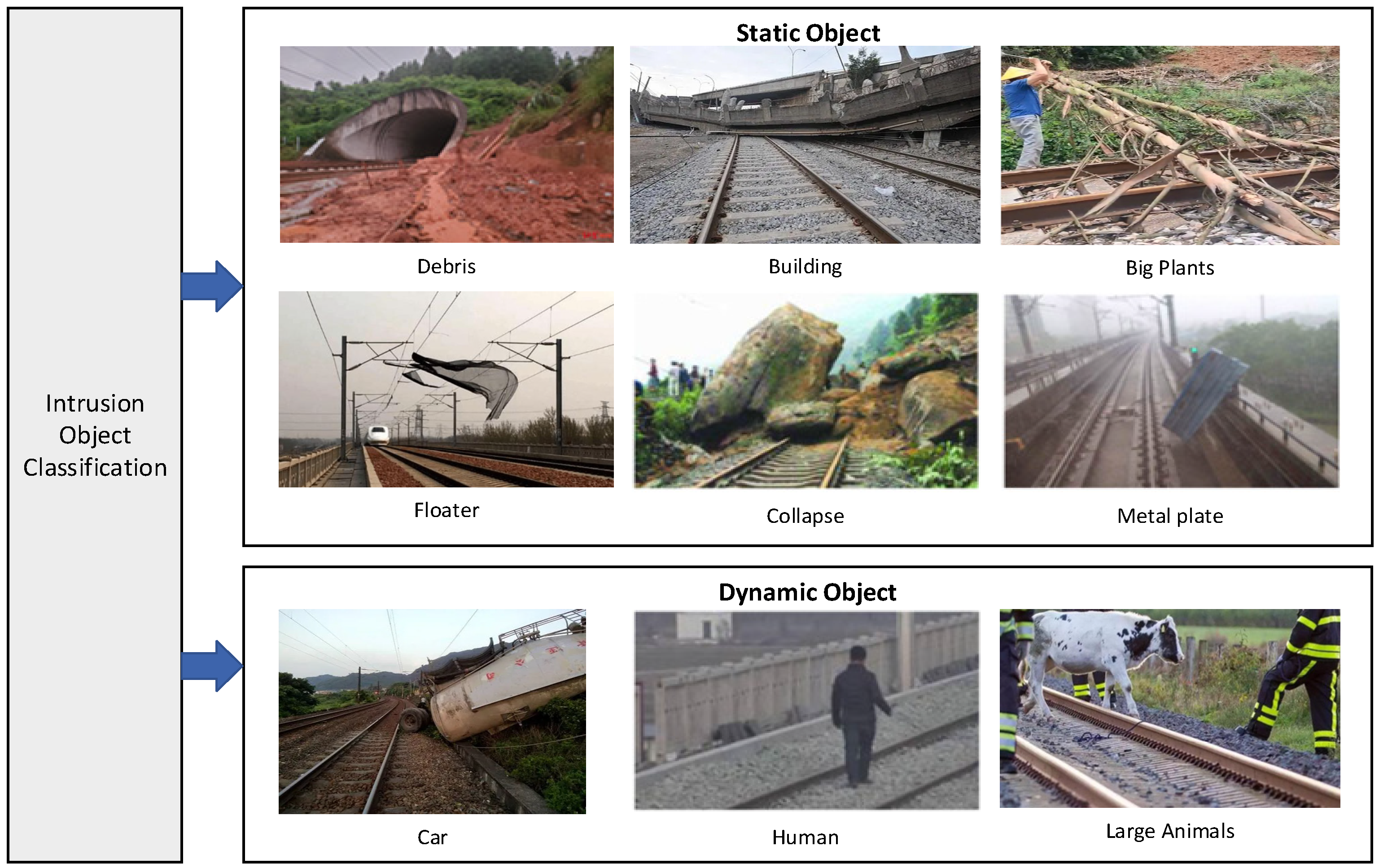

2.1. Perimeter Intrusion Event Analysis

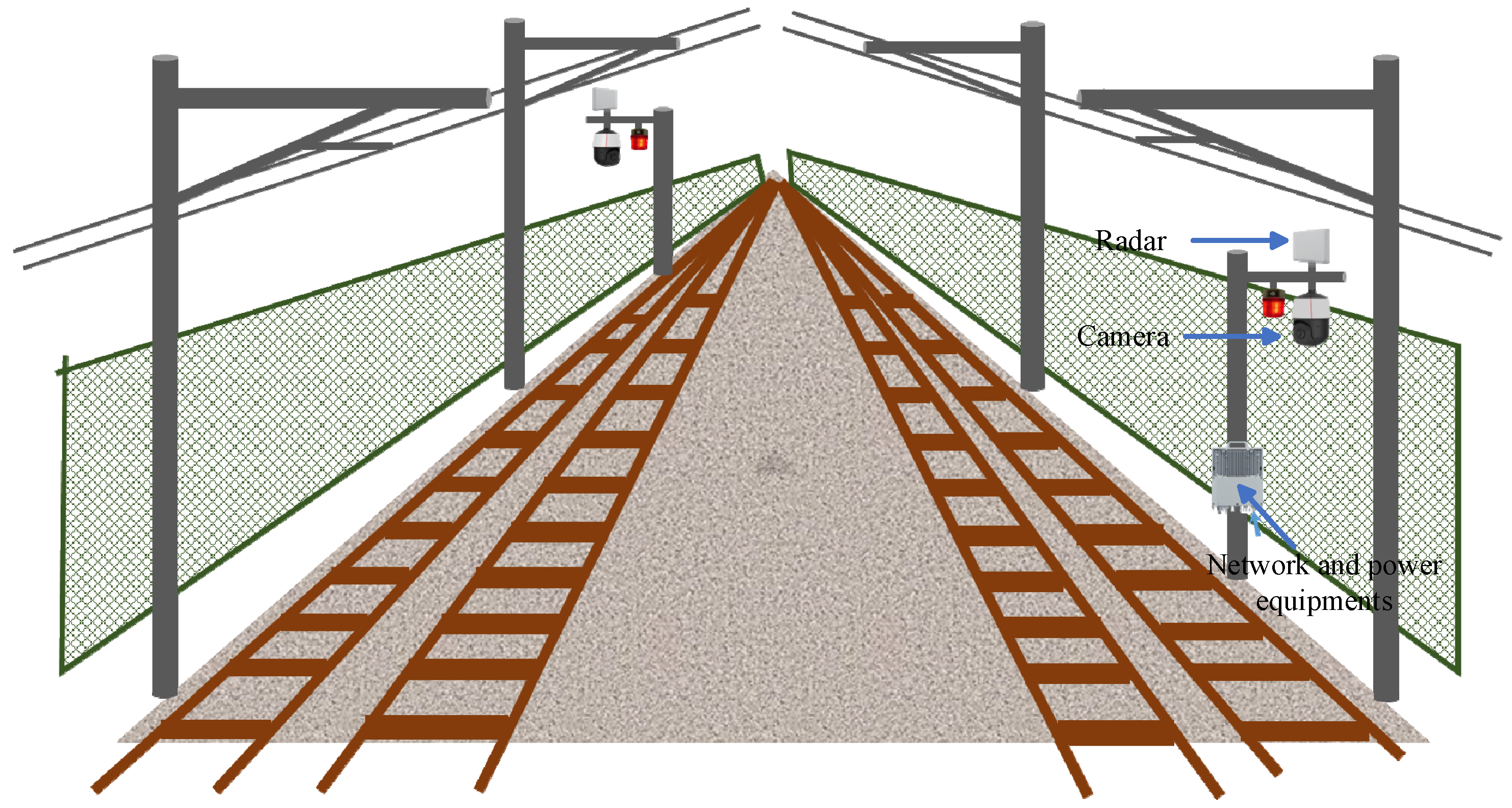

2.2. Existing Security Methods

3. Single-Mode Identification Method for High-Speed Rail Perimeter Intrusion

3.1. Vision-Based Detection Method

3.2. Radar-Based Detection Method

3.3. Lidar-Based Detection Method

3.4. Fiber-Based Detection Method

3.5. Infrared-Based Detection Method

3.6. Summary

4. Multi-Sensor Identification Method for High-Speed Rail Perimeter Intrusion

4.1. Methods Based on Radar and Camera Fusion

4.2. Methods Based on Lidar and Camera Fusion

4.3. Methods Based on Infrared and Camera Fusion

4.4. Methods Based on Fiber and Camera Fusion

4.5. Other Methods

5. High-Speed Rail Perimeter Intrusion Multi-Sensor Data

5.1. Multi-Sensor Data Alignment Method

5.2. Railway Scene Multi-Sensor Dataset

6. Risk Assessment of Railway Safety

7. Conclusions

(1) Reliable perception under severe weather conditions

(2) Accurate recognition using multi-sensor fusion data

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sensor | Pros | Cons |
|---|---|---|
| Video | Strong recognition ability. Easy to deploy and maintain. | Poor generalization across intrusion target types. Poor adaptability to adverse weather. |
| Radar | Strong environmental adaptability. Long detection range for moving objects. | Sparse point clouds that are difficult to process. |
| Lidar | High ranging accuracy. Unaffected by lighting conditions. | Recognition performance degrades in fog. Relatively high cost. |
| Fiber | Low false negative rate. Strong environmental adaptability. | High false alarm rate. Poor positioning accuracy. |
| Infrared | Good nighttime detection performance. Wide field of view. | Poor detection when the target has little temperature contrast with the background. Cannot identify fine-grained features. |
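The complementary error profiles in the table (for example, fiber's low false negative rate but high false alarm rate, set against video's strong recognition ability but weather sensitivity) are the usual argument for decision-level fusion. As a rough illustration only, the Python sketch below computes system-level false alarm and miss probabilities for two binary detectors combined with an AND rule (alarm only when both fire) or an OR rule (alarm when either fires). It assumes the two sensors make statistically independent errors, which is an idealization; the function name `fuse_decisions`, the `SensorRates` type, and the example rates are hypothetical and are not taken from any system surveyed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorRates:
    """Per-event error probabilities for one binary detector (hypothetical values)."""
    false_alarm: float  # P(alarm | no intrusion)
    miss: float         # P(no alarm | intrusion)


def fuse_decisions(a: SensorRates, b: SensorRates, rule: str) -> SensorRates:
    """Combine two binary detectors, ASSUMING their errors are independent.

    AND: alarm only if both alarm, so false alarms multiply and a miss by
         either sensor becomes a system miss.
    OR:  alarm if either alarms, so misses multiply and a false alarm by
         either sensor becomes a system false alarm.
    """
    for r in (a, b):
        if not (0.0 <= r.false_alarm <= 1.0 and 0.0 <= r.miss <= 1.0):
            raise ValueError("rates must be probabilities in [0, 1]")
    if rule == "and":
        fa = a.false_alarm * b.false_alarm
        miss = 1.0 - (1.0 - a.miss) * (1.0 - b.miss)
    elif rule == "or":
        fa = 1.0 - (1.0 - a.false_alarm) * (1.0 - b.false_alarm)
        miss = a.miss * b.miss
    else:
        raise ValueError("rule must be 'and' or 'or'")
    return SensorRates(false_alarm=fa, miss=miss)


if __name__ == "__main__":
    # Hypothetical rates: a sensitive but noisy fiber channel and a more
    # selective camera channel.
    fiber = SensorRates(false_alarm=0.20, miss=0.01)
    camera = SensorRates(false_alarm=0.05, miss=0.10)
    for rule in ("and", "or"):
        fused = fuse_decisions(fiber, camera, rule)
        print(f"{rule.upper()}: false alarm = {fused.false_alarm:.3f}, miss = {fused.miss:.3f}")
```

With these made-up rates, the AND rule lowers the false alarm probability from 0.20 (fiber alone) to 0.010 but raises the miss probability to 0.109, while the OR rule does the reverse (0.240 false alarm, 0.001 miss). A trigger-and-confirm arrangement, in which a sensitive sensor cues a more selective one, behaves like the AND rule. If errors are positively correlated (for example, heavy rain disturbing both sensors at once), the real reduction in false alarms will be smaller than this independence model suggests.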

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, T.; Guo, P.; Wang, R.; Ma, Z.; Zhang, W.; Li, W.; Fu, H.; Hu, H. A Survey on Multi-Sensor Fusion Perimeter Intrusion Detection in High-Speed Railways. Sensors 2024, 24, 5463. https://doi.org/10.3390/s24175463

Shi T, Guo P, Wang R, Ma Z, Zhang W, Li W, Fu H, Hu H. A Survey on Multi-Sensor Fusion Perimeter Intrusion Detection in High-Speed Railways. Sensors. 2024; 24(17):5463. https://doi.org/10.3390/s24175463

Chicago/Turabian Style: Shi, Tianyun, Pengyue Guo, Rui Wang, Zhen Ma, Wanpeng Zhang, Wentao Li, Huijin Fu, and Hao Hu. 2024. "A Survey on Multi-Sensor Fusion Perimeter Intrusion Detection in High-Speed Railways" Sensors 24, no. 17: 5463. https://doi.org/10.3390/s24175463