Workplace Well-Being in Industry 5.0: A Worker-Centered Systematic Review

Abstract

1. Introduction

- RQ1: What are the state-of-the-art technologies currently adopted for the assessment of both physical and cognitive well-being in industrial workplaces?

- RQ2: What advanced data processing methods, including those belonging to the field of AI, are employed to interpret the data acquired from these well-being assessments?

- RQ3: What are the principal objectives of assessing the well-being of workers in the context of Industry 5.0, and how do these assessments contribute to enhancing productivity, safety, and human–machine interaction in smart factories?

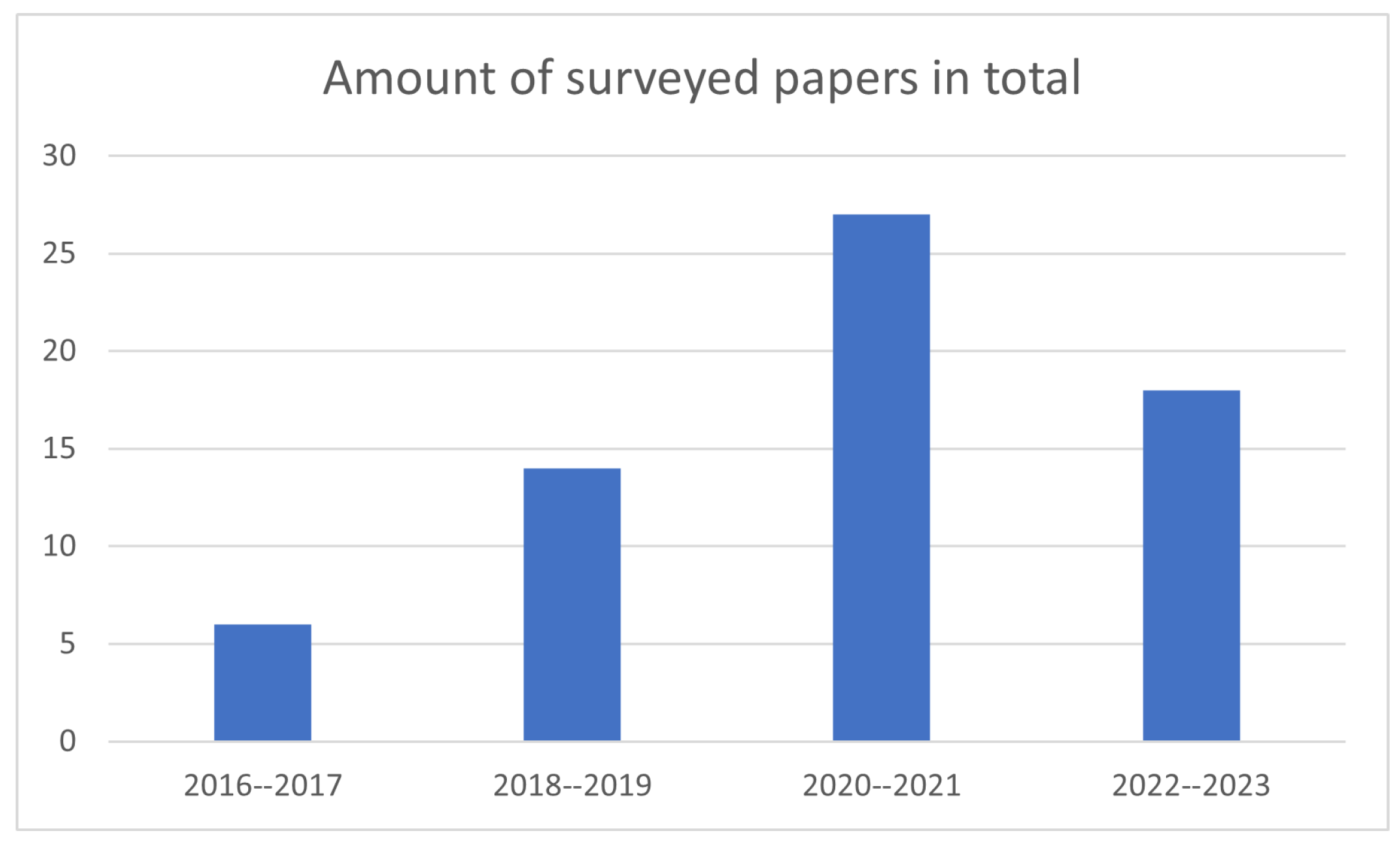

2. Methodological Analysis

- Databases used for the search: Google Scholar and Scopus;

- Only articles from indexed, peer-reviewed journals were selected;

- Publication year: papers published before 2016 were excluded;

- Context of the application: only papers involving industrial/productive contexts were included;

- An appropriate combination of keywords, detailed below, has been used for the paper selection.

- Authors;

- Year;

- Monitored activity: physical monitoring task or adopted physiological measurement;

- Data acquisition device: class of the devices employed in the examined study;

- Data acquisition device model;

- Data processing approach: machine learning (ML) or deep learning (DL). This information is particularly useful for grouping the studies by cost–benefit ratio, since DL typically provides state-of-the-art results at the cost of being correspondingly demanding in terms of data volume and computational power;

- Data processing algorithm: the specific algorithm adopted, since ML and DL are general approaches that each encompass a wide range of algorithms;

- Ergonomics: a label identifying whether the study falls within the physical ergonomics or the cognitive ergonomics domain;

- Standard ergonomic index or physiological measure: the specific index adopted, for studies in the physical domain, or the one or more physiological measures used, for studies in the cognitive domain.
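The inclusion criteria and the extraction fields listed above can be read as a simple record schema plus a screening predicate. The following is a minimal sketch under stated assumptions, not the procedure used in the review: the names `StudyRecord`, `Approach`, `Domain`, and `passes_screening`, the two boolean screening flags, and the example record are all hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Approach(Enum):
    """Data processing approach recorded for each study."""
    ML = "machine learning"
    DL = "deep learning"


class Domain(Enum):
    """Ergonomics domain label recorded for each study."""
    PHYSICAL = "physical ergonomics"
    COGNITIVE = "cognitive ergonomics"


@dataclass
class StudyRecord:
    """One row of the extraction sheet (field names are illustrative)."""
    authors: str
    year: int
    monitored_activity: str          # physical task or physiological measurement
    device_class: str                # e.g. "Wearable IMUs", "RGB camera"
    device_model: Optional[str]      # None when the study does not report it
    approach: Optional[Approach]     # None when no ML/DL processing is used
    algorithms: List[str] = field(default_factory=list)
    domain: Optional[Domain] = None
    indices_or_measures: List[str] = field(default_factory=list)
    # Screening flags (hypothetical encoding of the inclusion criteria).
    peer_reviewed_journal: bool = True
    industrial_context: bool = True


def passes_screening(record: StudyRecord, min_year: int = 2016) -> bool:
    """Apply the stated criteria: not before 2016, peer-reviewed, industrial."""
    return (
        record.year >= min_year
        and record.peer_reviewed_journal
        and record.industrial_context
    )


# Placeholder record, not taken from the reviewed literature.
example = StudyRecord(
    authors="Example et al.",
    year=2020,
    monitored_activity="pose estimation",
    device_class="RGB camera",
    device_model=None,
    approach=Approach.DL,
    algorithms=["CNN"],
    domain=Domain.PHYSICAL,
    indices_or_measures=["RULA"],
)
print(passes_screening(example))
```

Keeping the approach and domain as enumerations rather than free text makes it straightforward to group records later, for instance by ML versus DL or by physical versus cognitive ergonomics.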

3. Results

3.1. Physical Ergonomics

3.1.1. Productivity Enhancement

3.1.2. Man–Machine Interaction Optimisation

3.1.3. Safety Support

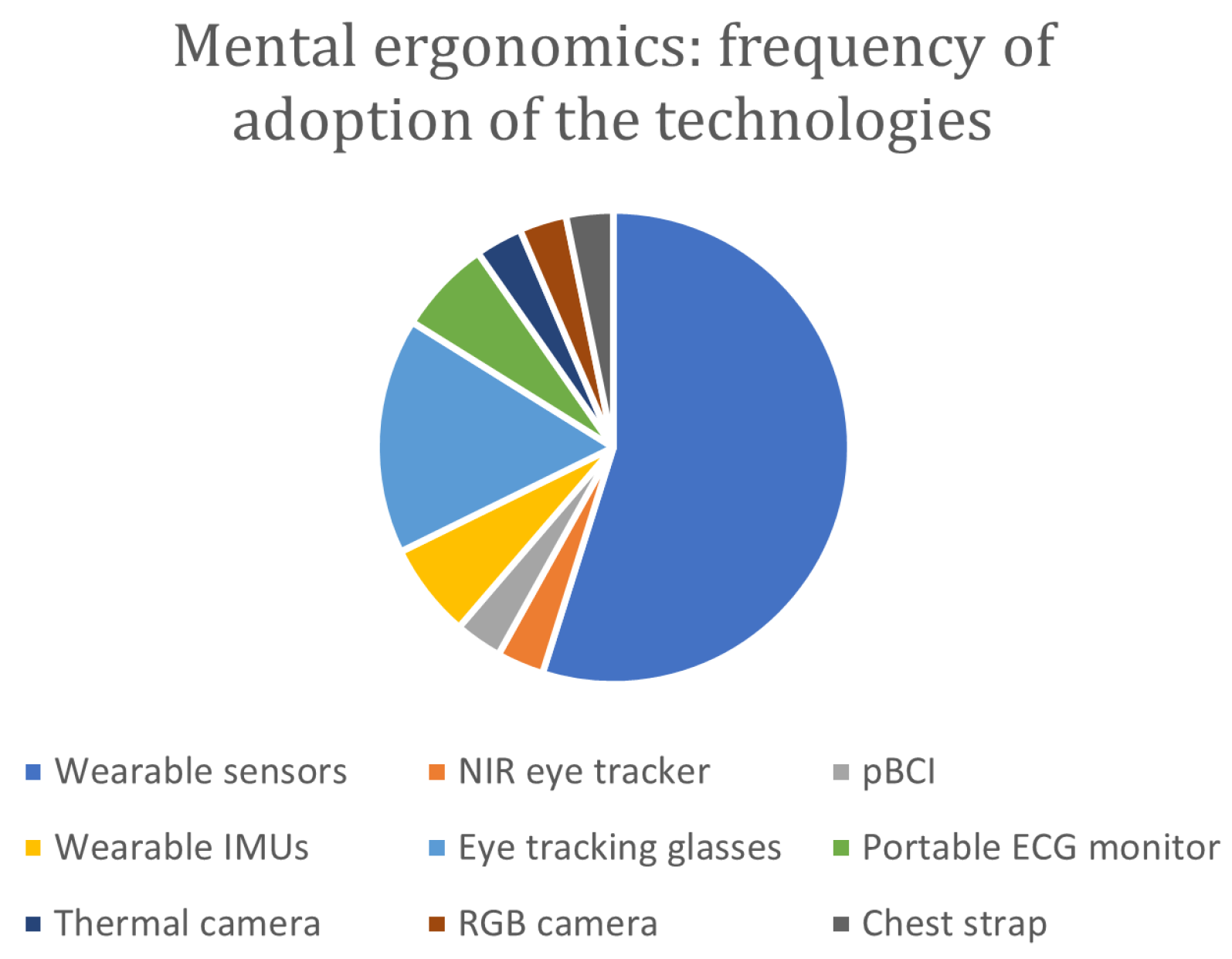

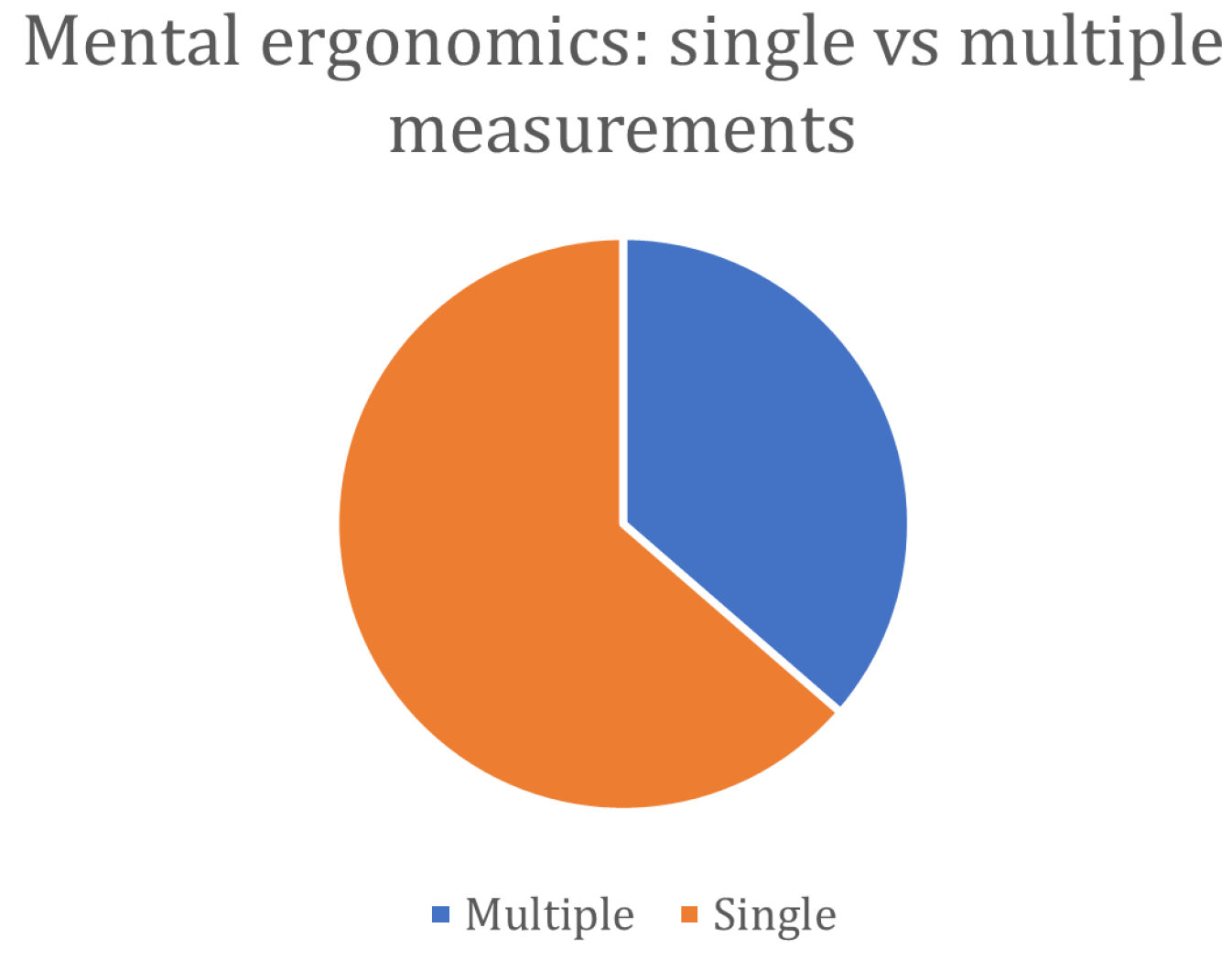

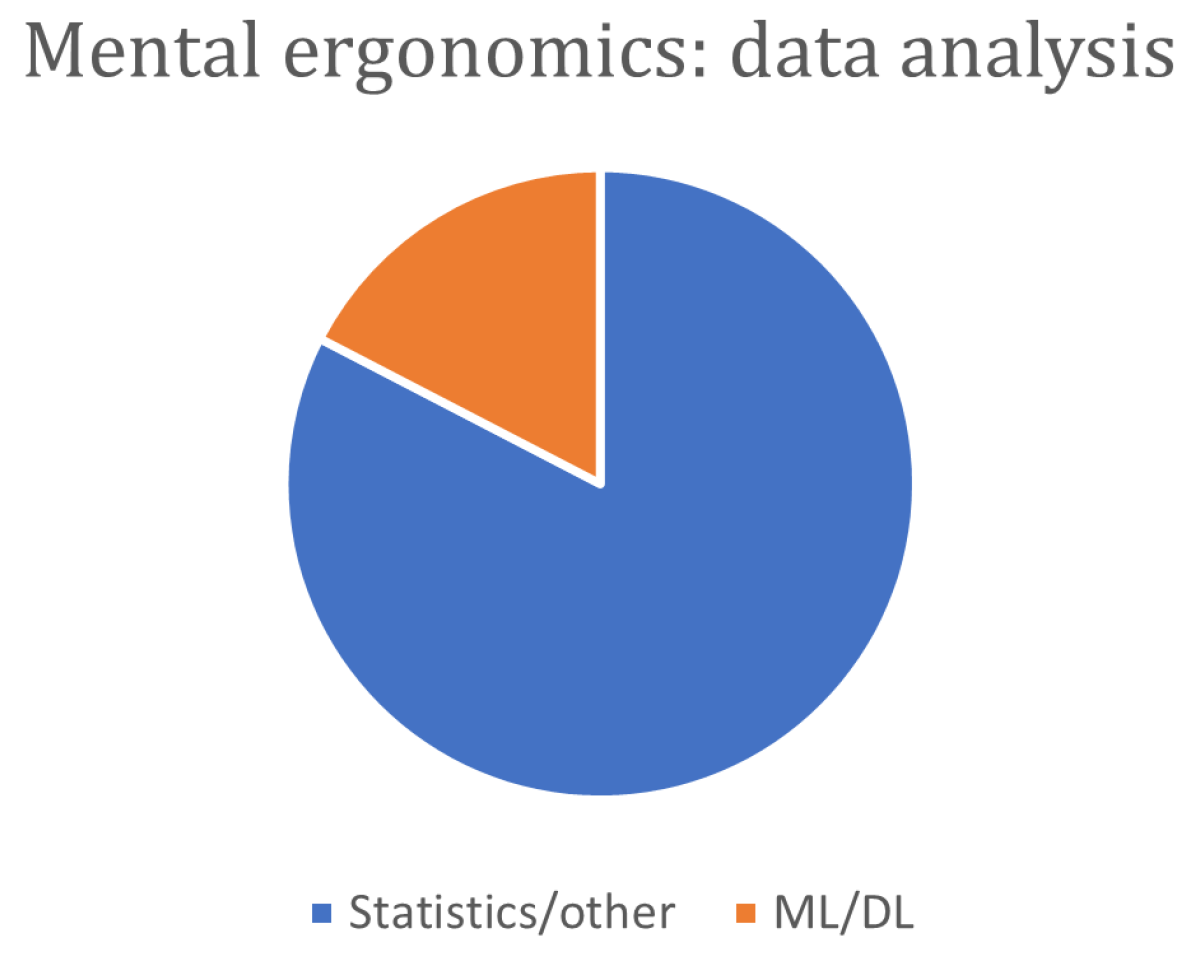

3.2. Cognitive Ergonomics

4. Discussion

4.1. Physical Ergonomics

4.2. Cognitive Ergonomics

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Description |

|---|---|

| 3DMPPE | 3D Multi-Person Pose Estimation |

| ANN | Artificial Neural Network |

| APM | Action Perception Module |

| BVP | Blood Volume Pulse |

| CMU | Carnegie Mellon University |

| CNN | Convolutional Neural Network |

| CPM | Convolutional Pose Machine |

| DHM | Digital Human Model |

| DL | Deep Learning |

| DT | Decision Tree |

| EAWS | Ergonomic Assessment Worksheet |

| ECG | Electrocardiography |

| EDA | Electrodermal Activity |

| EEG | Electroencephalography |

| EIM | Ergonomics Improvement Module |

| EOG | Electro-oculography |

| fNIRS | Functional Near-Infrared Spectroscopy |

| GCN | Graph Convolutional Networks |

| GMM | Gaussian Mixture Models |

| GMR | Gaussian Mixture Regression |

| GP | Gaussian Process |

| GSR | Galvanic Skin Response |

| HAR | Human Activity Recognition |

| HMC | Human Machine Collaboration |

| HMM | Hidden Markov Model |

| HRV | Heart Rate Variability |

| IMA | Industrial Maintenance and Assembly |

| IMU | Inertial Measurement Units |

| kNN | K-Nearest Neighbors |

| KPI | Key Performance Indicator |

| LPM | Learning and Programming Module |

| LRCN | Long-term Recurrent Convolutional Network |

| LSTM | Long Short-Term Memory |

| MoCap | Motion Capture |

| OCRA | Occupational Repetitive Actions |

| oMoCap | Optical Motion Capture |

| OWAS | Ovako Working Posture Analysis System |

| PNN | Probabilistic Neural Network |

| QDA | Quadratic Discriminant Analysis |

| REBA | Rapid Entire Body Assessment |

| RF | Random Forest |

| RMS | Root Mean Square |

| RULA | Rapid Upper Limb Assessment |

| sEMG | Surface Electromyography |

| SHM | Stacked Hourglass Model |

| SS | State-Space |

| SSD | Single Shot Detector |

| StaDNet | Static and Dynamic Gestures Network |

| STN | Spatial Transformer Networks |

| SVM | Support Vector Machine |

| tCNN | Temporal Convolutional Neural Network |

| TPS | Thin-Plate Splines |

| VAE | Variational Autoencoder |

| VR | Virtual Reality |

| WMSD | Work-Related Musculoskeletal Disorders |

| XGBoost | eXtreme Gradient Boosting |

| YOLO | You Only Look Once |

Appendix A

| Authors | Year | Technology |

|---|---|---|

| Álvarez et al. [41] | 2016 | Wearable IMUs |

| Pławiak et al. [21] | 2016 | Wearable IMUs |

| Grzeszick et al. [22] | 2017 | Wearable IMUs |

| Fletcher et al. [42] | 2018 | Wearable IMUs |

| Golabchi et al. [43] | 2018 | RGB camera |

| Luo et al. [23] | 2018 | Optical IR marker-based motion capture system |

| Moya Rueda et al. [24] | 2018 | Wearable IMUs |

| Nath et al. [44] | 2018 | Smartphone IMUs |

| Maurice et al. [46] | 2019 | Wearable IMUs |

| Maurice et al. [46] | 2019 | Optical IR marker-based motion capture system |

| Maurice et al. [46] | 2019 | Force sensors |

| Maurice et al. [46] | 2019 | RGB camera |

| Urgo et al. [25] | 2019 | RGB camera |

| Chen et al. [26] | 2020 | RGB camera |

| Conforti et al. [47] | 2020 | Wearable IMUs |

| Jiao et al. [27] | 2020 | RGB camera |

| Manitsaris et al. [28] | 2020 | RGB camera |

| Manitsaris et al. [28] | 2020 | RGB-D camera |

| Massiris Fernández et al. [48] | 2020 | RGB camera |

| Peruzzini et al. [49] | 2020 | Multi-parametric wearable sensor for real-time vital parameters monitoring |

| Peruzzini et al. [49] | 2020 | RGB camera |

| Peruzzini et al. [49] | 2020 | Optical IR marker-based motion capture system |

| Phan et al. [29] | 2020 | Instrumented tool with force/torque sensors |

| Phan et al. [29] | 2020 | Wearable sEMG sensors |

| Dimitropoulos et al. [50] | 2021 | RGB-D camera |

| Luipers et al. [31] | 2021 | RGB-D camera |

| Manns et al. [32] | 2021 | Wearable IMUs |

| Mazhar et al. [51] | 2021 | RGB-D camera |

| Mudiyanselage et al. [52] | 2021 | Wearable sEMG sensors |

| Niemann et al. [33] | 2021 | Optical IR marker-based motion capture system |

| Papanagiotou et al. [34] | 2021 | RGB camera |

| Papanagiotou et al. [34] | 2021 | RGB-D camera |

| Al-Amin et al. [35] | 2022 | Wearable IMUs |

| Choi et al. [36] | 2022 | RGB-D camera |

| Ciccarelli et al. [53] | 2022 | Wearable IMUs |

| De Feudis et al. [37] | 2022 | RGB-D camera |

| Generosi et al. [54] | 2022 | RGB camera |

| Guo et al. [55] | 2022 | Optical IR marker-based motion capture system |

| Kačerová [56] | 2022 | Wearable IMUs |

| Lima et al. [38] | 2022 | RGB-D camera |

| Lin et al. [57] | 2022 | RGB camera |

| Lin et al. [57] | 2022 | Optical IR marker-based motion capture system |

| Lorenzini et al. [58] | 2022 | Wearable IMUs |

| Lorenzini et al. [58] | 2022 | Force sensors |

| Lorenzini et al. [58] | 2022 | Wearable sEMG sensors |

| Mendes [39] | 2022 | Wearable sEMG sensors |

| Nunes et al. [59] | 2022 | Wearable IMUs |

| Panariello et al. [60] | 2022 | Optical IR marker-based motion capture system |

| Panariello et al. [60] | 2022 | Force sensors |

| Panariello et al. [60] | 2022 | Wearable sEMG sensors |

| Paudel et al. [61] | 2022 | RGB camera |

| Vianello et al. [62] | 2022 | Wearable IMUs |

| Orsag et al. [40] | 2023 | Wearable IMUs |

| Device | Gesture Recognition | Pose Estimation | Action Recognition | Hand/Tool Tracking | Hand State Classification | Human Motion Prediction | Joint Stiffness Estimation | Human Activity Recognition | Digital Design Modelling |

|---|---|---|---|---|---|---|---|---|---|

| w IMUs | X | X | X | X | X | X | |||

| RGB-D | X | X | X | X | X | X | |||

| oMoCap | X | X | X | ||||||

| RGB | X | X | X | ||||||

| w sEMG | X | X | |||||||

| Smartph. IMUs | X | ||||||||

| w IMUs, oMoCap, Flex./Force sens., RGB | X | ||||||||

| Multi-par. w sensor, RGB, oMoCap | X | ||||||||

| Force/Torque sens. | X | ||||||||

| w IMUs, Force sens., w sEMG | X | ||||||||

| oMoCap, Force sens., w sEMG | X |

| ML | DL | |||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Author | Year | ANN | Decision Tree | GMM | GP | HMM | kNN | Meta Learning | Random Forest | SVM | 3D MPPE | ArUco | AKBT | DL Mod. CMU | CNN | GCN | LRCN | LSTM | SHM | STN | VAE | YOLO |

| Pławiak et al. [21] | 2016 | X | ||||||||||||||||||||

| Grzeszick et al. [22] | 2017 | X | ||||||||||||||||||||

| Golabchi et al. [43] | 2018 | X | ||||||||||||||||||||

| Luo et al. [23] | 2018 | X | ||||||||||||||||||||

| Moya Rueda et al. [24] | 2018 | X | ||||||||||||||||||||

| Nath et al. [44] | 2018 | X | ||||||||||||||||||||

| Maurice et al. [46] | 2019 | X | X | |||||||||||||||||||

| Urgo et al. [25] | 2019 | X | X | |||||||||||||||||||

| Chen et al. [26] | 2020 | X | X | |||||||||||||||||||

| Conforti et al. [47] | 2020 | X | ||||||||||||||||||||

| Jiao et al. [27] | 2020 | X | X | X | X | X | ||||||||||||||||

| Manitsaris et al. [28] | 2020 | X | ||||||||||||||||||||

| Massiris Fernández et al. [48] | 2020 | X | ||||||||||||||||||||

| Xiong et al. [30] | 2020 | X | ||||||||||||||||||||

| Dimitropoulos et al. [50] | 2021 | X | ||||||||||||||||||||

| Luipers et al. [31] | 2021 | X | X | X | ||||||||||||||||||

| Manns et al. [32] | 2021 | X | ||||||||||||||||||||

| Mazhar et al. [51] | 2021 | X | ||||||||||||||||||||

| Mudiyanselage et al. [52] | 2021 | X | X | X | X | |||||||||||||||||

| Niemann et al. [33] | 2021 | X | ||||||||||||||||||||

| Papanagiotou et al. [34] | 2021 | X | ||||||||||||||||||||

| Al-Amin et al. [35] | 2022 | X | ||||||||||||||||||||

| Choi et al. [36] | 2022 | X | ||||||||||||||||||||

| Ciccarelli et al. [53] | 2022 | X | ||||||||||||||||||||

| De Feudis et al. [37] | 2022 | X | X | X | X | |||||||||||||||||

| Generosi et al. [54] | 2022 | X | X | |||||||||||||||||||

| Lima et al. [38] | 2022 | X | ||||||||||||||||||||

| Lin et al. [57] | 2022 | X | ||||||||||||||||||||

| Mendes [39] | 2022 | X | X | |||||||||||||||||||

| Paudel et al. [61] | 2022 | X | X | |||||||||||||||||||

| Vianello et al. [62] | 2022 | X | ||||||||||||||||||||

| Orsag et al. [40] | 2023 | X | ||||||||||||||||||||

References

- European Commission. Industry 5.0, 2018. Available online: https://www.eesc.europa.eu/en/agenda/our-events/events/industry-50 (accessed on 25 July 2023).

- Neumann, W.P.; Winkelhaus, S.; Grosse, E.H.; Glock, C.H. Industry 4.0 and the human factor—A systems framework and analysis methodology for successful development. Int. J. Prod. Econ. 2021, 233, 107992.

- Xu, X.; Lu, Y.; Vogel-Heuser, B.; Wang, L. Industry 4.0 and Industry 5.0—Inception, conception and perception. J. Manuf. Syst. 2021, 61, 530–535.

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and retrospect. J. Manuf. Syst. 2022, 65, 279–295.

- Longo, F.; Padovano, A.; Umbrello, S. Value-oriented and ethical technology engineering in industry 5.0: A human-centric perspective for the design of the factory of the future. Appl. Sci. 2020, 10, 4182.

- Dengel, A.; Devillers, L.; Schaal, L.M. Augmented human and human-machine co-evolution: Efficiency and ethics. In Reflections on Artificial Intelligence for Humanity; Springer: Berlin/Heidelberg, Germany, 2021; pp. 203–227.

- Li, X.; Nassehi, A.; Wang, B.; Hu, S.J.; Epureanu, B.I. Human-centric manufacturing for human-system coevolution in Industry 5.0. CIRP Ann. 2023, 72, 393–396.

- Hashemi-Petroodi, S.E.; Dolgui, A.; Kovalev, S.; Kovalyov, M.Y.; Thevenin, S. Workforce Reconfiguration Strategies in Manufacturing Systems: A State of the Art. Int. J. Prod. Res. 2021, 59, 6721–6744.

- Vijayakumar, V.; Sobhani, A. Performance Optimisation of Pick and Transport Robot in a Picker to Parts Order Picking System: A Human-Centric Approach. Int. J. Prod. Res. 2023, 61, 7791–7808.

- Ge, Z.; Song, Z.; Ding, S.X.; Huang, B. Data mining and analytics in the process industry: The role of machine learning. IEEE Access 2017, 5, 20590–20616.

- Le, Q.; Miralles-Pechuán, L.; Kulkarni, S.; Su, J.; Boydell, O. An overview of deep learning in industry. In Data Analytics and AI; Auerbach Publications: Boca Raton, FL, USA, 2020; pp. 65–98.

- Sun, X.; Houssin, R.; Renaud, J.; Gardoni, M. A Review of Methodologies for Integrating Human Factors and Ergonomics in Engineering Design. Int. J. Prod. Res. 2019, 57, 4961–4976.

- Vijayakumar, V.; Sgarbossa, F.; Neumann, W.P.; Sobhani, A. Framework for Incorporating Human Factors into Production and Logistics Systems. Int. J. Prod. Res. 2022, 60, 402–419.

- Rodrigues, P.B.; Xiao, Y.; Fukumura, Y.E.; Awada, M.; Aryal, A.; Becerik-Gerber, B.; Lucas, G.; Roll, S.C. Ergonomic Assessment of Office Worker Postures Using 3D Automated Joint Angle Assessment. Adv. Eng. Inform. 2022, 52, 101596.

- O’Neill, M. Holistic ergonomics for the evolving nature of work. In Topic Brief; Knoll, Inc.: New York, NY, USA, 2011; pp. 1–8.

- Carayon, P.; Smith, M.J. Work organization and ergonomics. Appl. Ergon. 2000, 31, 649–662.

- Meyer, A.; Fourie, I. Collaborative information seeking environments benefiting from holistic ergonomics. Libr. Hi Tech 2015, 33, 439–459.

- Kadir, B.A.; Broberg, O.; da Conceicao, C.S. Current research and future perspectives on human factors and ergonomics in Industry 4.0. Comput. Ind. Eng. 2019, 137, 106004.

- Gualtieri, L.; Fraboni, F.; De Marchi, M.; Rauch, E. Development and evaluation of design guidelines for cognitive ergonomics in human–robot collaborative assembly systems. Appl. Ergon. 2022, 104, 103807.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

- Pławiak, P.; Sosnicki, T.; Niedzwiecki, M.; Tabor, Z.; Rzecki, K. Hand Body Language Gesture Recognition Based on Signals From Specialized Glove and Machine Learning Algorithms. IEEE Trans. Ind. Inform. 2016, 12, 1104–1113.

- Grzeszick, R.; Lenk, J.M.; Rueda, F.M.; Fink, G.A.; Feldhorst, S.; Ten Hompel, M. Deep Neural Network Based Human Activity Recognition for the Order Picking Process. In Proceedings of the 4th International Workshop on Sensor-Based Activity Recognition and Interaction, Rostock, Germany, 21 September 2017; pp. 1–6.

- Luo, R.; Hayne, R.; Berenson, D. Unsupervised Early Prediction of Human Reaching for Human–Robot Collaboration in Shared Workspaces. Auton. Robot. 2018, 42, 631–648.

- Moya Rueda, F.; Grzeszick, R.; Fink, G.; Feldhorst, S.; Ten Hompel, M. Convolutional Neural Networks for Human Activity Recognition Using Body-Worn Sensors. Informatics 2018, 5, 26.

- Urgo, M.; Tarabini, M.; Tolio, T. A Human Modelling and Monitoring Approach to Support the Execution of Manufacturing Operations. CIRP Ann. 2019, 68, 5–8.

- Chen, C.; Wang, T.; Li, D.; Hong, J. Repetitive Assembly Action Recognition Based on Object Detection and Pose Estimation. J. Manuf. Syst. 2020, 55, 325–333.

- Jiao, Z.; Jia, G.; Cai, Y. Ensuring Computers Understand Manual Operations in Production: Deep-Learning-Based Action Recognition in Industrial Workflows. Appl. Sci. 2020, 10, 966.

- Manitsaris, S.; Senteri, G.; Makrygiannis, D.; Glushkova, A. Human Movement Representation on Multivariate Time Series for Recognition of Professional Gestures and Forecasting Their Trajectories. Front. Robot. AI 2020, 7, 80.

- Phan, G.H.; Hansen, C.; Tommasino, P.; Budhota, A.; Mohan, D.M.; Hussain, A.; Burdet, E.; Campolo, D. Estimating Human Wrist Stiffness during a Tooling Task. Sensors 2020, 20, 3260.

- Xiong, Q.; Zhang, J.; Wang, P.; Liu, D.; Gao, R.X. Transferable Two-Stream Convolutional Neural Network for Human Action Recognition. J. Manuf. Syst. 2020, 56, 605–614.

- Luipers, D.; Richert, A. Concept of an Intuitive Human-Robot-Collaboration via Motion Tracking and Augmented Reality. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 423–427.

- Manns, M.; Tuli, T.B.; Schreiber, F. Identifying Human Intention during Assembly Operations Using Wearable Motion Capturing Systems Including Eye Focus. Procedia CIRP 2021, 104, 924–929.

- Niemann, F.; Lüdtke, S.; Bartelt, C.; Ten Hompel, M. Context-Aware Human Activity Recognition in Industrial Processes. Sensors 2021, 22, 134.

- Papanagiotou, D.; Senteri, G.; Manitsaris, S. Egocentric Gesture Recognition Using 3D Convolutional Neural Networks for the Spatiotemporal Adaptation of Collaborative Robots. Front. Neurorobot. 2021, 15, 703545.

- Al-Amin, M.; Qin, R.; Tao, W.; Doell, D.; Lingard, R.; Yin, Z.; Leu, M.C. Fusing and Refining Convolutional Neural Network Models for Assembly Action Recognition in Smart Manufacturing. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2022, 236, 2046–2059.

- Choi, S.H.; Park, K.B.; Roh, D.H.; Lee, J.Y.; Mohammed, M.; Ghasemi, Y.; Jeong, H. An Integrated Mixed Reality System for Safety-Aware Human-Robot Collaboration Using Deep Learning and Digital Twin Generation. Robot. Comput.-Integr. Manuf. 2022, 73, 102258.

- De Feudis, I.; Buongiorno, D.; Grossi, S.; Losito, G.; Brunetti, A.; Longo, N.; Di Stefano, G.; Bevilacqua, V. Evaluation of Vision-Based Hand Tool Tracking Methods for Quality Assessment and Training in Human-Centered Industry 4.0. Appl. Sci. 2022, 12, 1796.

- Lima, B.; Amaral, L.; Nascimento, G., Jr.; Mafra, V.; Ferreira, B.G.; Vieira, T.; Vieira, T. User-Oriented Natural Human-Robot Control with Thin-Plate Splines and LRCN. J. Intell. Robot. Syst. 2022, 104, 50.

- Mendes, N. Surface Electromyography Signal Recognition Based on Deep Learning for Human-Robot Interaction and Collaboration. J. Intell. Robot. Syst. 2022, 105, 42.

- Orsag, L.; Stipancic, T.; Koren, L. Towards a Safe Human–Robot Collaboration Using Information on Human Worker Activity. Sensors 2023, 23, 1283.

- Álvarez, D.; Alvarez, J.C.; González, R.C.; López, A.M. Upper Limb Joint Angle Measurement in Occupational Health. Comput. Methods Biomech. Biomed. Eng. 2016, 19, 159–170.

- Fletcher, S.R.; Johnson, T.L.; Thrower, J. A Study to Trial the Use of Inertial Non-Optical Motion Capture for Ergonomic Analysis of Manufacturing Work. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2018, 232, 90–98.

- Golabchi, A.; Guo, X.; Liu, M.; Han, S.; Lee, S.; AbouRizk, S. An Integrated Ergonomics Framework for Evaluation and Design of Construction Operations. Autom. Constr. 2018, 95, 72–85.

- Nath, N.D.; Chaspari, T.; Behzadan, A.H. Automated Ergonomic Risk Monitoring Using Body-Mounted Sensors and Machine Learning. Adv. Eng. Inform. 2018, 38, 514–526.

- Grandi, F.; Peruzzini, M.; Zanni, L.; Pellicciari, M. An Automatic Procedure Based on Virtual Ergonomic Analysis to Promote Human-Centric Manufacturing. Procedia Manuf. 2019, 38, 488–496.

- Maurice, P.; Malaisé, A.; Amiot, C.; Paris, N.; Richard, G.J.; Rochel, O.; Ivaldi, S. Human Movement and Ergonomics: An Industry-Oriented Dataset for Collaborative Robotics. Int. J. Robot. Res. 2019, 38, 1529–1537.

- Conforti, I.; Mileti, I.; Del Prete, Z.; Palermo, E. Measuring Biomechanical Risk in Lifting Load Tasks Through Wearable System and Machine-Learning Approach. Sensors 2020, 20, 1557.

- Massiris Fernández, M.; Fernández, J.Á.; Bajo, J.M.; Delrieux, C.A. Ergonomic Risk Assessment Based on Computer Vision and Machine Learning. Comput. Ind. Eng. 2020, 149, 106816.

- Peruzzini, M.; Grandi, F.; Pellicciari, M. Exploring the Potential of Operator 4.0 Interface and Monitoring. Comput. Ind. Eng. 2020, 139, 105600.

- Dimitropoulos, N.; Togias, T.; Zacharaki, N.; Michalos, G.; Makris, S. Seamless Human–Robot Collaborative Assembly Using Artificial Intelligence and Wearable Devices. Appl. Sci. 2021, 11, 5699.

- Mazhar, O.; Ramdani, S.; Cherubini, A. A Deep Learning Framework for Recognizing Both Static and Dynamic Gestures. Sensors 2021, 21, 2227.

- Mudiyanselage, S.E.; Nguyen, P.H.D.; Rajabi, M.S.; Akhavian, R. Automated Workers’ Ergonomic Risk Assessment in Manual Material Handling Using sEMG Wearable Sensors and Machine Learning. Electronics 2021, 10, 2558.

- Ciccarelli, M.; Corradini, F.; Germani, M.; Menchi, G.; Mostarda, L.; Papetti, A.; Piangerelli, M. SPECTRE: A Deep Learning Network for Posture Recognition in Manufacturing. J. Intell. Manuf. 2022.

- Generosi, A.; Agostinelli, T.; Ceccacci, S.; Mengoni, M. A Novel Platform to Enable the Future Human-Centered Factory. Int. J. Adv. Manuf. Technol. 2022, 122, 4221–4233.

- Guo, Z.; Zhou, D.; Hao, A.; Wang, Y.; Wu, H.; Zhou, Q.; Yu, D.; Zeng, S. An Evaluation Method Using Virtual Reality to Optimize Ergonomic Design in Manual Assembly and Maintenance Scenarios. Int. J. Adv. Manuf. Technol. 2022, 121, 5049–5065.

- Kačerová, I.; et al. Ergonomic Design of a Workplace Using Virtual Reality and a Motion Capture Suit. Appl. Sci. 2022, 12, 2150.

- Lin, P.C.; Chen, Y.J.; Chen, W.S.; Lee, Y.J. Automatic Real-Time Occupational Posture Evaluation and Select Corresponding Ergonomic Assessments. Sci. Rep. 2022, 12, 2139.

- Lorenzini, M.; Kim, W.; Ajoudani, A. An Online Multi-Index Approach to Human Ergonomics Assessment in the Workplace. IEEE Trans. Hum. Mach. Syst. 2022, 52, 812–823.

- Nunes, M.L.; Folgado, D.; Fujao, C.; Silva, L.; Rodrigues, J.; Matias, P.; Barandas, M.; Carreiro, A.V.; Madeira, S.; Gamboa, H. Posture Risk Assessment in an Automotive Assembly Line Using Inertial Sensors. IEEE Access 2022, 10, 83221–83235.

- Panariello, D.; Grazioso, S.; Caporaso, T.; Palomba, A.; Di Gironimo, G.; Lanzotti, A. Biomechanical Analysis of the Upper Body during Overhead Industrial Tasks Using Electromyography and Motion Capture Integrated with Digital Human Models. Int. J. Interact. Des. Manuf. (IJIDeM) 2022, 16, 733–752.

- Paudel, P.; Kwon, Y.J.; Kim, D.H.; Choi, K.H. Industrial Ergonomics Risk Analysis Based on 3D-Human Pose Estimation. Electronics 2022, 11, 3403.

- Vianello, L.; Gomes, W.; Stulp, F.; Aubry, A.; Maurice, P.; Ivaldi, S. Latent Ergonomics Maps: Real-Time Visualization of Estimated Ergonomics of Human Movements. Sensors 2022, 22, 3981.

- Mattsson, S.; Fast-Berglund, Å.; Åkerman, M. Assessing operator wellbeing through physiological measurements in real-time—Towards industrial application. Technologies 2017, 5, 61.

- Nardolillo, A.M.; Baghdadi, A.; Cavuoto, L.A. Heart rate variability during a simulated assembly task; influence of age and gender. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Austin, TX, USA, 9–13 October 2017; SAGE Publications: Los Angeles, CA, USA, 2017; pp. 1853–1857.

- Chen, J.; Taylor, J.E.; Comu, S. Assessing task mental workload in construction projects: A novel electroencephalography approach. J. Constr. Eng. Manag. 2017, 143, 04017053.

- Cheema, B.S.; Samima, S.; Sarma, M.; Samanta, D. Mental workload estimation from EEG signals using machine learning algorithms. In Proceedings of the Engineering Psychology and Cognitive Ergonomics: 15th International Conference, EPCE 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA, 15–20 July 2018; Proceedings 15. Springer: Berlin/Heidelberg, Germany, 2018; pp. 265–284.

- Hwang, S.; Jebelli, H.; Choi, B.; Choi, M.; Lee, S. Measuring workers’ emotional state during construction tasks using wearable EEG. J. Constr. Eng. Manag. 2018, 144, 04018050.

- Bommer, S.C.; Fendley, M. A theoretical framework for evaluating mental workload resources in human systems design for manufacturing operations. Int. J. Ind. Ergon. 2018, 63, 7–17.

- D’Addona, D.M.; Bracco, F.; Bettoni, A.; Nishino, N.; Carpanzano, E.; Bruzzone, A.A. Adaptive automation and human factors in manufacturing: An experimental assessment for a cognitive approach. CIRP Ann. 2018, 67, 455–458.

- Landi, C.; Villani, V.; Ferraguti, F.; Sabattini, L.; Secchi, C.; Fantuzzi, C. Relieving operators’ workload: Towards affective robotics in industrial scenarios. Mechatronics 2018, 54, 144–154.

- Kosch, T.; Karolus, J.; Ha, H.; Schmidt, A. Your skin resists: Exploring electrodermal activity as workload indicator during manual assembly. In Proceedings of the ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Valencia, Spain, 18–21 June 2019; pp. 1–5.

- Arpaia, P.; Moccaldi, N.; Prevete, R.; Sannino, I.; Tedesco, A. A wearable EEG instrument for real-time frontal asymmetry monitoring in worker stress analysis. IEEE Trans. Instrum. Meas. 2020, 69, 8335–8343.

- Bettoni, A.; Montini, E.; Righi, M.; Villani, V.; Tsvetanov, R.; Borgia, S.; Secchi, C.; Carpanzano, E. Mutualistic and adaptive human-machine collaboration based on machine learning in an injection moulding manufacturing line. Procedia CIRP 2020, 93, 395–400.

- Lee, W.; Migliaccio, G.C.; Lin, K.Y.; Seto, E.Y. Workforce development: Understanding task-level job demands-resources, burnout, and performance in unskilled construction workers. Saf. Sci. 2020, 123, 104577.

- Papetti, A.; Gregori, F.; Pandolfi, M.; Peruzzini, M.; Germani, M. A method to improve workers’ well-being toward human-centered connected factories. J. Comput. Des. Eng. 2020, 7, 630–643.

- Van Acker, B.B.; Bombeke, K.; Durnez, W.; Parmentier, D.D.; Mateus, J.C.; Biondi, A.; Saldien, J.; Vlerick, P. Mobile pupillometry in manual assembly: A pilot study exploring the wearability and external validity of a renowned mental workload lab measure. Int. J. Ind. Ergon. 2020, 75, 102891.

- Digiesi, S.; Manghisi, V.M.; Facchini, F.; Klose, E.M.; Foglia, M.M.; Mummolo, C. Heart rate variability based assessment of cognitive workload in smart operators. Manag. Prod. Eng. Rev. 2020, 11, 56–64.

- Khairai, K.M.; Wahab, M.N.A.; Sutarto, A.P. Heart Rate Variability (HRV) as a physiological marker of stress among electronics assembly line workers. In Human-Centered Technology for a Better Tomorrow: Proceedings of HUMENS 2021; Springer: Berlin/Heidelberg, Germany, 2021; p. 3.

- Hopko, S.K.; Khurana, R.; Mehta, R.K.; Pagilla, P.R. Effect of cognitive fatigue, operator sex, and robot assistance on task performance metrics, workload, and situation awareness in human-robot collaboration. IEEE Robot. Autom. Lett. 2021, 6, 3049–3056.

- Argyle, E.M.; Marinescu, A.; Wilson, M.L.; Lawson, G.; Sharples, S. Physiological indicators of task demand, fatigue, and cognition in future digital manufacturing environments. Int. J. Hum. Comput. Stud. 2021, 145, 102522.

- Brunzini, A.; Peruzzini, M.; Grandi, F.; Khamaisi, R.K.; Pellicciari, M. A preliminary experimental study on the workers’ workload assessment to design industrial products and processes. Appl. Sci. 2021, 11, 12066.

- Bläsing, D.; Bornewasser, M. Influence of increasing task complexity and use of informational assistance systems on mental workload. Brain Sci. 2021, 11, 102.

- Lin, C.J.; Lukodono, R.P. Classification of mental workload in Human-robot collaboration using machine learning based on physiological feedback. J. Manuf. Syst. 2022, 65, 673–685.

- Mach, S.; Storozynski, P.; Halama, J.; Krems, J.F. Assessing mental workload with wearable devices–Reliability and applicability of heart rate and motion measurements. Appl. Ergon. 2022, 105, 103855.

- Regazzoni, D.; De Vecchi, G.; Rizzi, C. RGB Cams vs RGB-D Sensors: Low Cost Motion Capture Technologies Performances and Limitations. J. Manuf. Syst. 2014, 33, 719–728.

- Folgado, F.J.; Calderón, D.; González, I.; Calderón, A.J. Review of Industry 4.0 from the Perspective of Automation and Supervision Systems: Definitions, Architectures and Recent Trends. Electronics 2024, 13, 782.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. The Future of the Human–Machine Interface (HMI) in Society 5.0. Future Internet 2023, 15, 162.

- Krupitzer, C.; Müller, S.; Lesch, V.; Züfle, M.; Edinger, J.; Lemken, A.; Schäfer, D.; Kounev, S.; Becker, C. A Survey on Human Machine Interaction in Industry 4.0. arXiv 2020.

- Kramer, A.F. Physiological metrics of mental workload: A review of recent progress. In Multiple Task Performance; CRC Press: Boca Raton, FL, USA, 2020; pp. 279–328.

- Kosch, T.; Karolus, J.; Zagermann, J.; Reiterer, H.; Schmidt, A.; Woźniak, P.W. A survey on measuring cognitive workload in human-computer interaction. ACM Comput. Surv. 2023, 55, 1–39.

- Carissoli, C.; Negri, L.; Bassi, M.; Storm, F.A.; Delle Fave, A. Mental workload and human-robot interaction in collaborative tasks: A scoping review. Int. J. Hum. Comput. Interact. 2023, 1–20.

- Baltrusch, S.; Krause, F.; de Vries, A.; Van Dijk, W.; de Looze, M. What about the Human in Human Robot Collaboration? A literature review on HRC’s effects on aspects of job quality. Ergonomics 2022, 65, 719–740.

- Chen, Y.; Yang, C.; Song, B.; Gonzalez, N.; Gu, Y.; Hu, B. Effects of autonomous mobile robots on human mental workload and system productivity in smart warehouses: A preliminary study. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications: Los Angeles, CA, USA, 2020; pp. 1691–1695.

- Ulrich, L.; Vezzetti, E.; Moos, S.; Marcolin, F. Analysis of RGB-D Camera Technologies for Supporting Different Facial Usage Scenarios. Multimed. Tools Appl. 2020, 79, 29375–29398.

- Zhou, Z.H. Machine Learning; Springer Nature: Berlin, Germany, 2021.

- Ghaddar, B.; Naoum-Sawaya, J. High dimensional data classification and feature selection using support vector machines. Eur. J. Oper. Res. 2018, 265, 993–1004.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- Dargan, S.; Kumar, M.; Ayyagari, M.R.; Kumar, G. A survey of deep learning and its applications: A new paradigm to machine learning. Arch. Comput. Methods Eng. 2020, 27, 1071–1092.

- Ghosh, P.; Song, J.; Aksan, E.; Hilliges, O. Learning Human Motion Models for Long-Term Predictions. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 458–466.

- Mumtaz, W.; Rasheed, S.; Irfan, A. Review of challenges associated with the EEG artifact removal methods. Biomed. Signal Process. Control 2021, 68, 102741.

- Douibi, K.; Le Bars, S.; Lemontey, A.; Nag, L.; Balp, R.; Breda, G. Toward EEG-based BCI applications for industry 4.0: Challenges and possible applications. Front. Hum. Neurosci. 2021, 15, 705064.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Colosimo, A.; Bonelli, S.; Golfetti, A.; Pozzi, S.; Imbert, J.P.; Granger, G.; Benhacene, R.; et al. Adaptive automation triggered by EEG-based mental workload index: A passive brain-computer interface application in realistic air traffic control environment. Front. Hum. Neurosci. 2016, 10, 539.

- Marinescu, A.C.; Sharples, S.; Ritchie, A.C.; Sanchez Lopez, T.; McDowell, M.; Morvan, H.P. Physiological parameter response to variation of mental workload. Hum. Factors 2018, 60, 31–56.

- Tuomivaara, S.; Lindholm, H.; Känsälä, M. Short-term physiological strain and recovery among employees working with agile and lean methods in software and embedded ICT systems. Int. J. Hum. Comput. Interact. 2017, 33, 857–867. [Google Scholar] [CrossRef]

- Wang, X.; Li, D.; Menassa, C.C.; Kamat, V.R. Investigating the effect of indoor thermal environment on occupants’ mental workload and task performance using electroencephalogram. Build. Environ. 2019, 158, 120–132. [Google Scholar] [CrossRef]

- Vanneste, P.; Raes, A.; Morton, J.; Bombeke, K.; Van Acker, B.B.; Larmuseau, C.; Depaepe, F.; Van den Noortgate, W. Towards measuring cognitive load through multimodal physiological data. Cogn. Technol. Work 2021, 23, 567–585. [Google Scholar] [CrossRef]

- Midha, S.; Maior, H.A.; Wilson, M.L.; Sharples, S. Measuring mental workload variations in office work tasks using fNIRS. Int. J. Hum. Comput. Stud. 2021, 147, 102580. [Google Scholar] [CrossRef]

- Orlandi, L.; Brooks, B. Measuring mental workload and physiological reactions in marine pilots: Building bridges towards redlines of performance. Appl. Ergon. 2018, 69, 74–92. [Google Scholar] [CrossRef]

- Wu, Y.; Miwa, T.; Uchida, M. Using physiological signals to measure operator’s mental workload in shipping—An engine room simulator study. J. Mar. Eng. Technol. 2017, 16, 61–69. [Google Scholar] [CrossRef]

- Drouot, M.; Le Bigot, N.; Bricard, E.; De Bougrenet, J.L.; Nourrit, V. Augmented reality on industrial assembly line: Impact on effectiveness and mental workload. Appl. Ergon. 2022, 103, 103793. [Google Scholar] [CrossRef]

- Castiblanco Jimenez, I.A.; Marcolin, F.; Ulrich, L.; Moos, S.; Vezzetti, E.; Tornincasa, S. Interpreting emotions with EEG: An experimental study with chromatic variation in VR. In Proceedings of the International Joint Conference on Mechanics, Design Engineering & Advanced Manufacturing, Ischia, Italy, 1–3 June 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 318–329. [Google Scholar]

- Castiblanco Jimenez, I.A.; Gomez Acevedo, J.S.; Olivetti, E.C.; Marcolin, F.; Ulrich, L.; Moos, S.; Vezzetti, E. User engagement comparison between advergames and traditional advertising using EEG: Does the user’s engagement influence purchase intention? Electronics 2022, 12, 122. [Google Scholar] [CrossRef]

- Castiblanco Jimenez, I.A.; Nonis, F.; Olivetti, E.C.; Ulrich, L.; Moos, S.; Monaci, M.G.; Marcolin, F.; Vezzetti, E. Exploring User Engagement in Museum Scenario with EEG—A Case Study in MAV Craftsmanship Museum in Valle d’Aosta Region, Italy. Electronics 2023, 12, 3810. [Google Scholar] [CrossRef]

| AI Keywords | Application Keywords | Context Keywords |
|---|---|---|
| Artificial Intelligence | Body Tracking | Manufacturing |
| Machine Learning | Body Recognition | Assembly Line |
| Deep Learning | Motion Capture | Industrial |
| | Gesture Recognition | |
| | Postural Monitoring | |
| | Ergonomics | |

| Application Keywords | Context Applications |
|---|---|
| Mental Ergonomics | Assembly Line |
| Mental Workload | Manufacturing |
| Work-Related Stress | Workplace Design |
| Cognitive Ergonomics | |
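
The keyword groups listed above can be combined into Boolean search strings. The following is a minimal sketch only: it assumes that terms within a column are joined with OR and that the columns are joined with AND. The review does not state the exact query syntax submitted to Google Scholar or Scopus, and the helper names `or_group` and `build_query` are hypothetical.

```python
# Keyword groups transcribed from the two keyword tables.
PHYSICAL_GROUPS = [
    ["Artificial Intelligence", "Machine Learning", "Deep Learning"],
    ["Body Tracking", "Body Recognition", "Motion Capture",
     "Gesture Recognition", "Postural Monitoring", "Ergonomics"],
    ["Manufacturing", "Assembly Line", "Industrial"],
]
COGNITIVE_GROUPS = [
    ["Mental Ergonomics", "Mental Workload", "Work-Related Stress",
     "Cognitive Ergonomics"],
    ["Assembly Line", "Manufacturing", "Workplace Design"],
]


def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(groups):
    """Join one OR-group per keyword column with AND (assumed rule)."""
    return " AND ".join(or_group(group) for group in groups)


physical_query = build_query(PHYSICAL_GROUPS)
cognitive_query = build_query(COGNITIVE_GROUPS)
print(physical_query)
print(cognitive_query)
```

Keeping each column as its own list makes it straightforward to add or drop a keyword without editing the query string by hand.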

| Authors (Year) | Application Context | Monitored Activity | Acquisition Device | Device Model | ML/DL | Alg. | Ergo. | Std. Ergo. Index/ Phys. Measure |

|---|---|---|---|---|---|---|---|---|

| Pławiak et al. (2016) [21] | Hand gestures analysis (body language) | Gesture recognition | Wearable IMUs | DG5 VHand glove (DGTech Engineering Solutions, Bazzano, BO, Italy) | ML | SVM (nu-SVC) | N/A | - |

| Grzeszick et al. (2017) [22] | Process order picking (warehouse settings) | Pose estimation | Wearable IMUs | Not specified | DL | CNN | N/A | - |

| Luo et al. (2018) [23] | Collaborative human–robot work in a shared workspace | Human motion prediction | Optical IR marker-based motion capture system | Vicon system (Oxford Metrics, UK)—not specified | ML | GMM | N/A | - |

| Moya Rueda et al. (2018) [24] | Locomotion gestures and settings in a warehouse (order picking in a logistics scenario) | Human activity recognition | Wearable IMUs | Not specified | DL | CNN | N/A | - |

| Urgo et al. (2019) [25] | Correctness of movement and safe behaviour in manufacturing mechanical components | Pose estimation and hand/tool tracking | RGB camera | Not specified | Both | HMM CNN (OpenPose) | N/A | - |

| Chen et al. (2020) [26] | Upper limb assembly action in an industrial setting | Pose estimation | RGB camera | Not specified | DL | YOLO CNN (OpenPose) | N/A | - |

| Jiao et al. (2020) [27] | Wind turbine blade manufacturing process | Pose estimation and action recognition | RGB camera | Not specified | DL | YOLO SHM STN GCN CNN | N/A | - |

| Manitsaris et al. (2020) [28] | TV assembly lines, the glassblowing industry, automated guided vehicles, and human–robot collaboration in the automotive assembly lines | Action recognition | (1) RGB camera (2) RGB-D camera | (1) Not specified (2) Not specified | ML | HMM | N/A | - |

| Phan et al. (2020) [29] | Stiffness along the wrist radial–ulnar deviation during a polishing task | Estimation of human operators’ joint stiffness | (1) Instrumented tool with force/torque sensors; (2) Wearable sEMG sensors | (1) ATI mini 40 loadcell and Motion capture VZ4000 (Phoenix Technologies Inc., Vancouver, BC, Canada); (2) BIOPAC MP150 Data Acquisition and Analysis System (BIOPAC Systems Inc., Goleta, CA, USA) | - | - | N/A | - |

| Xiong et al. (2020) [30] | Cleaning with lying and standing engine block | Action recognition | - | - | DL | CNN | N/A | - |

| Luipers et al. (2021) [31] | Collaborative human–robot work on the assembly of sensor cases | Human motion prediction | RGB-D camera (structured light) | Kinect (Microsoft Corporation, Redmond, WA, USA) | ML | GP MetaL ANN | N/A | - |

| Manns et al. (2021) [32] | Manual assembly of extruded aluminium profile in shop floor environments | Action recognition and human motion prediction | Wearable IMUs | (1) XSENS MVN Awinda (Movella Inc., Henderson, NV, USA); (2) Manus Prime II (Movella Inc., Henderson, NV, USA) | DL | LSTM | N/A | - |

| Niemann et al. (2021) [33] | Order picking and packaging activities | Pose estimation and action recognition | Optical IR marker-based motion capture system | Not specified | DL | tCNN | N/A | - |

| Papanagiotou et al. (2021) [34] | Collaborative human–robot work on LCD TV assembly | Pose estimation and gesture recognition | (1) RGB camera; (2) RGB-D camera | (1) GoPro hero9 (San Mateo, CA, USA); (2) Intel-RealSense RGB-D (Santa Clara, CA, USA), model not specified | DL | CNN (OpenPose) 3DCNN | N/A | - |

| Al-Amin et al. (2022) [35] | Assembly of a three-dimensional printer | Action recognition | Wearable IMUs | Myo armbands (Thalmic Labs, Waterloo, ON, Canada) | DL | CNN | N/A | - |

| Choi et al. (2022) [36] | Human–robot interaction in manufacturing and industrial fields | Pose estimation and Instance Segmentation | RGB-D camera—Time-of-Flight | Azure Kinect (Microsoft Corporation, Redmond, WA, USA) | DL | Mask-RCNN | N/A | - |

| De Feudis et al. (2022) [37] | Manual industrial assembly/disassembly procedure (power drill) | Pose estimation and hand/tool tracking | RGB-D camera—Time-of-Flight | Azure Kinect | DL | CNN (OpenPose) ArUco YOLO AKBT | N/A | - |

| Lima et al. (2022) [38] | Developing natural user interfaces to control a robotic arm | Hand state classification | RGB-D camera—Time-of-Flight | Kinect v2 | DL | LRCN | N/A | - |

| Mendes (2022) [39] | Assembly of electric motor in a human–robot collaboration environment | Gesture recognition | Wearable sEMG sensors | (1) Myo armbands; (2) sEMG prototype (Technaid S.L., Alcorcon, Spain) | Both | kNN CNN | N/A | - |

| Orsag et al. (2023) [40] | Collaborative human–robot work in an industrial environment | Action recognition and human motion prediction | Wearable IMUs | Combination Perception Neuron 32 Edition v2 (Miami, FL, USA) | DL | LSTM | N/A | - |

| Álvarez et al. (2016) [41] | Instruments for evaluation of the workers’ movements for employee injury and illness reduction (occupational health) | Pose estimation | Wearable IMUs | Model PTUD46 (Directed Perception) (Artisan TG, Champaign, IL, USA) | - | - | Physical | - |

| Fletcher et al. (2018) [42] | Aircraft wing system installations | Integration of human activity data and ergonomic analysis for digital design modelling and system monitoring | Wearable IMUs | IGS-Bio v1.8 (Animazoo Ltd., Hove, UK) | - | - | Physical | REBA |

| Golabchi et al. (2018) [43] | Construction job site | Pose estimation and action recognition | RGB camera | Not specified | DL | kNN | Physical | - |

| Nath et al. (2018) [44] | Warehouse operation (transport and loading an item) | Human activity recognition | Smartphone IMUs | Google Nexus 5X, Google Nexus 6 (Mountain View, CA, USA) | ML | SVM | Physical | - |

| Grandi et al. (2019) [45] | Workstation layout and the working cycle using digital manufacturing tools (manual assembly of cabin supports on the chassis of a tractor) | Pose estimation | - | - | - | - | Physical | EAWS |

| Maurice et al. (2019) [46] | Industry-oriented activities (car manufacturing) | Pose estimation | (1) Wearable IMUs; (2) Optical IR marker-based motion capture system; (3) Flexion and force sensor; (4) RGB camera | (1) Xsens MVN Link system (Xsens MVN whole-body Lycra suits); (2) Qualisys motion capture system (Qualisys, Goteborg, Sweden); (3) E-glove (Emphasis Telematics, Athens, Greece); (4) Not specified | Both | HMM CNN (OpenPose) | Physical | EAWS |

| Conforti et al. (2020) [47] | Manual material handling tasks | Pose estimation | Wearable IMUs | MIMUs MTw (Xsens Technologies) | ML | SVM | Physical | - |

| Massiris Fernández et al. (2020) [48] | Outdoor working scenarios (performing Marshall signs to an airplane, wall plastering and hammering work activities, tree cutting and drilling job) | Pose estimation | RGB camera | Not specified | DL | CNN (OpenPose) | Physical | RULA |

| Peruzzini et al. (2020) [49] | Assembly of the air cabin filters | Pose estimation | (1) Multi-parametric wearable sensor for real-time vital parameters monitoring; (2) RGB camera; (3) Optical IR marker-based motion capture system | (1) Zephyr BioHarness 3.0 (Medtronic, Minneapolis, MN, USA); (2) GoPro Hero3; (3) VICON Bonita cameras | - | - | Physical | OWAS, REBA, RULA |

| Dimitropoulos et al. (2021) [50] | Collaborative work in the elevator production sector | Pose estimation and action recognition | RGB-D camera—Time-of-Flight | Azure Kinect | DL | CNN | Physical | RULA |

| Mazhar et al. (2021) [51] | Human–robot interaction in social or industrial settings | Pose estimation and gesture recognition | RGB-D camera—Time-of-Flight | Kinect v2 | DL | CNN (OpenPose) | Physical | - |

| Mudiyanselage et al. (2021) [52] | Measurement of muscle activity while performing manual material handling | Pose estimation | Wearable sEMG sensors | Noraxon Mini DTS (Scottsdale, AZ, USA) | ML | Decision Tree SVM KNN Random forest | Physical | - |

| Ciccarelli et al. (2022) [53] | Posture classification in manufacturing settings (kitchen manufacturing) | Pose estimation | Wearable IMUs | Xsens MTw (Wireless Motion Tracker) | DL | CNN | Physical | RULA |

| Generosi et al. (2022) [54] | Manufacturing work operations (postures, hand grip types, and body segments) | Pose estimation | RGB camera | iPhone XS (Apple, Cupertino, CA, USA) | DL | CMU CNN (Mediapipe) | Physical | REBA, RULA, OCRA, OWAS |

| Guo et al. (2022) [55] | Building a virtual scenario for industrial maintenance and assembly process (satellite manufacturing) | Pose estimation | Optical IR marker-based motion capture system | Not specified | - | - | Physical | RULA |

| Kačerová et al. (2022) [56] | Implementation of ergonomic changes in working position (upper limb loading in the assembly workplace) | Pose estimation | Wearable IMUs | MoCap suit Perception Neuron Studio (Noitom Inc., Miami, FL, USA) | - | - | Physical | - |

| Lin et al. (2022) [57] | Video-based motion capture and force estimation frameworks for comprehensive ergonomic risk assessment | Pose estimation | (1) RGB camera; (2) Optical IR marker-based motion capture system | (1) GoPro HERO 6; (2) Vicon system—not specified | DL | CNN (OpenPose) | Physical | OWAS, REBA, RULA |

| Lorenzini et al. (2022) [58] | Kinematic/dynamic monitoring of physical load | Pose estimation | (1) Wearable IMUs; (2) Integrated piezoelectric force platforms; (3) Wearable sEMG sensors | (1) Xsens MVN suit; (2) Kistler force plate (Kistler Holding AG, Winterthur, Switzerland); (3) Delsys Trigno Wireless platform (Delsys Inc., Natick, MA, USA) | - | - | Physical | EAWS |

| Nunes et al. (2022) [59] | Posture evaluation in industrial settings (automotive assembly line) | Pose estimation | Wearable IMUs | Kallisto IMUs (Sensry Gmbh, Dresden, Germany); MVN Awinda (Xsens, Enschede, The Netherlands) | - | - | Physical | EAWS |

| Panariello et al. (2022) [60] | Execution of overhead industrial task (an overhead drilling task) | Pose estimation | (1) Optical IR marker-based motion capture system; (2) Integrated strain gauge force platforms; (3) Wearable sEMG sensors | (1) SMART DX 6000 (BTS Bioengineering, Garbagnate Milanese, Milano, Italy); (2) P600, BTS Bioengineering; (3) FREEEMG 1000 and 300, BTS Bioengineering | - | - | Physical | RULA |

| Paudel et al. (2022) [61] | Human body joints estimation for ergonomics (manufacturing settings) | Pose estimation | RGB camera | Not specified | DL | 3DMPPE YOLO | Physical | OWAS, REBA, RULA |

| Vianello et al. (2022) [62] | Online ergonomic feedback to industrial operators with/without interaction with a robot | Pose estimation and action recognition | Wearable IMUs | Xsens MVN suit | DL | VAE | Physical | RULA |

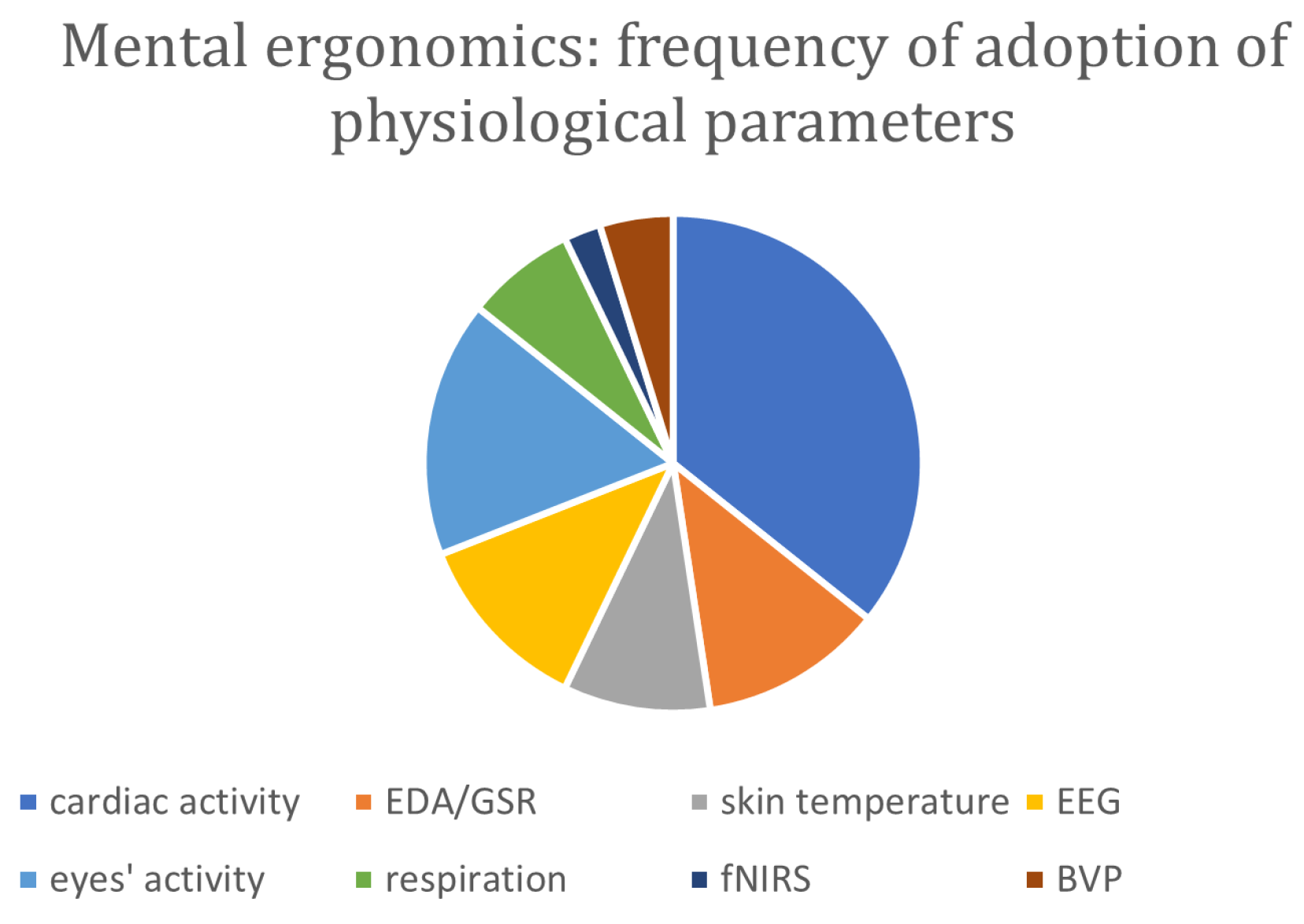

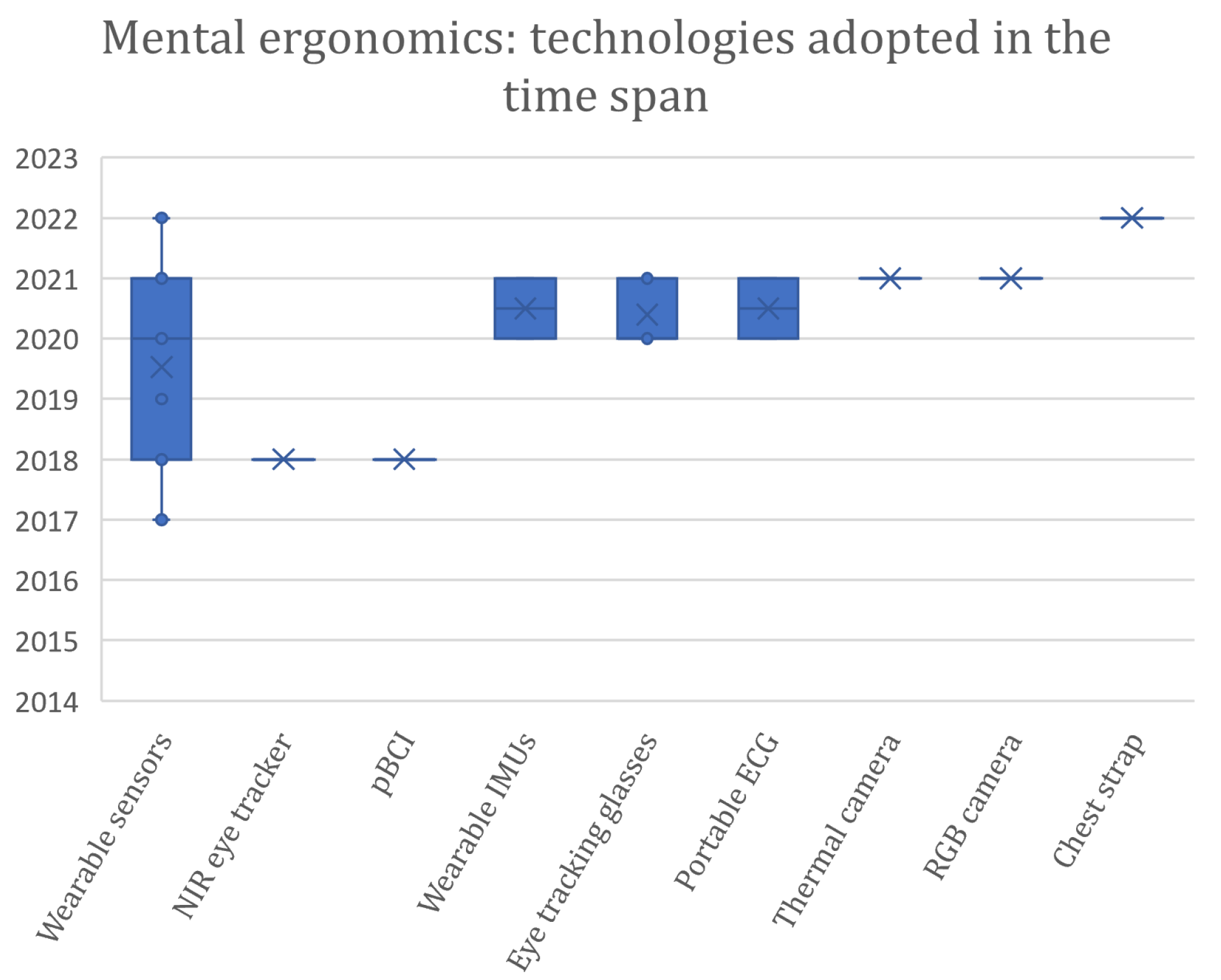

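| Authors (Year) | Application Context | Monitored Activity | Acquisition Device | Device Model | ML/DL | Alg. | Ergo. | Std. Ergo. Index/ Phys. Measure |

|---|---|---|---|---|---|---|---|---|

| Mattsson et al. (2017) [63] | Worker well-being evaluation in manufacturing setting | Mental load measurement | Wearable sensors | Qsensor, Breathing Activity Device, Smart Band 2 (Xiaomi, Beijing, China) | - | - | Mental | HRV, BVP, EDA, skin temperature, respiration |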

| Nardolillo et al. (2017) [64] | Fatigue pattern evaluation in different aged workers (construction, manufacturing) | Mental/physical fatigue | Wearable sensors | Polar heart rate monitor (Polar Electro Oy, Kempele, Finland) | - | - | Mental/physical | HRV |

| Chen et al. (2017) [65] | Mental workload assessment in construction workers | Mental load assessment | Wearable sensors | Prototype wearable EEG helmet | - | - | Mental | EEG |

| Cheema et al. (2018) [66] | Mental load assessment in multitasking activities (HRC) | Mental load assessment | Wearable sensors | Emotiv Epoc+ (Emotiv, San Francisco, CA, USA) | ML | RF | Mental | EEG |

| Hwang et al. (2018) [67] | Emotional assessment in construction workers | Mental load assessment | Wearable sensors | Not specified | - | - | Mental | EEG |

| Bommer et al. (2018) [68] | Mental workload assessment in manufacturing settings | Mental load assessment | Near infrared eye tracker | Tobii e T 120 (Tobii, Danderyd, Sweden) | - | - | Mental | eye tracking |

| D’Addona et al. (2018) [69] | Mental load assessment in manufacturing | Mental load assessment | Passive brain–computer interface (pBCI) | - | - | - | Mental | EEG |

| Landi et al. (2018) [70] | Mental workload assessment in industrial settings | Mental load assessment for affective robotics | Wearable sensors | Samsung Gear S (Samsung, Suwon, Republic of Korea) | - | - | Mental | HRV |

| Kosch et al. (2019) [71] | Workload evaluation in manual assembly | Mental load assessment | Wearable sensors | Empatica E4 (Empatica, Boston, MA, USA) | - | - | Mental | EDA |

| Arpaia et al. (2020) [72] | Mental workload in working environments | Mental load assessment | Wearable sensors | EEG-SMT Olimex (Olimex, Plovdiv, Bulgaria) | Both | SVM, kNN, RF, ANN | Mental | EEG |

| Bettoni et al. (2020) [73] | Mental workload assessment in manufacturing settings for adaptive HRC | Mental load assessment | Wearable sensors | Polar heart rate monitor, Empatica E4 | ML | RF | Mental | HRV, EDA, skin temperature |

| Lee et al. (2020) [74] | Evaluation of burnout influence on performance in unskilled construction workers | Burnout assessment | (1) Wearable sensors, (2) Wearable IMUs | (1) Zephyr BioHarness3, (2) ActiGraph GT9X (ActiGraph LLC, Pensacola, FL, USA) | - | - | Mental/physical | HR |

| Papetti et al. (2020) [75] | Integration of physical and mental well-being in industrial settings | Improvement of workers’ well-being | Wearable sensors, eye-tracking glasses | Not specified | - | - | Mental/ physical | HR, HRV, respiration, pupil diameter, eye blink |

| Van Acker et al. (2020) [76] | Mental workload measurement in manual assembly | Mental load assessment | Eye-tracking glasses | SMI ETG 2w (SensoMotoric Instruments, Teltow, Germany) | - | - | Mental | Pupillometry |

| Chen et al. (2020) [26] | Mental workload measurement in HRC | Mental load assessment | Eye-tracking glasses | Tobii pro glasses2 | - | - | Mental | Pupillometry |

| Digiesi et al. (2020) [77] | Mental load assessment in smart operators (manufacturing activities) | Mental load assessment | ECG monitor | BITalino plugged kit (PLUX wireless biosignals, Lisboa, Portugal) | - | - | Mental | HRV |

| Mahmad Khairai et al. (2021) [78] | Work stress assessment in assembly line workers | Mental load assessment | Wearable sensors | EmWavePro (HeartMath, Boulder Creek, CA, USA) | - | - | Mental | HRV |

| Hopko et al. (2021) [79] | Evaluation of mental workload and cognitive fatigue in HRC | Mental load assessment | Wearable sensors | Actiheart 5 (CamNTech, Fenstanton, Cambridgeshire, UK) | - | - | Mental | HRV |

| Argyle et al. (2021) [80] | Analysis of fatigue and cognitive state in digital manufacturing | Mental load assessment | (1) Wearable sensors, (2) Thermal camera, (3) RGB camera | (1) Artinis Octamon+ (Artinis Medical Systems, Elst, The Netherlands), Zephyr BioHarness3, (2) FLIR A65sc (Teledyne FLIR, Wilsonville, Oregon, USA), (3) Not specified | - | - | Mental | fNIRS, HR, respiration, skin temperature |

| Brunzini et al. (2021) [81] | Analysis of ergonomics of operators in manual assembly | Workload assessment for HCD of industrial processes | (1) Eye-tracking glasses, (2) Wearable sensors, (3) Wearable IMUs | (1) Tobii glasses2, (2) Empatica E4, (3) Vive trackers 3.0 (HTC, Taoyuan, Taiwan) | - | - | Mental/ physical | RULA, HR, EDA, pupillometry |

| Bläsing et al. (2021) [82] | Mental workload assessment in manual assembly with assistance system | Mental load assessment | (1) Portable ECG monitor, (2) Eye-tracking glasses | (1) Faros eMotion 180 (BlindSight Gmbh, Fröndenberg, Germany), (2) SMI ETG 2w | - | - | Mental | ECG, eye tracking |

| Lin et al. (2022) [83] | Mental workload prediction in HRC | Mental workload assessment | Wearable sensors | Smartwatch DTA-S50 (DTAudio, Taiwan) | ML | RF | Mental | GSR, skin temperature, HR, BVP |

| Mach et al. (2022) [84] | Mental workload measurement for application in industry | Mental load assessment | (1) Wearable sensors, (2) Chest strap | (1) Samsung Gear S3, (2) Garmin premium heart rate monitor (Garmin Ltd., Olathe, KS, USA) | - | - | Mental | HR |

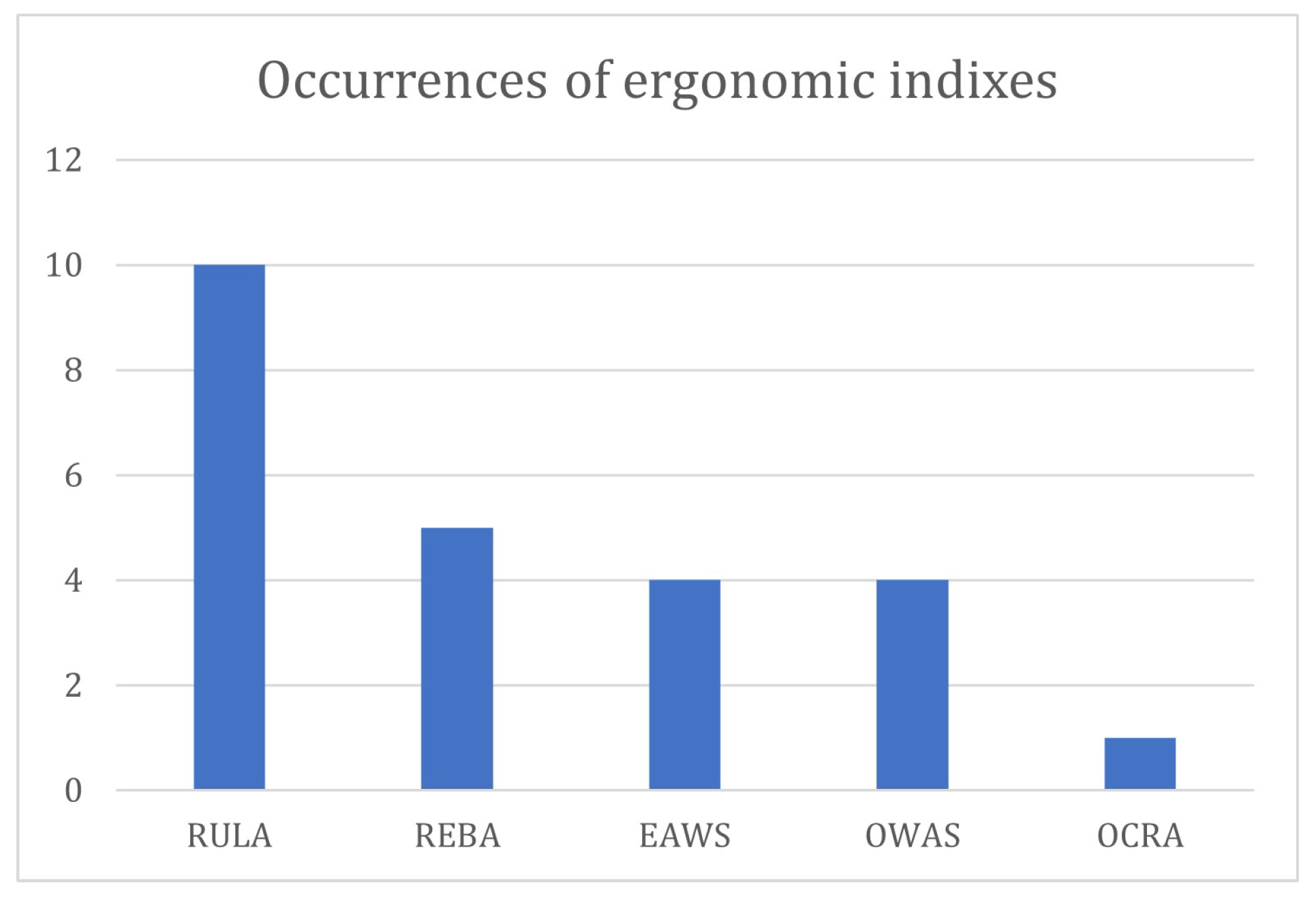

| Author | Reference | Year | RULA | REBA | EAWS | OWAS | OCRA | Other |
|---|---|---|---|---|---|---|---|---|
| Álvarez et al. | [41] | 2016 | | | | | | X |
| Fletcher et al. | [42] | 2018 | | X | | | | |
| Golabchi et al. | [43] | 2018 | | | | | | X |
| Nath et al. | [44] | 2018 | | | | | | X |
| Grandi et al. | [45] | 2019 | | | X | | | |
| Maurice et al. | [46] | 2019 | | | X | | | |
| Conforti et al. | [47] | 2020 | | | | | | X |
| Massiris Fernández et al. | [48] | 2020 | X | | | | | |
| Peruzzini et al. | [49] | 2020 | X | X | | X | | |
| Dimitropoulos et al. | [50] | 2021 | X | | | | | |
| Mazhar et al. | [51] | 2021 | | | | | | X |
| Mudiyanselage et al. | [52] | 2021 | | | | | | X |
| Ciccarelli et al. | [53] | 2022 | X | | | | | |
| Generosi et al. | [54] | 2022 | X | X | | X | X | |
| Guo et al. | [55] | 2022 | X | | | | | |
| Kačerová et al. | [56] | 2022 | | | | | | X |
| Lin et al. | [57] | 2022 | X | X | | X | | |
| Lorenzini et al. | [58] | 2022 | | | X | | | |
| Nunes et al. | [59] | 2022 | | | X | | | |
| Panariello et al. | [60] | 2022 | X | | | | | |
| Paudel et al. | [61] | 2022 | X | X | | X | | |
| Vianello et al. | [62] | 2022 | X | | | | | X |
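
To see at a glance how often each standard ergonomic index appears among the physical-ergonomics studies, the per-study entries can be tallied. The sketch below is illustrative only: the data are transcribed from the "Std. Ergo. Index/ Phys. Measure" column of the summary table of physical-ergonomics studies, studies listed there with "-" are omitted, and the variable names are hypothetical.

```python
from collections import Counter

# Standard indices per study, transcribed from the summary table rows
# labelled "Physical"; rows whose index entry is "-" are left out.
INDICES_BY_STUDY = {
    "Fletcher et al. (2018) [42]": ["REBA"],
    "Grandi et al. (2019) [45]": ["EAWS"],
    "Maurice et al. (2019) [46]": ["EAWS"],
    "Massiris Fernández et al. (2020) [48]": ["RULA"],
    "Peruzzini et al. (2020) [49]": ["OWAS", "REBA", "RULA"],
    "Dimitropoulos et al. (2021) [50]": ["RULA"],
    "Ciccarelli et al. (2022) [53]": ["RULA"],
    "Generosi et al. (2022) [54]": ["REBA", "RULA", "OCRA", "OWAS"],
    "Guo et al. (2022) [55]": ["RULA"],
    "Lin et al. (2022) [57]": ["OWAS", "REBA", "RULA"],
    "Lorenzini et al. (2022) [58]": ["EAWS"],
    "Nunes et al. (2022) [59]": ["EAWS"],
    "Panariello et al. (2022) [60]": ["RULA"],
    "Paudel et al. (2022) [61]": ["OWAS", "REBA", "RULA"],
    "Vianello et al. (2022) [62]": ["RULA"],
}

# Count how many studies report each index.
index_counts = Counter(
    index for indices in INDICES_BY_STUDY.values() for index in indices
)

for index, count in index_counts.most_common():
    print(f"{index}: {count}")
```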

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Antonaci, F.G.; Olivetti, E.C.; Marcolin, F.; Castiblanco Jimenez, I.A.; Eynard, B.; Vezzetti, E.; Moos, S. Workplace Well-Being in Industry 5.0: A Worker-Centered Systematic Review. Sensors 2024, 24, 5473. https://doi.org/10.3390/s24175473
