Smart Ship Draft Reading by Dual-Flow Deep Learning Architecture and Multispectral Information

Abstract

1. Introduction

- This paper combines near-infrared (NIR) and RGB images for automatic draft reading, using their complementary spectral information to reduce the influence of water surface conditions on the reading.

- A dual-branch backbone, Band Information Fusion (BIF), is introduced to extract paired features from RGB and NIR images; these features serve multiple downstream tasks such as waterline segmentation and character recognition.

- Compared with previous research, our method achieved the best results in both draft detection and waterline segmentation, with an mAP of 99.2% and an mIoU of 99.3%, respectively. In addition, our draft reading error relative to the ground truth is less than 0.01 m, the highest accuracy among all evaluated methods.

2. Materials and Methods

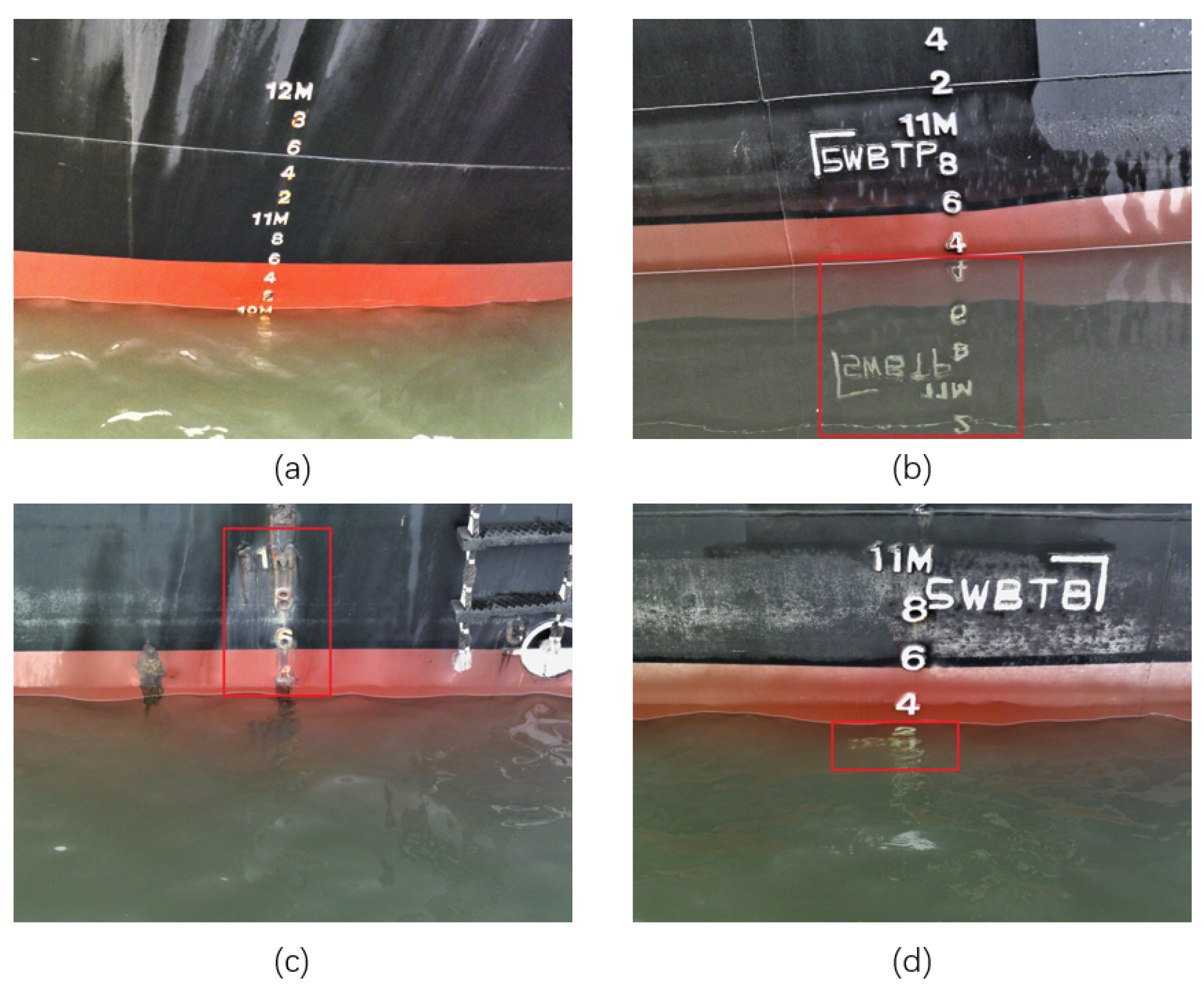

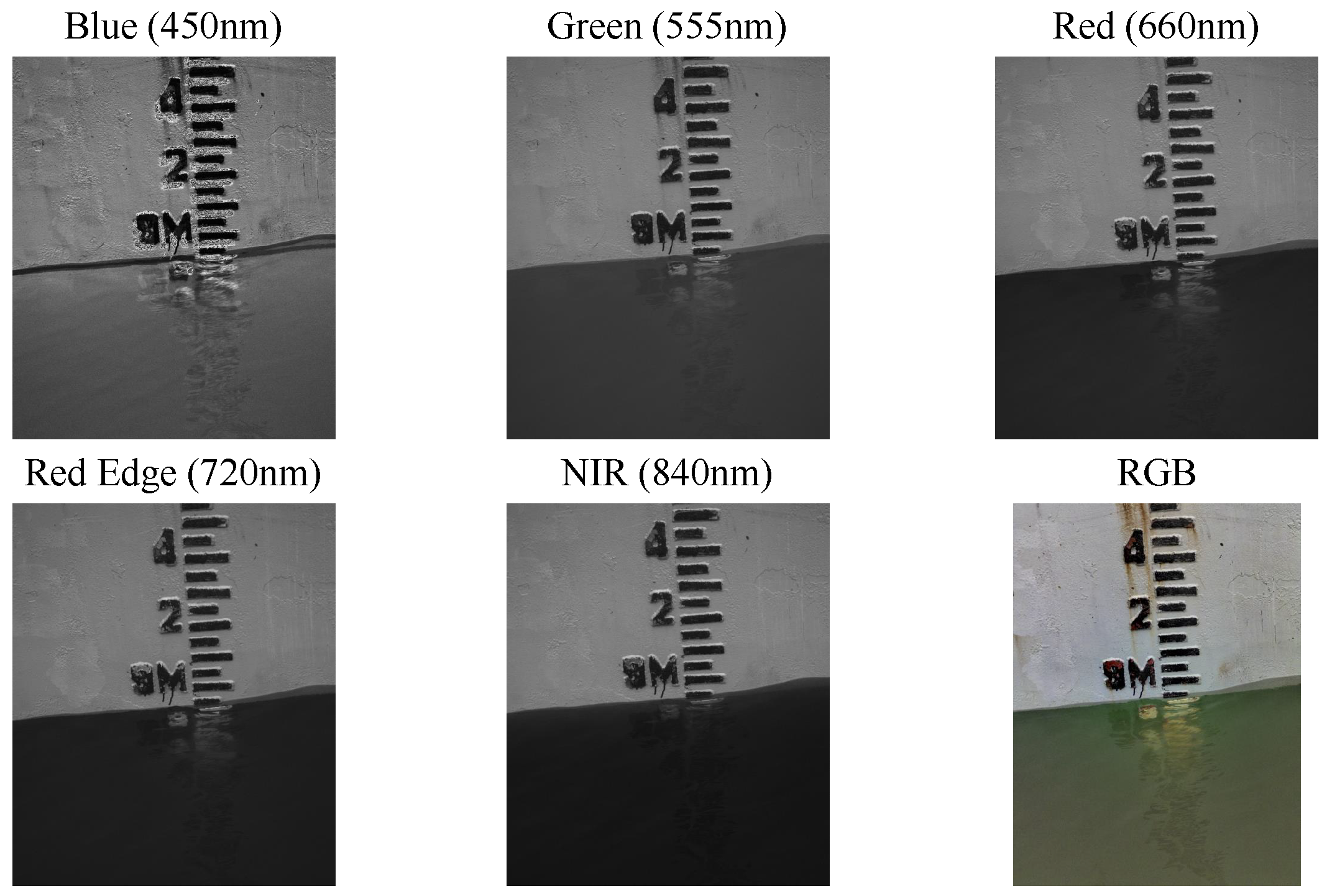

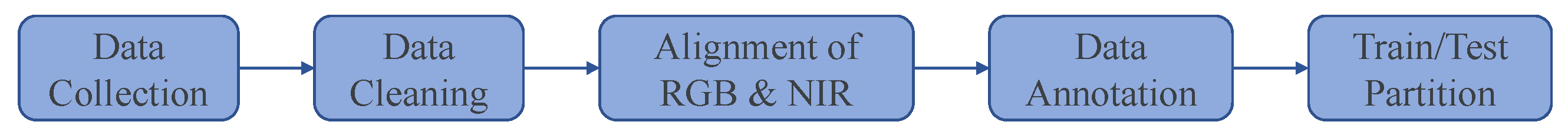

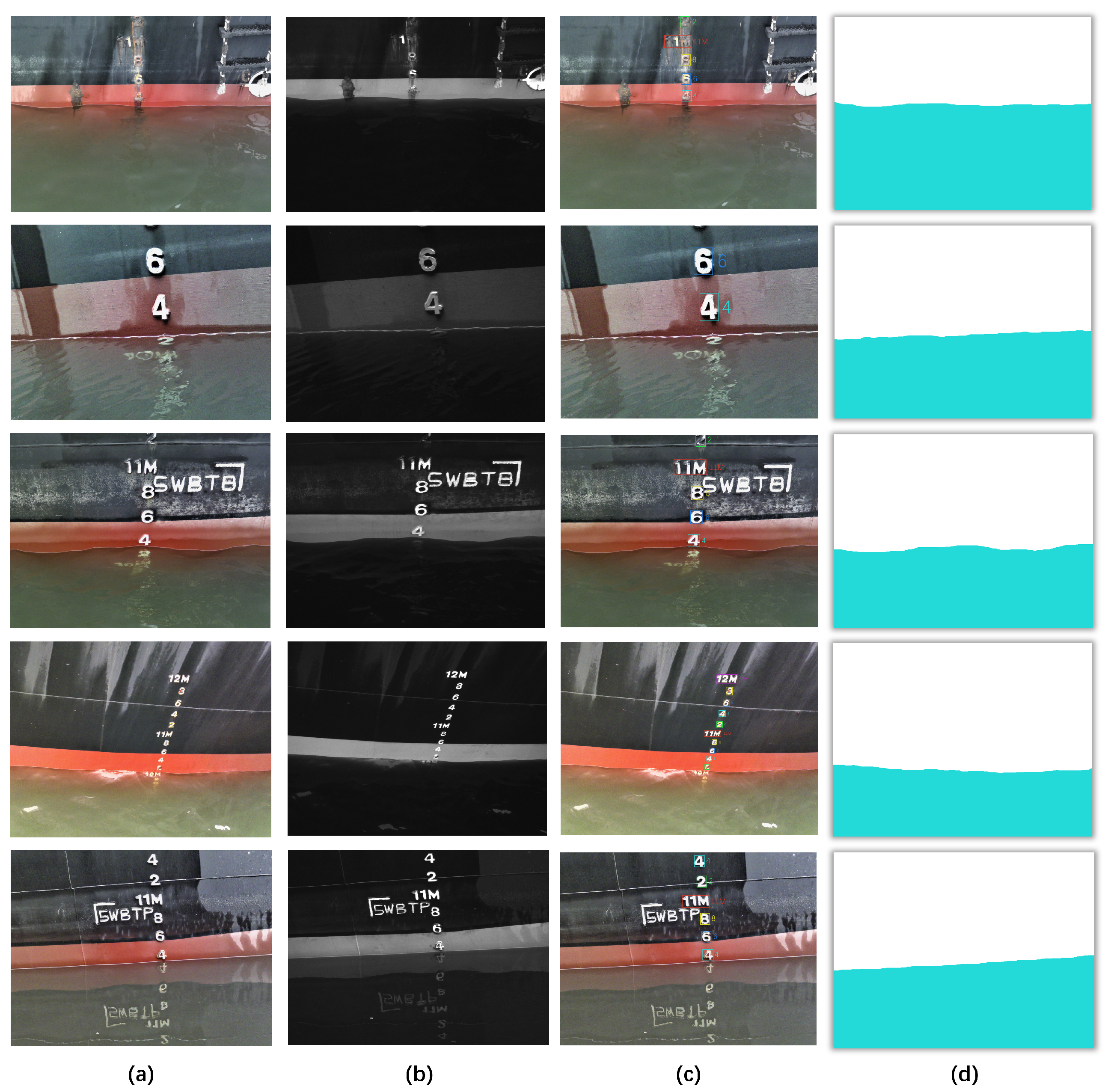

2.1. Materials

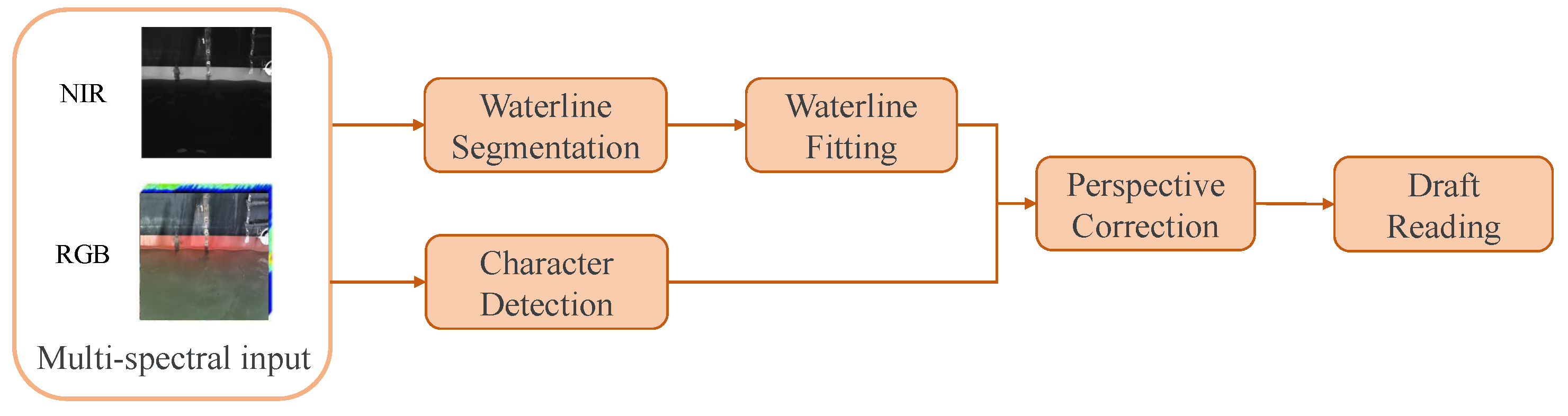

2.2. Methodology

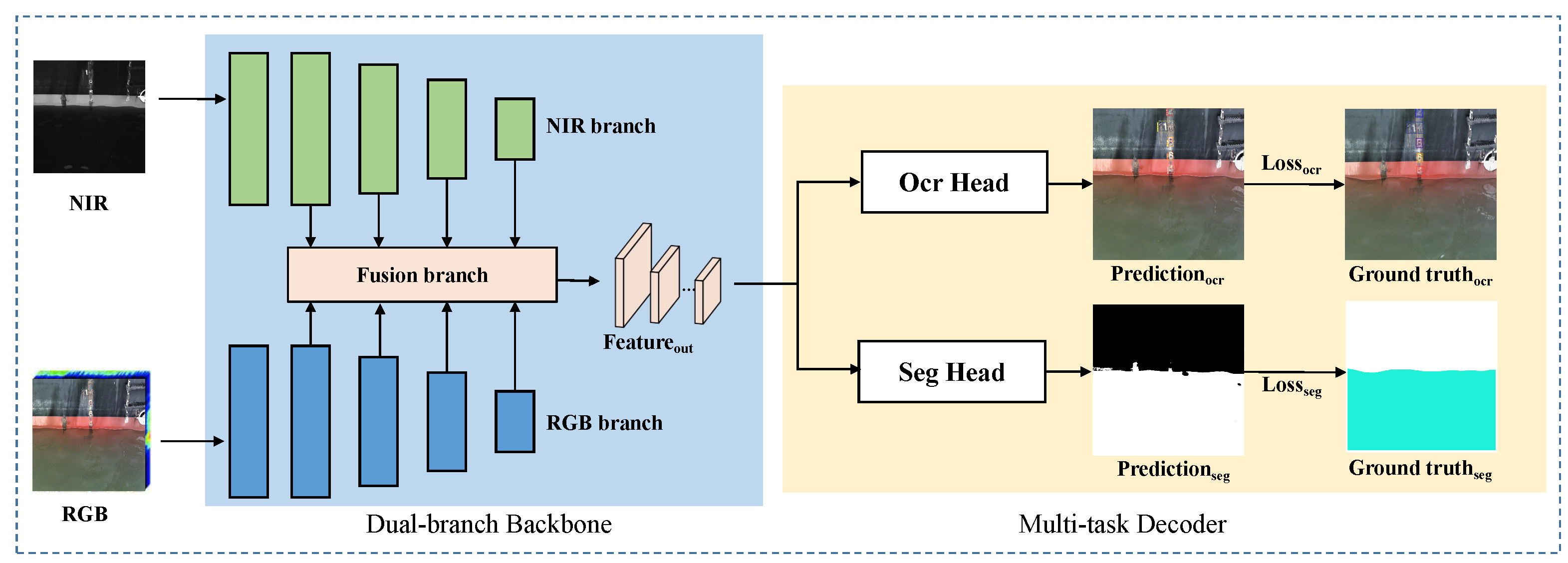

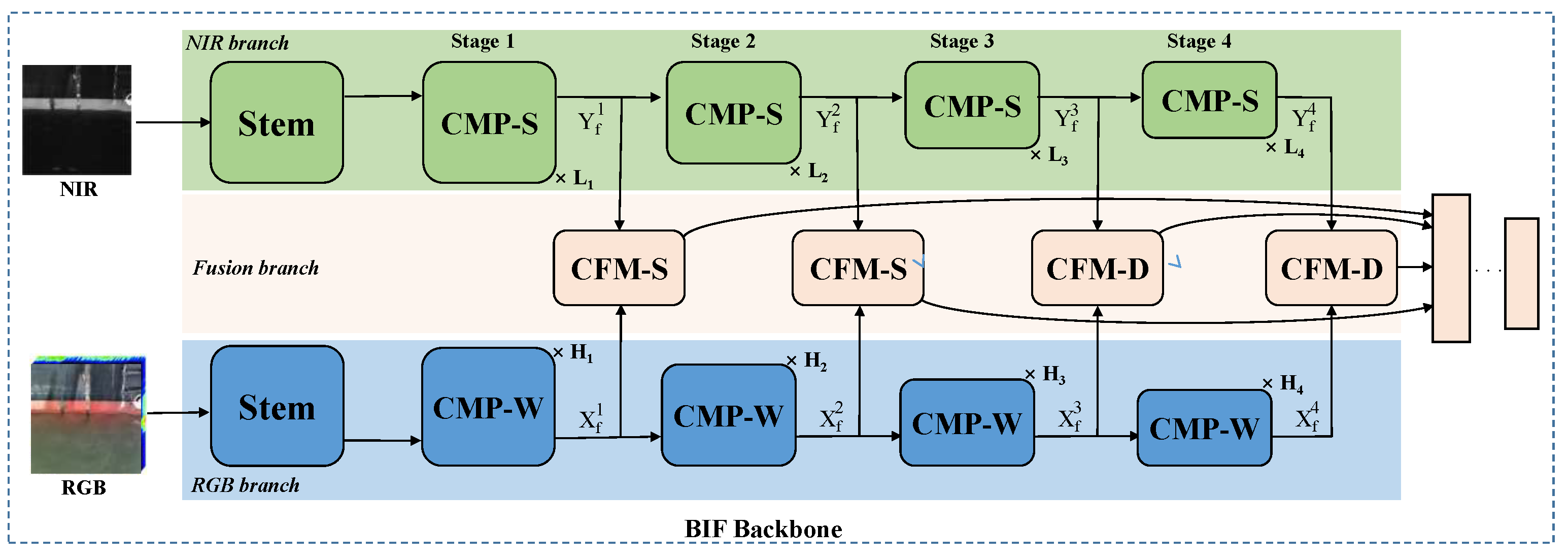

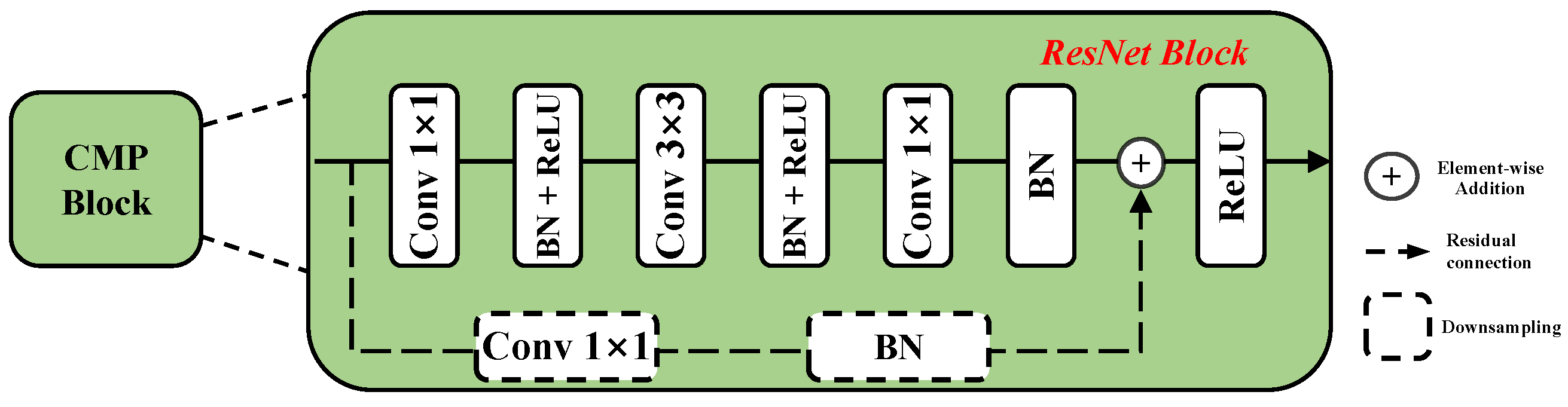

2.2.1. Band Information Fusion Framework

2.2.2. Waterline Fitting

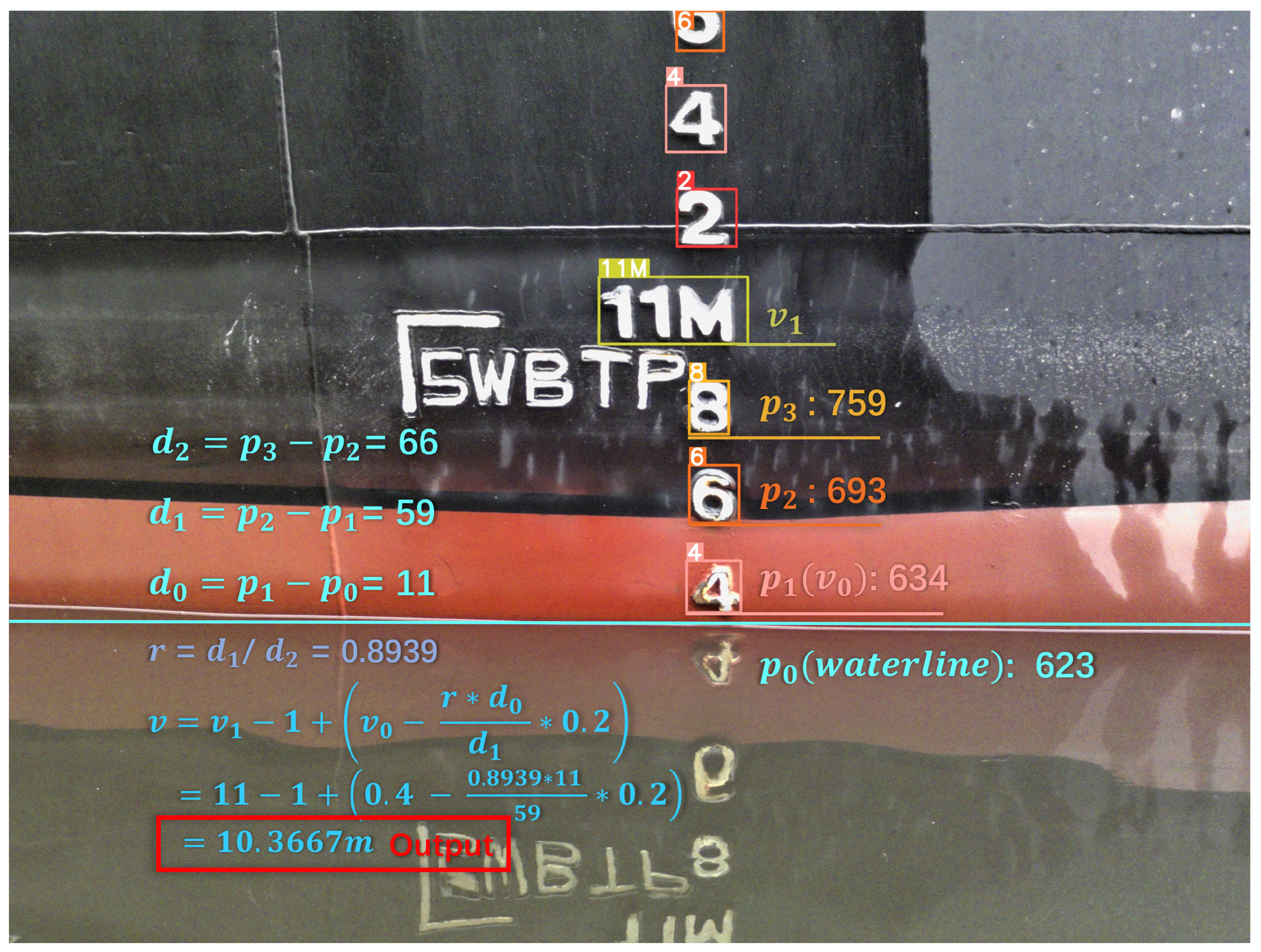

2.2.3. Perspective Correction and Reading

3. Results

3.1. Evaluation Metrics

3.2. Experimental Setup

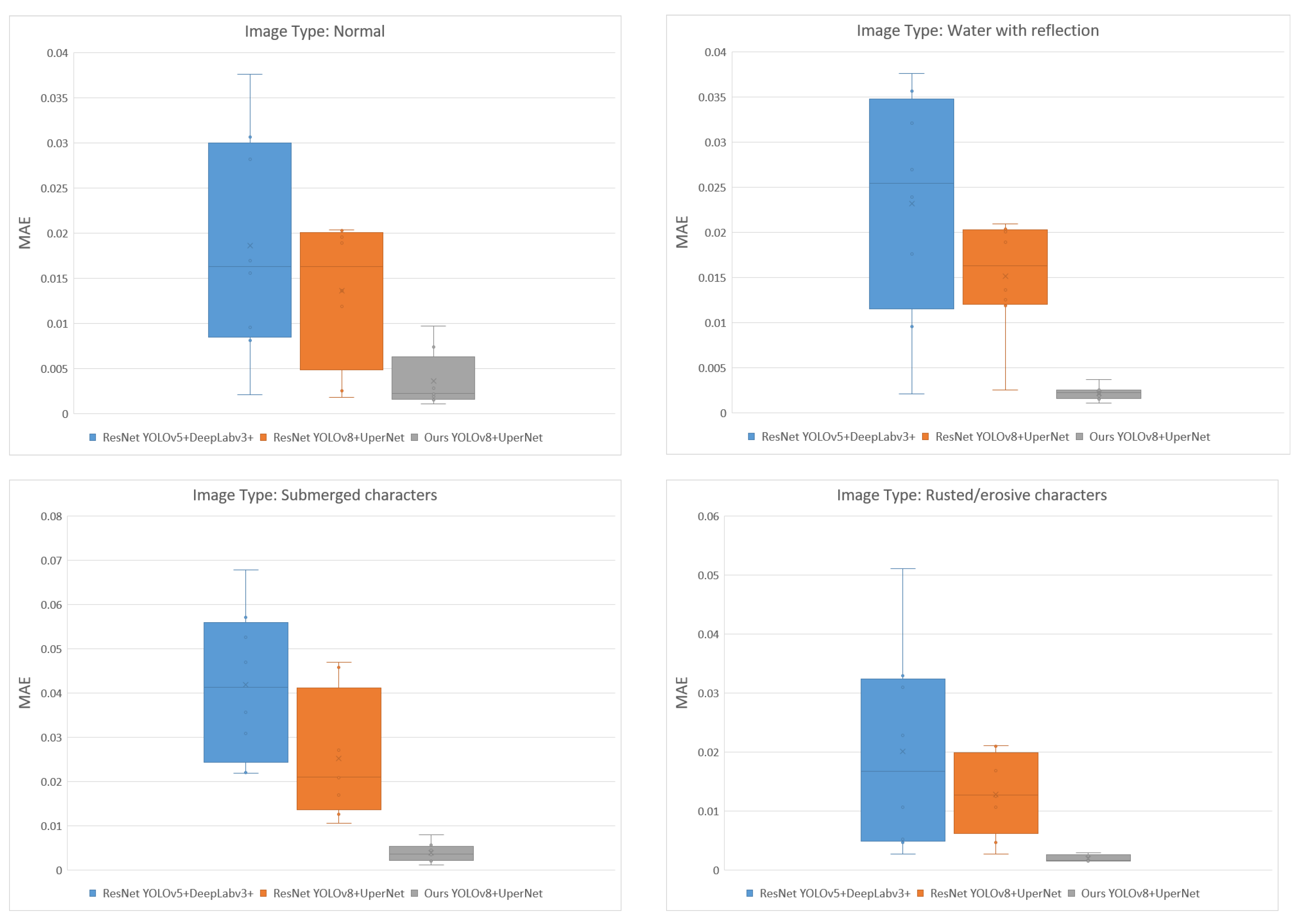

3.3. Algorithm Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Band No. | Name | Center Wavelength | Bandwidth |
|---|---|---|---|
| 1 | Blue | 450 nm | 35 nm |
| 2 | Green | 555 nm | 25 nm |
| 3 | Red | 660 nm | 22.5 nm |
| 4 | Red Edge | 720 nm | 10 nm |
| 5 | NIR | 840 nm | 30 nm |
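As a minimal sketch of how the band table above could be used to separate the two input streams, the following Python snippet maps each band number to its name and splits one five-band sample into an RGB triple and an NIR value. The names `BANDS`, `band_index`, and `split_rgb_nir`, and the assumption that values are stored in band-number order, are illustrative only; the source does not specify how the multispectral data are laid out.

```python
# Band table as listed above: band number -> (name, center wavelength nm, bandwidth nm).
BANDS = {
    1: ("Blue", 450.0, 35.0),
    2: ("Green", 555.0, 25.0),
    3: ("Red", 660.0, 22.5),
    4: ("Red Edge", 720.0, 10.0),
    5: ("NIR", 840.0, 30.0),
}


def band_index(name):
    """Return the zero-based position of a named band, assuming band-number order."""
    for number, (band_name, _center, _width) in BANDS.items():
        if band_name == name:
            return number - 1
    raise KeyError(name)


def split_rgb_nir(pixel):
    """Split one five-band sample into an (R, G, B) tuple and a single NIR value.

    Hypothetical layout: `pixel` holds one value per band, ordered by band number.
    """
    if len(pixel) != len(BANDS):
        raise ValueError("expected one value per band")
    rgb = tuple(pixel[band_index(n)] for n in ("Red", "Green", "Blue"))
    nir = pixel[band_index("NIR")]
    return rgb, nir


sample = [0.10, 0.20, 0.30, 0.40, 0.50]  # made-up values for bands 1..5
rgb, nir = split_rgb_nir(sample)
```

In this sketch the Red Edge band is simply left unused, since the method described here works with RGB and NIR inputs.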

| Model | Epoch | Batch Size | Learning Rate | Optimizer |
|---|---|---|---|---|
| Our YOLOv5 | 100 | 8 | 0.001 | ADAM |
| Our YOLOv8 | 100 | 8 | 0.1 | ADAM |
| Our DeepLabv3+ | 100 | 8 | 0.005 | SGD |
| Our UPerNet | 100 | 8 | 0.02 | SGD |

| Backbone | Input Type | Model | mAP (%) |
|---|---|---|---|
| ResNet-50 | RGB | YOLOv5 | 94.1 |
| ResNet-50 | RGB | YOLOv8 | 96.7 |
| ResNet-50 | RGB + NIR | YOLOv5 | 95.0 |
| ResNet-50 | RGB + NIR | YOLOv8 | 97.9 |
| Ours | RGB + NIR | YOLOv5 | 95.9 |
| Ours | RGB + NIR | YOLOv8 | 99.2 |

| Backbone | Input Type | Model | mIoU (%) |
|---|---|---|---|
| ResNet-50 | RGB | DeepLabv3+ | 98.0 |
| ResNet-50 | RGB | UPerNet | 98.4 |
| ResNet-50 | RGB + NIR | DeepLabv3+ | 98.3 |
| ResNet-50 | RGB + NIR | UPerNet | 98.9 |
| Ours | RGB + NIR | DeepLabv3+ | 99.0 |
| Ours | RGB + NIR | UPerNet | 99.3 |
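For readers unfamiliar with the metric in the table above, mIoU is conventionally the per-class intersection over union between predicted and ground-truth pixels, averaged over classes. The sketch below assumes this standard definition applies here. The function name `mean_iou`, the two-class setup, and the toy labels are illustrative assumptions and not the authors' evaluation code.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union across classes that occur in pred or target."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for cls in range(num_classes):
        # Pixels labelled `cls` in both prediction and ground truth.
        intersection = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
        # Pixels labelled `cls` in either prediction or ground truth.
        union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
        if union > 0:  # skip classes absent from both prediction and ground truth
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with made-up labels: 0 = background, 1 = water.
target = [0, 0, 0, 1, 1, 1, 1, 1]
pred = [0, 0, 1, 1, 1, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
```

Here the background class scores 2/3 and the water class 5/6, so `score` evaluates to 0.75. A single mislabelled pixel near the waterline lowers both class scores at once.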

| Image Type | ResNet (YOLOv5 + DeepLabv3+) | ResNet (YOLOv8 + UPerNet) | Ours (YOLOv8 + UPerNet) |
|---|---|---|---|
| Normal (11 images) | 0.021 m | 0.013 m | 0.007 m |
| Water with reflection (57 images) | 0.023 m | 0.014 m | 0.003 m |
| Submerged characters (16 images) | 0.051 m | 0.031 m | 0.005 m |
| Rusted/erosive characters (21 images) | 0.034 m | 0.018 m | 0.002 m |
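The per-category figures in the "Ours" column above can be checked against the stated 0.01 m bound with a short calculation. The sketch below assumes each cell is a per-category mean reading error in metres, which the surviving table does not state explicitly. The image-count-weighted average is an illustrative aggregate computed here, not a figure reported by the authors, and `ROWS` and `weighted_mean_error` are hypothetical names.

```python
# Rows copied from the table above: (category, image count, "Ours" error in metres).
ROWS = [
    ("Normal", 11, 0.007),
    ("Water with reflection", 57, 0.003),
    ("Submerged characters", 16, 0.005),
    ("Rusted/erosive characters", 21, 0.002),
]


def weighted_mean_error(rows):
    """Average the per-category errors, weighting each by its number of images."""
    total_images = sum(count for _name, count, _err in rows)
    return sum(count * err for _name, count, err in rows) / total_images


overall = weighted_mean_error(ROWS)
worst = max(err for _name, _count, err in ROWS)
print(f"weighted mean error: {overall:.4f} m, worst category: {worst:.3f} m")
```

Under that assumption, both the weighted average and the worst single category stay below 0.01 m.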

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, B.; Li, J.; Tang, H.; Liu, X. Smart Ship Draft Reading by Dual-Flow Deep Learning Architecture and Multispectral Information. Sensors 2024, 24, 5580. https://doi.org/10.3390/s24175580
