An Audiovisual Correlation Matching Method Based on Fine-Grained Emotion and Feature Fusion

Abstract

:1. Introduction

- A video and music dataset with fine-grained annotations of emotional arousal levels was constructed, with corresponding affective space for semantic analysis.

- An extraction method for audiovisual affective features in film and television scenes was proposed. By distinguishing between samples with high matching degrees and those with low matching degrees, optimal correlation features were obtained based on the CCA method.

- An audiovisual emotion matching model is proposed, which integrates the association of cross-modal features and similarity judgment of fine-grained emotions, enhancing the rationality of prediction results.

2. Related Works

2.1. Audiovisual Affective Feature Extraction

2.2. Audiovisual Association Matching

3. Proposed Methodology

3.1. Music Video Affective Dataset

3.1.1. Dataset Establishment

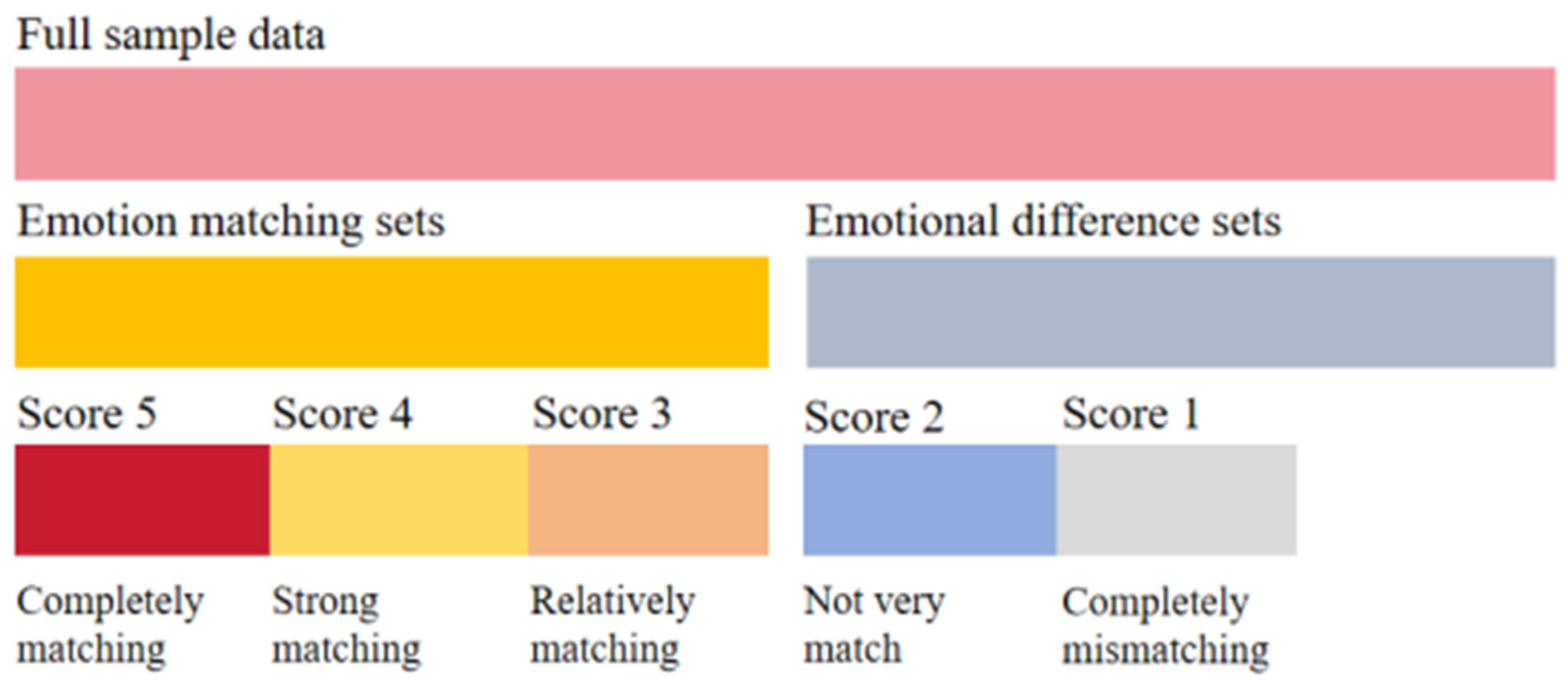

3.1.2. Affective Data Annotation

- Multidimensional affective annotation of video materials only: Participants are required to watch silent video clips in random order and use the affective evaluation system composed of multidimensional evaluation words to quantitatively describe the different dimensions of emotions in the video.

- Multidimensional affective annotation of music materials only: Participants are required to listen to music material in random order and quantitatively describe the different dimensions of emotions in the music using the affective evaluation system composed of multidimensional evaluation words.

- Multidimensional affective annotation of music–video material combinations: Participants were required to watch video clips with music in random order, and the affective evaluation system composed of multidimensional evaluation words was used to quantify the different dimensions of the combined emotion.

- Annotate the affective matching degree of music–video material combinations: Participants are required to watch video materials with music in random order and describe the degree of audiovisual affective matching for each combination of materials.

3.2. Feature Extraction

3.2.1. Music Stream

3.2.2. Video Stream

3.2.3. Missing Value Processing Method

3.3. The Audiovisual Matching Architecture

4. Experiments and Results

4.1. Subsets Analysis

4.2. Parameter Determination

4.3. Comparison with Other Methods

4.4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pang, N.; Guo, S.; Yan, M.; Chan, C.A. A Short Video Classification Framework Based on Cross-Modal Fusion. Sensors 2023, 23, 8425. [Google Scholar] [CrossRef]

- Tao, R.; Zhu, M.; Cao, H.; Ren, H. Fine-Grained Cross-Modal Semantic Consistency in Natural Conservation Image Data from a Multi-Task Perspective. Sensors 2024, 24, 3130. [Google Scholar] [CrossRef]

- Chatterjee, S.; Mishra, J.; Sundram, F.; Roop, P. Towards Personalised Mood Prediction and Explanation for Depression from Biophysical Data. Sensors 2024, 24, 164. [Google Scholar] [CrossRef] [PubMed]

- Hehenkamp, N.; Rizzi, F.G.; Grundhöfer, L.; Gewies, S. Prediction of Ground Wave Propagation Delay for MF R-Mode. Sensors 2024, 24, 282. [Google Scholar] [CrossRef] [PubMed]

- Leung, R. Using AI–ML to Augment the Capabilities of Social Media for Telehealth and Remote Patient Monitoring. Healthcare 2023, 11, 1704. [Google Scholar] [CrossRef]

- Yan, M.; Lou, X.; Chan, C.A.; Wang, Y.; Jiang, W. A Semantic and Emotion-based Dual Latent Variable Generation Model for a Dialogue System. CAAI Trans. Intell. Technol. 2023, 8, 319–330. [Google Scholar] [CrossRef]

- Sarkar, R.; Choudhury, S.; Dutta, S.; Roy, A.; Saha, S.K. Recognition of emotion in music based on deep convolutional neural network. Multimed. Tools Appl. 2020, 79, 765–783. [Google Scholar] [CrossRef]

- Mittal, T.; Guhan, P.; Bhattacharya, U.; Chandra, B.; Bera, A.; Manocha, D. Emoticon: Context-aware multimodal emotion recognition using frege’s principle. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14234–14243. [Google Scholar] [CrossRef]

- Masci, J.; Bronstein, M.M.; Bronstein, A.M.; Schmidhuber, J. Multimodal Similarity-Preserving Hashing. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 824–830. [Google Scholar] [CrossRef] [PubMed]

- Li, T.; Sun, Z.; Zhang, H.; Sun, Z.; Li, J.; Wu, Z. Deep music retrieval for fine-grained videos by exploiting cross-modal-encoded voice-overs. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), New York, NY, USA, 11–15 July 2021; pp. 1880–1884. [Google Scholar] [CrossRef]

- Liao, Z.; Yu, Y.; Gong, B.; Cheng, L. Audeosynth: Music-driven video montage. ACM Trans. Graph. (TOG) 2015, 34, 1–10. [Google Scholar] [CrossRef]

- Nakatsuka, T.; Hamasaki, M.; Goto, M. Content-Based Music-Image Retrieval Using Self-and Cross-Modal Feature Embedding Memory. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 2174–2184. [Google Scholar] [CrossRef]

- Picard, R.W. Affective Computing; Technical Report; MIT Press: Cambridge, MA, USA, 2000. [Google Scholar]

- Chen, C.H.; Weng, M.F.; Jeng, S.K.; Ghuang, Y.Y. Emotion-based Music Visualization using Photos. In Proceedings of the Advances in Multimedia Modeling: 14th International Multimedia Modeling Conference (MMM), Kyoto, Japan, 9–11 January 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 358–368. [Google Scholar] [CrossRef]

- Su, F. An automatic music classification method based on emotion. Inf. Technol. 2021, 10, 93–97. [Google Scholar] [CrossRef]

- Zhan, C.; She, D.; Zhao, S.; Cheng, M.; Yang, J. Zero-shot emotion recognition via affective structural embedding. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1151–1160. [Google Scholar] [CrossRef]

- Gómez-Cañón, J.S.; Cano, E.; Eerola, T.; Herrera, P.; Hu, X.; Yang, Y.H. Music Emotion Recognition: Toward new, robust standards in personalized and context-sensitive applications. IEEE Signal Process. Mag. 2021, 38, 106–114. [Google Scholar] [CrossRef]

- Yu, X. Adaptability of Simple Classifier and Active Learning in Music Emotion Recognition. In Proceedings of the 4th International Conference on Electronics, Communications and Control Engineering (ICECC), New York, NY, USA, 9–11 April 2021; pp. 13–19. [Google Scholar] [CrossRef]

- Zhang, J.; Wen, X.; Cho, A.; Whang, M. An Empathy Evaluation System Using Spectrogram Image Features of Audio. Sensors 2021, 21, 7111. [Google Scholar] [CrossRef]

- Al-Saadawi, H.F.T.; Das, R. TER-CA-WGNN: Trimodel Emotion Recognition Using Cumulative Attribute-Weighted Graph Neural Network. Appl. Sci. 2024, 14, 2252. [Google Scholar] [CrossRef]

- Wang, J.; Huang, R. Music Emotion Recognition based on Wide Deep Learning Networks. J. East China Univ. Sci. Technol. 2022, 48, 373–380. [Google Scholar] [CrossRef]

- Tang, H.; Chen, N. Combining CNN and Broad Learning for Music Classification. IEICE Trans. Inf. Syst. 2020, 103, 695–701. [Google Scholar] [CrossRef]

- Ning, H.; Nan, J. Classification of music emotion appreciation based on forward neural network multi-feature fusion algorithm. Microcomput. Appl. 2021, 37, 91–94. [Google Scholar]

- Hizlisoy, S.; Yildirim, S.; Tufekci, Z. Music emotion recognition using convolutional long short term memory deep neural networks. Eng. Sci. Technol. Int. J. 2021, 24, 760–767. [Google Scholar] [CrossRef]

- Zhong, Z.; Wang, H.; Su, B.; Liu, M.; Pei, D. Music emotion recognition fusion on CNN-BiLSTM and self-attention model. Comput. Eng. Appl. 2023, 59, 10. [Google Scholar]

- Wang, Y.; Wu, J.; Heracleous, P.; Wada, S.; Kimura, R.; Kurihara, S. Implicit knowledge injectable cross attention audiovisual model for group emotion recognition. In Proceedings of the 2020 International Conference on Multimodal Interaction (ICMI), Utrecht, The Netherlands, 25–29 October 2020; pp. 827–834. [Google Scholar] [CrossRef]

- Huang, R.; Chen, Z.; He, J.; Chu, X. Dynamic Heterogeneous User Generated Contents-Driven Relation Assessment via Graph Representation Learning. Sensors 2022, 22, 1402. [Google Scholar] [CrossRef]

- Ma, Y.; Xu, Y.; Liu, Y.; Yan, F.; Zhang, Q.; Li, Q.; Liu, Q. Multi-Scale Cross-Attention Fusion Network Based on Image Super-Resolution. Appl. Sci. 2024, 14, 2634. [Google Scholar] [CrossRef]

- Xu, H.; Jiang, C.; Liang, X.; Li, Z. Spatial-aware graph relation network for large-scale object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9298–9307. [Google Scholar] [CrossRef]

- Chaudhari, A.; Bhatt, C.; Krishna, A.; Mazzeo, P.L. ViTFER: Facial Emotion Recognition with Vision Transformers. Appl. Syst. Innov. 2022, 5, 80. [Google Scholar] [CrossRef]

- Roshdy, A.; Karar, A.; Kork, S.A.; Beyrouthy, T.; Nait-ali, A. Advancements in EEG Emotion Recognition: Leveraging Multi-Modal Database Integration. Appl. Sci. 2024, 14, 2487. [Google Scholar] [CrossRef]

- Tie, Y.; Cheng, H.; Jin, C.; Li, X.; Qi, L. Research on emotion recognition method based on audio and video feature fusion. J. Chongqing Univ. Technol. (Nat. Sci.) 2022, 36, 120–127. [Google Scholar]

- Liao, Y.; Lei, Q.; Zhang, W.; Zhou, M.; Wen, Y. Reasearch on image sentiment analysis based on muti-visual object fusion. Appl. Res. Comput. 2021, 38, 1250–1255. [Google Scholar]

- Lee, J.; Kim, S.; Kim, S.; Park, J.; Sohn, K. Context-aware emotion recognition networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10143–10152. [Google Scholar]

- Avramidis, K.; Stewart, S.; Narayanan, S. On the role of visual context in enriching music representations. In Proceedings of the ICASSP 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Sato, T.; Sugano, Y.; Sato, Y. Self-Supervised Learning for Audio-Visual Relationships of Videos with Stereo Sounds. IEEE Access 2022, 10, 94273–94284. [Google Scholar] [CrossRef]

- Surís, D.; Vondrick, C.; Russell, B.; Salamon, J. It’s time for artistic correspondence in music and video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10564–10574. [Google Scholar] [CrossRef]

- Cao, Y.; Long, M.; Wang, J.; Yang, Q.; Yu, P.S. Deep visual-semantic hashing for cross-modal retrieval. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1445–1454. [Google Scholar] [CrossRef]

- Shen, Y.; Liu, L.; Shao, L.; Song, J. Deep binaries: Encoding semantic-rich cues for efficient textual-visual cross retrieval. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4097–4106. [Google Scholar] [CrossRef]

- Liang, L.; Wang, G.; Zuo, W.; Feng, X.; Zhang, L. Cross-Domain Visual Matching via Generalized Similarity Measure and Feature Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1089–1102. [Google Scholar] [CrossRef]

- Nie, X.; Wang, B.; Li, J.; Hao, F.; Jian, M.; Yin, Y. Deep multiscale fusion hashing for cross-modal retrieval. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 401–410. [Google Scholar] [CrossRef]

- Rasiwasia, N.; Costa Pereira, J.; Coviello, E.; Doyle, G.; Lanckriet, G.R.; Levy, R.; Vasconcelos, N. A new approach to cross-modal multimedia retrieval. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 251–260. [Google Scholar] [CrossRef]

- Andrew, G.; Arora, R.; Bilmes, J.; Livescu, K. Deep canonical correlation analysis. In Proceedings of the International Conference on Machine Learning, PMLR, Atlanta, GA, USA, 17–19 June 2013; pp. 1247–1255. [Google Scholar]

- Shao, J.; Wang, L.; Zhao, Z.; Cai, A. Deep canonical correlation analysis with progressive and hypergraph learning for cross-modal retrieval. Neurocomputing 2016, 214, 618–628. [Google Scholar] [CrossRef]

- Zeng, D.; Yu, Y.; Oyama, K. Deep Triplet Neural Networks with Cluster-CCA for Audio-Visual Cross-modal Retrieval. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2020, 16, 1–23. [Google Scholar] [CrossRef]

- Vukotić, V.; Raymond, C.; Gravier, G. Bidirectional joint representation learning with symmetrical deep neural networks for multimodal and crossmodal applications. In Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval, New York, NY, USA, 6–9 June 2016; pp. 343–346. [Google Scholar] [CrossRef]

- Wei, W.; Ooi, B.C.; Yang, X.; Zhang, D.; Zhuang, Y. Effective multi-modal retrieval based on stacked auto-encoders. Proc. VLDB Endow. 2014, 7, 649–660. [Google Scholar] [CrossRef]

- He, Y.; Xiang, S.; Kang, C.; Wang, J.; Pan, C. Cross-Modal Retrieval via Deep and Bidirectional Representation Learning. IEEE Trans. Multimed. 2016, 18, 1363–1377. [Google Scholar] [CrossRef]

- Hua, Y.; Tian, H.; Cai, A.; Shi, P. Cross-modal correlation learning with deep convolutional architecture. In Proceedings of the 2015 Visual Communications and Image Processing (VCIP), Singapore, 13–16 December 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Zhang, J.; Peng, Y.; Yuan, M. Unsupervised generative adversarial cross-modal hashing. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar] [CrossRef]

- Nawaz, S.; Janjua, M.K.; Calefati, A.; Gallo, L. Revisiting cross modal retrieval. axXiv 2018, arXiv:1807.07364. [Google Scholar]

- Gu, J.; Cai, J.; Joty, S.R.; Niu, L.; Wang, G. Look, imagine and match: Improving textual-visual cross-modal retrieval with generative models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition(CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7181–7189. [Google Scholar] [CrossRef]

- Su, S.; Zhong, Z.; Zhang, C. Deep joint-semantics reconstructing hashing for large-scale unsupervised cross-modal retrieval. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3027–3035. [Google Scholar] [CrossRef]

- Li, C.; Deng, C.; Wang, L.; Xie, D.; Liu, X. Coupled cyclegan: Unsupervised hashing network for cross-modal retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 176–183. [Google Scholar] [CrossRef]

- Wang, P.; Wang, X.; Wang, Z.; Dong, Y. Learning Accurate Pseudo-Labels via Feature Similarity in the Presence of Label Noise. Appl. Sci. 2024, 14, 2759. [Google Scholar] [CrossRef]

- Wang, P.; Liu, S.; Chen, J. CCDA: A Novel Method to Explore the Cross-Correlation in Dual-Attention for Multimodal Sentiment Analysis. Appl. Sci. 2024, 14, 1934. [Google Scholar] [CrossRef]

- Zhao, X.; Li, X.; Tie, Y.; Hu, Z.; Qi, L. Video Background Music Recommendation Based on Multi-level Fusion Features. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Brisbane, Australia, 10–14 July 2023; pp. 405–410. [Google Scholar] [CrossRef]

- Zeng, D.; Wu, J.; Hattori, G.; Xu, R.; Yu, Y. Learning explicit and implicit dual common subspaces for audio-visual cross-modal retrieval. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–23. [Google Scholar] [CrossRef]

- Rasiwasia, N.; Mahajan, D.; Mahadevan, V.; Aggarwal, G. Cluster Canonical Correlation Analysis. In Proceedings of the Seventeenth International Conference on Artificial Intelligence and Statistics, PMLR, Reykjavik, Iceland, 22–25 April 2014; pp. 823–831. [Google Scholar]

- Akaho, S. A kernel method for canonical correlation analysis. Neural Netw. 2006, 18, 911–917. [Google Scholar]

- Pandeya, Y.R.; Lee, J. Deep learning-based late fusion of multimodal information for emotion classification of music video. Multimed. Tools Appl. 2021, 80, 2887–2905. [Google Scholar] [CrossRef]

- Chua, P.; Makris, D.; Herremans, D.; Roig, G.; Agres, K. Predicting emotion from music videos: Exploring the relative contribution of visual and auditory information to affective responses. arXiv 2022, arXiv:2202.10453. [Google Scholar] [CrossRef]

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting Large, Richly Annotated Facial-Expression Databases from Movies. IEEE MultiMedia 2012, 19, 34–41. [Google Scholar] [CrossRef]

- Baveye, Y.; Dellandrea, E.; Chamaret, C.; Chen, L. LIRIS-ACCEDE: A video database for affective content analysis. IEEE Trans. Affect. Comput. 2015, 6, 43–55. [Google Scholar] [CrossRef]

- Schindler, A.; Rauber, A. Harnessing music-related visual stereotypes for music information retrieval. ACM Trans. Intell. Syst. Technol. (TIST) 2016, 8, 1–21. [Google Scholar] [CrossRef]

- Su, Z.; Qian, Y.; Yu, G.; Ren, H. Research on emotion space of film and television scene images based on subjective perception. J. China Univ. Posts Telecommun. 2019, 26, 75. [Google Scholar] [CrossRef]

- Su, Z.; Liu, B.; Zhou, X.; Ren, H. Multidimensional sentiment recognition of film and television scene images. J. Electron. Imaging 2021, 30, 063014. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497. [Google Scholar]

- Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 6824–6835. [Google Scholar]

- Bertasius, G.; Wang, H.; Torresani, L. Is space-time attention all you need for video understanding? ICML 2021, 2, 4. [Google Scholar]

- Su, Z.; Peng, J.; Ren, H.; Zhang, Y. Fine-grained Sentiment Semantic Analysis and Matching of Music and Image. In Proceedings of the 2022 IEEE 6th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Beijing, China, 3–5 October 2022; pp. 1593–1597. [Google Scholar] [CrossRef]

- Maćkiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342. [Google Scholar] [CrossRef]

- Oseledets, I.V.; Tyrtyshnikov, E.E. Breaking the curse of dimensionality, or how to use SVD in many dimensions. SIAM J. Sci. Comput. 2009, 31, 3744–3759. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T. Lightgbm: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 52. [Google Scholar]

- Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- Zhao, Y. Research on Stock Price Prediction Model Based on CCA-GA-BPNN Comprehensive Technology. Master’s Thesis, South China University of Technology, Guangzhou, China, 2020. Available online: https://d.wanfangdata.com.cn/thesis/D02084355 (accessed on December 2020).

- Xing, B.; Xie, H.; Zhu, B.; Huang, Q.; Chen, R.; Tian, H. Research on the cross-media synesthesia matching of Chinese poetry and folk music based on emotional characteristics. J. Fudan Univ. 2020, 59, 558–564. [Google Scholar]

| Dataset | From Movie and Television | Open Source | Size | Emotional Labels | Divide between Matched and Unmatched Pairs |

|---|---|---|---|---|---|

| AFEW [63] | √ | √ | 1426 clips from 0.3 to 5.4 s long | 7 categories | — |

| Music Video Emotion Dataset [61] | — | √ | 1699 clips from 25 to 35 s long | 6 categories | — |

| Muvi [62] | — | √ | 81 one-minute videos | V-A; GEMS28 | — |

| LIRIS-ACCEDE [64] | √ | — | 9800 excerpts from 8 to 12 s long | PAD | — |

| MVD [65] | — | √ | 1600 ids from YouTube videos | — | — |

| Our Dataset | √ | — | 980 clips from 5 to 15 s long | Fine-grained emotion | √ |

| Sentiment | Sentiment |

|---|---|

| Sentimental | Warm |

| Depressed | Sunny |

| Disappoint | Cozy |

| Anxious | Happy |

| Oppressive | Hopeful |

| Lonely | Relaxed |

| Romantic | Dreamy |

| Magnificent | Fresh |

| Comfortable |

| Score | Name of Experiment | |

|---|---|---|

| Affective Labeling | Matching Degree Labeling | |

| 1 | No at all | Completely mismatching |

| 2 | Not too strong | Not very match |

| 3 | General | Relatively matching |

| 4 | Strong | Strong matching |

| 5 | Very strong | Completely matching |

| Model | MSE |

|---|---|

| C3D [70] | 0.0409 |

| MVIT [71] | 0.0506 |

| TimeSformer [72] | 0.0347 |

| InceptionV3 [69] | 0.0766 |

| Vgg16 [68] | 0.0252 |

| Sentiment | Music Video Composition Components (Factors) | |||

|---|---|---|---|---|

| F1 | F2 | F3 | F4 | |

| Sunny | −0.704 | |||

| Hopeful | −0.668 | |||

| Relaxed | −0.618 | |||

| Happy | −0.773 | |||

| Disappoint | 0.905 | |||

| Depressed | 0.867 | |||

| Sentimental | 0.946 | |||

| Anxious | 0.820 | |||

| Lonely | 0.954 | |||

| Oppressive | 0.660 | |||

| Comfortable | 0.706 | |||

| Warm | 0.592 | |||

| Fresh | 0.660 | |||

| Cozy | 0.708 | |||

| Romantic | 0.797 | |||

| Dreamy | 0.914 | |||

| Magnificent | 0.960 | |||

| Model | Feature Fusion | MSE | |||

|---|---|---|---|---|---|

| Original Dimension | PCA [74] | SVD [75] | RF [76] | ||

| XGBoost [78] | \ | 0.0463 | 0.0473 | 0.0479 | 0.0532 |

| CCA [42] | 0.0472 | 0.0448 | 0.0464 | 0.0532 | |

| KCCA [60] | 0.0331 | 0.0390 | 0.0398 | 0.0533 | |

| DCCA [43] | 0.0376 | 0.0374 | 0.0390 | 0.0532 | |

| LightGBM [77] | \ | 0.0443 | 0.0472 | 0.0468 | 0.0533 |

| CCA [42] | 0.0458 | 0.0474 | 0.0465 | 0.0533 | |

| KCCA [60] | 0.0342 | 0.0410 | 0.0406 | 0.0532 | |

| DCCA [43] | 0.0371 | 0.0363 | 0.0390 | 0.0533 | |

| Sub-Model | MSE |

|---|---|

| Affective matching model | 0.0378 |

| Affective difference model | 0.0365 |

| Metrics | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | |

| N | 444 | 452 | 459 | 447 | 440 | 466 | 478 | 472 | 470 | 463 | 463 |

| Accuracy (%) | 50.5 | 51.4 | 52.2 | 50.8 | 50.0 | 53.0 | 54.3 | 53.6 | 53.4 | 52.6 | 52.6 |

| Metrics | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | |

| N | 376 | 485 | 460 | 447 | 440 | 452 | 494 | 515 | 463 | 447 | 424 |

| Accuracy (%) | 42.8 | 55.1 | 52.3 | 50.8 | 50.0 | 51.4 | 56.1 | 58.5 | 52.6 | 50.8 | 48.2 |

| Methods | MSE |

|---|---|

| CCA-GA-BPNN [79] | 0.0431 |

| RF [80] | 0.0523 |

| CCA feature fusion [42] | 0.0304 |

| Modified CCDA [56] | 0.0349 |

| Modified TNN-C-CCA [45] | 0.0388 |

| Affective similarity and CCA feature fusion (ours) | 0.0299 |

| Video Clips | Music Clips | Affective Matching Values | |

|---|---|---|---|

| Subjective Value | Predicted Value | ||

| Little Astronaut Henry Lai | 3.42 | 3.33 |

| The First Words Song Yu Vin | 3.13 | 3.00 |

| Let the World be Filled with Love Various Artists | 4.24 | 4.42 |

| Water Of Life Silver Screen | 4.07 | 4.18 |

| Because I Only See You Kim Nayoung | 3.90 | 3.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, Z.; Feng, Y.; Liu, J.; Peng, J.; Jiang, W.; Liu, J. An Audiovisual Correlation Matching Method Based on Fine-Grained Emotion and Feature Fusion. Sensors 2024, 24, 5681. https://doi.org/10.3390/s24175681

Su Z, Feng Y, Liu J, Peng J, Jiang W, Liu J. An Audiovisual Correlation Matching Method Based on Fine-Grained Emotion and Feature Fusion. Sensors. 2024; 24(17):5681. https://doi.org/10.3390/s24175681

Chicago/Turabian StyleSu, Zhibin, Yiming Feng, Jinyu Liu, Jing Peng, Wei Jiang, and Jingyu Liu. 2024. "An Audiovisual Correlation Matching Method Based on Fine-Grained Emotion and Feature Fusion" Sensors 24, no. 17: 5681. https://doi.org/10.3390/s24175681

APA StyleSu, Z., Feng, Y., Liu, J., Peng, J., Jiang, W., & Liu, J. (2024). An Audiovisual Correlation Matching Method Based on Fine-Grained Emotion and Feature Fusion. Sensors, 24(17), 5681. https://doi.org/10.3390/s24175681