1. Introduction

Indoor localization has attracted increasing attention for location awareness inside buildings, where the Global Navigation Satellite System (GNSS) does not work. Many different approaches have been developed, such as Pedestrian Dead Reckoning (PDR) [1], hardware-based localization such as Angle of Arrival (AoA) [2] and Time Difference of Arrival (TDoA) [3], and distance estimation [4]. Additionally, Wi-Fi Received Signal Strength Indicator (RSSI) fingerprint localization has become popular because it can utilize complementary Wi-Fi RSSIs obtained from the large number of Access Points (APs) already installed in buildings [5].

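A Wi-Fi fingerprint is defined as a labeled data point that pairs a set of RSSIs with its measurement location. To estimate a location from a given set of RSSIs, a machine learning approach aims to find a mapping $f: \mathbf{x} \rightarrow \mathbf{y}$, where $\mathbf{x}$ is a set of RSSIs and $\mathbf{y}$ is the corresponding location.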

A major issue with Wi-Fi RSSI fingerprint localization is that data collection is costly. It requires manual collection across all positioning areas and an annotation process that labels RSSI sets with their locations. To reduce this effort, a Generative Adversarial Network (GAN) may be a promising solution. The purpose of a GAN is to produce artificial data samples similar to real ones [6]. A typical GAN has two independent deep neural networks, i.e., a generator and a discriminator. They are trained adversarially through a min–max game: the generator learns to fool the discriminator by making realistic fake data, whereas the discriminator learns to distinguish fake data from real data.

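In a scenario where GANs are applied to fingerprint indoor localization, a generator might be modeled to produce artificial RSSI data, such that $G: \mathbf{z} \rightarrow \tilde{\mathbf{x}}$, where $\mathbf{z}$ is a random noise vector and $\tilde{\mathbf{x}}$ is a synthetic RSSI set.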

The GAN is expected to improve localization accuracy by supplying a large amount of training data. However, most existing GAN-based fingerprint methods [7,8,9,10,11] can only produce unlabeled RSSI samples. Because of this restriction, these methods have been reported only with a few visible Wi-Fi APs whose locations are known in advance. This differs from a general fingerprint localization environment, in which a large number of APs with unknown locations are used.

The main contribution of this paper is a Wi-Fi Semi-Supervised GAN (SSGAN) for fingerprint localization, which produces synthetic labeled RSSI data, such that $G: (\mathbf{z}, \mathbf{y}) \rightarrow (\tilde{\mathbf{x}}, \mathbf{y})$, where the generated RSSI set $\tilde{\mathbf{x}}$ is paired with the queried location label $\mathbf{y}$.

One of the main differences from a normal GAN is the input configuration. Given a specific query location as input, the SSGAN generates a corresponding labeled RSSI fingerprint, whereas a normal GAN produces only unlabeled RSSI values unrelated to any location. Moreover, the proposed Wi-Fi SSGAN includes an additional classification network that can be used as a positioning model, so no extra positioning method is needed. Because fingerprint localization mainly relies on labeled data, the produced labeled data can help improve the learning performance of the positioning network. While a GAN is an unsupervised learning method, an SSGAN can be seen as a type of semi-supervised learning whose main purpose is to learn a classifier, as illustrated in Figure 1.

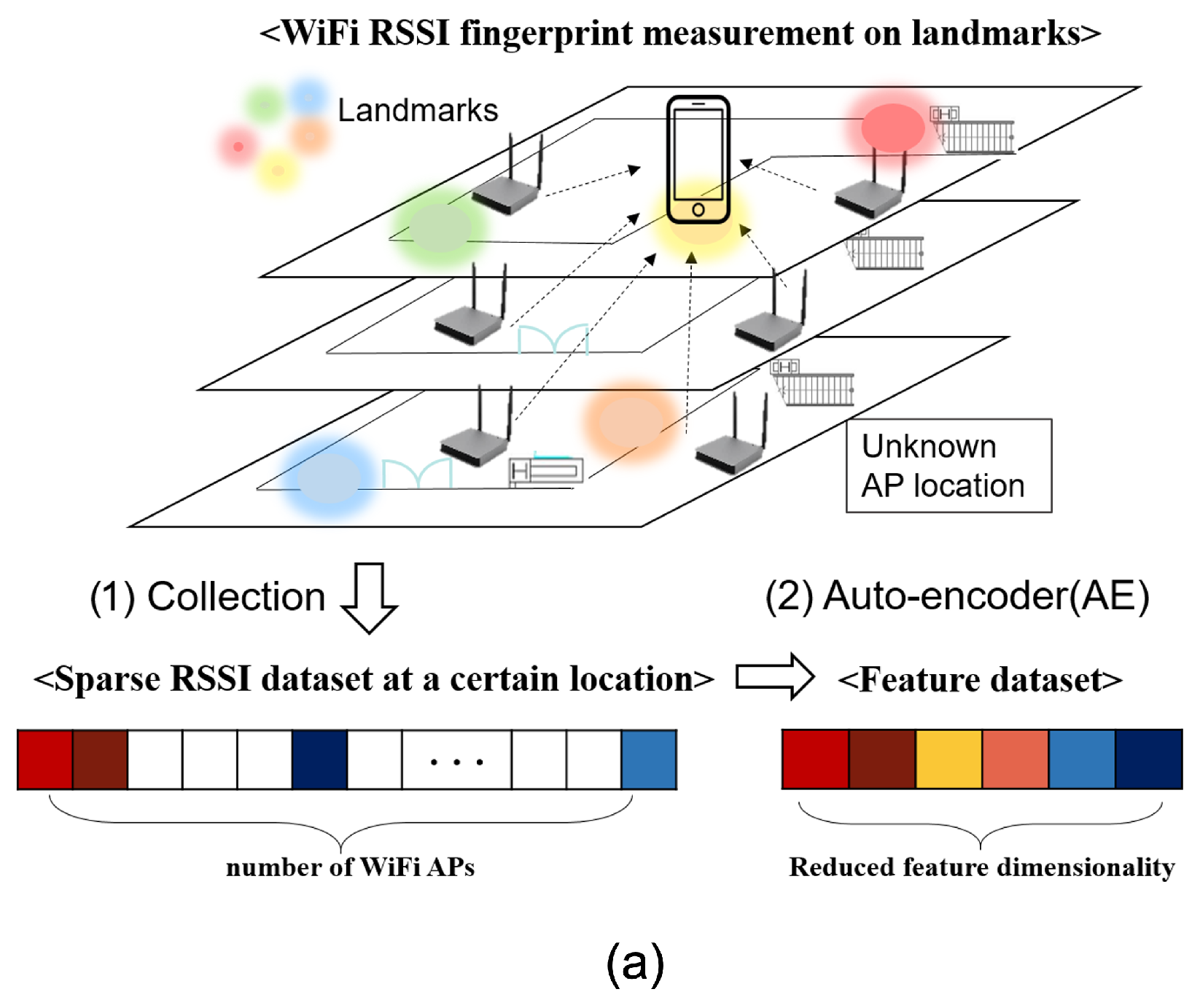

Because raw RSSI fingerprints are sparse due to the AP range limit, feature extraction from RSSIs [

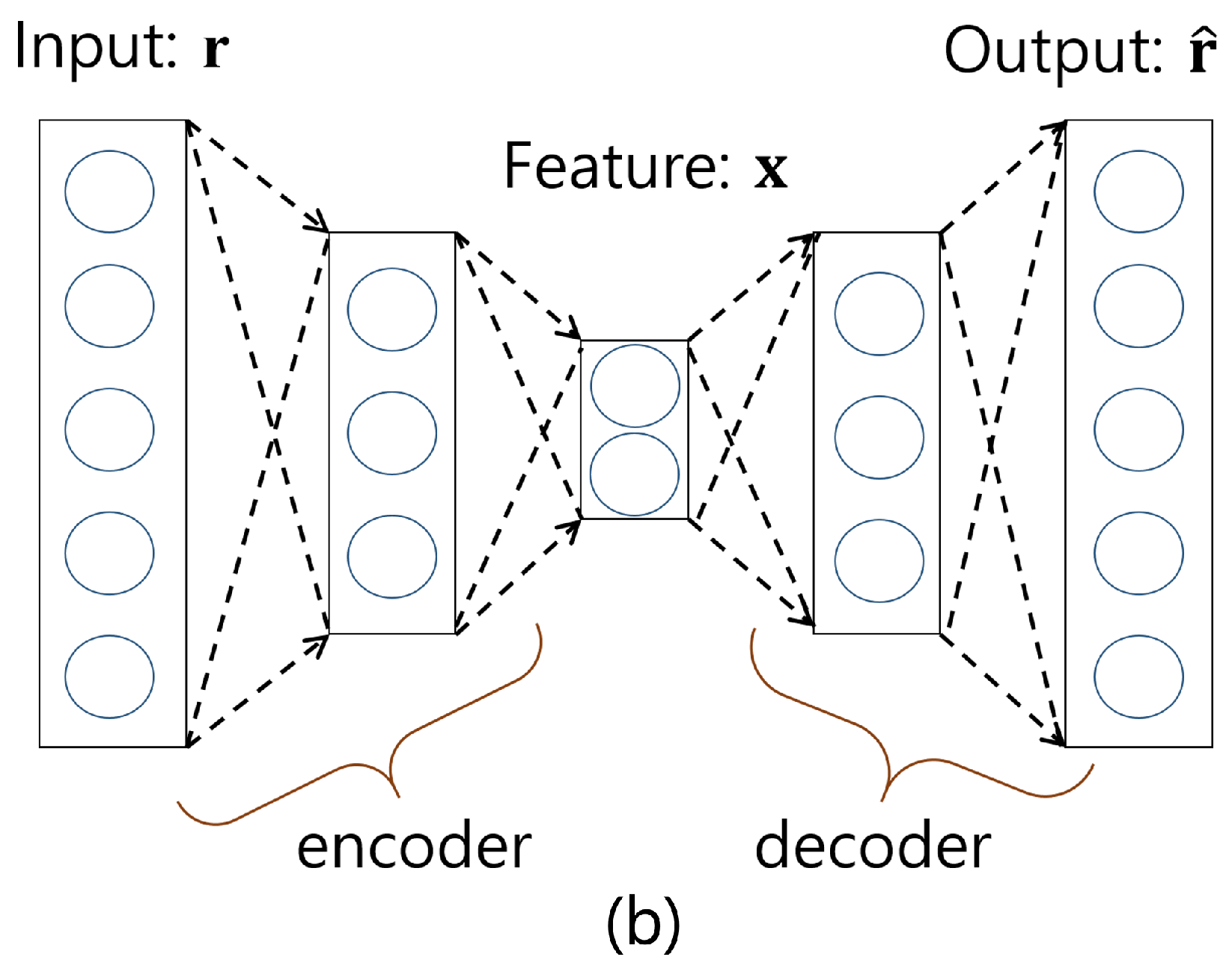

12] is mandatory for fingerprint localization. This paper applies an Auto-Encoder (AE) to convert them to trainable data, where an AE is an unsupervised deep representational neural network to recover original input data [

13]. A learned AE model extracts feature values of neural nodes from the middle layer. The resultant feature set has far lower dimensionality than the original data set. As a result, the high dimensional and sparse raw RSSI measurements are transformed to feature sets by the AE, and then the feature sets are used as input data for the Wi-Fi SSGAN to learn an indoor localization model.

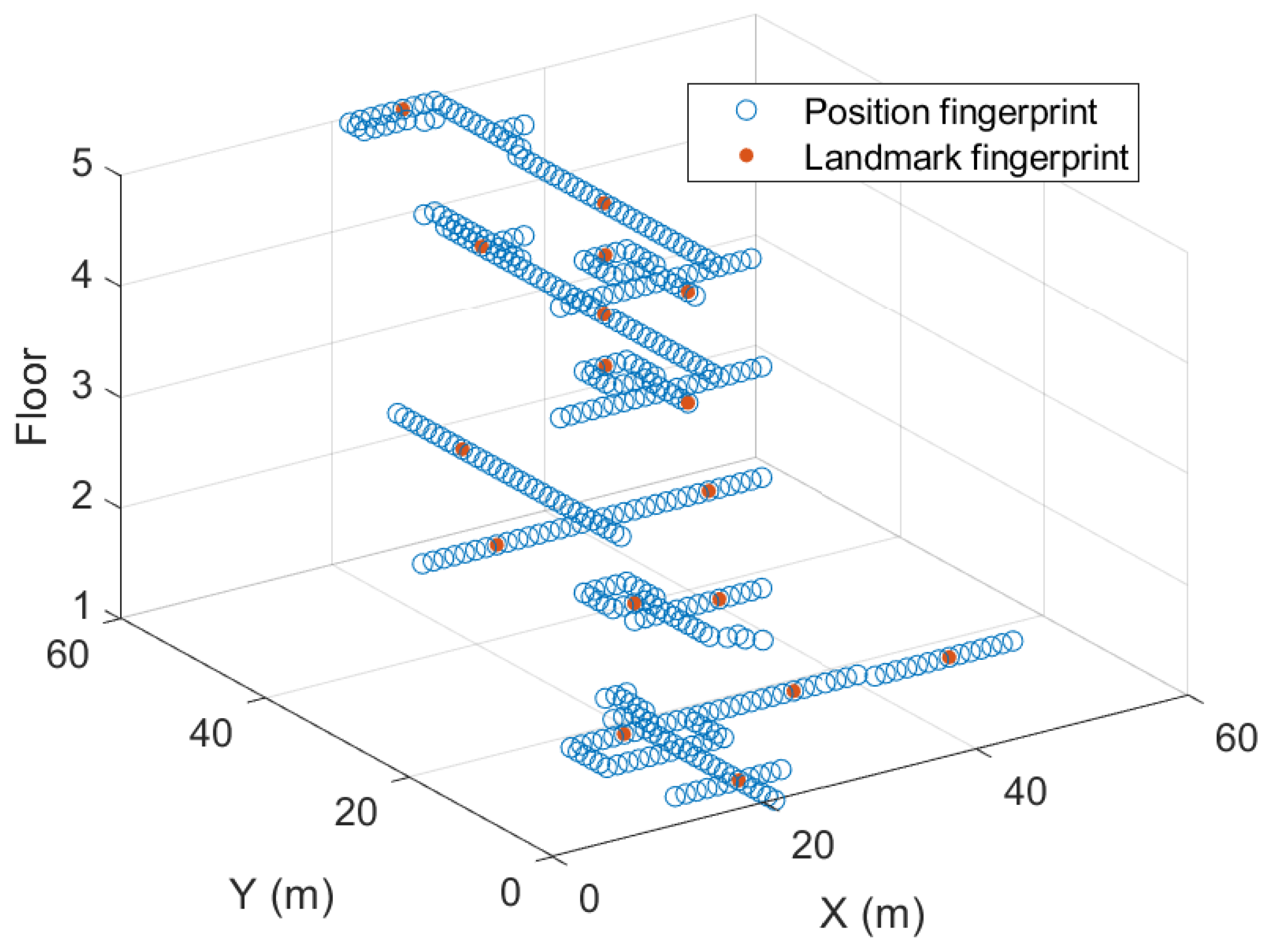

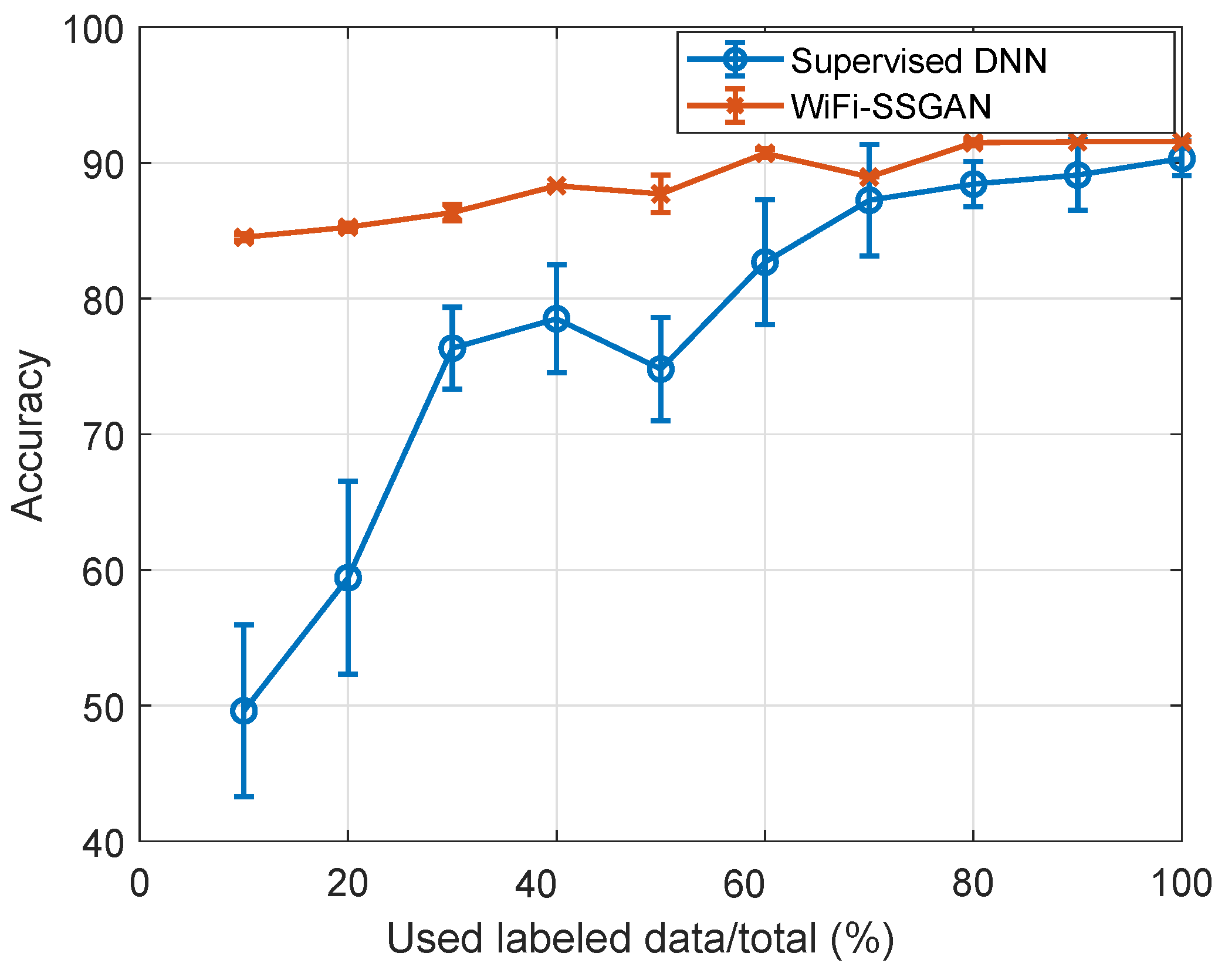

For the experimental study, we collected Wi-Fi fingerprints along the corridors of a five-story office building, in which 508 different APs were scanned across the five floors. To evaluate the proposed Wi-Fi SSGAN algorithm, we formulate landmark localization as a multi-class classification problem. In the experiments, Wi-Fi SSGAN achieved 35% better accuracy than a supervised deep neural network when a small amount of training data was used.

The rest of this paper is organized as follows. Section 2 overviews related work. Section 3 describes the Wi-Fi fingerprint data preprocessing. Section 4 introduces the Wi-Fi SSGAN algorithm. Sections 5 and 6 report the experimental results and the conclusions, respectively.

3. Wi-Fi Fingerprint Preprocessing

This section overviews Wi-Fi fingerprint data for landmark localization in Section 3.1 and feature extraction by the AE in Section 3.2. The feature data will be used as the input of the main learning algorithm described in Section 4.

3.1. Wi-Fi RSSI Fingerprint for Landmark Localization

For landmark localization, the Wi-Fi RSSI fingerprint data are collected by placing a receiver, e.g., a smartphone, at different landmark locations. The receiver measures RSSIs from nearby APs that broadcast their signals periodically. Each Wi-Fi signal carries the Media Access Control (MAC) address that uniquely identifies its AP transmitter, which enables the receiver to recognize which AP sent the signal. A general fingerprint localization method utilizes the RSSIs of all APs scanned across the positioning area.

Suppose that $N$ Wi-Fi RSSI fingerprints at $N$ locations are obtained from a total of $d$ APs, given by

$$D = \{(\mathbf{x}_i, \mathbf{y}_i)\}_{i=1}^{N}, \tag{1}$$

where $\mathbf{x}_i$ is an RSSI fingerprint set and $\mathbf{y}_i$ is a landmark index. The landmark label index $\mathbf{y}_i$ is defined as an $l$-by-1 one-hot vector, where $l$ is the number of landmarks predesignated by a developer. The RSSI set at the $i$-th landmark is given by

$$\mathbf{x}_i = \left[x_{i,1}, x_{i,2}, \ldots, x_{i,d}\right]^{\top}, \tag{2}$$

where $x_{i,j}$ is an RSSI measurement obtained from the $j$-th AP. The dimensionality of an RSSI set $\mathbf{x}_i$ equals the number of APs, $d$.

Given the training data $D$, the objective of machine learning in the training phase is to build a classifier $f: \mathbf{x} \rightarrow \mathbf{y}$, which represents the relationship between a set of Wi-Fi RSSI measurements and a landmark. In the test phase, when a query RSSI set is given, the positioning model infers the landmark at which the receiver is located.
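As a minimal sketch of (1) and (2), the snippet below turns one scan, assumed here to be a dictionary from MAC address to RSSI in dBm, into a $d$-dimensional RSSI set ordered by a fixed AP list, and builds the one-hot landmark label. The scan format, the MAC strings, and the helper names are hypothetical.

```python
from typing import Dict, List, Optional


def build_rssi_set(scan: Dict[str, float], ap_macs: List[str]) -> List[Optional[float]]:
    """Turn one scan {MAC: RSSI in dBm} into a d-dimensional RSSI set x_i.

    Entry j is the RSSI of the j-th AP in ap_macs, or None when that AP
    was not heard at this location (an empty element).
    """
    return [scan.get(mac) for mac in ap_macs]


def one_hot(landmark: int, num_landmarks: int) -> List[int]:
    """Return the l-by-1 one-hot label y_i for a landmark index in [0, l)."""
    if not 0 <= landmark < num_landmarks:
        raise ValueError("landmark index out of range")
    y = [0] * num_landmarks
    y[landmark] = 1
    return y


# Hypothetical example: d = 4 APs, l = 3 landmarks.
ap_macs = ["aa:01", "aa:02", "aa:03", "aa:04"]
scan = {"aa:01": -48.0, "aa:03": -71.0}  # only two APs heard here
x_i = build_rssi_set(scan, ap_macs)      # [-48.0, None, -71.0, None]
y_i = one_hot(2, 3)                      # [0, 0, 1]
D = [(x_i, y_i)]                         # Eq. (1) as a list of (x_i, y_i) pairs
```

Fixing the AP order once (here by the `ap_macs` list) is what makes index $j$ refer to the same AP in every fingerprint.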

3.2. Feature Extraction by Auto-Encoder (AE)

The most notable property of raw RSSI fingerprint data is sparsity, as shown in Figure 2a. Because the Wi-Fi signal propagation range is limited, a raw RSSI set contains many empty elements, so that $x_{i,j}$ in (2) is empty for many $j$. Typically, these empty components are filled with a minimum possible value, such as −100 dBm. Because this degrades learning performance, feature extraction for the sparse RSSI data is mandatory. The objective of feature extraction is to obtain a model $H$ that converts a raw $d$-dimensional Wi-Fi RSSI fingerprint set $\mathbf{x}_i$ into an $s$-dimensional feature set $\mathbf{h}_i = H(\mathbf{x}_i)$ ($s \ll d$), where $s$ is the dimensionality of the feature data.
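The fill step and the degree of sparsity can be illustrated as follows. This is a small sketch; the eight-AP vector is made up for illustration, and `None` is assumed to mark an AP that was not heard.

```python
from typing import List, Optional

MIN_RSSI_DBM = -100.0  # fill value for empty elements, as in the text


def fill_missing(x: List[Optional[float]], fill: float = MIN_RSSI_DBM) -> List[float]:
    """Replace empty entries (None) of a raw RSSI set with a minimum RSSI."""
    return [fill if v is None else v for v in x]


def sparsity(x: List[Optional[float]]) -> float:
    """Fraction of APs in the RSSI set that were not heard."""
    return sum(v is None for v in x) / len(x)


x_raw = [-48.0, None, -71.0, None, None, None, None, None]  # hypothetical, d = 8
print(sparsity(x_raw))      # 0.75
print(fill_missing(x_raw))  # [-48.0, -100.0, -71.0, -100.0, -100.0, -100.0, -100.0, -100.0]
```

After filling, most coordinates carry the same constant, which is the kind of high-dimensional, low-information input the AE is meant to compress.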

AE is an unsupervised deep representational neural network. It learns a meaningful feature space of the input data in a layer-wise manner by training a neural network to replicate the original input at its output. The layers from the input layer to the feature layer form the encoder, and the remaining layers, which restore the input, form the decoder, as described in Figure 2b.

Given the Wi-Fi RSSI data set $D$ from (1), the encoder converts raw data $\mathbf{x}_i$ to low-dimensional feature data $\mathbf{h}_i$. The decoder maps the feature $\mathbf{h}_i$ back to the original data space by producing a reconstruction $\hat{\mathbf{x}}_i$ of $\mathbf{x}_i$. A more detailed mathematical explanation of the AE can be found in [13].

After the AE model is learned, the encoder part is used as the feature extraction method to obtain the following feature database:

$$D_h = \{(\mathbf{h}_i, \mathbf{y}_i)\}_{i=1}^{N}. \tag{3}$$

The newly built database (3) replaces the original dataset (1) for learning the proposed Wi-Fi SSGAN model in the next section.
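The paper's AE is a deep network whose details are given in [13]. As a deliberately simplified stand-in, the NumPy sketch below trains a single-hidden-layer linear auto-encoder by plain gradient descent on the mean squared reconstruction error, then keeps only the encoder as $H$. The linear architecture, learning rate, number of epochs, and the scaling of RSSIs from [−100, 0] dBm to [0, 1] are all assumptions for illustration.

```python
import numpy as np


def train_linear_autoencoder(X, s, lr=0.1, epochs=500, seed=0):
    """Train a linear AE, x -> h = x W_e -> x_hat = h W_d, by gradient descent.

    X: (N, d) array of preprocessed RSSI sets; s: feature dimensionality.
    Minimizes the mean squared reconstruction error and returns (W_e, W_d).
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_e = rng.normal(scale=0.1, size=(d, s))  # encoder weights
    W_d = rng.normal(scale=0.1, size=(s, d))  # decoder weights
    for _ in range(epochs):
        H = X @ W_e                  # features, (N, s)
        err = H @ W_d - X            # reconstruction error, (N, d)
        g = 2.0 * err / (n * d)      # gradient of the MSE w.r.t. x_hat
        grad_Wd = H.T @ g
        grad_We = X.T @ (g @ W_d.T)
        W_d -= lr * grad_Wd
        W_e -= lr * grad_We
    return W_e, W_d


def reconstruction_mse(X, W_e, W_d):
    """Mean squared error between X and its reconstruction."""
    return float(np.mean((X @ W_e @ W_d - X) ** 2))


def encode(X, W_e):
    """Feature extractor H: maps (N, d) RSSI sets to (N, s) features."""
    return X @ W_e


# Stand-in data: RSSIs in [-100, 0] dBm scaled to [0, 1] (an assumed scaling).
rng = np.random.default_rng(1)
X = (rng.uniform(-100.0, 0.0, size=(50, 20)) + 100.0) / 100.0
W_e, W_d = train_linear_autoencoder(X, s=4)
features = encode(X, W_e)  # rows play the role of the h_i in the feature database (3)
```

Only `W_e` is kept after training, mirroring how the encoder alone serves as the feature extractor; a deep, nonlinear AE would be trained with an autodiff framework instead of hand-written gradients.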

4. Wi-Fi SSGAN

The Wi-Fi SSGAN consists of multiple neural networks that are trained by complementary optimizations. Variables $\tilde{\mathbf{h}}$ and $\mathbf{h}$ denote fake and real feature data, respectively.

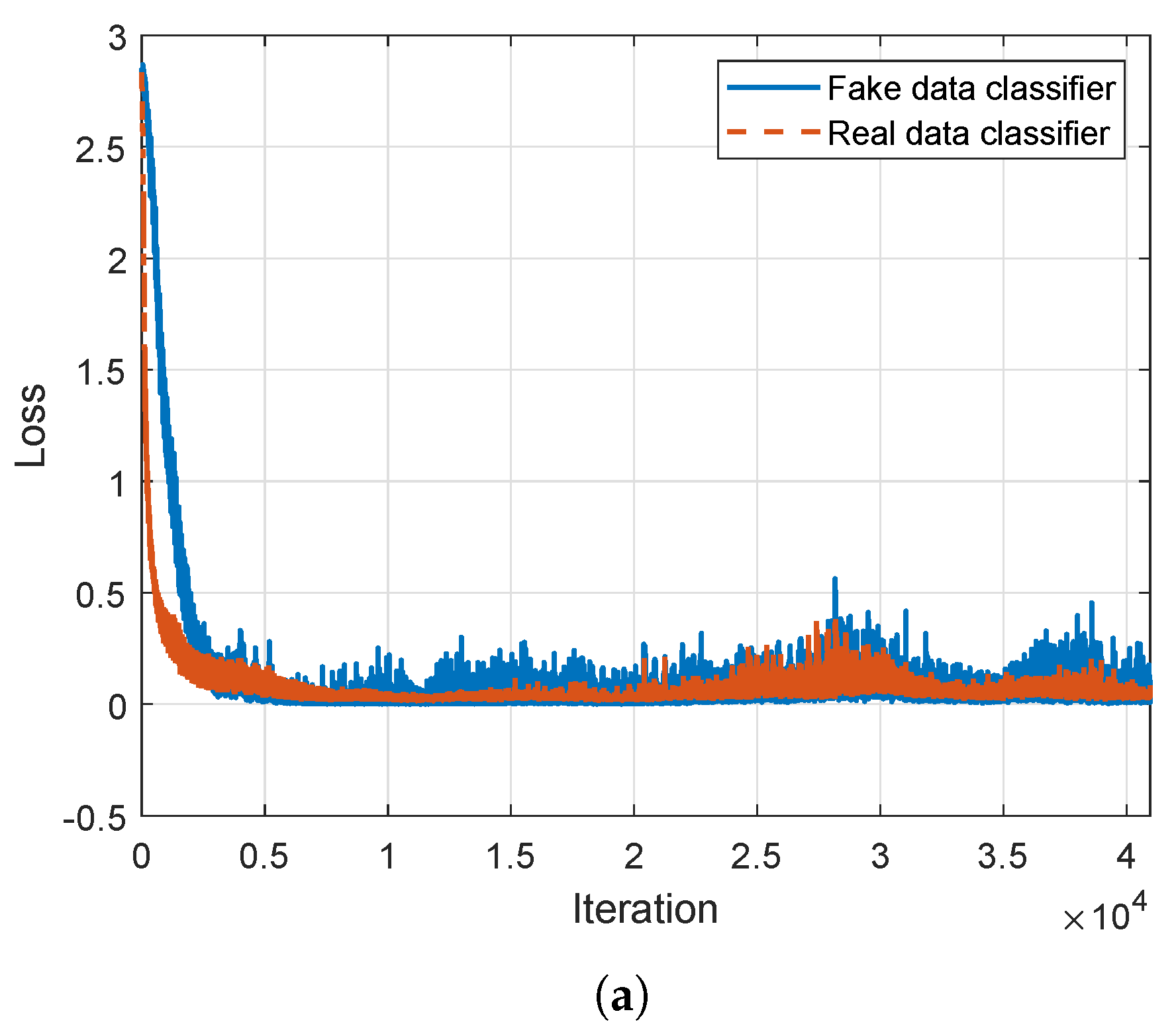

Figure 3 shows the network models of the Wi-Fi SSGAN, in which the generative model produces fake labeled RSSI feature data, and the combined discriminator and classifier model simultaneously predicts a location and distinguishes fake data.

4.1. Generator

The SSGAN generator produces fake samples for a specific location query by learning a network with parameter set $\theta_G$. Using an artificial label as the generator's input is one of the major differences between the SSGAN and a normal GAN, which takes only random noise as input. A simulated RSSI set generated by the SSGAN generator mimics a real fingerprint sample associated with an actual location. Consequently, accurately generated fingerprint data can effectively support the learning of a positioning model.

4.2. Discriminator

Given real data and fake data produced by the generator, the discriminator with parameter set $\theta_D$ aims to recognize whether each sample is fake. During learning, the generator and the discriminator try to outplay each other, and the process is repeated until the generator produces samples realistic enough that the discriminator can no longer reliably tell them apart from real data.

4.3. Classifier

Incorporating a classifier into the discriminator network is another major difference between the SSGAN and a normal GAN, which has no prediction model. The classifier with parameter set $\theta_C$ and the discriminator with parameter set $\theta_D$ share a network model, except for the output layer. Note that the fake data also have ground truths, namely the labels $\mathbf{y}$ given to the generator as queries, so the prediction errors on the fake data can be calculated. Therefore, training on both the actual and the fake data can improve the learning performance of the classifier.
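The shared network with two output layers can be sketched in NumPy as below: one shared hidden layer, a scalar discriminator head, and a softmax classifier head. The single ReLU layer, the layer sizes, and the use of cross-entropy summed over a real batch and a fake batch (labeled with the generator's query labels) are assumptions for illustration, not the paper's exact architecture or loss.

```python
import numpy as np


def softmax(a):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    e = np.exp(a - a.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def cdc_forward(h, params):
    """Shared hidden layer, then two output heads.

    Returns (critic scores of shape (N,), class probabilities of shape (N, l)).
    """
    hidden = np.maximum(0.0, h @ params["W"] + params["b"])  # shared ReLU layer
    score = hidden @ params["w_d"] + params["b_d"]           # discriminator head
    probs = softmax(hidden @ params["W_c"] + params["b_c"])  # classifier head
    return score, probs


def cross_entropy(probs, y_onehot):
    """Mean cross-entropy between predicted probabilities and one-hot labels."""
    return -np.mean(np.sum(y_onehot * np.log(probs + 1e-12), axis=1))


def classification_loss(params, h_real, y_real, h_fake, y_fake):
    """Assumed classifier loss: error on real data plus error on fake data."""
    _, p_real = cdc_forward(h_real, params)
    _, p_fake = cdc_forward(h_fake, params)
    return cross_entropy(p_real, y_real) + cross_entropy(p_fake, y_fake)


rng = np.random.default_rng(0)
s, n_hidden, l = 4, 8, 3  # hypothetical feature, hidden, and landmark sizes
params = {"W": rng.normal(0, 0.1, (s, n_hidden)), "b": np.zeros(n_hidden),
          "w_d": rng.normal(0, 0.1, n_hidden), "b_d": 0.0,
          "W_c": rng.normal(0, 0.1, (n_hidden, l)), "b_c": np.zeros(l)}
h_real, h_fake = rng.normal(size=(5, s)), rng.normal(size=(5, s))
y_real = np.eye(l)[[0, 1, 2, 0, 1]]
y_fake = np.eye(l)[[2, 2, 1, 0, 0]]  # the labels that were fed to the generator
score, probs = cdc_forward(h_real, params)
loss_c = classification_loss(params, h_real, y_real, h_fake, y_fake)
```

Sharing every layer except the heads means gradients from both the real/fake signal and the label signal shape the same hidden representation.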

4.4. SSGAN Formulation

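In WGAN-GP [19], the discriminator $D$ and the generator $G$ play a min–max game based on the Wasserstein distance $W(\mathbb{P}_r, \mathbb{P}_g)$, which is a distance between the real data distribution $\mathbb{P}_r$ and the generated data distribution $\mathbb{P}_g$, such that

$$\min_{G} \max_{D} \; W(\mathbb{P}_r, \mathbb{P}_g), \qquad W(\mathbb{P}_r, \mathbb{P}_g) = \mathbb{E}_{\mathbf{h} \sim \mathbb{P}_r}\left[D(\mathbf{h})\right] - \mathbb{E}_{\mathbf{z} \sim U[0,1]}\left[D(G(\mathbf{z}))\right], \tag{4}$$

where $\mathbf{h}$ are real data, $\mathbf{z}$ are noise vectors, and $U[0,1]$ is the uniform distribution with bounds [0, 1]. To solve (4), separate optimizations are performed for the generator and the discriminator. The discriminator is learned by minimizing the following loss:

$$L_D = -W(\mathbb{P}_r, \mathbb{P}_g) + \lambda \, \mathbb{E}_{\hat{\mathbf{h}}}\left[\left(\left\lVert \nabla_{\hat{\mathbf{h}}} D(\hat{\mathbf{h}}) \right\rVert_2 - 1\right)^2\right]. \tag{5}$$

In (5), the first term is the (negated) Wasserstein distance, and the second term is a gradient penalty that improves the stability of learning convergence, where $\lambda$ is a tuning parameter and $\hat{\mathbf{h}}$ are samples lying on a straight line between $\mathbf{h}$ and $G(\mathbf{z})$ [19]. The generator, on the other hand, is learned to fool the discriminator by minimizing the following loss:

$$L_G = -\mathbb{E}_{\mathbf{z} \sim U[0,1]}\left[D(G(\mathbf{z}))\right]. \tag{6}$$

In (5) and (6), $\theta_G$ and $\theta_D$, respectively, are kept fixed so that each network focuses on learning its own model.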
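To make (5) and (6) concrete, the NumPy sketch below evaluates both losses for a hypothetical linear critic, for which the gradient in the penalty term has a closed form. The linear critic, the function names, and the penalty weight λ = 10 (the value commonly used with WGAN-GP) are assumptions; a real implementation would use a deep critic and automatic differentiation to obtain the gradient at each interpolate.

```python
import numpy as np


def critic(h, w, b):
    """Hypothetical linear critic D(h) = h w + b; one score per row of h."""
    return h @ w + b


def wgan_gp_losses(h_real, h_fake, w, b, lam=10.0, seed=0):
    """Return (L_D, L_G) of Eqs. (5) and (6) for the linear critic above."""
    rng = np.random.default_rng(seed)
    # Interpolates h_hat = eps*h + (1 - eps)*G(z), eps ~ U[0, 1], as in WGAN-GP.
    eps = rng.uniform(0.0, 1.0, size=(h_real.shape[0], 1))
    h_hat = eps * h_real + (1.0 - eps) * h_fake
    # For a linear critic, grad_{h_hat} D(h_hat) = w at every interpolate,
    # so no automatic differentiation is needed here.
    grads = np.broadcast_to(w, h_hat.shape)
    penalty = np.mean((np.linalg.norm(grads, axis=1) - 1.0) ** 2)
    w_dist = critic(h_real, w, b).mean() - critic(h_fake, w, b).mean()
    loss_d = -w_dist + lam * penalty       # Eq. (5)
    loss_g = -critic(h_fake, w, b).mean()  # Eq. (6)
    return float(loss_d), float(loss_g)


h_real = np.array([[1.0, 1.0], [0.5, 1.5]])
h_fake = np.array([[0.0, 0.0], [0.2, 0.1]])
print(wgan_gp_losses(h_real, h_fake, w=np.array([0.6, 0.8]), b=0.0))
```

With a unit-norm `w` the penalty vanishes and $L_D$ reduces to the negated distance estimate; a weight vector of norm 2 instead adds $\lambda (2 - 1)^2 = 10$ to $L_D$.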

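The proposed Wi-Fi SSGAN extends WGAN-GP to semi-supervised learning. One of the main differences between SSGAN and WGAN-GP is the input configuration of the generator. The SSGAN generator's input $\mathbf{z}_y$ is defined as a concatenation of noise and a label, given by

$$\mathbf{z}_y = \begin{bmatrix} \mathbf{z} \\ \mathbf{y} \end{bmatrix}. \tag{7}$$

Accordingly, the generator produces fake data

$$\tilde{\mathbf{h}} = G(\mathbf{z}_y), \tag{8}$$

and the Wasserstein distance is defined as

$$W(\mathbb{P}_r, \mathbb{P}_g) = \mathbb{E}_{\mathbf{h} \sim \mathbb{P}_r}\left[D(\mathbf{h})\right] - \mathbb{E}_{\mathbf{z}_y}\left[D(G(\mathbf{z}_y))\right]. \tag{9}$$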
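The concatenated generator input and the fake data it produces, as in (7) and (8), can be sketched as follows. The one-layer generator with a `tanh` output, the dimensions, and the random choice of query labels are assumptions for illustration; the paper's generator is a deeper network.

```python
import numpy as np


def generator_input(z, y):
    """Eq. (7): concatenate noise z (N, k) and one-hot labels y (N, l) per row."""
    return np.concatenate([z, y], axis=1)


def generate(z, y, W_g, b_g):
    """Eq. (8) with a hypothetical one-layer generator: tanh(z_y W_g + b_g)."""
    return np.tanh(generator_input(z, y) @ W_g + b_g)


rng = np.random.default_rng(0)
n, k, l, s = 4, 6, 3, 5                # batch, noise, landmark, feature sizes (hypothetical)
z = rng.uniform(0.0, 1.0, (n, k))      # z ~ U[0, 1]
y = np.eye(l)[rng.integers(0, l, n)]   # random one-hot query labels
W_g = rng.normal(0.0, 0.1, (k + l, s))
b_g = np.zeros(s)
h_fake = generate(z, y, W_g, b_g)      # row i is a fake feature set labeled y[i]
```

Because each fake row is produced from a known label, the pair `(h_fake[i], y[i])` is a labeled sample that the classifier can be trained on.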

The second difference is the incorporation of a prediction model into the discriminator network to increase the accuracy of label prediction. The combined discriminator and classifier (CDC) model minimizes the following loss:

$$L_{CDC} = L_D + L_C, \tag{10}$$

where $L_D$ is defined in (5), and the classification loss $L_C$ is as follows:

Finally, the generator loss of SSGAN with fixed $\theta_D$ and $\theta_C$ is given by

The overall training algorithm of the proposed Wi-Fi SSGAN is summarized in Algorithm 1. After training, the AE model and the classifier model are obtained. In the test phase, when a query Wi-Fi RSSI set is given, the AE model first converts it to a feature set, and the classifier then performs probabilistic inference to estimate the landmark.
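The test-phase pipeline can be sketched as below, with the trained encoder and classifier passed in as callables. Filling empty RSSIs with −100 dBm before encoding, the [0, 1] scaling, and the toy encoder and classifier are assumptions chosen only so the example runs.

```python
import numpy as np


def softmax(a):
    """Row-wise softmax."""
    e = np.exp(a - a.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def localize(x_raw, encoder, classifier, fill=-100.0):
    """Test phase: fill empty RSSIs, encode with the AE, then classify.

    encoder: callable mapping a (1, d) array to (1, s) features.
    classifier: callable mapping (1, s) features to (1, l) probabilities.
    Returns (most probable landmark index, probability vector).
    """
    x = np.array([fill if v is None else v for v in x_raw], dtype=float)[None, :]
    probs = classifier(encoder(x))[0]
    return int(np.argmax(probs)), probs


# Toy stand-ins for a trained encoder (d = 3 -> s = 2) and classifier (l = 2).
W_e = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])


def encoder(x):
    return ((x + 100.0) / 100.0) @ W_e


def classifier(h):
    return softmax(h @ np.eye(2))


landmark, probs = localize([-40.0, None, -90.0], encoder, classifier)
```

Returning the full probability vector alongside the argmax keeps the probabilistic output available, for example to flag low-confidence queries.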

| Algorithm 1 Wi-Fi SSGAN |
|---|
| Input: Training dataset $D$ with RSSI sets $\mathbf{x}_i$ and one-hot landmark labels $\mathbf{y}_i$. |
| Output: An auto-encoder $H$ and a classifier $C$. |
| Feature extraction: |
| 1: Given the training dataset, learn the auto-encoder model $H$ and build the new feature dataset $D_h$ with $\mathbf{h}_i = H(\mathbf{x}_i)$. |
| SSGAN: |
| 2: Initialize a generator with $\theta_G$, a discriminator with $\theta_D$, and a classifier with $\theta_C$. |
| 3: repeat |
| 4: for each discriminator iteration do |
| 5: Sample a batch of real data from the feature dataset. |
| 6: Produce a batch of fake data by the generator in (8). |
| 7: Do optimization to reduce the loss in (10) and update $\theta_D$ and $\theta_C$. |
| 8: end for |
| 9: Produce a batch of fake data by the generator in (8). |
| 10: Do optimization to reduce the loss in (6) and update $\theta_G$. |
| 11: until end of learning |
| 12: Obtain the classifier with $\theta_C$. |
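The alternating update schedule of steps 3 to 11 can be sketched as a framework-agnostic loop, with the actual optimization steps passed in as callables. The callable names and `n_critic = 5` (the default used with WGAN-GP) are assumptions; steps 9 and 10 are merged into one `update_generator` call because, in an autodiff framework, the generator update must regenerate its fake batch so that gradients reach $\theta_G$.

```python
from typing import Any, Callable


def train_ssgan(sample_real: Callable[[], Any],
                generate_fake: Callable[[], Any],
                update_cdc: Callable[[Any, Any], None],
                update_generator: Callable[[], None],
                n_iters: int,
                n_critic: int = 5) -> None:
    """Alternating update schedule of Algorithm 1, steps 3-11."""
    for _ in range(n_iters):         # steps 3 and 11: repeat ... until end
        for _ in range(n_critic):    # steps 4-8: inner discriminator loop
            real = sample_real()     # step 5: real features h with labels y
            fake = generate_fake()   # step 6: fake features G(z_y), Eq. (8)
            update_cdc(real, fake)   # step 7: reduce the CDC loss (10)
        update_generator()           # steps 9-10: new fake batch, update theta_G


calls = {"real": 0, "fake": 0, "cdc": 0, "gen": 0}


def count(key):
    calls[key] += 1


train_ssgan(lambda: count("real"), lambda: count("fake"),
            lambda r, f: count("cdc"), lambda: count("gen"),
            n_iters=3, n_critic=5)
print(calls)  # {'real': 15, 'fake': 15, 'cdc': 15, 'gen': 3}
```

The usage example only counts calls, which makes the ratio of CDC updates to generator updates easy to check.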

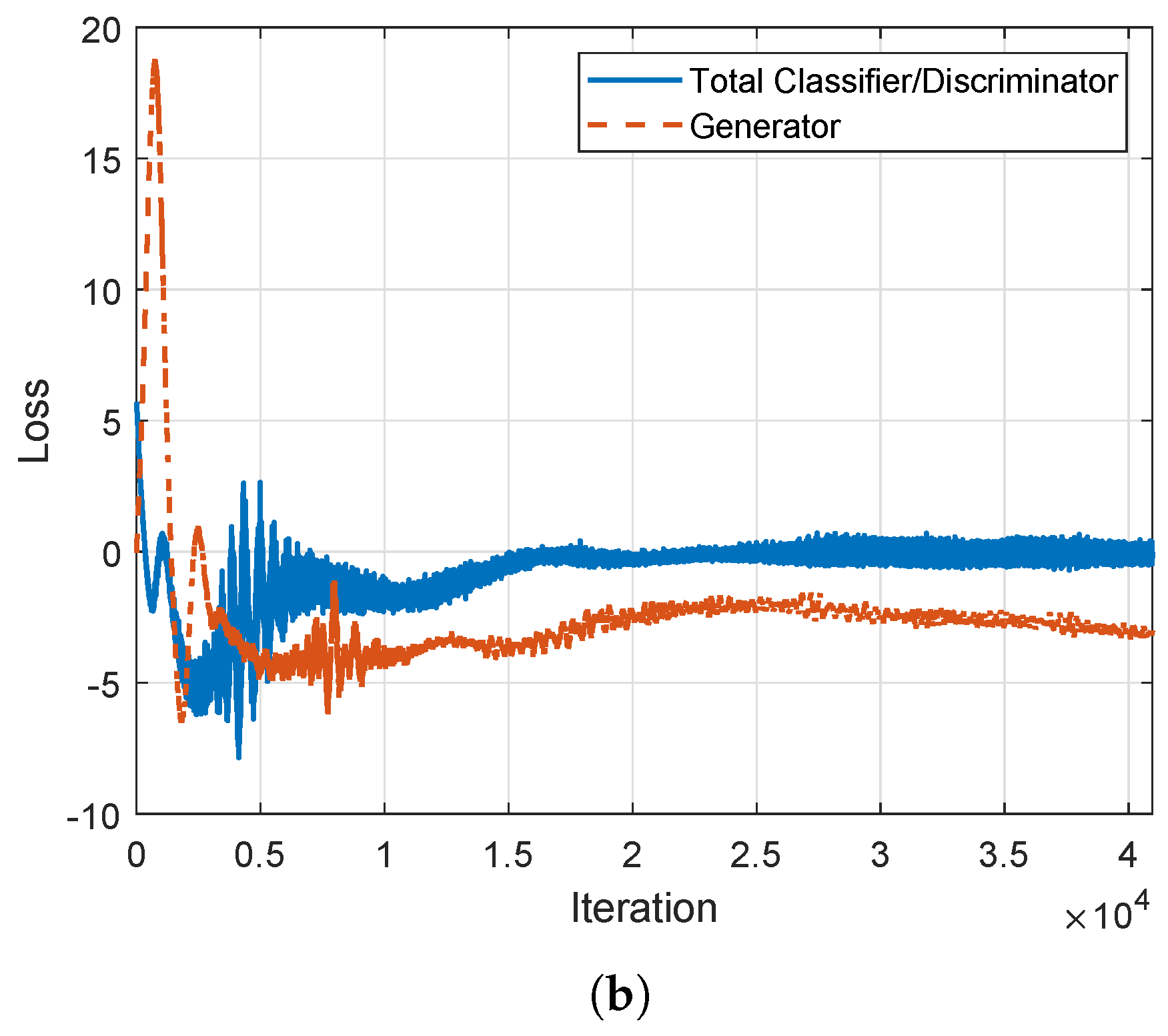

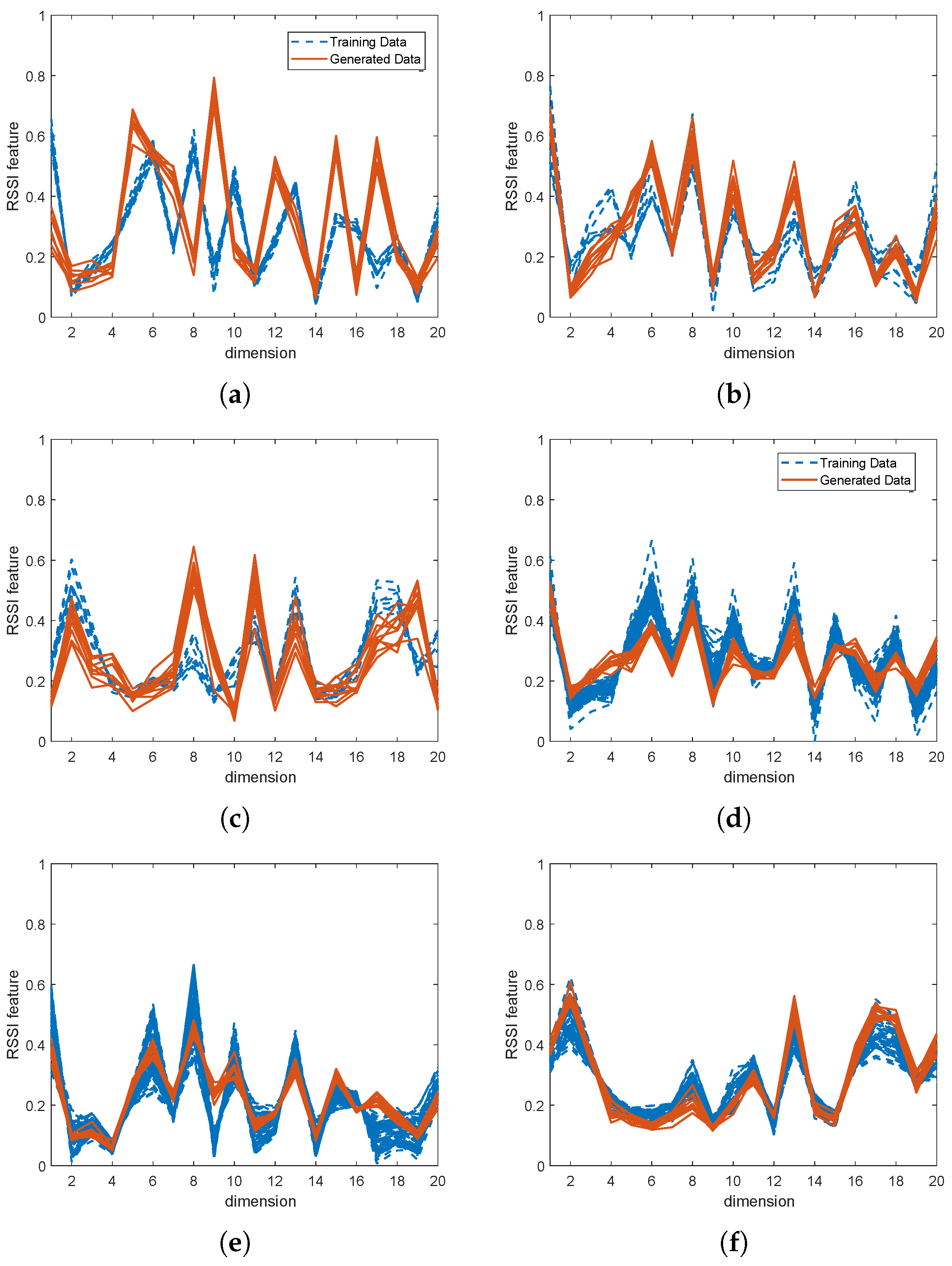

6. Conclusions

The Wi-Fi SSGAN, a new semi-supervised version of a generative adversarial network for Wi-Fi indoor localization, was presented. The proposed method produces artificial fingerprint data to compensate for a lack of actual fingerprint data. The experiments demonstrated the similarity of the fake data to real data and showed improved localization accuracy, especially when small amounts of actual training data were used. Many RSSI-based indoor localization algorithms have been reported, and these methods typically depend on manually collected, labeled fingerprint data. Therefore, accurately generated fingerprint data such as those presented in this paper can significantly support RSSI-based positioning algorithms. For instance, the synthetic data could be used to predict locations in areas that are otherwise inaccessible. In future work, we plan to further analyze how effectively the generated data enhance other RSSI-based localization methods, and to explore their limitations in scenarios where they may not be useful for positioning.