Safety, Efficiency, and Mental Workload in Simulated Teledriving of a Vehicle as Functions of Camera Viewpoint

Abstract

1. Introduction

2. Previous Studies on the Effect of Viewpoint on Driving-Related Indices, and Gaps in the Literature

3. The Current Study

4. Methods

4.1. Participants

4.2. Design

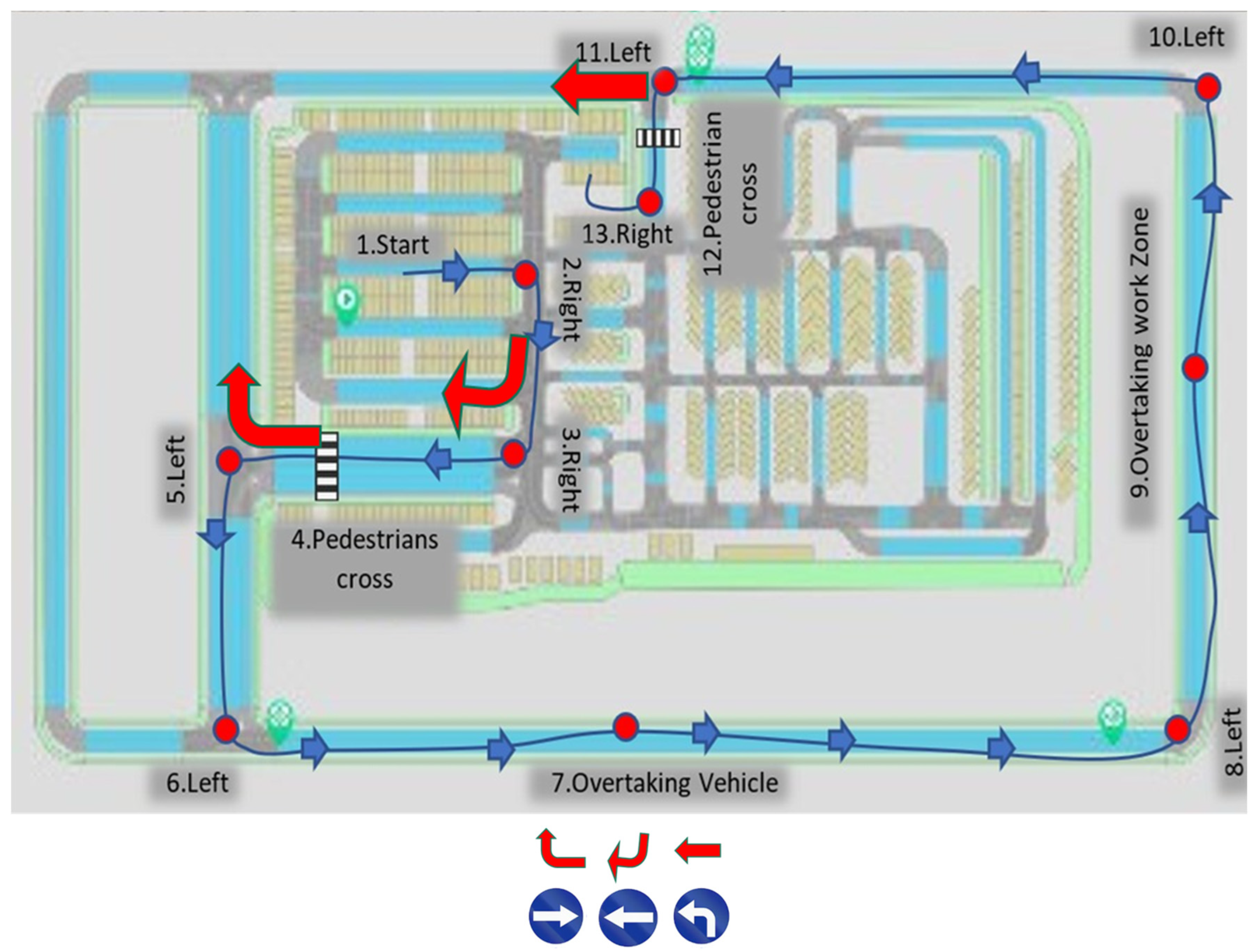

- Efficiency: this category included the completion times of the entire simulated driving route and of each challenge along it. Another efficiency index was the probability of making a navigation error. We instructed participants to follow direction signs to navigate along the driving route. They could err at three locations (see Table 2 and Figure 2), and any such error terminated the simulation run;

- Safety: this category included collision rates, steering intensity (lateral acceleration, m/s²), and maximal braking intensity (negative longitudinal acceleration, m/s²);

- Driver state: this category included the drivers’ reported mental workload.

We computed the values of all dependent measures as a function of viewpoint (driver vs. teledriver).
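The indices listed above can be illustrated with a minimal Python sketch. It is not the authors' analysis pipeline: the `RunLog` record, its field names, and the choice to average completion times only over runs that were not terminated by a navigation error are assumptions made for illustration. Braking intensity is taken as the magnitude of the most negative longitudinal acceleration, and steering intensity as the largest absolute lateral acceleration, both in m/s².

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Sequence


@dataclass
class RunLog:
    """One simulated run. All field names are hypothetical."""
    viewpoint: str              # "driver" or "teledriver"
    time_s: Sequence[float]     # sample timestamps in seconds
    long_acc: Sequence[float]   # longitudinal acceleration, m/s^2 (negative = braking)
    lat_acc: Sequence[float]    # lateral acceleration, m/s^2
    collisions: int             # number of collisions in the run
    navigation_error: bool      # True if the run ended on a wrong turn


def max_braking_intensity(log: RunLog) -> float:
    """Magnitude of the strongest deceleration; 0.0 if the run never braked."""
    return max(0.0, -min(log.long_acc))


def max_steering_intensity(log: RunLog) -> float:
    """Largest absolute lateral acceleration in the run."""
    return max(abs(a) for a in log.lat_acc)


def completion_time(log: RunLog) -> float:
    """Elapsed time between the first and last sample."""
    return log.time_s[-1] - log.time_s[0]


def summarize(logs: List[RunLog]) -> Dict[str, Dict[str, Optional[float]]]:
    """Aggregate each index per viewpoint."""
    summary: Dict[str, Dict[str, Optional[float]]] = {}
    for viewpoint in sorted({log.viewpoint for log in logs}):
        runs = [log for log in logs if log.viewpoint == viewpoint]
        # Assumption: terminated runs do not contribute a completion time.
        completed = [r for r in runs if not r.navigation_error]
        summary[viewpoint] = {
            "mean_completion_time_s": (
                mean(completion_time(r) for r in completed) if completed else None
            ),
            "p_navigation_error": sum(r.navigation_error for r in runs) / len(runs),
            "collisions_per_run": sum(r.collisions for r in runs) / len(runs),
            "mean_max_braking": mean(max_braking_intensity(r) for r in runs),
            "mean_max_steering": mean(max_steering_intensity(r) for r in runs),
        }
    return summary
```

Calling `summarize` on a list of `RunLog` records returns one dictionary per viewpoint, so the driver and teledriver conditions can be compared index by index.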

4.3. Apparatus and Materials

4.3.1. Questionnaires

4.3.2. Driving Simulator

4.3.3. Camera Viewpoints

4.3.4. Driving Challenges

4.4. Procedure

4.5. Statistical Analyses

4.5.1. Collision Rates

4.5.2. Navigational Errors

4.5.3. Intensity of Braking and Steering Events

4.5.4. Driving Challenges Completion Time

4.5.5. Mental Workload

5. Results

5.1. Driving Efficiency

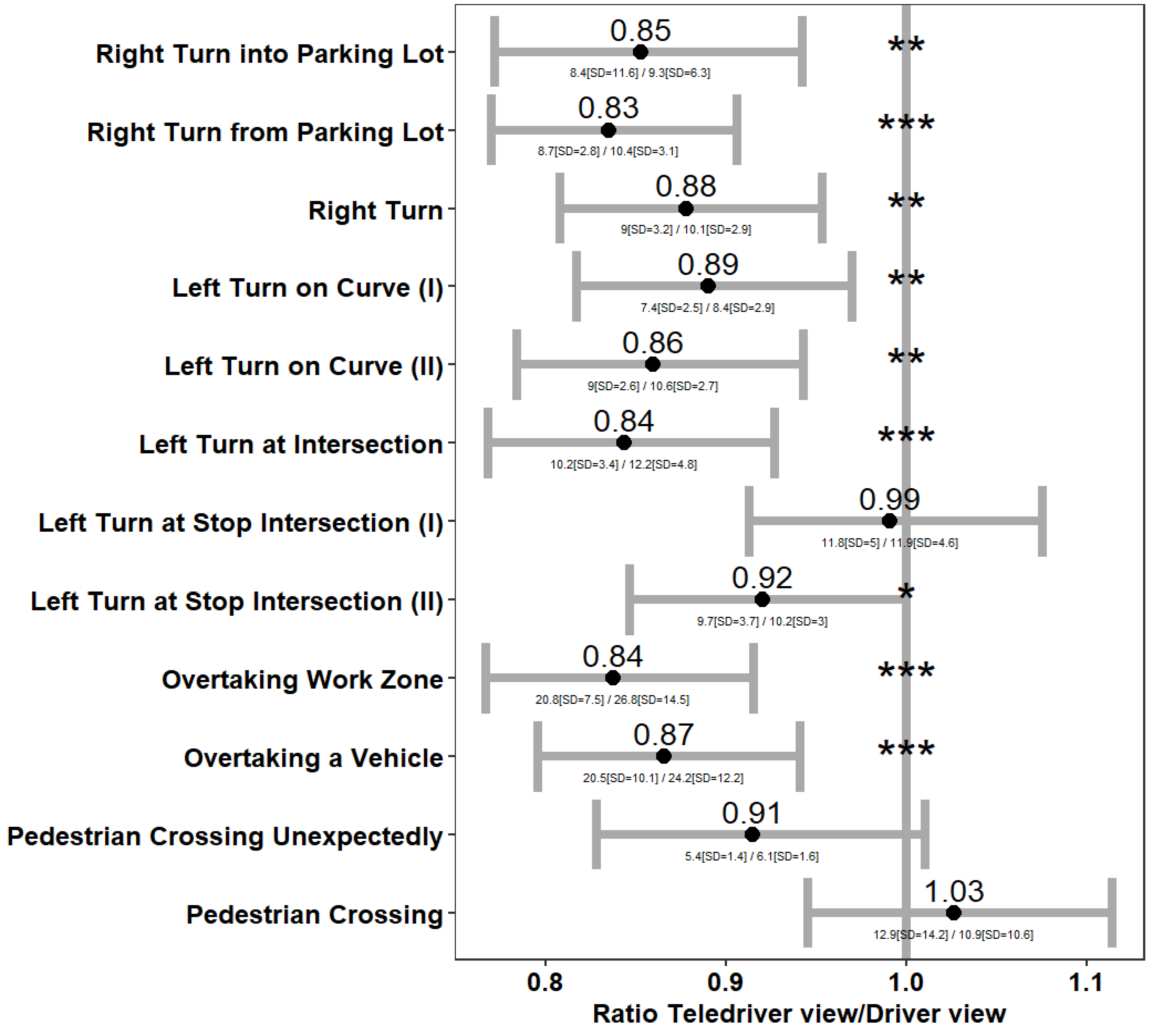

5.1.1. Completion Times

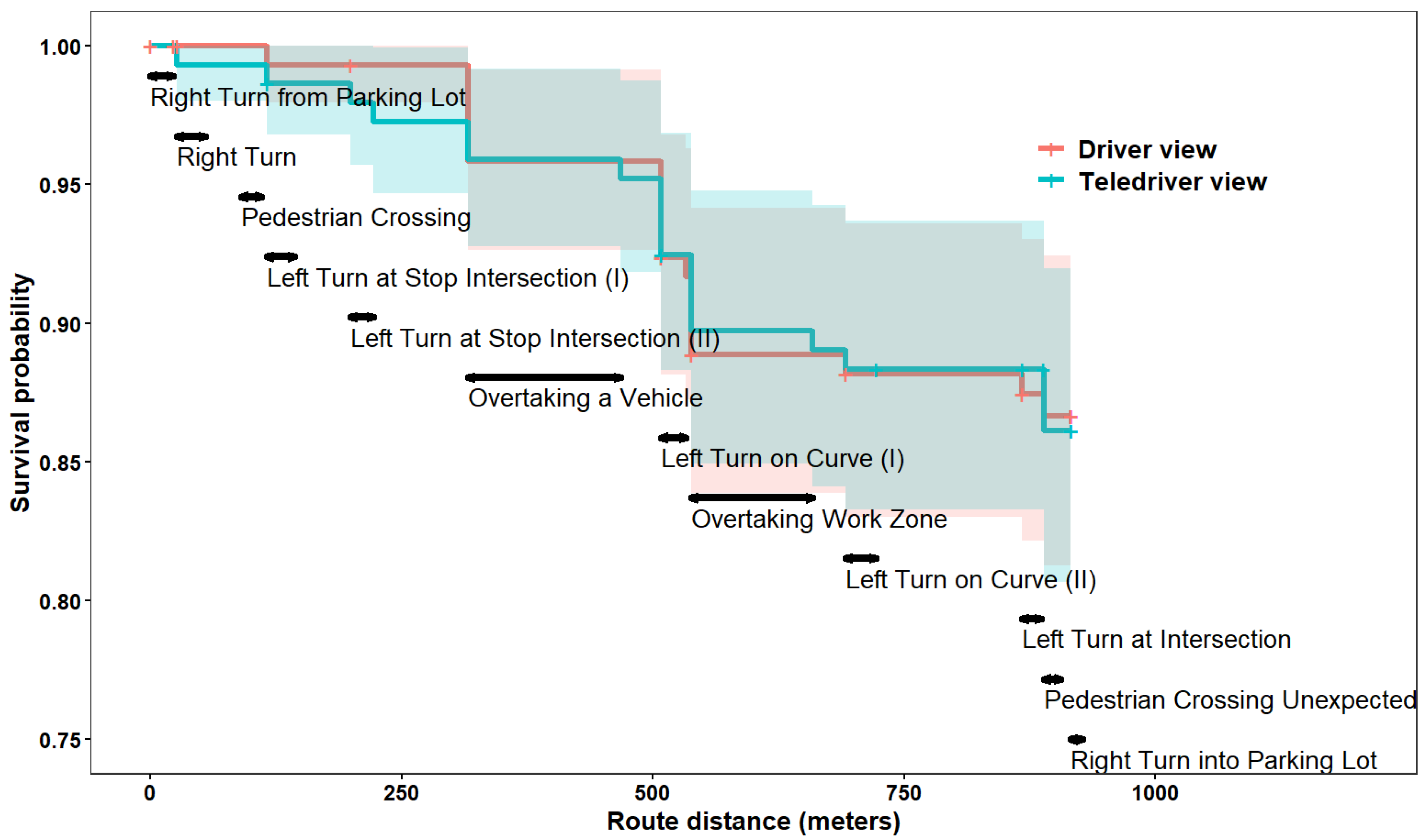

5.1.2. Navigational Errors

5.2. Driving Safety

5.2.1. Collision Rates

5.2.2. Probabilities for Braking and Steering Events

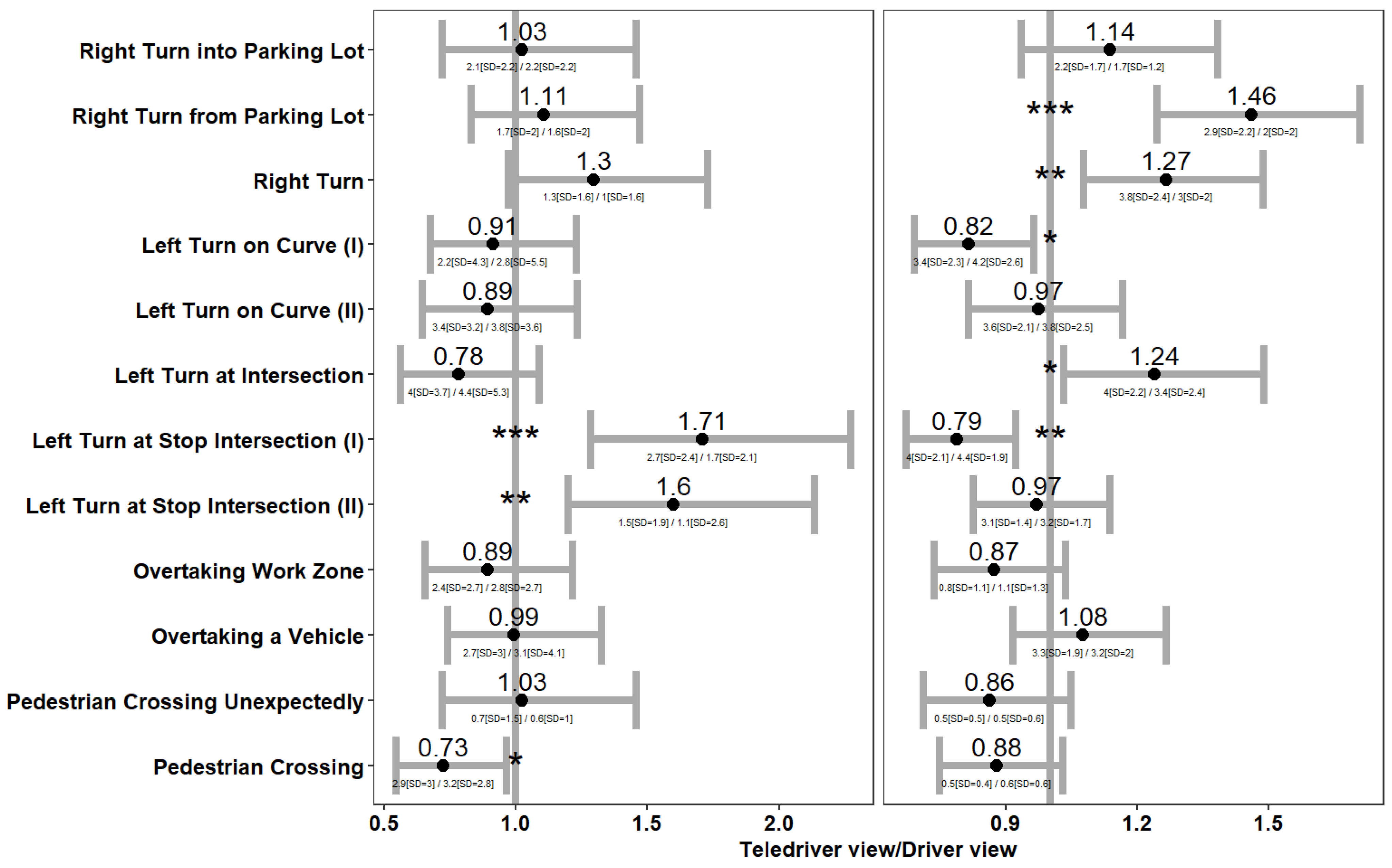

5.2.3. Maximal Braking and Steering Intensities by Driving Challenge

5.3. Mental Workload

6. Discussion

6.1. Driving Efficiency

6.2. Driving Safety

6.3. Mental Workload

6.4. Limitations and Recommendations for Future Studies

7. Conclusions and Practical Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hosseini, A.; Lienkamp, M. Predictive Safety Based on Track-Before-Detect for Teleoperated Driving Through Communication Time Delay. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 165–172.

- Mutzenich, C.; Durant, S.; Helman, S.; Dalton, P. Updating our understanding of situation awareness in relation to remote operators of autonomous vehicles. Cogn. Res. Princ. Implic. 2021, 6, 9.

- Bhavsar, P.; Das, P.; Paugh, M.; Dey, K.; Chowdhury, M. Risk Analysis of Autonomous Vehicles in Mixed Traffic Streams. Transp. Res. Rec. 2017, 2625, 51–61.

- Neumeier, S.; Walelgne, E.A.; Bajpai, V.; Ott, J.; Facchi, C. Measuring the Feasibility of Teleoperated Driving in Mobile Networks. In Proceedings of the 3rd IFIP/IEEE Network Traffic Measurement and Analysis Conference, TMA 2019, Paris, France, 19–21 June 2019; pp. 113–120.

- Shen, X.; Chong, Z.J.; Pendleton, S.; Fu, G.M.J.; Qin, B.; Frazzoli, E.; Ang, M.H. Teleoperation of On-Road Vehicles via Immersive Telepresence Using Off-the-Shelf Components. In Intelligent Autonomous Systems, 13th ed.; Springer: Cham, Switzerland, 2016; pp. 1419–1433.

- Meir, A.; Grimberg, E.; Musicant, O. The human-factors’ challenges of (tele)drivers of Autonomous Vehicles. Ergonomics 2024, 1–21.

- Hosseini, A.; Richthammer, F.; Lienkamp, M. Predictive Haptic Feedback for Safe Lateral Control of Teleoperated Road Vehicles in Urban Areas. In Proceedings of the 2016 IEEE 83rd Vehicular Technology Conference (VTC Spring), Nanjing, China, 15–18 May 2016; pp. 1–7.

- Zhang, T. Toward automated vehicle teleoperation: Vision, opportunities, and challenges. IEEE Internet Things J. 2020, 7, 11347–11354.

- Plavšic, M.; Duschl, M.; Tönnis, M.; Bubb, H.; Klinker, G. Ergonomic design and evaluation of augmented reality based cautionary warnings for driving assistance in urban environments. Proc. Intl. Ergon. Assoc. 2009.

- Prewett, M.S.; Johnson, R.C.; Saboe, K.N.; Elliott, L.R.; Coovert, M.D. Managing workload in human-robot interaction: A review of empirical studies. Comput. Hum. Behav. 2010, 26, 840–856.

- Fabroyir, H.; Teng, W.C. Navigation in virtual environments using head-mounted displays: Allocentric vs. egocentric behaviors. Comput. Hum. Behav. 2018, 80, 331–343.

- Lamb, M.; Hollands, J.G. Viewpoint Tethering in Complex Terrain Navigation and Awareness. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2005, 49, 1573–1577.

- Pazuchanics, S.L. The effects of camera perspective and field of view on performance in teleoperated navigation. Proc. Hum. Factors Ergon. Soc. 2006, 50, 1528–1532.

- Bernhard, C.; Hecht, H. The Ups and Downs of Camera-Monitor Systems: The Effect of Camera Position on Rearward Distance Perception. Hum. Factors 2021, 63, 415–432.

- Bernhard, C.; Reinhard, R.; Kleer, M.; Hecht, H. A Case for Raising the Camera: A Driving Simulator Test of Camera-Monitor Systems. Hum. Factors 2023, 65, 187208211010941.

- Van Erp, J.B.F.; Padmos, P. Image parameters for driving with indirect viewing systems. Ergonomics 2003, 46, 1471–1499.

- Saakes, D.; Choudhary, V.; Sakamoto, D.; Inami, M.; Igarashi, T. A Teleoperating Interface for Ground Vehicles Using Autonomous Flying Cameras. In Proceedings of the 2013 23rd International Conference on Artificial Reality and Telexistence (ICAT), Tokyo, Japan, 11–13 December 2013; pp. 13–19.

- Musicant, O.; Botzer, A.; Shoval, S. Effects of simulated time delay on teleoperators’ performance in inter-urban conditions. Transp. Res. Part F Traffic Psychol. Behav. 2023, 92, 220–237.

- Moniruzzaman, M.; Rassau, A.; Chai, D.; Islam, S.M.S. Teleoperation methods and enhancement techniques for mobile robots: A comprehensive survey. Robot. Auton. Syst. 2022, 150, 103973.

- Vay. Exciting News: Vay Is Now Live in Las Vegas! 2024. Available online: https://vay.io/exciting-news-vay-is-now-live-in-las-vegas/ (accessed on 9 April 2024).

- AVride. Universal Driver. 2024. Available online: https://www.avride.ai/driver#Hardware (accessed on 9 April 2024).

- Waymo. Waypoint, the Official Waymo Blog. 2022. Available online: https://waymo.com/blog/2020/03/designing-5th-generation-waymo-driver/ (accessed on 20 April 2024).

- Cruise. Driving Cities Forward. 2024. Available online: https://www.getcruise.com/about/ (accessed on 9 April 2024).

- Ackerman, E. What Full Autonomy Means for the Waymo Driver, March 2021. Available online: https://spectrum.ieee.org/full-autonomy-waymo-driver (accessed on 9 March 2024).

- Kolodny, L. Cruise Confirms Robotaxis Rely on Human Assistance Every Four to Five Miles. 2023. Available online: https://www.cnbc.com (accessed on 23 March 2024).

- Gitelman, V.; Bekhor, S.; Doveh, E.; Pesahov, F.; Carmel, R.; Morik, S. Exploring relationships between driving events identified by in-vehicle data recorders, infrastructure characteristics and road crashes. Transp. Res. Part C Emerg. Technol. 2018, 91, 156–175.

- Simons-Morton, B.G.; Zhang, Z.; Jackson, J.C.; Albert, P.S. Do elevated gravitational-force events while driving predict crashes and near crashes? Am. J. Epidemiol. 2012, 175, 1075–1079.

- Toledo, T.; Musicant, O.; Lotan, T. In-vehicle data recorders for monitoring and feedback on drivers’ behavior. Transp. Res. Part C Emerg. Technol. 2008, 16, 320–331.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (task load index): Results of empirical and theoretical research. Adv. Psychol. 1988, 52, 139–183.

- Sahar, Y.; Elbaum, T.; Musicant, O.; Wagner, M.; Altarac, L.; Shoval, S. Mapping grip force characteristics in the measurement of stress in driving. Int. J. Environ. Res. Public Health 2023, 20, 4005.

- Prakash, J.; Vignati, M.; Vignarca, D.; Sabbioni, E.; Cheli, F. Predictive Display with Perspective Projection of Surroundings in Vehicle Teleoperation to Account Time-Delays. IEEE Trans. Intell. Transp. Syst. 2023, 24, 9084–9097.

- Botzer, A.; Musicant, O.; Mama, Y. Relationship between hazard-perception-test scores and proportion of hard-braking events during on-road driving – An investigation using a range of thresholds for hard-braking. Accid. Anal. Prev. 2019, 132, 105267.

- Hirsh, T.; Sahar, Y.; Musicant, O.; Botzer, A.; Shoval, S. Relationship between braking intensity and driver heart rate as a function of the size of the measurement window and its position. Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 528–540.

- Hill, A.; Horswill, M.S.; Whiting, J.; Watson, M.O. Computer-based hazard perception test scores are associated with the frequency of heavy braking in everyday driving. Accid. Anal. Prev. 2019, 122, 207–214.

- Saunier, N.; Mourji, N.; Agard, B. Using Data Mining Techniques to Understand Collision Processes. In Proceedings of the 21st Canadian Multidisciplinary Road Safety Conference, Halifax, NS, Canada, 8–11 May 2011; pp. 1–13.

- Wielenga, T.J. A Method for Reducing On-Road Rollovers – Anti-Rollover Braking; SAE Technical Paper 1999-01-0123; SAE International: Warrendale, PA, USA, 1999.

- Wynne, R.A.; Beanland, V.; Salmon, P.M. Systematic review of driving simulator validation studies. Saf. Sci. 2019, 117, 138–151.

- Kass, S.J.; Beede, K.E.; Vodanovich, S.J. Self-report measures of distractibility as correlates of simulated driving performance. Accid. Anal. Prev. 2010, 42, 874–880.

- Selander, H.; Strand, N.; Almberg, M.; Lidestam, B. Ready for a Learner’s Permit? Clinical Neuropsychological Off-road Tests and Driving Behaviors in a Simulator among Adolescents with ADHD and ASD. Dev. Neurorehabilit. 2021, 24, 256–265.

- Ericsson, K.A.; Krampe, R.T.; Tesch-Römer, C. The role of deliberate practice in the acquisition of expert performance. Psychol. Rev. 1993, 100, 363–406.

- Helsen, W.F.; Hodges, N.J.; Van Winckel, J.; Starkes, J.L. The roles of talent, physical precocity and practice in the development of soccer expertise. J. Sports Sci. 2000, 18, 727–736.

- Higashiyama, A. Horizontal and vertical distance perception: The discorded-orientation theory. Percept. Psychophys. 1996, 58, 259–270.

- Desyllas, P.; Sako, M. Profiting from business model innovation: Evidence from pay-as-you-drive auto insurance. Res. Policy 2013, 42, 101–116.

- Litman, T. Pay-As-You-Drive Pricing for Insurance Affordability; Victoria Transport Policy Institute: Victoria, BC, Canada, 2011.

| Study | Investigated Viewpoint | Vehicle Type | Scenario Characteristics | Indices & Main Findings |
|---|---|---|---|---|
| Lamb & Hollands (2005) [12] | Egocentric, dynamic tether, rigid tether, three-dimensional exocentric, and two-dimensional exocentric. n = 12. | A tank controlled by a joystick. | Off-road navigation through waypoints; participants had to avoid entering the line of sight of enemy entities. | A. Time to complete the task: faster with the egocentric than with the other displays. B. The amount of time the tank was within sight of any enemy: shorter with the tethered displays than with the other displays and shorter with the exocentric display than with the egocentric display. C. Participants’ recognition of the terrain in which they were driving (accuracy and time to recognize): Recognition accuracy was best with the exocentric display and better with the tethered displays than with the egocentric display. Recognition time was shorter with the exocentric than with the tethered displays. |
| Pazuchanics (2006) [13] | First- and third-person camera perspectives, the latter meaning mounting the camera in a position where it can capture part of the vehicle in the context of its surrounding environment. n = 24. | Unmanned ground vehicles. | Off-road driving through obstacles: A. Tightly zigzagging pathway created with indestructible barriers. B. Pathway created with destructible barriers. C. Field of scattered destructible cars. | A. Time to complete obstacle courses: Faster with a third-person view. B. Number of collisions: No significant effect for camera viewpoint. C. Number of turnarounds (diverging more than 90° from the optimum path for ten seconds): No significant effect for camera viewpoint. D. Operating comfort: Higher with a third-person view. |
| Bernhard & Hecht (2021) [14] | Exp. 1. Rearview side camera at five positions: conventional and two vertical offsets (low, high) by two horizontal offsets (back and front). n = 20. Exp. 2. Rearview side camera at five positions (very low, low, conventional, high, and very high) with the back of the ego-vehicle either visible or not. n = 30. | Passenger vehicle. | Highway driving scenario. | Accuracy in distance estimation to a following vehicle at different distances from the ego-vehicle: Exp. 1. Low vs. conventional and high camera position resulted in underestimation of distance to a following vehicle. Exp. 2. If the back of the ego-vehicle was invisible: Very low (high) camera resulted in overestimation (underestimation) of distance relative to the conventional camera position. If the back of the ego-vehicle was visible, all the aforementioned effects disappeared. |
| Bernhard et al. (2023) [15] | Rearview side camera at three vertical positions: conventional, low (43 cm below conventional), and high (43 cm above conventional). n = 36. | Passenger vehicle. | Highway driving scenario in which participants are required to overtake a slow truck. An emerging vehicle visible in the rearview mirror should be considered in the overtaking decision. | A. Safety: participants declared the last safe gap (LSG) for overtaking. LSG was significantly lower for the low camera position than for the conventional or higher positions. There was no effect for the higher position. B. Self-reported perception of speed, distance, and reward of using the viewpoint: Participants rated the higher camera position as better than the lower position on distance, speed, and overall rearview perception. They rated the conventional position as better than the lower position on speed and overall rearview perception. |
| van Erp & Padmos (2003) [16] | Exp. 1. Two viewpoints on the vehicle roof: 1.8 m and 2.8 m above ground. Two FOVs (50° or 100°). n = 8. Exp. 2. Two viewpoints (1.8 and 2.8 m) and two FOVs (50° or 100°). n = 8. | Passenger vehicle. | Exp. 1. Field test on a closed track. Exp. 2. Simulator study. | Exp. 1. A. Lateral control (e.g., course stability) on different routes (e.g., 8 courses, sharp curves, lane change, driving backwards on a curve): There was an interaction between the effects of FOV and camera height. In several challenges, with low (high) FOV, performance was better with a low (high) camera viewpoint. B. Longitudinal control (distance to a stopping line and TTC with respect to a stopping line, difference between actual and target speed): Drivers stopped closer to the line with the higher camera. Exp. 2. A. Lateral control (sharp curves, lane change): With low (high) FOV, performance was better with a low (high) camera viewpoint (consistent with Exp. 1). B. Longitudinal control (speed and distance estimation): No significant effects were reported. |
| Saakes et al. (2013) [17] | Front view from a camera at the rear of the vehicle, bird’s eye view from a camera on a quadcopter, and a downward view from a camera on a pole. n = 17. | A robot vehicle. | Search and rescue: navigating through a maze and searching for victims (embodied by toys). | A. Task completion time: faster with the camera on a pole. B. Collision count: lower with the camera on a pole and with the camera on a quadcopter. C. Number of victims found: more victims were found with the camera on a pole and with the camera on a quadcopter. |
| The current study | Driver vs. teledriver viewpoint from the vehicle’s roof. | Passenger vehicle. | Simulator study in an urban scenario. | A. Efficiency. B. Safety. C. Mental workload. |

| Number on the Map in Figure 2 | Action | Road Environment | Challenge Description |
|---|---|---|---|
| 1 | Start | - | - |
| 2 | Turning | Parking lot | Right turn from the parking lot. |
| 3 | Turning | Straight road | Right turn. Attention to route directions is required to avoid navigational errors (see red arrow for error). |
| 4 | Pedestrian crossing | Crosswalk | Pedestrians crossing. |
| 5 | Turning | Intersection | Left turn at an intersection with a stop sign. Attention to route directions is required to avoid navigational errors (see red arrow for error). |
| 6 | Turning | Intersection | Left turn at an intersection with a stop sign. |
| 7 | Overtaking | Oncoming traffic lanes | Overtaking a vehicle with oncoming traffic. |
| 8 | Turning | Curved road | Left turn on a curve. |
| 9 | Overtaking | Oncoming traffic lanes | Overtaking a work zone with oncoming traffic. |
| 10 | Turning | Curved road | Left turn on a curve. |
| 11 | Turning | Intersection | Left turn at an intersection. Attention to route directions is required to avoid navigational errors (see red arrow for error). |
| 12 | Pedestrian crossing | Crosswalk | Pedestrians cross unexpectedly. |
| 13 | Turning | Parking lot | Right turn into the parking lot. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Musicant, O.; Botzer, A.; Richmond-Hacham, B. Safety, Efficiency, and Mental Workload in Simulated Teledriving of a Vehicle as Functions of Camera Viewpoint. Sensors 2024, 24, 6134. https://doi.org/10.3390/s24186134
