A Driving Warning System for Explosive Transport Vehicles Based on Object Detection Algorithm

Abstract

1. Introduction

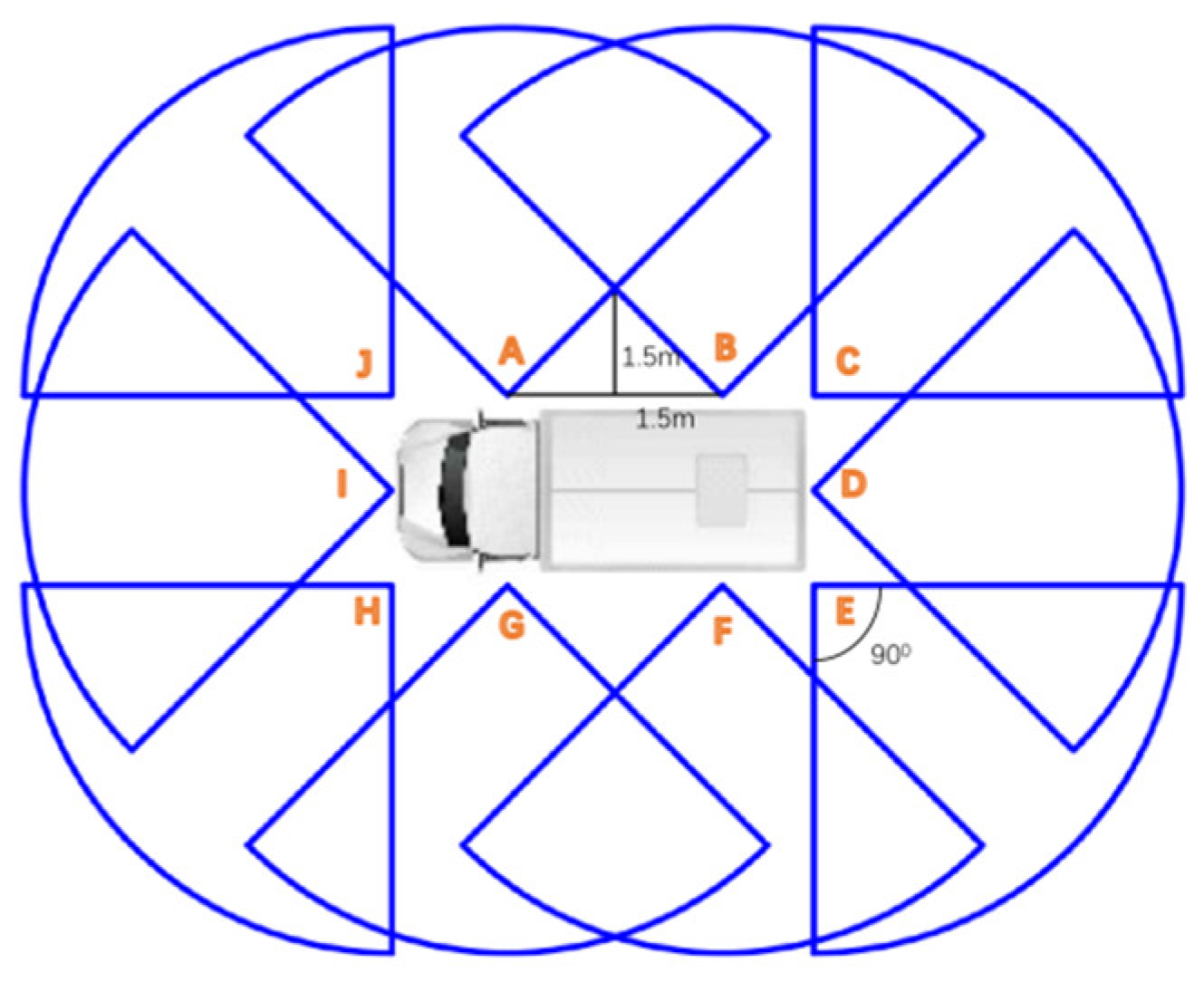

2. Single Camera Layout Plan

2.1. Single Camera Ranging

2.2. Camera Layout Plan

3. Implementation Steps of the YOLOv4 Object Detection Algorithm

3.1. Recognition Principle

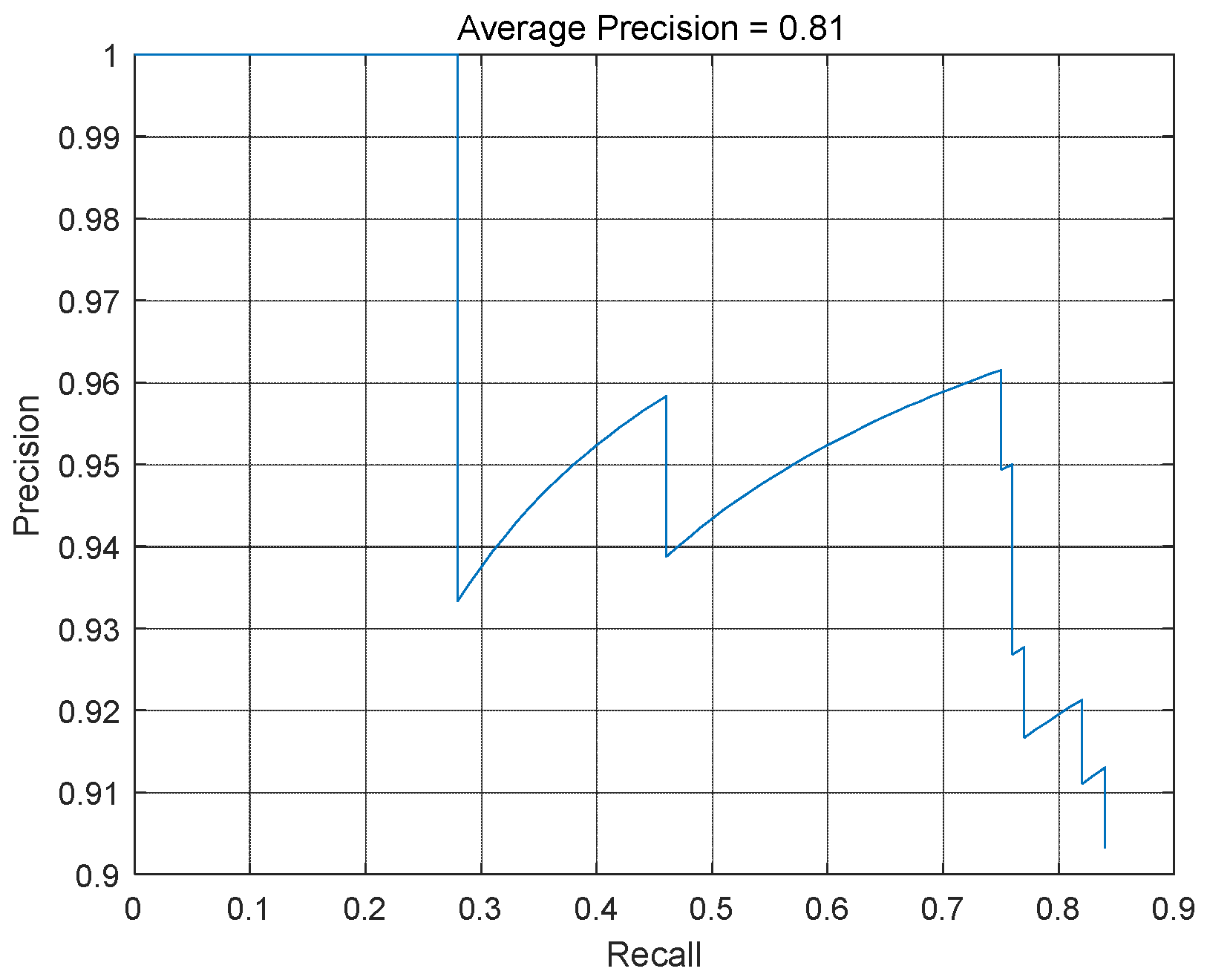

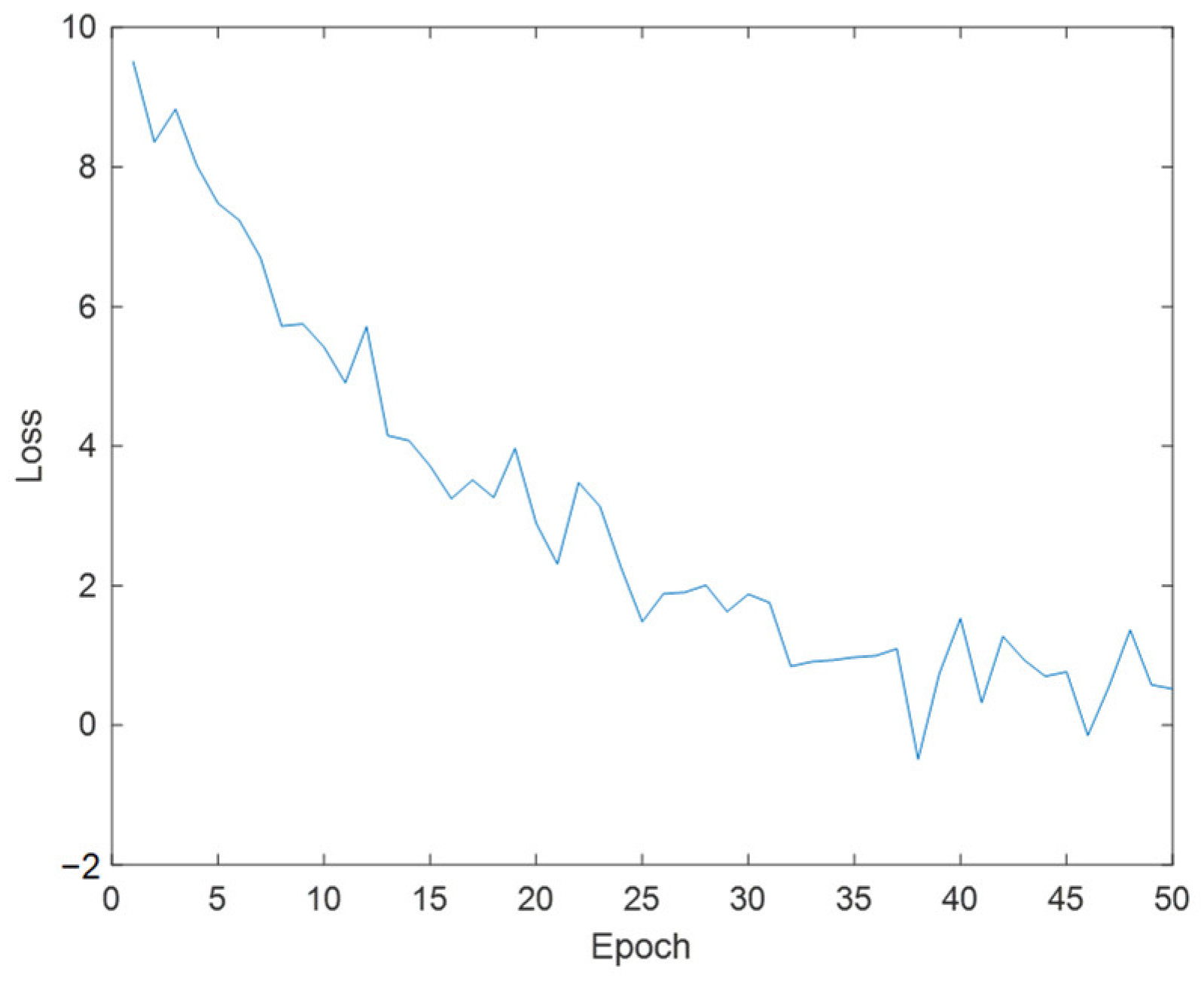

3.2. Model Training

3.2.1. Load Dataset

3.2.2. Perform Data Augmentation

4. Simulation of Vehicle Operation Using Cellular Automata Based on Game Theory

4.1. Input of Initial Parameters

- Acceleration rule: If the current speed of a vehicle is below the maximum speed limit, the vehicle accelerates, typically by 1 unit per time step, until it reaches the maximum speed.

- Deceleration rule: If the distance to the vehicle ahead is less than the vehicle's current speed, the vehicle must reduce its speed to no more than that distance to avoid a collision. This rule models the safe distance between vehicles.

- Random deceleration rule: A random factor is introduced so that vehicles occasionally slow down, reproducing the random deceleration observed in real traffic flow and bringing the model closer to its complexity.

- Movement rule: At each time step, every vehicle moves forward by a distance equal to its current speed.

The NaSch model is simulated by applying these rules iteratively to describe the movement of vehicles on the road. The car-following rules govern how each vehicle follows the one ahead, while the lane-changing rules take into account factors such as the speed difference, the distance ahead, the safety distance, and emergency lane changes.
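
The four NaSch rules listed above can be sketched as a single-lane update. This is a minimal illustration, not the authors' implementation: it assumes a circular road (periodic boundary), speeds measured in cells per time step, and a random-deceleration probability `p_slow` with which a moving vehicle loses one unit of speed, as in the standard NaSch formulation. The function name `nasch_step` and all parameter values are hypothetical.

```python
import random


def nasch_step(positions, speeds, road_length, v_max, p_slow, rng):
    """Advance a single-lane NaSch ring road by one time step.

    positions: distinct cell indices in [0, road_length)
    speeds:    current speeds in cells per time step
    All vehicles are updated in parallel from the old configuration.
    """
    n = len(positions)
    order = sorted(range(n), key=lambda i: positions[i])  # vehicles in road order
    new_speeds = list(speeds)
    for k, i in enumerate(order):
        leader = order[(k + 1) % n]  # next vehicle ahead (wraps around the ring)
        # Number of empty cells between this vehicle and the one ahead.
        gap = (positions[leader] - positions[i] - 1) % road_length
        v = min(speeds[i] + 1, v_max)        # 1. acceleration
        v = min(v, gap)                      # 2. deceleration (no collision)
        if v > 0 and rng.random() < p_slow:  # 3. random deceleration
            v -= 1
        new_speeds[i] = v
    # 4. Movement: each vehicle advances by its new speed.
    new_positions = [(positions[i] + new_speeds[i]) % road_length for i in range(n)]
    return new_positions, new_speeds


if __name__ == "__main__":
    rng = random.Random(42)
    pos, vel = [0, 3, 7, 20], [0, 0, 0, 0]
    for _ in range(50):
        pos, vel = nasch_step(pos, vel, road_length=100, v_max=5, p_slow=0.3, rng=rng)
    print(sorted(pos), vel)
```

Because every vehicle moves at most `gap` cells and the vehicle ahead never moves backwards, the parallel update cannot produce collisions.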

4.2. Following Rules

1. Calculate the distance from the preceding vehicle:
2. Deceleration rule:
3. Acceleration rule:
4. Speed-maintenance rule:
5. Random deceleration rule:
6. Movement rule:
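
The list names six following rules without their formulas. As a purely hypothetical illustration of how a speed-maintenance step can sit between deceleration and acceleration, the sketch below assumes an extra safety margin `d_safe` (in cells) that a vehicle keeps behind the preceding vehicle. `following_step` and every parameter name are illustrative assumptions, not the authors' equations.

```python
import math
import random


def following_step(x, v, front_x, v_max, d_safe, p_slow, rng):
    """One hypothetical car-following update for a single vehicle.

    x, front_x: cell indices of this vehicle and the preceding vehicle
                (front_x is None when the lane ahead is empty)
    v:          current speed in cells per time step
    d_safe:     assumed safety margin in cells (d_safe >= 0)
    Returns the new (position, speed).
    """
    # 1. Distance to the preceding vehicle (empty cells between the two).
    gap = math.inf if front_x is None else front_x - x - 1
    usable = gap - d_safe  # room left after keeping the safety margin
    if v > usable:
        # 2. Deceleration: drop to the usable room, never below zero.
        v = max(usable, 0)
    elif v + 1 <= usable:
        # 3. Acceleration: one cell per step, capped at v_max.
        v = min(v + 1, v_max)
    # 4. Maintenance: otherwise v == usable, so the speed is kept unchanged.
    # 5. Random deceleration with probability p_slow.
    if v > 0 and rng.random() < p_slow:
        v -= 1
    # 6. Movement: advance by the new speed.
    return x + v, v
```

With `d_safe = 0` and no maintenance branch, this reduces to the NaSch acceleration and deceleration rules; the margin only changes when a vehicle chooses to hold its speed instead of accelerating.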

4.3. Lane Changing Rules

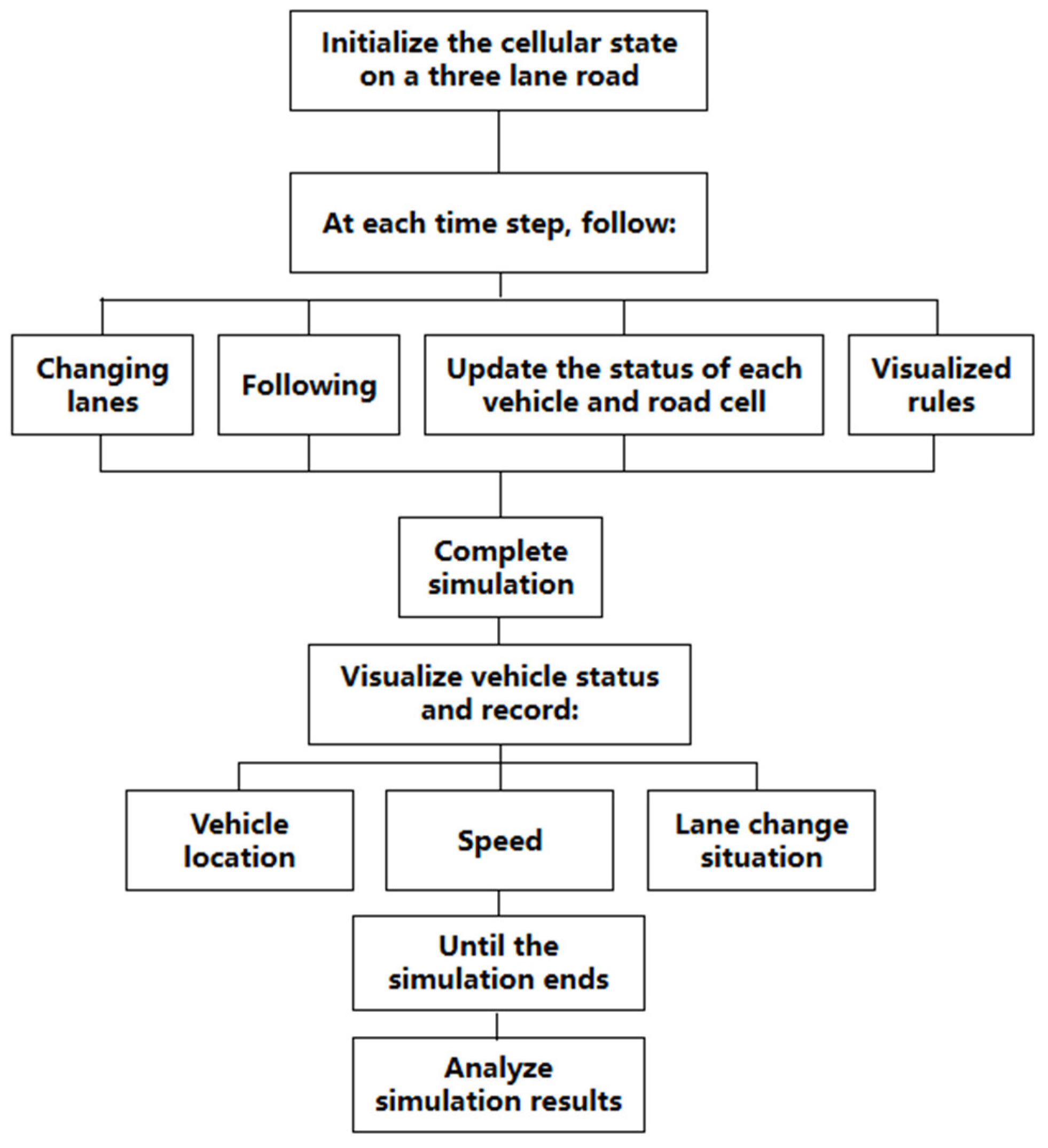
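
The lane-changing factors named in Section 4.1 (speed difference, distance ahead, safety distance, and emergency lane changes) can be combined into an incentive check plus a safety check, a common structure in two-lane cellular automaton models. The sketch below is hypothetical: `LaneView`, `should_change_lane`, and the specific inequalities are illustrative assumptions, and the game-theoretic decision layer used in this work is not modeled.

```python
from dataclasses import dataclass


@dataclass
class LaneView:
    """What a vehicle sees in one lane (gaps in empty cells, speeds in cells/step)."""
    gap_front: int      # gap to the nearest vehicle ahead in this lane
    v_front: int        # speed of that vehicle
    gap_back: int = 0   # gap to the nearest vehicle behind (used for the target lane)
    v_back: int = 0     # speed of that vehicle


def should_change_lane(v, current, target, d_safe, emergency=False):
    """Hypothetical lane-change decision combining incentive and safety checks."""
    # Safety distance: the follower in the target lane can keep its speed for one
    # step and still leave d_safe cells, and there is room ahead in the target lane.
    safe = target.gap_back >= target.v_back + d_safe and target.gap_front >= d_safe
    if emergency:
        # Emergency lane change (e.g. an abnormal approach): skip the incentive
        # checks, but still require the safety condition.
        return safe
    # Distance ahead: the current lane would force the vehicle to slow down.
    blocked = current.gap_front < v + 1
    # Speed difference: the vehicle ahead is slower than this vehicle.
    slower_ahead = current.v_front < v
    # The target lane must offer more room ahead than the current lane.
    better = target.gap_front > current.gap_front
    return blocked and slower_ahead and better and safe
```

Keeping the safety check even in the emergency branch reflects the idea that an evasive lane change should not create a new conflict with traffic in the target lane.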

4.4. Simulation Steps

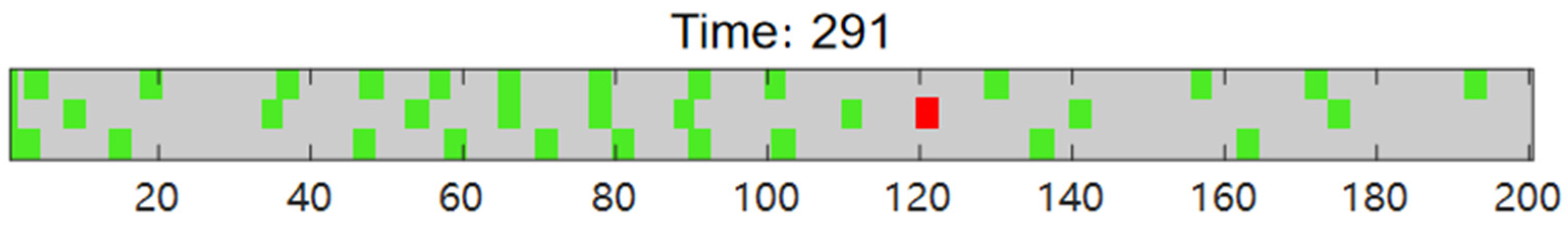

4.5. Simulation Results

5. Conclusions

- Based on the relevant road traffic regulations and the parameters of the selected consumer-grade camera, a camera layout plan for explosive transport vehicles was designed so that the surrounding environment can be monitored as required during operation.

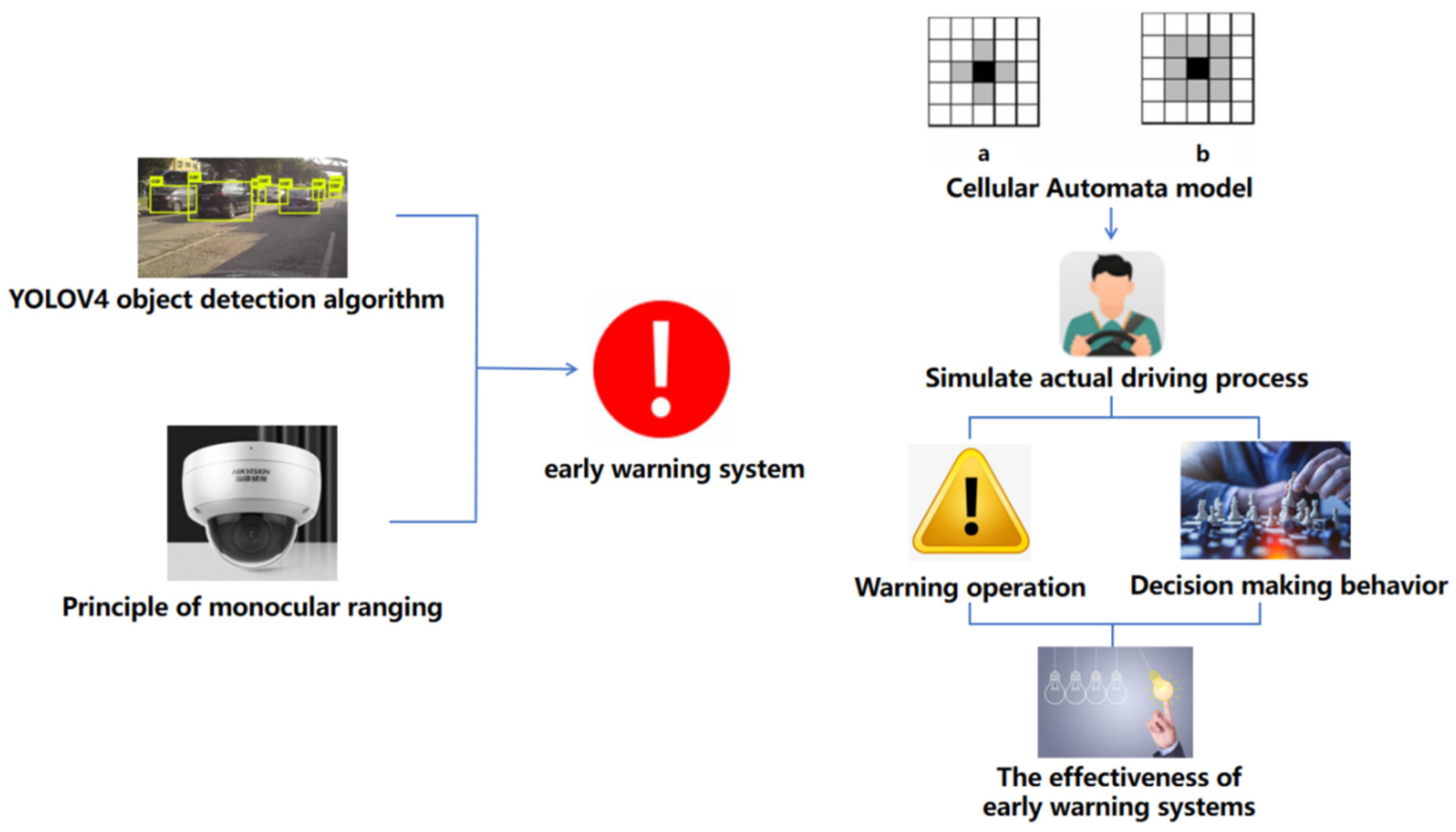

- The system recognizes surrounding vehicles with the YOLOv4 object detection algorithm and measures their distance with a single (monocular) camera: the YOLOv4 code was extended so that the distance between vehicles is calculated from each detection using the triangle similarity principle together with the vehicle width and wheelbase. The system promptly detects abnormal approaches or lane changes by surrounding vehicles and warns the driver so that appropriate measures can be taken.

- A game-theory-based cellular automaton model was used to simulate the actual operating scenario of the vehicle, the warning operation of the program, and the decision-making behavior of the driver of an explosive transport vehicle who encounters a dangerous situation. The results indicate that the model can effectively simulate real road traffic conditions and issue timely warnings when surrounding vehicles approach the explosive transport vehicle abnormally or change lanes near it. The model also effectively simulates the driver's decision-making in these dangerous situations: after the program issues a warning, the driver can promptly accelerate, decelerate, or change lanes to avoid an accident, which demonstrates the effectiveness of the warning program.

- Limitations and future work: So far, the system has been tested only in simulation and has not yet been deployed on hardware. Subsequent research will attempt to deploy it on real explosive transport vehicles, together with a warning display screen for the driver, and will collect data in real driving situations to further verify the effectiveness and feasibility of the system.
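
The monocular ranging described above follows from triangle similarity: an object of real width W at distance D appears with image width w = f·W/D, where f is the focal length in pixels, so D = f·W/w. The same relation applies to the wheelbase of a vehicle seen from the side. The sketch below is an illustration under assumptions, not the authors' modified YOLOv4 code: the bounding-box width is taken as the vehicle's pixel width, the 1.8 m vehicle width is a hypothetical value, and the time-to-collision check with a 3 s threshold is only one possible way to flag an abnormal approach. All function names are hypothetical.

```python
def calibrate_focal_px(known_distance_m, real_width_m, width_px):
    """Focal length in pixels from one reference image: f = w * D / W."""
    return width_px * known_distance_m / real_width_m


def distance_from_width(focal_px, real_width_m, width_px):
    """Triangle similarity: D = f * W / w (vehicle width or wheelbase)."""
    if width_px <= 0:
        raise ValueError("width_px must be positive")
    return focal_px * real_width_m / width_px


def approach_warning(d_prev_m, d_now_m, dt_s, ttc_threshold_s=3.0):
    """Warn if the gap is closing and the time to collision is below a threshold."""
    closing_speed = (d_prev_m - d_now_m) / dt_s  # m/s, positive when approaching
    if closing_speed <= 0:
        return False
    return d_now_m / closing_speed < ttc_threshold_s


if __name__ == "__main__":
    f = calibrate_focal_px(known_distance_m=20.0, real_width_m=1.8, width_px=90.0)
    d1 = distance_from_width(f, 1.8, 90.0)   # matches the reference distance
    d2 = distance_from_width(f, 1.8, 150.0)  # a wider box means a closer vehicle
    print(round(d1, 2), round(d2, 2), approach_warning(d1, d2, dt_s=1.0))
```

In practice the per-frame distance from a detector is noisy, so smoothing the distance over several frames before computing the closing speed would reduce false warnings.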

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Functional Requirement | Camera Model | Focal Length | Horizontal Field of View | Infrared Irradiation Distance |
|---|---|---|---|---|
| Single-camera rough measurement of front and rear vehicle distance | Hikvision 4 MP infrared dome camera (3146FWD-I) | 2.8–8 mm | 37.5–98.2° | 60 m |
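
Monocular ranging needs the focal length in pixels, which can be estimated from the horizontal field of view in the table with the pinhole model f_px = (image width / 2) / tan(HFOV / 2); the same model gives the road width covered at a given distance as 2·D·tan(HFOV / 2). The sketch assumes an image width of 2560 pixels, a common resolution for 4 MP cameras but not a value stated here, ignores lens distortion at the wide end, and uses hypothetical function names.

```python
import math


def focal_px_from_hfov(hfov_deg, image_width_px):
    """Pinhole model: focal length in pixels from the horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def coverage_width_m(distance_m, hfov_deg):
    """Horizontal width covered by the camera at a given distance."""
    return 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)


if __name__ == "__main__":
    width_px = 2560  # assumed image width for a 4 MP sensor
    for hfov in (98.2, 37.5):  # wide and narrow ends of the range in the table
        f_px = focal_px_from_hfov(hfov, width_px)
        print(hfov, round(f_px), round(coverage_width_m(60.0, hfov), 1))
```

A narrower field of view gives a longer pixel focal length, so a distant vehicle spans more pixels and its width-based distance estimate is less sensitive to a one-pixel error in the bounding box.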

| Number of Road Lanes | Cell Length | | |
|---|---|---|---|
| 3 | 6 m | 3 | 10 |

| Total time T | | | |
|---|---|---|---|
| 0.3 | 0.5 | 1 | 1000 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, J.; Zheng, R.; Liu, X.; Jiang, W.; Jia, M. A Driving Warning System for Explosive Transport Vehicles Based on Object Detection Algorithm. Sensors 2024, 24, 6339. https://doi.org/10.3390/s24196339

Sun J, Zheng R, Liu X, Jiang W, Jia M. A Driving Warning System for Explosive Transport Vehicles Based on Object Detection Algorithm. Sensors. 2024; 24(19):6339. https://doi.org/10.3390/s24196339

Chicago/Turabian Style: Sun, Jinshan, Ronghuan Zheng, Xuan Liu, Weitao Jiang, and Mutian Jia. 2024. "A Driving Warning System for Explosive Transport Vehicles Based on Object Detection Algorithm" Sensors 24, no. 19: 6339. https://doi.org/10.3390/s24196339

APA Style: Sun, J., Zheng, R., Liu, X., Jiang, W., & Jia, M. (2024). A Driving Warning System for Explosive Transport Vehicles Based on Object Detection Algorithm. Sensors, 24(19), 6339. https://doi.org/10.3390/s24196339