Abstract

Fourier ptychographic microscopy, a computational imaging method, can reconstruct high-resolution images but suffers from optical aberration, which degrades imaging quality. To address this, this paper proposes a network model, built in the TensorFlow framework, that simulates the forward imaging process and takes the sample and the coherent transfer function as inputs. The proposed model improves on the Wirtinger flow algorithm: it retains the central idea, simplifies the calculation, and optimizes the update through back propagation. In addition, Zernike polynomials are used to estimate the aberration accurately. Simulation and experimental results show that this method improves the accuracy of aberration correction, maintains good correction performance in complex scenes, and reduces the influence of optical aberration on imaging quality.

1. Introduction

Fourier ptychographic microscopy (FPM) [,] is an emerging imaging technique proposed by Zheng et al. in 2013. Compared with traditional microscopy, it combines the ideas of phase recovery [,,], ptychography (stacked imaging) [], and synthetic aperture [] to break through the limit imposed by the numerical aperture of the objective lens, improving image resolution while preserving the original field of view. In practice, however, optical aberration limits the quality of the imaging results.

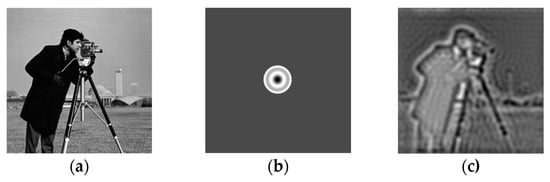

Aberration refers to the difference between the actual and ideal images. Whereas ideal focusing converges light rays to a single point, aberration is the deviation of rays from the optimal focal point, which spreads the focus in space []. Because the imaging system has a finite aperture and field of view, incident light passing through different parts of the aperture can be imaged at different positions. In optics, the aberrations of an imaging system are divided into seven kinds: spherical aberration, coma, astigmatism, field curvature, distortion, positional (axial) chromatic aberration, and magnification (lateral) chromatic aberration. Figure 1 illustrates the effect of a spherical aberration on an image.

Figure 1.

Comparison of images before and after adding aberrations: (a) the cameraman image; (b) the coherent transfer function with the addition of a spherical aberration; (c) the image with the addition of a spherical aberration.

Aberration is commonly corrected by jointly recovering the high-resolution complex object and the unknown aberrated pupil function during the iterative process. For example, Ou et al. [] proposed a phase recovery algorithm (EPRY-FPM) based on the ePIE method [], which recovers the extended sample spectrum and the pupil function of the imaging system from the sample images captured by the FPM. With the continuous development of deep learning, more and more researchers have moved aberration correction into neural networks to exploit their fast computation and improve the efficiency of the algorithm. For example, Zhang et al. [] modeled the sample and the aberration as the learnable weights of a multiplication layer and found that the INNM network architecture could recover a complex sample free of aberrations. Zhang et al. [] proposed a Fourier imaging neural network (FINN-CP) in TensorFlow, composed of two models, to correct the position error and wavefront aberration of the system. Hu et al. [] proposed a microscopic image aberration correction method based on deep learning and prior knowledge of the aberration, which enhances and corrects the microscopic image in the form of image restoration. Zhang et al. [] combined a channel attention module with a physics-based neural network to correct aberrations adaptively; Zhao et al. [] established the relationship between the phase and the aberration coefficients through deep learning to segment samples from backgrounds [] and realized fast automatic aberration compensation []. Wu et al. [] proposed an FPM aberration correction reconstruction framework (AA-P) based on an improved phase retrieval strategy, which improves the iterative reconstruction quality by optimizing the update strategies for the spectral function and the pupil function, alleviating the influence of mixed wavefront aberrations on the reconstructed image and avoiding errors in the reconstruction process. Xiang et al. [] proposed a phase diversity-based FP (PDFP) scheme for aberration correction. The phase diversity (PD) algorithm is an unconventional imaging technique introduced by Gonsalves and Chidlaw [], which characterizes wavefront aberrations by means of a set of focused and defocused images. Experiments have demonstrated the ability of this scheme to correct changing aberrations and improve image quality. In all of these approaches, aberration correction safeguards the quality of image reconstruction and preserves more detail in the reconstructed image.

In this paper, we propose an aberration correction method for Fourier ptychographic microscopy, named Integrated Neural Network based on Improved Wirtinger Flow (INN_IWF), to address the aberration present in the imaging process. The proposed model is a trainable network built on the TensorFlow framework that simulates the entire imaging process. The network simulates the forward imaging process of the FPM system and models the optical aberration of the objective lens as the pupil function, so that the aberration can be estimated and the update optimized by back propagation. Furthermore, an alternate updating (AU) mechanism and Zernike modes are introduced to further improve the performance of the network. The method can therefore recover optical aberrations effectively while preserving the overall performance of the network. Several sets of experiments show that the proposed method outperforms the compared methods in improving image reconstruction quality while retaining more detail.

2. Methods

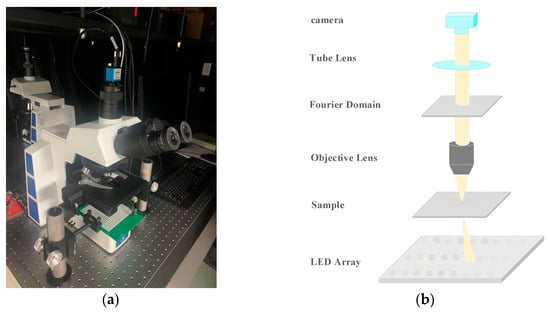

2.1. Fourier Ptychographic Microscope

The difference between a Fourier ptychographic microscope and a conventional microscope is that the former uses an array of LEDs in place of the conventional light source. The LEDs are lit selectively to illuminate the sample from a variety of angles. Figure 2a shows the RX50 series upright field microscope and Figure 2b shows a schematic of the simulated device. The camera used in the device is a DMK 33UX264 (The Imaging Source, Bremen, Germany; 3.45 µm pixel size, 2448 × 2048 pixels). The purpose of this device is to image the sample digitally. The sample can be observed either directly by eye or by using software to view the actual images captured by the camera.

Figure 2.

Fourier ptychographic microscope []. (a) Real FPM system; (b) FPM simulation schematic.

The device consists of a DMK 33UX264 camera, an eyepiece, an optical path selector lever, a Y-axis moving handwheel, a mirror group, a tightening and loosening adjusting handwheel, an adjusting light wheel, a light collector mirror, an X-axis moving handwheel, a mechanical platform, an LED light board holder, an LED light board (20 × 20), etc. The LED light board parses the commands sent by the MATLAB program through the serial port and lights up the LEDs at the specified positions. Because each LED contains built-in red, green, and blue emitters, the device can capture images with a monochrome camera and synthesize them into color images. The light panel can be fixed in place or moved up and down on the LED brackets.

2.2. Imaging Model and Reconstruction Model

2.2.1. Imaging Model

In the forward imaging process, the sample can be represented by the transfer function , where r represents the two-dimensional coordinate. Assuming that the distance between the LED lamp and the sample is far enough, the illumination wave of the LED lamp can be approximated as an oblique plane wave, and the wave vector of the nth lamp can be expressed as

where represents the incident angle of the nth LED lamp, is the wavelength of the incident light, and the complex amplitude entering the sample plane is expressed as . When the nth LED lamp illuminates the sample, the output field after Fourier transform can be expressed as . Illuminating the sample using the oblique plane wave with a wave vector is equivalent to the shift of the sample spectrum . When passing through the objective lens, the field is lowpass filtered by the pupil function . At this time, the forward imaging process of FPM can be expressed as

where represents the intensity information on the sensor, represents the complex amplitude distribution on the sensor, represents the sample spectrum illuminated by its plane wave vector plane wave, represents the two-dimensional coordinate, and represents the inverse Fourier transform [].
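The forward model described above can be sketched numerically as follows. This is a minimal NumPy illustration under stated assumptions, not the network used in this paper: the function names `circular_pupil` and `forward_image` are hypothetical, the illumination angle is represented directly as an integer pixel offset `shift` of the sample spectrum, grid sizes are assumed even, and the sign convention of the shift is a choice.

```python
import numpy as np

def circular_pupil(n, radius):
    """Binary low-pass pupil: 1 inside a centred disc of `radius` pixels, 0 outside (n even)."""
    ky, kx = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
    return (kx**2 + ky**2 <= radius**2).astype(complex)

def forward_image(sample, pupil, shift):
    """Simulate one low-resolution intensity image for one LED.

    sample : complex high-resolution object, shape (N, N)
    pupil  : pupil function on the low-resolution grid, shape (n, n), n <= N
    shift  : (dy, dx) spectrum offset in pixels caused by the oblique plane wave;
             the shifted window must stay inside the (N, N) spectrum
    """
    N, n = sample.shape[0], pupil.shape[0]
    spectrum = np.fft.fftshift(np.fft.fft2(sample))       # centred sample spectrum
    cy, cx = N // 2 + shift[0], N // 2 + shift[1]          # centre of the shifted window
    sub = spectrum[cy - n // 2:cy + n // 2, cx - n // 2:cx + n // 2]
    field = np.fft.ifft2(np.fft.ifftshift(sub * pupil))    # low-pass filtered field at the sensor
    return np.abs(field) ** 2                              # the sensor records intensity only
```

For a featureless sample, an on-axis LED gives a uniform bright-field image, while an LED whose offset exceeds the pupil radius gives a dark image, which is the behaviour expected from shifting the spectrum before low-pass filtering.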

2.2.2. Reconstruction Model

In the reconstruction process, FPM obtains a high-resolution complex amplitude distribution by synthesizing images with different frequency domain information. The classical FPM reconstruction algorithm iteratively estimates the complex amplitude image and updates it using the captured intensity image. An iteration can be expressed as

Equation (3) is used to estimate the high-resolution image relative to each LED light, while Equation (4) updates the high-resolution image by utilizing the captured low-resolution intensity image. The degree of spectral convergence can be assessed by repeating the calculation, and low-resolution images can be used for the initial . Finally, the estimated spectrum is transformed into by inverse Fourier transform, and the high-resolution image is extracted from .
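One iteration of the classical reconstruction for a single LED can be sketched as below. This is the textbook amplitude-replacement step under assumed conventions (a binary pupil, an integer pixel offset `shift` for the illumination angle, even grid sizes); the function name `update_spectrum` is hypothetical and the exact form of Equations (3) and (4) may differ in scaling.

```python
import numpy as np

def update_spectrum(spectrum, pupil, shift, measured):
    """Classical FPM update for one LED.

    spectrum : current centred high-resolution spectrum estimate, shape (N, N)
    pupil    : binary pupil on the low-resolution grid, shape (n, n)
    shift    : (dy, dx) spectrum offset in pixels for this LED
    measured : captured low-resolution intensity image, shape (n, n)
    """
    N, n = spectrum.shape[0], pupil.shape[0]
    cy, cx = N // 2 + shift[0], N // 2 + shift[1]
    rows = slice(cy - n // 2, cy + n // 2)
    cols = slice(cx - n // 2, cx + n // 2)
    sub = spectrum[rows, cols]
    # Estimate the low-resolution complex field for this illumination angle.
    field = np.fft.ifft2(np.fft.ifftshift(sub * pupil))
    # Keep the estimated phase, replace the amplitude with the measured one.
    corrected = np.sqrt(measured) * np.exp(1j * np.angle(field))
    sub_new = np.fft.fftshift(np.fft.fft2(corrected))
    # Write the corrected values back only inside the pupil support.
    updated = spectrum.copy()
    updated[rows, cols] = np.where(np.abs(pupil) > 0, sub_new, sub)
    return updated
```

If the measured intensity already agrees with the current estimate, the step leaves the spectrum unchanged, which is the fixed point that the repeated calculation converges towards.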

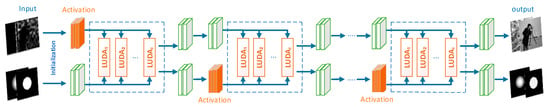

2.3. Integrated Neural Network Based on Improved Wirtinger Flow

2.3.1. Network Architecture

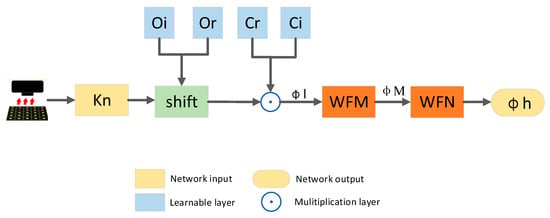

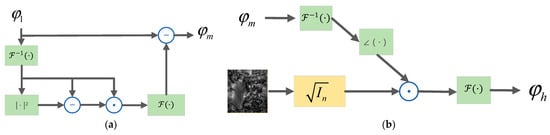

The whole network implements aberration correction in the TensorFlow framework. Figure 3 shows the overall flowchart of INN_IWF. The up-sampled captured sample image and the aberration-free coherent transfer function serve as the two inputs of the network. They are alternately updated and fed into the lighting update units with different angles (LUDA), as shown in Figure 4. A set of captured images and their corresponding wave vectors constitutes one sampling pass: in each pass, the images for all illumination angles are fed into the model, and the model parameters are updated by back-propagation. The expected results are obtained after multiple training passes. The WFM and WFN modules are shown in Figure 5a,b, respectively.

Figure 3.

INN_IWF overall flowchart.

Figure 4.

LUDA flowchart.

Figure 5.

Flowchart of WFM and WFN modules. (a) WFM module; (b) WFN module.

The pupil recovery module is specifically formulated as

where O(k) is the Fourier spectrum of the sample, and and are the original aperture in the Fourier domain during the update of the INN_IWF network and the updated aperture, respectively. is a pre-upsampled sample image. represents the coherent transfer function of the objective lens, which characterizes the imaging quality of the diffraction-limited system under coherent illumination. The standard formula of the pupil function CTF can be expressed as

where denotes the two-dimensional spatial coordinates of the Fourier domain, and NA denotes the numerical aperture, , where is the wavelength of the incident light.
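An ideal, aberration-free CTF of this kind can be generated as in the sketch below, which assumes the usual circular cutoff at NA·2π/λ in the Fourier domain. The function name `coherent_transfer_function` is hypothetical, and `pixel_size` is the effective pixel size at the sample plane (sensor pixel size divided by the magnification, which is not stated in the text).

```python
import numpy as np

def coherent_transfer_function(n, pixel_size, wavelength, na):
    """Ideal circular CTF on an n x n centred frequency grid.

    pixel_size : effective pixel size at the sample plane, in metres
    wavelength : illumination wavelength, in metres
    na         : numerical aperture of the objective lens
    """
    # Angular spatial frequencies (rad/m), with zero frequency at index n // 2.
    k = 2.0 * np.pi * np.fft.fftshift(np.fft.fftfreq(n, d=pixel_size))
    kx, ky = np.meshgrid(k, k)
    cutoff = na * 2.0 * np.pi / wavelength     # cutoff radius NA * k0
    return (kx**2 + ky**2 <= cutoff**2).astype(float)
```

With a 532 nm wavelength, NA = 0.1, a 64 × 64 grid, and a hypothetical 4× magnification of the 3.45 µm sensor pixels, the pass band has a radius of about ten frequency samples.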

Figure 4 shows the flowchart of LUDA. As the proposed network is defined in the complex domain, the samples and the coherent transfer function (CTF) are divided into real and imaginary parts, which are passed to the network as inputs to LUDA. The samples are shifted according to and then multiplied by the CTF to generate , which is the spectrum before updating. Hence, Equation (5) can be rewritten as

where r and i represent the real and imaginary parts, respectively.

The traditional correction method cannot meet the requirements of complex aberration scenes. Therefore, this paper adds an optimization framework based on the traditional method, as shown in Figure 5a. The whole updating process can be represented by Equation (6). can be expressed by Equation (9).

where is the output of the WFM module in Figure 5a, given by Equation (10), which follows the idea of the Wirtinger flow algorithm []. As a technique for solving the phase retrieval problem, the Wirtinger flow algorithm [] recasts phase retrieval as a minimization problem and serves as a general optimization framework that can reduce computational cost and handle noise effectively. The spectrum before updating is split into two branches: one is kept unchanged, and the other is transformed by the inverse Fourier transform and defined as , where is a linear sampling matrix; this branch is updated through operations such as phase subtraction and element-wise multiplication. The updated variable then undergoes the Fourier transform again and is subtracted from to generate . The specific flow is shown in the WFM module of Figure 5a.

where ∆ is the custom gradient descent step size and ⨀ represents the element-wise (Hadamard) product.
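For orientation, the gradient step of the standard Wirtinger flow algorithm for an intensity loss can be sketched as follows. This is the generic formulation, not the simplified variant used in the WFM module: a dense matrix `A` stands in for the linear sampling matrix, `y` holds the measured intensities, and the function names are hypothetical.

```python
import numpy as np

def wf_loss(z, A, y):
    """Intensity loss f(z) = 1/(2m) * sum((|A z|^2 - y)^2)."""
    return np.sum((np.abs(A @ z) ** 2 - y) ** 2) / (2 * len(y))

def wf_gradient(z, A, y):
    """Wirtinger gradient of the loss: (1/m) * A^H ((|A z|^2 - y) * A z)."""
    Az = A @ z
    return A.conj().T @ ((np.abs(Az) ** 2 - y) * Az) / len(y)

def wf_step(z, A, y, step):
    """One gradient descent update with a user-chosen step size."""
    return z - step * wf_gradient(z, A, y)
```

The residual between simulated and measured intensity is multiplied element-wise with the simulated field and mapped back through the adjoint of the sampling operator, which is the same pattern of transform, element-wise product, and subtraction described for the WFM module.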

According to Equation (10), is gradient-updated to generate . enters the WFN module for phase conversion for calculating to obtain . Next, the amplitude of the simulated image in the WFN module is represented by the square root of the pre-sampled intensity image. As an intensity constraint, is multiplied by it. The network generates the updated spectrum according to the update process shown in Figure 5a,b. Since the spectra before and after the update cover the same frequencies, the network can be optimized by minimizing the difference between them. In this paper, the mean square error is used to measure the difference between the spectra before and after the update. The loss function is expressed as

2.3.2. Alternating Update Mechanism

After the above update process, the network outputs the updated samples and CTF. However, the samples and the CTF behave differently during back propagation, and if the same gradient descent step size is used for both, the network fails to converge well. Therefore, an alternating update mechanism [] is adopted in this paper to control the gradient descent step sizes of the samples and the CTF separately.

The updating process is divided into two phases. In the first, the learning rate of the samples is adjusted to control their gradient descent step size while the CTF is held fixed; in the second, the learning rate of the CTF is adjusted to control its gradient descent step size while the samples are held fixed, as marked in orange. Only after both phases are completed can the network converge to the optimal point and yield better results for both the samples and the CTF.
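The alternation can be illustrated on a toy problem. The sketch below is not the INN_IWF network: it replaces the full forward model with a simple element-wise product of a sample array and a CTF array, and the function names, learning rates, and loss are assumptions chosen only to show two parameter groups being updated in turn with separate step sizes.

```python
import numpy as np

def loss(sample, ctf, target):
    """Mean squared error between the toy model output and the target."""
    return np.mean(np.abs(sample * ctf - target) ** 2)

def alternating_update(sample, ctf, target, lr_sample, lr_ctf, n_iter):
    """Alternate gradient steps on the sample and on the CTF."""
    history = [loss(sample, ctf, target)]
    for _ in range(n_iter):
        # Phase 1: update the sample with its own step size, CTF held fixed.
        residual = sample * ctf - target
        sample = sample - lr_sample * np.conj(ctf) * residual
        # Phase 2: update the CTF with its own step size, sample held fixed.
        residual = sample * ctf - target
        ctf = ctf - lr_ctf * np.conj(sample) * residual
        history.append(loss(sample, ctf, target))
    return sample, ctf, history
```

In a TensorFlow implementation the same idea would typically be realized with two optimizers that have different learning rates, each applied to only one group of variables; that mapping is an assumption rather than a description of the authors' code.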

2.3.3. Optical Aberration Processing Mechanism

The aberration function of the system is expressed in terms of Zernike polynomials, as shown in Equation (12), which can be used to describe the wavefront characteristics [].

where ρ and θ are variables, is the expansion coefficient of different Zernike polynomials, and is different Zernike polynomials, which can be expressed as:

where m and n are non-negative integers and n − m is even; n is the highest power of ρ in the polynomial; m is the azimuthal frequency; j is the index of the polynomial and is a function of n and m; and can be expressed as:

The CTF is always updated as a whole. The Zernike polynomials are applied to model the phase of the CTF in this paper, which, therefore, can be expressed as

where I in Equation (13) is the number of Zernike polynomials and is the coefficient of each Zernike polynomial.
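A CTF whose phase is a weighted sum of Zernike polynomials can be sketched as below. The three modes listed use the common Noll normalization, which is an assumption about the convention; the names `ZERNIKE_MODES` and `zernike_ctf`, and the choice of a pupil radius given in pixels, are hypothetical.

```python
import numpy as np

# A few Noll-normalised Zernike modes on the unit disc (rho in [0, 1]).
ZERNIKE_MODES = {
    "defocus": lambda rho, theta: np.sqrt(3.0) * (2.0 * rho**2 - 1.0),
    "astigmatism": lambda rho, theta: np.sqrt(6.0) * rho**2 * np.cos(2.0 * theta),
    "coma": lambda rho, theta: np.sqrt(8.0) * (3.0 * rho**3 - 2.0 * rho) * np.cos(theta),
}

def zernike_ctf(n, radius, coefficients, amplitude=None):
    """Build an n x n CTF (n even) with a Zernike-modelled phase.

    radius       : pupil radius in pixels, used to normalise rho
    coefficients : dict mapping mode name to its expansion coefficient
    amplitude    : optional (n, n) amplitude map; ones inside the pupil if omitted
    """
    ky, kx = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
    rho = np.hypot(kx, ky) / radius            # normalised radial coordinate
    theta = np.arctan2(ky, kx)                 # azimuthal angle
    phase = np.zeros((n, n))
    for name, coeff in coefficients.items():
        phase += coeff * ZERNIKE_MODES[name](rho, theta)
    if amplitude is None:
        amplitude = np.ones((n, n))
    # Zero outside the pupil support, amplitude * exp(i * phase) inside.
    return np.where(rho <= 1.0, amplitude * np.exp(1j * phase), 0.0)
```

Only the handful of coefficients needs to be learned for the phase, whereas the amplitude map is kept as a free array, which mirrors the split between phase and amplitude described in this section.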

The amplitude of the CTF remains updated as a whole, and the final form of the CTF modelling is expressed as

3. Experimental Results

3.1. Experimental System Setup

The equipment used for the experiments is described in Section 2.1. A programmable LED array with an illumination wavelength of 532 nm was placed 100 mm below the sample to provide illumination. During acquisition, a 15 × 15 rectangular region of the LED array is selected by programming and treated as a two-dimensional coordinate grid. The LED in the upper left corner lights up first, and the remaining LEDs light up in turn according to their coordinates, illuminating the sample on the stage from different angles. The FPM system had a numerical aperture of 0.1 and captured low-resolution sample images under each illumination angle, recording the intensity images with a CMOS camera with a pixel size of 3.45 µm. The results obtained by INN_IWF were verified on both simulated and real datasets and compared with those of other methods, such as that proposed by Jiang et al. [].
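The illumination geometry of this setup can be turned into wave vectors as in the sketch below. The array size, height, and wavelength follow the values above, but the LED pitch of 4 mm is a hypothetical value (the spacing is not stated), the array is assumed to be centred on the optical axis, and the function names are illustrative.

```python
import numpy as np

def led_wave_vectors(n_side=15, pitch=4e-3, height=100e-3, wavelength=532e-9):
    """Return (kx, ky) in rad/m for every LED of an n_side x n_side array.

    pitch is a hypothetical LED spacing in metres; height is the distance
    between the LED array and the sample.
    """
    offsets = (np.arange(n_side) - n_side // 2) * pitch    # LED coordinates in metres
    x, y = np.meshgrid(offsets, offsets)
    dist = np.sqrt(x**2 + y**2 + height**2)                # LED-to-sample distance
    k0 = 2.0 * np.pi / wavelength
    return k0 * x / dist, k0 * y / dist                    # k0 * sin(theta) per axis

def illumination_na(kx, ky, wavelength=532e-9):
    """Largest illumination NA, i.e. sin of the steepest illumination angle."""
    return np.sqrt(kx**2 + ky**2).max() * wavelength / (2.0 * np.pi)
```

Under the assumed 4 mm pitch the corner LEDs give an illumination NA of roughly 0.37, so the synthetic NA, commonly estimated as the sum of the objective NA and the illumination NA, would be about 0.47; a different pitch changes this figure.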

Two metrics, the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM), were used to evaluate image quality. PSNR is an indicator commonly used to measure signal distortion: the larger the PSNR value, the better the image quality. In image evaluation, PSNR is calculated from the mean squared error (MSE):

The MSE is defined as

where and represent the reference image and the image being compared, respectively.
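The two quantities can be computed as in the following sketch, which uses the standard definitions MSE = mean((x − y)²) and PSNR = 10·log10(peak² / MSE). The peak value of 255 assumes 8-bit images, and the function names are illustrative.

```python
import numpy as np

def mse(reference, test):
    """Mean squared error between two images of the same shape."""
    reference = np.asarray(reference, dtype=float)
    test = np.asarray(test, dtype=float)
    return np.mean((reference - test) ** 2)

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, test)
    if err == 0:
        return float("inf")
    return 10.0 * np.log10(peak**2 / err)
```

For example, two 8-bit images that differ by 10 grey levels at every pixel have an MSE of 100 and a PSNR of about 28.1 dB.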

The Structural Similarity Index Measure (SSIM) evaluates image quality in terms of brightness, contrast, and structure, in line with human visual perception; its value falls in the range of 0 to 1:

where , and , represent the mean and standard deviation of the two images, respectively; is the covariance of the two; and and are constant and equal.
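A single-window version of SSIM is sketched below to make the roles of the means, standard deviations, and covariance concrete. It computes the statistics over the whole image, whereas practical implementations usually average the index over local windows; the stabilizing constants follow the widely used choice (0.01·L)² and (0.03·L)² with dynamic range L, which is an assumption about the values used here.

```python
import numpy as np

def ssim_global(x, y, data_range=255.0):
    """Global (single-window) SSIM between two images of the same shape."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2              # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2              # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

The index equals 1 for identical images and decreases as the luminance, contrast, or structure of the two images diverge.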

3.2. Comparative Experiments with Simulated Datasets

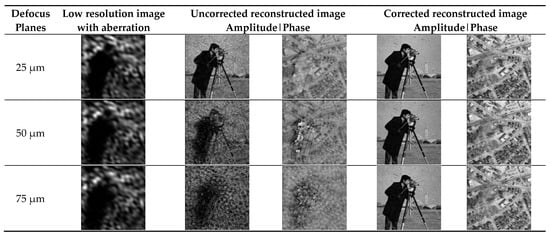

The Cameraman and street map were used as the amplitude and phase images for the simulated dataset, as shown in Figure 6. The optical aberration is dominated by defocus aberration, which is caused by an uneven sample or inaccurate focusing. The experimental equipment, as described above, was used to generate 225 intensity images, from which the amplitude, phase, and CTF were reconstructed.

Figure 6.

Comparison of low-resolution images with different defocus planes and images before and after aberration correction on simulation datasets.

3.2.1. Correction Performance for Different Defocus Planes

Three defocus planes of 25 µm, 50 µm, and 75 µm were selected to verify the aberration correction performance of the method at different defocus planes. In this paper, Zernike polynomials were used to estimate the aberration; the Zernike mode coefficient is about −1.44, corresponding to the defocus aberration of 50 µm. In Figure 6, the first column shows the low-resolution images with aberrations generated using the forward imaging model, the second and third columns show the images reconstructed without aberration correction, and the fourth and fifth columns show the images after aberration correction using the INN_IWF network.

Figure 6 demonstrates the effect of aberration on the reconstructed results at different defocus planes. As can be seen from the figure, the effect of aberration on the final generated image became increasingly obvious with the increase in the amount of defocus. Compared with the image without aberration correction, the imaging effect after aberration correction using this method was improved, suggesting that the INN_IWF network can complete the correction of aberrations and maintain a good correction performance on different defocus planes.

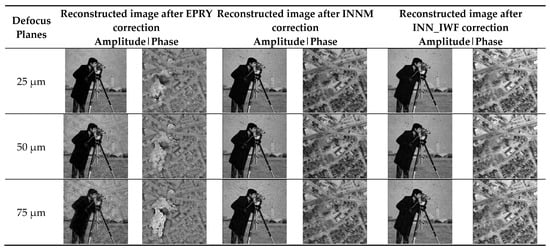

To further verify the aberration correction performance of the proposed method on different defocus planes, INNM [] and EPRY [] are used as comparison algorithms. Several experiments were carried out to compare the three methods on different defocus planes, and the PSNR and SSIM values from the experiments were averaged. As shown in Figure 7, the images reconstructed by all three methods degrade to some extent as the amount of defocus increases. The EPRY method is the most affected by the change in defocus plane, while the proposed method is the least affected; it corrects the aberration well and yields reconstructed images with richer detail. Table 1 lists the image reconstruction metrics of the different methods on different defocus planes, with the optimal results marked in bold. Table 1 also lists the maximum and minimum values of the metrics over the repeated experiments, which indicate the fluctuation range of each method; the fluctuation range of the proposed method is smaller than that of the other two methods. The EPRY method has lower metric values than the other two methods because its correction performance is strongly affected by the change in defocus plane. The proposed method adds an optimization process to the network and, compared with the INNM method, achieves better performance and higher metric values. This analysis shows that the proposed method consistently exhibits good aberration correction performance on different defocus planes.

Figure 7.

Comparison of the results of different methods on different defocus planes on simulation datasets.

Table 1.

Image reconstruction metrics of different methods on different defocus planes.

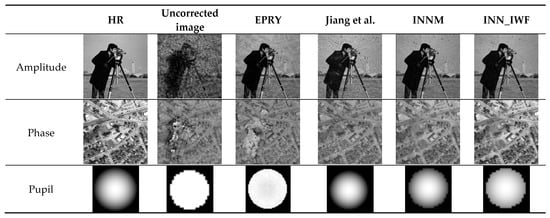

3.2.2. Comparison of the Results of Different Methods on the Simulated Dataset

The results of the proposed method were compared with those of INNM [], EPRY [], and the method proposed by Jiang et al. [] on a simulated dataset in which the optical aberration was a defocus of 50 µm. The PSNR and SSIM values of each experiment were calculated and averaged, as shown in Figure 8 and Table 2. Figure 8 shows that the proposed method corrects the aberration well: compared with the other three methods, its reconstructions are sharper and contain more image detail. In Table 2, the optimal results are marked in bold. Table 2 also shows the maximum and minimum values of the image reconstruction metrics for Jiang et al.’s [] method; the corresponding values for the other methods are given in Table 1. Overall, the proposed method obtained better results than the other three methods.

Figure 8.

Comparison of the results of different methods on the simulation dataset [].

Table 2.

Image reconstruction metrics of different methods on the simulated dataset.

3.3. Comparative Experiments with a Real Dataset

3.3.1. Correction Performance for Different Defocus Planes

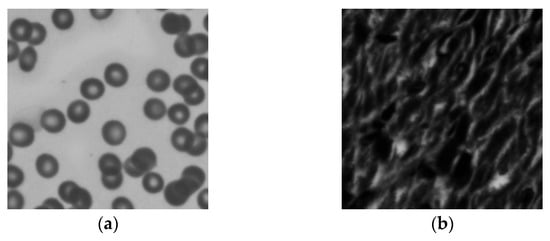

To verify that the proposed method retains good correction performance under complex aberration conditions, the device of Section 2.1 is used for sample collection. The numerical aperture of the system and the position of the LED array remain unchanged. The 15 × 15 LED array illuminates the real cell sample placed on the stage with plane waves at different angles. The CMOS camera with a pixel size of 3.45 µm captures 225 intensity images of the real sample under the different illumination angles. The intensity and phase images of the real samples are shown in Figure 9a,b.

Figure 9.

The collected cell images. (a) Intensity Image; (b) Phase Image.

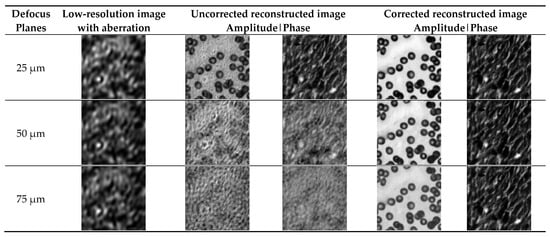

Three defocus planes of 25 µm, 50 µm, and 75 µm were selected for comparison. The aberration correction results for different defocus planes are shown in Figure 10. The first column shows a low-resolution image with aberrations generated using the forward imaging model. The second and third columns are images without aberration correction. The fourth and fifth columns are images after aberration correction using the INN_IWF network.

Figure 10.

Comparison of low-resolution images with different defocus planes and images before and after aberration correction in a real dataset.

Figure 10 shows the effect of aberrations on the reconstruction of cell images at different defocus planes: the effect on the final reconstructed image becomes more pronounced as the defocus amount increases. Compared with the image without aberration correction, the image corrected by the proposed method is improved and largely retains the image texture features. This implies that the INN_IWF network not only achieves aberration correction but also maintains good correction performance under severe aberration, so that the reconstructed results retain more image detail.

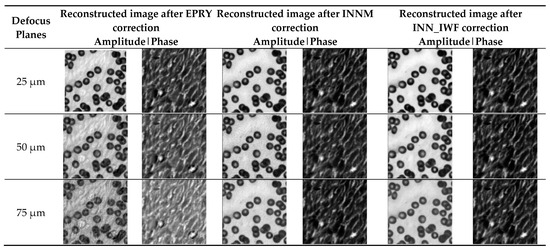

To further verify the aberration correction performance of the proposed method for cell images on different defocus planes, INNM [] and EPRY [] are used as comparison algorithms. The three methods are compared over multiple experiments on different defocus planes, and the resulting PSNR and SSIM values are averaged. As shown in Figure 11, as the defocus amount increases, the aberration has an increasingly obvious influence on the reconstruction results and degrades the reconstructed image quality of all three methods to some degree. The optimal results are in bold in Table 3, which also lists the maximum and minimum values of the metrics over the multiple experiments. The fluctuation range of the proposed method is the same as that of the INNM method, but its metric values are better. As can be seen from the table, aberration had the greatest influence on the EPRY [] method, while the proposed method and the INNM [] method are less affected. Table 3 also shows that the proposed method corrects aberration better than the other two methods on different defocus planes, with higher metric values. This analysis shows that the proposed method maintains good aberration correction performance for cell images and that its correction performance does not degrade in complex scenes, while image texture features are retained.

Figure 11.

Comparison of the results of different methods on different defocus planes in a real dataset.

Table 3.

Image reconstruction metrics of different methods on different defocus planes in a real dataset.

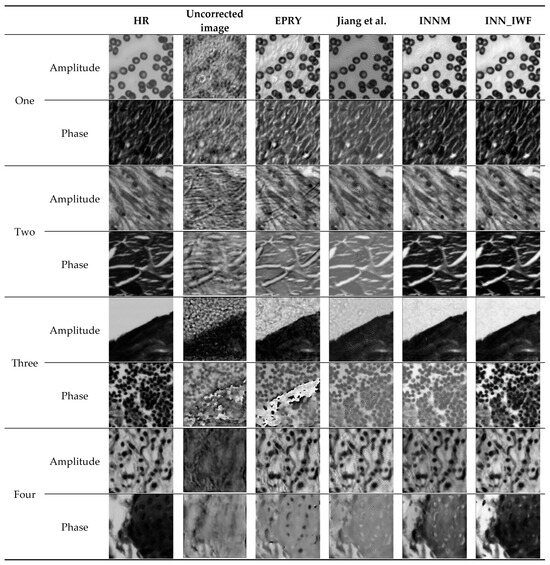

3.3.2. Comparison of the Results of Different Methods on a Real Dataset

The dataset used in this subsection consists of four sets of cell images acquired under real experimental conditions, and the superiority of the method is verified through a comparison with other methods.

The results of multiple experiments with INN_IWF, INNM [], EPRY [], and the method proposed by Jiang et al. [] on real datasets are compared in Figure 12. Table 4 lists the average PSNR and SSIM values of the four methods, with the optimal results in bold. Owing to the limited size of Table 4, the maximum and minimum values of the metrics for the multiple sets of real images are given in Table 5. Figure 12 and Table 4 show that, in all four groups of experiments, the images obtained by INN_IWF are sharper and contain more detail than those of the method proposed by Jiang et al. and of EPRY. As can be seen from Table 4, the metric values of the proposed method are higher. In the first two groups of experiments its results are similar to those of the INNM method, while the latter two groups show that it corrects aberration better than the INNM method; according to Table 4, the INNM method is second best. In summary, the proposed method has better aberration correction performance on real datasets and obtains better reconstruction results.

Figure 12.

Comparison of the results of different methods in a real dataset [].

Table 4.

Image reconstruction metrics of different methods in a real dataset.

Table 5.

The maximum and minimum values of the reconstruction metrics of different methods in a real dataset.

4. Conclusions

This paper proposes an aberration correction method based on the improved Wirtinger flow algorithm under the TensorFlow framework. The method simulates the forward imaging process and improves the Wirtinger flow algorithm introduced into the model: it retains the central idea, simplifies the calculation, and improves the aberration correction performance of the network. The alternating update (AU) mechanism updates the sample and the coherent transfer function in separate phases to obtain better results, and Zernike polynomials estimate the aberrations with high precision. The simulation and experimental results show that the INN_IWF network corrects aberrations better while recovering richer texture detail in the reconstructed images. Compared with traditional algorithms, the proposed method performs better on different defocus planes and avoids low correction accuracy and poor correction performance under complex aberration conditions.

Author Contributions

Conceptualization, X.W. (Xiaoli Wang) and J.L.; methodology, X.W. (Xiaoli Wang) and Z.L.; software, Z.L. and X.W. (Xinbo Wang); validation, Z.L.; investigation, Z.L.; writing—original draft preparation, X.W. (Xiaoli Wang) and Z.L.; writing—review and editing, X.W. (Xiaoli Wang), J.L., Z.L., Y.W., H.W. and X.W. (Xinbo Wang); supervision, X.W. (Xiaoli Wang); project administration, X.W. (Xiaoli Wang) and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Jilin Provincial Science and Technology Department [NO: 2021JBH02L38] and by the Science and Technology Development Plan Projects of Jilin Province [NO: YDZJ202301ZYTS180].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Acknowledgments

The authors sincerely acknowledge the help from the Optoelectronics Laboratory of the School of Electronic Information Engineering, Changchun University.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zheng, G.; Horstmeyer, R.; Yang, C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat. Photonics 2013, 7, 739–745.

- Yin, W.; Zhong, J.; Feng, S.; Tao, T.; Han, J.; Huang, L.; Chen, Q.; Zuo, C. Composite deep learning framework for absolute 3D shape measurement based on single fringe phase retrieval and speckle correlation. J. Phys. Photonics 2020, 2, 045009.

- Candes, E.J.; Eldar, Y.C.; Strohmer, T.; Voroninski, V. Phase retrieval via matrix completion. SIAM Rev. 2015, 57, 225–251.

- Fienup, J.R. Reconstruction of an object from the modulus of its Fourier transform. Opt. Lett. 1978, 3, 27–29.

- Fienup, J.R. Phase retrieval algorithms: A comparison. Appl. Opt. 1982, 21, 2758–2769.

- Marrison, J.; Räty, L.; Marriott, P.; O’Toole, P. Ptychography – A label free, high-contrast imaging technique for live cells using quantitative phase information. Sci. Rep. 2013, 3, 2369.

- Alexandrov, S.A.; Hillman, T.R.; Gutzler, T.; Sampson, D.D. Synthetic Aperture Fourier Holographic Optical Microscopy. Phys. Rev. Lett. 2006, 97, 168102.

- Booth, M.J. Adaptive optics in microscopy. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2007, 365, 2829–2843.

- Ou, X.; Zheng, G.; Yang, C. Embedded pupil function recovery for Fourier ptychographic microscopy. Opt. Express 2014, 22, 4960.

- Maiden, A.; Johnson, D.; Li, P. Further improvements to the ptychographical iterative engine. Optica 2017, 4, 736.

- Zhang, Y.; Liu, Y.; Jiang, S.; Dixit, K.; Song, P.; Zhang, X.; Ji, X.; Li, X. Neural network model assisted Fourier ptychography with Zernike aberration recovery and total variation constraint. J. Biomed. Opt. 2021, 26, 036502.

- Zhang, J.; Tao, X.; Yang, L.; Wu, R.; Sun, P.; Wang, C.; Zheng, Z. Forward imaging neural network with correction of positional misalignment for Fourier ptychographic microscopy. Opt. Express 2020, 28, 23164–23175.

- Hu, L. Research on Aberration Correction Method Based on Wavefront Detection and Deep Learning in Microscopic System. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2021.

- Zhang, J.; Xu, T.; Li, J.; Zhang, Y.; Jiang, S.; Chen, Y.; Zhang, J. Physics-based learning with channel attention for Fourier ptychographic microscopy. J. Biophotonics 2022, 15, e202100296.

- Zhao, M.; Zhang, X.; Tian, Z.; Liu, S. Neural network model with positional deviation correction for Fourier ptychography. J. Soc. Inf. Disp. 2021, 29, 749–757.

- Xiao, W.; Xin, L.; Cao, R.; Wu, X.; Tian, R.; Che, L.; Sun, L.; Ferraro, P.; Pan, F. Sensing morphogenesis of bone cells under microfluidic shear stress by holographic microscopy and automatic aberration compensation with deep learning. Lab Chip 2021, 21, 1385–1394.

- Ou, X.; Horstmeyer, R.; Zheng, G.; Yang, C. High numerical aperture Fourier ptychography: Principle, implementation and characterization. Opt. Express 2015, 23, 3472–3491.

- Wu, R.; Luo, J.; Li, J.; Chen, H.; Zhen, J.; Zhu, S.; Luo, Z.; Wu, Y. Adaptive correction method of hybrid aberrations in Fourier ptychographic microscopy. J. Biomed. Opt. 2023, 28, 036006.

- Xiang, M.; Pan, A.; Liu, J.; Xi, T.; Guo, X.; Liu, F.; Shao, X. Phase Diversity-Based Fourier Ptychography for Varying Aberration Correction. Front. Phys. 2022, 10, 129.

- Gonsalves, R.A. Phase retrieval and diversity in adaptive optics. Opt. Eng. 1982, 21, 829–832.

- Wang, X.; Piao, Y.; Jin, Y.; Li, J.; Lin, Z.; Cui, J.; Xu, T. Fourier Ptychographic Reconstruction Method of Self-Training Physical Model. Appl. Sci. 2023, 13, 3590.

- Wang, X.; Piao, Y.; Yu, J.; Li, J.; Sun, H.; Jin, Y.; Liu, L.; Xu, T. Deep Multi-Feature Transfer Network for Fourier Ptychographic Microscopy Imaging Reconstruction. Sensors 2022, 22, 1237.

- Bian, L.; Suo, J.; Zheng, G.; Guo, K.; Chen, F.; Dai, Q. Fourier ptychographic reconstruction using Wirtinger flow optimization. Opt. Express 2015, 23, 4856–4866.

- Yang, Y.; Zhong, Z.; Shen, T.; Lin, Z. Convolutional neural networks with alternately updated clique. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2413–2422.

- Thibos, L.N.; Applegate, R.A.; Schwiegerling, J.T.; Webb, R. Standards for reporting the optical aberrations of eyes. J. Refract. Surg. 2002, 18, S652–S660.

- Jiang, S.; Guo, K.; Liao, J.; Zheng, G. Solving Fourier ptychographic imaging problems via neural network modeling and TensorFlow. Biomed. Opt. Express 2018, 9, 3306–3319.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).