Enhancing Robot Task Planning and Execution through Multi-Layer Large Language Models

Abstract

1. Introduction

2. Related Work

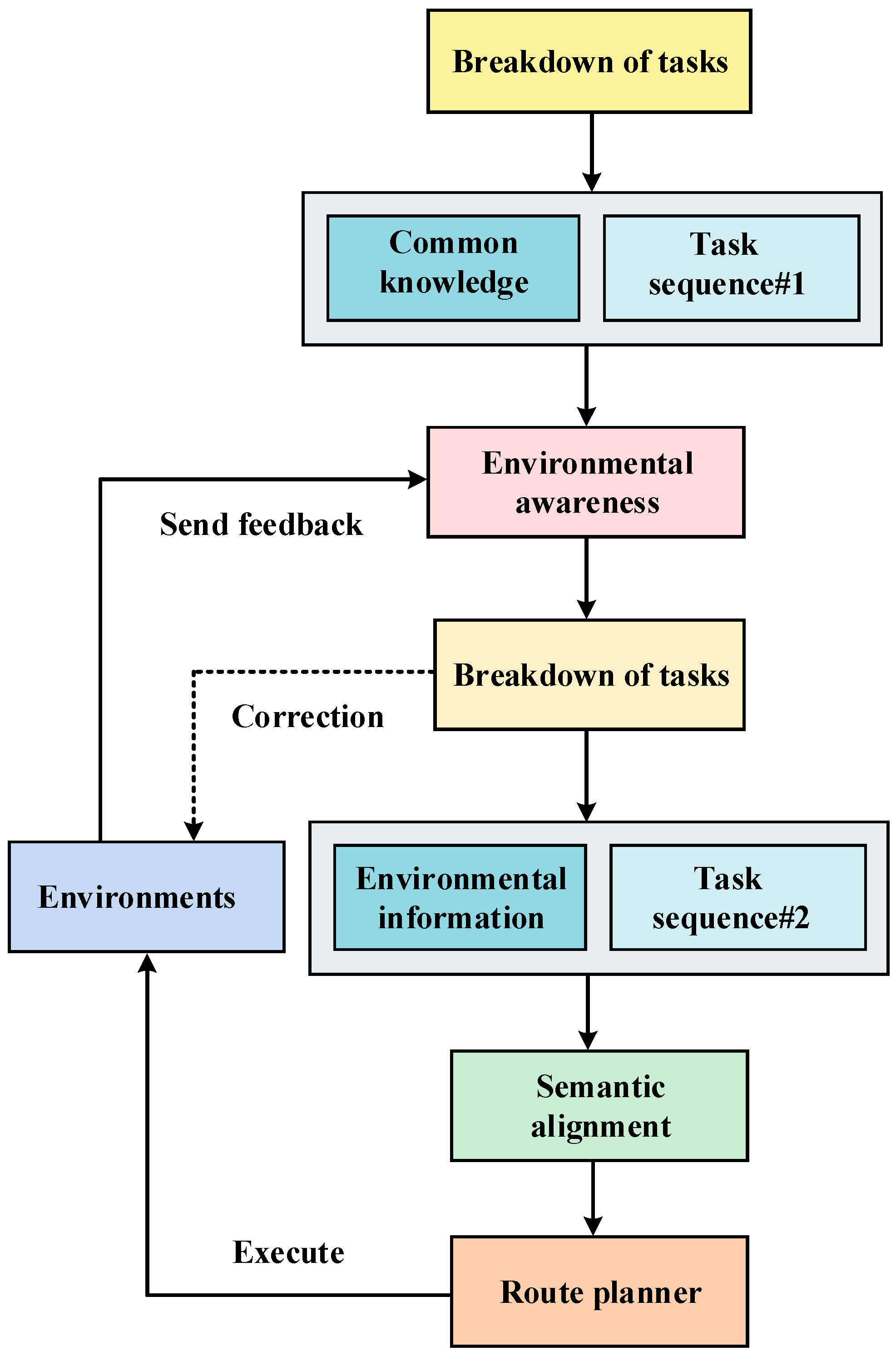

3. Method

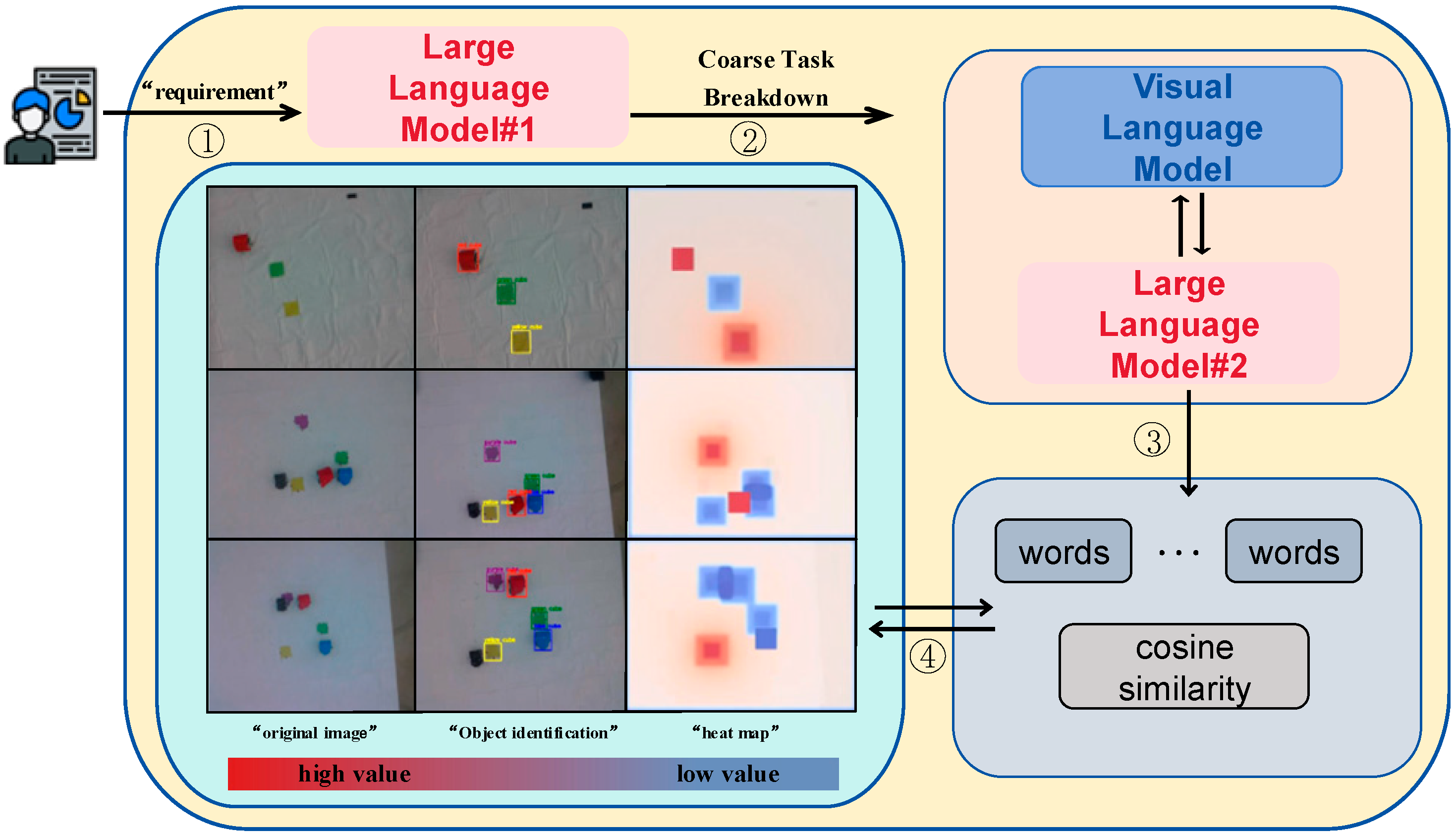

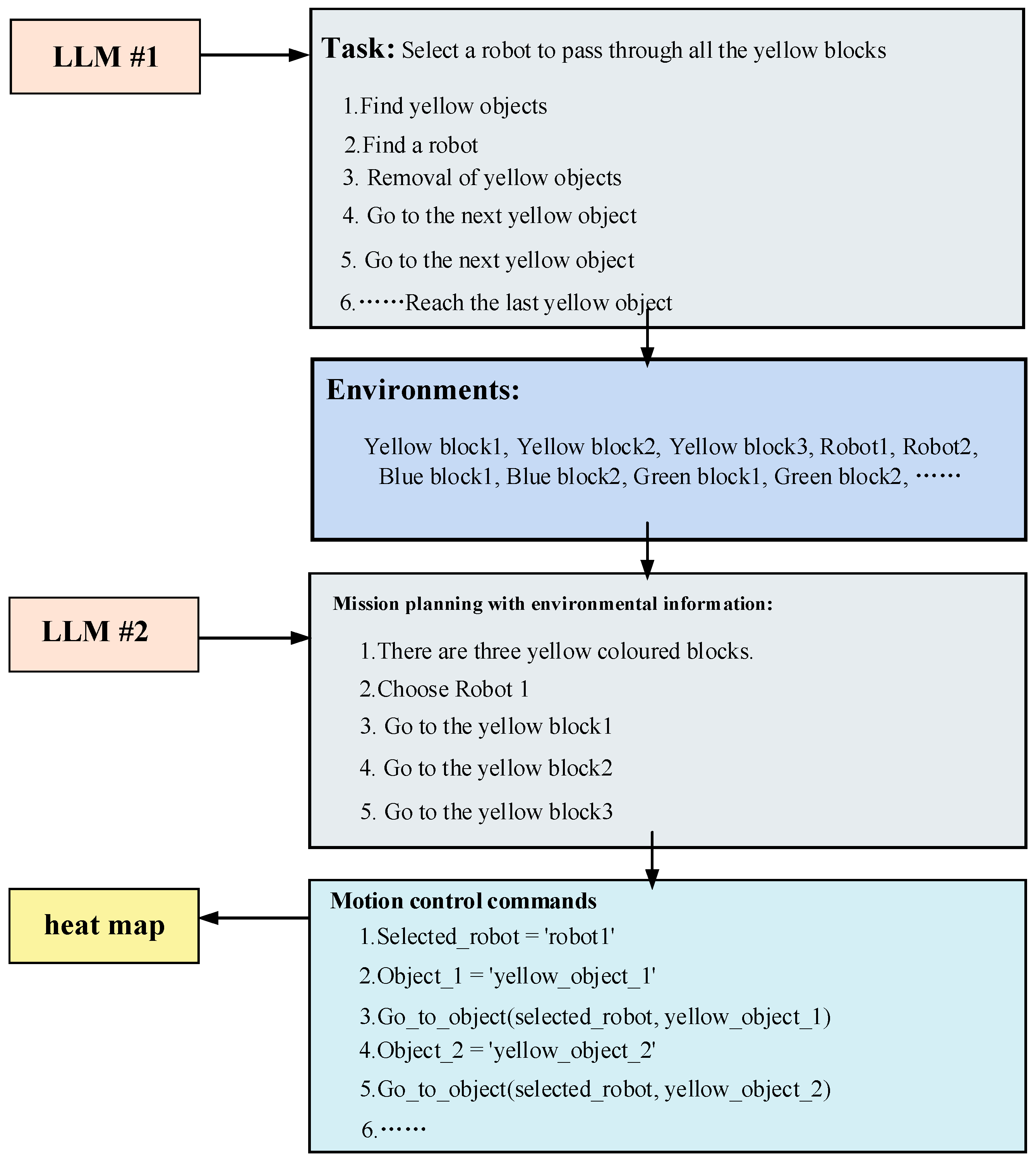

3.1. Multi-Layer Large Language Model Architecture for Task Decomposition

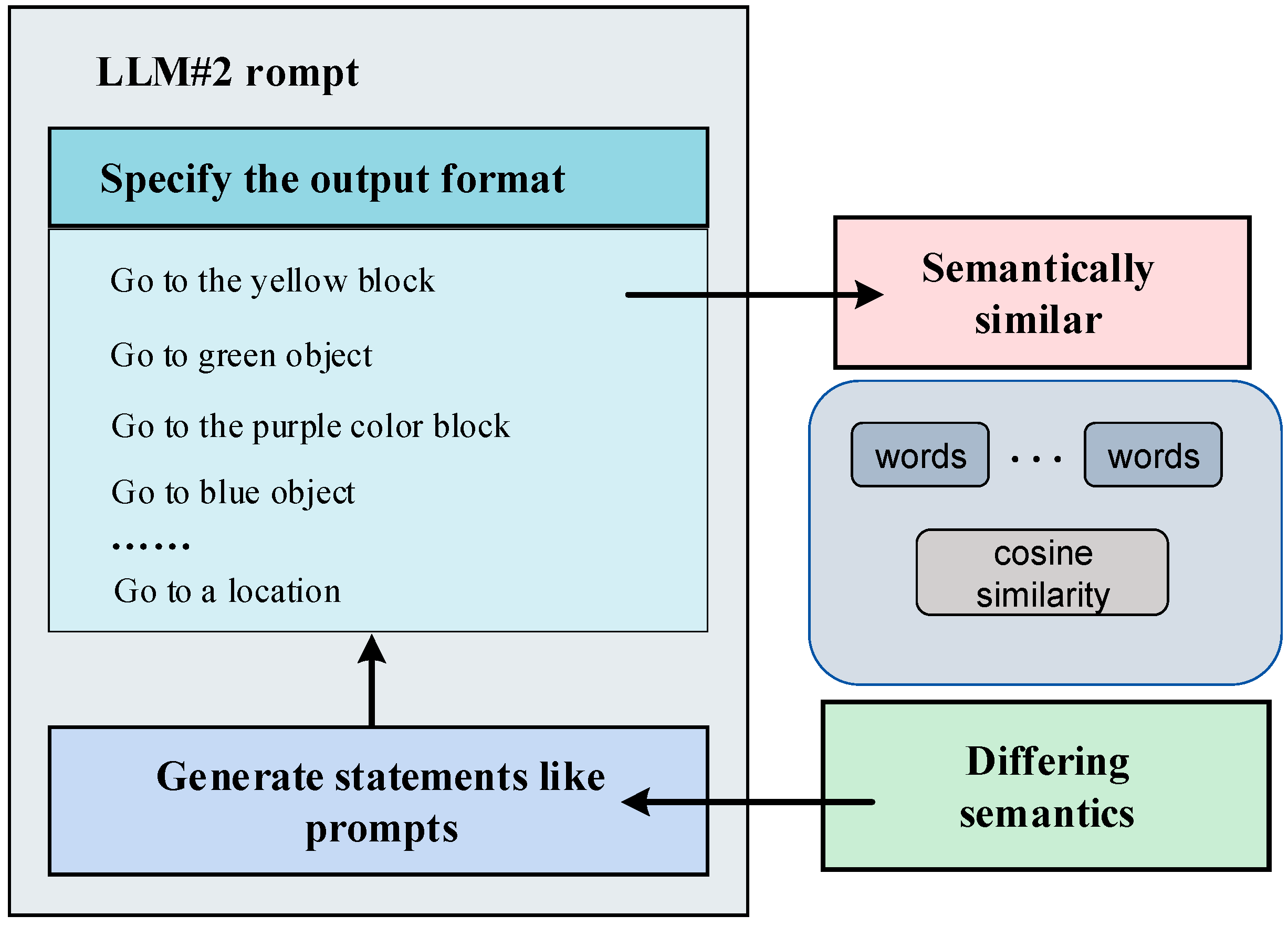

3.2. Semantic Similarity-Based Alignment of Task Descriptions with Robot Control Instructions

| Algorithm 1: Semantic Information Vector Space Alignment Method. Cosine similarity vector alignment (outline) |
|---|
| 1. Input: |
| 2. Quantization: |
| 3. //Vectorization and location information |
| 4. Normalization: |
| 5. //Vector normalization |
| 6. Cosine similarity: |
| 7. //Text similarity determination |
| 8. //Alignment of text A and text B |
| 9. Determine text similarity: the cosine value lies in [−1, 1]; the closer it is to 1, the more similar the two texts are. |
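The steps in Algorithm 1 can be illustrated with a minimal Python sketch. It assumes a plain bag-of-words vectorizer as a stand-in for whatever embedding the method actually uses, since the input and quantization rows of the outline are empty here. The helper names `vectorize`, `normalize`, `cosine_similarity`, and `align` are hypothetical. With non-negative count vectors the score falls in [0, 1] rather than the full [−1, 1] range.

```python
import math
from collections import Counter


def vectorize(text, vocabulary):
    """Map a text to a word-count vector over a shared vocabulary (assumed stand-in)."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def normalize(vec):
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def cosine_similarity(text_a, text_b):
    """Cosine similarity of two texts: dot product of their unit vectors."""
    vocabulary = sorted(set(text_a.lower().split()) | set(text_b.lower().split()))
    a = normalize(vectorize(text_a, vocabulary))
    b = normalize(vectorize(text_b, vocabulary))
    return sum(x * y for x, y in zip(a, b))


def align(task_description, control_instructions):
    """Return (score, instruction) for the instruction most similar to the task."""
    scored = [(cosine_similarity(task_description, c), c) for c in control_instructions]
    return max(scored, key=lambda pair: pair[0])


if __name__ == "__main__":
    score, best = align(
        "move the robot to site a",
        ["move to site a", "open the gripper"],
    )
    print(best, round(score, 3))
```

In this sketch the task description is aligned with whichever candidate instruction scores closest to 1, mirroring step 9 of the outline.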

3.3. Robot Heatmap Navigation Algorithm for Open Environment Awareness

| Algorithm 2: Dynamic Navigation Algorithm for Heat Maps. Environmental interaction and mathematical representation (outline) |
|---|
| 1. Input: |
| 2. Breakdown of tasks: |
| 3. |
| 4. The problem is reduced to the optimization equation |
| 5. |
| 6. Constructing a mathematical representation of |
| 7. |
| 8. Construct a two-dimensional space: |
| 9. Construct the heat map: |
| 10. |
| 11. Define a high-heat-value path: |
| 12. //We define the heat value of a path as the sum of the heat values of all nodes on the path. |
| 13. Path generation: |
| 14. // denotes the set of neighbouring nodes of node |
| 15. Output: //Path with the highest heat value |
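The outline of Algorithm 2 can be sketched in Python under stated assumptions. The equations in the outline are missing here, so this is not the authors' formulation: the heat function (inverse Manhattan distance to the target), the 4-connected grid, and the greedy neighbour selection are all illustrative choices, and the names `build_heat_map`, `neighbours`, `path_heat`, and `generate_path` are hypothetical. Only the definition of a path's heat value as the sum of its node heat values is taken from the outline.

```python
def build_heat_map(width, height, target):
    """Assumed heat function: hottest at the target, decaying with Manhattan distance."""
    tx, ty = target
    return [
        [1.0 / (1 + abs(x - tx) + abs(y - ty)) for x in range(width)]
        for y in range(height)
    ]


def neighbours(node, width, height):
    """4-connected neighbours of a node that lie inside the grid."""
    x, y = node
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(nx, ny) for nx, ny in candidates if 0 <= nx < width and 0 <= ny < height]


def path_heat(path, heat):
    """Heat value of a path: the sum of the heat values of all nodes on it."""
    return sum(heat[y][x] for x, y in path)


def generate_path(start, target, heat):
    """Greedy sketch: repeatedly step to the hottest unvisited neighbour."""
    height, width = len(heat), len(heat[0])
    path, visited, current = [start], {start}, start
    while current != target:
        options = [n for n in neighbours(current, width, height) if n not in visited]
        if not options:
            break  # dead end; return the partial path
        current = max(options, key=lambda n: heat[n[1]][n[0]])
        visited.add(current)
        path.append(current)
    return path


if __name__ == "__main__":
    heat = build_heat_map(5, 5, target=(4, 4))
    path = generate_path((0, 0), (4, 4), heat)
    print(path, round(path_heat(path, heat), 3))
```

Greedy selection does not guarantee the globally highest-heat path; it is used here only to show how a neighbour set and a per-node heat value combine into a path.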

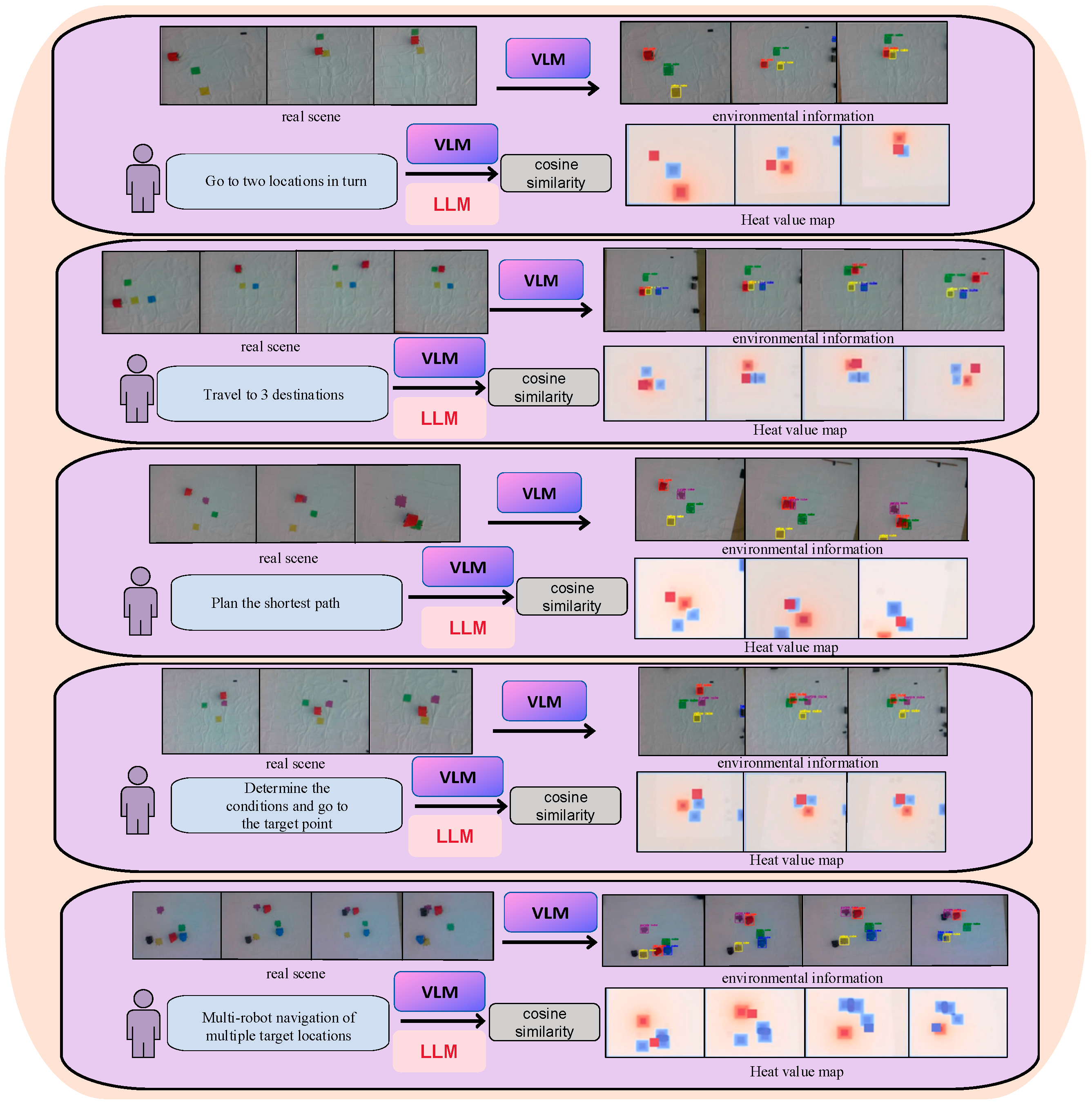

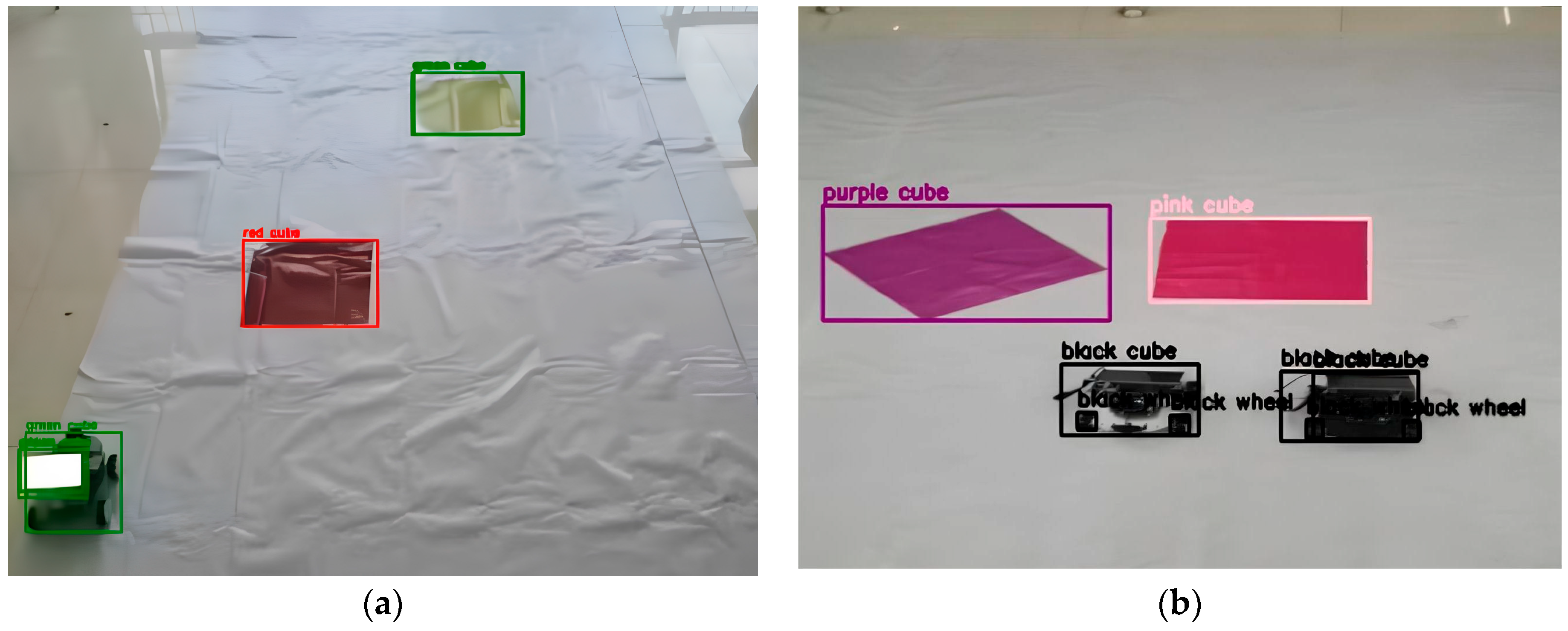

4. Experiments and Analyses

4.1. Experimental Design

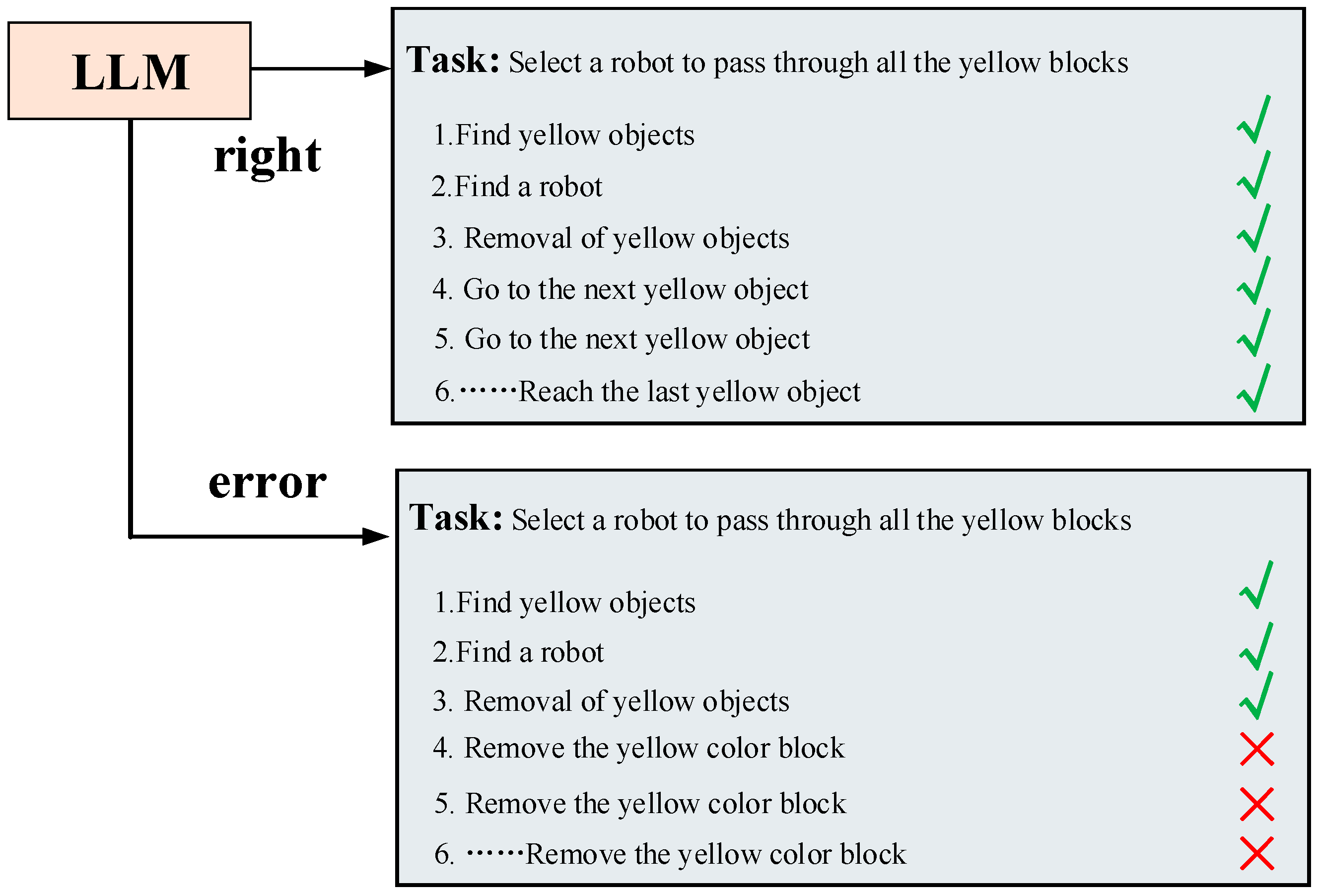

4.2. Intermediate Process of Task Planning

4.3. Feedback Experiments Based on Semantic Similarity

4.4. Discussion and Analysis of Failed Cases

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Task | Feedback 0 | Feedback 1 | Feedback 2 | Feedback 3 | Feedback 4 | Feedback 5 |
|---|---|---|---|---|---|---|
| Task 1: Travel to two target sites | 4/10 | 7/10 | 8/10 | 9/10 | 10/10 | 10/10 |
| Task 2: Travel to three target sites | 3/10 | 6/10 | 8/10 | 8/10 | 8/10 | 8/10 |
| Task 3: Planning the shortest route | 3/10 | 6/10 | 7/10 | 8/10 | 8/10 | 8/10 |
| Task 4: Self-determination of target location | 4/10 | 5/10 | 7/10 | 7/10 | 7/10 | 7/10 |
| Task 5: Multi-robot to multi-objective tasks | 2/10 | 5/10 | 6/10 | 7/10 | 7/10 | 7/10 |
| Total | 32% | 58% | 72% | 78% | 80% | 80% |
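The totals row can be reproduced from the per-task counts with a short Python check. It assumes that each "n/10" cell means n successes out of 10 runs and that the total is the pooled success rate over all five tasks; the names `results` and `total_success_rates` are hypothetical.

```python
# Per-task counts copied from the table, one entry per feedback round (0 to 5).
results = {
    "Task 1": [4, 7, 8, 9, 10, 10],
    "Task 2": [3, 6, 8, 8, 8, 8],
    "Task 3": [3, 6, 7, 8, 8, 8],
    "Task 4": [4, 5, 7, 7, 7, 7],
    "Task 5": [2, 5, 6, 7, 7, 7],
}

TRIALS_PER_TASK = 10  # assumption: every cell is a count out of 10 runs


def total_success_rates(results, trials_per_task=TRIALS_PER_TASK):
    """Pooled success rate (in percent) for each feedback round."""
    rounds = len(next(iter(results.values())))
    total_trials = trials_per_task * len(results)
    return [
        round(100 * sum(row[i] for row in results.values()) / total_trials)
        for i in range(rounds)
    ]


totals = total_success_rates(results)
print(totals)  # → [32, 58, 72, 78, 80, 80]
```

Under that reading, the pooled rate rises from 32% with no feedback to 80% after four rounds and does not improve further in the fifth.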

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Luan, Z.; Lai, Y.; Huang, R.; Bai, S.; Zhang, Y.; Zhang, H.; Wang, Q. Enhancing Robot Task Planning and Execution through Multi-Layer Large Language Models. Sensors 2024, 24, 1687. https://doi.org/10.3390/s24051687
