Soft Polymer Optical Fiber Sensors for Intelligent Recognition of Elastomer Deformations and Wearable Applications

Abstract

1. Introduction

2. Materials and Methods

3. Results and Discussion

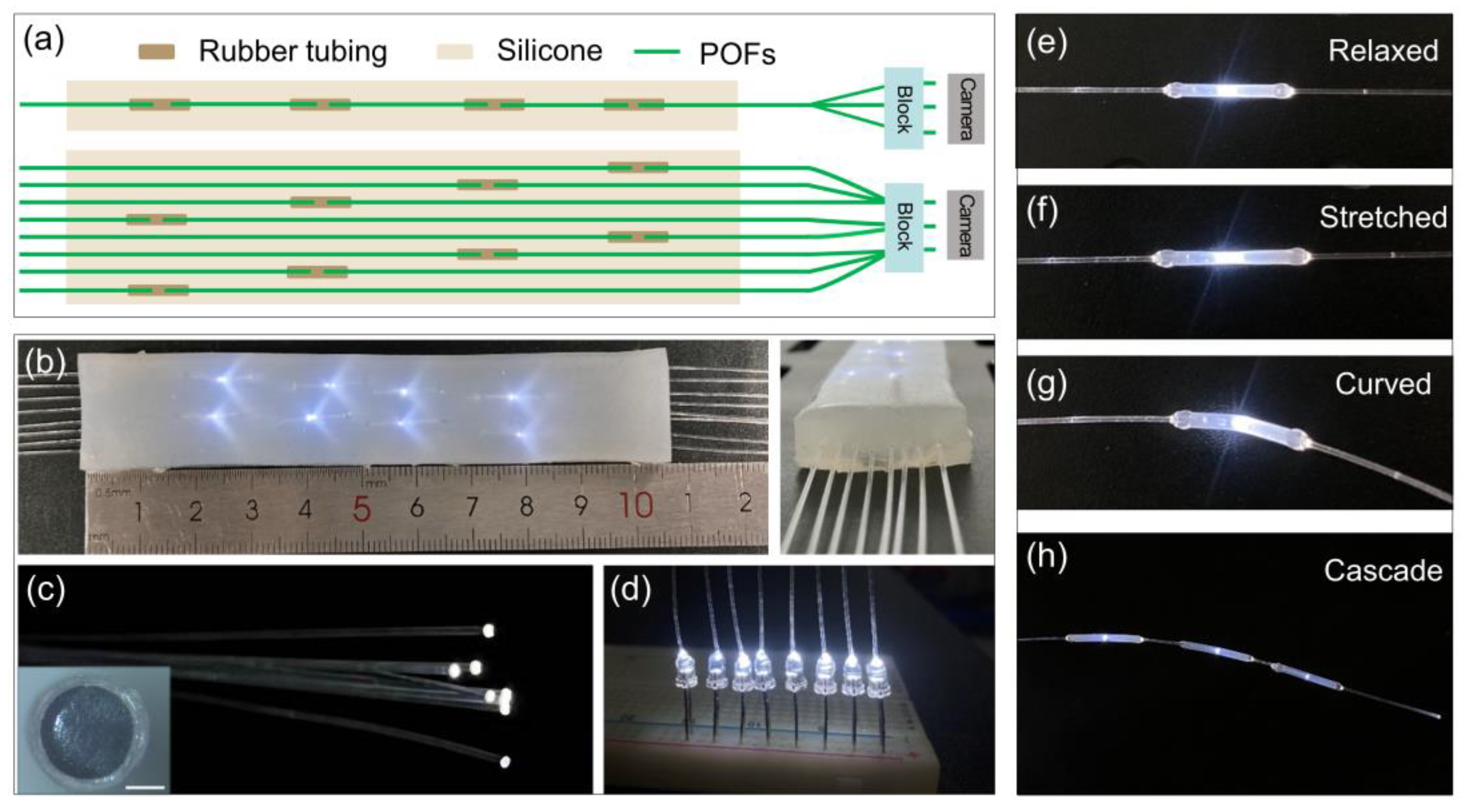

3.1. Quantification of POF Sensors to Mechanical Deformations

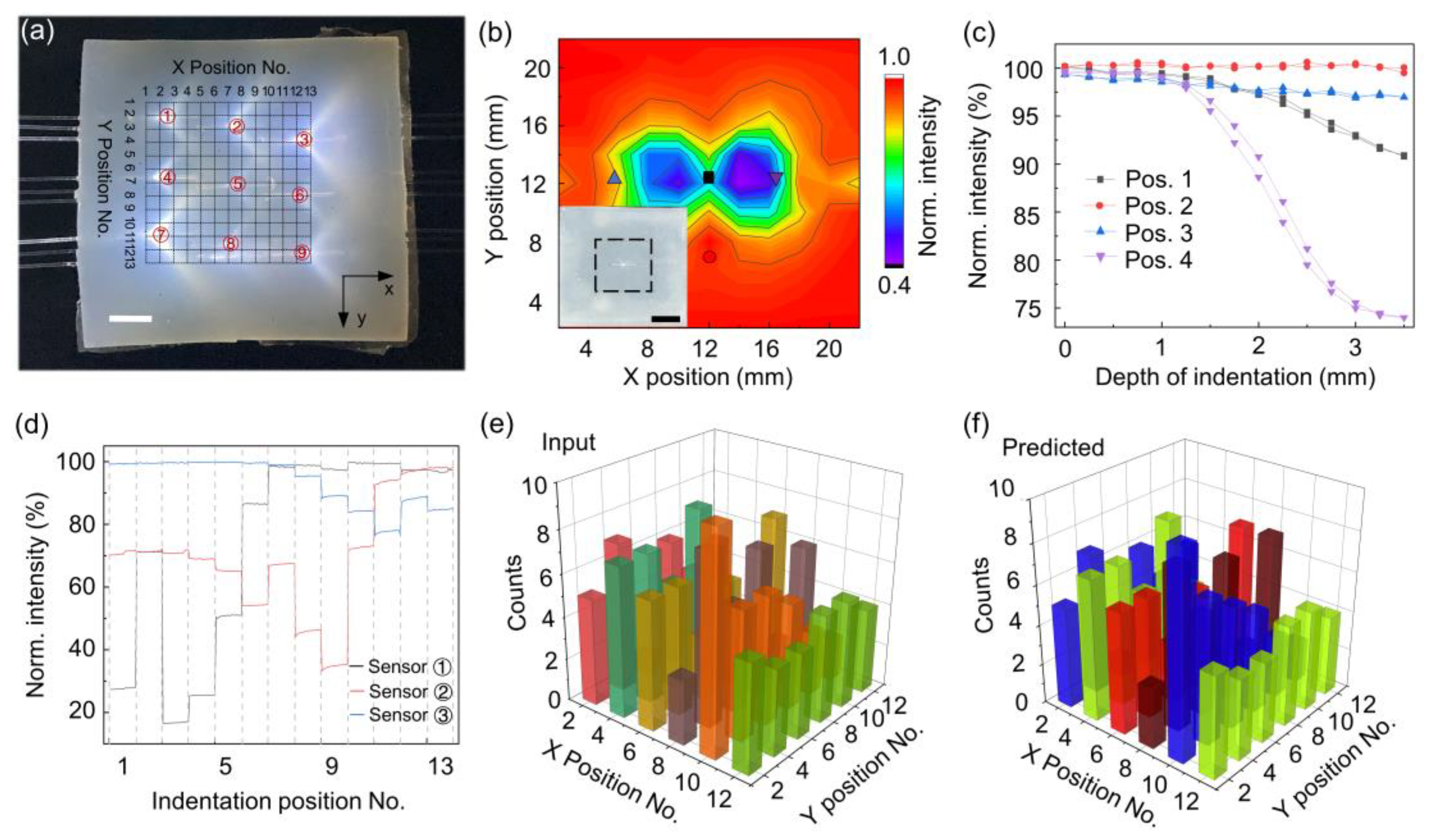

3.2. Demonstration of Spatially Resolved Pressing Recognition

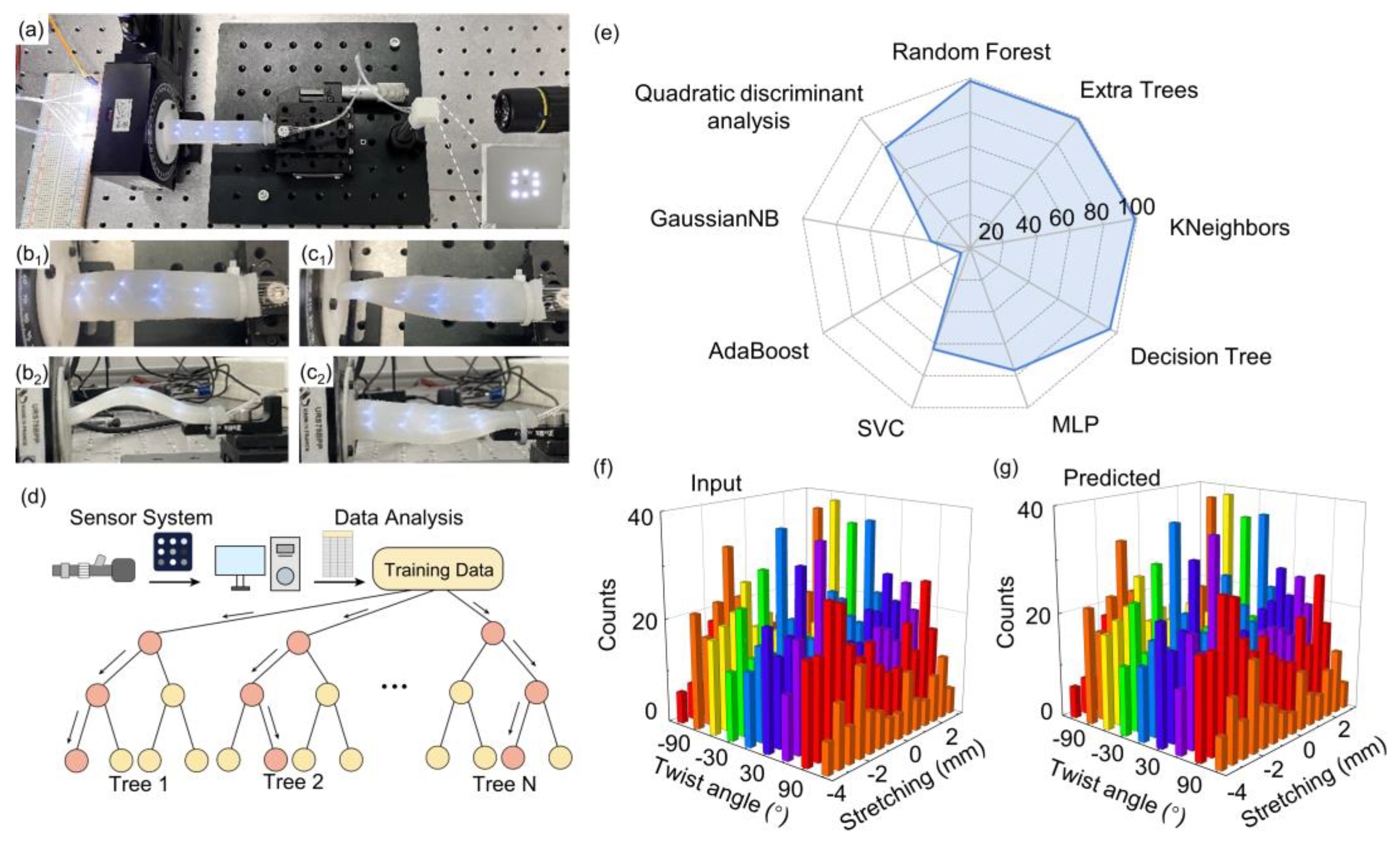

3.3. Demonstration of Multimodal Deformation Recognition

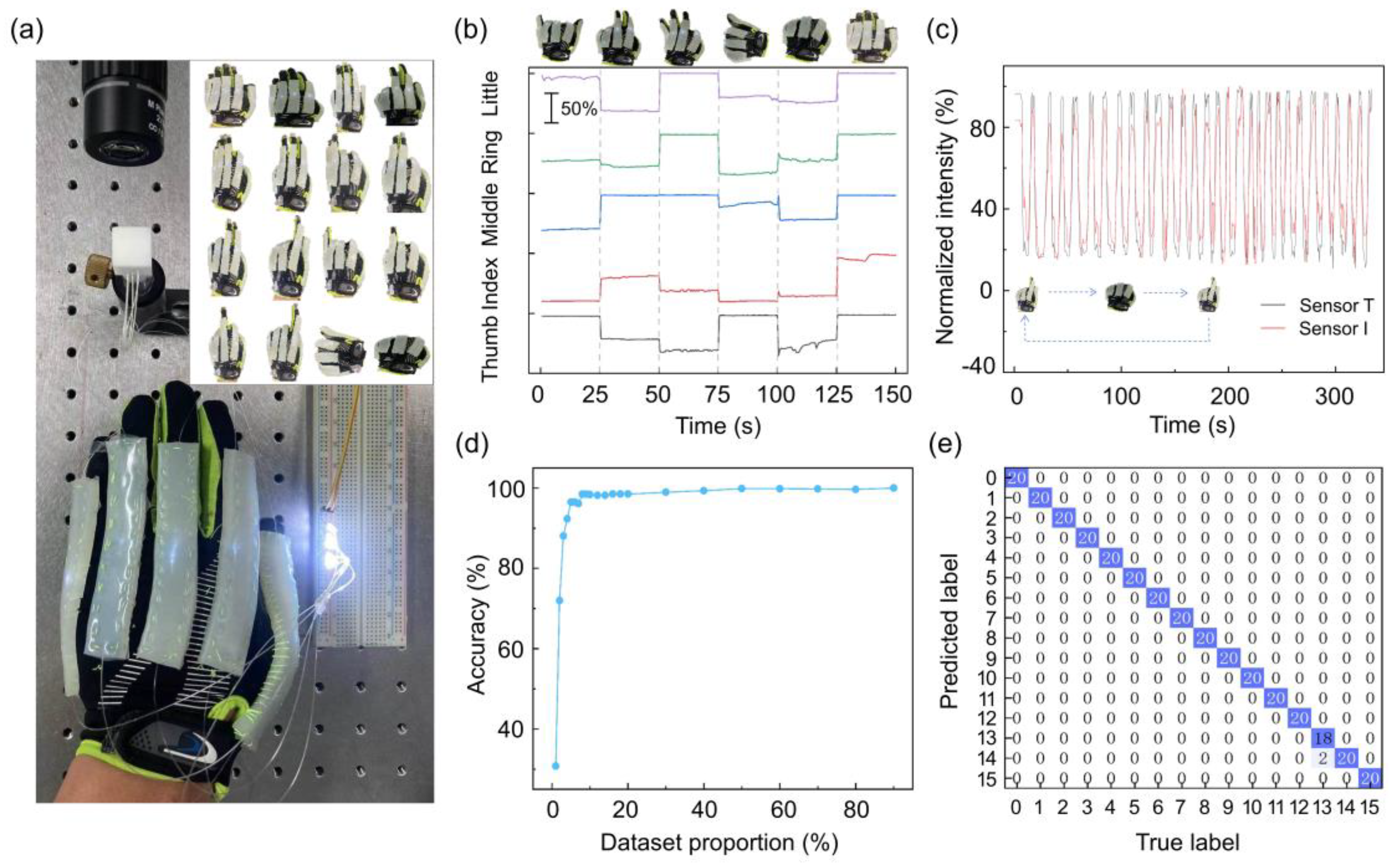

3.4. Integrated Glove Sensors for Hand Gesture Recognition

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithm | Prediction Accuracy for Strain (%) | Prediction Accuracy for Twist (%) | Prediction Accuracy for Combined Strain and Twist (%) |
|---|---|---|---|
| Random forest | 99.13 | 99.71 | 98.37 |
| Extra trees | 99.86 | 99.95 | 98.85 |
| KNeighbors | 99.95 | 99.90 | 98.75 |
| Decision tree | 97.31 | 98.70 | 95.291 |
| MLP | 86.64 | 82.32 | 76.56 |
| SVC | 74.05 | 53.58 | 63.30 |
| AdaBoost | 33.68 | 26.81 | 5.96 |
| GaussianNB | 67.23 | 34.31 | 23.34 |
| Quadratic discriminant analysis | 99.09 | 81.84 | 77.23 |
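One way to read the comparison above is to ask how each algorithm fares on its hardest task. The following is a minimal sketch, not part of the authors' analysis: it copies the accuracies from the table as printed and ranks the algorithms by their lowest accuracy across the three tasks. The names `ACCURACY`, `summarize`, and `SUMMARY` are hypothetical, and ranking by worst-case accuracy is an assumption chosen only for illustration.

```python
# Accuracies (%) copied from the table as printed:
# (strain, twist, combined strain and twist).
ACCURACY = {
    "Random forest": (99.13, 99.71, 98.37),
    "Extra trees": (99.86, 99.95, 98.85),
    "KNeighbors": (99.95, 99.90, 98.75),
    "Decision tree": (97.31, 98.70, 95.291),
    "MLP": (86.64, 82.32, 76.56),
    "SVC": (74.05, 53.58, 63.30),
    "AdaBoost": (33.68, 26.81, 5.96),
    "GaussianNB": (67.23, 34.31, 23.34),
    "Quadratic discriminant analysis": (99.09, 81.84, 77.23),
}


def summarize(table):
    """Return (name, mean accuracy, worst-case accuracy) rows.

    Rows are sorted by worst-case accuracy, highest first. This ranking
    criterion is an illustrative assumption, not the paper's method.
    """
    rows = [
        (name, sum(values) / len(values), min(values))
        for name, values in table.items()
    ]
    return sorted(rows, key=lambda row: row[2], reverse=True)


SUMMARY = summarize(ACCURACY)

if __name__ == "__main__":
    for name, mean, worst in SUMMARY:
        print(f"{name:<32} mean={mean:6.2f}  worst={worst:6.2f}")
```

Under this criterion the tree ensembles and KNeighbors sit at the top, since their accuracy stays above 98% even for combined strain and twist, while AdaBoost sits at the bottom.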

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, N.; Yao, Y.; Wu, P.; Zhao, L.; Chen, J. Soft Polymer Optical Fiber Sensors for Intelligent Recognition of Elastomer Deformations and Wearable Applications. Sensors 2024, 24, 2253. https://doi.org/10.3390/s24072253
