This section presents the results of our experiments and discusses their implications. We evaluate the proposed adaptive temporal convolutional network autoencoder (ATCN-AE) against baseline methods, which serve as benchmarks for assessing its efficacy. We also examine the strengths, weaknesses, and potential avenues for improvement of our approach to detecting malicious data within mobile crowdsensing environments.

4.1. Performance Evaluation

The performance of the proposed ATCN-AE model is evaluated using accuracy, precision, recall, F1 score, and the area under the receiver operating characteristic curve (AUC-ROC). Together, these metrics assess how accurately the model classifies benign and malicious data instances.

Table 4 presents the confusion matrix from the ATCN-AE.

In Table 4, the true positives are the correctly classified malicious instances; the model correctly predicted 200 instances as malicious. False positives occur when the model predicts a benign instance as malicious; 16 instances are wrongly classified as malicious. False negatives arise when the model classifies a malicious instance as benign; six instances are falsely classified as benign. True negatives are the instances correctly classified as benign; the model correctly predicted 1103 instances as benign. The evaluation of the proposed ATCN-AE using accuracy, precision, recall, F1 score, and the sample averages is shown in Table 5 and visualized in Figure 3.

The accuracy of the model is the sum of true positives (TP) and true negatives (TN) divided by the total number of instances, as presented in Equation (3), where FP and FN denote false positives and false negatives.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

For this model, the accuracy is calculated as

$$\frac{200 + 1103}{200 + 1103 + 16 + 6} = \frac{1303}{1325},$$

resulting in an accuracy of approximately 98%, which indicates that the model correctly classifies about 98% of the instances in the dataset. This relatively high accuracy shows that the model distinguishes between benign and malicious data well. Precision measures the proportion of correctly identified malicious data out of all instances predicted as malicious, given by Equation (4).

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (4)$$

The precision is calculated as

$$\frac{200}{200 + 16} = \frac{200}{216},$$

resulting in a precision of approximately 92%. That is, of all instances predicted as malicious, around 92% are truly malicious. Recall, also known as sensitivity or true positive rate, measures the proportion of correctly identified malicious data out of all true malicious instances, given by Equation (5).

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (5)$$

The recall is calculated as

$$\frac{200}{200 + 6} = \frac{200}{206},$$

resulting in approximately 97%, meaning the model captures 97% of the true malicious instances. The F1 score is the harmonic mean of precision and recall, providing a balanced measure of the performance of the model. It is calculated using Equation (6).

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (6)$$

Using the calculated precision and recall values, the F1 score is approximately 94%.

Micro, macro, weighted, and sample averages summarize the performance of the model across all classes. The micro average pools the true positives, false positives, and false negatives of all classes before computing each metric. The macro average is the unweighted mean of the per-class metrics and therefore does not account for class imbalance. The weighted average weights the metric of each class by its number of instances, so larger classes contribute more. The sample average computes each metric per instance and then averages over instances, which is meaningful when an instance can carry more than one label.
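As an illustration, the four basic metrics can be recomputed directly from the confusion-matrix counts reported in Table 4. The following is a minimal sketch rather than the evaluation code used in the experiments; the class name `ConfusionMatrix` and the variable `table4` are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix with 'malicious' as the positive class."""
    tp: int  # malicious instances correctly flagged
    fp: int  # benign instances wrongly flagged as malicious
    fn: int  # malicious instances missed (classified as benign)
    tn: int  # benign instances correctly passed

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def f1(self) -> float:
        # Harmonic mean of precision and recall.
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)


# Counts as reported in Table 4.
table4 = ConfusionMatrix(tp=200, fp=16, fn=6, tn=1103)

for name in ("accuracy", "precision", "recall", "f1"):
    print(f"{name}: {getattr(table4, name)():.3f}")
```

Keeping the counts in one immutable object makes it easy to rerun the same calculation on a different confusion matrix, for example one obtained with another detection threshold.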

The micro-averaged accuracy, precision, recall, and F1 score are all 0.96, which indicates consistent performance across all classes. The macro-averaged accuracy is 0.95, indicating good overall performance. However, the macro-averaged recall is 0.93, slightly lower than the macro-averaged precision of 0.95, indicating a potential imbalance in class performance. The weighted and sample averages are similar to the micro average, reflecting the balanced performance of the model.
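To make the difference between the averaging schemes concrete, the sketch below computes micro, macro, and weighted averages of precision and recall. It assumes a two-class, single-label reading of the Table 4 counts, so its output need not reproduce Table 5, whose computation is not restated here. The names `CLASS_COUNTS`, `micro_average`, `macro_average`, and `weighted_average` are hypothetical.

```python
# Per-class counts derived from Table 4 under a two-class, single-label
# assumption. For the benign class, the roles of FP and FN are swapped.
CLASS_COUNTS = {
    "malicious": {"tp": 200, "fp": 16, "fn": 6},
    "benign": {"tp": 1103, "fp": 6, "fn": 16},
}


def precision(c):
    return c["tp"] / (c["tp"] + c["fp"])


def recall(c):
    return c["tp"] / (c["tp"] + c["fn"])


def micro_average(metric, counts):
    """Metric computed on counts pooled over all classes."""
    pooled = {k: sum(c[k] for c in counts.values()) for k in ("tp", "fp", "fn")}
    return metric(pooled)


def macro_average(metric, counts):
    """Unweighted mean of the per-class metric."""
    return sum(metric(c) for c in counts.values()) / len(counts)


def weighted_average(metric, counts):
    """Per-class metric weighted by support (true instances per class)."""
    support = {name: c["tp"] + c["fn"] for name, c in counts.items()}
    total = sum(support.values())
    return sum(metric(c) * support[name] for name, c in counts.items()) / total


for label, metric in (("precision", precision), ("recall", recall)):
    print(
        f"{label}: micro={micro_average(metric, CLASS_COUNTS):.3f} "
        f"macro={macro_average(metric, CLASS_COUNTS):.3f} "
        f"weighted={weighted_average(metric, CLASS_COUNTS):.3f}"
    )
```

In the single-label case, micro-averaged precision and recall both coincide with accuracy, which is why a gap between the macro and micro averages is the more informative signal of class imbalance.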

Overall, the results indicate high accuracy and recall in detecting benign and malicious data. However, there is room for improvement in the precision and F1 score, suggesting that the model may benefit from further refinement to reduce the number of benign instances flagged as malicious. We further evaluate the predictions of the proposed ATCN-AE model based on the test loss, test mean absolute error (MAE), false positive rate (FPR), and true positive rate (TPR), as displayed in Table 6.

The test loss value of 0.171 indicates the average discrepancy between the model predictions and the true labels on the test dataset. The low test loss shows that predictions made by the model are closer to the ground truth labels, indicating better performance in capturing the patterns and features of the data. Meanwhile, the test MAE value of 0.96 represents the average absolute difference between the predictions on the test dataset and the true values. It measures the magnitude of the errors made by the ATCN-AE model in predicting malicious data. Within this evaluation, the low MAE indicates that the predictions made by the model are, on average, closer to the true values, demonstrating high accuracy in predicting malicious data.

Furthermore, the false positive rate (FPR) measures the proportion of benign data incorrectly classified as malicious out of all true benign data instances. For the ATCN-AE model, the FPR value of 0.013 indicates that only 1.3% of the true benign data are incorrectly classified as malicious. A low FPR is desirable because it signifies that the model effectively identifies benign data and minimizes false alarms. The true positive rate (TPR), in turn, quantifies the proportion of correctly identified malicious data out of all true malicious instances. Here, the TPR value of 0.873 indicates that the model correctly identifies 87.3% of malicious data, that is, a significant portion of the true malicious instances.

Another significant parameter of the ATCN-AE model is the reconstruction error threshold, which determines the sensitivity of the model to malicious data. In our experiments, we observed that the larger the threshold, the more malicious samples are misclassified as benign. Consequently, a larger threshold decreases the FPR and increases the false negative rate (FNR). In contrast, a smaller threshold increases the FPR and decreases the FNR. Since we require the model to have a low false alarm rate, we set a relatively large threshold of 0.5 and obtained an FPR of 0.013.
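The threshold trade-off described above can be sketched as follows. This is an illustrative example only: it assumes that an instance is flagged as malicious when its reconstruction error exceeds the threshold, and the error values and labels are made-up toy data, not results from our experiments. The function name `fpr_fnr` is hypothetical.

```python
def fpr_fnr(errors, is_malicious, threshold):
    """Return (FPR, FNR) when instances with error > threshold are flagged."""
    flagged = [e > threshold for e in errors]
    fp = sum(f and not m for f, m in zip(flagged, is_malicious))
    fn = sum((not f) and m for f, m in zip(flagged, is_malicious))
    n_benign = sum(not m for m in is_malicious)
    n_malicious = sum(is_malicious)
    return fp / n_benign, fn / n_malicious


# Toy reconstruction errors (hypothetical): five benign, then five malicious.
errors = [0.05, 0.10, 0.20, 0.35, 0.55, 0.30, 0.45, 0.60, 0.80, 0.95]
is_malicious = [False] * 5 + [True] * 5

for threshold in (0.25, 0.50, 0.70):
    fpr, fnr = fpr_fnr(errors, is_malicious, threshold)
    print(f"threshold={threshold:.2f}  FPR={fpr:.2f}  FNR={fnr:.2f}")
```

On this toy data, raising the threshold lowers the FPR and raises the FNR, which mirrors the behavior that motivates choosing a larger threshold when false alarms are costly.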

The receiver operating characteristic (ROC) curve and the corresponding area under the curve (AUC) value of 0.97 provide a comprehensive assessment of the model’s ability to discriminate between benign and malicious data instances, as shown in Figure 4. The high AUC score demonstrates the model’s excellent discriminative power, further validating its effectiveness in the malicious data detection task.
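One way to interpret the AUC is as the probability that a randomly chosen malicious instance receives a higher anomaly score than a randomly chosen benign one, with ties counted as one half. The sketch below computes the AUC through this pairwise formulation. The scores are made-up toy values, not the outputs of the ATCN-AE, and `roc_auc` is a hypothetical helper name.

```python
def roc_auc(scores, is_malicious):
    """AUC as the fraction of (malicious, benign) pairs ranked correctly."""
    pos = [s for s, m in zip(scores, is_malicious) if m]
    neg = [s for s, m in zip(scores, is_malicious) if not m]
    if not pos or not neg:
        raise ValueError("need at least one instance of each class")
    # A pair counts 1 if the malicious score is higher, 0.5 on a tie.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy anomaly scores (hypothetical): five benign, then five malicious.
scores = [0.05, 0.10, 0.20, 0.35, 0.55, 0.30, 0.45, 0.60, 0.80, 0.95]
is_malicious = [False] * 5 + [True] * 5

print(f"AUC = {roc_auc(scores, is_malicious):.2f}")
```

The pairwise form is quadratic in the number of instances, so it suits small illustrative examples; for large test sets a sort-based computation would be preferable.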

Figure 5 depicts the loss and accuracy curves of the proposed model. As Figure 5 illustrates, the model learns and improves its performance over time: the loss decreases while the accuracy increases. We monitor the performance of the model using the loss and accuracy on the validation dataset, which are calculated at the end of each training epoch. We trained the model for 100 epochs and observed a decreasing loss and increasing accuracy until convergence.

Comparing the loss and accuracy curves for the training and testing datasets is crucial. As reflected in Figure 6, the loss decreases for both the training and testing datasets as the number of epochs increases. The loss on the training data shows consistent improvement and follows a similar trend on the validation data, suggesting that the model is not overfitting and can generalize well to unseen sensor data. The low validation loss of 0.2 as the model converges at 100 epochs indicates that the predictions of the model are close to the true class. Meanwhile, the validation accuracy represents the percentage of correctly predicted instances in the validation dataset and measures the classification performance of the model on unseen data. The high validation accuracy, between 96.4% and 96.8%, demonstrates that the model performs well and makes accurate predictions even with fewer epochs.

Figure 7 illustrates the predictions made by the proposed ATCN-AE model on a dataset of mobile sensor data instances. The data points are classified as either “Normal” (benign) or “Malicious” based on the model’s output. The majority of the data points, represented by green markers, form a dense cluster near the bottom of the plot, indicating that the ATCN-AE model has classified these instances as benign sensor data conforming to the patterns learned during training. Conversely, several data points marked with red “x” symbols are scattered at higher values along the y-axis. These correspond to instances identified as malicious by the model because they deviate significantly from the learned patterns of normal sensor data.

The clear separation between the two classes of data points in Figure 7 highlights the effectiveness of the ATCN-AE model in distinguishing between benign and malicious sensor data instances within the mobile crowdsensing environment. The data distribution reveals that the ATCN-AE model achieves a high level of accuracy in detecting malicious data instances while minimizing false positives and false negatives, as supported by the results in Table 6. The dense clustering of normal data points indicates accurate classification of benign sensor data, with only a few potential false negatives within the normal cluster. Similarly, the well-separated malicious data points show the effective identification of anomalous instances.

4.2. Comparison with State of the Art

To benchmark the proposed ATCN-AE against the existing literature, we compare its performance with several prior studies on detecting malicious data within IoT and mobile crowdsensing environments. Specifically, our comparison focuses on similar autoencoder models and models that employ the SherLock dataset. The results presented in Table 7 demonstrate that our ATCN-AE model achieves approximately 98% accuracy in classifying benign and malicious data. This result is a marked improvement over existing baseline models such as the sparse autoencoder (91.2%) [15], CFLVAE (88.1%) [16], and AE-D3F (82.0%) [19], as visualized in Figure 8. Additionally, our proposed model surpasses the models evaluated on the SherLock dataset in [28,29,30], which achieve accuracies of 82%, 83%, and 81%, respectively.

The comparative evaluation highlights the capabilities of our ATCN-AE model in learning effective representations to detect anomalous patterns in crowdsensed data. Our proposed model outperforms previous approaches, underlining the significance of explicitly modeling temporal dependencies and leveraging deep learning for enhanced security in mobile crowdsensing systems.

Overall, the proposed ATCN-AE model presents a promising solution to the growing concern of malicious data in mobile crowdsensing applications. By leveraging temporal information, capturing relevant features with TCNs, compressing the data with autoencoders, and adapting to the dynamic nature of the environment, the proposed model aims to enhance the security and reliability of mobile crowdsensing systems.

The experimental results and evaluation confirm the effectiveness and potential of our ATCN-AE model in detecting malicious data in mobile crowdsensing environments.

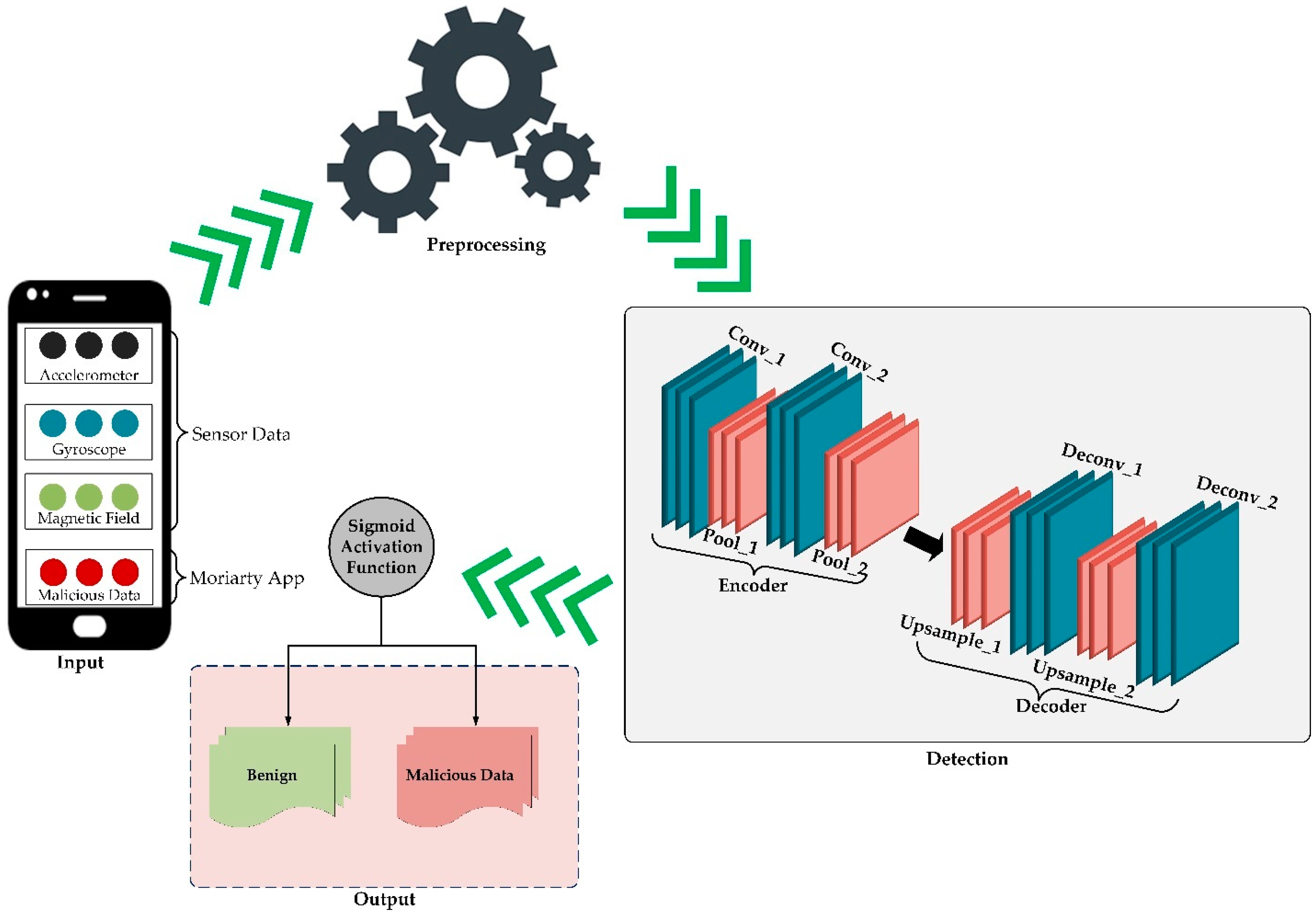

The key novelty of the approach presented in this paper is the introduction of an adaptive temporal convolutional network autoencoder (ATCN-AE) model specifically designed for detecting malicious data in mobile crowdsensing (MCS) systems. The proposed model combines the strengths of temporal convolutional networks (TCNs) and autoencoders in a novel architecture to effectively capture and analyze temporal patterns in MCS data, enabling accurate identification of malicious activities.

Existing anomaly detection methods, such as those reviewed in the paper, have limitations when applied to MCS systems. Many approaches do not explicitly model the temporal dependencies present in the sensor data collected by mobile devices, which can be crucial for detecting malicious activities that may exhibit specific temporal patterns. Additionally, some methods are not adaptive and may struggle to keep up with the dynamic nature of MCS environments where data characteristics and attack vectors can evolve over time.

The proposed ATCN-AE incorporates an adaptive learning mechanism that enables the model to continuously update its parameters and adapt to new patterns or emerging malicious activities in the MCS environment. This adaptive nature enhances the model’s robustness and helps it remain effective over time, even as the characteristics of malicious data evolve. Unlike many existing anomaly detection models designed for general IoT or network traffic scenarios, the ATCN-AE is tailored specifically for the MCS domain. It is designed to handle the unique challenges of detecting malicious data in mobile crowdsensing, where sensor data from numerous devices must be analyzed for temporal patterns and anomalies.

The experimental results presented in the paper demonstrate the effectiveness of the ATCN-AE model in detecting malicious data in an MCS scenario, achieving an accuracy of 98% and outperforming existing baseline models. The comparative analysis highlights the significance of explicitly modeling temporal dependencies and leveraging deep learning for enhanced security in mobile crowdsensing systems.