Cognitive Control Architecture for the Practical Realization of UAV Collision Avoidance

Abstract

1. Introduction

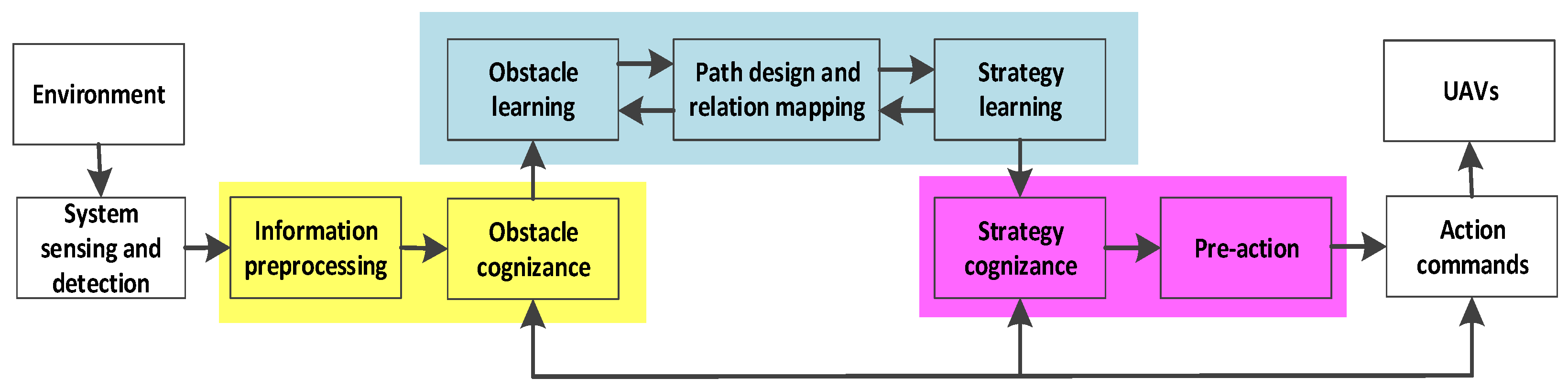

2. A Cognitive Control Architecture for the Conditioned Reflex Cycle in the Anti-Collision Behavior of UAVs

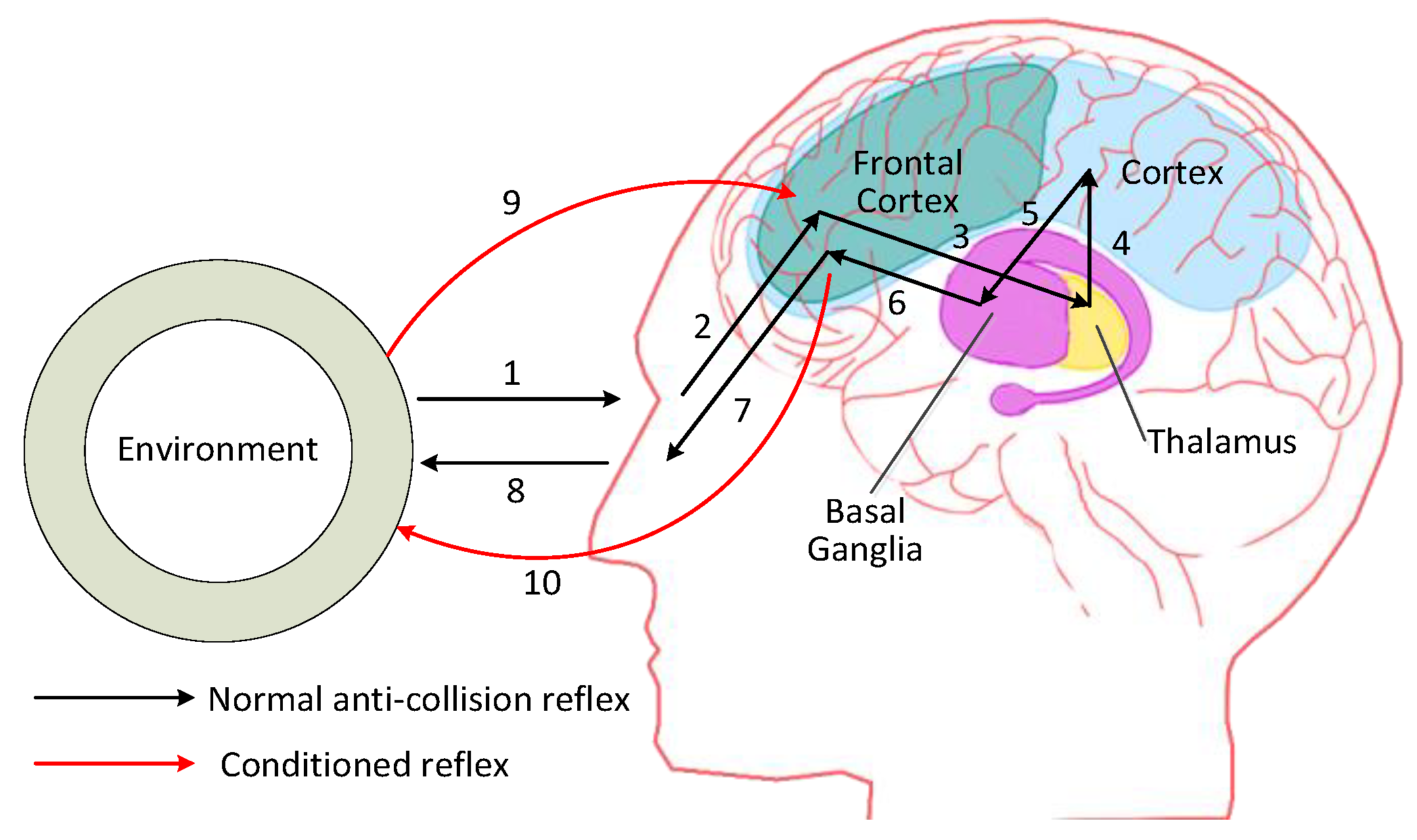

2.1. The Brain Pathway of the Conditioned Reflex Cycle in Human Anti-Collision Behavior

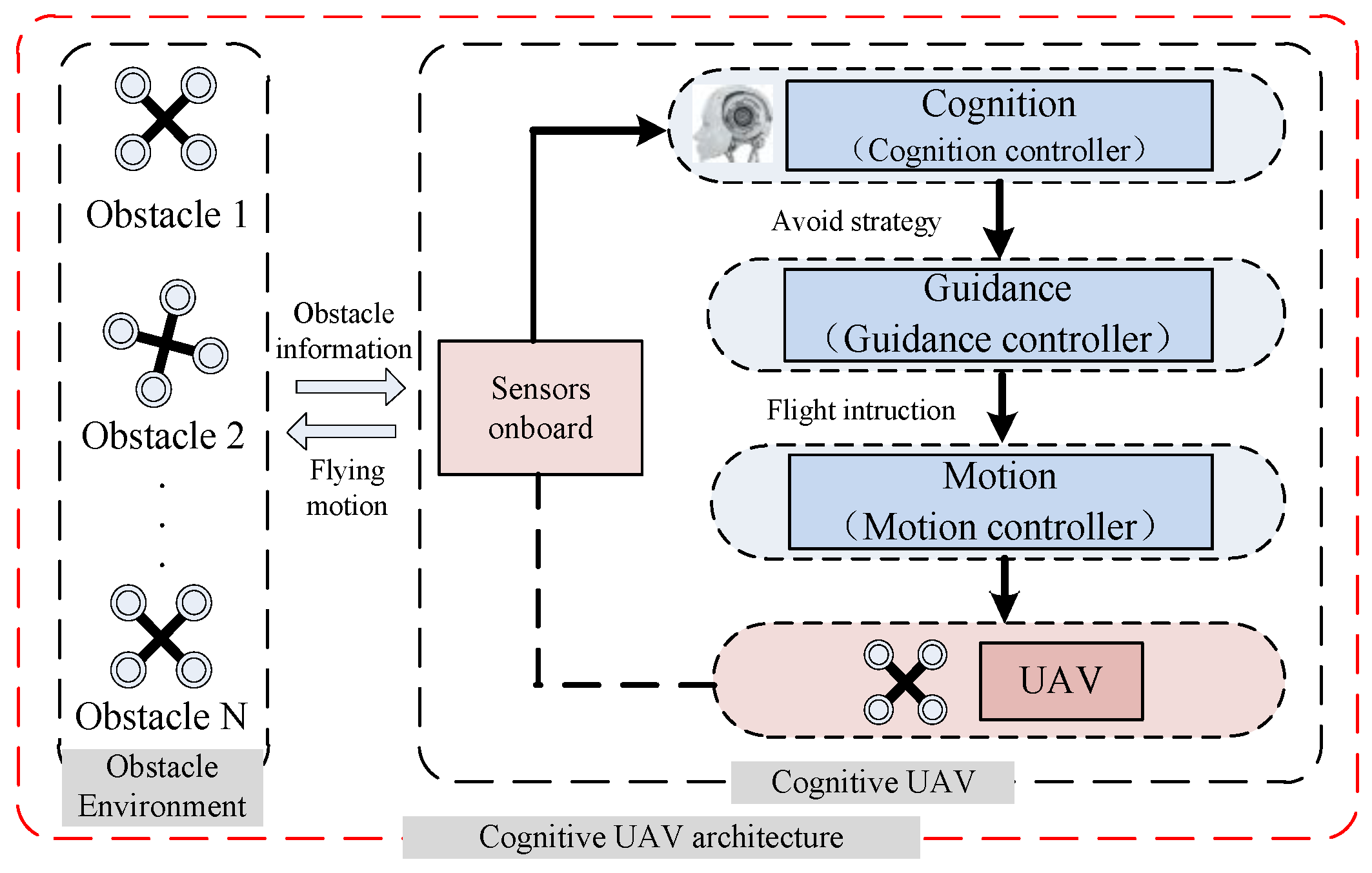

2.2. Cognitive Control Architecture for UAVs

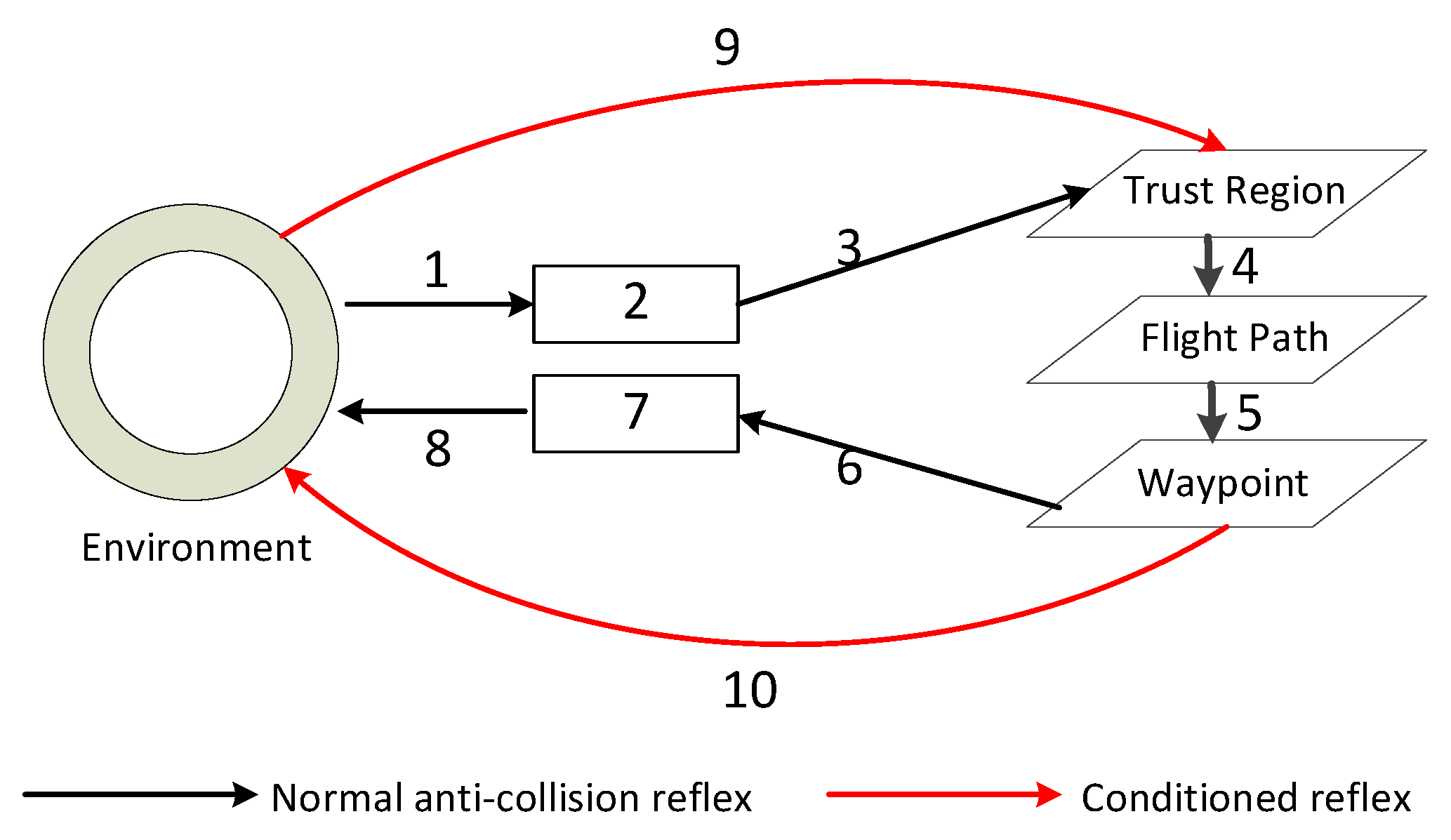

2.3. The Brain-Like Conditioned Reflex Cycle of Cognitive UAVs

- Universality: Cognitive algorithms are built on a universal schema instead of being preprogrammed and reprogrammed for specific missions, so they can enrich themselves incrementally.

- Interactivity: Humans act as teachers rather than programmers. They can influence the learning process and its context, but they are not the decision-makers.

- Learnability: Cognition is a gradual, cumulative process in which advanced knowledge builds on basic abilities.
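The three properties above can be illustrated with a minimal sketch. It assumes that an obstacle situation is summarized as a numeric feature vector and that each taught response is a label such as "climb". The class `ExperienceMemory`, its `teach`/`recall` methods, and the `radius` threshold are hypothetical names introduced for illustration, and the recall step is a plain nearest-neighbour lookup, not the authors' algorithm. A teacher adds examples instead of rewriting code (interactivity), the same schema serves any mission (universality), and knowledge accumulates one example at a time (learnability).

```python
import math
from dataclasses import dataclass, field


@dataclass
class ExperienceMemory:
    """Hypothetical incremental memory mapping obstacle feature vectors to taught actions."""
    radius: float = 1.0  # hypothetical threshold: how close a pattern must be to count as familiar
    patterns: list = field(default_factory=list)  # stored (features, action) pairs

    def teach(self, features, action):
        # Interactivity: a teacher adds an example; no code is rewritten.
        self.patterns.append((tuple(features), action))

    def recall(self, features):
        # Nearest-neighbour lookup: return the closest stored action within `radius`, else None.
        best_action, best_dist = None, math.inf
        for stored, action in self.patterns:
            d = math.dist(stored, features)
            if d < best_dist:
                best_action, best_dist = action, d
        return best_action if best_dist <= self.radius else None


mem = ExperienceMemory()
print(mem.recall((5.0, 0.0)))   # None: nothing has been taught yet
mem.teach((5.0, 0.0), "climb")
print(mem.recall((5.2, 0.1)))   # climb: close to a taught pattern
print(mem.recall((20.0, 3.0)))  # None: unfamiliar, so more teaching is needed
```

An unfamiliar situation returns `None`, which is the point where a teacher would supply a new example rather than a programmer changing the code.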

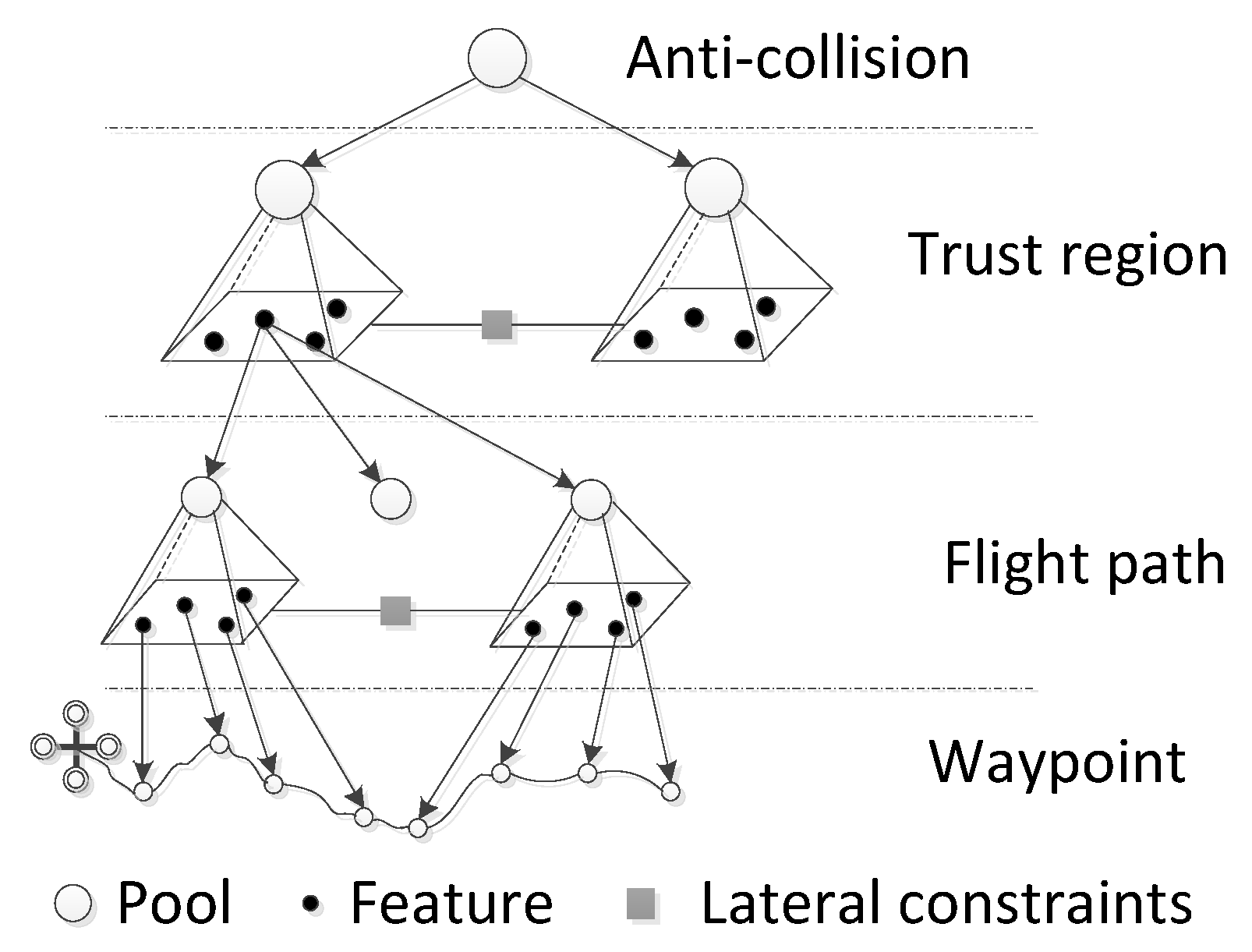

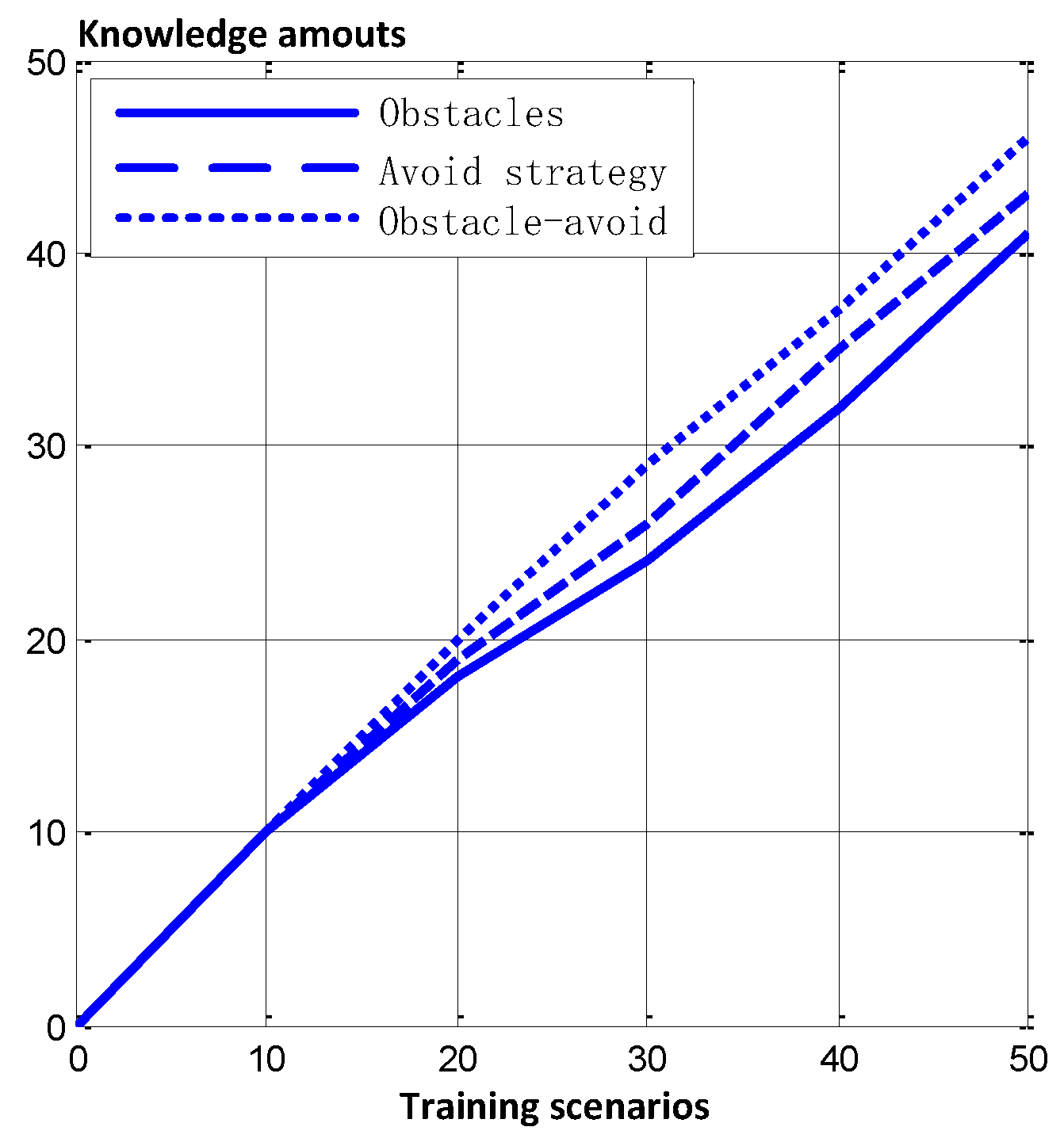

3. Algorithmic Design of Cognitive UAVs

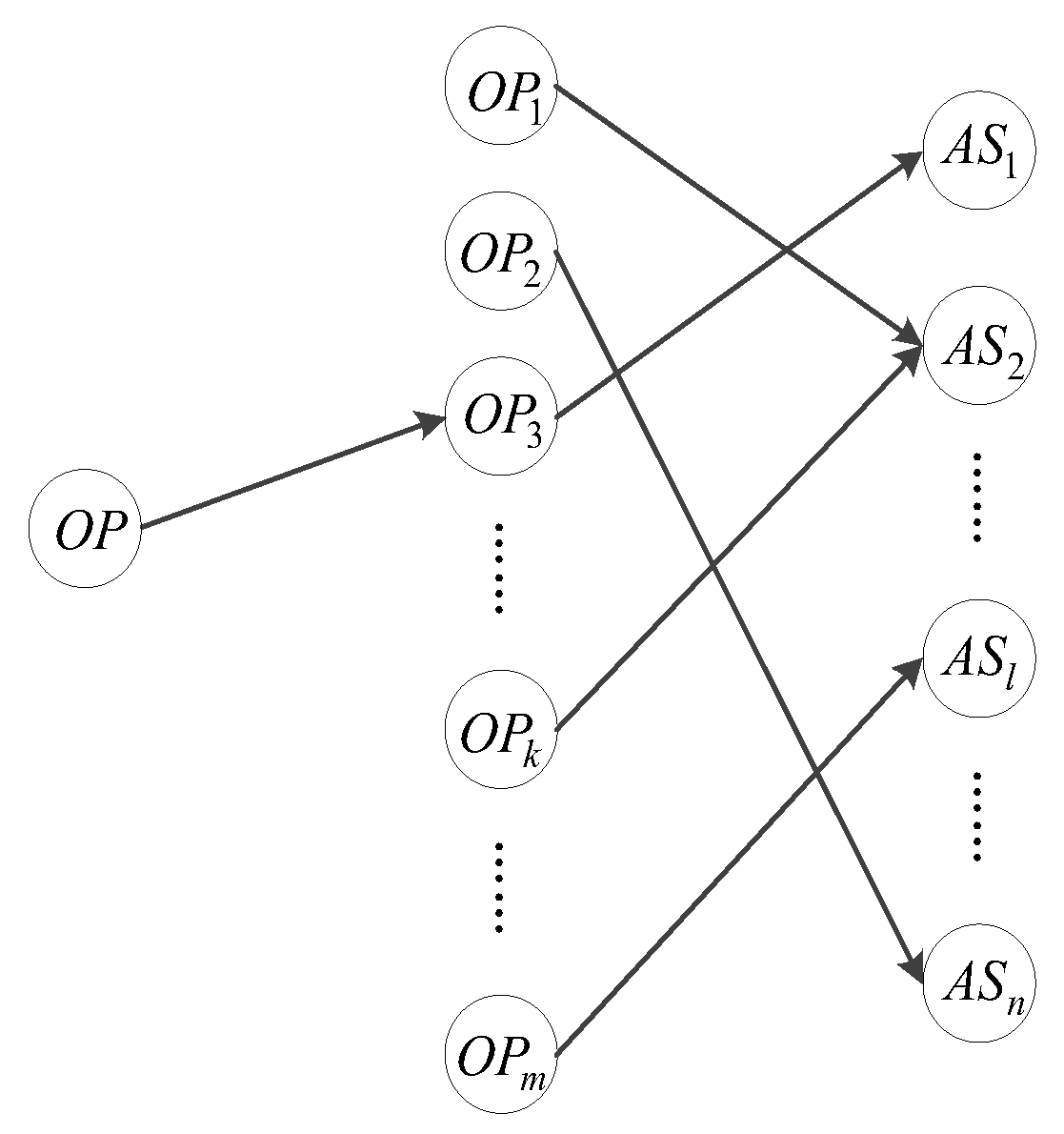

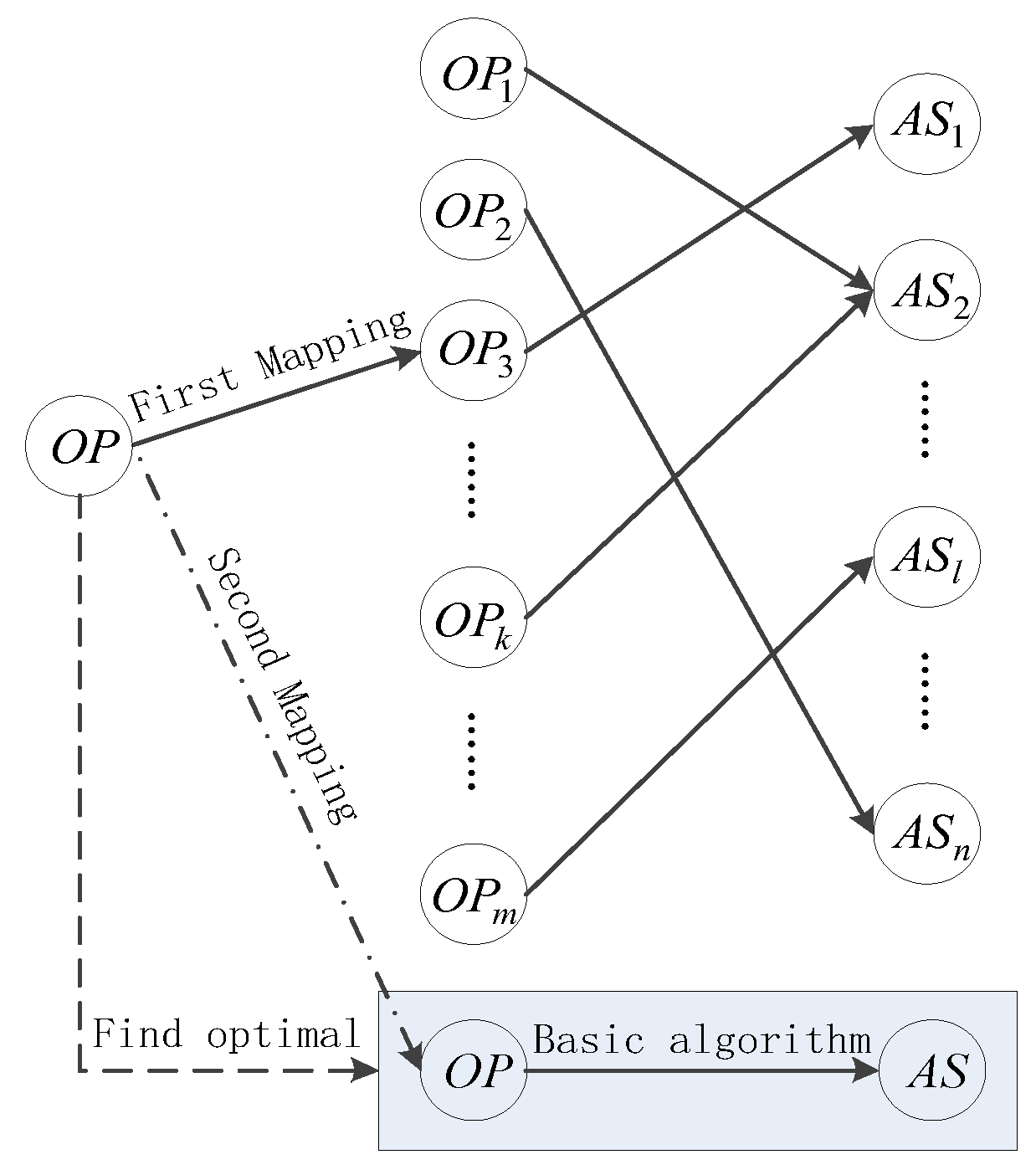

3.1. Construction of the Obstacle-Avoidance Mapping Relation

3.2. Construction of an Avoidance Strategy and an Obstacle Pattern

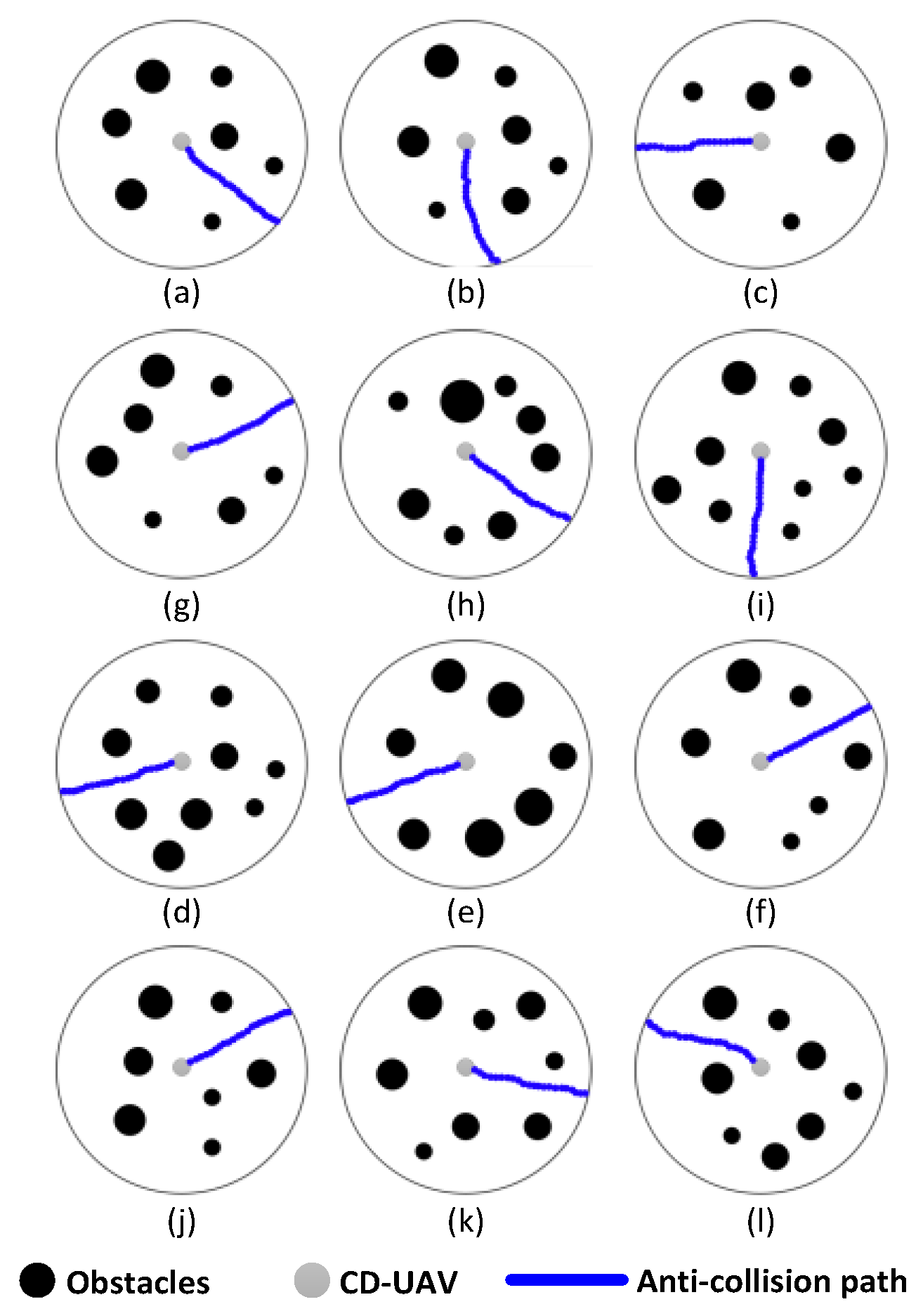

4. Simulation and Analysis

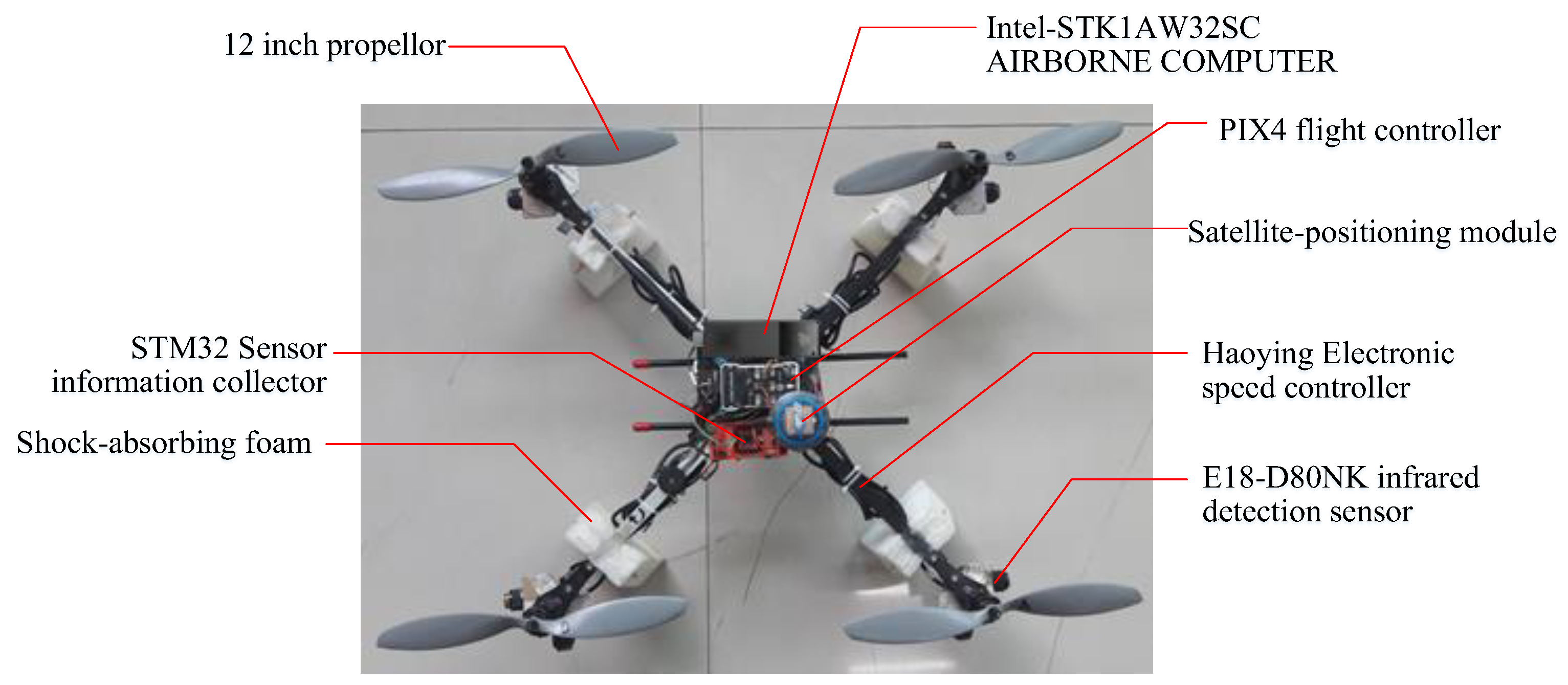

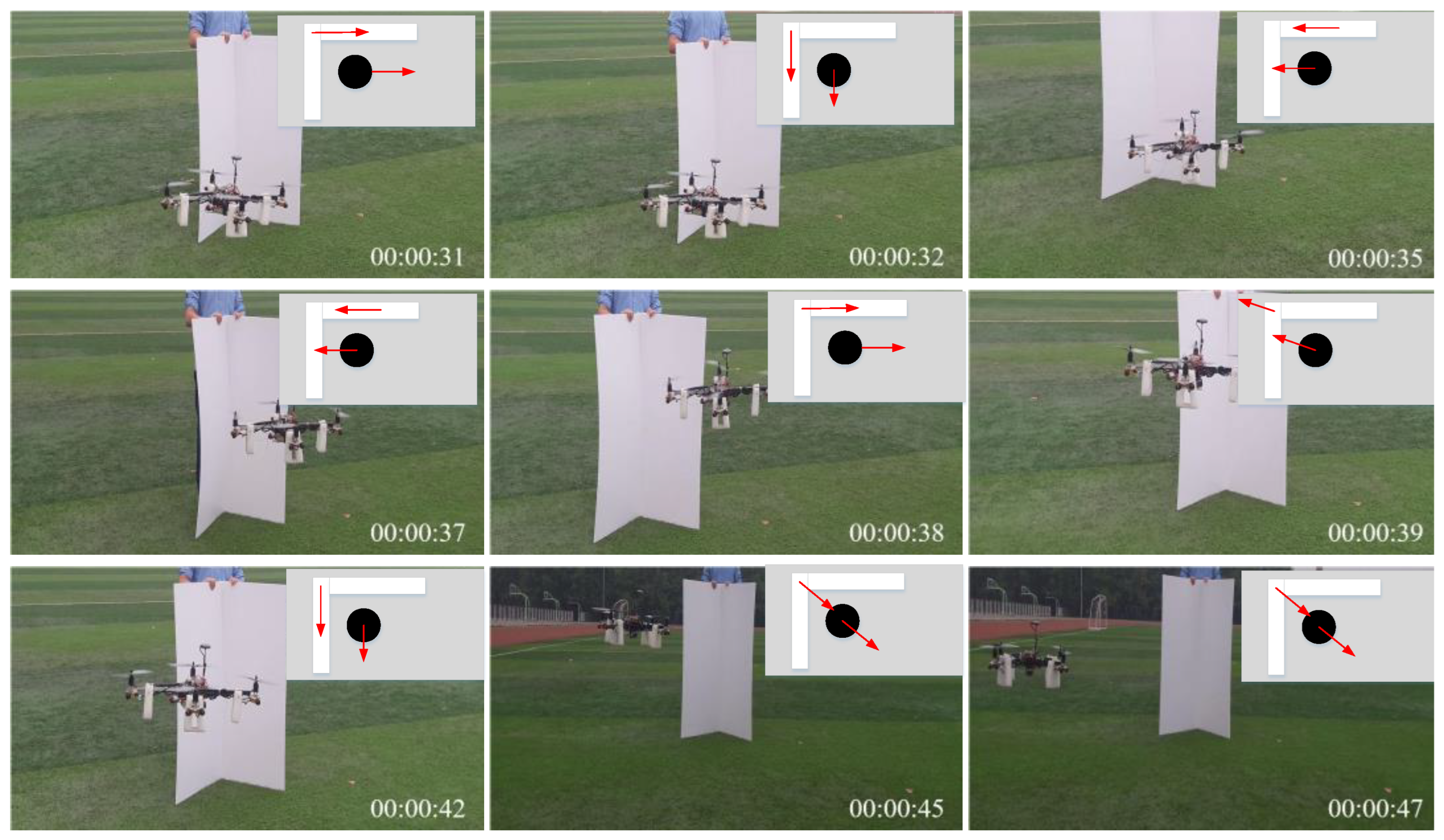

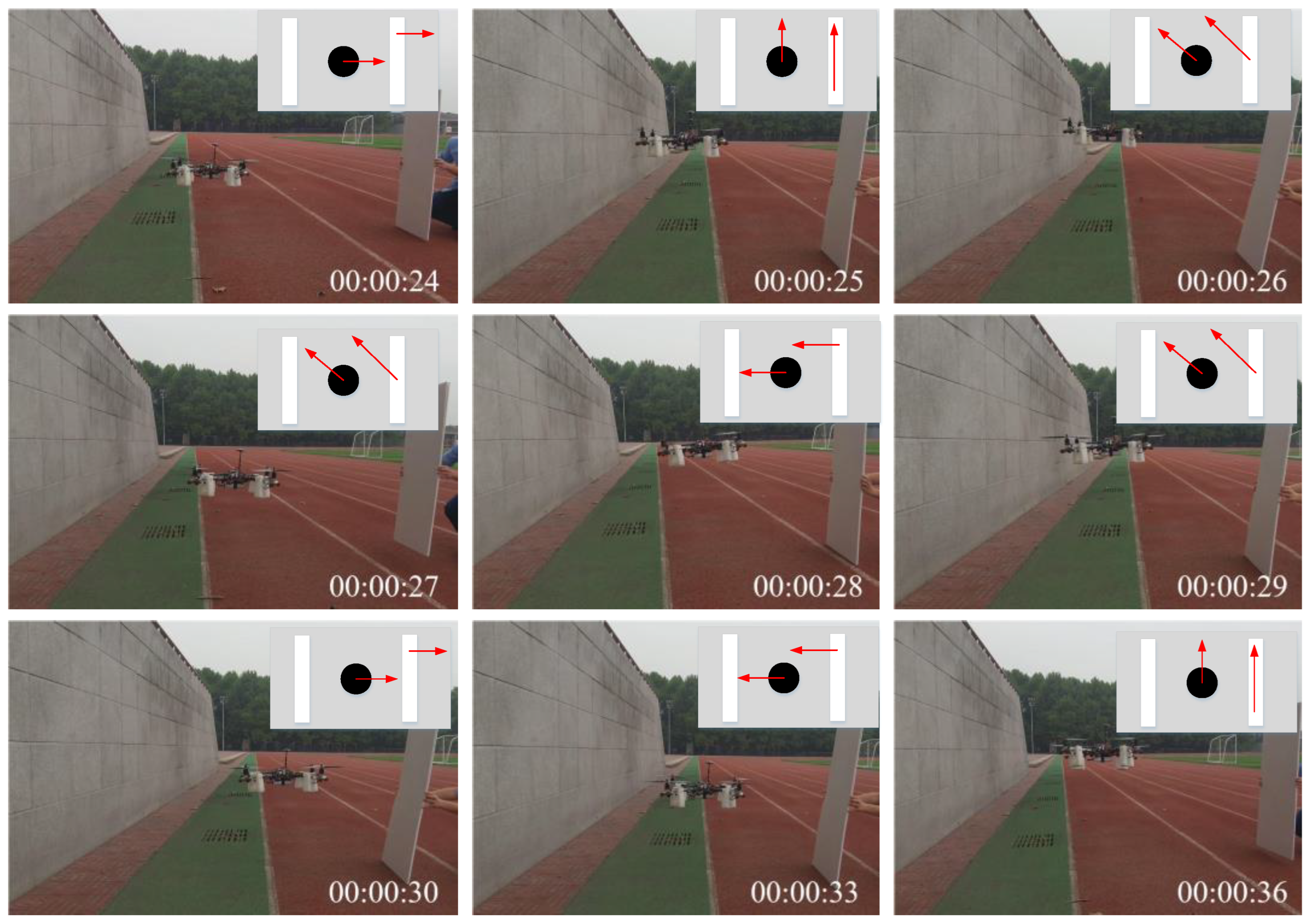

5. Experiment and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Process | Function |
|---|---|---|
| 1 | Sensing | UAVs use onboard sensors to detect surrounding obstacles. |
| 2 | Preprocessing | Preprocesses the large volume of unclassified obstacle information. |
| 3 | Cognition | During collision avoidance, recognizes familiar environments and identifies obstacles. |
| 4 | Obstacle learning | Learns and stores paths, and maps paths between trust regions and waypoints. |
| 5 | Behavior | Designs an anti-collision strategy so that the UAV behaves safely in its environment. |
| 6 | Strategy learning | Memorizes anti-collision strategies. |
| 7 | Pre-action | UAVs cannot decode or execute waypoints directly, so this process transforms the waypoints into commands. |
| 8 | Action | Cognitive UAVs follow the commands from the RBN to avoid densely packed obstacles. |
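The eight processes in the table can be chained into one cycle. The toy sketch below assumes that obstacles arrive as (x, y) offsets in metres, that a "pattern" is a coarse key (obstacle count and side of the nearest obstacle), and that a familiar pattern lets the UAV reuse a stored strategy instead of designing a new one, which is one possible reading of the conditioned-reflex idea. All function names, the 50 m range, and the 5 m lateral offset are hypothetical; processes 4 and 6 are collapsed into a single dictionary, and the RBN that executes the commands is not modelled.

```python
import math


def sense(world):
    # 1 Sensing: raw (x, y) offsets of obstacles around the UAV
    return world["obstacles"]


def preprocess(raw, max_range=50.0):
    # 2 Preprocessing: drop returns beyond a hypothetical range, sort nearest first
    near = [p for p in raw if math.hypot(*p) <= max_range]
    return sorted(near, key=lambda p: math.hypot(*p))


def cognize(obstacles):
    # 3 Cognition: reduce the scene to a coarse pattern key
    if not obstacles:
        return ("clear",)
    side = "left" if obstacles[0][1] > 0 else "right"
    return (len(obstacles), side)


def design_strategy(pattern):
    # 5 Behavior: stand-in for slow, deliberate planning; dodge away from the nearest obstacle
    if pattern == ("clear",):
        return [(10.0, 0.0)]
    dy = -5.0 if pattern[1] == "left" else 5.0
    return [(5.0, dy), (10.0, 0.0)]


def to_commands(waypoints):
    # 7 Pre-action: convert waypoints into (heading_deg, distance_m) commands
    cmds, (px, py) = [], (0.0, 0.0)
    for x, y in waypoints:
        heading = round(math.degrees(math.atan2(y - py, x - px)), 1)
        cmds.append((heading, round(math.hypot(x - px, y - py), 2)))
        px, py = x, y
    return cmds


def cycle(world, memory):
    """One pass of the hypothetical cycle; returns (used_reflex, commands)."""
    pattern = cognize(preprocess(sense(world)))
    reflex = pattern in memory  # familiar pattern: reuse the stored strategy
    if not reflex:
        memory[pattern] = design_strategy(pattern)  # 4 + 6: learn and store the strategy
    return reflex, to_commands(memory[pattern])  # 8 Action would execute these commands


world = {"obstacles": [(20.0, 3.0), (80.0, -1.0)]}
memory = {}
print(cycle(world, memory))  # (False, [(-45.0, 7.07), (45.0, 7.07)])
print(cycle(world, memory))  # (True, [(-45.0, 7.07), (45.0, 7.07)])
```

The first call with a new pattern designs and stores a strategy; the second call with the same scene skips `design_strategy` and returns the stored commands directly.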

| Test No. | AC Minf (m) | AC tmin (s) | CG Minf (m) | CG tmin (s) | CR Minf (m) | CR tmin (s) |
|---|---|---|---|---|---|---|
| 1 | 0 | 96.7 | 0 | 169.2 | 0.25 | 76.8 |
| 2 | 0 | 87.5 | 0 | 89.4 | 0.42 | 89.1 |
| 3 | 0 | 165.2 | 0 | 164.3 | 0.34 | 79.7 |
| 4 | 0 | 148.3 | 0 | 172.8 | 0.43 | 267.5 |
| 5 | 0 | 87.9 | 0 | 94.6 | 0.26 | 285.6 |
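One quick way to compare the three methods across the five tests is to average each column. The sketch below only aggregates the values transcribed from the table; this excerpt does not define AC, CG, CR, Minf, or tmin, so no judgement about which value is better is implied.

```python
from statistics import mean

# (Minf in m, tmin in s) for tests 1-5, transcribed from the table above
results = {
    "AC": [(0.0, 96.7), (0.0, 87.5), (0.0, 165.2), (0.0, 148.3), (0.0, 87.9)],
    "CG": [(0.0, 169.2), (0.0, 89.4), (0.0, 164.3), (0.0, 172.8), (0.0, 94.6)],
    "CR": [(0.25, 76.8), (0.42, 89.1), (0.34, 79.7), (0.43, 267.5), (0.26, 285.6)],
}


def summarize(rows):
    """Return (mean Minf, mean tmin) over all tests, rounded to two decimals."""
    minf, tmin = zip(*rows)
    return round(mean(minf), 2), round(mean(tmin), 2)


for method, rows in results.items():
    print(method, summarize(rows))
# AC (0.0, 117.12)
# CG (0.0, 138.06)
# CR (0.34, 159.74)
```

Averages hide the spread: for CR, tmin ranges from 76.8 s to 285.6 s across the five tests, so the per-test rows remain the primary evidence.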

| Method | 1st environment | 2nd environment | 3rd environment | 4th environment |
|---|---|---|---|---|
| Traditional method | 6.4 s | 8.6 s | 7.1 s | 6.5 s |
| Conditioned reflex | 3.7 s | 5.2 s | 3.6 s | 4.4 s |
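The gap between the two rows can be expressed as the fractional time saved in each environment, (t_traditional - t_reflex) / t_traditional. The sketch below applies that formula to the four pairs in the table and to the totals; it is simple arithmetic on the reported values, not an analysis taken from the paper.

```python
# Times in seconds for environments 1-4, transcribed from the table above
traditional = [6.4, 8.6, 7.1, 6.5]
reflex = [3.7, 5.2, 3.6, 4.4]


def relative_reduction(baseline, candidate):
    """Fractional time saved per environment: (baseline - candidate) / baseline."""
    return [round((b - c) / b, 3) for b, c in zip(baseline, candidate)]


per_env = relative_reduction(traditional, reflex)
overall = round((sum(traditional) - sum(reflex)) / sum(traditional), 3)
print(per_env, overall)  # [0.422, 0.395, 0.493, 0.323] 0.409
```

Summed over the four environments, the conditioned-reflex row takes roughly 41% less time than the traditional row, with the per-environment saving ranging from about 32% to 49%.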

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Q.; Wei, R.; Huang, S. Cognitive Control Architecture for the Practical Realization of UAV Collision Avoidance. Sensors 2024, 24, 2790. https://doi.org/10.3390/s24092790
