GBV-Net: Hierarchical Fusion of Facial Expressions and Physiological Signals for Multimodal Emotion Recognition

Abstract

1. Introduction

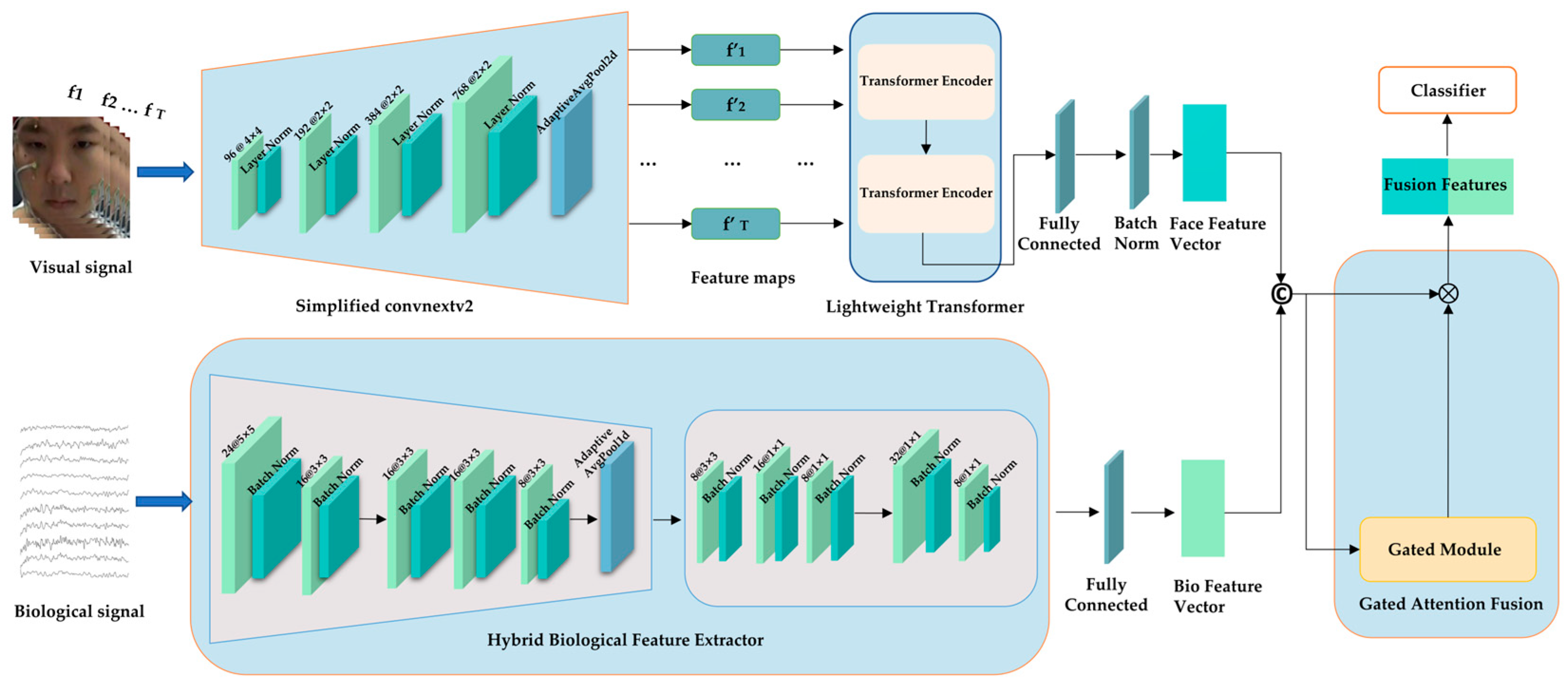

- To address the inefficient modeling of coupled spatio-temporal features in continuous facial expression sequences, we introduce a synergistic architecture that combines ConvNeXt V2 with lightweight Transformers to extract spatio-temporal dynamic features at low computational cost.

- To overcome the challenge of unified modeling for multi-scale temporal patterns in physiological signals (transient local, rhythmic mid-range, and global dependencies), we develop a novel three-level hybrid feature extraction framework (“Local-Medium-Global”). This framework ensures computational efficiency while comprehensively capturing cross-scale bio-features.

- To mitigate the limitations of simple feature concatenation, such as modal redundancy and lack of complementarity, we propose a feature fusion module based on a Gated Attention Mechanism. This module adaptively learns and modulates the contribution weights of features from different modalities, enabling deep interaction between the modalities at the feature level.
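The gated fusion idea in the last contribution can be illustrated with a minimal sketch. This is not the authors' implementation: the gate form `sigmoid(W [face; bio] + b)`, the convex combination of the two feature vectors, the feature dimension, and all names (`gated_fusion`, `face_feat`, `bio_feat`, `W`, `b`) are assumptions chosen only to show how a learned gate can weight two modalities element by element, in contrast to plain concatenation.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(face, bio, weights, bias):
    """Fuse two equal-length feature vectors with an element-wise gate.

    Assumed form (illustrative only):
        gate  = sigmoid(W @ [face; bio] + b)
        fused = gate * face + (1 - gate) * bio
    """
    joint = face + bio  # list concatenation, i.e. [face; bio]
    fused, gates = [], []
    for i in range(len(face)):
        z = sum(w * x for w, x in zip(weights[i], joint)) + bias[i]
        g = sigmoid(z)
        gates.append(g)
        # A gate near 1 trusts the facial feature, near 0 the bio feature.
        fused.append(g * face[i] + (1.0 - g) * bio[i])
    return fused, gates


random.seed(0)
dim = 4  # hypothetical feature dimension
face_feat = [random.uniform(-1, 1) for _ in range(dim)]
bio_feat = [random.uniform(-1, 1) for _ in range(dim)]
# Hypothetical, randomly initialised gate parameters; in a trained
# model these would be learned jointly with the feature extractors.
W = [[random.gauss(0, 0.5) for _ in range(2 * dim)] for _ in range(dim)]
b = [0.0] * dim

fused_feat, gate_vals = gated_fusion(face_feat, bio_feat, W, b)
print(gate_vals)
print(fused_feat)
```

Because each gate value lies strictly between 0 and 1, every fused component lies between the corresponding facial and physiological components, so the output keeps the dimensionality of one modality instead of doubling it as concatenation would.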

2. Related Work

2.1. Unimodal Emotion Recognition

2.1.1. Emotion Recognition from Facial Expressions

2.1.2. Emotion Recognition from Physiological Signals

2.1.3. Comparison Between Facial Expression- and Physiological Signal-Based Emotion Recognition

2.2. Multimodal Emotion Recognition

3. Methodology

3.1. GBV-Net Architecture Overview

3.2. Multimodal Feature Extraction

3.2.1. Facial Feature Extraction

3.2.2. Physiological Signal Feature Extraction

3.3. Feature Fusion

4. Experimental Results and Analysis

4.1. Experimental Dataset and Preprocessing

- Data Augmentation: As detailed in Section 4.1, extensive data augmentation techniques (e.g., horizontal flipping and color jittering for faces; additive noise and temporal shifting for bio-signals) were applied during training. This increases the diversity of the training data, forcing the model to learn more generalized features.

- Monitoring Learning Curves: The training and validation accuracy and loss curves were monitored throughout training (as shown in Figure 2 and Figure 3). The curves stay closely aligned and converge together without significant divergence, which indicates that the model was learning generalizable patterns rather than memorizing the training data.

- Regularization Techniques: The model architecture inherently incorporates modern regularization techniques, such as Batch Normalization and residual connections, which help stabilize training and reduce overfitting.
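The two bio-signal augmentations named above (additive noise and temporal shifting) can be sketched as follows. The text does not give the noise distribution, the noise level, or how shifted samples are handled at the segment boundary, so the Gaussian noise, the circular (wrap-around) shift, and the parameters `sigma` and `max_shift` are assumptions made for illustration.

```python
import random


def add_gaussian_noise(signal, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [x + rng.gauss(0.0, sigma) for x in signal]


def circular_shift(signal, shift):
    """Rotate the signal right by `shift` samples, wrapping around.

    Wrap-around is an assumption; zero padding would be another option.
    """
    if not signal:
        return list(signal)
    k = shift % len(signal)
    return signal[-k:] + signal[:-k] if k else list(signal)


def augment(signal, rng, sigma=0.01, max_shift=8):
    """Apply a random temporal shift, then additive noise (training only)."""
    shift = rng.randint(-max_shift, max_shift)
    return add_gaussian_noise(circular_shift(signal, shift), sigma, rng)


rng = random.Random(42)
segment = [float(i) for i in range(10)]  # toy one-channel segment
shifted = circular_shift(segment, 3)
augmented = augment(segment, rng)
print(shifted)
print(augmented)
```

Both operations preserve the segment length, so augmented samples can be fed to the same network input without further resampling.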

4.2. Main Results and Comparative Analysis

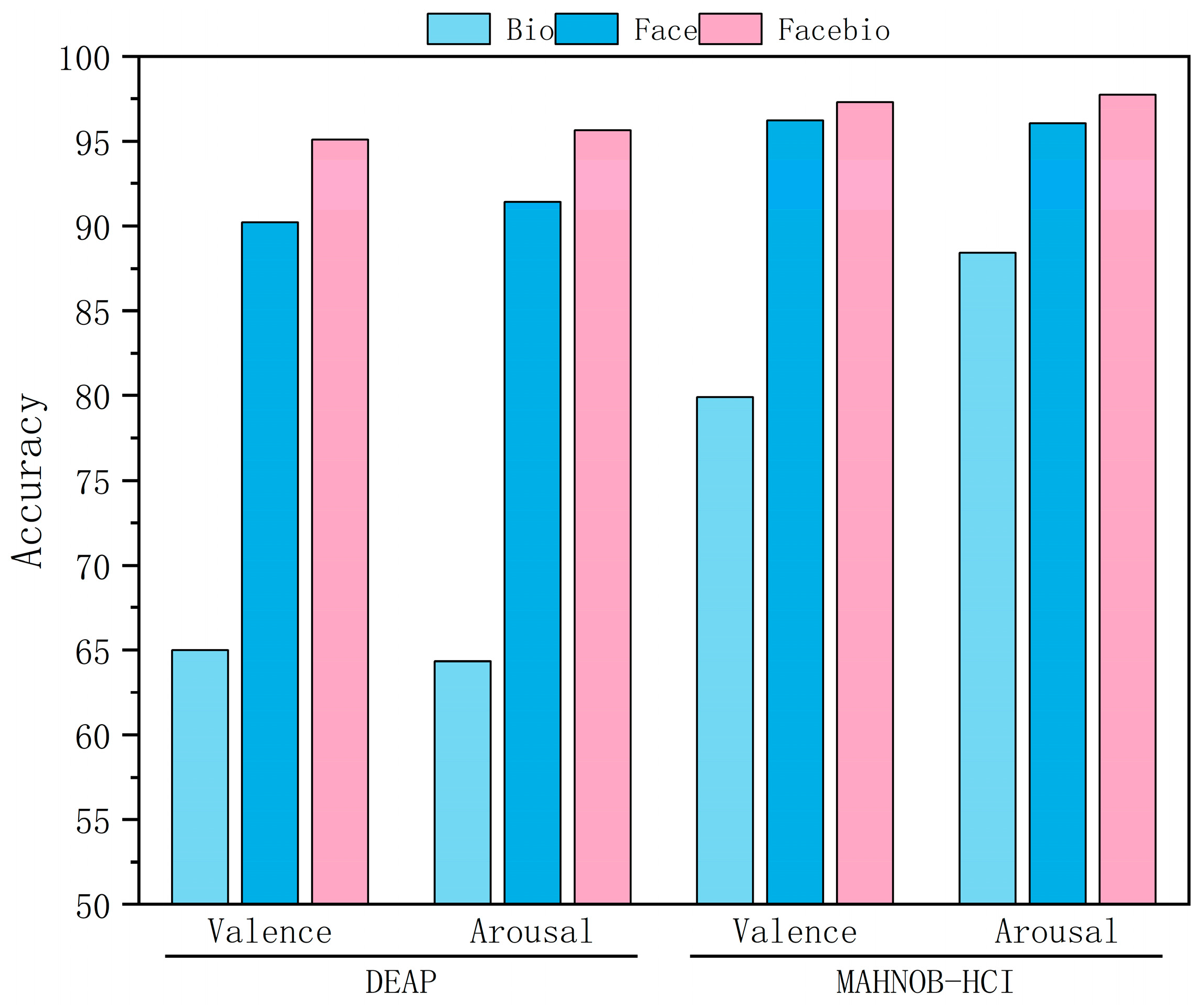

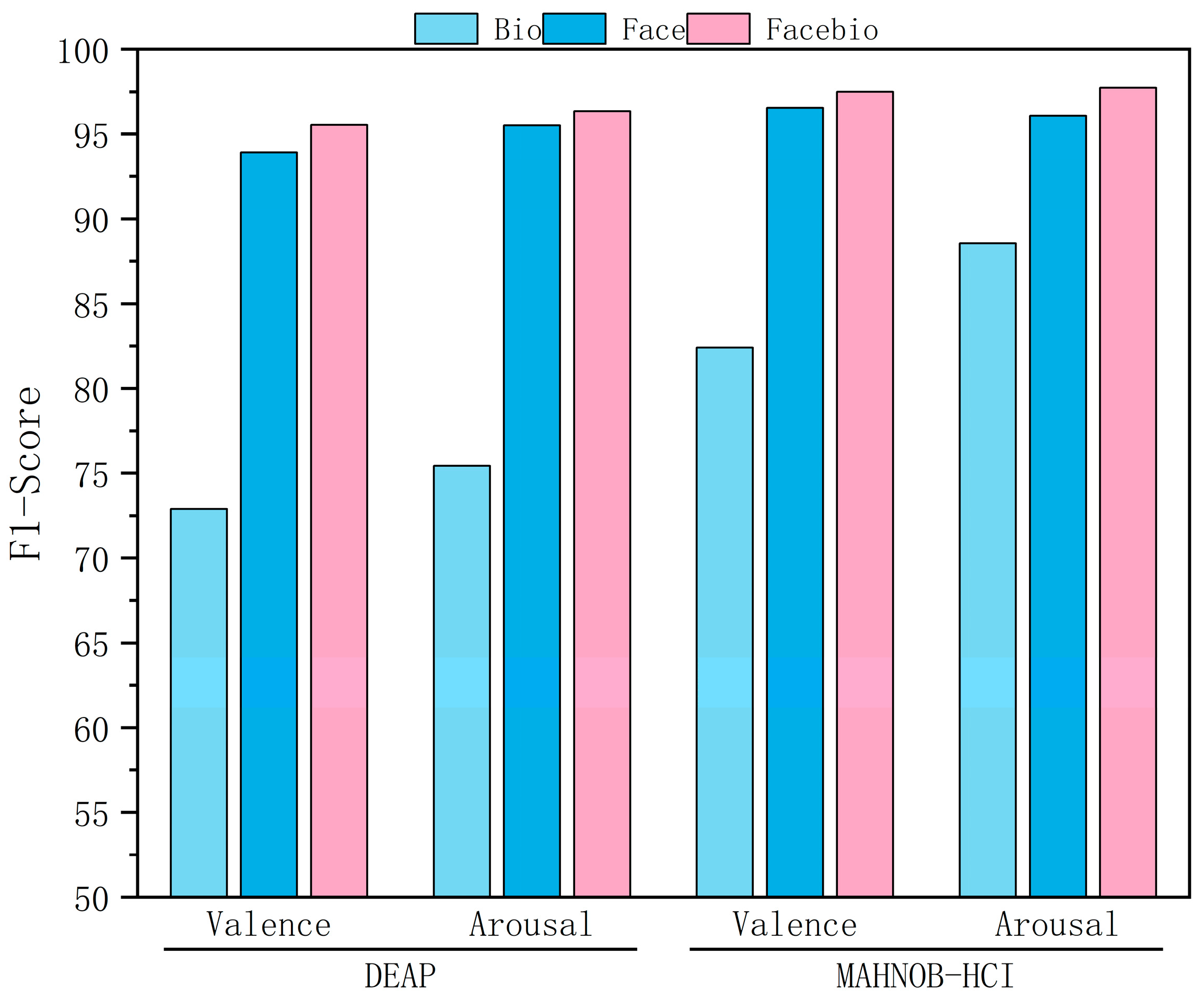

4.3. Ablation Experiment

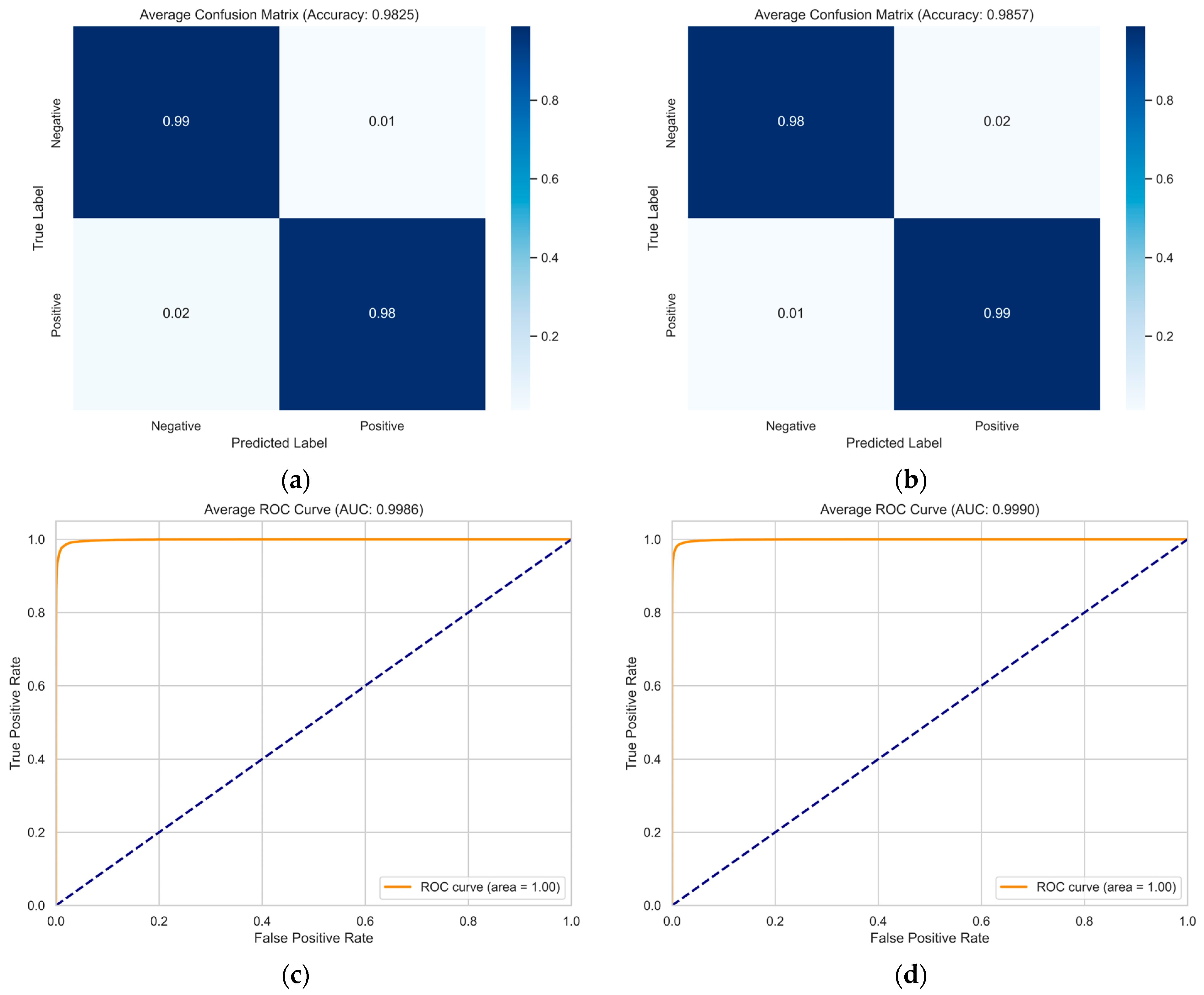

4.4. Visual Analysis and Robustness Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EEG | Electroencephalogram |
| ECG | Electrocardiogram |
| EOG | Electrooculogram |
| GSR | Galvanic skin response |
| Bio | Biological |

References

1. Lu, B.; Zhang, Y.; Zheng, W. A Survey of Affective Brain-Computer Interface. Chin. J. Intell. Sci. Technol. 2021, 3, 36–48.

2. De Nadai, S.; D’Inca, M.; Parodi, F.; Benza, M.; Trotta, A.; Zero, E.; Zero, L.; Sacile, R. Enhancing Safety of Transport by Road by On-Line Monitoring of Driver Emotions. In Proceedings of the 2016 11th System of Systems Engineering Conference (SoSE), Kongsberg, Norway, 12–16 June 2016; IEEE: New York, NY, USA, 2016; pp. 1–4.

3. Bhatti, U.A.; Huang, M.; Wu, D.; Zhang, Y.; Mehmood, A.; Han, H. Recommendation System Using Feature Extraction and Pattern Recognition in Clinical Care Systems. Enterp. Inf. Syst. 2019, 13, 329–351.

4. Guo, R.; Li, S.; He, L.; Gao, W.; Qi, H.; Owens, G. Pervasive and Unobtrusive Emotion Sensing for Human Mental Health. In Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013; IEEE: New York, NY, USA, 2013.

5. Abdullah, S.M.S.A.; Ameen, S.Y.A.; Sadeeq, M.A.M.; Zeebaree, S. Multimodal Emotion Recognition Using Deep Learning. J. Appl. Sci. Technol. Trends 2021, 2, 73–79.

6. Wang, Y.; Song, W.; Tao, W.; Liotta, A.; Yang, D.; Li, X.; Gao, S.; Sun, Y.; Ge, W.; Zhang, W.; et al. A Systematic Review on Affective Computing: Emotion Models, Databases, and Recent Advances. Inf. Fusion 2022, 83, 19–52.

7. Meena, G.; Mohbey, K.K.; Indian, A.; Khan, M.Z.; Kumar, S. Identifying Emotions from Facial Expressions Using a Deep Convolutional Neural Network-Based Approach. Multimed. Tools Appl. 2023, 83, 15711–15732.

8. Chowdary, M.K.; Nguyen, T.N.; Hemanth, D.J. Deep Learning-Based Facial Emotion Recognition for Human–Computer Interaction Applications. Neural Comput. Appl. 2023, 35, 23311–23328.

9. Minaee, S.; Abdolrashidi, A. Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network. Sensors 2021, 21, 3046.

10. Zhu, J.-Y.; Zheng, W.-L.; Lu, B.-L. Cross-Subject and Cross-Gender Emotion Classification from EEG. In World Congress on Medical Physics and Biomedical Engineering, June 7–12, 2015, Toronto, Canada; Jaffray, D.A., Ed.; IFMBE Proceedings; Springer International Publishing: Cham, Switzerland, 2015; Volume 51, pp. 1188–1191. ISBN 978-3-319-19386-1.

11. Bhatti, A.M.; Majid, M.; Anwar, S.M.; Khan, B. Human Emotion Recognition and Analysis in Response to Audio Music Using Brain Signals. Comput. Hum. Behav. 2016, 65, 267–275.

12. Algarni, M.; Saeed, F.; Al-Hadhrami, T.; Ghabban, F.; Al-Sarem, M. Deep Learning-Based Approach for Emotion Recognition Using Electroencephalography (EEG) Signals Using Bi-Directional Long Short-Term Memory (Bi-LSTM). Sensors 2022, 22, 2976.

13. Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Wahby Shalaby, M.A. A 3D-Convolutional Neural Network Framework with Ensemble Learning Techniques for Multi-Modal Emotion Recognition. Egypt. Inform. J. 2021, 22, 167–176.

14. Siddharth; Jung, T.-P.; Sejnowski, T.J. Utilizing Deep Learning Towards Multi-Modal Bio-Sensing and Vision-Based Affective Computing. IEEE Trans. Affect. Comput. 2022, 13, 96–107.

15. Huang, Y.; Yang, J.; Liu, S.; Pan, J. Combining Facial Expressions and Electroencephalography to Enhance Emotion Recognition. Future Internet 2019, 11, 105.

16. Xiang, G.; Yao, S.; Deng, H.; Wu, X.; Wang, X.; Xu, Q.; Yu, T.; Wang, K.; Peng, Y. A Multi-Modal Driver Emotion Dataset and Study: Including Facial Expressions and Synchronized Physiological Signals. Eng. Appl. Artif. Intell. 2024, 130, 107772.

17. Cui, R.; Chen, W.; Li, M. Emotion Recognition Using Cross-Modal Attention from EEG and Facial Expression. Knowl.-Based Syst. 2024, 304, 112587.

18. Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. ConvNeXt V2: Co-Designing and Scaling ConvNets with Masked Autoencoders. In Proceedings of the Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16133–16142.

19. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

20. Ullah, I.; Hussain, M.; Qazi, E.-H.; Aboalsamh, H. An Automated System for Epilepsy Detection Using EEG Brain Signals Based on Deep Learning Approach. Expert Syst. Appl. 2018, 107, 61–71.

21. He, Y.; Zhao, J. Temporal Convolutional Networks for Anomaly Detection in Time Series. J. Phys. Conf. Ser. 2019, 1213, 042050.

22. Sripada, C.; Angstadt, M.; Kessler, D.; Phan, K.L.; Liberzon, I.; Evans, G.W.; Welsh, R.C.; Kim, P.; Swain, J.E. Volitional Regulation of Emotions Produces Distributed Alterations in Connectivity between Visual, Attention Control, and Default Networks. NeuroImage 2014, 89, 110–121.

23. Adolphs, R. Neural Systems for Recognizing Emotion. Curr. Opin. Neurobiol. 2002, 12, 169–177.

24. Min, J.; Nashiro, K.; Yoo, H.J.; Cho, C.; Nasseri, P.; Bachman, S.L.; Porat, S.; Thayer, J.F.; Chang, C.; Lee, T.-H.; et al. Emotion Downregulation Targets Interoceptive Brain Regions While Emotion Upregulation Targets Other Affective Brain Regions. J. Neurosci. 2022, 42, 2973–2985.

25. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

26. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

27. Bulat, A.; Tzimiropoulos, G. How Far Are We From Solving the 2D & 3D Face Alignment Problem? (And a Dataset of 230,000 3D Facial Landmarks). In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1021–1030.

28. Hu, X.; Chen, C.; Yang, Z.; Liu, Z. Reliable, Large-Scale, and Automated Remote Sensing Mapping of Coastal Aquaculture Ponds Based on Sentinel-1/2 and Ensemble Learning Algorithms. Expert Syst. Appl. 2025, 293, 128740.

29. Yuvaraj, R.; Thagavel, P.; Thomas, J.; Fogarty, J.; Ali, F. Comprehensive Analysis of Feature Extraction Methods for Emotion Recognition from Multichannel EEG Recordings. Sensors 2023, 23, 915.

30. Li, R.; Liang, Y.; Liu, X.; Wang, B.; Huang, W.; Cai, Z.; Ye, Y.; Qiu, L.; Pan, J. MindLink-Eumpy: An Open-Source Python Toolbox for Multimodal Emotion Recognition. Front. Hum. Neurosci. 2021, 15, 621493.

31. Zhang, Y.; Liu, H.; Zhang, D.; Chen, X.; Qin, T.; Zheng, Q. EEG-Based Emotion Recognition With Emotion Localization via Hierarchical Self-Attention. IEEE Trans. Affect. Comput. 2023, 14, 2458–2469.

| Attribute | DEAP | MAHNOB-HCI |
|---|---|---|
| Subjects | 32 | 27 |
| Available channels | 40 | 38 |
| Length of each trial | 60 s | 49–117 s |
| Trials per subject | 40 | 20 |
| Emotional description | Valence, Arousal | Valence, Arousal |

| Channel Number | Channel Name | Channel Number | Channel Name |
|---|---|---|---|
| 1 | Fp1 | 17 | Fp2 |
| 2 | AF3 | 18 | AF4 |
| 3 | F3 | 19 | Fz |
| 4 | F7 | 20 | F4 |
| 5 | FC5 | 21 | F8 |
| 6 | FC1 | 22 | FC6 |
| 7 | C3 | 23 | FC2 |
| 8 | T7 | 24 | Cz |
| 9 | CP5 | 25 | C4 |
| 10 | CP1 | 26 | T8 |
| 11 | P3 | 27 | CP6 |
| 12 | P7 | 28 | CP2 |
| 13 | PO3 | 29 | P4 |
| 14 | O1 | 30 | P8 |
| 15 | Oz | 31 | PO4 |
| 16 | Pz | 32 | O2 |

| Dataset | Authors | Valence Accuracy | Arousal Accuracy |
|---|---|---|---|
| DEAP | Yuvaraj et al. [29] | 78.18% | 79.90% |
| DEAP | Huang et al. [15] | 80.30% | 74.23% |
| DEAP | Li et al. [30] | 71.00% | 58.75% |
| DEAP | Zhang et al. [31] | 72.89% | 77.03% |
| DEAP | Siddharth et al. [14] | 79.52% | 78.34% |
| DEAP | Ours | 95.10% | 95.65% |
| MAHNOB-HCI | Yuvaraj et al. [29] | 83.98% | 85.58% |
| MAHNOB-HCI | Huang et al. [15] | 75.21% | 75.63% |
| MAHNOB-HCI | Li et al. [30] | 70.04% | 72.14% |
| MAHNOB-HCI | Zhang et al. [31] | 79.90% | 81.37% |
| MAHNOB-HCI | Siddharth et al. [14] | 85.49% | 82.93% |
| MAHNOB-HCI | Ours | 97.28% | 97.73% |

| Dataset | Dimension | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| DEAP | Valence | 95.10 | 95.21 | 95.84 | 95.52 |
| DEAP | Arousal | 95.65 | 95.89 | 96.82 | 96.35 |
| MAHNOB-HCI | Valence | 97.28 | 97.67 | 97.33 | 97.50 |
| MAHNOB-HCI | Arousal | 97.73 | 97.51 | 97.98 | 97.74 |
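As a quick consistency check on the metrics table above, the short sketch below recomputes each F1-score as the harmonic mean of the reported precision and recall, F1 = 2PR / (P + R). The numbers are copied from the table; the helper name `f1_from` and the dictionary layout are illustrative choices, and how the authors actually computed or averaged their metrics is not stated in this section.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


# (precision, recall, reported F1) in percent, copied from the table.
reported = {
    ("DEAP", "Valence"): (95.21, 95.84, 95.52),
    ("DEAP", "Arousal"): (95.89, 96.82, 96.35),
    ("MAHNOB-HCI", "Valence"): (97.67, 97.33, 97.50),
    ("MAHNOB-HCI", "Arousal"): (97.51, 97.98, 97.74),
}

recomputed = {
    key: round(f1_from(p, r), 2) for key, (p, r, _) in reported.items()
}

for key, (_, _, f1_reported) in reported.items():
    print(key, "reported:", f1_reported, "recomputed:", recomputed[key])
```

For all four rows the recomputed value agrees with the reported F1-score to two decimal places, so the F1 column is consistent with the precision and recall columns.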

| Dataset | Modality | Valence Accuracy (%) | Arousal Accuracy (%) | Valence F1-Score (%) | Arousal F1-Score (%) |
|---|---|---|---|---|---|
| DEAP | Bio | 64.99 | 64.34 | 72.89 | 75.42 |
| DEAP | Face | 90.22 | 91.40 | 93.91 | 95.49 |
| DEAP | Face + Bio | 95.10 | 95.65 | 95.52 | 96.35 |
| MAHNOB-HCI | Bio | 79.89 | 88.42 | 82.40 | 88.55 |
| MAHNOB-HCI | Face | 96.23 | 96.05 | 96.55 | 96.08 |
| MAHNOB-HCI | Face + Bio | 97.28 | 97.73 | 97.50 | 97.74 |
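To make the ablation table above easier to read, the sketch below computes how many percentage points of accuracy the fused model (the combined face and bio row) gains over the better of the two single-modality models. The accuracies are copied from the table; the dictionary keys and the variable name `gains` are illustrative only.

```python
# (valence accuracy, arousal accuracy) in percent, copied from the table.
accuracy = {
    "DEAP": {
        "bio": (64.99, 64.34),
        "face": (90.22, 91.40),
        "fused": (95.10, 95.65),
    },
    "MAHNOB-HCI": {
        "bio": (79.89, 88.42),
        "face": (96.23, 96.05),
        "fused": (97.28, 97.73),
    },
}

gains = {}
for dataset, rows in accuracy.items():
    per_dimension = []
    for i in range(2):  # 0 = valence, 1 = arousal
        best_single = max(rows["bio"][i], rows["face"][i])
        per_dimension.append(round(rows["fused"][i] - best_single, 2))
    gains[dataset] = tuple(per_dimension)

for dataset, (valence_gain, arousal_gain) in gains.items():
    print(dataset, "valence gain:", valence_gain, "arousal gain:", arousal_gain)
```

In every case the stronger single modality is the facial branch, and fusion adds roughly 4 to 5 points on DEAP and 1 to 2 points on MAHNOB-HCI.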


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, J.; Ru, Y.; Lei, B.; Chen, H. GBV-Net: Hierarchical Fusion of Facial Expressions and Physiological Signals for Multimodal Emotion Recognition. Sensors 2025, 25, 6397. https://doi.org/10.3390/s25206397
