Abstract

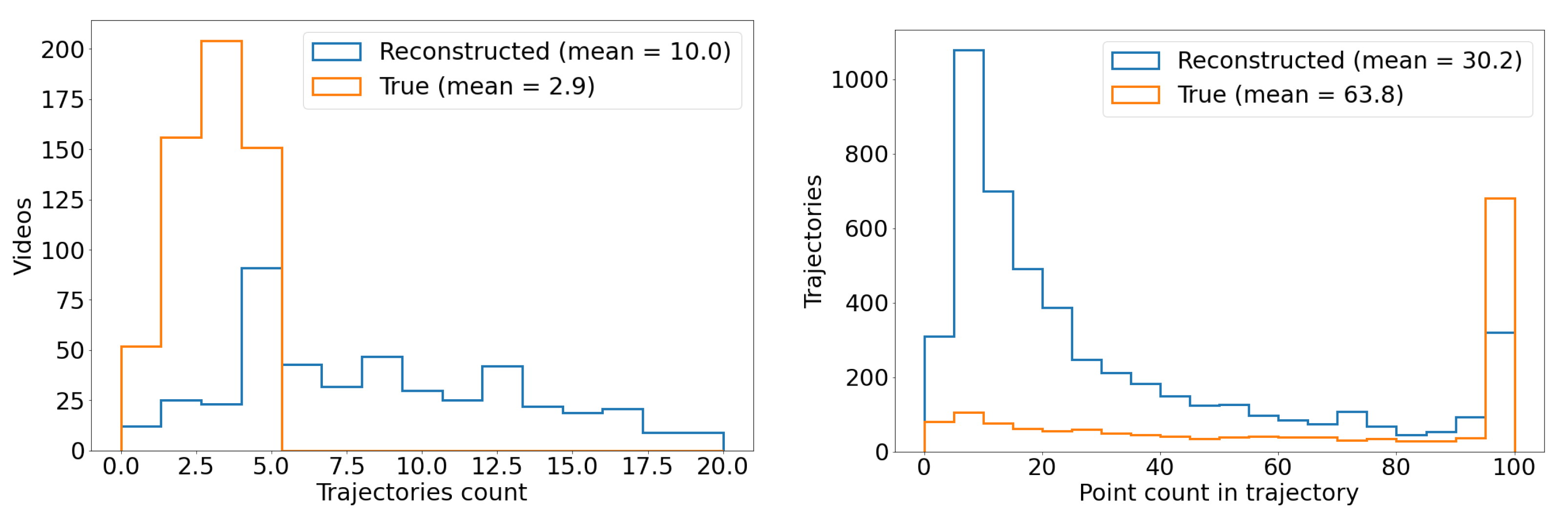

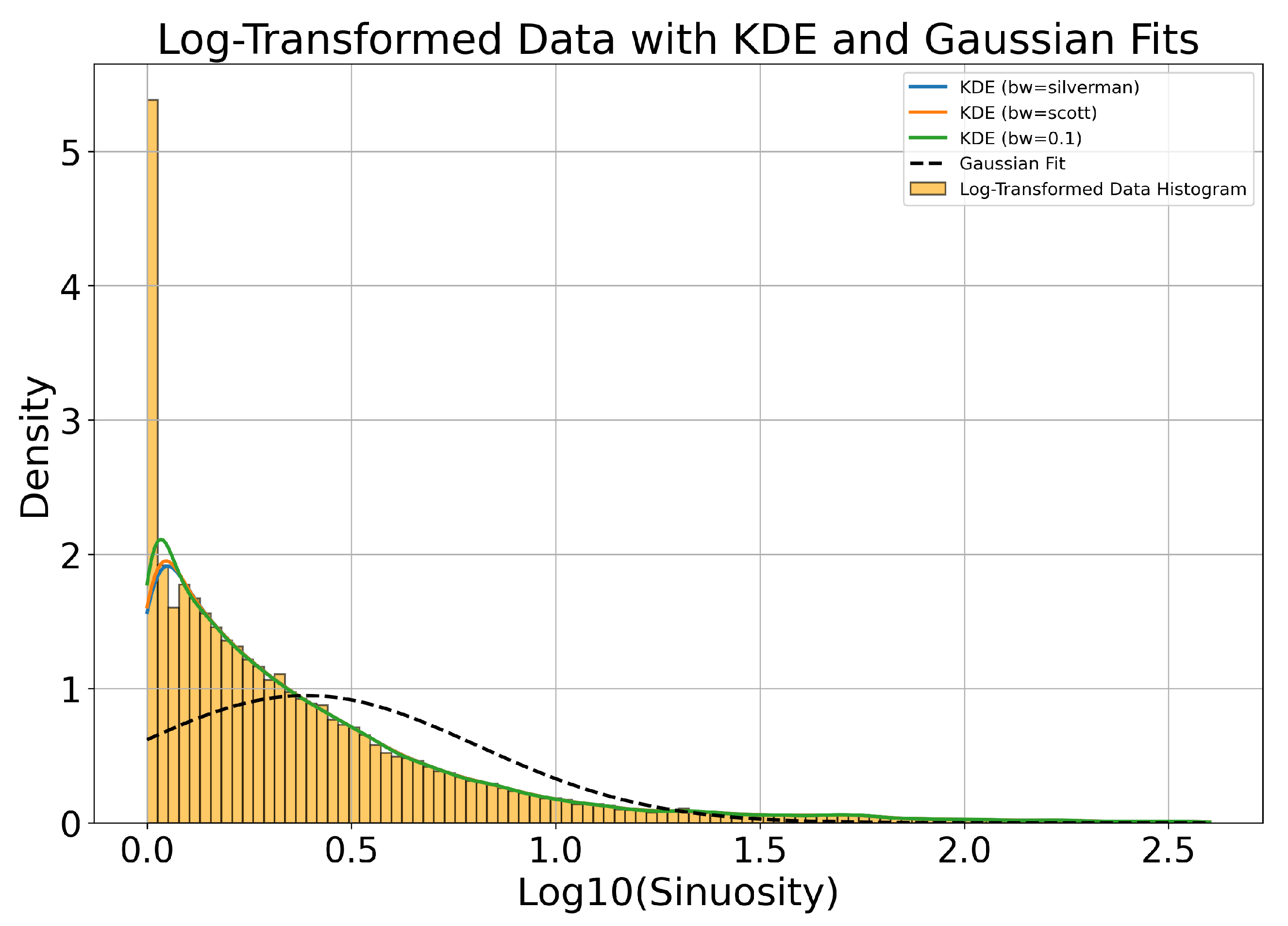

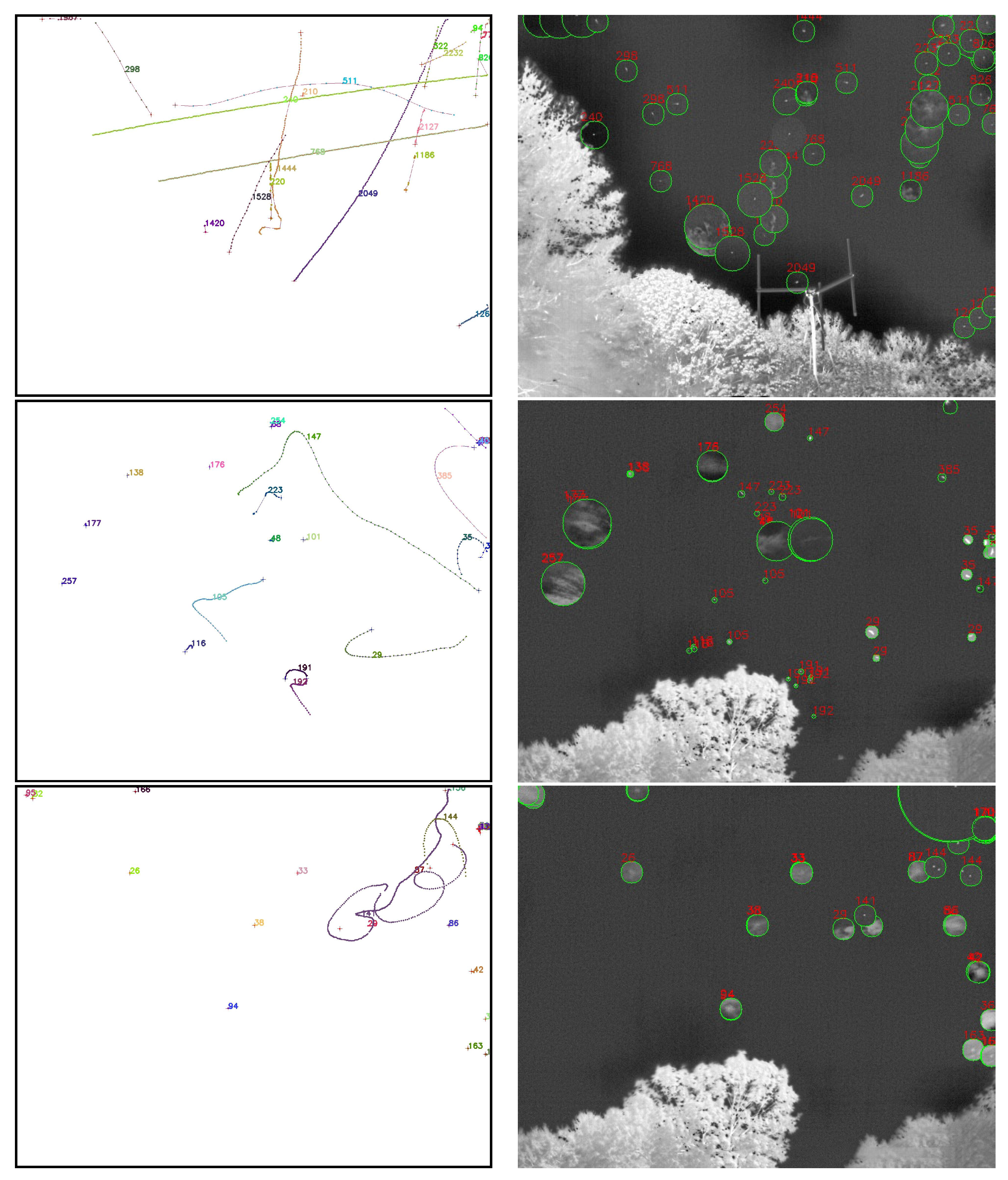

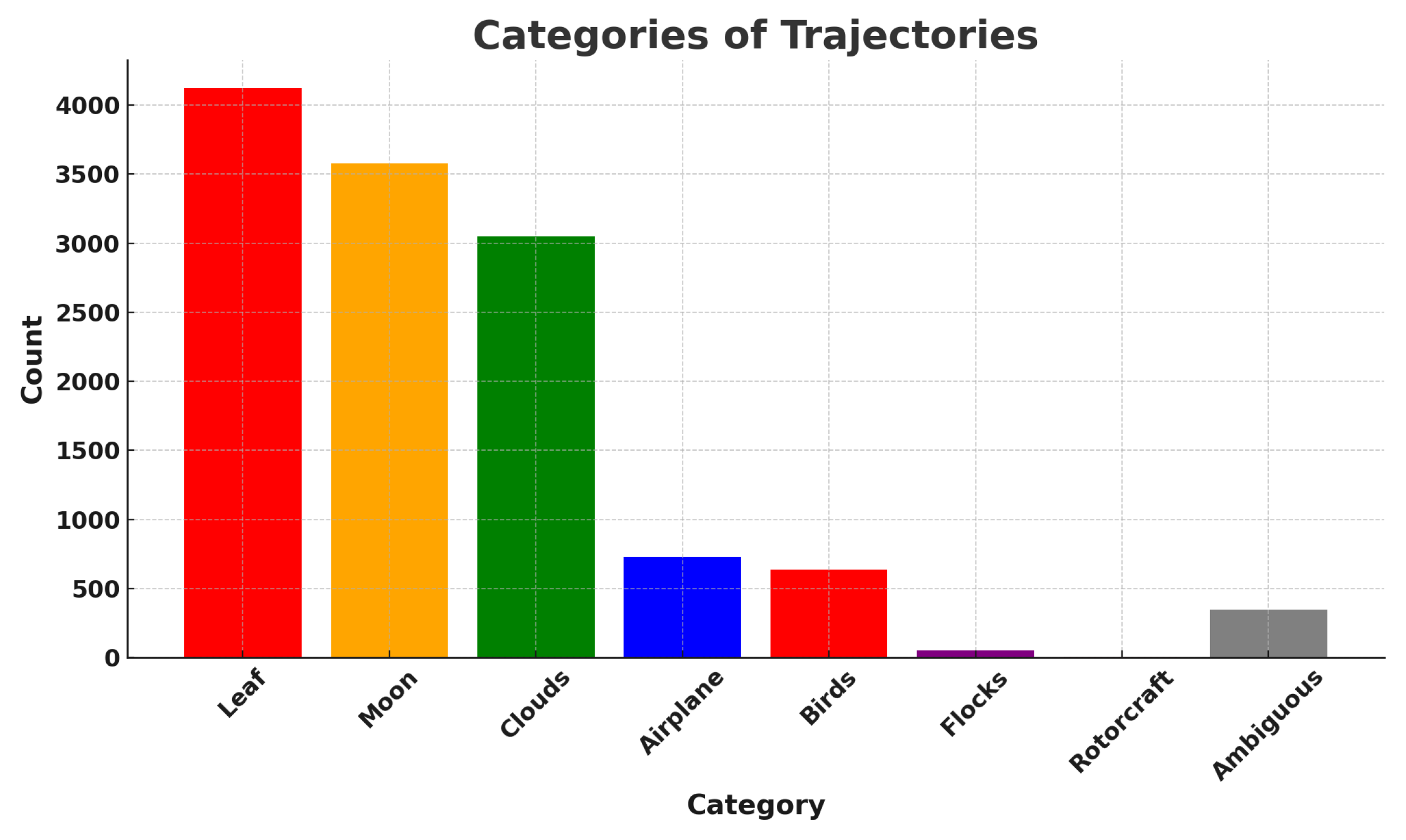

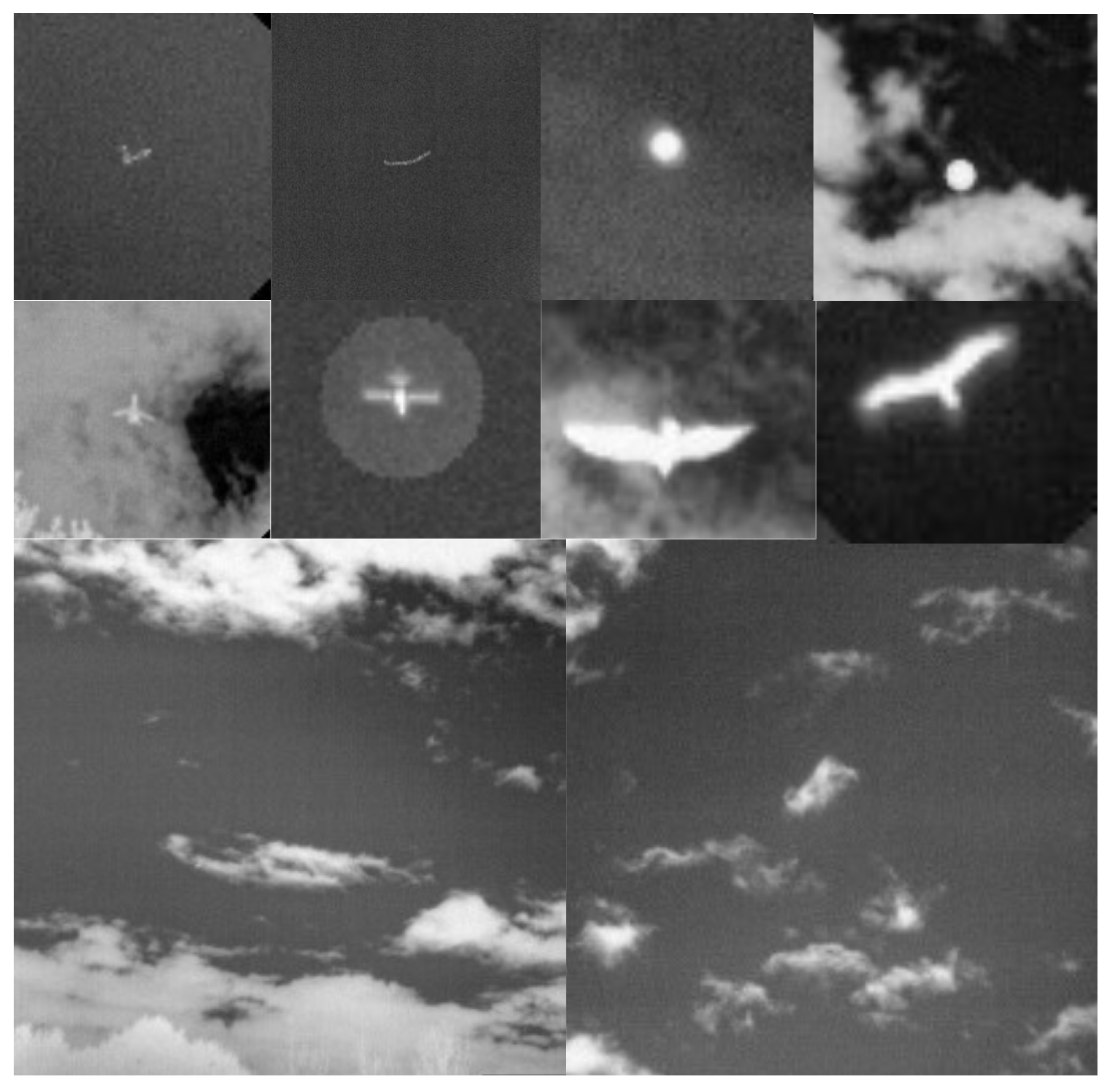

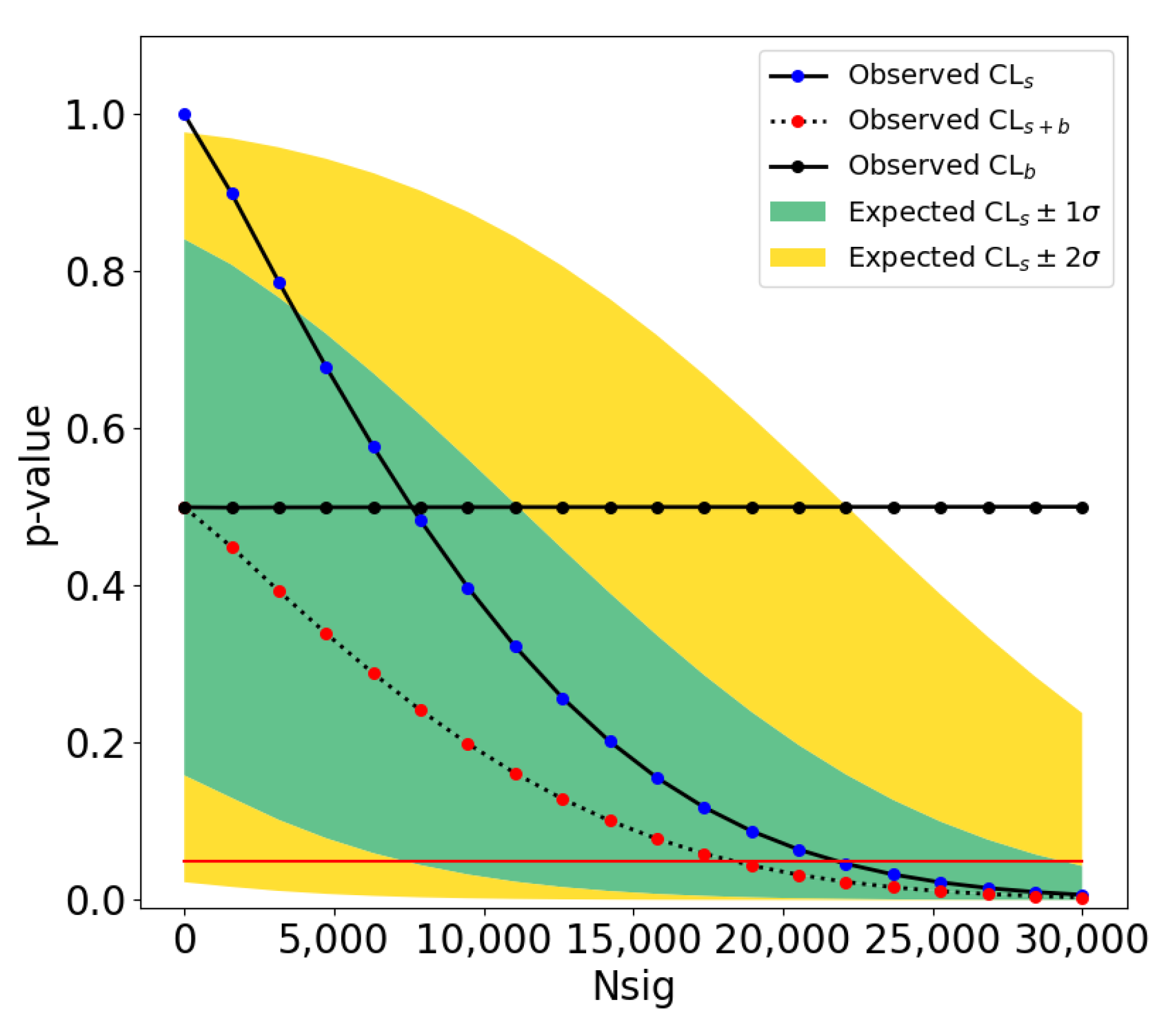

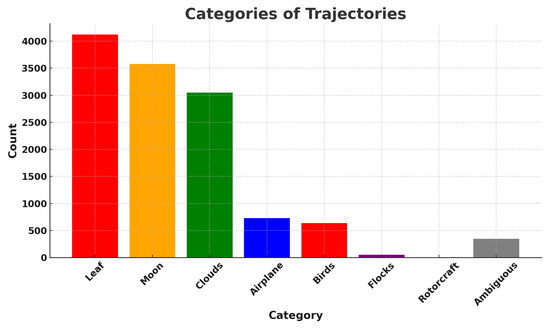

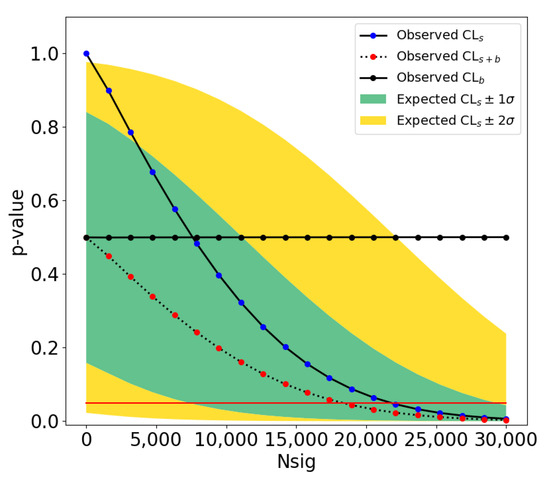

To date, there is little publicly available scientific data on unidentified aerial phenomena (UAP) whose properties and kinematics purportedly reside outside the performance envelope of known phenomena. To address this deficiency, the Galileo Project is designing, building, and commissioning a multi-modal, multi-spectral ground-based observatory to continuously monitor the sky and collect data for UAP studies via a rigorous long-term aerial census of all aerial phenomena, including natural and human-made. One of the key instruments is an all-sky infrared camera array using eight uncooled long-wave-infrared FLIR Boson 640 cameras. In addition to performing intrinsic and thermal calibrations, we implement a novel extrinsic calibration method using airplane positions from Automatic Dependent Surveillance–Broadcast (ADS-B) data that we collect synchronously on site. Using a You Only Look Once (YOLO) machine learning model for object detection and the Simple Online and Realtime Tracking (SORT) algorithm for trajectory reconstruction, we establish a first baseline for the performance of the system over five months of field operation. Using an automatically generated real-world dataset derived from ADS-B data, a dataset of synthetic 3D trajectories, and a hand-labeled real-world dataset, we find an acceptance rate (fraction of in-range airplanes passing through the effective field of view of at least one camera that are recorded) of 41% for ADS-B-equipped aircraft, and a mean frame-by-frame aircraft detection efficiency (fraction of recorded airplanes in individual frames which are successfully detected) of 36%. The detection efficiency is heavily dependent on weather conditions, range, and aircraft size. Approximately 500,000 trajectories of various aerial objects are reconstructed from this five-month commissioning period. These trajectories are analyzed with a toy outlier search focused on the large sinuosity of apparent 2D reconstructed object trajectories. About 16% of the trajectories are flagged as outliers and manually examined in the IR images. From these ∼80,000 outliers and 144 trajectories remain ambiguous, which are likely mundane objects but cannot be further elucidated at this stage of development without information about distance and kinematics or other sensor modalities. We demonstrate the application of a likelihood-based statistical test to evaluate the significance of this toy outlier analysis. Our observed count of ambiguous outliers combined with systematic uncertainties yields an upper limit of 18,271 outliers for the five-month interval at a 95% confidence level. This test is applicable to all of our future outlier searches.

1. Introduction

There is currently very little publicly available scientific-grade data on unidentified aerial phenomena (UAP) whose properties and kinematics purportedly reside outside the performance envelope of known phenomena. Several U.S. federal agencies have weighed in on this issue. The Office of the Director of National Intelligence (ODNI)’s annual UAP reports [1,2] have advocated since 2021 for bolstered data collection initiatives and increased resource allocation in order to scrutinize unexplained aerial phenomena that unambiguously pose aviation safety issues and potentially challenge U.S. national security. In 2023, NASA released an independent study [3] which emphasized how studying the phenomena using passive sensing “with multiple, well-calibrated sensors is paramount” and that “multispectral data” need to be collected “as part of a rigorous data acquisition campaign”. In addition, they suggested that “machine learning algorithms could be incorporated to detect and analyze UAP in real-time.” The Galileo Project is designing, building, and commissioning a passive, multi-modal, multi-spectral ground-based observatory to continuously monitor the sky and conduct an exhaustive observational long-term survey in search of measurable anomalous phenomena [4,5,6,7,8]. This long-term field observation effort fits many recommendations from the NASA study and represents “a complex undertaking whose outcome could allow for substantial and systematic gathering of UAP data as well as a robust characterization of the background” [3].

We have designed and built the first observatory at our development site in Massachusetts [4], and we are now commissioning instruments for each of the sensor modalities separately (optical sensors in the infrared, visible, and ultraviolet; acoustic; radio spectrum; magnetic field strength; charged particle count; and weather) and in combination to validate and benchmark their performance characteristics. Once commissioned, we will commence collecting scientific-grade data and begin to quantitatively identify classes of objects and statistical outliers that are corroborated over time. We can generate testable hypotheses to account for any novel class discovered in this way, which, after further investigation and possible instrument refinements, may result in the discovery of scientific anomalies, i.e., statistical outliers that resist explanation in terms of prevailing scientific beliefs, representing objects and phenomena that are unknown to science [4]. We can also derive upper bounds for the occurrence rate of outliers, novel classes, and anomalies at our development site. As the instruments of our observatory are commissioned, we will make copies and distribute them to additional sites. Such a network of observatories will enable us to monitor a larger volume of the sky. This will not only increase our likelihood of novel class discovery, but also open the possibility of scientific measurement of the UAP event rate and spatio-temporal distribution, which to date has never been achieved over significant time and spatial scales.

1.1. Related Work

For a review of relevant historical field work and related studies, we refer the reader to section 2.3 of our original design paper [4], and to [9]. A variety of related contemporary work exists, but academic, instrumented, field efforts focused on UAP are rare. These include the automated, roof-top, and continuously recording system of optical and infrared cameras developed by the Interdisciplinary Research Centre for Extraterrestrial Studies at Julius-Maximilians-Universität Würzburg in Germany [10,11,12]; a one week field study on and near Catalina Island in 2021, conducted by UAPx, led by a group of academic scientists from the University of Albany, using optical and thermal infrared cameras, and high-energy particle detectors [13]; a long-term effort re-purposing data from existing aerial monitoring networks that is underway by “Sigma2” (the UAP technical commission of the learned society, Association Aeronautique et Astronautique de France), which makes use of active and passive bistatic radars, visible and thermal infrared cameras, optical and radio meteoroid surveillance networks (including FRIPON [14], operated by the Institute of Celestial Mechanics and Ephemeris Calculation of the Paris Observatory), gravimeter and magnetometer networks, as well as IR and optical imaging satellites [15]; and the search for fast unexplained transients in photographic plates in historic sky surveys [16], also known as the Vanishing and Appearing Sources during a Century of Observations (VASCO) project, based at Stockholm University.

1.2. Galileo Project Commissioning Approach

Our observational effort is unique, with its high concentration of sensor modalities designed for long-term operation in outdoor field conditions. We require careful scientific calibration and commissioning to ensure a clear understanding of the reliability, operational environment, and detection volumes of individual instruments and combinations of instruments. Each instrument of our observatory goes through four distinct phases: development, integration and testing, commissioning, and science. Commissioning is a final group of activities (generally performed in situ after deployment) that aims to bring a system into an operational condition by ensuring it meets key requirements and science expectations. Meeting basic system requirements is verification, while confirming science gathering expectations is validating the system. Commissioning activities include calibrations, performance characterizations, functionality checks, and data processing evaluations, and are undertaken for both instruments and infrastructure generally on a system-level basis, but may occur on subsystems or individual components as needed.

Our approach is primarily driven by a “top-down” paradigm whereby our observatory goals (e.g., an all-sky survey, object triangulation, autonomous data processing with accurate object detection, remote autonomous operations, etc.) taken from our Science Traceability Matrix (STM) [4] will drive observation plans and then specific instrument and operational use cases. These will in turn form the basis of test cases and data collection campaigns that, when evaluated as successful, act as “proof” of performance. Additionally, observatory commissioning will be step-wise on an instrument-by-instrument basis (following the approach commonly used for NASA science missions), with the advantage of collecting scientific data as early as possible. Commissioning is also “end-to-end”, from sensing to data product production, meaning that it encompasses the evaluation not only of sensor calibrations but also of final data processing pipelines and the accuracy and utility of the final data products.

Importantly, although we define the completion of commissioning as the demarcation between instrument development and testing vs. scientific data gathering operations, it is typically the case for scientific observatories that commissioning is just the beginning of a continuous improvement process as more is learned about how the instruments and systems perform under in situ operating conditions. Continued fine-tuning of instruments, improved data handling and processing, better algorithms for science data analysis, new and better data reduction products, better tools for performing remote observatory monitoring and control, etc., are all expected well after completion of initial planned commissioning.

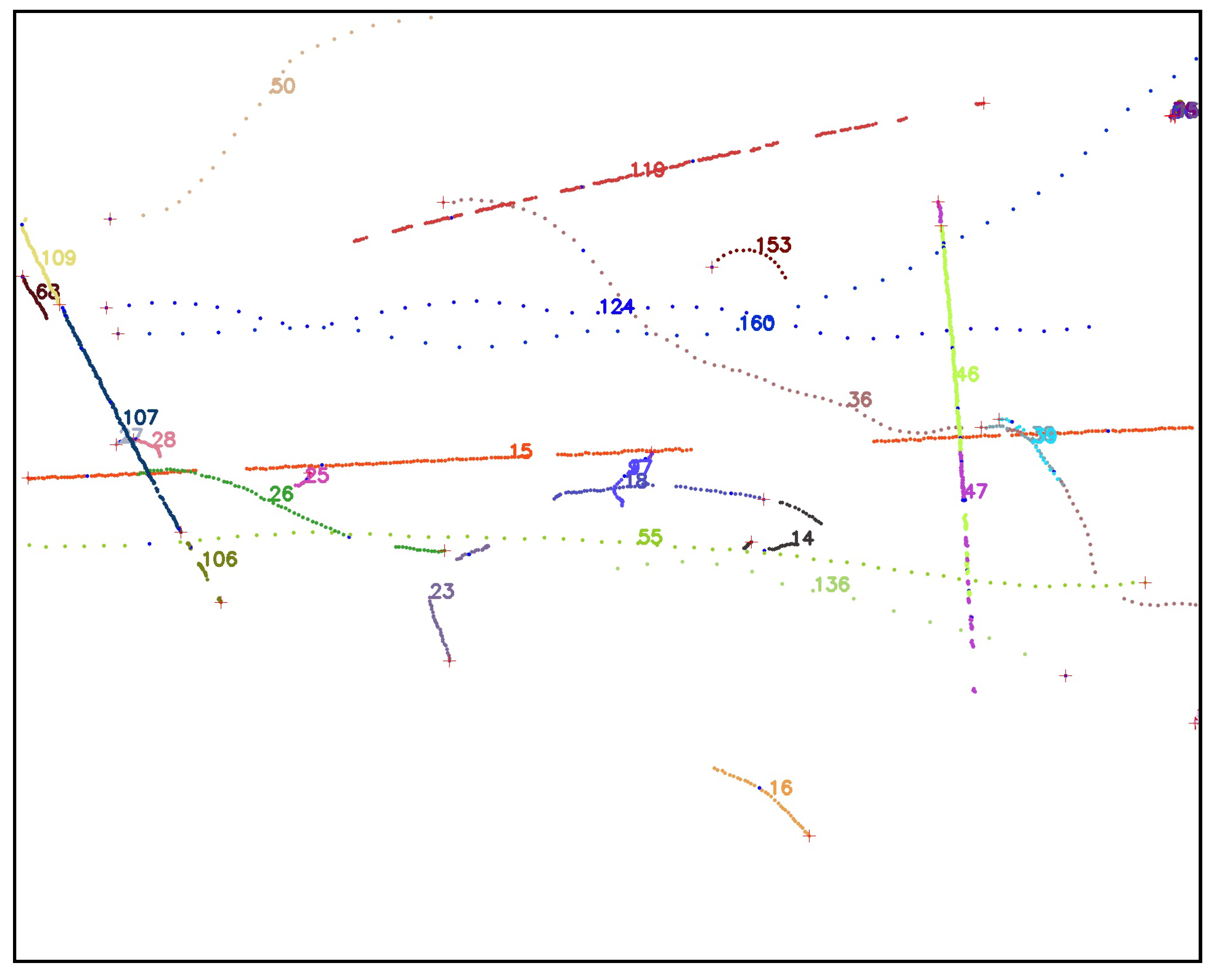

Our all-sky infrared camera array with eight uncooled long-wave-infrared FLIR Boson 640 cameras, nicknamed “Dalek”, is the first instrument of our first observatory to enter the commissioning phase. This paper describes the commissioning process and results. In Section 2, we describe the Dalek design, the camera calibrations, and the algorithms to detect and reconstruct object trajectories. In Section 3, we report on commissioning studies of the Dalek and the full system, including the detection pipeline. We also propose that UAP instrument-driven studies should adopt likelihood-based statistical tests to evaluate the significance of outlier searches. Section 3.4.2 showcases a toy outlier analysis, i.e., a deliberately simplistic outlier search which can be used to illustrate the application of likelihood-based statistical tests to future outlier searches. This simplified outlier analysis searches for reconstructed object trajectories with large sinuosity in our commissioning dataset.

This paper describes the innovative commissioning steps developed and used to bring a new type of all-sky infrared camera that we designed [6] into full scientific field operation in the search for aerial anomalies. These contributions can be applied to the commissioning of any all-sky infrared system, and include (a) the development of an innovative extrinsic calibration method for infrared cameras using ADS-B-equipped aircraft (stars are not imaged in infrared and the usual celestial methods cannot be applied); (b) the novel use of ADS-B-derived data to automatically establish the performance envelopes of an all-sky infrared camera array system, and as functions of atmospheric weather conditions, distance, and aircraft size; (c) an aerial census of the sky above our test site over the commissioning period of five months, which (d) enables an evaluation of our detection and tracking pipeline on manually labeled real-world videos and connects the recorded dataset with environmental and instrumental effects; and (e) a demonstration of robust quantification of the uncertainties in our search for aerial anomalies via a generalizable likelihood-based method to measure the significance of any given outlier search.

2. Materials and Methods

The reconstruction of an aerial object transiting in range of our observatory is defined as the process of identifying and characterizing the original object, its properties and kinematics, from the different sensors’ signals and the multiple outputs of the signals analysis pipeline, which can include, for example, the reconstructed object’s trajectory. This section aims to present our current methods for reconstructing aerial objects using solely the infrared camera array, whose details are described in Section 2.1. Section 2.2 presents the different calibrations applied to the cameras, including intrinsic, extrinsic, and thermal calibration. Section 2.3 summarizes the reconstruction of aerial events, for which we use a mixture of machine learning and traditional object tracking algorithms, which are evaluated in this section. Also in Section 2.3, we have summarized the datasets referenced throughout the paper for performance evaluations.

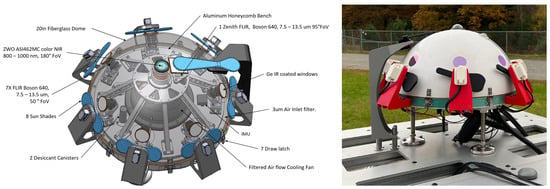

2.1. The Dalek Infrared Camera Array

One of the core modalities of the ground-based observatories is a suite of weatherproofed optical sensors which can be used to detect, track, and classify objects in the entire sky at multiple wavelengths: visible, infrared (IR), and ultraviolet (UV). One of the flagship instruments is a custom all-sky array of eight, long-wavelength-IR (LWIR) cameras. It is a hemispherical array of seven IR cameras with one additional IR camera pointing towards the zenith, which provide a 360° azimuth by +80° elevation view of the environment, as shown in Figure 1. This figure also shows a near-infrared camera ZWO ASI462MC which is built into the Dalek but is not yet being used, and thus is outside the scope of this paper.

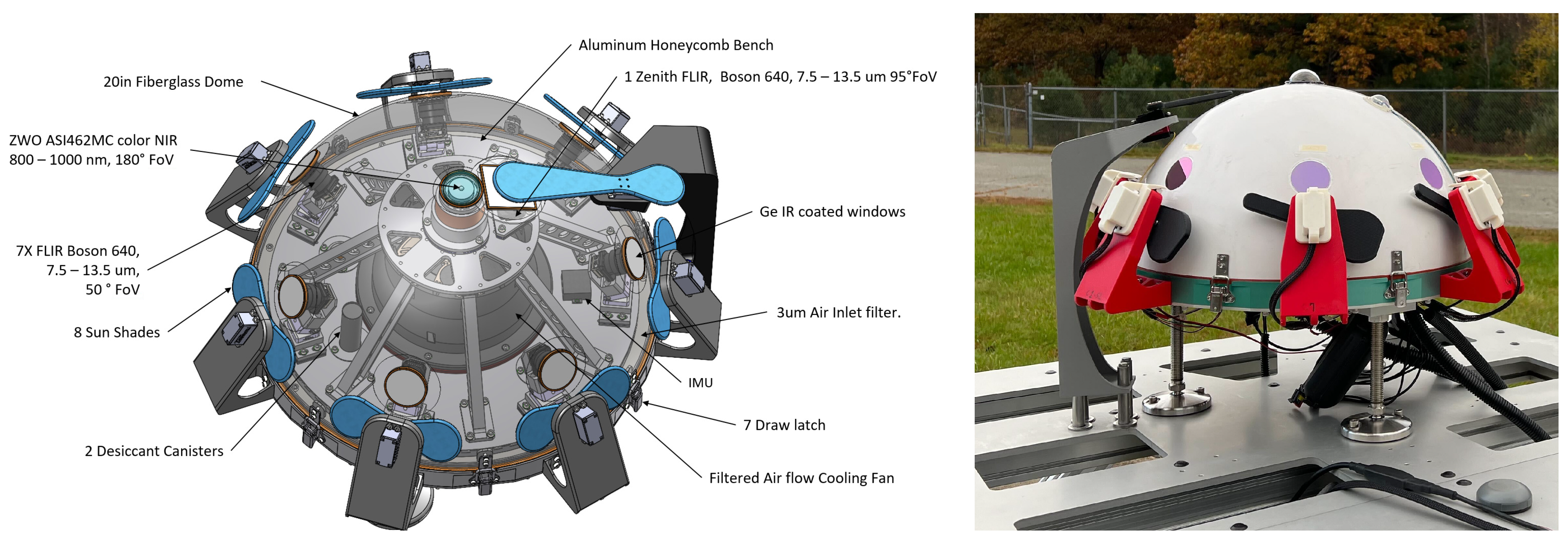

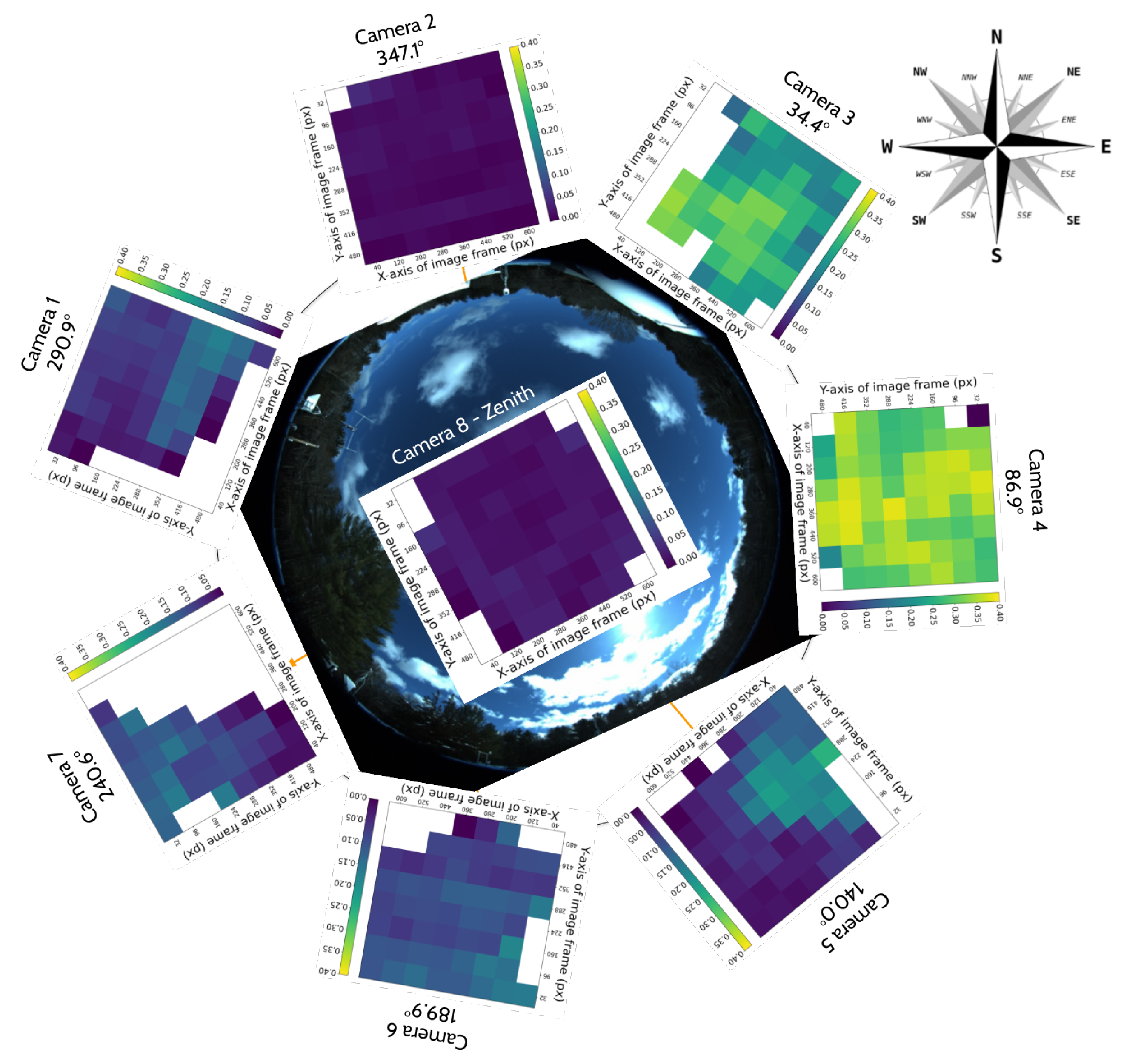

Figure 1.

(Left): Mechanical design drawing of the Dalek IR camera array. (Right): Photograph of the Dalek as constructed at the development site.

When designing the all-sky infrared array, we considered from four to fourteen cameras. The camera count of eight was selected to allow for high angular resolution, which translates into a larger detection volume, and for enhanced ability to resolve details, while balancing cost considerations. We are also developing a lower-cost and less complex four-camera array and we will compare its performance to the eight-camera array in the future.

The prototype has been deployed to our development site in Massachusetts. This site is surrounded by forest and within 5 miles of a regional airport, which ensures a regular flow of airplanes above the Dalek that we can harness for both calibration and commissioning.

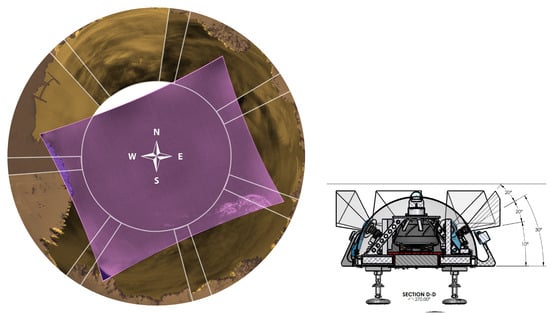

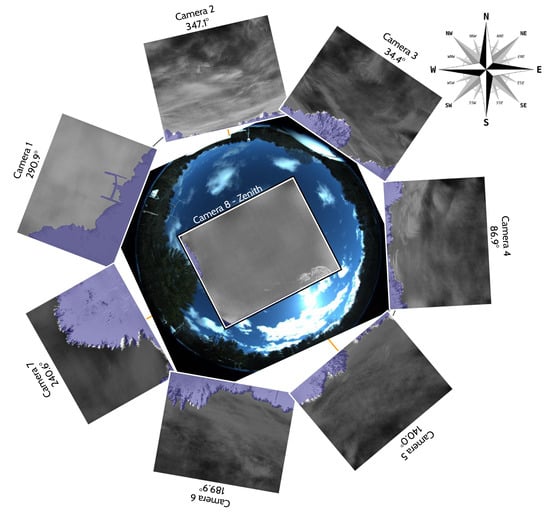

The seven IR cameras in the hemispherical array are FLIR LWIR Boson 640 × 512 (spectral band 7.5–13.5 m, focal length 8.7 mm, inverse relative aperture of F/1). Their field of view (FOV) is 50° × 40° (horizontal and vertical). The center of their optical axis is pointing 30° above the horizon such that the bottom of the image taken by these cameras corresponds to 10° above the horizon. As a result, adjacent cameras have overlapping FOVs, as shown in the left of Figure 2. The zenith camera is an FLIR LWIR Boson 640 × 512 (spectral band 7.5–13.5 m, focal length 4.9 mm, inverse relative aperture of F/1.1) with an FOV of 95° × 72°. Figure 3 shows a mosaic of the views of the eight cameras in the array, oriented with respect to true north in map view. Each camera has trees and vegetation in the lower part of its FOV; the same figure also overlays in semi-translucent purple the treeline masks that we use in post-processing to only analyze the sky area of the images. These masks are initially generated using the recent pre-trained Segment Anything Model (SAM) [17] machine learning model, which classifies every pixel of an image into different semantic categories. The enclosure is a fiberglass dome, which provides weatherization. The IR cameras are set behind germanium windows and can be protected from direct sunlight by programmable sunshades.

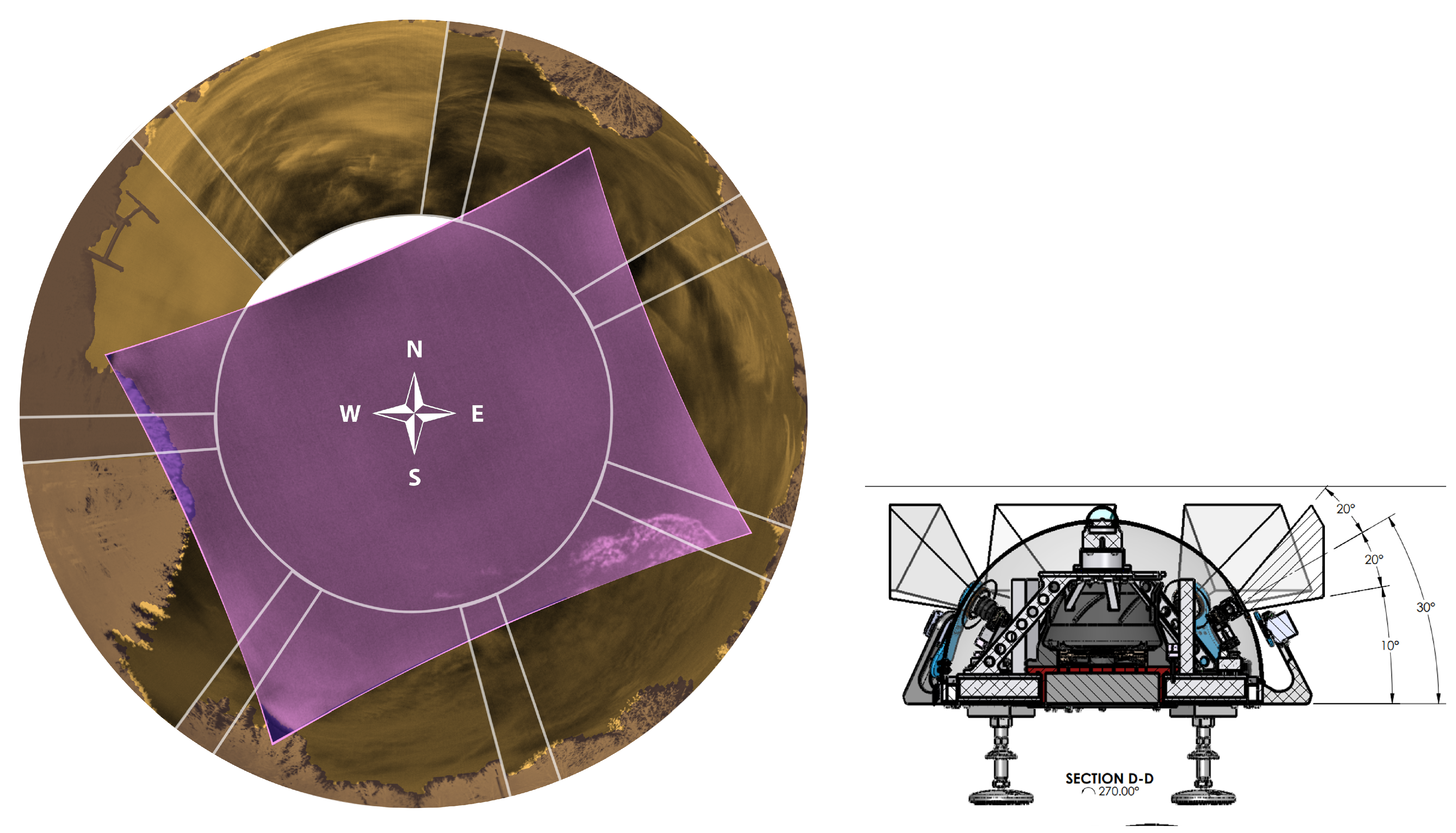

Figure 2.

(Left): Illustration of fields of view (FOVs) and their overlap between the eight cameras of the Dalek. The orange areas represent the FOVs of the seven cameras arranged hemispherically. The purple area shows the FOV of the zenith camera on top of the Dalek. (Right): Side view of fields of view for the hemispherical Dalek cameras. As the center of their optical axes are pointing 30° above the horizon, the bottom of the images that they capture corresponds to 10° above the horizon.

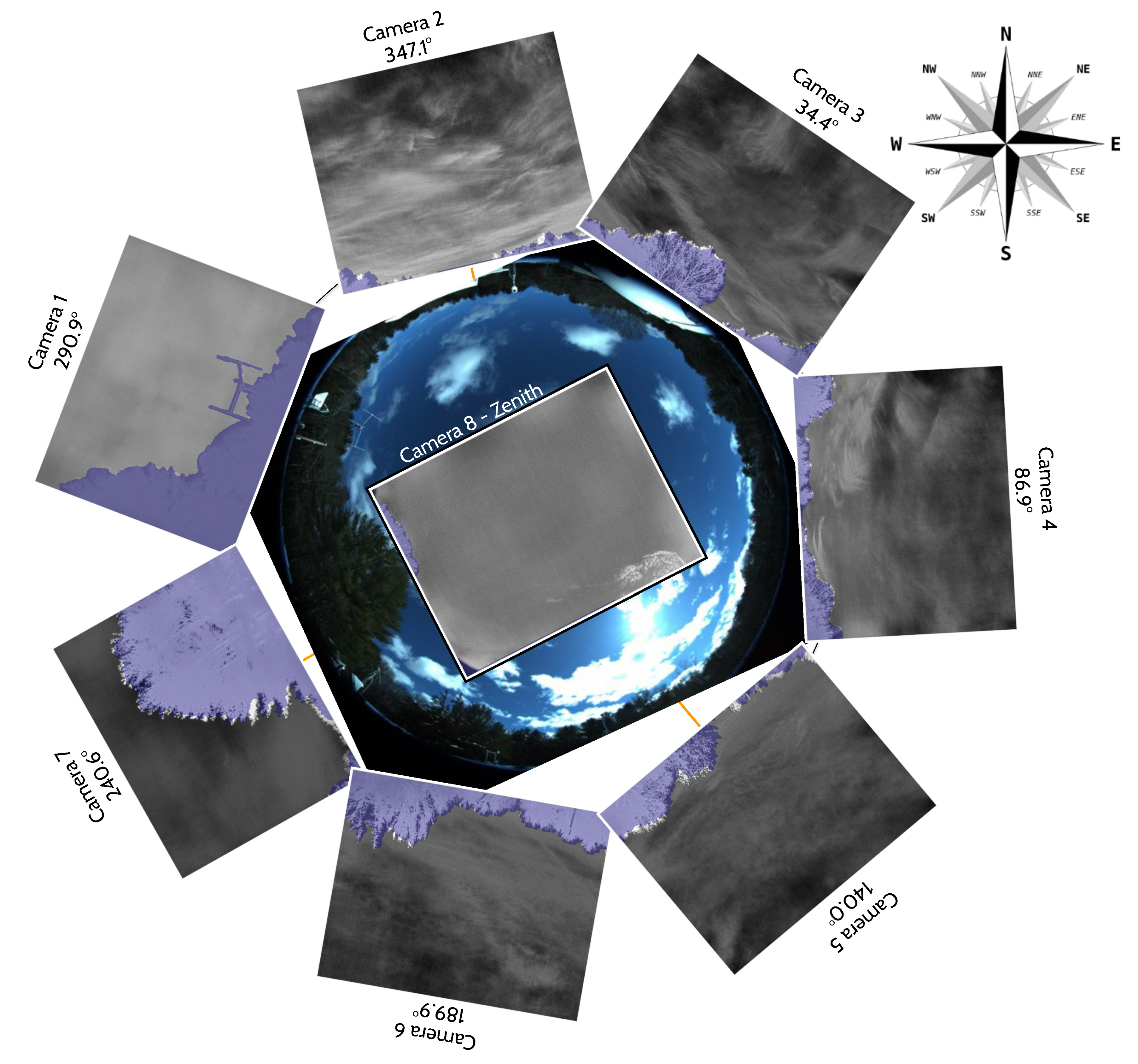

Figure 3.

Map view of a mosaic of images from the seven hemispheric cameras and the one zenith Boson IR camera, and their orientation with respect to a visible all-sky camera photograph from the Dalek’s location (background, center of the image). The shaded (purple) semi-translucent overlays show the corresponding treeline masks that are used in post-processing to ignore all but the sky area of the images. All camera frames are taken from a video recording from 7 May 2024 except for camera 1 (3 April 2024) and camera 7 (10 May 2024).

Each camera is connected via a USB3 cable to an Nvidia Jetson Orin NX, which can handle edge computing tasks such as continuous recordings and, in the future, real-time object detection, tracking, and classification. One Jetson receives data from four FLIR cameras. A Gstreamer [18] pipeline feeds from each camera and creates 5-min video segments, which are stored on the Jetson. For all of the data used in this paper, we were recording at 10 frames per second, although we plan to increase this to 30 frames per second in the future to take full advantage of the Boson cameras’ capabilities. The bit depth is 8-bit unless otherwise specified. The cameras have low, high, and automatic gain settings. All the cameras are set on high-gain mode. An automatic flat-field calibration (FCC) using a shutter happens regularly and interrupts the recordings, overlaying a green square in the top right of the frame while the FFC is performed. This mitigates the effects of infrared non-uniformity over long recording time periods. While we expect that certain parameters such as a higher frame rate would improve the detection and tracking performance, a quantitative analysis of the influence of these parameters would require us to collect data over a longer period of time, up to a year, to have sufficient statistics of ADS-B-equipped aircraft, which is our most abundant source of labeled real-world data to assess detection and tracking performance. We plan to write a future paper focused on comparing the influence of the array camera system parameters, such as the camera count, on the performance envelope.

The custom Dalek enclosure protects the cameras from weather conditions such as wind, rain, snow, dust, or disturbance from animals. The fiberglass dome has a maximum deformation of less than 1 mm of radial deformation when submitted to a 200 mph wind, according to a finite element analysis of its structure, which is roughly double the record wind gusts in this area. The enclosure contains two 72-cubic-inch desiccant containers meant to keep the enclosure dry. The desiccant changes color from blue to pink when it reaches its full moisture-absorbing capacity. Baking it releases the moisture and restores the absorbing capacity for a new cycle of use. Finally, a fan in the dome enclosure helps to evacuate hot air from both direct sunlight and hardware heat emission. This keeps the hardware below the maximum operating temperature of 80 °C for the FLIR Boson 640 cameras. A first set of germanium windows was chosen for their high transmissivity (95% anti-reflection/anti-reflection (AR/AR) coating) over corrosion resistance (85% diamond-like carbon/anti-reflection (DLC/AR) coating). Prolonged outdoor exposure significantly degraded the AR/AR coating within a year. The germanium windows have since been replaced with ones having both a hydrophobic coating and a high transmissivity (∼96% hydrophobic/anti-reflection (HP/AR) coating). These have not shown as many signs of degradation.

Ideally, all eight of the Dalek’s cameras would record continuously 24 h per day, seven days a week, all year. However, the IR camera sensors can be damaged if the sun is directly in their field of view for long periods, so the Dalek is equipped with a sunshade on each camera that automatically opens and closes on a per-camera schedule as the sun crosses the sky. The mechanical sunshades are mounted on servos and controlled by an Arduino micro-controller. They also have integrated brushes on the side facing the camera to periodically remove debris from the windows. When the sunshade is closed on a camera, its recording is stopped in order to save data storage space. As a result, all cameras run during the night, but during the day, each one has a different recording schedule, with the north-facing cameras recording for longer durations and the south-facing cameras for shorter. Thus, the total expected recording duration is less than 24 h/day for each camera. Camera 2, facing north-northwest, has the longest recording duration, and camera 6, facing south-southeast, has the shortest. The sunshade schedule and total recording time per camera per season is broken down in Table A1. The sunshades are depicted in blue in the schematic on the left side of Figure 1, and are black under red mounts on the photograph of the Dalek as-built, shown on the right of Figure 1.

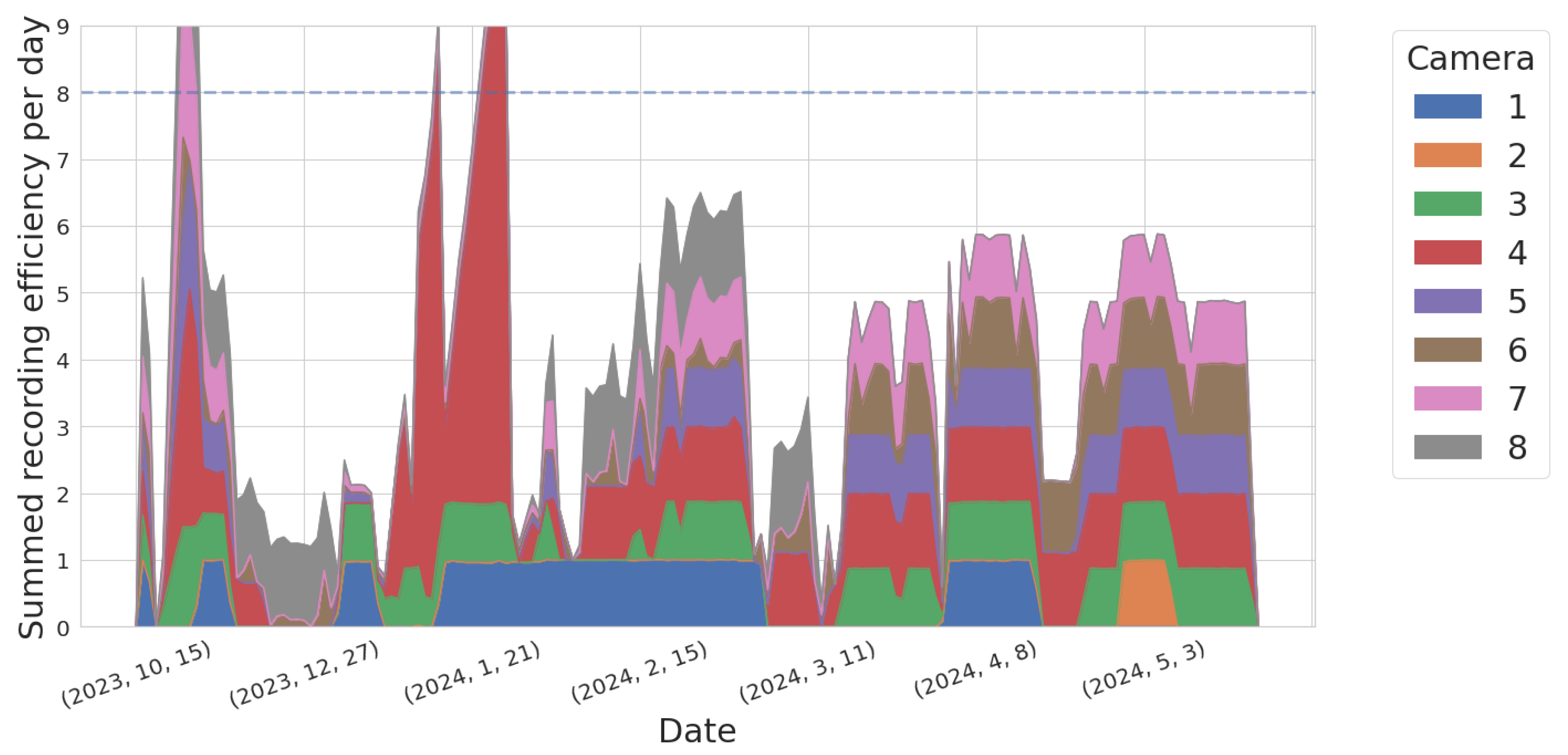

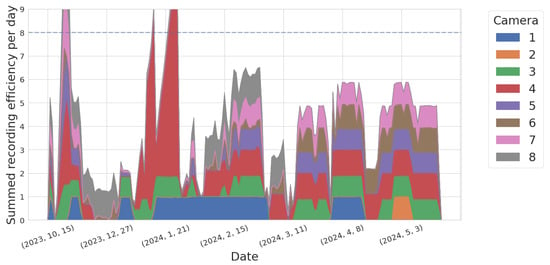

Although we started recording continuously in November 2023, the system’s uptime only started to stabilize in January 2024. Figure 4 shows the improvement over time of the Dalek cameras’ uptime, quantified by the recording efficiency per day or ratio between the total recording duration and the expected duration from the recording schedule, for each camera. Cameras 2 and 6 suffered the most downtime, and were eventually diagnosed with USB cable failure. After replacement of the USB cable, their behavior stabilized dramatically. The data points in Figure 4 with an efficiency above 8 are due to manual enabling of recording outside of the automatic schedule, for testing purposes.

Figure 4.

Stacked area plot showing the evolution over time of the sum of all cameras’ recording efficiencies, defined as recording duration per camera per day divided by expected duration based on recording schedule, which varies per camera. If all cameras were recording according to the schedule all the time, the summed efficiencies should add up to 8. The few data points above 8 are due to manual enabling of the recording, for testing purposes. This timeline goes from November 2023 to May 2024. Some cameras, such as cameras 6 and 7, show a drastic improvement over time.

2.2. Calibration

To relate object positions in the camera image to object positions in the sky, several camera calibration steps are required. Intrinsic calibration corrects for slight aberrations in the lens itself, both intentional (e.g., barrel distortions) and lens imperfections. Removal of image non-uniformities (INUs) involves correcting for the impact of microscopic lens aberrations on the recorded image under certain lighting conditions. Extrinsic calibration, applied last, enables a mapping from the sensor’s physical location and object’s location in the 2D field of view to the object’s 3D world location, for example, from an image’s pixel to an object’s azimuth and elevation relative to the camera location.

2.2.1. Intrinsic Calibration

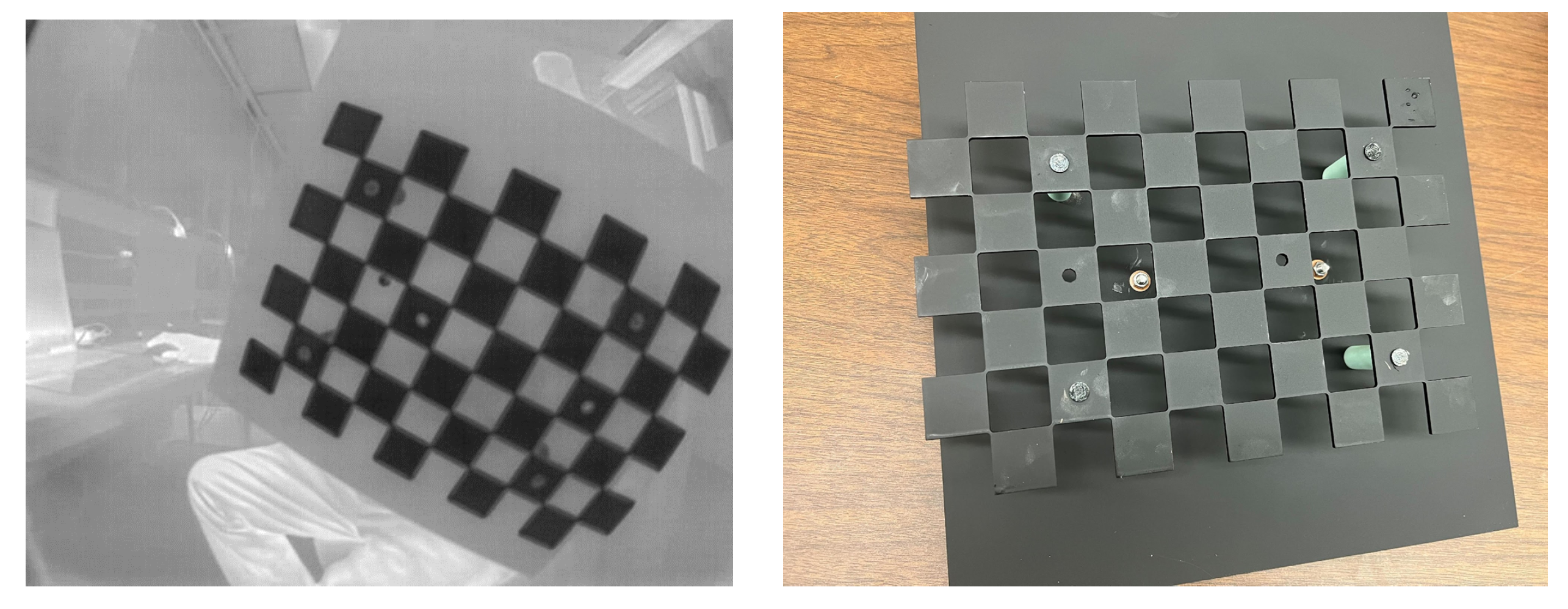

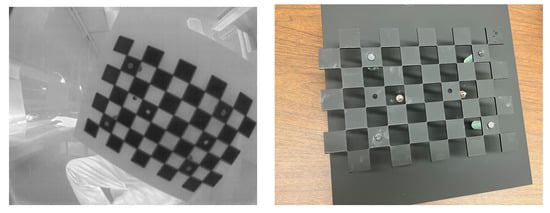

The chessboard calibration method described in [19] and coded in the OpenCV library [20] allows us to perform an intrinsic calibration of each camera independently. It yields intrinsic parameters: focal length, optical image center, and distortion coefficients. These are stored in the camera configuration file. This calibration is performed in the laboratory. Since the Boson cameras are only sensitive to long-wave-infrared wavelengths, we cut a chessboard grid out of a metal sheet. To obtain a good contrast between cold and hot, we cool it in a freezer to −20 °C, quickly assemble it with thermal insulators as spacers to a solid background metal sheet that has been heated to 50 °C, and hold it by a handle that is mounted on the back of the warm metal sheet to capture a series of calibration images covering the field of view of the camera, as shown in Figure 5.

Figure 5.

Metal chessboard used for intrinsic calibration of the FLIR Boson 640 cameras. (Left): Boson camera image. (Right): Dark-painted metal cutout chessboard on dark-painted metal base plate.

2.2.2. Removal of Image Non-Uniformities (INUs)

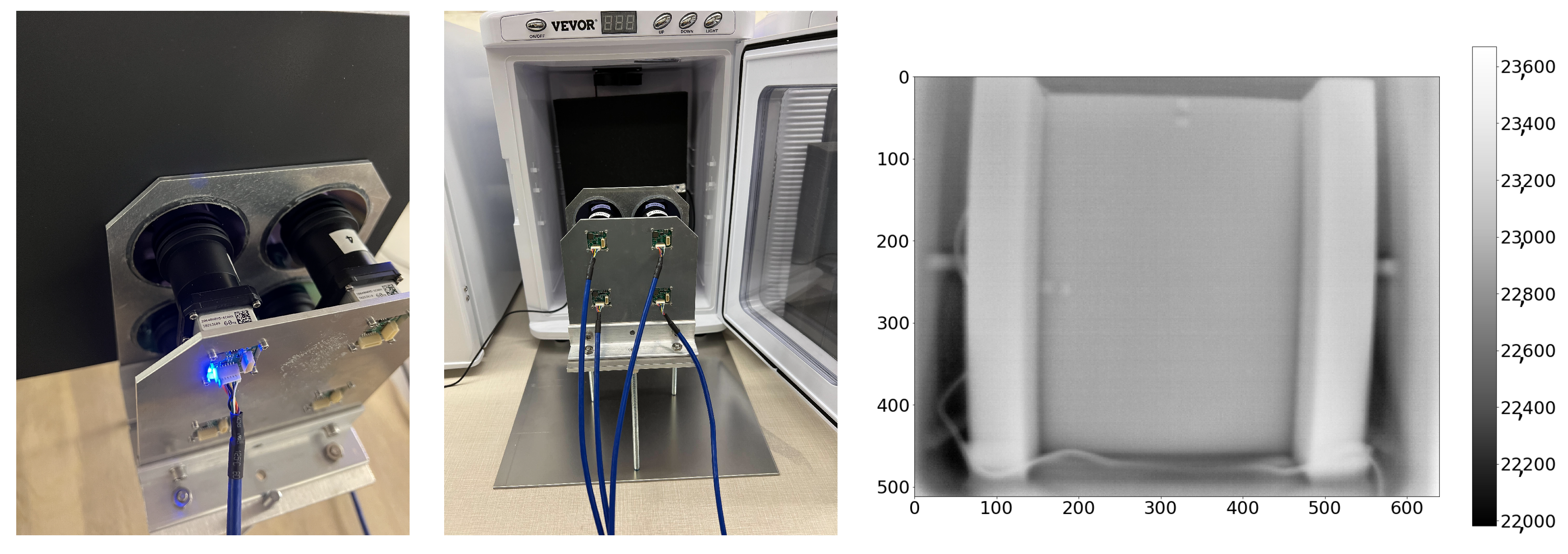

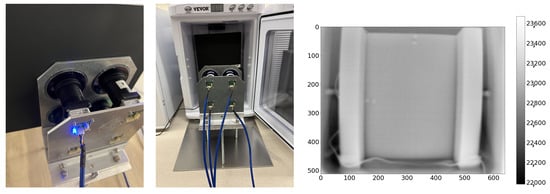

The Boson camera manufacturer, FLIR, defines INUs as “a group of pixels which are prone to varying slightly from their local neighborhood under certain imaging conditions” [21]. Specks of dirt, dried water droplets, or microscopic defects in the coating on the outside of the Boson camera’s factory window, or small defects on the inside of the window, can cause INUs. FLIR recommends two procedures to mitigate INUs: lens gain correction followed by supplemental flat-field correction (SFFC); these should be carried out in this order [22]. For lens gain correction, we image two metal plates at different but uniform temperatures. The metal plates are covered in black Krylon ColorMaxx paint to ensure thermal uniformity. The software to compute the lens gain correction is provided by FLIR. The SFFC compensates for irradiance from heat generated inside the camera body itself. We occupy the entire FOV of the camera with a black-body target (polyurethane foam) to perform the SFFC. Both the metal plates for lens gain correction and the black foam for SFFC are pictured in Figure 6.

Figure 6.

(Left): Four cameras mounted on a fixture and pointed at the black metal plate used for removing INUs through lens gain correction and supplemental flat-field correction (SFFC). (Middle): Four cameras mounted on a fixture and pointing at the black polyurethane foam in the incubator; used for thermal calibration of four Boson cameras at the same time. (Right): Example of 16-bit image taken with a Boson camera using the thermal calibration setup described in Section 2.2.5. The two small pieces of reflective tape, pinned at the top and left side of the foam, are used to find the geometrical center of the foam in the image, where the target temperature was measured using a thermometer. The “ears” of foam minimize thermal reflections from the sides and door of the freezer or incubator.

2.2.3. Extrinsic Calibration with ADS-B-Equipped Airplanes

The rotation matrix and translation vector which convert a camera’s coordinate frame to world coordinates constitute the extrinsic calibration parameters of a camera. To find the translation vector, we use GPS coordinates from a cellphone positioned next to the Dalek, which has an accuracy of approximately 5 meters [23] in this case. Extrinsic calibration of visible-light cameras often relies on astrometry, but the LWIR Boson cameras cannot see stars. Therefore, to find the rotation matrix, we adopt the novel calibration technique described in [4] using airplanes, which emit at LWIR wavelengths and also reflect emissions from the warm ground. Automatic Dependent Surveillance Broadcast (ADS-B) Mode-S transmission devices are operated in most airplanes flying in US airspace, as required by the US Federal Aviation Administration [24]. Airplanes send with ADS-B, in near real-time (transmission of the aircraft position must happen within 2 s from the measurement time, with up to 0.6 s of uncompensated latency, and at least once a second [25]) and roughly once every 2 s, their GPS-derived latitude and longitude positions, and GPS- and barometric-derived altitudes. ADS-B receivers are widely available for retail and anyone with a software-defined radio receiver can collect local ADS-B data. Non-profits such as OpenSky Network aggregate ADS-B data, crowdsourcing individuals’ ADS-B receiver data from all over the world into a single database [26]. This historical ADS-B database is made available to academic researchers. For this paper, we rely on the OpenSky historical database [26] for ADS-B records to obtain the coordinates of the many aircraft flying in the Dalek’s field of view because our own ADS-B receiver was not operating continuously during the five-month period of the Dalek’s commissioning.

If the camera captures N images of an aircraft as it passes overhead, we can interpolate the 3D coordinates provided by the ADS-B data over this time range, and associate a 3D world location with the aircraft’s camera pixel location at the timestamp of each frame. Let be the vectors pointing from the camera’s known origin in world coordinates, given by GPS, to the airplane’s position in the same frame as given by ADS-B. We then use OpenCV’s implementation of the Perspective-n-Point (PnP) algorithm [27] to compute the camera’s pose in world coordinates, giving as input the camera’s intrinsic parameters, extrinsic parameters, and the airplane’s image points and corresponding world pointing vectors. This returns a rotation matrix which represents the camera’s pose. This method to extrinsically calibrate a camera can be applied to both optical and IR cameras. Applying it to the Dalek data was our first demonstration of this method, and we plan to use this for other cameras in the observatory.

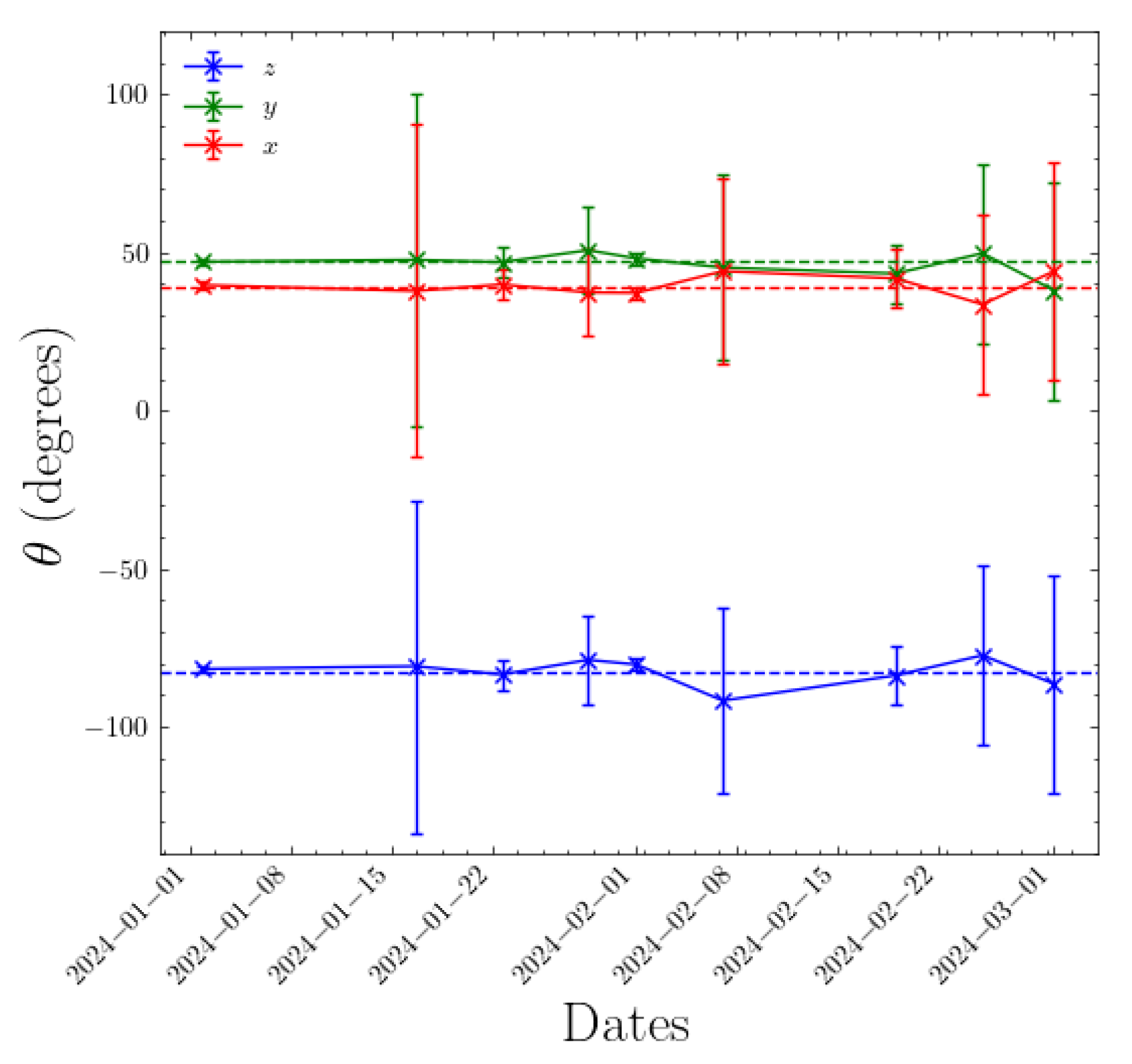

2.2.4. Monitoring Camera Orientation Changes

We automated this extrinsic calibration technique in order to repeat this at regular intervals. This opens the door to detecting unwanted changes in camera orientation; for example, this can happen during high wind weather events or hardware maintenance that requires opening the dome, or temperature-related expansion of the aluminum bench supporting the cameras. Small changes affect the treeline mask used downstream in the analysis pipeline for reconstruction of aerial events, and cause incorrect estimation of object positions in the sky, which depend on the calibration matrices. Determining this threshold and using this to detect significant changes is important for consistency and accuracy in tracking and trajectory reconstruction.

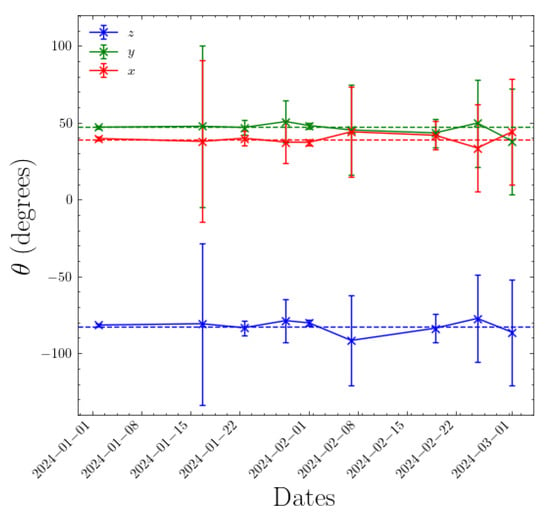

The process we use to monitor for unexpected changes in camera orientation is as follows. We use the You Only Look Once (YOLO) machine learning (ML) object detection method [28,29], described in Section 2.3, to automatically detect aircraft in a given frame of a Boson camera video. The existing extrinsic and intrinsic calibration allows us to translate an aircraft’s ADS-B GPS coordinates into camera 2D image coordinates. The ADS-B predicted positions are interpolated in a time interval of s, to account for timing inaccuracies in both the ADS-B and the camera image frame timestamps, and matched to the closest center of the detection bounding boxes predicted by YOLO. We allow such a generous time threshold to account for the occasionally less-than-ideal timestamp accuracy of the video files recorded during this commissioning phase. For matching we use a distance threshold between the centers of the YOLO-predicted and ADS-B-derived bounding boxes of 20 pixels and a range threshold of 2 km from the observatory to the aircraft. The distance threshold accommodates latency-induced inaccuracy in the ADS-B-derived position estimation and inaccuracies in the existing extrinsic calibration. We collect data from a minimum of 20 airplanes before computing a new orientation matrix. This procedure is repeated on a weekly basis to monitor the changes over time. Figure 7 shows the actual evolution over time of the rotation matrix of one of the Dalek cameras. The rotation matrix is represented by its three Euler angles and computed over a period of at least 1 week, ensuring statistics from at least 20 airplanes. We observe slight fluctuations in the Euler angles over time, of the order of 1%. Errors are derived from the inverse Hessian matrix, obtained using the minimization algorithm [30]. These errors do not account for the uncertainty in bounding boxes’ center positions, which could affect this measurement. This method will be improved with further refinements but it can be used in its current form to trigger an alarm if the orientation of a Boson camera changes significantly, for example, by more than one degree in any Euler angle.

Figure 7.

Euler angles representing the orientation matrix of camera 1 in the Dalek over a period of three months: January to March 2024. The error bars are estimated from the inverse Hessian matrix after minimization. Large error bars are correlated with a lack of statistics. Overall the fluctuations are of the order of 1%. The dashed lines represent the fixed Euler angle values that were used throughout this paper.

2.2.5. Thermal Calibration

The FLIR Boson 640 cameras have the ability to record images with 8- or 16-bit depth. The 16-bit data can be used for thermal radiometry. The camera model selected for the Dalek comes without factory thermal calibration, hence we performed the thermal calibration of the cameras ourselves. This requires taking images of a black-body target of known emissivity when both the camera and the target are exposed to a wide range of temperatures. This is necessary in order to correlate the 16-bit pixel values and camera temperature to the target temperature.

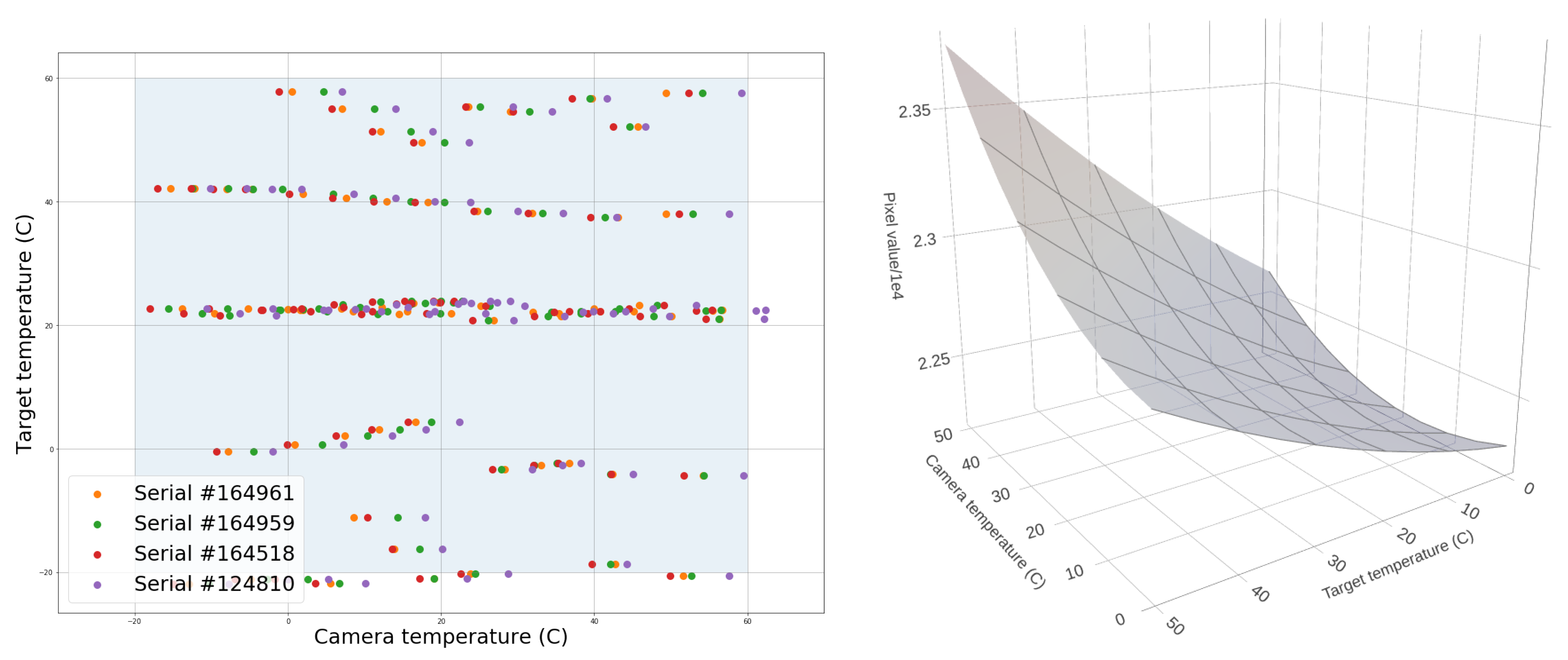

Our target was a block of black polyurethane foam. In order to calibrate up to four cameras at the same time, we used the custom-built mechanical fixture that holds both the cameras and the germanium windows, shown previously in Figure 6 and used for the intrinsic calibration procedure. Since the opacity of the germanium windows is temperature-dependent, being almost opaque at 100 °C, it is important to include them in the calibration fixture. We drilled holes in a plywood sheet to keep the distance and orientation of the fixture relative to the foam constant. Two pieces of foam were taped into position, one inside an incubator and one inside a freezer. Figure 6 shows an example of this setup, where the fixture faces the foam target in the incubator. A thermal probe was inserted in the foam to measure the temperature at the center of the block. The position of the probe tip inside the foam was marked using horizontal and vertical reflective tape indicators at the edge of the foam. We used the temperature sensor onboard the camera chips to record the camera temperatures. We explored a 2D grid of target and camera temperatures in the range of [−20, 60] °C. For a given target temperature, the process was as follows:

- Warm or cool down the foam to the target temperature.

- Warm up the camera to 60 °C using an incubator.

- Place it in front of the foam target and capture images at regular intervals while it is cooling down back to room temperature.

- After cooling down the camera to −20 °C with the help of a freezer, place it in front of the target and capture images while it is warming back up to room temperature.

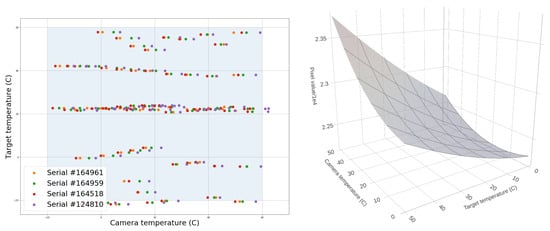

Ambient humidity proved to be a challenge in this process, as it created condensation droplets on the camera lenses and germanium windows. We had to maintain the air humidity in the lab below 40% in order to take meaningful measurements. Figure 8 shows the temperatures sampled for different cameras following this process. Once all the images are taken, i.e., once sampling the 2D grid of camera and target temperature is completed, we find the pixel coordinates of the centers of both foam targets (the foam in the incubator and the foam in the freezer) for each individual camera. We record the mean pixel value in a -pixel square at these coordinates. We grid the pixel values using inverse distance weighting interpolation and smoothing. The result is fitted to a bi-quadratic polynomial, where the two variables are camera sensor temperature and target temperature, and the output is pixel value. Figure 8 has an example of a fitted polynomial that can be used to relate a 16-bit pixel value and camera temperature to the target radiance, assuming it is a black body. The black-body emission of the target is related to its temperature through Planck’s equation, which we numerically integrate for the wavelength band of the Boson camera, i.e., from m to m:

where and m·K.

Figure 8.

(Left): Map of measurements made on four cameras of the Dalek. (Right): Visualization of fitted polynomial. The maximum pixel values occur when the camera and target are both “hot”. Pixel values are divided by on the z-axis.

2.2.6. Object Temperature Measurement

This section demonstrates a first-order example of using the thermal calibration of the previous section to estimate the temperature of an aerial object. A surface emits thermal radiance depending on both the surface temperature and the surface emissivity. To use the calibrations from the previous section on real images captured with a Boson camera, we need first to measure the emissivity of the foam used in the calibration process. We let the foam equilibrate to room temperature. We measure the temperature of the foam through a surface temperature probe which yields 23 °C °C. Simultaneously, an external laser infrared thermometer set to an emissivity of 1.0 measures 22 °C °C at the site of the temperature probe. We deduce an estimate of the foam emissivity from the ratio of the two temperatures in kelvin units, .

For the thermal calibration, we assumed that, owing to the short distance between the camera and the foam target, any atmospheric effects would be negligible. In general, however, another parameter is involved in the measurement of an object’s temperature through our cameras in the field: the atmospheric transmission, which quantifies the attenuation of thermal radiation emitted by the object due to water vapor and carbon dioxide along the transmission path. This depends on the relative humidity, the temperature of the atmosphere, and the distance from the camera to the object. We use the Passman–Larmore tables [31,32], which were derived experimentally, to compute the atmospheric transmission coefficient . For a wavelength of m (the center of the FLIR Boson camera spectral band), a distance m to the object, relative humidity %, and atmospheric temperature °C, we use equation (6), table 1, and table 2 from [32] to compute the water vapor absorbance and carbon dioxide absorbance . Then, we can approximate the atmospheric transmission coefficient with [33].

Given the emissivity of the object and the atmospheric transmission , we see a brightness of

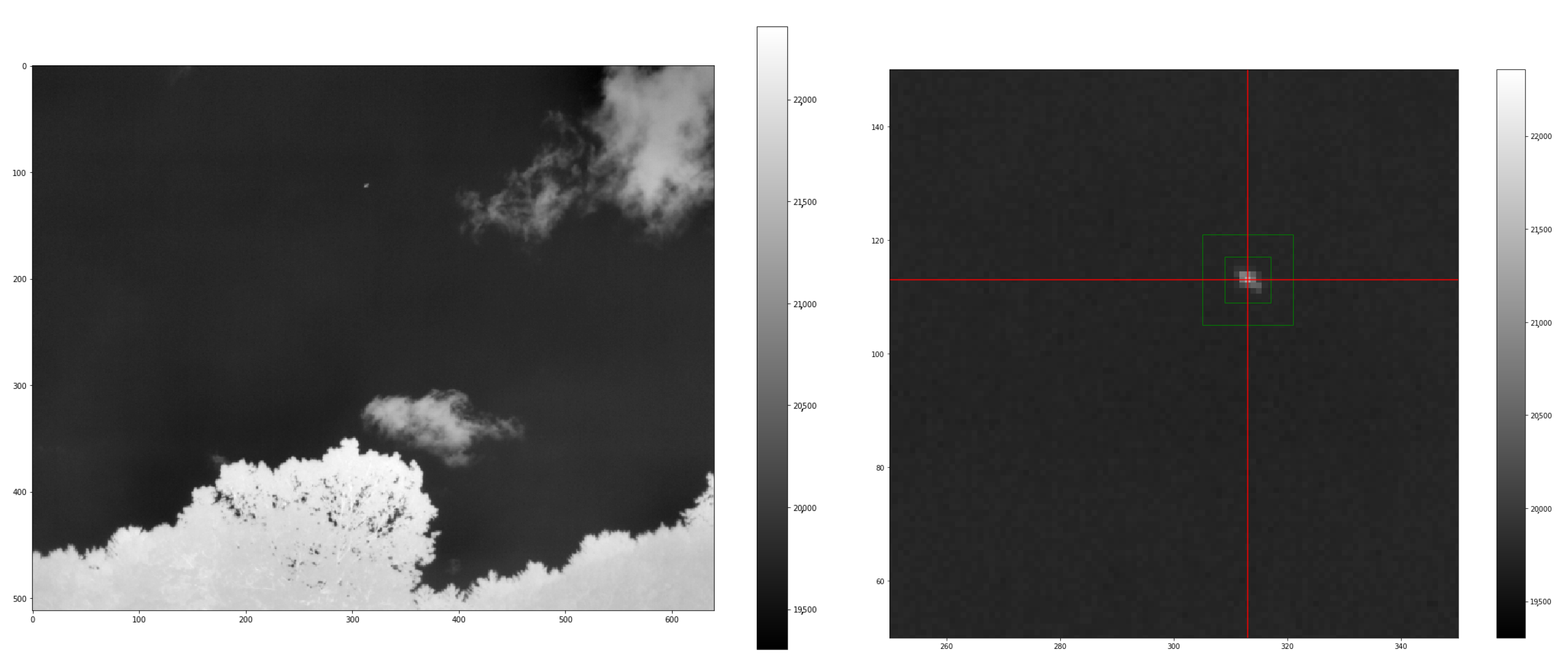

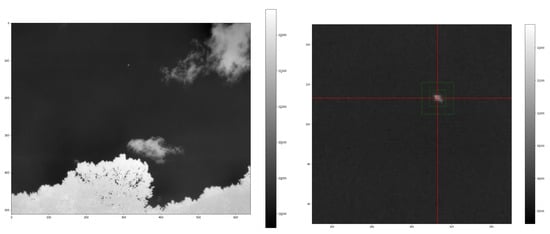

where is the brightness emitted by the object’s surroundings and reflected by the object, is emitted by the atmosphere, is what we measure from the camera sensor, and is emitted by the object, i.e., the quantity we wish to determine [32,34]. Figure 9 shows an example of a 16-bit frame from camera 3 of the Dalek during an airplane fly-by. The aircraft is an Airbus A321 taking off from BOS airport at a slant range of 5.3 km from the observatory. We measure the ambient reflected brightness in units of pixel values, and then use our calibration to convert to temperature. We average the pixel values immediately surrounding the object. For measuring the atmospheric brightness, we average the pixel values in the top left quarter of the frame, which is empty sky. We obtain 19,748 and 19,765. By plugging in the values for the object emissivity and atmospheric transmittance, we can infer the actual object brightness of 22,672 from the observed brightness of 21,307. This would be the pixel value for a piece of foam in the same conditions as it was during the thermal calibration. The fitted relationship between brightness and radiance that we obtained from the calibration in the previous section yields an estimate of the object’s radiance: assuming a sensor temperature of 30 °C, given the ambient temperature at the time the image was taken, the target radiance is estimated at 53 W/m2/sr. Next, we set equal the radiant flux emitted from a surface of ∼8 × 8 m2 (assuming an average airliner has a wingspan of 50 m, at a distance of 5.3 km, one pixel of the camera corresponds to ∼8 m of the object), which sees the camera sensor from a solid angle of ∼7.10−6 sr at ∼5 km distance, and the radiant flux emitted by an object at ∼0.1 m distance, with ∼0.25 m2 surface area, and which sees the camera sensor from a solid angle of ∼0.001 sr; from this, we find the equivalent radiance of the actual target, the aircraft at 5 km, to be ∼37 W/m2/sr. Planck’s law, assuming an emission coefficient of for an aluminum and stainless steel object, yields a corresponding temperature of 24 °C.

Figure 9.

(Left): Example of 16-bit infrared image of an aircraft taken with a Boson camera. (Right): Zoom in on the airplane in the scene. The red lines cross at the pixel of maximum brightness. The area in between the green rectangles is used to estimate the ambient brightness.

We compare this result to a temperature estimate based on aircraft altitude. The standard adiabatic lapse rate is a temperature decrease of 2 °C for every 1000 feet increase in elevation, so we expect a temperature of ∼2 °C at the aircraft barometric altitude of 11,450 feet. Since the aircraft has just taken off, to estimate its body temperature we use Newton’s law of cooling for an aluminum (specific heat capacity 903 J/kg/K, heat transfer coefficient ∼5 W/m2/K) sphere of ∼83 metric tons going from a temperature on the ground of ∼20 °C to the temperature of 2 °C at the aircraft’s current altitude within 5 min. We find that we should expect a temperature of ∼18 °C for this specific aircraft, which would mean an error of ∼6 °C in this example.

This temperature estimate is included in this study as a proof-of-concept demonstration. During the commissioning period we collected only 8-bit recordings and did not yet have triangulation in place for range estimation. The rest of this paper is not concerned with objects’ temperatures. In the near future, we plan to enable the continuous recording of 16-bit infrared videos with the Dalek, which will help characterize the temperatures of objects in combination with range estimation derived from triangulation, for example.

2.3. Reconstruction of Aerial Objects Using YOLOv5 and SORT

The primary design purpose of the Dalek system is object detection and trajectory reconstruction. A combination of machine learning and traditional algorithms suited for this purpose are applied to the IR images obtained from the Dalek video recordings. You Only Look Once (YOLO) [28,29] is a common machine learning architecture for real-time object detection. We train version 5 of YOLO using both synthetic images and the Dalek’s real-world images [7]. The datasets are described in Section 2.3.1 and the training outcomes are evaluated in Section 2.3.2. YOLOv5 marks detections on each frame individually. To reconstruct the trajectories of detected objects within a single camera’s field of view, we apply a multi-object tracking (MOT) algorithm to track individual objects in a video sequence. We use the popular Simple Online Real-time Tracking (SORT) [35], which combines a Kalman filter with the Hungarian algorithm. Section 2.3.3 contains more details about our implementation and a performance benchmark for this stage.

2.3.1. Datasets for Training and Evaluation

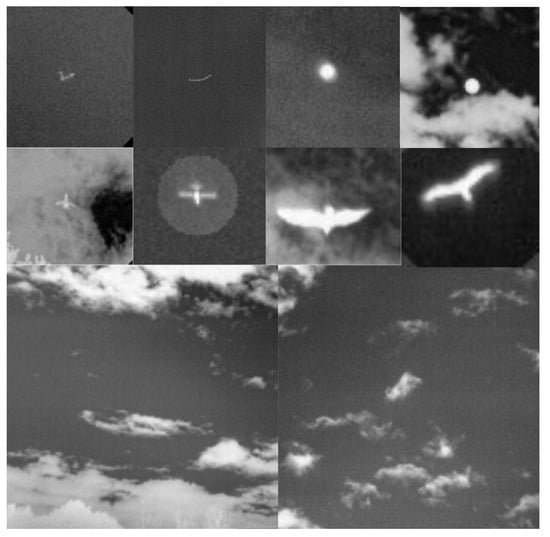

Synthetic Image Dataset

An object detection algorithm such as YOLOv5 requires supervised training and tens or hundreds of thousands of labeled training samples. We developed a synthetic image generation tool called AeroSynth, which leverages the Python bindings of Blender [36], a free and open-source 3D modeling software. Ref. [7] contains more details about AeroSynth and the training process of a YOLOv5 [28,29] architecture using synthetic datasets. Our synthetic dataset contains 25 object bounding boxes (labels) per image, sampled from 40 different 3D models including airplanes, balloons, drones, birds, and helicopters, but excluding some natural objects such as leaves and clouds, totaling ∼800k objects spread over ∼32k + 8k (train + test) images.

Mixed Synthetic and Real-World Image Dataset

In addition to bootstrapping the training using a synthetic dataset, we also selected real-world images from Boson cameras which contained highly confident (score of ) detections from the YOLOv5 model trained using exclusively synthetic data. We included these images, using these detections as truth bounding boxes or labels, to fine-tune the model on real-world images as well. This mixed dataset contains ∼424k objects spread over ∼45k real-world images. Roughly 10% of these images are background, empty images. On average, there are 9 object bounding boxes (truth labels) per image. In Section 2.3.2, we compare these two training approaches (using the synthetic dataset and using the mixed dataset) in a benchmark.

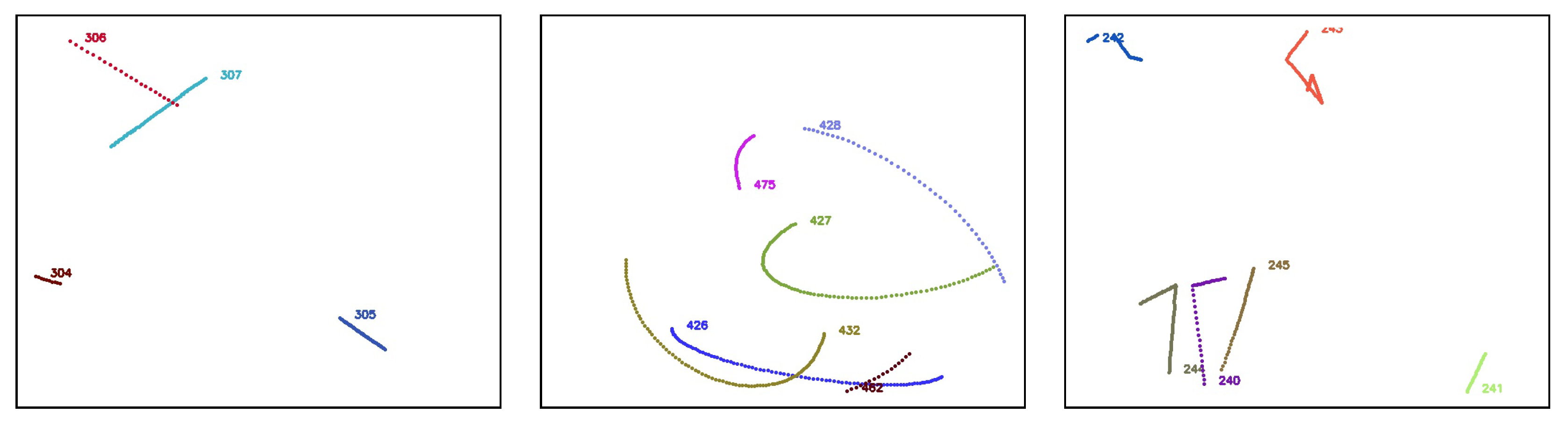

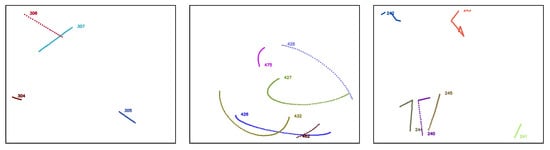

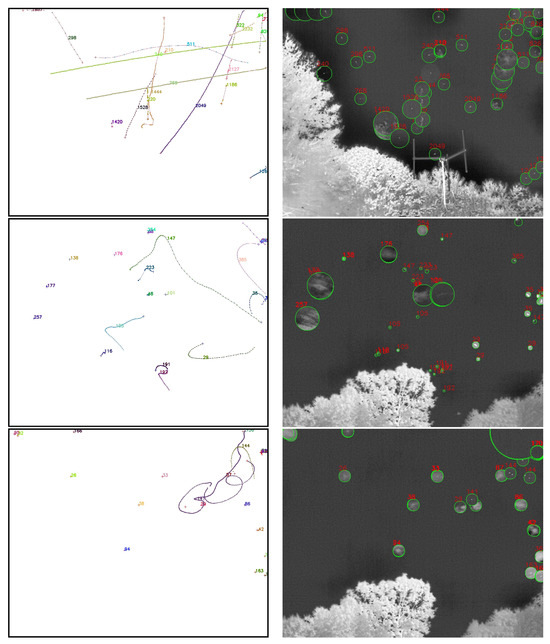

Synthetic Video Dataset

A video is a set of frames or images that are correlated in time. In order to evaluate tracking algorithms on synthetic, controlled trajectories, we need to generate consecutive images of synthetic objects that, when stitched together, form a video of object trajectories. We again rely on AeroSynth [7] to render videos of 3D models that mimic the video recordings by individual Boson infrared cameras. Each synthetic video is a collection of 100 images or frames, which are snapshots of the 3D models in the scene at successive points in time, separated by 0.1 seconds. The rate of 10 frames per second is chosen to match the Dalek commissioning dataset frame rate. We include the same 3D models that were used to create the synthetic image dataset used to train YOLOv5. The synthetic trajectories fall into three categories: straight, simple curve, and piecewise. Straight trajectories are given a starting point chosen randomly in the 3D space within the field of view of the camera and follow a straight line in a random, uniformly sampled direction. Curved trajectories are given two points chosen randomly in the 3D space within the field of view of the camera and follow a circular arc between the two points using a random radius uniformly sampled in the range of [0, 50] meters. Piecewise trajectories are given a starting point in 3D space within the camera field of view and follow a sequence of straight lines in randomly selected directions. They can have 1 to 5 inflection points. For all trajectories, the speed is uniform across the trajectory and sampled in the range of [0, 100] meters per second, which means that the trajectory’s points are uniformly sampled. We generate ∼1600 unique trajectories. Each video contains up to 5 moving objects at the same time. Figure 10 shows visualizations of each type of trajectory.

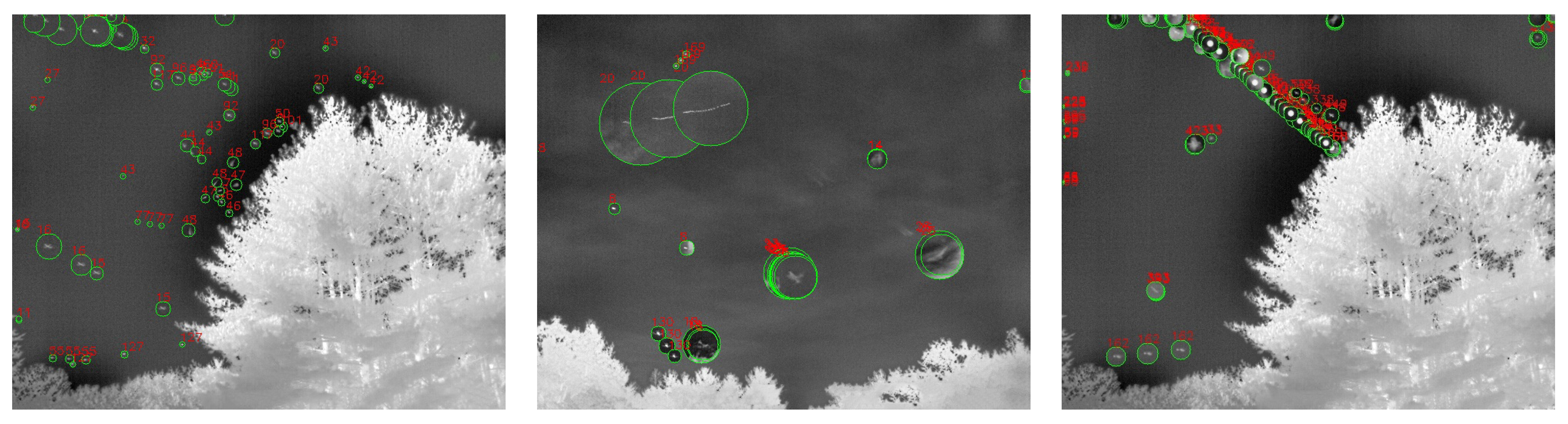

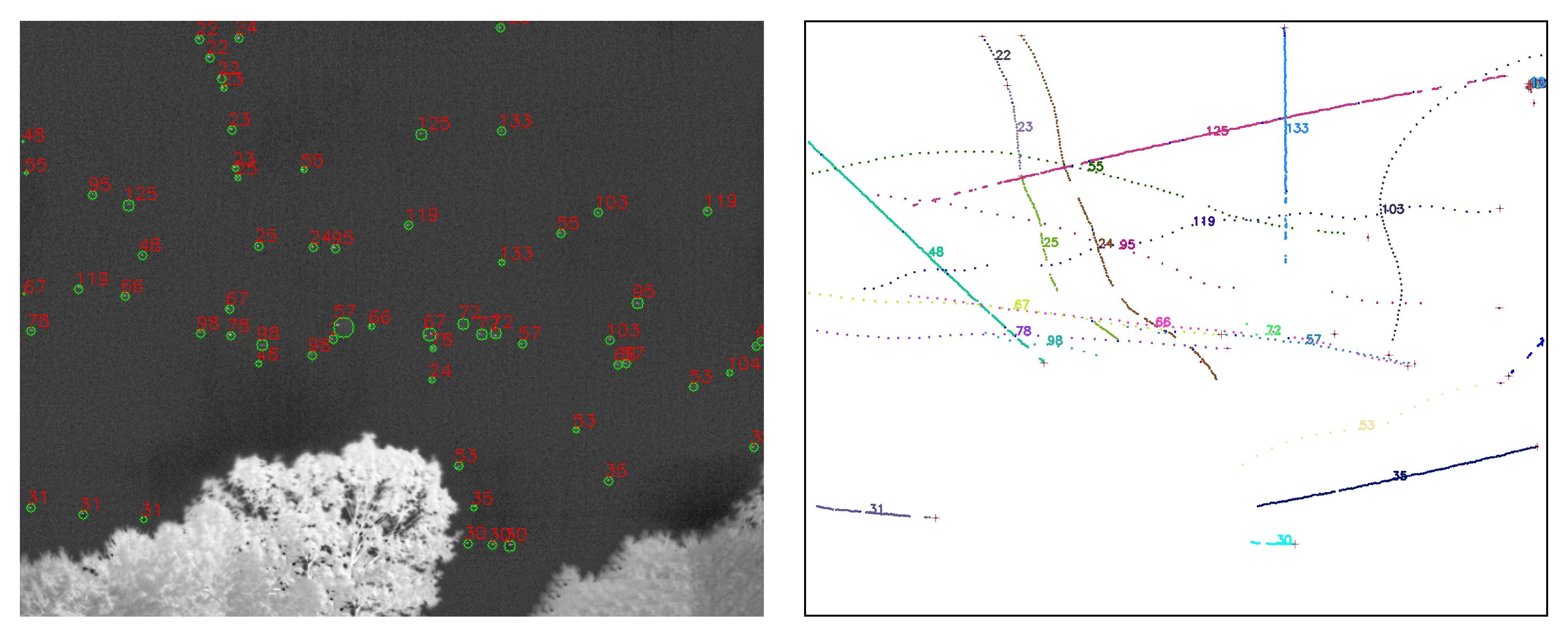

Figure 10.

Comparison of straight (left), curved (middle), and piecewise (right) 3D trajectories from the synthetic video dataset. Trajectories are identified by color and unique number.

Manually Labeled Real-World Image Dataset

We manually labeled each frame of a subset of Dalek video recordings by drawing bounding boxes on visually identified aerial objects in each frame (airplanes, birds, leaves, etc.) to create truth labels for object bounding boxes using the free and open-source Computer Vision Annotation Tool (CVAT). For each of the eight cameras, we sampled up to three five-minute videos per day during January 2024: one at midnight, one at noon, and one at 6 PM Eastern Standard Time. Data availability determined the final dataset, comprising 314 videos, which amount to 904,257 individual frames. A total of 36,036 frames in this dataset (approximately 4%) contain objects. Of these, approximately 94% contain one object, 5% contain two objects, and 1% contain three or more objects. The highest number of objects observed in a single frame is 10. Ultimately, this dataset contains 40,268 individual object annotations (labels) which include the bounding box center coordinates, width, and height, but not object class. Throughout the process, we strove for tight bounding box definitions with minimal surplus margin, consistent labeling of all visible objects, and complete enclosure of objects within bounding boxes (even in cases of partial object occlusion).

ADS-B-Derived Real-World Dataset

We expect the fraction of aircraft not transmitting ADS-B to be small at our site (military aircraft only infrequently fly overhead). Using ADS-B data (see Section 2.2.3) from the OpenSky database, we select ADS-B records whose latitude and longitude are within a N-S, E-W square of side 10 km centered on the observatory. Additionally, for each selected ADS-B record, we use the camera’s extrinsic and intrinsic calibration to translate the 3D coordinates of the aircraft to 2D coordinates in the frame of the camera images. In that way, we can determine whether the aircraft’s transmitted ADS-B location is within the field of view of at least one camera and, if so, whether it is above the treeline of the camera in which it is visible. The timestamp of the ADS-B record can be compared to the Dalek recording periods to determine whether the camera was recording when the aircraft was in view. We note that in addition to inaccuracies in the camera recording timestamps, there are also latencies in the ADS-B records timing and update frequencies that require relaxing any time-matching thresholds when working with this dataset: for example, ref. [37] found a mean delay between the aircraft on-board time and the ground receiving time of 0.2 s, which corresponds to 2 frames in the Dalek recordings, while [38] reported that 13% of the ADS-B updates happened at intervals greater than 2 s. This dataset thus contains the trajectories of all aircraft that passed over the site during the five-month commissioning period, and for each of them, which cameras both were recording and had the aircraft in their unobstructed field of view (if not necessarily visible or detectable) at that time. In addition, the hourly weather information corresponding to every aircraft ADS-B transmission is downloaded from the Open-Meteo API [39] and joined to the dataset.

2.3.2. YOLOv5 Benchmark on Manually Labeled Dataset

The first stage of the Dalek detection pipeline is a frame-by-frame object detection machine learning algorithm, YOLOv5 [28,29]. Here, we present some basic metrics on the performance of the YOLOv5 model that we use for the rest of this paper. In particular, we explain why we decided to use the YOLOv5 model trained on the synthetic dataset alone rather than the model trained on the mixed synthetic and real-world dataset. We apply both of the YOLOv5 models in evaluation mode, i.e., run their inference, on each frame of the 314 videos on the manually labeled dataset of Dalek real-world images. Next, we calculate the intersection over union (IoU) between each detection bounding box and each ground truth bounding box. The IoU is a ratio of areas which measures the overlap between the predicted bounding box and the ground truth bounding box, providing a metric for the agreement between detections and ground truth bounding boxes. We set a low IoU threshold of 0.01 to define a match between predicted and ground truth bounding boxes, i.e., a true positive. We consider detections with an IoU below this value as false positives. Following the IoU calculation, we evaluate the detection performance using the following key metrics:

- Detection errors: True positive (), false positive (), true negative (), and false negative (), in counts.

- Precision (Prcn): The ratio of true positives to the sum of true positives and false positives: .

- Recall (Rcll): The ratio of true positives to the sum of true positives and false negatives: .

- Accuracy: The ratio of correct detections (both true positives and true negatives) to the total number .

- F1-score: The harmonic mean (appropriate for finding the average of two rates) of precision and recall.

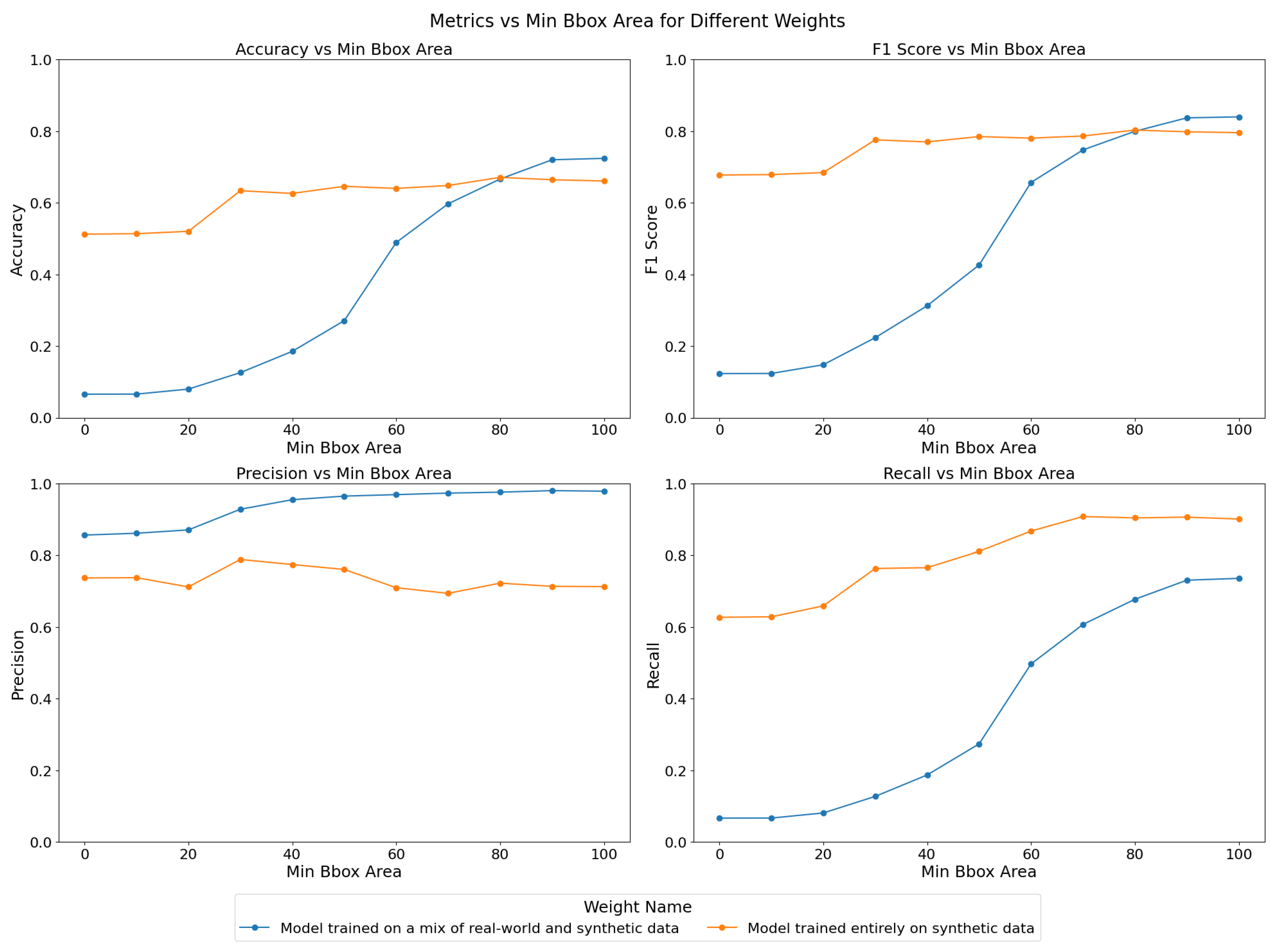

As illustrated in Table 1, the model trained on both real-world and synthetic data achieves a high precision of 85.6%, indicating that when it makes a positive prediction, it is likely correct. However, its recall is extremely low at 6.60%, meaning it fails to detect a large portion of the actual objects, as evidenced by the high number of false negatives (36,412). This poor recall significantly impacts the model’s overall accuracy (6.60%) and F1-score (12.3%), suggesting that this model struggles with generalization or may be overly conservative in making detections. In contrast, the model trained solely on synthetic data shows a much better balance between precision and recall. While its precision (73.7%) is slightly lower than the mixed-data model, it achieves a much higher recall (62.7%), leading to a significantly improved F1-score (67.8%) and accuracy (51.3%). This model detects a greater number of true positives (24,465) and substantially fewer false negatives (14,535), indicating it is more effective at identifying objects, though it also produces more false positives (8728).

Table 1.

Comparison of metrics for YOLOv5 models trained on real-world + synthetic data versus synthetic-only data.

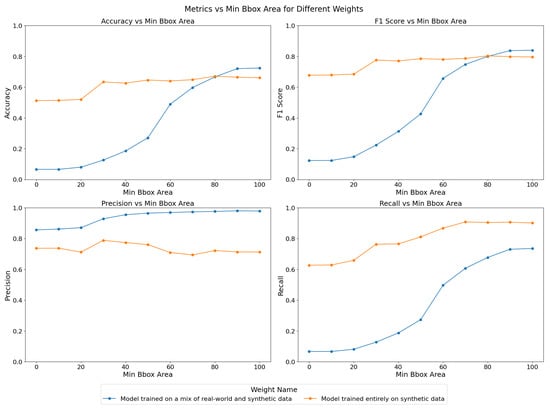

Given these results, we hypothesize that for larger objects, the model trained on a mix of real-world and synthetic data might perform better than its synthetic-only counterpart. Indeed real-world data could provide more accurate representations of larger objects, which might be underrepresented or inaccurately simulated in synthetic datasets. To further explore this, we repeat the inference multiple times for each model, adjusting the bounding box area minimum threshold incrementally from 0 to 100 pixels square. The results, as shown in Figure 11, reveal how the performance metrics—accuracy, F1-score, precision, and recall—vary as the minimum bounding box area increases.

Figure 11.

Comparison of YOLOv5 model trained on synthetic-only dataset versus model trained on a mix of synthetic and real-world datasets. The horizontal axis units are pixels square. The model trained on synthetic-only data has better overall performance and is the one used for commissioning in this paper.

For the model trained on both real-world and synthetic data, accuracy and recall improve significantly as the minimum bounding box area increases, with the most notable gains occurring between thresholds of 40 and 80 pixels square. This suggests that the model becomes more reliable when detecting larger objects, which aligns with our hypothesis. The F1-score also increases steadily, reflecting the improved balance between precision and recall as the model becomes more selective in its detections. On the other hand, the model trained solely on synthetic data shows relatively stable performance across varying bounding box thresholds. While precision remains consistently high, recall improves slightly with higher thresholds, resulting in a stable F1-score. The accuracy of this model, however, begins at a higher value and increases more gradually, indicating that it is generally more consistent in detecting objects of varying sizes, but it might not show as much performance increase from increased object size as the mixed-data model.

The YOLOv5 stage is the first one on our object detection and tracking pipeline. False positives can still be weeded out downstream, but recovering from false negatives is challenging. Thus, the metric we care about the most in the context of the Dalek instrument is recall. Overall, the synthetic-only model demonstrates superior performance across most metrics, particularly in terms of recall and overall accuracy, making it a more reliable choice for object detection in this context. All further studies involving YOLOv5 in this paper use the synthetic-only model. In the future, further tuning and possibly the inclusion of more diverse real-world data, including more smaller-sized objects and overlays of real-world background images with synthetic objects, could help improve the performance of the mixed-data model. We expect that training with real-world background images, which have more textured skies due to weather conditions and camera optics compared to synthetic plain backgrounds, will help to decrease the number of false positives triggered by clouds, for example, and to increase the overall detection performance.

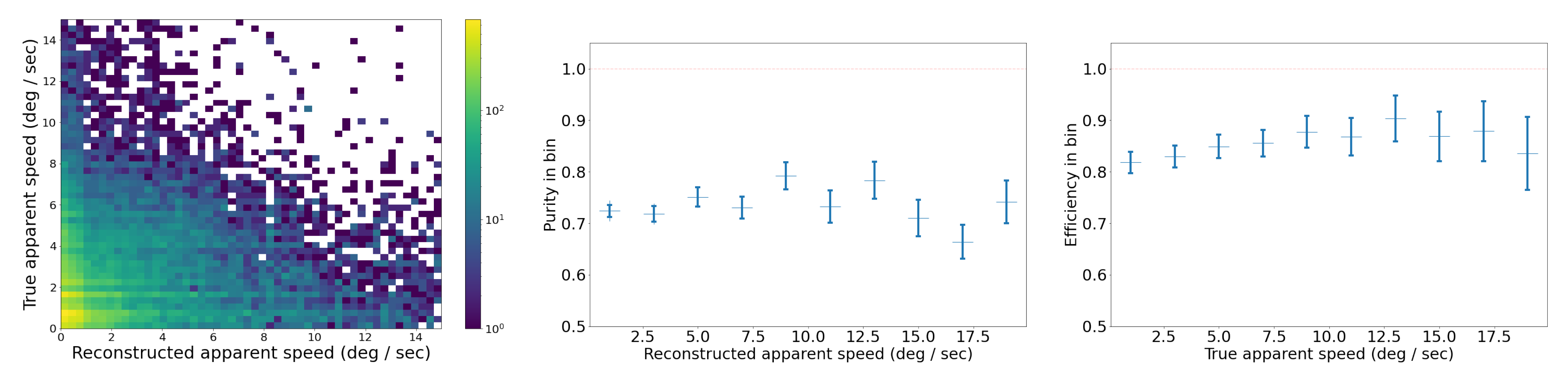

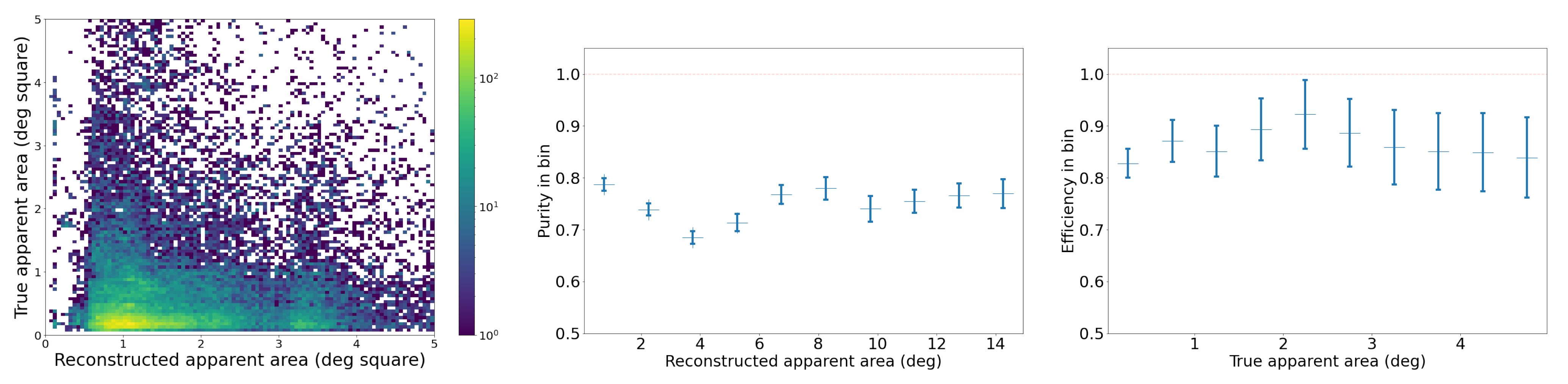

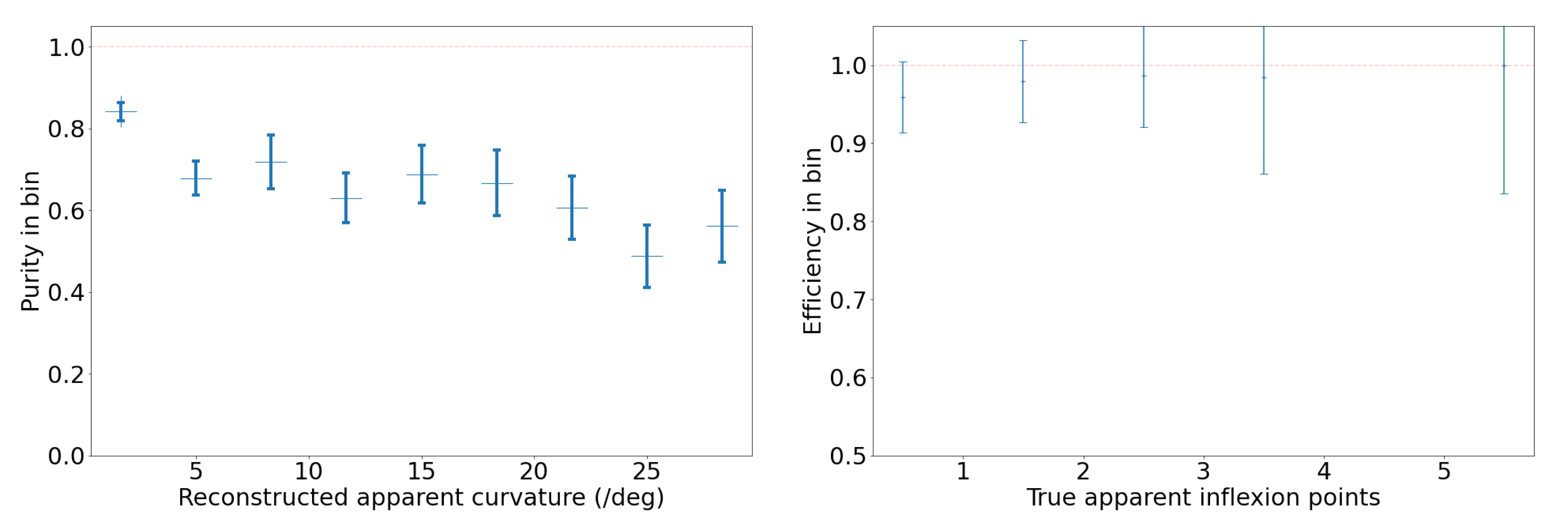

2.3.3. Benchmark for SORT on the Synthetic Video Dataset

SORT [35] is a multi-object tracking algorithm which connects individual frame-by-frame object detections made by YOLOv5 into object trajectories. It relies on a Kalman filter and the Hungarian algorithm. The Kalman filter is used in SORT to predict the future location of objects based on their past states (e.g., position, kinematics), under the assumption of constant apparent velocity in the image. The covariances in the Kalman filter represent the uncertainty associated with the predicted state. The Hungarian algorithm, on the other hand, is used to solve the assignment problem, where the goal is to associate new detections with existing tracks. The algorithm takes in a cost matrix, where each element represents the “cost” or mismatch distance (in our case, using the IoU) of associating a detection with a track. The Hungarian algorithm then finds the optimal matching that minimizes the total assignment cost, ensuring that each detection is paired with the most appropriate existing track.

We analyze the performance of our SORT implementation on the synthetic video dataset, which has three different types of trajectories: straight, curved, and piecewise, as shown in Figure 10. We set an IoU threshold of 0.5 to define a match between bounding boxes predicted by the Kalman filter and the ground truth bounding boxes. Each reconstructed trajectory is assigned an identifier (ID). We report the following standard metrics, in addition to the ones defined in Section 2.3.2, for tracking performance [40,41,42,43]:

- Identity switches (IDSs): Also known as association error, it is the count of how many times the reconstructed ID associated with a ground truth trajectory changes, i.e., where objects are incorrectly re-identified as new objects.

- Multiple object tracking accuracy (MOTA): A metric compiling tracking errors over time: .

- Multiple object tracking precision (MOTP): A measure of localization accuracy: , where is the number of matches in frame t, and is the bounding box overlap of target i with its corresponding ground truth box.

- Track quality metrics relative to ground truth trajectories that have been successfully tracked, i.e., have matched Kalman filter predictions, regardless of their predicted ID:

- –

- Mostly tracked (MT): For at least 80% of their life;

- –

- Mostly lost (ML): For less than 20% of their life;

- –

- Partially tracked (PT): For between 20% and 80% of their life;

- –

- Track fragmentations (FMs): A count of how many times a ground truth trajectory goes from tracked to untracked status.

- Identification metrics establishing a one-to-one match between ground truth trajectories and reconstructed trajectories:

- –

- ID precision (IDP): The fraction of reconstructed trajectories which have a match;

- –

- ID recall (IDR): The fraction of true trajectories which have a match;

- –

- ID F1-score (IDF1): The harmonic mean of IDP and IDR.

SORT Parameter Optimization

Our implementation of SORT uses three standard parameters:

- frames, which determines how long a reconstructed track can exist without a match before being deleted from the list of current reconstructed track;

- , which requires a track to have a minimum number of consecutive matches before being confirmed;

- , which sets the minimum IoU needed for a detection to be associated with a track;

and a custom parameter to mitigate the intermittent lack of YOLOv5 detections:

- , which is a multiplicative factor to scale all bounding box widths and heights proportionally before running SORT.

We confirm that the values we used for and are optimal or close to optimal, balancing tracking accuracy and efficiency in our specific application.

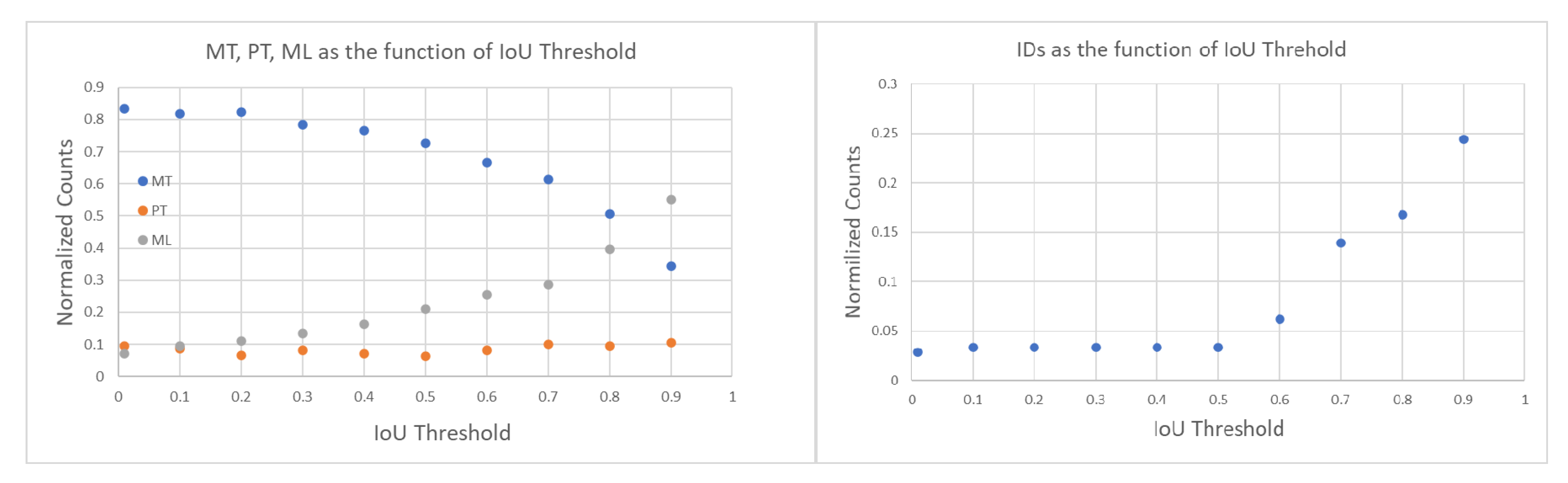

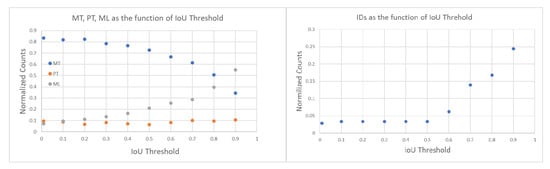

First, we tuned the by varying it from 0.01 to 0.90 and computing both tracking errors and track quality metrics. The right of Figure 12 shows the number of identity switches (IDSs) divided by the total number of ground truth trajectories, across different IoU thresholds. As the threshold increases from 0.01 to 0.90, a rise in identity switches was observed, particularly at IoU thresholds of 0.60 and 0.90, where the normalized IDS peaks at 0.06 and 0.24, respectively. Lower thresholds (≤0.30) exhibited far fewer identity switches, indicating fewer instances where objects were incorrectly re-identified as new objects.

Figure 12.

(Left): Track quality metrics MT, PT, and ML as a function of IoU threshold; values are normalized by the total number of ground truth trajectories (209) and together add up to 1. Higher values of MT (mostly tracked) are better. (Right): IDSs normalized by the total number of ground truth trajectories (209) as a function of IoU threshold. A lower number of IDSs (track identity switches) is better.

The relationships between IoU thresholds and the tracking quality metrics MT, PT, and ML are shown on the left side of Figure 12. The number of ground truth trajectories remains constant at 209. The MT fraction is highest at low IoU thresholds (0.01–0.30) and worsens as the threshold increases. At thresholds ≥0.4, MT begins to drop monotonically, indicating that at higher IoU thresholds, fewer ground truth objects are being correctly tracked throughout their entire trajectory. The PT fraction remains relatively stable across all thresholds, with values fluctuating between 0.06 and 0.10, suggesting that partial tracking performance is not influenced by the IoU threshold. In contrast, the ML fraction increases monotonically with higher IoU thresholds, from a minimum of 0.07 at an IoU of 0.01 to a maximum of 0.55 at an IoU of 0.90.

The results of these two track quality analyses suggests that an IoU threshold around 0.3 provides the optimal balance between minimizing identity switches (low IDSs) and maintaining accurate tracking (high MT), as it results in normalized IDSs of 0.03, MT of 0.79, ML of 0.12. In contrast, higher IoU thresholds (≥0.8) lead to excessive re-identification errors, while lower IoU thresholds (≤0.2) risk over-tracking due to lenient matching criteria between the Kalman filter prediction and the ground truth bounding box. In all the following studies, we use = 0.3.

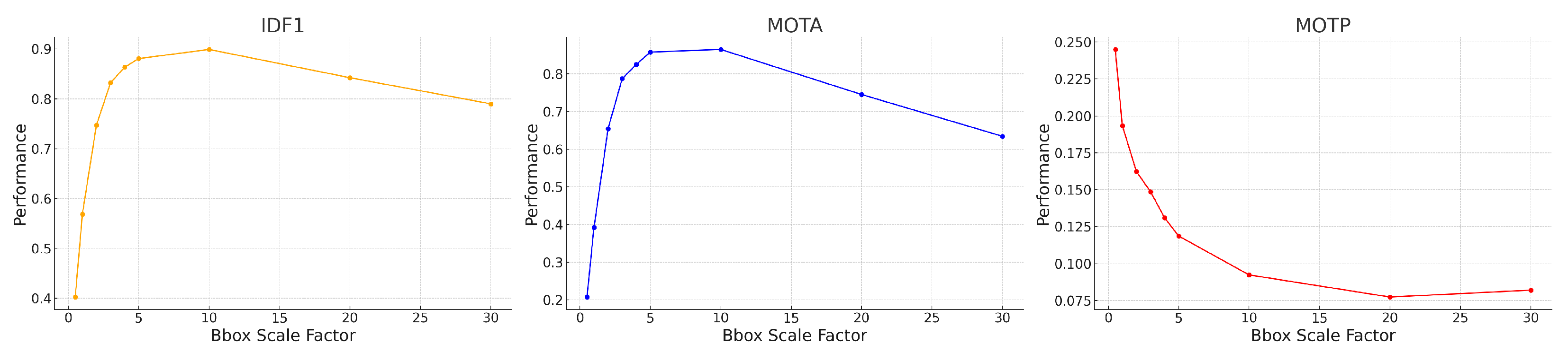

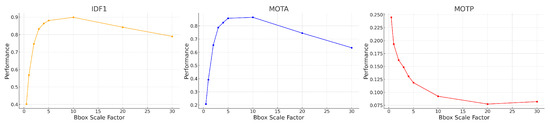

In our implementation of SORT, instead of tuning the default parameters of the Kalman filter, we artificially increase the bounding box (BBox) size by multiplying both the height and width by a scale factor. This adjustment addresses the challenge of tracking objects with varying bounding box size, such as objects moving towards or away from the camera. Initially we picked a scale factor of 4 based on visual inspection, but the synthetic video dataset enables us to check that this choice is close to optimal. Figure 13 shows the MOTA, IDF1, and MOTP metrics as a function of the BBox scale factor, which ranges from 0.5 to 30.0. MOTA in the middle of Figure 13 improves rapidly at first, peaking around a BBox scale factor of 5–10. The IDF1 graph on the left measures the tradeoff between identity precision and recall for tracking. We see a sharp rise in performance initially, with a peak around a scale factor of 5–10. Beyond a scale factor of 10, the IDF1 metric declines. The MOTP graph on the right shows that as the BBox scale factor increases, the MOTP decreases. The smallest MOTP values are observed at a scale factor of 10–20. This confirms that our original, heuristic choice for the BBox scale factor of 4 is not too far from optimal. Overall it results in a 40% increase in the number of correctly matched tracks, compared to a BBox scale factor of 1. For all the following studies, we use a BBox scale factor equal to 4.

Figure 13.

MOTA, IDF1, and MOTP tracking performance metrics for our SORT implementation on synthetic trajectories, as a function of the BBox scale factor.

Benchmark on the Synthetic Video Dataset

With these parameters in our SORT implementation, we also analyzed performance on the synthetic trajectories as a function of trajectory type: straight, curvy, and piecewise. The advantage of synthetic trajectories is that we control and can isolate the trajectory characteristics influencing the tracking performance.

The results in Table 2 show that across all three types of trajectories, we find an MOTA of 0.87. It also demonstrates that SORT exhibits strong identification precision (IDP = 95%) and high object recall (Rcll = 90%) across synthetic data, while maintaining a high tracking accuracy, with MOTA = 0.87. However, a moderate level of fragmentation (FM = 935) suggests that identity switches and interruptions occurred during the tracking of objects, likely due to the challenges posed by rapid or unpredictable object movements in the synthetic environment, specifically for curved trajectories. The near-perfect precision (Prcn = 98%) indicates that the model had very few false positives. We investigate further the tracking performance for three types of trajectories, and as seen in Table 2, the model’s performance varies depending on the nature of the object trajectories. For the 604 straight trajectories, the model achieved its highest overall performance, reflected by an IDF1 score of 94% and an MOTA of 0.90, due to the simplicity of tracking linear motion. The precision and recall metrics for straight trajectories are also high, with Prcn = 98% and Rcll = 92%. These results suggest that the model efficiently tracks objects following predictable, straightforward paths, minimizing identity switches (IDSs = 15) and fragmentation (FM = 202). For piecewise trajectories, which involve sudden changes in object direction, the algorithm performs better than on curvy trajectories, with an IDF1 of 91% and MOTA of 0.90. The slight improvement compared to curvy trajectories is likely due to the model’s ability to adjust more easily to segmented motion changes rather than continuous curving movements. However, the number of identity switches (IDSs = 149) and fragmentation events (FM = 353) is noticeably higher for piecewise trajectories, reflecting the difficulty the model faces in consistently associating object identities across sudden directional changes.

Table 2.

SORT tracking performance metrics for different trajectory types from the synthetic video dataset.

3. Commissioning Results

In this section, we focus on the Dalek as a whole system: its eight IR cameras and the pipeline from data to reconstruction of aerial events. We present basic commissioning checks on the recorded dataset and corresponding reconstructed quantities such as individual frame-by-frame detections or object trajectories. After these, we further characterize the performance of the Dalek and the detection pipeline as a function of atmospheric conditions and object characteristics using two distinct datasets: an ADS-B-derived dataset, which focuses on airplanes in real-world data, and a synthetic dataset.

3.1. Basic Checks on Recorded Dataset

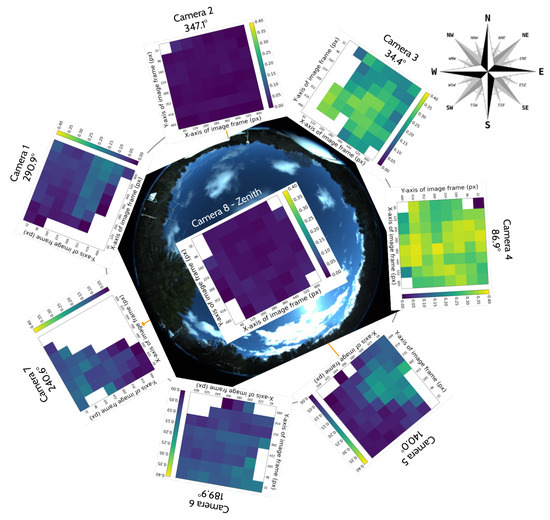

3.1.1. Environment Effects on Spatial Distribution of Detection Counts per Camera

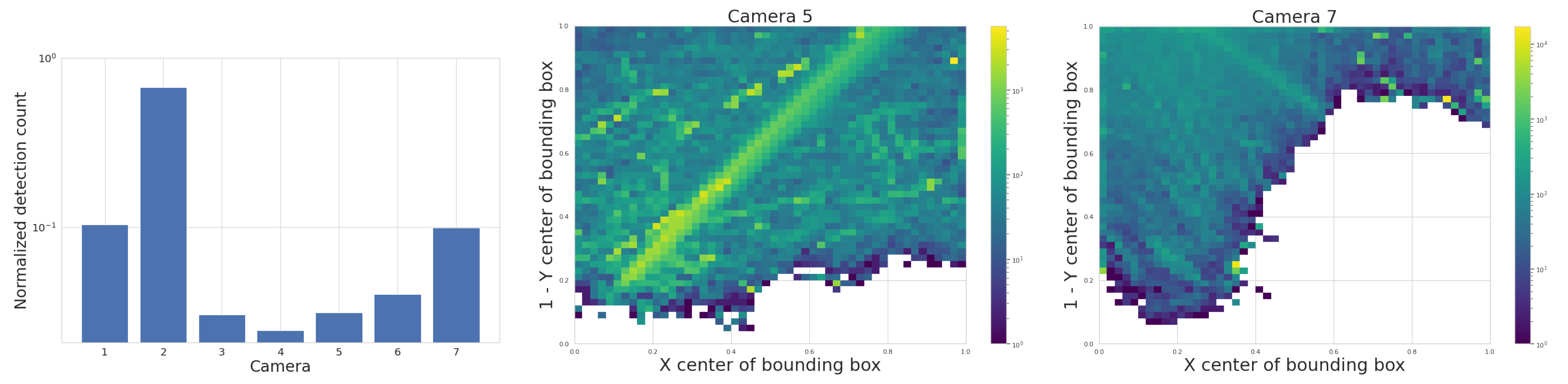

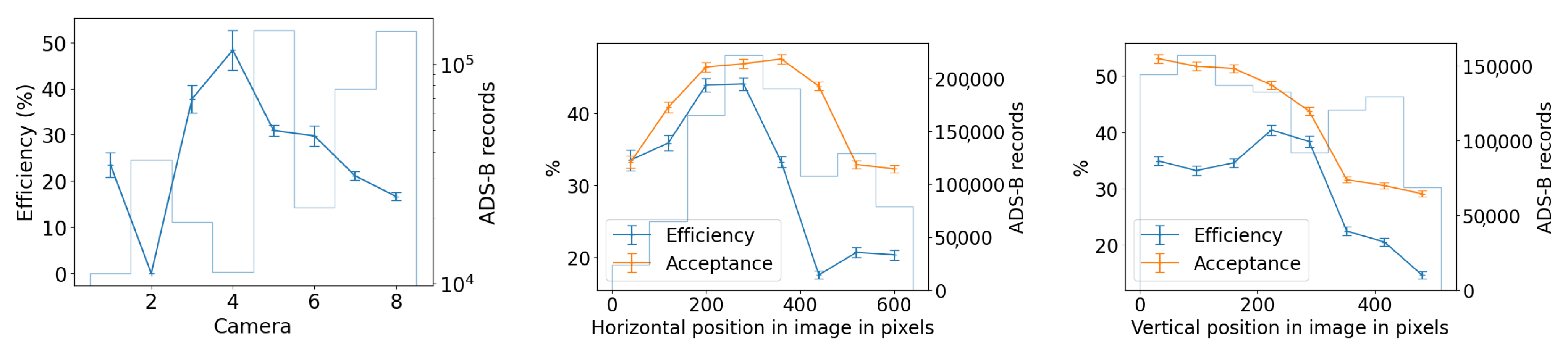

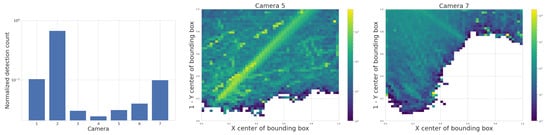

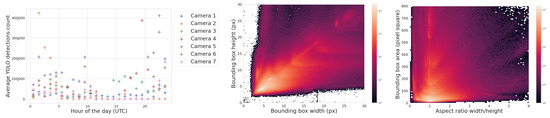

We start with the detection counts per camera, and attempt to correlate any long-term spatial inhomogeneities with known conditions at the observatory site. We check each camera for its spatial distribution of YOLO detections to rule out any potential bias. ADS-B historical records confirm that cameras 5 and 7 capture airplane high-traffic routes. This is shown in the center of Figure 14, where a commercial airline approach route to a major airport is visible when plotting purely detection counts. We also find that cameras 1 and 4 often capture the Moon at night. which triggers a detection by YOLOv5. Additionally, detections caused by birds’ preferential perches or nests have small spatial signatures at the edges of the treeline. New leaves and growth of the trees beyond the treeline image mask that we utilize may trigger an unusually high count of detections in certain months. Some spurious points may be due to dust on the lens of the Boson camera or imperfections in the coating of the germanium windows. Blowing leaves and the edges of clouds are a source of many detections, but we expect them to be randomly distributed over time scales of months. And finally, the cameras regularly display a green square in the top right corner of the image during the automatic flat-field calibration, which can be detected by YOLO and shows up as an unusually high bin in this histogram, clearly visible for camera 5, Figure 14 (center image).

Figure 14.

(Left): YOLO detection count per camera, first divided by the camera’s visible sky area and total recordings count, and then normalized across cameras so all values sum up to 1. This histogram includes all detections of any object from a subset of the five months of commissioning data. Camera 8 was offline during this interval. (Middle and Right): 2D histograms showing examples of spatial distribution of YOLO detections with confidence score > 0.5, for the month of May 2024, for camera 5 (SE) and 7 (SW), respectively. A regular commercial aircraft route is clearly visible for camera 5 (center).

3.1.2. Cross-Camera Check

We check cross-camera variations of the detection counts by normalizing the detection count by area of the sky above the treeline, and by total recorded time; some cameras pointing north are constantly in recording mode with their sunshades open, while others alternate between recording and pause mode, sunshade open and closed, depending on the sun position in the sky. Figure 14 shows on the left that the average normalized detection count is not uniform across cameras. We expect that air traffic routes or the Moon, mentioned previously, can bias this metric for certain cameras.

3.1.3. Bounding Box Properties

The middle of Figure 15 is a histogram of YOLOv5’s detection bounding box width versus height and highlights a potentially useful feature, where distinctly different populations of detections appear as elongated clusters. The histogram represents all detections, not just airplanes, and currently we can only speculate about which object falls into which cluster. For example, the population cluster close to a bounding box aspect ratio of 1 would correlate with the regular Moon detections. Clustering also appears in the right-hand side of Figure 15, which compares bounding box aspect ratio with its area. The variety in bounding box size and aspect ratio suggests that they may be useful features in the future to help classify objects and search for outlier populations.

Figure 15.

(Left): Average hourly detection count for different cameras throughout the day. (Middle): A 2D histogram of the detections’ bounding box width and height, showing pronounced clustering that may be useful for object classification. (Right): A 2D histogram of the detections’ bounding box area and aspect ratio, again with strong clustering. The histograms include all detections of any object from a subset of the five months of commissioning data.

After these basic checks on the Dalek detections, we further studied the performance of the Dalek and the detection pipeline, including as a function of atmospheric conditions and object characteristics, on two distinct datasets: an ADS-B-derived dataset, which focuses on airplanes in real-world data, and a synthetic dataset.

3.2. Performance Evaluations Using ADS-B-Equipped Aircraft

In this section, we evaluate the physical performance envelope of the full Dalek system using data related to the many ADS-B-equipped airplanes (Section 2.2.3) that fly over our development site. We use the latitude, longitude, and altitude from an ADS-B-derived real-world dataset as the aircraft’s true position, project these onto each 2D image frame, and compare these true points to the objects predicted by YOLOv5’s detection algorithm.

3.2.1. Evaluation Methodology

For this analysis we define sets of ADS-B entries using the following sequential selection criteria:

- Within a square of side 10 km centered on the observatory;

- Within the field-of-view of at least one camera;

- Above the treeline of the camera from which they should be visible;

- At a time when there is a recording by the relevant camera;

- Are detected by YOLOv5.

For each selected ADS-B record, we use the camera’s extrinsic and intrinsic calibration to translate the 3D coordinates of the aircraft to 2D coordinates in the frame of the camera images. We match ADS-B records with existing bounding box detections using a temporal threshold of 5 seconds and a spatial threshold of 30 pixels. These thresholds are generous to account for the imprecision of the recording timestamps in the time period we selected and the potential error in the reported ADS-B positions.

To define performance metrics, we first identify the number of aircraft within range of our site (criterion 1) and define these as in range. From this set, we identify those that were in the right location to have been viewed by the Dalek, that is, within the effective field of view of at least one camera (criteria 2 and 3). The set of aircraft that meet the first three criteria outlined above is defined as viewable, and it is independent of airplane size, aspect, distance (under 10 km), ambient IR conditions, cloud cover, and camera uptime. We then identify the subset of these viewable aircraft who were also in the camera’s recording window (criterion 4), that is, they were in the right place and at the right time. The set of aircraft that meet the first four criteria is defined as recorded.

The acceptance is related to instrument uptime and is defined here as the number of recorded aircraft divided by the number of viewable aircraft. In other words, it is the fraction of viewable aircraft that the Dalek managed to record; these aircraft were “accepted” into the analysis pipeline. The set of image frames with recorded ADS-B aircraft is then compared with YOLOv5 detections for the same frames (criterion 5). Our final set consists of aircraft matching the time and place of objects detected by YOLOv5, meeting all five criteria. We define this set as detected.

The efficiency is related to YOLOv5’s performance, and is defined here as the number of detected aircraft divided by the number of recorded aircraft. In other words, it is the fraction of aircraft meeting the first four selection criteria that are also detected by the YOLOv5 object detection stage. If our pipeline is efficient, it converts most of the objects that enter the pipeline into detections, with a minimum of loss. This metric can depend on aircraft size, aspect, distance, and atmospheric conditions. If our Dalek system pipeline and site location were perfect, all aircraft within our site range limit would be in our detected set. Our pipeline is not perfect, and these sequential metrics help us find areas on which to focus improvements. We now examine these metrics.

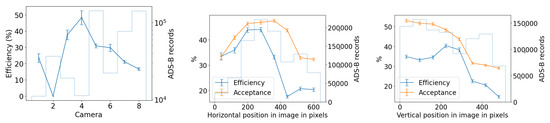

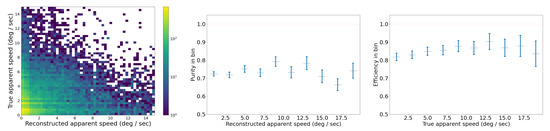

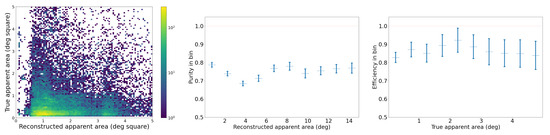

3.2.2. Performance Results

Overall, we counted 27,467 airplanes that flew within a radius of 10 km centered on the observatory, of which 8550 met the four selection criteria outlined above, and, of these, 3678 were matched in at least one frame to a YOLOv5 detection bounding box, meeting criteria 1–5. We use this number to estimate the fraction of all objects reconstructed during the commissioning period that is made up of aircraft. Taking the total count of reconstructed object tracks from Section 3.4.1, which includes aircraft, birds, leaves, clouds, etc., we estimate that ∼0.7% of all reconstructed object tracks were associated with ADS-B-equipped aircraft.

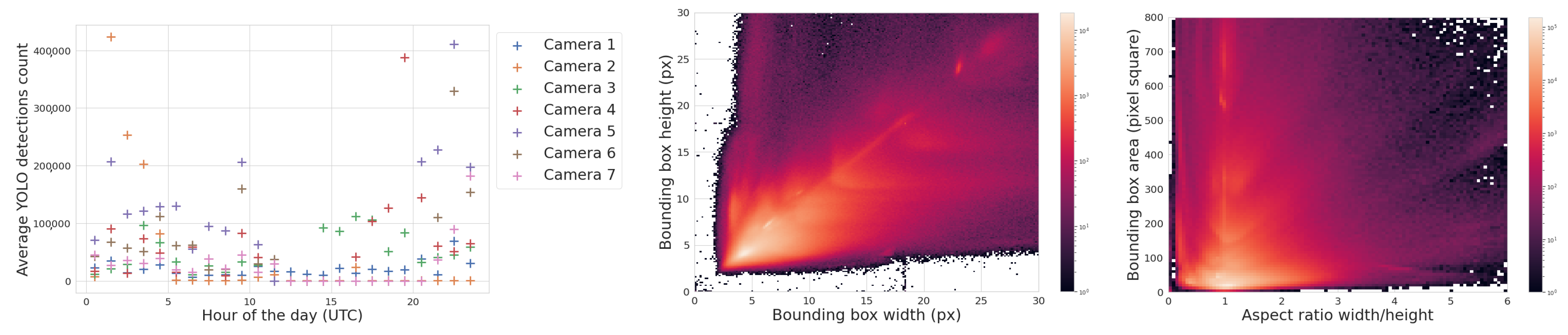

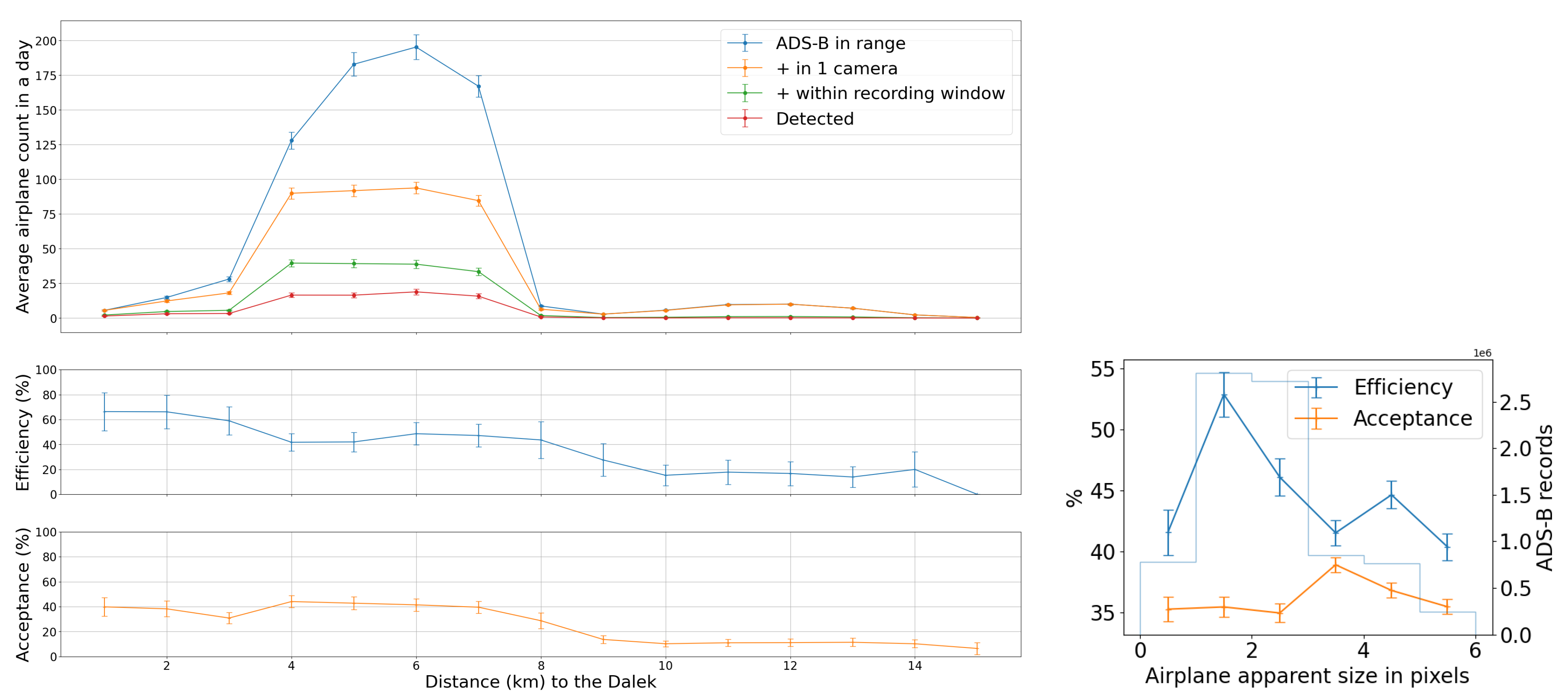

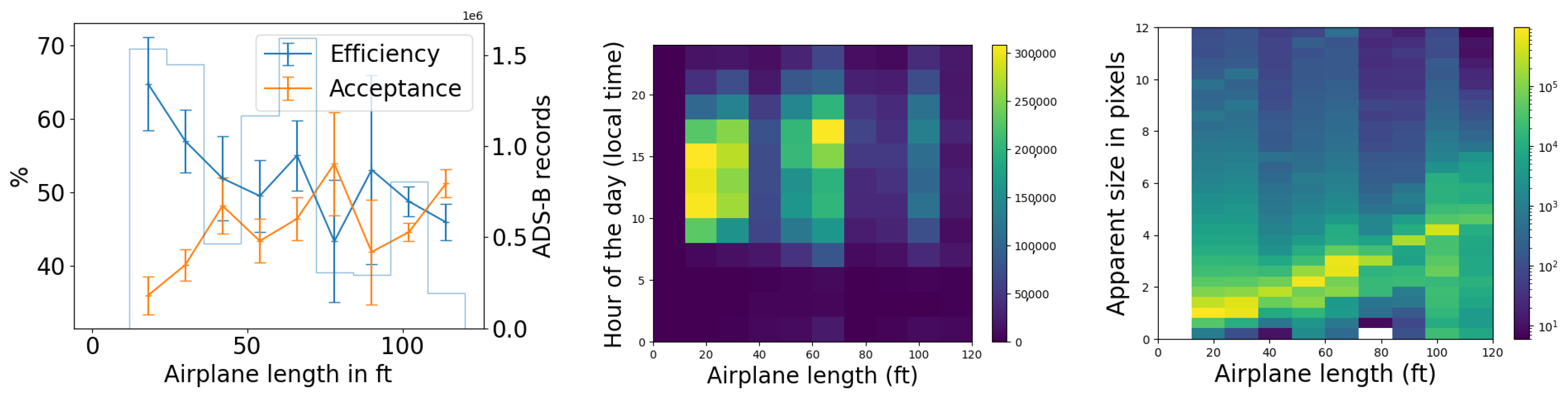

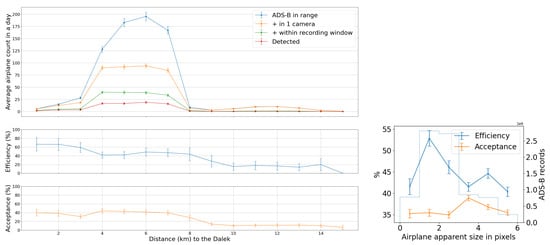

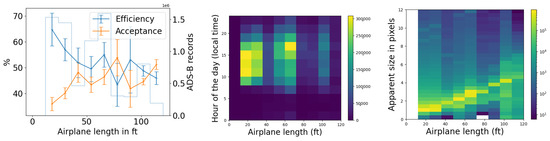

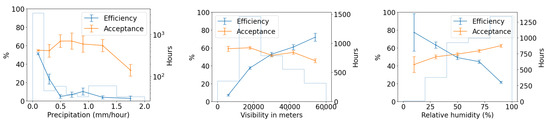

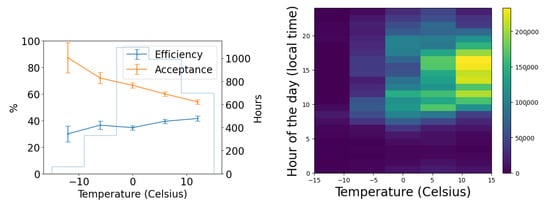

We use the five months of data from the commissioning period to examine our ability to reconstruct aerial objects such as aircraft from their physical parameters using only our Dalek system. We look at the sequence of processed information, from all the aircraft that passed overhead, to the detections that come out of YOLOv5. We can quantify the falloff from the average daily count of ADS-B airplanes within range of our site, to those also within the effective field of view of at least one camera, to those also within that camera’s recording time window, and finally to those also detected by YOLOv5. For example, the upper left panel of Figure 16 shows this progression in average daily counts. For a given aircraft, we bin its ADS-B records according to their distance to the site, before counting unique aircraft in each distance bin. For example, we expect, on average, within ∼10 km of the site, ∼210 airplanes to be viewable in a day, of which ∼76 pass by within a recording window and ∼31 are detected. At a distance of 10 km or less, the mean efficiency is 43%, the rest of the recorded airplanes at this distance are not detected by YOLOv5. The detection efficiency decreases with increasing distance of the airplane from the observatory. We also see from examining the ADS-B data that there are flight lanes to regional airports within 2–5 km horizontal distance to the observatory, which, accounting for typical aircraft altitudes, correlates with the peak of aircraft in range being around ∼5–7 km.

Figure 16.

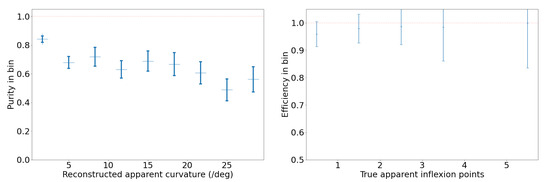

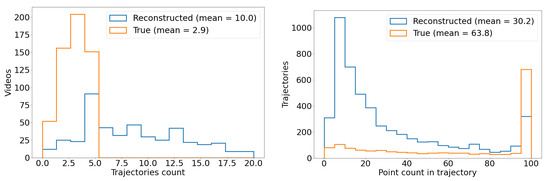

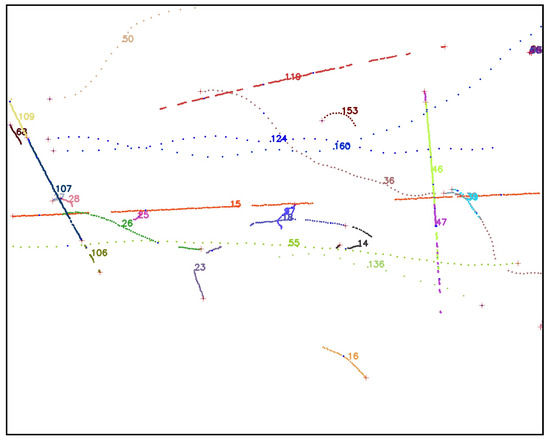

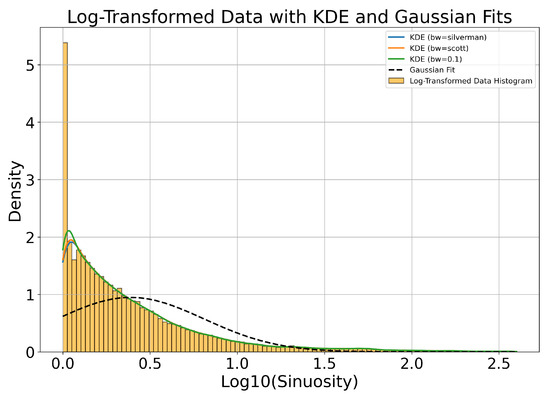

(Upper left): For the commissioning period, average daily count vs. distance (km) from observatory of ADS-B-equipped airplanes in range of site (criterion 1: in range); of those, also within the effective field of view of at least one camera (criteria 1–3: viewable); of those, also within that camera’s recording time window (criteria 1–4: recorded); and, of those, also detected by YOLOv5 (criteria 1–5: detected). (Middle left): The number detected divided by the number recorded (efficiency) vs. distance (km). (Lower left): The number recorded divided by the number viewable (acceptance) vs. distance (km). (Right): Acceptance and efficiency as functions of apparent airplane size. The histogram shows the number of ADS-B records that contributed to each point on the graph. Error bars are computed by propagating statistical errors from all ADS-B counts, assuming Poisson distributions.