Front-to-Side Hard and Soft Biometrics for Augmented Zero-Shot Side Face Recognition

Abstract

1. Introduction

- Proposing a novel set of face soft biometrics that can be observed invariantly from both the front and side face views.

- Fusing the proposed soft features with existing face hard biometrics for effective zero-shot front-to-side face recognition.

- Adopting a suitable feature-level fusion method to integrate the hard and proposed soft traits effectively.

- Analyzing the proposed biometric traits to explore their interactions and correlations across the front and side viewpoints and to identify the most effective ones, and evaluating and comparing the performance of the proposed approach in augmenting zero-shot side face recognition.

2. Related Works

2.1. Traditional Face Recognition Approaches

2.2. Face Recognition Using Soft Biometrics

2.3. Biometric Feature-Level Fusion for Face Recognition

3. Proposed Methodology

3.1. Face Image Dataset

3.2. Face Soft Biometric Traits

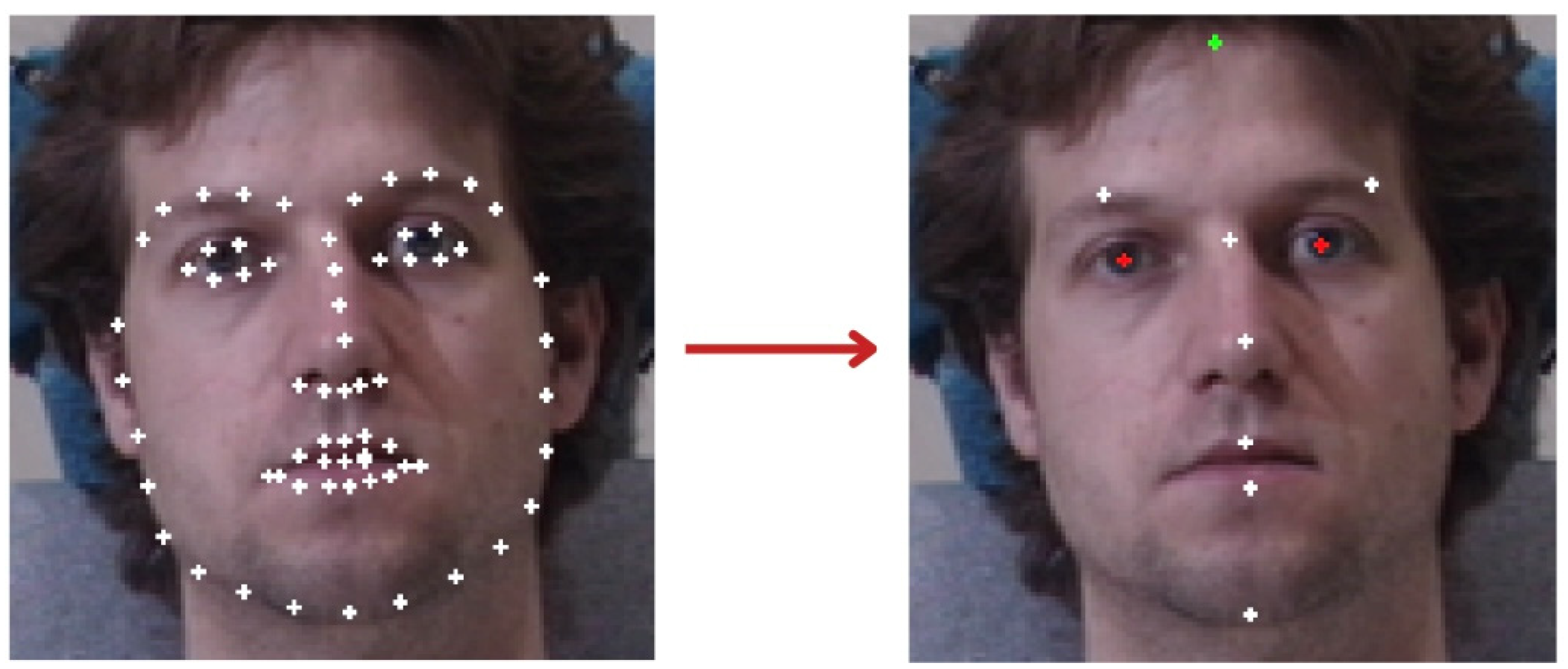

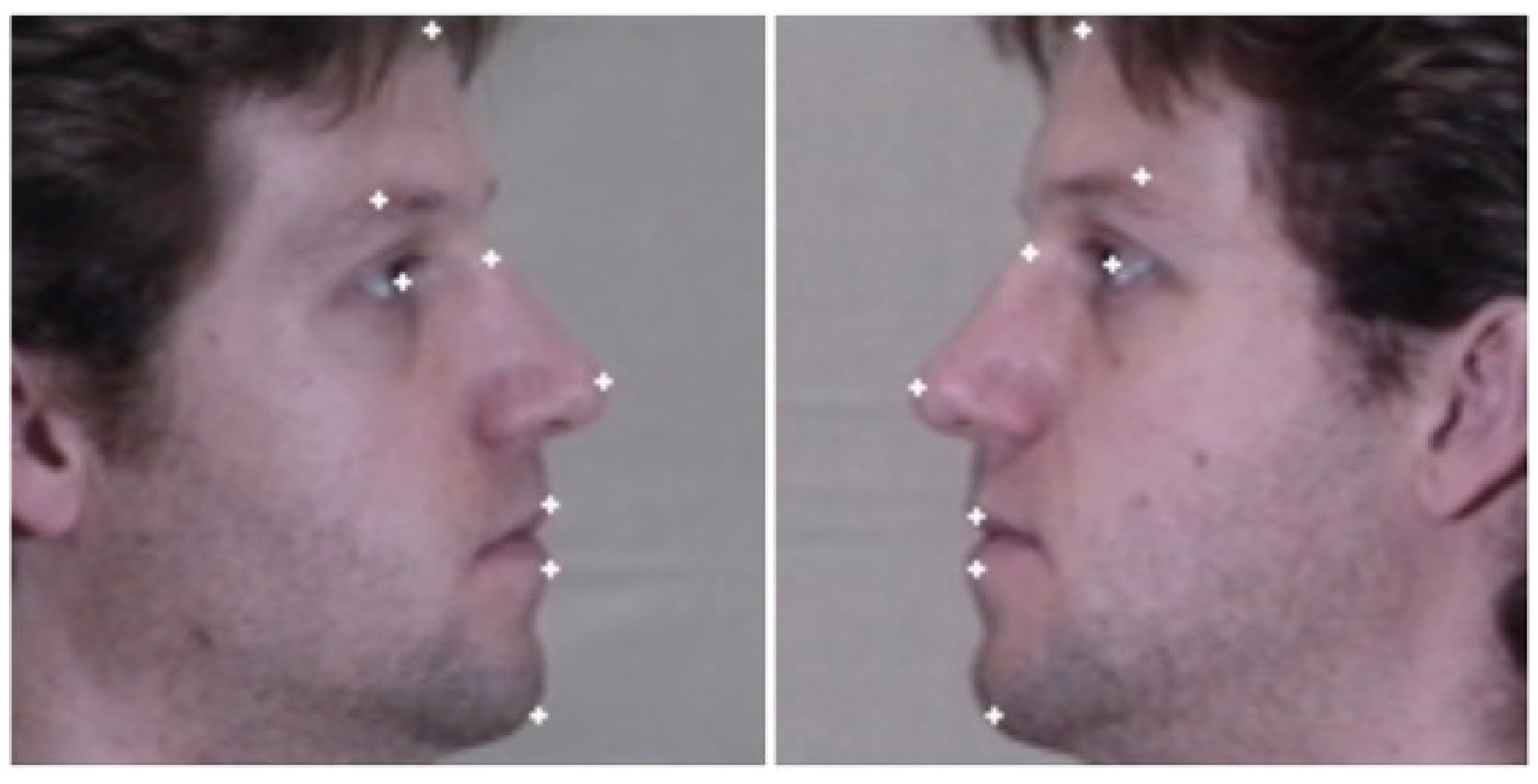

3.2.1. Face Landmarks Detection

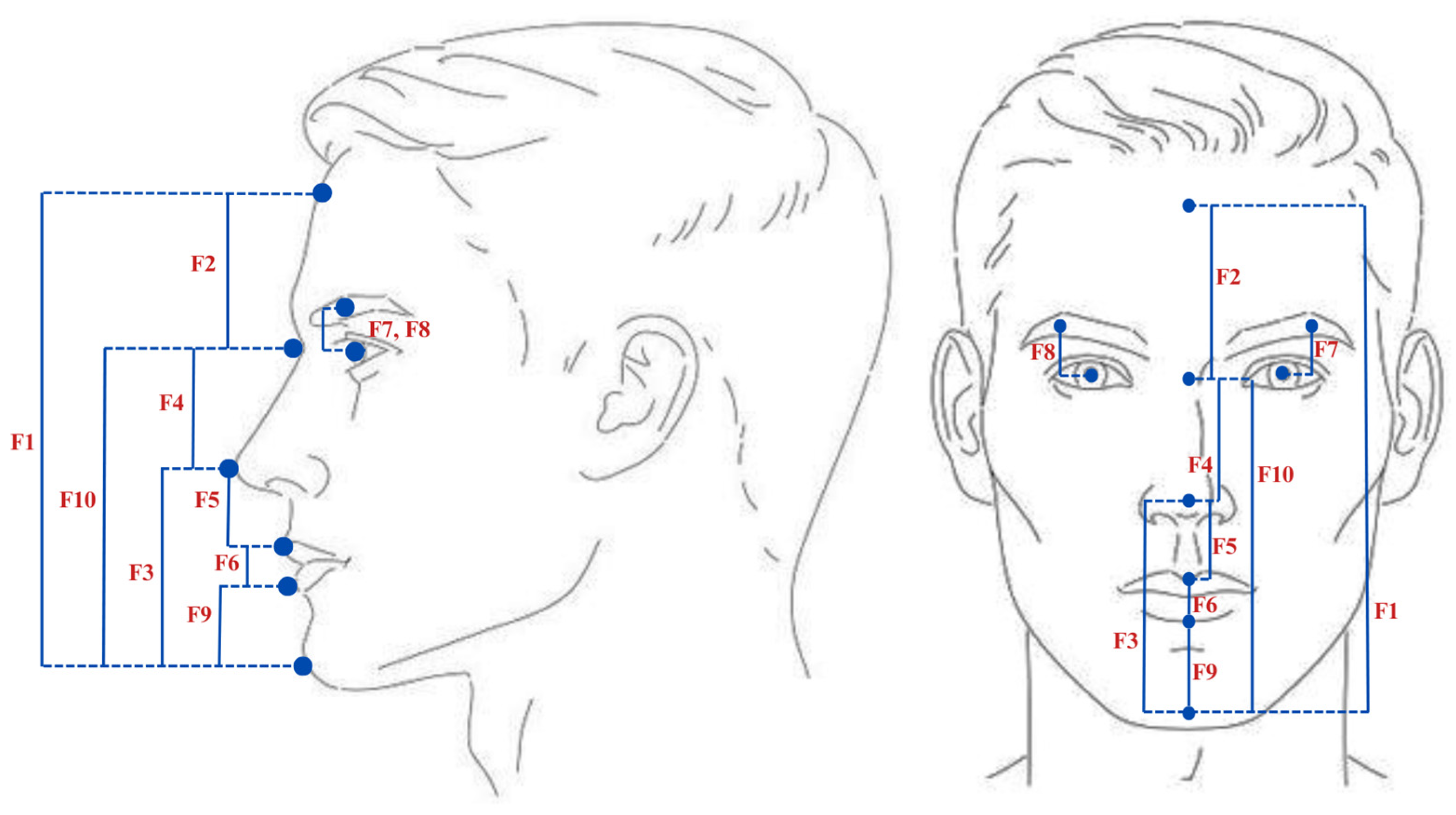

3.2.2. Face Soft Traits

3.2.3. Analysis of Face Soft Traits

- Analysis of variance (ANOVA):

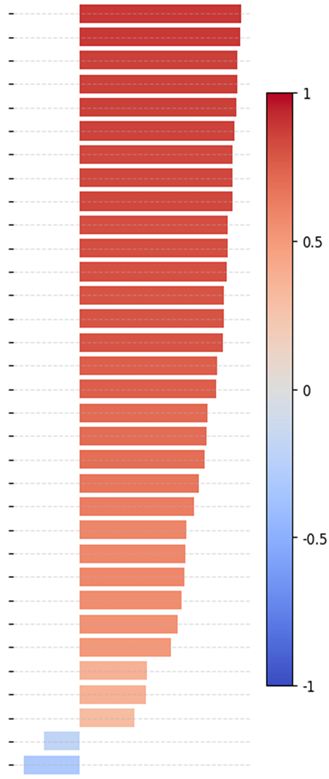

- Pearson’s r correlation:

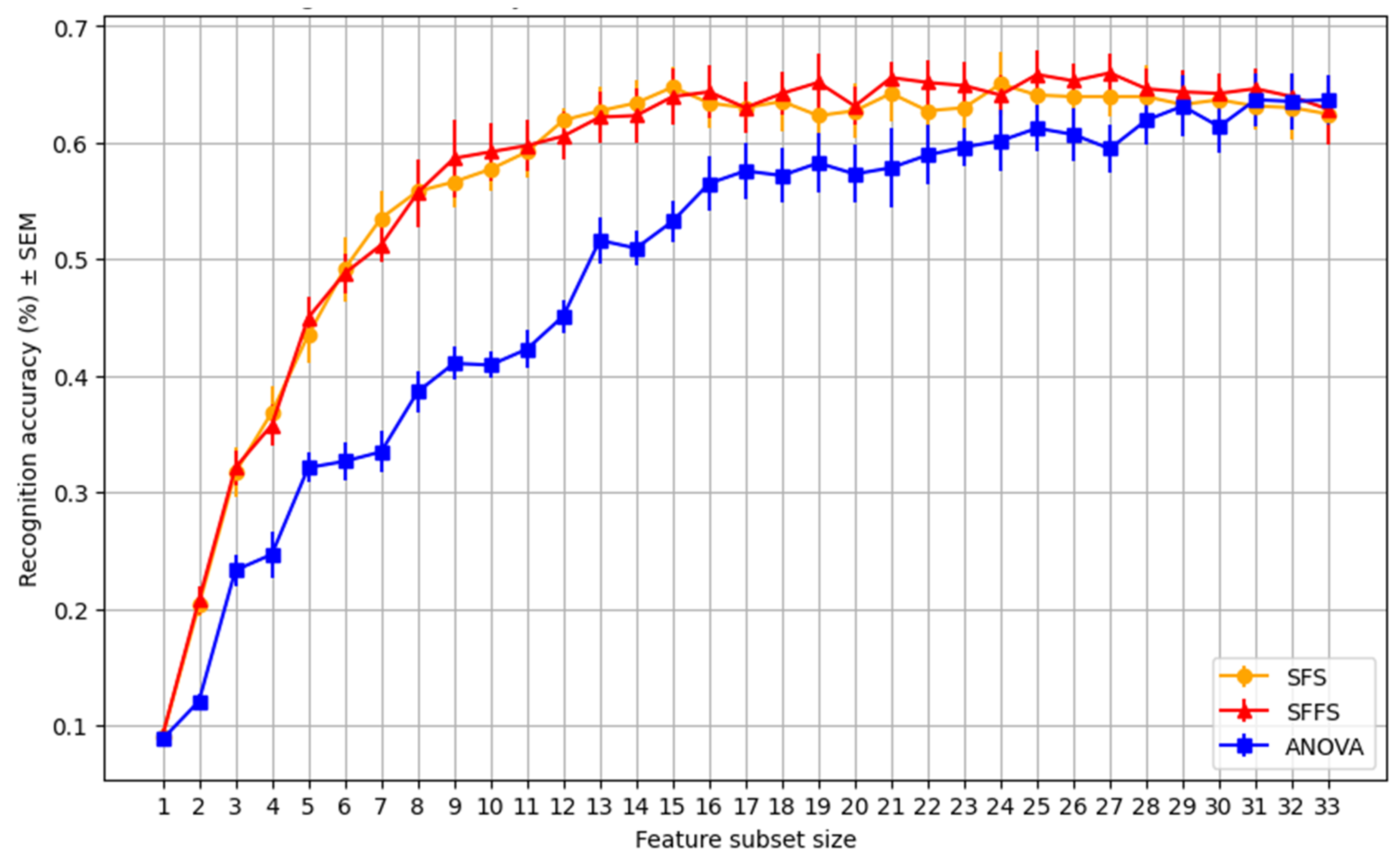

- Feature subset selection:
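The two statistics named above can be illustrated with a minimal pure-Python sketch. It assumes that each soft trait is a numeric measurement per image, grouped by subject for ANOVA, and that Pearson's r is computed over paired front-view and side-view values of the same trait. The function names `anova_f_ratio` and `pearson_r` are hypothetical and are not the authors' code. A large F-ratio means a trait varies much more between subjects than within a subject, and an r close to 1 means the front and side measurements of a trait agree.

```python
from statistics import mean


def anova_f_ratio(groups):
    """One-way ANOVA F-ratio for one trait.

    groups: one list of measurements per subject (assumed data layout).
    """
    k = len(groups)                          # number of subjects (classes)
    n = sum(len(g) for g in groups)          # total number of measurements
    group_means = [mean(g) for g in groups]
    grand_mean = mean(x for g in groups for x in g)
    # Between-subject and within-subject sums of squares
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    # Mean squares use k - 1 and n - k degrees of freedom
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def pearson_r(xs, ys):
    """Pearson correlation between paired values, e.g. front vs. side measurements."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Toy data: one trait measured on three images for each of two subjects
print(anova_f_ratio([[1, 2, 3], [4, 5, 6]]))  # 13.5
# Toy data: the same trait measured from the front and side views of four subjects
print(pearson_r([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.9]))
```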

3.2.4. Normalization of Soft Traits

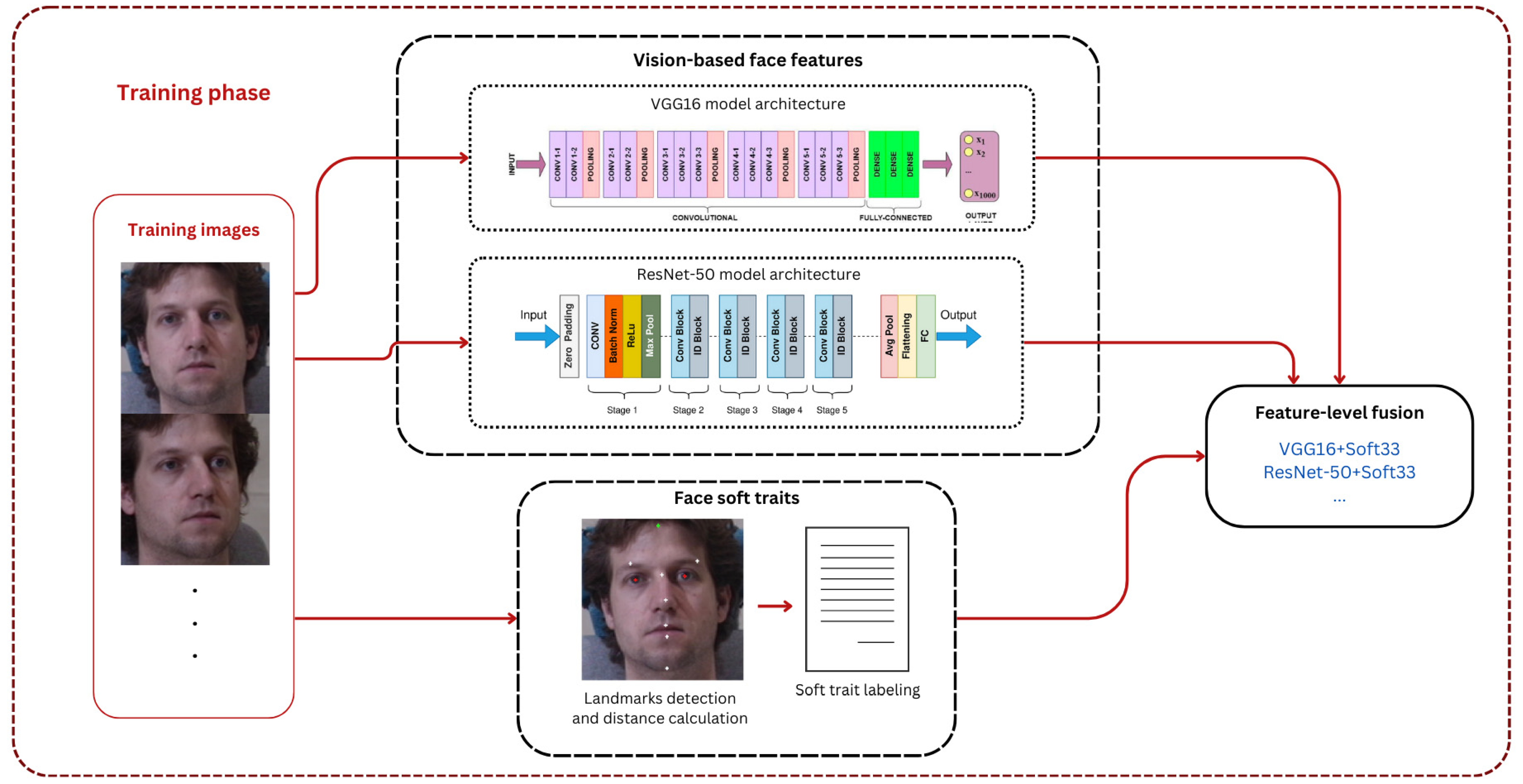
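As a hedged illustration of this normalization step, the sketch below implements two common schemes, min-max and z-score normalization, fitting the statistics on training values and reusing them on test values so that no test information leaks into the scaling. Which scheme the authors actually apply, and the helper names used here, are assumptions.

```python
from statistics import mean, pstdev


def fit_min_max(train):
    """Return (min, max) of the training values for one trait."""
    return min(train), max(train)


def apply_min_max(values, lo, hi):
    # Guard against a constant trait (zero range)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values]


def fit_z_score(train):
    """Return (mean, population standard deviation) of the training values."""
    return mean(train), pstdev(train)


def apply_z_score(values, mu, sigma):
    # Guard against zero variance
    sigma = sigma or 1.0
    return [(v - mu) / sigma for v in values]


train = [2.0, 4.0, 6.0]
lo, hi = fit_min_max(train)
print(apply_min_max([2.0, 4.0, 6.0, 7.0], lo, hi))  # [0.0, 0.5, 1.0, 1.25]
mu, sigma = fit_z_score(train)
print(apply_z_score(train, mu, sigma))
```

Note that test values outside the training range fall outside [0, 1] under min-max scaling, which is one reason z-score normalization is often preferred when the test distribution may shift.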

3.3. Vision-Based Face Traits Extraction

- VGG-16:

- ResNet-50:

3.4. Biometric Trait Fusion

3.5. SVM-Based Classification

4. Experiments

4.1. Evaluation Metrics

- Accuracy: the proportion of correctly classified instances among all instances.

- Precision: the proportion of instances predicted as positive that are actually positive (positive predictive value).

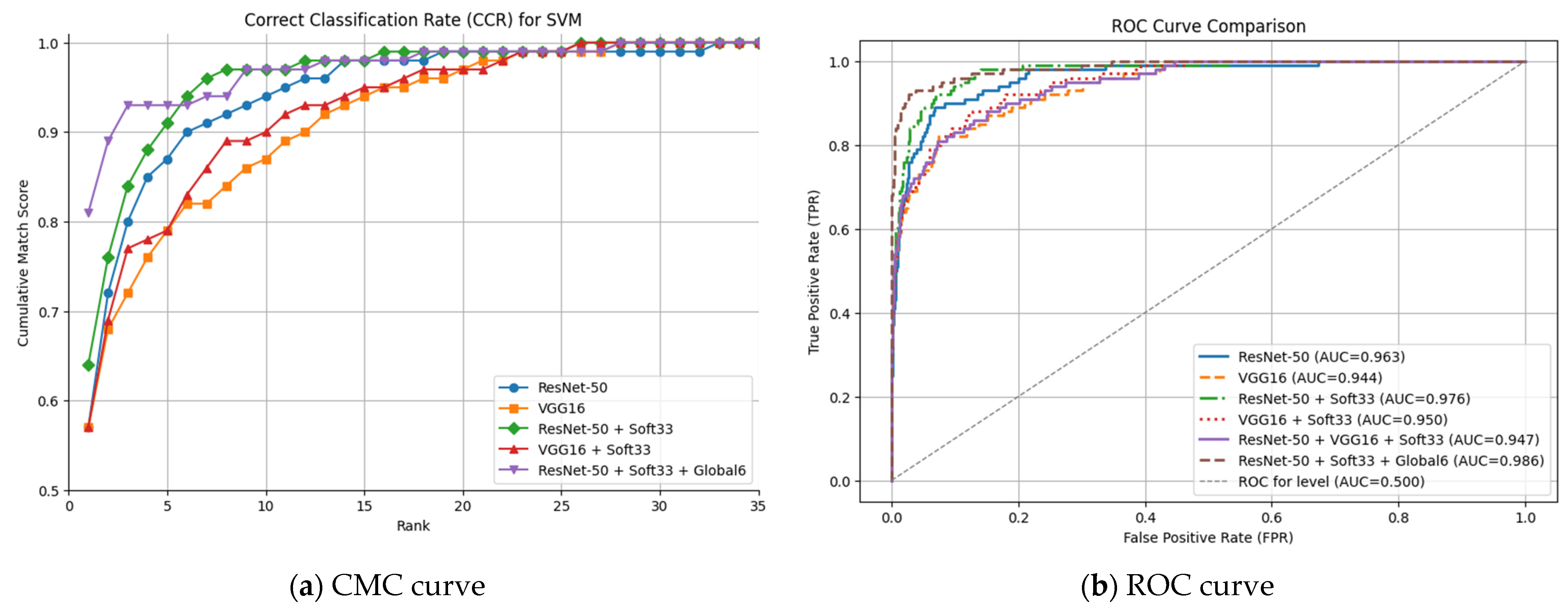

- Cumulative match characteristic (CMC): a metric used to assess identification (one-to-many) systems by their ability to rank the correct identity highly. For each biometric sample, the registered candidates in the dataset are ranked by their matching scores, and the CMC reports the proportion of samples whose correct identity appears within the top k ranks [61].

- Receiver operating characteristic (ROC): a graphical representation of performance that shows how the trade-off between the true positive rate (TPR) and the false positive rate (FPR) changes as the decision threshold is gradually varied [62].

- Equal error rate (EER): the operating point at which the false rejection rate (FRR) equals the false acceptance rate (FAR), that is, the threshold at which the likelihood of the system incorrectly accepting a non-matching person equals the likelihood of incorrectly rejecting a matching person. EER is an essential measure in biometric security systems because it reflects the balance between accessibility and security; lower values indicate better performance.

- Area under the curve (AUC): the area under the ROC curve, which measures a model's ability to distinguish between two classes. It ranges from 0 to 1, and higher values indicate better performance.
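The identification and verification metrics above can be computed directly from match scores. The following pure-Python sketch assumes a probe-by-gallery similarity matrix (higher means more similar) for the CMC, and separate lists of genuine and impostor scores for AUC and EER. The EER is approximated at the observed threshold where FAR and FRR are closest, and the AUC is computed through its equivalence to the probability that a randomly chosen genuine score exceeds a randomly chosen impostor score (ties count one half). All function names are hypothetical.

```python
def cmc_curve(scores, true_ids, max_rank):
    """CMC values for ranks 1..max_rank.

    scores[i][j]: similarity of probe i to gallery identity j (higher = better).
    true_ids[i]: gallery index of probe i's correct identity.
    """
    hits = [0] * max_rank
    for row, t in zip(scores, true_ids):
        # Rank of the true match = 1 + number of gallery scores strictly above it
        rank = 1 + sum(1 for s in row if s > row[t])
        for k in range(rank - 1, max_rank):
            hits[k] += 1
    return [h / len(scores) for h in hits]


def auc(genuine, impostor):
    """ROC AUC as P(genuine score > impostor score), counting ties as one half."""
    wins = 0.0
    for g in genuine:
        for i in impostor:
            if g > i:
                wins += 1.0
            elif g == i:
                wins += 0.5
    return wins / (len(genuine) * len(impostor))


def eer(genuine, impostor):
    """Approximate EER at the observed threshold where FAR and FRR are closest."""
    best_gap, best_eer = float("inf"), None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(i >= t for i in impostor) / len(impostor)  # impostors accepted
        frr = sum(g < t for g in genuine) / len(genuine)     # genuines rejected
        if abs(far - frr) < best_gap:
            best_gap, best_eer = abs(far - frr), (far + frr) / 2
    return best_eer


genuine = [0.9, 0.6, 0.4]    # toy scores for matching pairs
impostor = [0.5, 0.3, 0.1]   # toy scores for non-matching pairs
print(auc(genuine, impostor))
print(eer(genuine, impostor))
print(cmc_curve([[0.9, 0.1, 0.2], [0.3, 0.2, 0.8], [0.5, 0.6, 0.4]], [0, 2, 0], 3))
```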

4.2. Experiments and Results

4.2.1. Hard and Soft Biometric Trait Fusion

4.2.2. Hard, Soft, Global-Soft Biometric Trait Fusion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fuad, M.T.H.; Fime, A.A.; Sikder, D.; Iftee, M.A.R.; Rabbi, J.; Al-rakhami, M.S.; Gumae, A.; Sen, O.; Fuad, M.; Islam, M.N. Recent Advances in Deep Learning Techniques for Face Recognition. IEEE Access 2021, 9, 99112–99142.

2. Bertillon, A.; McClaughry, R.W. Signaletic Instructions Including the Theory and Practice of Anthropometrical Identification; Werner Company: London, UK, 1896.

3. Beyond Mug Shots: Other Methods of Criminal Identification in the 19th Century. Available online: https://www.police.govt.nz/about-us/history/museum/exhibitions/suspicious-looking-19th-century-mug-shots/other-methods-criminal-identification (accessed on 12 November 2024).

4. El Kissi Ghalleb, A.; Sghaier, S.; Essoukri Ben Amara, N. Face Recognition Improvement Using Soft Biometrics. In Proceedings of the 10th International Multi-Conferences on Systems, Signals & Devices 2013 (SSD13), Hammamet, Tunisia, 18–21 March 2013; pp. 1–6.

5. Pal Singh, S. Profile Based Side-View Face Authentication Using Pose Variants: A Survey. IOSR J. Comput. Eng. 2016, 2, 43–48.

6. Ruan, S.; Tang, C.; Zhou, X.; Jin, Z.; Chen, S.; Wen, H.; Liu, H.; Tang, D. Multi-Pose Face Recognition Based on Deep Learning in Unconstrained Scene. Appl. Sci. 2020, 10, 4669.

7. Kakadiaris, I.A.; Abdelmunim, H.; Yang, W.; Theoharis, T. Profile-Based Face Recognition. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–8.

8. Deng, J.; Cheng, S.; Xue, N.; Zhou, Y.; Zafeiriou, S. UV-GAN: Adversarial Facial UV Map Completion for Pose-Invariant Face Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7093–7102.

9. Romeo, J.; Bourlai, T. Semi-Automatic Geometric Normalization of Profile Faces. In Proceedings of the 2019 European Intelligence and Security Informatics Conference (EISIC), Oulu, Finland, 26–27 November 2019; pp. 121–125.

10. Hangaragi, S.; Singh, T.; Neelima, N. Face Detection and Recognition Using Face Mesh and Deep Neural Network. Procedia Comput. Sci. 2023, 218, 741–749.

11. Taherkhani, F.; Talreja, V.; Dawson, J.; Valenti, M.C.; Nasrabadi, N.M. PF-cpGAN: Profile to Frontal Coupled GAN for Face Recognition in the Wild. In Proceedings of the 2020 IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 28 September–1 October 2020; pp. 1–10.

12. Cao, K.; Rong, Y.; Li, C.; Tang, X.; Loy, C.C. Pose-Robust Face Recognition via Deep Residual Equivariant Mapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

13. Santemiz, P.; Spreeuwers, L.J.; Veldhuis, R.N.J. Automatic Face Recognition for Home Safety Using Video-based Side-view Face Images. IET Biom. 2018, 7, 606–614.

14. Ghaffary, K.A. Profile-Based Face Recognition Using the Outline Curve of the Profile Silhouette. Artif. Intell. Tech. 2011, AIT, 38–43.

15. Sarwar, M.G.; Dey, A.; Das, A. Developing a LBPH-Based Face Recognition System for Visually Impaired People. In Proceedings of the 2021 1st International Conference on Artificial Intelligence and Data Analytics (CAIDA), Riyadh, Saudi Arabia, 6–7 April 2021; pp. 286–289.

16. Alamri, M.; Mahmoodi, S. Facial Profiles Recognition Using Comparative Facial Soft Biometrics. In Proceedings of the 2020 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 16–18 September 2020.

17. Dantcheva, A.; Dugelay, J.-L. Frontal-to-Side Face Re-Identification Based on Hair, Skin and Clothes Patches. In Proceedings of the 2011 8th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Klagenfurt, Austria, 30 August–2 September 2011; pp. 309–313.

18. Almudhahka, N.Y.; Nixon, M.S.; Hare, J.S. Semantic Face Signatures: Recognizing and Retrieving Faces by Verbal Descriptions. IEEE Trans. Inf. Forensics Secur. 2018, 13, 706–716.

19. Park, U.; Jain, A.K. Face Matching and Retrieval Using Soft Biometrics. IEEE Trans. Inf. Forensics Secur. 2010, 5, 406–415.

20. Nhat, H.T.M.; Hoang, V.T. Feature Fusion by Using LBP, HOG, GIST Descriptors and Canonical Correlation Analysis for Face Recognition. In Proceedings of the 2019 26th International Conference on Telecommunications (ICT), Hanoi, Vietnam, 8–10 April 2019; pp. 371–375.

21. AlFawwaz, B.M.; AL-Shatnawi, A.; Al-Saqqar, F.; Nusir, M.; Yaseen, H. Face Recognition System Based on the Multi-Resolution Singular Value Decomposition Fusion Technique. Int. J. Data Netw. Sci. 2022, 6, 1249–1260.

22. Walmiki, P.D. Improved Face Recognition Using Data Fusion. Available online: https://www.semanticscholar.org/paper/Improved-Face-Recognition-using-Data-Fusion-Walmiki-Mhamane/412af1896438165e5a0c0c9e837d5bee30717f4d (accessed on 13 November 2024).

23. Reddy, C.V.R.; Kishore, K.V.K.; Reddy, U.S.; Suneetha, M. Person Identification System Using Feature Level Fusion of Multi-Biometrics. In Proceedings of the 2016 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Chennai, India, 15–17 December 2016; pp. 1–6.

24. Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-PIE. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–8.

25. Multi_Pie. Available online: https://www.kaggle.com/datasets/aliates/multi-pie (accessed on 13 November 2024).

26. Wu, Y.; Ji, Q. Facial Landmark Detection: A Literature Survey. Int. J. Comput. Vis. 2019, 127, 115–142.

27. Xia, H.; Li, C. Face Recognition and Application of Film and Television Actors Based on Dlib. In Proceedings of the 2019 12th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 19–21 October 2019; pp. 1–6.

28. RectLabel. Available online: https://rectlabel.com/ (accessed on 12 November 2024).

29. Al-jassim, N.H.; Fathallah, Z.F.; Abdullah, N.M. Anthropometric measurements of human face in Basrah. Bas. J. Surg. 2014, 20, 29–40.

30. Farkas, L.G.; Katic, M.J.; Forrest, C.R. International Anthropometric Study of Facial Morphology in Various Ethnic Groups/Races. J. Craniofacial Surg. 2005, 16, 615–646.

31. Ran, L.; Zhang, X.; Hu, H.; Luo, H.; Liu, T. Comparison of Head and Face Anthropometric Characteristics Between Six Countries. In HCI International 2016 – Posters’ Extended Abstracts; Stephanidis, C., Ed.; Communications in Computer and Information Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 617, pp. 520–524. ISBN 978-3-319-40547-6.

32. Dhaliwal, J.; Wagner, J.; Leong, S.L.; Lim, C.H. Facial Anthropometric Measurements and Photographs – An Interdisciplinary Study. IEEE Access 2020, 8, 181998–182013.

33. Tome, P.; Vera-Rodriguez, R.; Fierrez, J.; Ortega-Garcia, J. Facial Soft Biometric Features for Forensic Face Recognition. Forensic Sci. Int. 2015, 257, 271–284.

34. Dagar, M.A.; Tech, M. Face Recognition Using Mahalanobis Distance in Matlab. 2018, Volume 5. Available online: https://www.semanticscholar.org/paper/FACE-RECOGNITION-USING-MAHALANOBIS-DISTANCE-IN-Pooja-Dagar/10471332ca4575c60fc79ce57b6a51acf51d0a74 (accessed on 13 November 2024).

35. Percentile Formula: Definition, Formula, Calculation & Examples. Available online: https://testbook.com/maths-formulas/percentile-formula (accessed on 13 November 2024).

36. Tome, P.; Fierrez, J.; Vera-Rodriguez, R.; Nixon, M.S. Soft Biometrics and Their Application in Person Recognition at a Distance. IEEE Trans. Inf. Forensics Secur. 2014, 9, 464–475.

37. Jaha, E.S. Augmenting Gabor-Based Face Recognition with Global Soft Biometrics. In Proceedings of the 2019 7th International Symposium on Digital Forensics and Security (ISDFS), Barcelos, Portugal, 10–12 June 2019; pp. 1–5.

38. Henson, R.N. Analysis of Variance (ANOVA). In Brain Mapping; Elsevier: Amsterdam, The Netherlands, 2015; pp. 477–481. ISBN 978-0-12-397316-0.

39. Nasiri, H.; Alavi, S.A. A Novel Framework Based on Deep Learning and ANOVA Feature Selection Method for Diagnosis of COVID-19 Cases from Chest X-Ray Images. Comput. Intell. Neurosci. 2022, 2022, 4694567.

40. Jaha, E.S.; Nixon, M.S. Viewpoint Invariant Subject Retrieval via Soft Clothing Biometrics. In Proceedings of the 2015 International Conference on Biometrics (ICB), Phuket, Thailand, 19–22 May 2015; pp. 73–78.

41. Chen, P.; Li, F.; Wu, C. Research on Intrusion Detection Method Based on Pearson Correlation Coefficient Feature Selection Algorithm. J. Phys. Conf. Ser. 2021, 1757, 012054.

42. Chotchantarakun, K. Optimizing Sequential Forward Selection on Classification Using Genetic Algorithm. IJCAI 2023, 47, 81–90.

43. Peng, X.; Cheng, K.; Lang, J.; Zhang, Z.; Cai, T.; Duan, S. Short-Term Wind Power Prediction for Wind Farm Clusters Based on SFFS Feature Selection and BLSTM Deep Learning. Energies 2021, 14, 1894.

44. Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M.; Danks, N.P.; Ray, S. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook; Classroom Companion: Business; Springer International Publishing: Cham, Switzerland, 2021; ISBN 978-3-030-80518-0.

45. Patro, S.G.K.; Sahu, K.K. Normalization: A Preprocessing Stage. Int. Adv. Res. J. Sci. Eng. Technol. 2015, 2, 20–22.

46. Henderi, H. Comparison of Min-Max Normalization and Z-Score Normalization in the K-Nearest Neighbor (kNN) Algorithm to Test the Accuracy of Types of Breast Cancer. Int. J. Inform. Inf. Syst. 2021, 4, 13–20.

47. Fei, N.; Gao, Y.; Lu, Z.; Xiang, T. Z-Score Normalization, Hubness, and Few-Shot Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, BC, Canada, 11–17 October 2021.

48. Rajeena, P.P.F.; Orban, R.; Vadivel, K.S.; Subramanian, M.; Muthusamy, S.; Elminaam, D.S.A.; Nabil, A.; Abulaigh, L.; Ahmadi, M.; Ali, M.A.S. A Novel Method for the Classification of Butterfly Species Using Pre-Trained CNN Models. Electronics 2022, 11, 2016.

49. Atliha, V.; Sesok, D. Comparison of VGG and ResNet Used as Encoders for Image Captioning. In Proceedings of the 2020 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream), Vilnius, Lithuania, 30 April 2020; pp. 1–4.

50. Mustapha, A.; Mohamed, L.; Hamid, H.; Ali, K. Diabetic Retinopathy Classification Using ResNet50 and VGG-16 Pretrained Networks. Int. J. Comput. Eng. Data Sci. 2021, 1, 1–7.

51. Gholami, S.; Zarafshan, E.; Sheikh, R.; Sana, S.S. Using Deep Learning to Enhance Business Intelligence in Organizational Management. Data Sci. Financ. Econ. 2023, 3, 337–353.

52. Ahmed, M.U.; Kim, Y.H.; Kim, J.W.; Bashar, M.R.; Rhee, P.K. Two Person Interaction Recognition Based on Effective Hybrid Learning. KSII Trans. Internet Inf. Syst. 2019, 13, 751–770.

53. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016.

54. Al-Haija, Q.A.; Adebanjo, A. Breast Cancer Diagnosis in Histopathological Images Using ResNet-50 Convolutional Neural Network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–7.

55. Alshahrani, A.A.; Jaha, E.S.; Alowidi, N. Fusion of Hash-Based Hard and Soft Biometrics for Enhancing Face Image Database Search and Retrieval. Comput. Mater. Contin. 2023, 77, 3489–3509.

56. Jiddah, S.M.; Abushakra, M.; Yurtkan, K. Fusion of geometric and texture features for side-view face recognition using SVM. Istat. J. Turk. Stat. Assoc. 2021, 13, 108–119.

57. Hindi Alsaedi, N.; Sami Jaha, E. Dynamic Feature Subset Selection for Occluded Face Recognition. Intell. Autom. Soft Comput. 2022, 31, 407–427.

58. Chauhan, V.K.; Dahiya, K.; Sharma, A. Problem Formulations and Solvers in Linear SVM: A Review. Artif. Intell. Rev. 2019, 52, 803–855.

59. Guo, S.; Chen, S.; Li, Y. Face Recognition Based on Convolutional Neural Network and Support Vector Machine. In Proceedings of the 2016 IEEE International Conference on Information and Automation (ICIA), Ningbo, China, 1–3 August 2016; pp. 1787–1792.

60. Tharwat, A. Classification Assessment Methods. Appl. Comput. Inform. 2021, 17, 168–192.

61. Paisitkriangkrai, S.; Shen, C.; Van Den Hengel, A. Learning to Rank in Person Re-Identification with Metric Ensembles. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1846–1855.

62. DeCann, B.; Ross, A. Relating ROC and CMC Curves via the Biometric Menagerie. In Proceedings of the 2013 IEEE Sixth International Conference on Biometrics: Theory, Applications and Systems (BTAS), Arlington, VA, USA, 29 September–2 October 2013; pp. 1–8.

| Data Distribution | Training | Testing |
|---|---|---|
| Number of images | 738 | 100 |

| ID | Facial Distance Attributes | Description | Semantic Labels | Label Form |
|---|---|---|---|---|
| F1 | Face height | Distance from the forehead to the chin | [very large, large, medium, small, very small] | Relative |
| F2 | Forehead height | Distance between the forehead and the nasion | [very large, large, medium, small, very small] | Relative |
| F3 | Lower face height | Distance between the nose tip and the chin | [very large, large, medium, small, very small] | Relative |
| F4 | Nasal length | Distance between the nasion and the nose tip | [very large, large, medium, small, very small] | Relative |
| F5 | Philtrum | Distance between the nose tip and the upper lip | [very large, large, medium, small, very small] | Relative |
| F6 | Mouth height | Distance from the upper lip to the lower lip | [very large, large, medium, small, very small] | Relative |
| F7 | Eye-to-eyebrow-left | Distance between the left eye and the left eyebrow | [very large, large, medium, small, very small] | Relative |
| F8 | Eye-to-eyebrow-right | Distance between the right eye and the right eyebrow | [very large, large, medium, small, very small] | Relative |
| F9 | Chin-to-mouth | Distance between the chin and the lower lip | [very large, large, medium, small, very small] | Relative |
| F10 | Nasion-to-chin | Distance between the nasion and the chin | [very large, large, medium, small, very small] | Relative |
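Each distance attribute in this table is the length between two facial landmarks. A minimal sketch, assuming 2-D landmark coordinates in pixels and hypothetical landmark names (the actual landmark indices produced by the detection step are not listed in this text), computes F1 to F10 as Euclidean distances:

```python
from math import dist  # Python 3.8+

# Hypothetical landmark names mapped to the endpoints of each distance attribute
DISTANCE_DEFS = {
    "F1": ("forehead", "chin"),
    "F2": ("forehead", "nasion"),
    "F3": ("nose_tip", "chin"),
    "F4": ("nasion", "nose_tip"),
    "F5": ("nose_tip", "upper_lip"),
    "F6": ("upper_lip", "lower_lip"),
    "F7": ("left_eye", "left_eyebrow"),
    "F8": ("right_eye", "right_eyebrow"),
    "F9": ("chin", "lower_lip"),
    "F10": ("nasion", "chin"),
}


def distance_traits(landmarks):
    """Return {trait_id: Euclidean distance} from a {name: (x, y)} landmark dict."""
    return {fid: dist(landmarks[a], landmarks[b]) for fid, (a, b) in DISTANCE_DEFS.items()}


# Toy landmarks on a vertical face axis (illustration only)
toy = {
    "forehead": (0, 0), "nasion": (0, 3), "nose_tip": (0, 5),
    "upper_lip": (0, 6), "lower_lip": (0, 7), "chin": (0, 10),
    "left_eye": (-2, 3), "left_eyebrow": (-2, 2),
    "right_eye": (2, 3), "right_eyebrow": (2, 2.5),
}
print(distance_traits(toy))
```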

| ID | Facial Ratio Attributes | Calculation Formula | Description | Semantic Labels | Label Form |
|---|---|---|---|---|---|
| F11 | Forehead to face-height ratio | F2 / F1 | The proportion of forehead height relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F12 | Lower face to face-height ratio | F3 / F1 | The proportion of lower face height relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F13 | Nasal length to face-height ratio | F4 / F1 | The proportion of nasal length relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F14 | Philtrum to face-height ratio | F5 / F1 | The proportion of philtrum distance relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F15 | Mouth-height to face-height ratio | F6 / F1 | The proportion of mouth height relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F16 | Nasion-to-chin to face-height ratio | F10 / F1 | The proportion of nasion-to-chin distance relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F17 | Chin-to-mouth to face-height ratio | F9 / F1 | The proportion of chin-to-mouth distance relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F18 | Eye-to-eyebrow-left to face-height ratio | F7 / F1 | The proportion of left eye-to-eyebrow distance relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F19 | Eye-to-eyebrow-right to face-height ratio | F8 / F1 | The proportion of right eye-to-eyebrow distance relative to the total face height | [very large, large, medium, small, very small] | Relative |
| F20 | Nasal length to nasion-to-chin ratio | F4 / F10 | The proportion of nasal length relative to the nasion-to-chin distance | [very large, large, medium, small, very small] | Relative |
| F22 | Mouth-height to nasion-to-chin ratio | F6 / F10 | The proportion of mouth height relative to the nasion-to-chin distance | [very large, large, medium, small, very small] | Relative |
| F23 | Chin-to-mouth to nasion-to-chin ratio | F9 / F10 | The proportion of chin-to-mouth distance relative to the nasion-to-chin distance | [very large, large, medium, small, very small] | Relative |
| F24 | Philtrum to mouth-height ratio | F5 / F6 | The proportion of philtrum (mouth-to-nose) distance relative to mouth height | [very large, large, medium, small, very small] | Relative |
| F25 | Philtrum to chin-to-mouth ratio | F5 / F9 | The proportion of philtrum (mouth-to-nose) distance relative to chin-to-mouth distance | [very large, large, medium, small, very small] | Relative |
| F26 | Philtrum to lower face-height ratio | F5 / F3 | The proportion of philtrum (mouth-to-nose) distance relative to the lower face height | [very large, large, medium, small, very small] | Relative |
| F27 | Mouth-height to lower face-height ratio | F6 / F3 | The proportion of mouth height relative to the lower face height | [very large, large, medium, small, very small] | Relative |
| F28 | Chin-to-mouth to lower face-height ratio | F9 / F3 | The proportion of chin-to-mouth distance relative to the lower face height | [very large, large, medium, small, very small] | Relative |
| F29 | Eye-to-eyebrow-left to forehead-height ratio | F7 / F2 | The proportion of left eye-to-eyebrow distance relative to forehead height | [very large, large, medium, small, very small] | Relative |
| F30 | Eye-to-eyebrow-right to forehead-height ratio | F8 / F2 | The proportion of right eye-to-eyebrow distance relative to forehead height | [very large, large, medium, small, very small] | Relative |
| F31 | Philtrum to nasal-length ratio | F5 / F4 | The proportion of philtrum (mouth-to-nose) distance relative to the nasal length | [very large, large, medium, small, very small] | Relative |
| F32 | Mouth-height to nasal-length ratio | F6 / F4 | The proportion of mouth height relative to the nasal length | [very large, large, medium, small, very small] | Relative |
| F33 | Chin-to-mouth to mouth-height ratio | F9 / F6 | The proportion of chin-to-mouth distance relative to mouth height | [very large, large, medium, small, very small] | Relative |
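The ratio attributes can be derived from the distance attributes, and a relative label can then be assigned by comparing a subject's value with a reference population. The sketch below reads each numerator and denominator from the descriptions in the ratio table (F21 is not listed there and is omitted) and, as an assumption, maps a value's percentile rank to one of the five relative labels using equal 20% bins. The bin edges, the reference population, and the function names are illustrative, not the authors' specification.

```python
from bisect import bisect_left

# (numerator, denominator) pairs read from the ratio descriptions; F21 omitted
RATIO_DEFS = {
    "F11": ("F2", "F1"), "F12": ("F3", "F1"), "F13": ("F4", "F1"),
    "F14": ("F5", "F1"), "F15": ("F6", "F1"), "F16": ("F10", "F1"),
    "F17": ("F9", "F1"), "F18": ("F7", "F1"), "F19": ("F8", "F1"),
    "F20": ("F4", "F10"), "F22": ("F6", "F10"), "F23": ("F9", "F10"),
    "F24": ("F5", "F6"), "F25": ("F5", "F9"), "F26": ("F5", "F3"),
    "F27": ("F6", "F3"), "F28": ("F9", "F3"), "F29": ("F7", "F2"),
    "F30": ("F8", "F2"), "F31": ("F5", "F4"), "F32": ("F6", "F4"),
    "F33": ("F9", "F6"),
}

# Ordered from lowest to highest percentile (assumed equal 20% bins)
LABELS = ["very small", "small", "medium", "large", "very large"]


def ratio_traits(distances):
    """Compute ratio attributes from a dict of distance attributes F1..F10."""
    return {rid: distances[num] / distances[den] for rid, (num, den) in RATIO_DEFS.items()}


def percentile_rank(value, population):
    """Percentage of population values strictly below `value`."""
    ordered = sorted(population)
    return 100.0 * bisect_left(ordered, value) / len(ordered)


def relative_label(value, population):
    """Map a value to a relative label by its percentile rank in `population`."""
    bin_index = min(int(percentile_rank(value, population) // 20), len(LABELS) - 1)
    return LABELS[bin_index]


population = list(range(1, 11))  # toy reference values
print(relative_label(5, population))
```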

| Soft Trait | Semantic Labels | Label Form |
|---|---|---|
| 1. Gender | [Male, Female] | Absolute |
| 2. Ethnicity | [European, Middle Eastern, Far Eastern, South Asian, African, Mixed, Other] | Absolute |
| 3. Age group | [Infant, Preadolescence, Adolescence, Young adult, Adult, Middle aged, Senior] | Absolute |
| 4. Skin color | [White, Oriental, Tanned, Brown, Black] | Absolute |
| 5. Skin tone | [Fair, Light, Medium, Brown, Dark, Very dark] | Relative |
| 6. Facial hair | [None, Mustache, Beard] | Absolute |

| ID | F-Ratio | p-Value | ID | F-Ratio | p-Value | ID | F-Ratio | p-Value |
|---|---|---|---|---|---|---|---|---|
| F27 | 40.363 | 2.99 × 10⁻¹⁶⁹ | F29 | 29.200 | 6.70 × 10⁻¹³⁶ | F6 | 21.473 | 1.30 × 10⁻¹⁰⁷ |
| F22 | 40.352 | 3.20 × 10⁻¹⁶⁹ | F26 | 28.523 | 1.27 × 10⁻¹³³ | F16 | 21.235 | 1.19 × 10⁻¹⁰⁶ |
| F18 | 40.205 | 7.90 × 10⁻¹⁶⁹ | F31 | 28.232 | 1.25 × 10⁻¹³² | F12 | 20.857 | 4.10 × 10⁻¹⁰⁵ |
| F24 | 39.484 | 7.09 × 10⁻¹⁶⁷ | F23 | 27.416 | 8.11 × 10⁻¹³⁰ | F11 | 20.252 | 1.26 × 10⁻¹⁰² |
| F4 | 38.114 | 4.23 × 10⁻¹⁶³ | F2 | 27.251 | 3.05 × 10⁻¹²⁹ | F25 | 20.159 | 3.08 × 10⁻¹⁰² |
| F32 | 37.893 | 1.77 × 10⁻¹⁶² | F17 | 26.437 | 2.25 × 10⁻¹²⁶ | F19 | 20.015 | 1.23 × 10⁻¹⁰¹ |
| F15 | 37.379 | 4.96 × 10⁻¹⁶¹ | F21 | 25.512 | 4.77 × 10⁻¹²³ | F8 | 17.615 | 2.84 × 10⁻⁹¹ |
| F7 | 36.586 | 9.06 × 10⁻¹⁵⁹ | F14 | 25.227 | 5.23 × 10⁻¹²² | F9 | 13.014 | 2.74 × 10⁻⁶⁹ |
| F33 | 34.914 | 6.93 × 10⁻¹⁵⁴ | F28 | 24.841 | 1.37 × 10⁻¹²⁰ | F30 | 12.261 | 2.18 × 10⁻⁶⁵ |
| F13 | 31.215 | 1.73 × 10⁻¹⁴² | F20 | 24.781 | 2.29 × 10⁻¹²⁰ | F5 | 10.212 | 2.65 × 10⁻⁵⁴ |
| F1 | 30.873 | 2.17 × 10⁻¹⁴¹ | F10 | 24.178 | 4.06 × 10⁻¹¹⁸ | F3 | 7.398 | 6.82 × 10⁻³⁸ |

| ID | Pearson’s r Correlation Coefficient |
|---|---|
| F32 | 0.887 |
| F22 | 0.886 |
| F15 | 0.867 |
| F7 | 0.867 |
| F27 | 0.863 |
| F33 | 0.852 |
| F4 | 0.843 |
| F24 | 0.843 |
| F19 | 0.840 |
| F13 | 0.818 |
| F18 | 0.817 |
| F8 | 0.812 |
| F6 | 0.797 |
| F31 | 0.794 |
| F30 | 0.790 |
| F21 | 0.757 |
| F10 | 0.750 |
| F26 | 0.704 |
| F20 | 0.697 |
| F28 | 0.688 |
| F29 | 0.659 |
| F5 | 0.632 |
| F14 | 0.590 |
| F16 | 0.583 |
| F9 | 0.579 |
| F23 | 0.561 |
| F25 | 0.539 |
| F11 | 0.504 |
| F17 | 0.371 |
| F1 | 0.368 |
| F3 | 0.302 |
| F12 | −0.196 |
| F2 | −0.305 |
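Feature subset selection can be performed greedily on top of rankings such as these. The sketch below is a generic sequential forward selection, which is an assumption about the procedure (the text names feature subset selection but not its exact algorithm or criterion): starting from an empty set, it repeatedly adds the trait that most improves a caller-supplied `score_fn` (in practice, for example, validation accuracy of the classifier) and stops when no candidate improves the score or `max_features` is reached. The toy criterion below simply sums a few Pearson's r values from the table and is for illustration only.

```python
def sequential_forward_selection(candidates, score_fn, max_features):
    """Greedy SFS: add the best-scoring candidate until no improvement."""
    selected, remaining = [], list(candidates)
    current_score = float("-inf")
    while remaining and len(selected) < max_features:
        # Score every one-feature extension of the current subset
        best_score, best_feature = max((score_fn(selected + [f]), f) for f in remaining)
        if best_score <= current_score:
            break  # no candidate improves the criterion
        selected.append(best_feature)
        remaining.remove(best_feature)
        current_score = best_score
    return selected


# Toy criterion (illustration only): sum of Pearson's r values from the table
r_values = {"F32": 0.887, "F22": 0.886, "F12": -0.196, "F2": -0.305}
print(sequential_forward_selection(r_values, lambda s: sum(r_values[f] for f in s), 3))
# ['F32', 'F22']
```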

| Approach | Accuracy (SVM) | Precision (SVM) | Soft Trait Weight Coefficient |
|---|---|---|---|
| ResNet-50 | 62.00% | 56.53% | - |
| VGG-16 | 62.00% | 64.80% | - |
| VGG-16 + soft_traits_33 | 66.00% | 69.38% | 3.5 |
| VGG-16 + soft_traits_33 | 68.00% | 71.35% | 5.5 |
| ResNet-50 + soft_traits_33 | 72.00% | 73.70% | - |
| VGG-16 + ResNet-50 + soft_traits_33 | 66.00% | 69.95% | 3.5 |
| VGG-16 + ResNet-50 + soft_traits_33 | 68.00% | 70.48% | 5.5 |
| VGG-16 + soft_traits_33 + global_traits_6 | 68.00% | 70.48% | 5.5 |
| ResNet-50 + soft_traits_33 + global_traits_6 | 84.00% | 79.47% | 2.5 |
| ResNet-50 + soft_traits_33 + global_traits_6 | 85.00% | 81.13% | 3.5 |
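The weight coefficient column suggests that the soft traits are scaled before being fused with the deep features. A minimal sketch of one such feature-level fusion, assuming plain concatenation of an already normalized hard feature vector (for example, a ResNet-50 embedding) with soft-trait values multiplied by a single weight, is shown below. The function name and the choice to apply the same weight to the global soft traits are assumptions, not details given in the table.

```python
def fuse_features(hard, soft, weight, global_soft=()):
    """Concatenate hard features with weighted soft (and optional global soft) traits."""
    # Assumption: one weight coefficient scales every soft-trait dimension
    weighted_soft = [weight * v for v in list(soft) + list(global_soft)]
    return list(hard) + weighted_soft


fused = fuse_features([0.1, 0.2], [1.0, -1.0], 2.5, global_soft=[0.5])
print(fused)  # [0.1, 0.2, 2.5, -2.5, 1.25]
```

Scaling likely matters because an SVM depends on dot products or distances between feature vectors, so a larger weight gives the relatively few soft-trait dimensions more influence against the much longer deep-feature vector.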

Identification columns report the average sum match scores up to the given rank; verification columns report AUC and EER.

| Approach | Identification, Rank 1 | Identification, Rank 5 | Identification, Rank 10 | Verification, AUC | Verification, EER |
|---|---|---|---|---|---|
| ResNet-50 | 0.570 | 0.870 | 0.940 | 0.963 | 0.107 |
| VGG-16 | 0.570 | 0.790 | 0.870 | 0.944 | 0.150 |
| ResNet-50 + soft33 | 0.640 | 0.910 | 0.970 | 0.976 | 0.075 |
| VGG-16 + soft33 | 0.570 | 0.790 | 0.900 | 0.950 | 0.129 |
| ResNet-50 + soft33 + global6 | 0.810 | 0.930 | 0.970 | 0.986 | 0.062 |

| Ref. | Dataset | Hard Features Extractor | Soft Biometrics | Feature Fusion | Face View (Training) | Face View (Testing) | Accuracy |
|---|---|---|---|---|---|---|---|
| [13] | UT-DOOR, CMU Multi-PIE | PCA, LDA, LBP, and HOG | No | No | Side | Side id & verification | 96.7%, 92.7% |
| [16] | XM2VTSDB | No | 33 comparative face traits | No | Side | Side id | 96.0% |
| [17] | FERET color dataset | No | 3 patches (hair, skin, and clothes colors) | No | Front | Side re-id | unknown |
| Ours | CMU Multi-PIE | ResNet-50, VGG-16 | 33 newly proposed face soft traits and 6 global soft traits | Yes | Front | Zero-shot side id & verification | 85.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsubhi, A.H.; Jaha, E.S. Front-to-Side Hard and Soft Biometrics for Augmented Zero-Shot Side Face Recognition. Sensors 2025, 25, 1638. https://doi.org/10.3390/s25061638


Chicago/Turabian Style: Alsubhi, Ahuod Hameed, and Emad Sami Jaha. 2025. "Front-to-Side Hard and Soft Biometrics for Augmented Zero-Shot Side Face Recognition" Sensors 25, no. 6: 1638. https://doi.org/10.3390/s25061638
