Developing Computer Vision Models for Classifying Grain Shapes of Crushed Stone

Abstract

1. Introduction

- Collecting an empirical database of crushed stone grain images;

- Carrying out data augmentation to expand the set of representative data;

- Selecting the neural network architectures best suited to the task;

- Training the selected algorithms;

- Testing the trained algorithms on a test sample;

- Analyzing the results using the main quality metrics of classification models.

2. Materials and Methods

2.1. Grain Shape of Crushed Stone and Methods for Determining It

- →

- The preparation of a laboratory sample of crushed stone of one grain fraction;

- →

- Weighing the sample and selecting from it grains of a lamellar and needle-shaped form;

- →

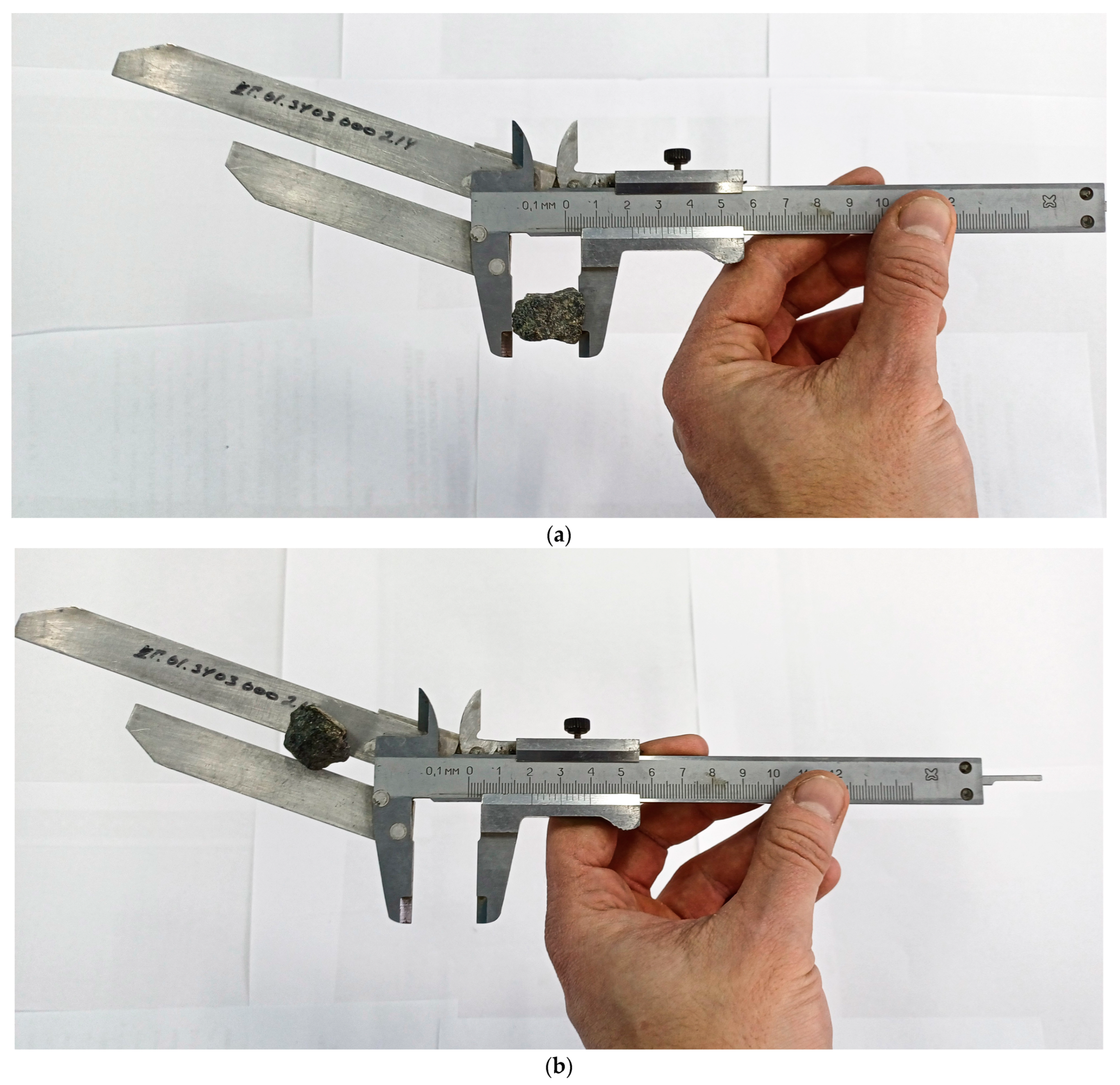

- Determining the ratio of grain sizes using a movable template: First, the grain to be measured is placed with its largest size between the jaws, and its position is fixed (Figure 2a); then, the grain is passed with its smallest size between the plates of the template and installed at a distance three times smaller (Figure 2b). If the grain passes between the jaws, then it is classified as a grain of lamellar or needle-shaped form.

- →

- The percentage ratio of grains of a lamellar and needle-shaped form is determined by the ratio of the mass of grains of a lamellar and needle-shaped form to the mass of the original sample.
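The movable-template criterion and the mass-based percentage above can be expressed as a short calculation. The following is a minimal Python sketch under idealized assumptions: each grain is reduced to two measured dimensions (largest and smallest, in mm) and a mass, and a grain "passes" the template when its smallest dimension does not exceed one third of its largest dimension. The names `Grain`, `passes_template`, and `lamellar_needle_percentage` are hypothetical and are not part of the authors' software.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Grain:
    length_mm: float    # largest dimension, set between the template jaws
    smallest_mm: float  # smallest dimension, passed through the reduced gap
    mass_g: float       # grain mass in grams


def passes_template(grain: Grain, ratio: float = 3.0) -> bool:
    """Idealized movable-template check (assumption: exact measurements).

    The gap is set to the largest dimension divided by `ratio`; a grain whose
    smallest dimension fits through this gap is lamellar or needle-shaped.
    """
    if grain.length_mm <= 0 or grain.smallest_mm <= 0:
        raise ValueError("grain dimensions must be positive")
    gap_mm = grain.length_mm / ratio
    return grain.smallest_mm <= gap_mm


def lamellar_needle_percentage(grains: List[Grain], sample_mass_g: float) -> float:
    """Mass of lamellar/needle-shaped grains as a percentage of the sample mass."""
    if sample_mass_g <= 0:
        raise ValueError("sample mass must be positive")
    selected_mass = sum(g.mass_g for g in grains if passes_template(g))
    return 100.0 * selected_mass / sample_mass_g


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    grains = [Grain(30.0, 8.0, 10.0), Grain(30.0, 12.0, 20.0), Grain(21.0, 7.0, 5.0)]
    print(lamellar_needle_percentage(grains, sample_mass_g=50.0))  # 30.0
```

Here the first and third grains pass the template (8 ≤ 30/3 and 7 ≤ 21/3), so 15 g out of a 50 g sample gives 30%. Real measurements are taken by hand and are subject to operator error, which this sketch ignores.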

- Preparing and weighing a crushed stone sample of a single fraction;

- Sifting the sample through slotted sieves;

- Weighing the crushed stone grains that have passed through the slotted sieves.

2.2. Data Collection, Annotation, and Augmentation

- Random rotation. The object is rotated about all three axes (X, Y, Z) by random angles in the range from −π to π. This makes the model invariant to the orientation of the object in space. The rotation is implemented using the Euler rotation matrix [48].

- Random scaling. The object is scaled along all three axes by a random factor in the range from 0.5 to 1.5. This makes the model robust to changes in the object's size. The scaling coefficient is drawn at random within limits that are admissible for each class.

- Adding noise. Gaussian noise with zero mean and a standard deviation of 0.01 is added to the object's vertices [49]. This makes the model robust to small distortions in the data.

- Reflection. The object is mirrored with respect to one of the three axes (X, Y, or Z) with a 50% probability. This increases the diversity of the data and helps the model account for the symmetry properties of objects.

- Scaling along the main axis.
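The rotation, scaling, noise, and reflection steps listed above can be combined into a single augmentation function. The sketch below is a NumPy illustration, not the authors' code; the function names are hypothetical. It assumes that the point cloud is an (N, 3) array and that scaling uses one factor for all three axes, since an independent factor per axis could turn a cuboidal grain into an elongated one and invalidate its label. The class-dependent scaling limits and the main-axis scaling mentioned in the text are not reproduced.

```python
import numpy as np


def euler_rotation_matrix(ax: float, ay: float, az: float) -> np.ndarray:
    """Rotation matrix R = Rz @ Ry @ Rx for angles (radians) about X, Y, Z."""
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def augment_point_cloud(points: np.ndarray, rng: np.random.Generator,
                        scale_range=(0.5, 1.5), noise_std=0.01,
                        reflect_prob=0.5) -> np.ndarray:
    """Rotate, scale, jitter, and possibly reflect an (N, 3) point cloud."""
    # Random rotation: independent angles in [-pi, pi) about X, Y, and Z.
    angles = rng.uniform(-np.pi, np.pi, size=3)
    out = points @ euler_rotation_matrix(*angles).T  # row vectors: p' = R p
    # Random scaling: one factor shared by all axes (assumption), which keeps
    # the aspect ratios, and hence the shape class, unchanged.
    out = out * rng.uniform(*scale_range)
    # Additive zero-mean Gaussian noise on every point.
    out = out + rng.normal(0.0, noise_std, size=out.shape)
    # Reflection: with probability reflect_prob, flip the sign of one random axis.
    if rng.random() < reflect_prob:
        axis = rng.integers(0, 3)
        out[:, axis] = -out[:, axis]
    return out
```

Because every step is either a rigid motion, a reflection, or an isotropic scaling (plus small noise), ratios between distances on the grain are preserved. This is the property the shape classes depend on.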

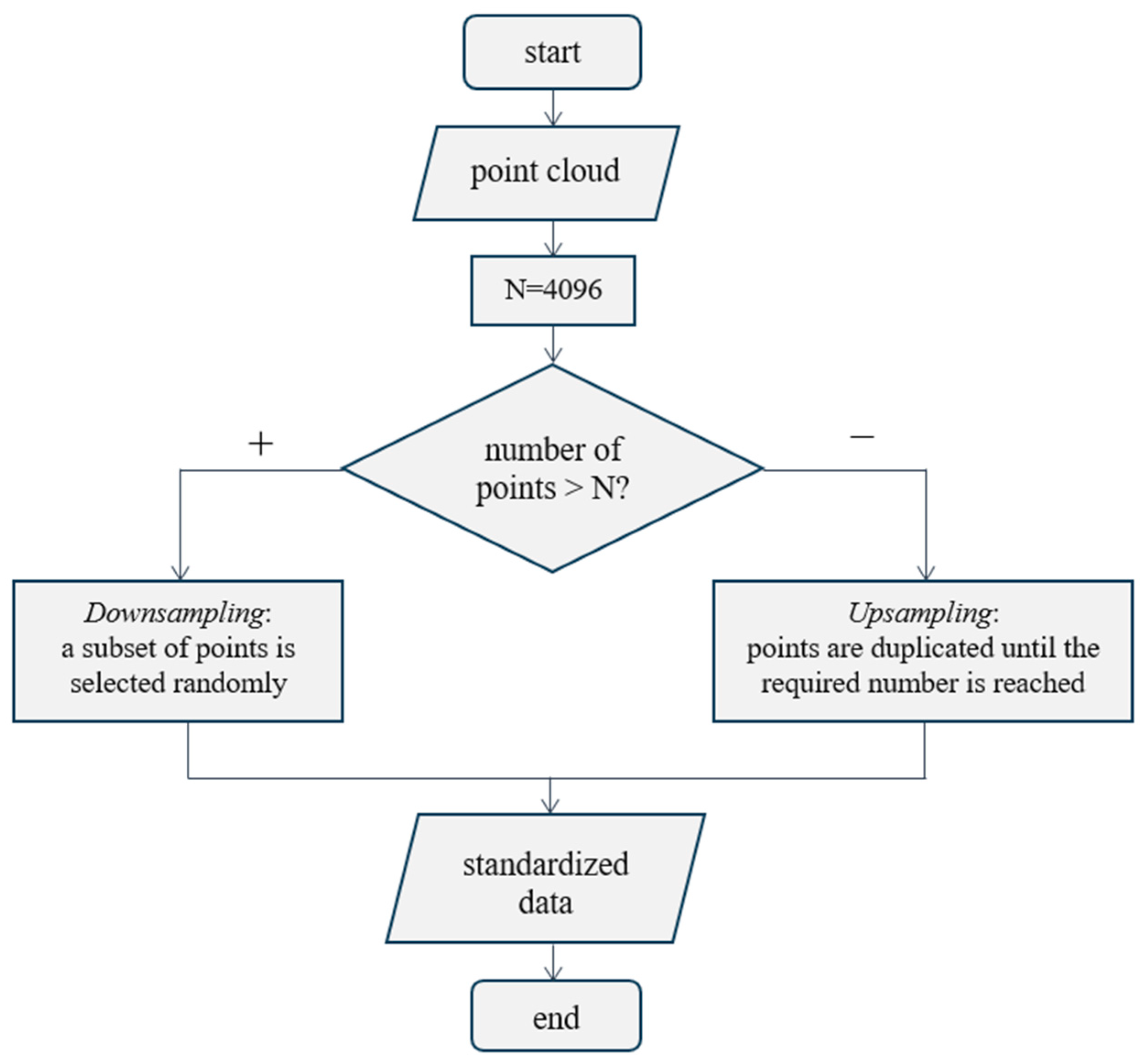

- Data unification. Resampling the points gives every input the same size, which simplifies the model architecture and the training process.

- Increasing data diversity. The selected set of transformations, all of which are valid for the objects under consideration, yields a more diverse data set and improves the generalization ability of the model. Notably, a dedicated algorithm was developed for needle-shaped grains that applies augmentations accounting for the unique characteristics of this class, which increases the accuracy and reliability of the classifiers.
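Data unification by resampling can be sketched as follows. This is a minimal illustration assuming random sampling. Point clouds that are larger than the target are randomly subsampled without replacement, and smaller ones keep all their points and are padded with random duplicates. The function name `resample_points` and the target size used in practice are assumptions; the text does not state the number of points.

```python
import numpy as np


def resample_points(points: np.ndarray, num_points: int,
                    rng: np.random.Generator) -> np.ndarray:
    """Return exactly `num_points` rows drawn from an (N, 3) point cloud."""
    n = points.shape[0]
    if n == 0:
        raise ValueError("empty point cloud")
    if n >= num_points:
        # Downsample: random subset without replacement.
        idx = rng.choice(n, size=num_points, replace=False)
    else:
        # Upsample: keep every original point, then pad with random duplicates.
        extra = rng.choice(n, size=num_points - n, replace=True)
        idx = np.concatenate([np.arange(n), extra])
    return points[idx]
```

Farthest-point sampling is a common alternative that covers the surface more evenly. Random sampling is used here only because it is the simplest correct choice.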

2.3. Selection of Neural Network Architectures

- Input Transform Network: a convolutional network that transforms input 3D coordinates into a feature space.

- Feature Transform Network: an additional convolutional network for further feature extraction.

- Global Feature Aggregation: max pooling is used to aggregate features across all points.

- Classifier: a multilayer perceptron that predicts the class of an object.

- There are no matrix transformations (alignment networks);

- Local features are excluded;

- Only max pooling is used, without additional operations on local features.
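The simplified PointNet described above (a shared per-point network, no alignment networks, global max pooling, and an MLP classifier) can be illustrated with a NumPy forward pass. The layer sizes and the names `init_pointnet`, `pointnet_logits`, and `softmax` are hypothetical, the weights are random, and there is no training loop. The sketch shows only how the architecture maps a point cloud to three class logits and why the result does not depend on point order.

```python
import numpy as np


def init_pointnet(rng, shared_dims=(3, 64, 128), head_dims=(128, 64, 3)):
    """Random weights for a shared per-point MLP and a classification head."""
    def layer(n_in, n_out):
        w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))  # He init
        return w, np.zeros(n_out)
    return {
        "shared": [layer(i, o) for i, o in zip(shared_dims[:-1], shared_dims[1:])],
        "head": [layer(i, o) for i, o in zip(head_dims[:-1], head_dims[1:])],
    }


def pointnet_logits(points, params):
    """Forward pass of a simplified PointNet (no T-Net alignment) on an (N, 3) cloud."""
    h = points
    # Shared MLP: the same weights are applied to every point, which is
    # equivalent to a 1x1 Conv1d over the point dimension.
    for w, b in params["shared"]:
        h = np.maximum(h @ w + b, 0.0)
    g = h.max(axis=0)  # global max pooling -> order-independent descriptor
    for w, b in params["head"][:-1]:
        g = np.maximum(g @ w + b, 0.0)
    w, b = params["head"][-1]
    return g @ w + b  # one logit per class (e.g. cuboidal, plate-like, needle-like)


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()
```

Max pooling over per-point features is what makes the output invariant to point order. It is also why this variant cannot capture local structures: each point is processed in isolation before pooling.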

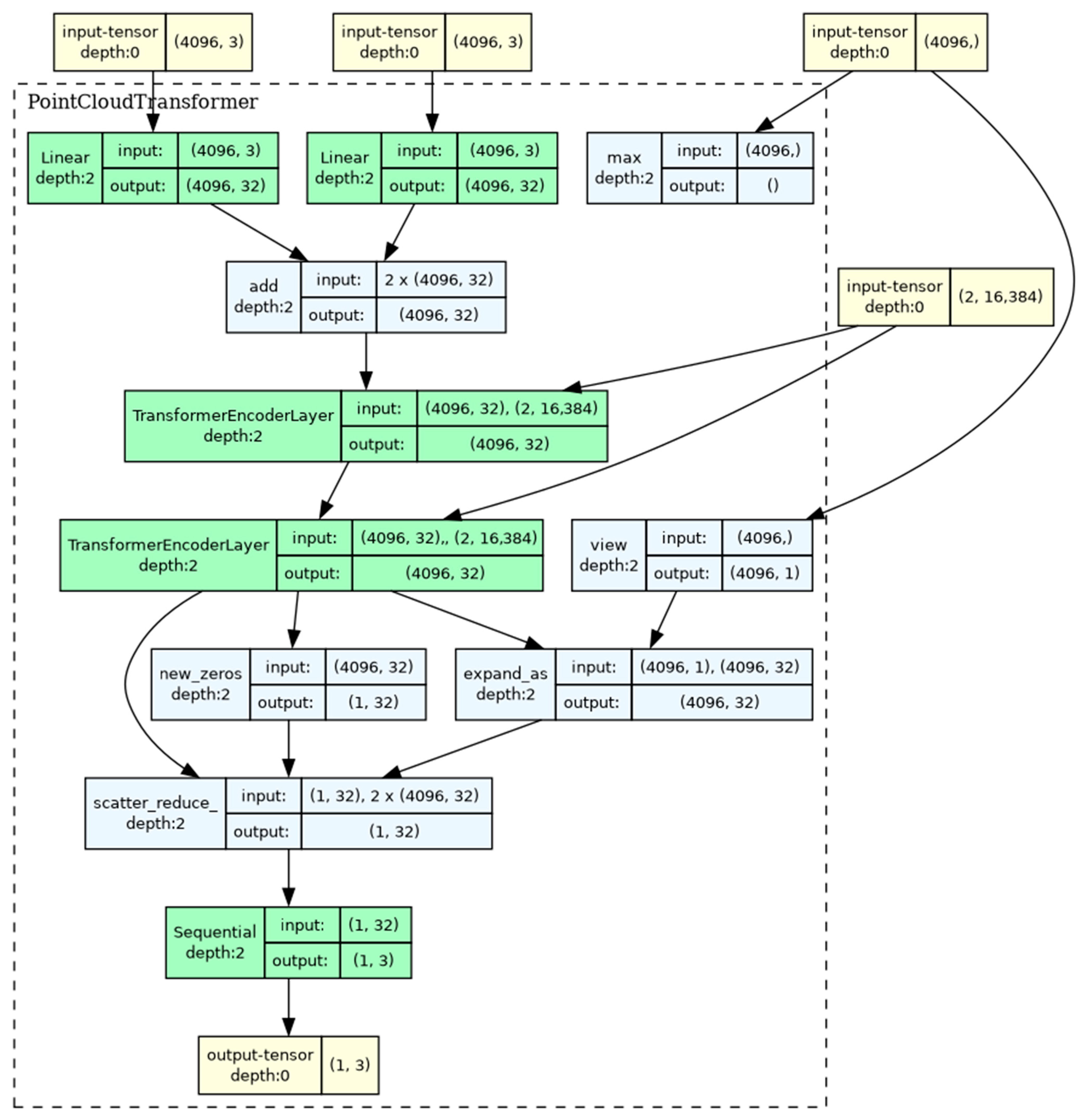

- Linear projections (input projection and positional encoding) for transforming input 3D coordinates.

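- A TransformerEncoderLayer block (self-attention followed by a feed-forward network) that models interactions between points.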

- Global max pooling for combining information from the entire point cloud.

- A classifier represented by a multilayer perceptron with normalization and dropout for class prediction.
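The four components listed above can be illustrated with a small NumPy forward pass: a linear input projection plus a linear positional encoding of the xyz coordinates, one post-norm encoder block with single-head self-attention and a feed-forward layer, global max pooling, and a linear classifier. This is a simplified sketch under several assumptions. It uses a single attention head, no biases, and no dropout (inference only), and the dimensions and names (`init_params`, `transformer_logits`) are hypothetical. Because both projections here are linear in xyz, together they act as one linear map; the authors' positional encoding may differ.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention over all points (N x N scores)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=1, keepdims=True)
    return attn @ v


def init_params(rng, d_model=32, d_ff=64, num_classes=3):
    def w(n_in, n_out):
        return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
    return {"w_in": w(3, d_model), "w_pos": w(3, d_model),
            "wq": w(d_model, d_model), "wk": w(d_model, d_model),
            "wv": w(d_model, d_model), "w1": w(d_model, d_ff),
            "w2": w(d_ff, d_model), "w_cls": w(d_model, num_classes)}


def transformer_logits(points, p):
    """Projection + one post-norm encoder block + global max pooling + linear head."""
    x = points @ p["w_in"] + points @ p["w_pos"]  # input projection + positional encoding
    x = layer_norm(x + self_attention(x, p["wq"], p["wk"], p["wv"]))
    x = layer_norm(x + np.maximum(x @ p["w1"], 0.0) @ p["w2"])
    g = x.max(axis=0)  # global max pooling over points
    return g @ p["w_cls"]
```

Self-attention is permutation-equivariant and max pooling is permutation-invariant, so the logits do not depend on point order. Unlike the simplified PointNet, each point's feature depends on all other points, at the cost of an N × N attention matrix.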

3. Results and Discussion

3.1. PointNet-Based Model Training

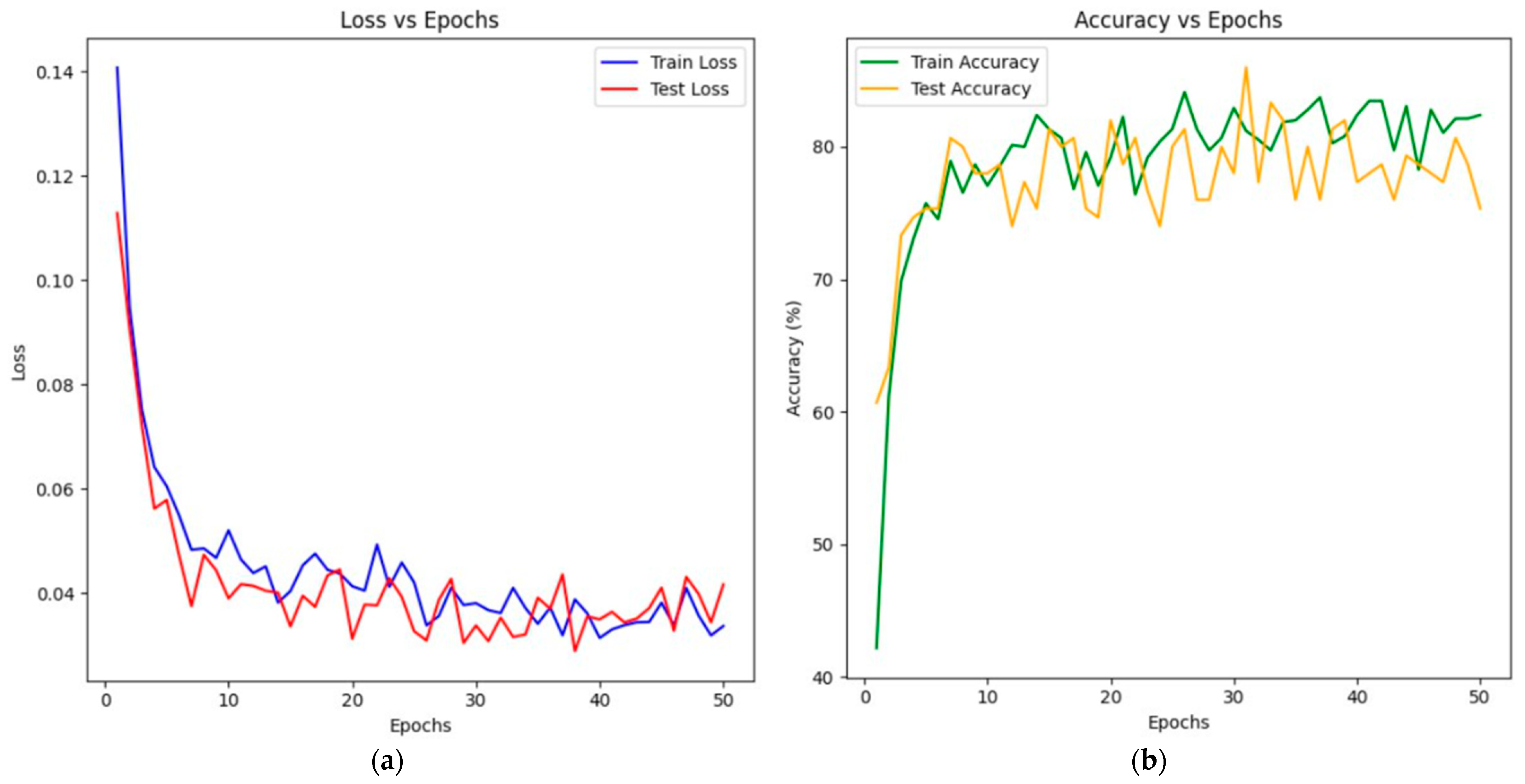

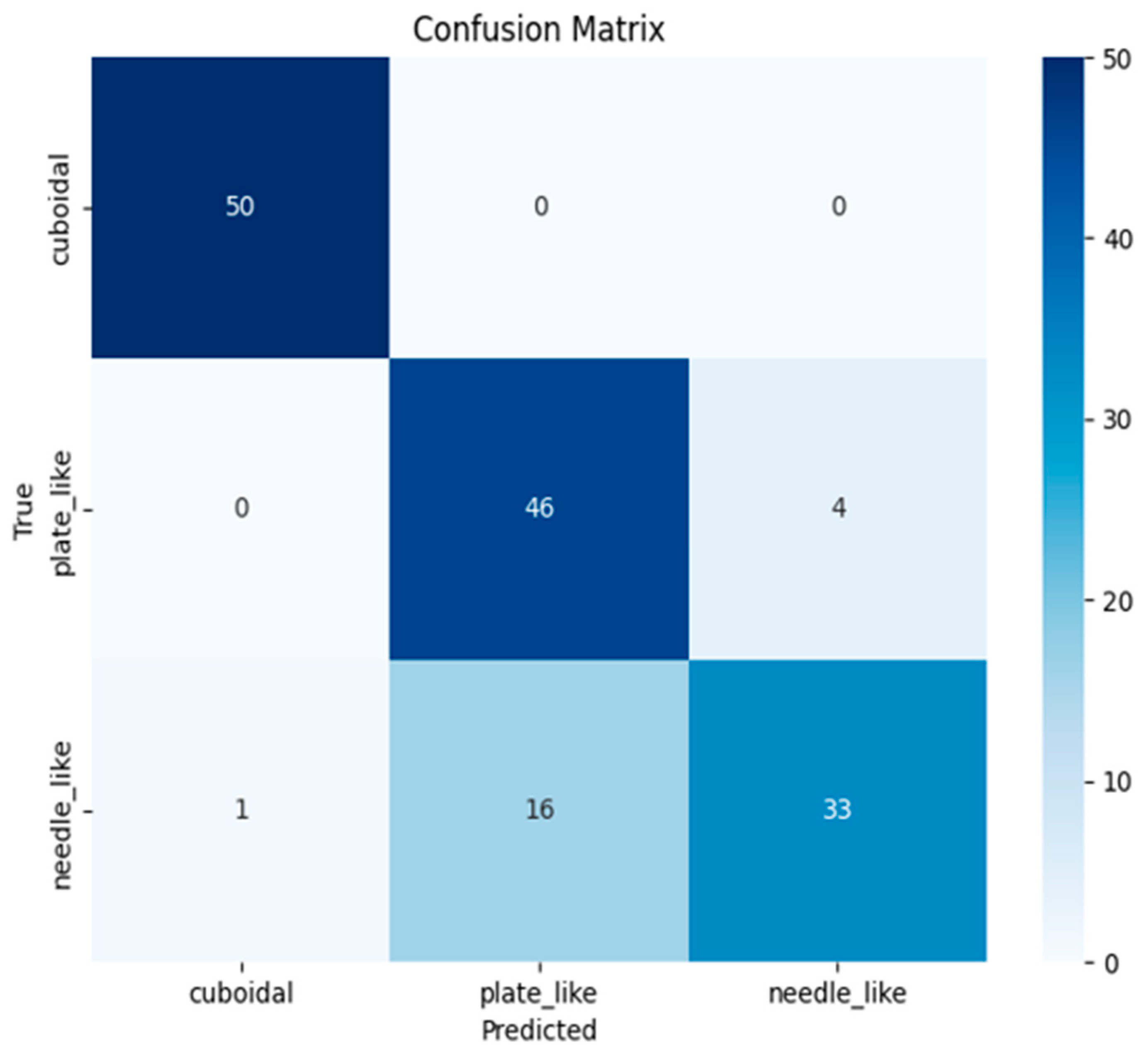

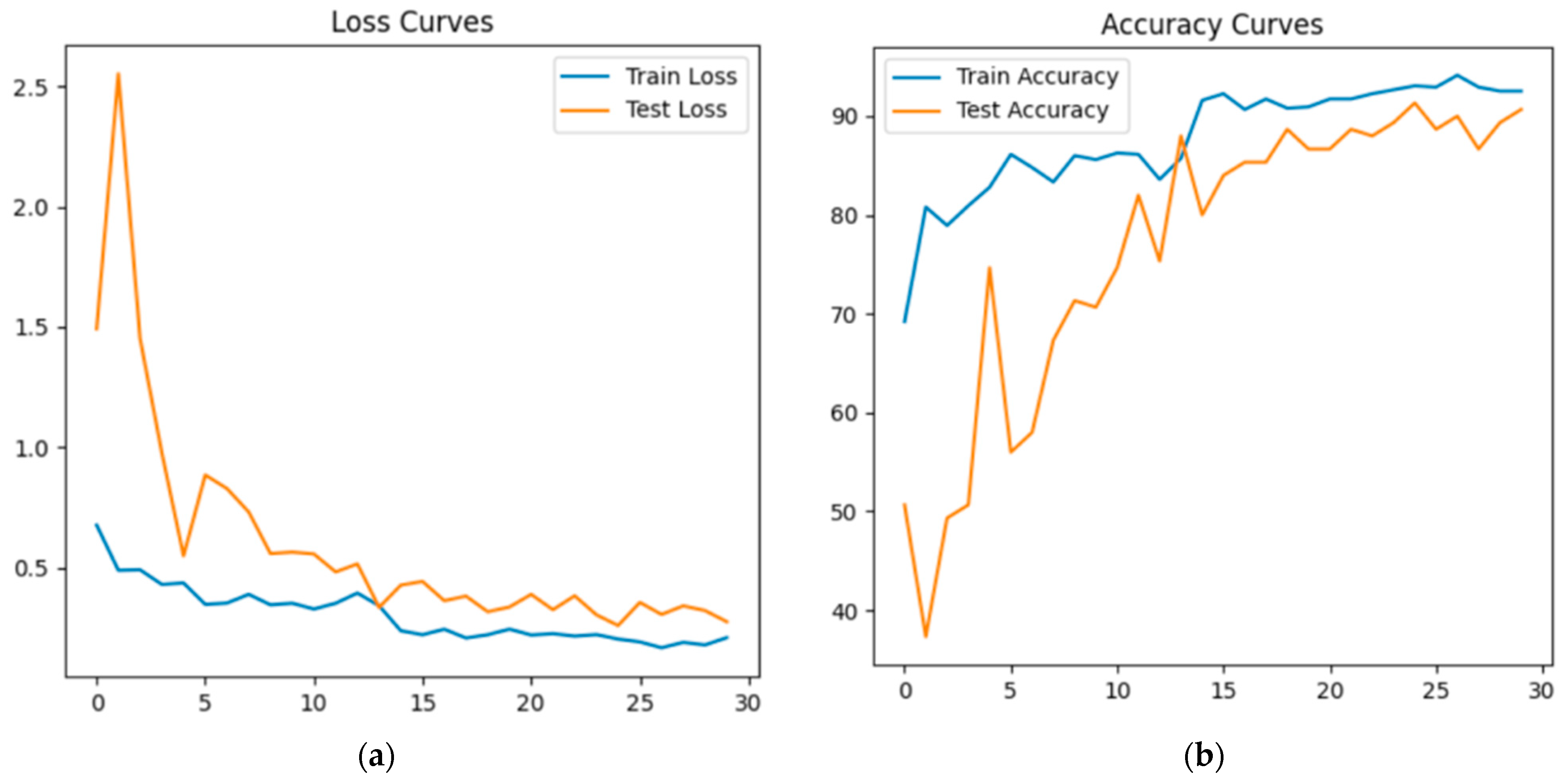

3.2. Training the Model Based on PointCloudTransformer

3.3. Discussion

- The need for high-quality 3D imaging of each sample;

- Both models work with point clouds and ignore grain texture, which could be useful for analysis;

- The PointNet-based model loses local features (small-scale structures, irregularities, elevations) and analyzes only the larger features of the overall shape;

- The PointCloudTransformer-based model is computationally expensive on large data sets and when a large number of neighbors (parameter k) is used to construct graphs.
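The cost of graph construction mentioned in the last point can be seen in a brute-force k-nearest-neighbor search, a common way to build such graphs (an assumption here, since the exact procedure is not described). The pairwise distance matrix has N × N entries, so memory and time grow quadratically with the number of points, and every graph operation downstream scales with k. The function name `knn_indices` is hypothetical; k = 4 matches the value in the PointCloudTransformer parameter table.

```python
import numpy as np


def knn_indices(points: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbors of every point (self excluded)."""
    n = points.shape[0]
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of points")
    diff = points[:, None, :] - points[None, :, :]  # (N, N, 3): O(N^2) memory
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)    # squared Euclidean distances
    np.fill_diagonal(dist2, np.inf)                  # a point is not its own neighbor
    return np.argsort(dist2, axis=1)[:, :k]          # (N, k)
```

Spatial indexes such as k-d trees reduce the search cost for large clouds, but the resulting graph still has N × k edges to process.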

4. Conclusions

(1) An empirical database was created containing 3D images of crushed stone grains of three classes.

(2) Parameters were selected for the stable training of intelligent models based on the PointNet and PointCloudTransformer neural networks.

(3) Experimental results showed that the developed models classify grain shape with high accuracy: Accuracy = 0.86 for both models.

(4) The developed method was compared with manual selection, sieve analysis, and the use of special equipment. The comparison showed that the developed approach can reduce manual labor and can also serve as an additional means of verifying the quality of building materials at various stages of construction.

(5) A comparative experiment on 200 crushed stone grains sized 10–40 mm demonstrated the efficiency of the proposed computer vision algorithms and their time advantage over the manual method using a movable grain size ratio template. At a slightly lower accuracy in determining grain shape (86% versus 90% on average), the computer vision algorithms reduced the time costs by about 20% (17:04 versus 21:19 on average) compared to the manual method. It was found that when the movable template is applied to a larger number of grains (more than 200), as well as to smaller grains (5–10 mm), its accuracy decreases and the time spent increases.

(6) Prospects for improving the model include the following:

- Applying the developed algorithm to other building materials with similar morphological characteristics;

- Creating models capable of taking into account the texture features of the analyzed objects;

- Classifying the building materials of interest by other visual criteria (color, surface quality);

- Implementing the developed models in the process of determining the shape of crushed stone grains in laboratory and field conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Expanded |
|---|---|
| AI | Artificial Intelligence |
| DGCNN | Dynamic Graph Convolutional Neural Network |
| GPU | Graphics Processing Unit |
| KNN | K-Nearest Neighbors |
| QD-LUBE | Quality-Driven Lower Upper Bound Estimation |
| StepLR | Step Learning Rate |
| SVM | Support Vector Machine |

References

- Keles, C.; Cruz Rios, F.; Hoque, S. Digital Technologies and Circular Economy in the Construction Sector: A Review of Lifecycle Applications, Integrations, Potential, and Limitations. Buildings 2025, 15, 553. [Google Scholar] [CrossRef]

- Nguyen, T.-D.; Cherif, R.; Mahieux, P.-Y.; Bastidas-Arteaga, E. A Bayesian Network Framework to Predict Compressive Strength of Recycled Aggregate Concrete. J. Compos. Sci. 2025, 9, 72. [Google Scholar] [CrossRef]

- Borovkov, A.I.; Vafaeva, K.M.; Vatin, N.I.; Ponyaeva, I. Synergistic Integration of Digital Twins and Neural Networks for Advancing Optimization in the Construction Industry: A Comprehensive Review. Constr. Mater. Prod. 2024, 7, 1–38. [Google Scholar] [CrossRef]

- Samal, C.G.; Biswal, D.R.; Udgata, G.; Pradhan, S.K. Estimation, Classification, and Prediction of Construction and Demolition Waste Using Machine Learning for Sustainable Waste Management: A Critical Review. Constr. Mater. 2025, 5, 10. [Google Scholar] [CrossRef]

- Wang, G.; Zhou, Y.; Cao, D. Artificial intelligence in construction: Topic-based technology mapping based on patent data. Autom. Constr. 2025, 172, 106073. [Google Scholar] [CrossRef]

- Li, Y.; Chen, H.; Yu, P.; Yang, L. A Review of Artificial Intelligence in Enhancing Architectural Design Efficiency. Appl. Sci. 2025, 15, 1476. [Google Scholar] [CrossRef]

- Karabulut, M. Nonlinear Load-Deflection Analysis of Steel Rebar-Reinforced Concrete Beams: Experimental, Theoretical and Machine Learning Analysis. Buildings 2025, 15, 432. [Google Scholar] [CrossRef]

- Chepurnenko, A.S.; Turina, V.S.; Akopyan, V.F. Artificial intelligence model for predicting the load-bearing capacity of eccentrically compressed short concrete filled steel tubular columns. Constr. Mater. Prod. 2024, 7, 2. [Google Scholar] [CrossRef]

- Araya-Aliaga, E.; Atencio, E.; Lozano, F.; Lozano-Galant, J. Automating Dataset Generation for Object Detection in the Construction Industry with AI and Robotic Process Automation (RPA). Buildings 2025, 15, 410. [Google Scholar] [CrossRef]

- Chaaya, M.L.; Sarkis, L.M.; Tahmasebinia, F. Integration of Emerging Technologies with Construction Practices in Australia. Buildings 2025, 15, 396. [Google Scholar] [CrossRef]

- Babushkina, N.E.; Lyapin, A.A. Solving the Problem of Determining the Mechanical Properties of Road Structure Materials Using Neural Network Technologies. Adv. Eng. Res. 2022, 22, 285–292. [Google Scholar] [CrossRef]

- Manzhilevskaya, S.E. Modeling and Predicting PM2.5 Concentration at a Construction Site Using the Artificial Intelligence. Mod. Trends Constr. Urban Territ. Plan. 2024, 3, 104–113. [Google Scholar] [CrossRef]

- Xie, Y.; Wang, X.; Zhou, X.; Zhou, Q. Bidirectional Pattern Recognition and Prediction of Bending-Active Thin Sheets via Artificial Neural Networks. Electronics 2025, 14, 503. [Google Scholar] [CrossRef]

- Sung, G.-M.; Kohale, S.D.; Chiang, T.-H.; Chong, Y.-J. An Artificial Intelligence Home Monitoring System That Uses CNN and LSTM and Is Based on the Android Studio Development Platform. Appl. Sci. 2025, 15, 1207. [Google Scholar] [CrossRef]

- Bilal, M.; Oyedele, L.O.; Qadir, J.; Munir, K.; Ajayi, S.O.; Akinade, O.O.; Owolabi, H.A.; Alaka, H.A.; Pasha, M. Big Data in the construction industry: A review of present status, opportunities, and future trends. Adv. Eng. Inform. 2016, 30, 500–521. [Google Scholar] [CrossRef]

- Munawar, H.S.; Ullah, F.; Qayyum, S.; Shahzad, D. Big Data in Construction: Current Applications and Future Opportunities. Big Data Cogn. Comput. 2022, 6, 18. [Google Scholar] [CrossRef]

- Sepasgozar, S.M.E.; Davis, S. Construction Technology Adoption Cube: An Investigation on Process, Factors, Barriers, Drivers and Decision Makers Using NVivo and AHP Analysis. Buildings 2018, 8, 74. [Google Scholar] [CrossRef]

- Sepasgozar, S.M.; Davis, S.R.; Li, H.; Luo, X. Modeling the Implementation Process for New Construction Technologies: Thematic Analysis Based on Australian and US Practices. J. Manag. Eng. 2018, 34, 05018005. [Google Scholar] [CrossRef]

- Smith, C.J.; Wong, A.T.C. Advancements in Artificial Intelligence-Based Decision Support Systems for Improving Construction Project Sustainability: A Systematic Literature Review. Informatics 2022, 9, 43. [Google Scholar] [CrossRef]

- Ivanova, S.; Kuznetsov, A.; Zverev, R.; Rada, A. Artificial Intelligence Methods for the Construction and Management of Buildings. Sensors 2023, 23, 8740. [Google Scholar] [CrossRef]

- Sharma, K.; Patil, P.P.; Srivastava, A.; Mayuri, K.; Michaelson, J.; Srivastava, A. Optimized Construction Project Management Using Ai And Machine Learning. In Proceedings of the 2023 6th International Conference on Contemporary Computing and Informatics (IC3I), India, 14–16 September 2023; Volume 6, pp. 1614–1618. [Google Scholar] [CrossRef]

- Choi, W.; Na, S.; Heo, S. Integrating Drone Imagery and AI for Improved Construction Site Management through Building Information Modeling. Buildings 2024, 14, 1106. [Google Scholar] [CrossRef]

- Lee, J.; Lee, S. Construction Site Safety Management: A Computer Vision and Deep Learning Approach. Sensors 2023, 23, 944. [Google Scholar] [CrossRef]

- Garyaev, A.; Garyaev, N. Integration of artificial intelligence and video surveillance technology to monitor construction equipment. E3S Web Conf. 2023, 410, 04002. [Google Scholar] [CrossRef]

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Razveeva, I.; Kozhakin, A.; Meskhi, B.; Chernil’nik, A.; Elshaeva, D.; Ananova, O.; Girya, M. Computer Vision Method for Automatic Detection of Microstructure Defects of Concrete. Sensors 2024, 24, 4373. [Google Scholar] [CrossRef] [PubMed]

- Razveeva, I.; Kozhakin, A.; Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Artamonov, S.; Pembek, A.; Dingrodiya, H. Analysis of Geometric Characteristics of Cracks and Delamination in Aerated Concrete Products Using Convolutional Neural Networks. Buildings 2023, 13, 3014. [Google Scholar] [CrossRef]

- Hadinata, P.N.; Simanta, D.; Eddy, L.; Nagai, K. Multiclass Segmentation of Concrete Surface Damages Using U-Net and DeepLabV3+. Appl. Sci. 2023, 13, 2398. [Google Scholar] [CrossRef]

- Kardoulias, A.; Arailopoulos, A.; Seventekidis, P. From Binary to Multi-Class: Neural Networks for Structural Damage Classification in Bridge Monitoring Under Static and Dynamic Loading. Dynamics 2024, 4, 786–803. [Google Scholar] [CrossRef]

- Ghaffari, A.; Shahbazi, Y.; Mokhtari Kashavar, M.; Fotouhi, M.; Pedrammehr, S. Advanced Predictive Structural Health Monitoring in High-Rise Buildings Using Recurrent Neural Networks. Buildings 2024, 14, 3261. [Google Scholar] [CrossRef]

- Shabbir, K.; Umair, M.; Sim, S.-H.; Ali, U.; Noureldin, M. Estimation of Prediction Intervals for Performance Assessment of Building Using Machine Learning. Sensors 2024, 24, 4218. [Google Scholar] [CrossRef]

- Ercan, E.; Avcı, M.S.; Pekedis, M.; Hızal, Ç. Damage Classification of a Three-Story Aluminum Building Model by Convolutional Neural Networks and the Effect of Scarce Accelerometers. Appl. Sci. 2024, 14, 2628. [Google Scholar] [CrossRef]

- Akbar, A.; Njoroge, J.M.; Lee, S.; Chang, Y.; Kwon, S. CNN-Based Automatic Mobile Reporting System and Quantification for the Concrete Crack Size of the Precast Members of OSC Construction. Eng. Proc. 2023, 36, 15. [Google Scholar] [CrossRef]

- Boccagna, R.; Bottini, M.; Petracca, M.; Amelio, A.; Camata, G. Unsupervised Deep Learning for Structural Health Monitoring. Big Data Cogn. Comput. 2023, 7, 99. [Google Scholar] [CrossRef]

- Lee, K.; Hong, G.; Sael, L.; Lee, S.; Kim, H.Y. MultiDefectNet: Multi-Class Defect Detection of Building Façade Based on Deep Convolutional Neural Network. Sustainability 2020, 12, 9785. [Google Scholar] [CrossRef]

- Dong, X.; Liu, Y.; Dai, J. Recognition of Concrete Surface Cracks Based on Improved TransUNet. Buildings 2025, 15, 541. [Google Scholar] [CrossRef]

- Słoński, M. Assessment of Cracking Development in Concrete Precast Crane Beams Using Optical and Deep Learning Methods. Materials 2025, 18, 731. [Google Scholar] [CrossRef]

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; El’shaeva, D.; Beskopylny, N.; Onore, G. Detecting Cracks in Aerated Concrete Samples Using a Convolutional Neural Network. Appl. Sci. 2023, 13, 1904. [Google Scholar] [CrossRef]

- Bai, Y.; Li, Z.; Liu, R.; Feng, J.; Li, B. Crack-Detection Algorithm Integrating Multi-Scale Information Gain with Global–Local Tight–Loose Coupling. Entropy 2025, 27, 165. [Google Scholar] [CrossRef]

- Dais, D.; Bal, E.; Smyrou, E.; Sarhosis, V. Automatic crack classification and segmentation on masonry surfaces using convolutional neural networks and transfer learning. Autom. Constr. 2021, 125, 103606. [Google Scholar] [CrossRef]

- Liu, N.; Ge, Y.; Bai, X.; Zhang, Z.; Shangguan, Y.; Li, Y. Research on Damage Detection Methods for Concrete Beams Based on Ground Penetrating Radar and Convolutional Neural Networks. Appl. Sci. 2025, 15, 1882. [Google Scholar] [CrossRef]

- Charoenkwan, P.; Homkong, N. CSDeep: A crushed stone image predictor based on deep learning and intelligently selected features. In Proceedings of the 2017 2nd International Conference on Information Technology (INCIT), Nakhonpathom, Thailand, 2–3 November 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Murtagh, F.; Starck, J.-L. Wavelet and curvelet moments for image classification: Application to aggregate mixture grading. Pattern Recognit. Lett. 2008, 29, 1557–1564. [Google Scholar] [CrossRef]

- Chatterjee, S. Vision-based rock-type classification of limestone using multi-class support vector machine. Appl. Intell. 2013, 39, 14–27. [Google Scholar] [CrossRef]

- Jiang, Y.; Wong, L.N.Y.; Ren, J. A numerical test method of California bearing ratio on graded crushed rocks using particle flow modeling. J. Traffic Transp. Eng. (Engl. Ed.) 2015, 2, 107–115. [Google Scholar] [CrossRef]

- Beskopylny, A.N.; Stel’makh, S.A.; Shcherban’, E.M.; Mailyan, L.R.; Meskhi, B.; Beskopylny, N.; El’shaeva, D. Influence of the Chemical Activation of Aggregates on the Properties of Lightweight Vibro-Centrifuged Fiber-Reinforced Concrete. J. Compos. Sci. 2022, 6, 273. [Google Scholar] [CrossRef]

- Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Beskopylny, A.N.; Mailyan, A.L.; Shcherban’, N.; Chernil’nik, A.; Elshaeva, D. Composition and Properties of Lightweight Concrete of Variotropic Structure Based on Combined Aggregate and Microsilica. Buildings 2025, 15, 346. [Google Scholar] [CrossRef]

- GOST 8267-93; Crushed Stone and Gravel of Solid Rocks for Construction Works. Specifications. Standartinform: Moscow, Russia, 2018. Available online: https://docs.cntd.ru/document/1200000314 (accessed on 18 February 2025).

- Rose, D. Rotations in Three-Dimensions: Euler Angles and Rotation Matrices. Part 1—Main Paper. Eng. Notes 2015. Available online: https://danceswithcode.net/engineeringnotes/rotations_in_3d/rotations_in_3d_part1.html (accessed on 3 March 2025).

- Rice, S.O. Mathematical analysis of random noise. Bell Syst. Tech. J. 1944, 23, 282–332. [Google Scholar] [CrossRef]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. arXiv 2017, arXiv:1612.00593. [Google Scholar] [CrossRef]

- Guo, M.-H.; Cai, J.-X.; Liu, Z.-N.; Mu, T.-J.; Martin, R.R.; Hu, S.-M. PCT: Point Cloud Transformer. Comput. Vis. Media 2021, 7, 187–199. [Google Scholar] [CrossRef]

- Svenningsson, P.; Fioranelli, F.; Yarovoy, A. Radar-PointGNN: Graph Based Object Recognition for Unstructured Radar Point-cloud Data. In Proceedings of the 2021 IEEE Radar Conference (RadarConf21), Atlanta, GA, USA, 7–14 May 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Wei, J.; Zhang, X.; Zhuo, Z.; Ji, Z.; Wei, Z.; Li, J.; Li, Q. Leader population learning rate schedule. Inf. Sci. 2023, 623, 455–468. [Google Scholar] [CrossRef]

- Shan, L.; Li, H.; Zhao, J.; Zhang, X.; Kang, X.; Gao, X.; Zhou, Z. Investigating on the Pavement Performance of Multi-Source Solid Wastes by Cement and Fly Ash. Materials 2023, 16, 6556. [Google Scholar] [CrossRef]

- Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; Beskopylny, N.; El’shaeva, D.; Artamonov, S. Method for Concrete Structure Analysis by Microscopy of Hardened Cement Paste and Crack Segmentation Using a Convolutional Neural Network. J. Compos. Sci. 2023, 7, 327. [Google Scholar] [CrossRef]

- Feng, Z.; Lu, J.; Liu, S.; Xia, J.; Wang, J.; Leng, F.; Ma, X. Performance Evaluation of Pebble Concrete for Pavement: A Study on the Sucre Highway Project. Materials 2024, 17, 4994. [Google Scholar] [CrossRef] [PubMed]

- Luo, J.; Huang, R.; Wang, J.; Zhang, Y. Carbon Sequestration by Preparing Recycled Cement, Recycled Aggregates, and Recycled Concrete from Construction and Demolition (C&D) Wastes. Materials 2024, 17, 5020. [Google Scholar] [CrossRef]

- Dvorkin, L.; Bordiuzhenko, O.; Tracz, T.; Mróz, K. Optimizing Porous Concrete Using Granite Stone-Crushing Waste: Composition, Strength, and Density Analysis. Appl. Sci. 2024, 14, 6934. [Google Scholar] [CrossRef]

- Li, X.; Zhang, Y.; Yang, T.; Liao, H.; Yu, L.; Liu, Y.; Wang, G.; Zhao, Y.; Qiao, H. Study on the Influence of Specimen Size and Aggregate Size on the Compressive Strength of Rock-Filled Concrete. Appl. Sci. 2023, 13, 6246. [Google Scholar] [CrossRef]

- Stempkowska, A.; Gawenda, T.; Naziemiec, Z.; Adam Ostrowski, K.; Saramak, D.; Surowiak, A. Impact of the Geometrical Parameters of Dolomite Coarse Aggregate on the Thermal and Mechanic Properties of Preplaced Aggregate Concrete. Materials 2020, 13, 4358. [Google Scholar] [CrossRef]

- Sadagopan, M.; Malaga, K.; Nagy, A. Improving Recycled Aggregate Quality by Mechanical Pre-Processing. Materials 2020, 13, 4342. [Google Scholar] [CrossRef] [PubMed]

- Ntoulmperis, M.; Discepolo, S.; Castellini, P.; Catti, P.; Nikolakis, N.; van de Kamp, W.; Alexopoulos, K. Inline-Acquired Product Point Clouds for Non-Destructive Testing: A Case Study of a Steel Part Manufacturer. Machines 2025, 13, 88. [Google Scholar] [CrossRef]

- Lario, J.; Mateos, J.; Psarommatis, F.; Ortiz, Á. Towards Zero Defect and Zero Waste Manufacturing by Implementing Non-Destructive Inspection Technologies. J. Manuf. Mater. Process. 2025, 9, 29. [Google Scholar] [CrossRef]

- Yuan, T.; Adjallah, K.H.; Sava, A.; Wang, H.; Liu, L. A Decision Risk Assessment and Alleviation Framework under Data Quality Challenges in Manufacturing. Sensors 2024, 24, 6586. [Google Scholar] [CrossRef]

- Belova, Y.V.; Razveeva, I.F.; Rakhimbaeva, E.O. Development of an Algorithm for Semantic Segmentation of Earth Remote Sensing Data to Determine Phytoplankton Populations. Adv. Eng. Res. 2024, 24, 283–292. [Google Scholar] [CrossRef]

| Num | Parameter | Value |
|---|---|---|
| 1 | learning rate | 0.001 |
| 2 | weight decay | |
| 3 | num epochs | 50 |
| 4 | focal loss | α = 0.25, γ = 2 |
| 5 | batch size | 32 |
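The focal loss listed in the PointNet training parameters down-weights well-classified examples. For the probability p_t that the model assigns to the true class, it is FL(p_t) = −α (1 − p_t)^γ log(p_t). The NumPy sketch below uses the tabulated values α = 0.25 and γ = 2. It assumes a single scalar α applied to all three classes; whether the authors used per-class weights is not stated.

```python
import numpy as np


def focal_loss(logits: np.ndarray, targets: np.ndarray,
               alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Mean multi-class focal loss: -alpha * (1 - p_t)**gamma * log(p_t)."""
    z = logits - logits.max(axis=1, keepdims=True)              # stable log-softmax
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    log_pt = log_probs[np.arange(len(targets)), targets]        # log-prob of true class
    pt = np.exp(log_pt)
    return float(np.mean(-alpha * (1.0 - pt) ** gamma * log_pt))
```

With α = 1 and γ = 0 the expression reduces to ordinary cross-entropy. With γ = 2, confidently correct predictions (p_t close to 1) contribute almost nothing, which shifts the training signal toward hard examples such as ambiguous needle-like and plate-like grains.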

| № | Class / Metric | Precision | Recall | F1 |
|---|---|---|---|---|
| 1 | cuboidal | 0.95 | 0.8 | 0.87 |
| 2 | plate_like | 0.9 | 0.9 | 0.9 |
| 3 | needle_like | 0.76 | 0.88 | 0.81 |
| 4 | accuracy | - | - | 0.86 |
| 5 | macro avg | 0.87 | 0.86 | 0.86 |
| 6 | weighted avg | 0.87 | 0.86 | 0.86 |
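The per-class metrics in this report follow from a confusion matrix. Precision divides true positives by the number of predictions for a class, recall divides them by the number of true samples of that class, F1 is their harmonic mean, and the macro average is the unweighted mean over classes. For example, for the cuboidal row, 2 · 0.95 · 0.8 / (0.95 + 0.8) ≈ 0.87. The sketch below assumes rows are true classes and columns are predicted classes. It does not handle classes with no predictions (zero division), and the confusion matrix in the usage check is made-up data, not the authors' results.

```python
import numpy as np


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


def classification_report(cm) -> dict:
    """Per-class precision/recall/F1 plus accuracy and macro/weighted F1."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)  # columns: predicted class
    recall = tp / cm.sum(axis=1)     # rows: true class
    f1 = f1_score(precision, recall)
    support = cm.sum(axis=1)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": tp.sum() / cm.sum(),
        "macro_f1": f1.mean(),
        "weighted_f1": (f1 * support).sum() / support.sum(),
    }
```

The weighted average weights each class by its support (number of true samples), so it equals the macro average when the test classes are balanced.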

| Num | Parameter | Value |
|---|---|---|
| 1 | k | 4 |
| 2 | batch_size | 8 |
| 3 | learning rate | 0.001 |
| 4 | weight_decay | |
| 5 | step_size | 15 |
| 6 | gamma | 0.1 |
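The `step_size` and `gamma` parameters correspond to a step learning-rate schedule (StepLR in the abbreviation list): the learning rate is multiplied by `gamma` every `step_size` epochs. The closed form below assumes the scheduler is stepped once per epoch, starting from epoch 0 at the base learning rate of 0.001. The helper name `step_lr` is hypothetical.

```python
def step_lr(base_lr: float, epoch: int, step_size: int = 15, gamma: float = 0.1) -> float:
    """Learning rate at a given 0-based epoch under step decay."""
    if epoch < 0 or step_size <= 0:
        raise ValueError("epoch must be >= 0 and step_size > 0")
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Epochs 0-14 use 1e-3, epochs 15-29 use 1e-4, epochs 30-44 use 1e-5.
    for epoch in (0, 14, 15, 29, 30):
        print(epoch, step_lr(0.001, epoch))
```

Under these settings the learning rate drops by an order of magnitude twice within the first 30 epochs, so most fine adjustment of the weights happens at small learning rates.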

| Num | Class / Metric | Precision | Recall | F1 |
|---|---|---|---|---|
| 1 | cuboidal | 0.98 | 1.0 | 0.99 |
| 2 | plate_like | 0.74 | 0.92 | 0.82 |
| 3 | needle_like | 0.89 | 0.66 | 0.76 |
| 4 | accuracy | - | - | 0.86 |
| 5 | macro avg | 0.87 | 0.86 | 0.86 |
| 6 | weighted avg | 0.87 | 0.86 | 0.86 |

| № | Method | Photo Fixation Time, Minutes:Seconds | Operating Time, Minutes:Seconds | Total, Minutes:Seconds | Accuracy, % |
|---|---|---|---|---|---|
| 1 | Grain Size Ratio Template and Visual Method | | | | |
| 1.1 | Specialist 1 | - | 17:36 | 17:36 | 98 |
| 1.2 | Specialist 2 | - | 18:48 | 18:48 | 96 |
| 1.3 | Specialist 3 | - | 20:17 | 20:17 | 90 |
| 1.4 | Specialist 4 | - | 23:41 | 23:41 | 84 |
| 1.5 | Specialist 5 | - | 26:14 | 26:14 | 80 |
| | Average results of the method using the grain size ratio template | | | 21:19 | 90 |
| | Computer Vision Algorithms | | | | |
| 2 | PointNet | 16:40 | 00:20 | 17:00 | 83 |
| 3 | PointCloudTransformer | 16:40 | 00:28 | 17:08 | 88 |
| | Average performance of the proposed algorithms | | | 17:04 | 86 |
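The averages in this table and the time saving of about 20% quoted in the Conclusions can be recomputed from the individual rows. The script below copies the totals and accuracies from the table, converts minutes:seconds to seconds, and averages them. The helper names are hypothetical; the arithmetic uses only the Python standard library.

```python
from statistics import mean


def to_seconds(mm_ss: str) -> int:
    """Convert 'MM:SS' to a number of seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mm_ss(total_seconds: float) -> str:
    """Convert seconds (rounded to the nearest second) back to 'MM:SS'."""
    minutes, seconds = divmod(round(total_seconds), 60)
    return f"{minutes:02d}:{seconds:02d}"


# Total times and accuracies copied from the table.
template_times = ["17:36", "18:48", "20:17", "23:41", "26:14"]
template_acc = [98, 96, 90, 84, 80]
cv_times = ["17:00", "17:08"]
cv_acc = [83, 88]

template_avg = mean(to_seconds(t) for t in template_times)
cv_avg = mean(to_seconds(t) for t in cv_times)
time_saving = 1.0 - cv_avg / template_avg

if __name__ == "__main__":
    print(to_mm_ss(template_avg), mean(template_acc))  # 21:19 89.6
    print(to_mm_ss(cv_avg), mean(cv_acc))              # 17:04 85.5
    print(f"time saving: {100 * time_saving:.1f}%")    # time saving: 19.9%
```

The unrounded accuracies (89.6% and 85.5%) show that the tabulated values 90 and 86 are rounded. The time saving of 19.9% rounds to the 20% reported in the Conclusions.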

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Beskopylny, A.N.; Shcherban’, E.M.; Stel’makh, S.A.; Shilov, A.A.; Razveeva, I.; Elshaeva, D.; Chernil’nik, A.; Onore, G. Developing Computer Vision Models for Classifying Grain Shapes of Crushed Stone. Sensors 2025, 25, 1914. https://doi.org/10.3390/s25061914
