A Deep Learning Approach for Distant Infrasound Signals Classification

Abstract

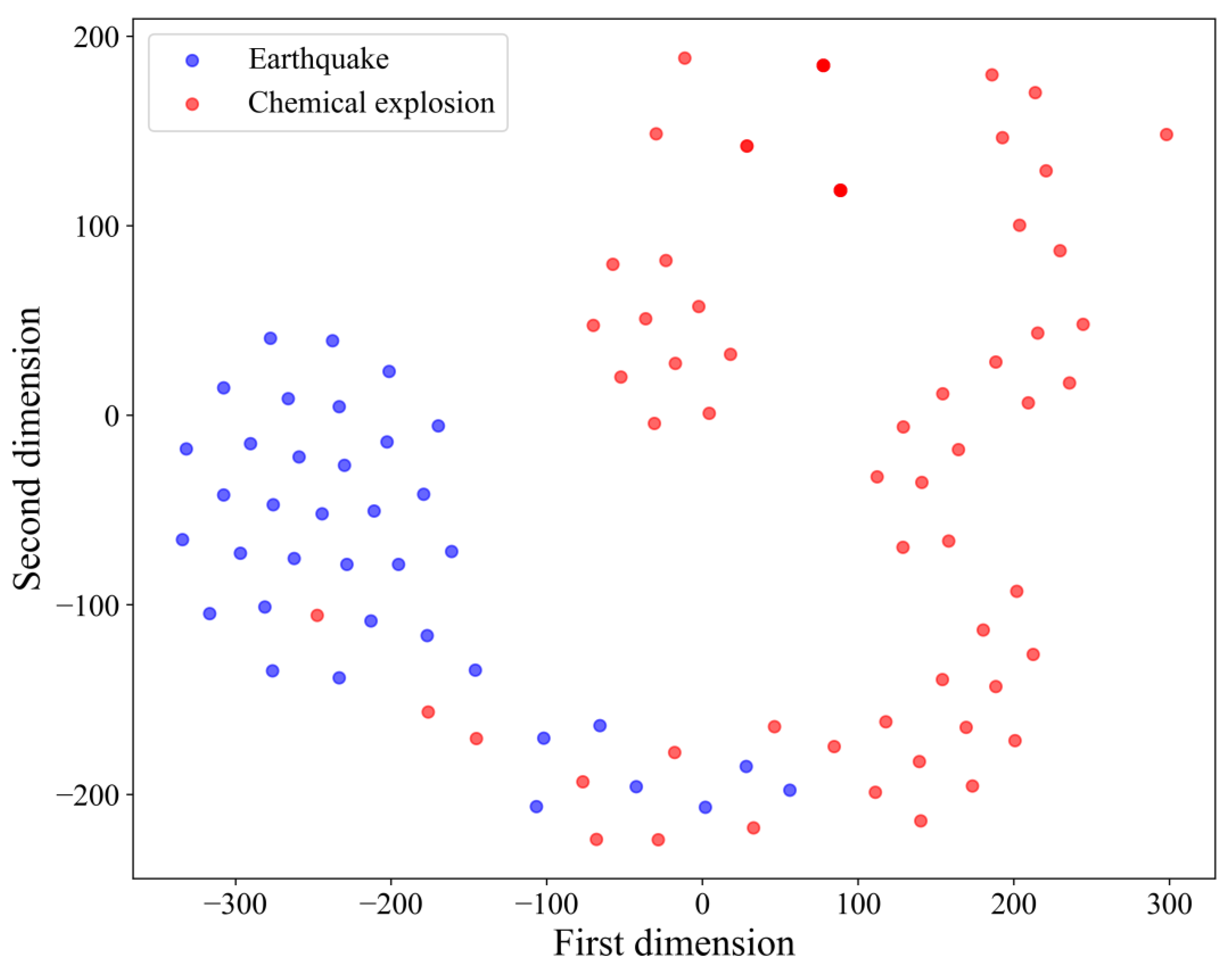

1. Introduction

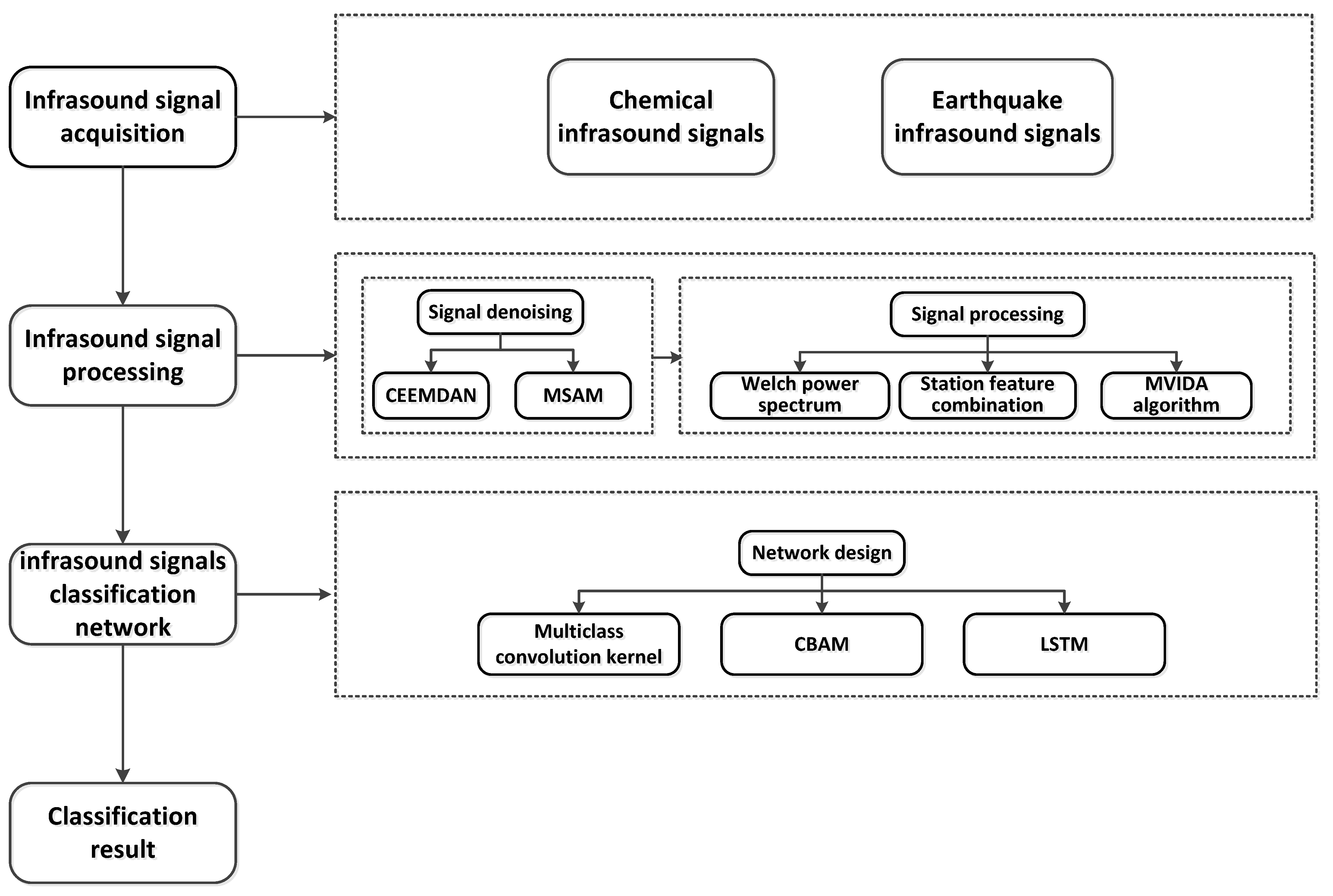

2. Research Lines and Methodology

2.1. Infrasound Signal Acquisition

2.2. Infrasound Signal Preprocessing

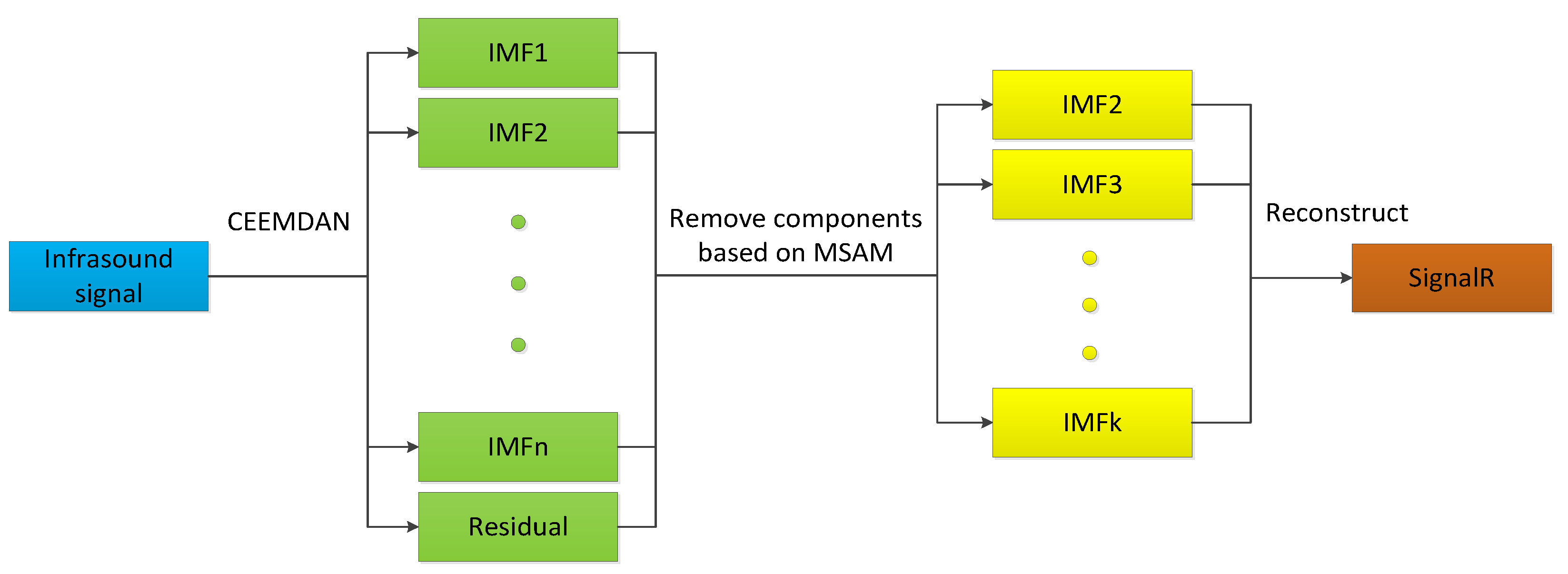

2.2.1. Signal Denoising

Complete Ensemble Empirical Mode Decomposition with Adaptive Noise

The Mean of the Standardized Accumulated Modes

Infrasound Signal Preprocessing Steps

- Signal decomposition: the CEEMDAN algorithm was employed to decompose the infrasound signal, yielding IMF components and residual components;

- Noise identification: the MSAM was utilized to distinguish between noise and useful signal components. Components with MSAM values significantly deviating from zero were identified as low-frequency noise and trend terms, which were subsequently eliminated;

- High-frequency noise removal: the IMF1 component, predominantly containing high-frequency noise, was discarded;

- Signal reconstruction: the remaining IMF components were reconstructed to obtain the denoised signal.
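The four steps above can be sketched in a few lines once the IMFs are available. The sketch below does not run CEEMDAN itself; it takes a list of modes as input and uses toy stand-ins for them. The MSAM formula used here (the mean of each fine-to-coarse partial sum of the modes, divided by its standard deviation) and the threshold of 0.1 are assumptions made for illustration, as are the function names `msam` and `denoise`. The sketch also assumes that every mode from the first significant MSAM departure onward is dropped.

```python
import math
from statistics import fmean, pstdev


def msam(imfs):
    """Assumed MSAM: standardized mean of each fine-to-coarse partial sum of the modes."""
    partial = [0.0] * len(imfs[0])
    values = []
    for imf in imfs:
        partial = [p + c for p, c in zip(partial, imf)]
        spread = pstdev(partial)
        values.append(fmean(partial) / spread if spread > 0 else 0.0)
    return values


def denoise(imfs, threshold=0.1):
    """Drop IMF1 and all modes from the first MSAM departure onward, then sum the rest."""
    values = msam(imfs)
    # Index of the first mode whose MSAM value departs from zero (assumed threshold).
    cut = next((m for m, v in enumerate(values) if abs(v) > threshold), len(imfs))
    kept = imfs[1:cut]  # imfs[0] is IMF1 (high-frequency noise), so it is skipped
    if not kept:
        return [0.0] * len(imfs[0])
    return [sum(column) for column in zip(*kept)]


# Toy stand-ins for CEEMDAN output: high-frequency noise, a useful mode, a trend.
n = 200
imf1 = [0.5 if t % 2 == 0 else -0.5 for t in range(n)]
imf2 = [math.sin(2 * math.pi * 5 * t / n) for t in range(n)]
trend = [2.0 + 0.01 * t for t in range(n)]

msam_values = msam([imf1, imf2, trend])
denoised = denoise([imf1, imf2, trend])
```

In this toy case only the middle mode survives: the MSAM value stays near zero until the trend is accumulated, so the trend is discarded together with IMF1.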

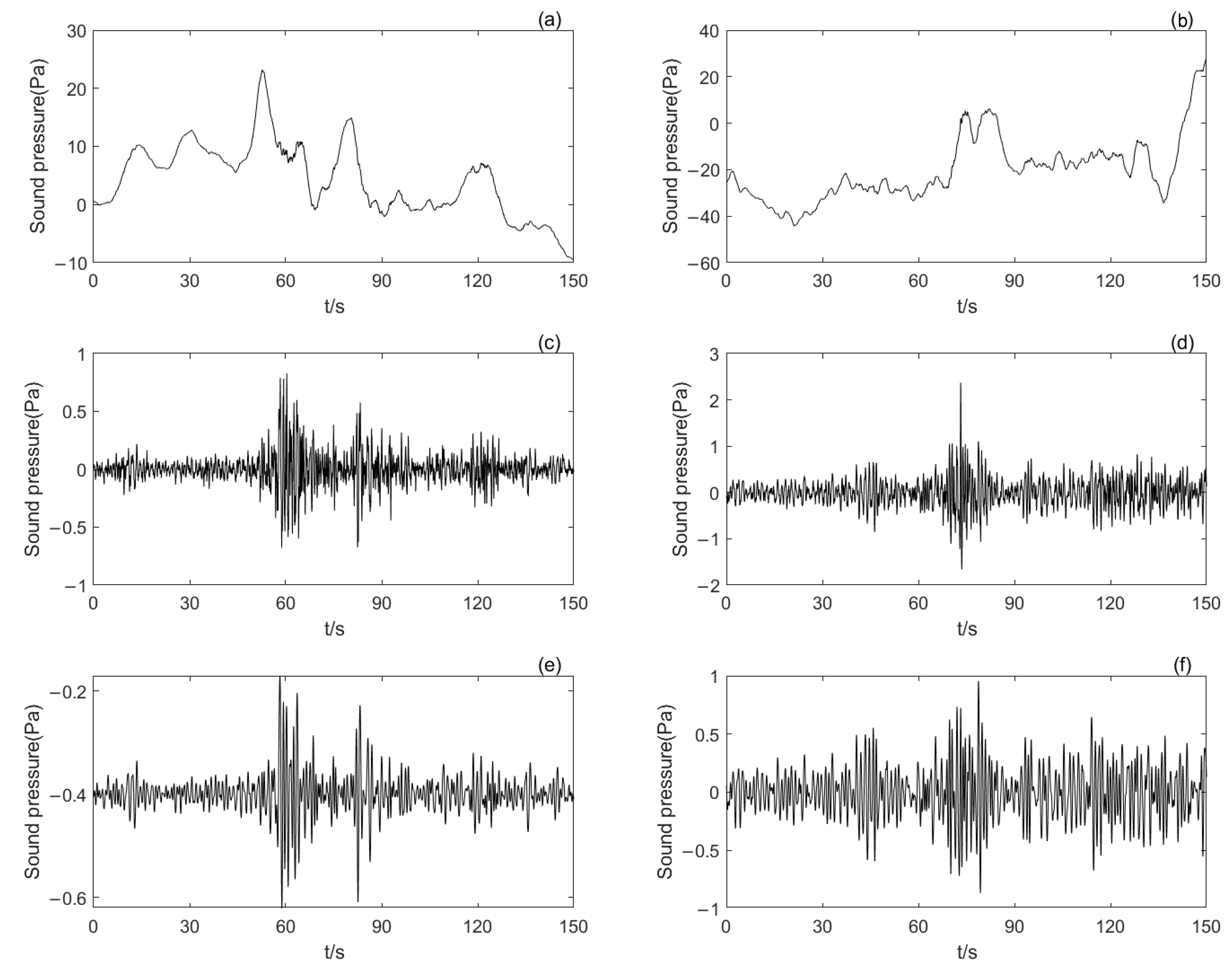

2.2.2. Signal Processing

Welch Power Spectrum

Station Signal Combination

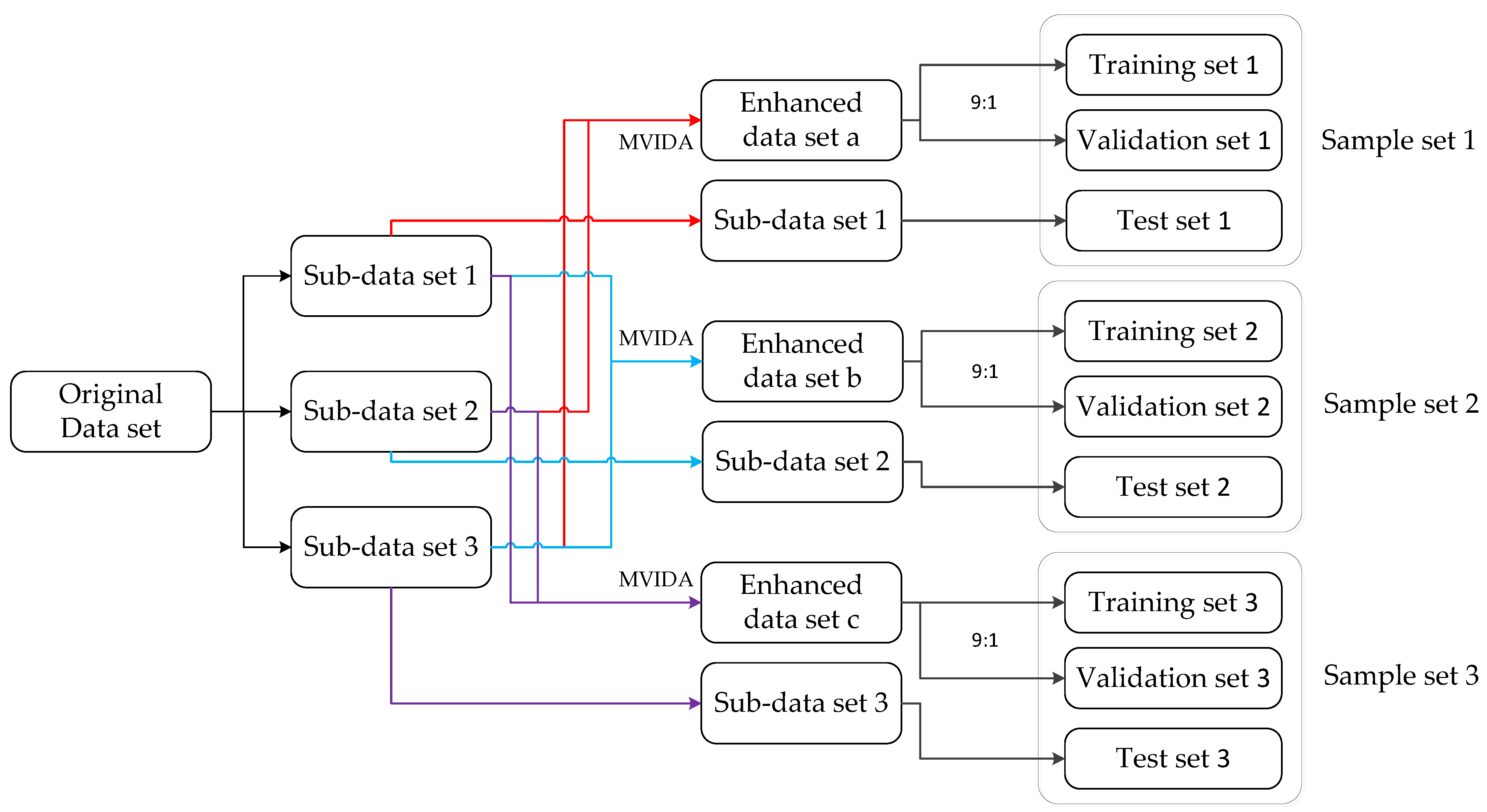

Mixed Virtual Infrasound Data Augmentation

2.2.3. Construction of Infrasound Signal Classification Data Set

- Generate training set 1 and validation set 1 from the infrasound samples of sub-data sets 2 and 3. That is, sub-data sets 2 and 3 are merged into sample set A. Sample set A is then expanded about fifty times using the MVIDA algorithm to obtain sample set 1, which is randomly shuffled and divided into training set 1 and validation set 1 at a ratio of approximately nine to one;

- Place the infrasound samples from sub-data set 1 into test set 1, without adding augmented virtual samples;

- Repeat the process to generate training set 2 and validation set 2 from the infrasound samples of sub-data sets 1 and 3, with test set 2 generated from the infrasound samples of sub-data set 2. Similarly, training set 3 and validation set 3 are generated from the infrasound samples of sub-data sets 1 and 2, with test set 3 generated from the infrasound samples of sub-data set 3. This results in three sets of training, validation, and test data. The classification results for each sample set are calculated separately and then averaged to obtain the final classification results. The number of infrasound samples in each data set before and after augmentation can be found in Table 2.
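The rotation described above, in which each sub-data set serves once as the test set while the other two are merged, augmented, shuffled, and split roughly nine to one, can be sketched as follows. This is a minimal illustration only: `build_folds` is a hypothetical helper, the `augment` argument is a placeholder for the MVIDA algorithm (which is not reproduced here and defaults to a plain copy), and the samples are dummy `(event, index)` tuples.

```python
import random


def build_folds(subsets, augment=lambda samples: list(samples), val_fraction=0.1, seed=0):
    """Build one train/validation/test split per sub-data set (held out as test set)."""
    rng = random.Random(seed)
    folds = []
    for k, test_subset in enumerate(subsets):
        # Merge every sub-data set except the held-out one.
        pool = [s for j, subset in enumerate(subsets) if j != k for s in subset]
        pool = augment(pool)   # placeholder for MVIDA; identity by default
        rng.shuffle(pool)
        n_val = max(1, round(len(pool) * val_fraction))
        folds.append({
            "train": pool[n_val:],
            "val": pool[:n_val],
            "test": list(test_subset),  # test samples are never augmented
        })
    return folds


# Dummy samples: each sub-data set holds samples from its own events only.
subsets = [
    [("event_a", i) for i in range(10)],
    [("event_b", i) for i in range(20)],
    [("event_c", i) for i in range(30)],
]
folds = build_folds(subsets)
```

Because the split is made at the level of sub-data sets, samples from one event cannot appear in both the training data and the test data of the same fold.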

2.3. Classification Model Based on Neural Network

3. Results and Discussions

3.1. Experimental Procedure

- Preprocess infrasound signals: First, CEEMDAN is applied to decompose the infrasound signal, yielding a set of IMF components and a residual component. Second, MSAM is used to distinguish noise from useful signal components: the higher-order IMF components from the point at which the MSAM value deviates significantly from zero are identified as low-frequency noise and trend terms and are removed. The IMF1 component, which is dominated by high-frequency noise, is also removed. The remaining IMF components are then reconstructed to obtain the denoised signal. Finally, the Welch power spectrum of the reconstructed signal is calculated;

- Combine infrasound signals: New infrasound samples are obtained by combining signals in pairs according to the station configuration;

- Data set division: The infrasound samples are divided into three sub-data sets, ensuring that the infrasound data generated by the same event exists in only one sub-data set. Each time, the training set and validation set are obtained from two of the sub-data sets and augmented by the MVIDA algorithm, while the test set is generated from the remaining sub-data set. This process is repeated three times to obtain three different sets of training, validation, and test sets;

- Model training and refinement: The PCMLN model is trained using the training set and then tuned based on the validation set;

- Classification using trained network model: The trained network model is used to classify the test set. This process is repeated three times, and then average classification results are calculated from these three repetitions.
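The last preprocessing step in the procedure above, the Welch power spectrum, averages the periodograms of overlapping, windowed segments. The dependency-free sketch below illustrates the idea; in practice a library routine such as `scipy.signal.welch` would normally be used. The segment length, the 50% overlap, the Hann window, and the 20 Hz sampling rate of the toy signal are assumptions for illustration, not settings taken from the paper.

```python
import cmath
import math


def welch_psd(x, fs, nperseg=64, overlap=0.5):
    """One-sided Welch PSD: average of Hann-windowed periodograms of overlapping segments."""
    if len(x) < nperseg:
        raise ValueError("signal is shorter than one segment")
    step = max(1, int(nperseg * (1 - overlap)))
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / nperseg) for n in range(nperseg)]
    scale = fs * sum(w * w for w in window)  # density normalization
    n_bins = nperseg // 2 + 1
    psd = [0.0] * n_bins
    starts = range(0, len(x) - nperseg + 1, step)
    for start in starts:
        segment = x[start:start + nperseg]
        mean = sum(segment) / nperseg
        tapered = [(v - mean) * w for v, w in zip(segment, window)]
        for k in range(n_bins):
            # Direct DFT of the tapered segment at bin k.
            coeff = sum(t * cmath.exp(-2j * math.pi * k * n / nperseg)
                        for n, t in enumerate(tapered))
            psd[k] += abs(coeff) ** 2 / scale
    psd = [p / len(starts) for p in psd]
    # Fold negative frequencies into the one-sided spectrum (not DC, not Nyquist).
    last = n_bins - 1 if nperseg % 2 == 0 else n_bins
    for k in range(1, last):
        psd[k] *= 2
    freqs = [k * fs / nperseg for k in range(n_bins)]
    return freqs, psd


# Toy signal: a 2.5 Hz tone sampled at an assumed 20 Hz.
fs = 20.0
signal = [math.sin(2 * math.pi * 2.5 * t / fs) for t in range(256)]
freqs, psd = welch_psd(signal, fs)
peak_freq = freqs[psd.index(max(psd))]
```

For the toy tone, the spectrum peaks at the 2.5 Hz bin, which is the kind of frequency-domain feature the classifier would then receive.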

3.2. Evaluation Parameters of Model Performance

3.3. Analysis of Experimental Results

3.3.1. Infrasound Signal Processing Ablation Experiment

3.3.2. Ablation Experiment of Neural Network Model

3.3.3. Model Performance Comparison Experiment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sub-data set | Chemical explosion: number of events | Chemical explosion: number of samples | Earthquake: number of events | Earthquake: number of samples |
|---|---|---|---|---|
| Sub-data set 1 | 10 | 123 | 4 | 121 |
| Sub-data set 2 | 12 | 144 | 2 | 154 |
| Sub-data set 3 | 6 | 119 | 2 | 128 |

| Sample set | Subset | Chemical explosion: sample size before augmentation | Chemical explosion: sample size after augmentation | Earthquake: sample size before augmentation | Earthquake: sample size after augmentation |
|---|---|---|---|---|---|
| Sample set 1 | Training set | 86 | 4947 | 106 | 6299 |
| Sample set 1 | Validation set | 10 | 549 | 12 | 699 |
| Sample set 1 | Test set (no augmentation) | 48 | - | 54 | - |
| Sample set 2 | Training set | 82 | 4456 | 92 | 5672 |
| Sample set 2 | Validation set | 9 | 495 | 10 | 630 |
| Sample set 2 | Test set (no augmentation) | 53 | - | 70 | - |
| Sample set 3 | Training set | 91 | 4465 | 111 | 5260 |
| Sample set 3 | Validation set | 10 | 496 | 13 | 584 |
| Sample set 3 | Test set (no augmentation) | 43 | - | 48 | - |

| Actual \ Predicted | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |
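The four quantities reported in the result tables (ACC, F-score, TPR, TNR) follow directly from the confusion-matrix counts above. The sketch below assumes that the F-score is the usual F1 score (harmonic mean of precision and TPR); the function name and the counts in the example are made up for illustration and are not results from the paper.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute ACC, F-score (assumed F1), TPR and TNR from confusion-matrix counts."""
    acc = (tp + tn) / (tp + fn + fp + tn)
    tpr = tp / (tp + fn)          # true positive rate (recall)
    tnr = tn / (tn + fp)          # true negative rate
    precision = tp / (tp + fp)
    f_score = 2 * precision * tpr / (precision + tpr)
    return {"ACC": acc, "F-score": f_score, "TPR": tpr, "TNR": tnr}


def average_metrics(per_fold):
    """Average each metric over the folds, as done for the three sample sets."""
    return {name: sum(m[name] for m in per_fold) / len(per_fold) for name in per_fold[0]}


# Made-up counts for a single fold, used only to exercise the functions.
example = classification_metrics(tp=40, fn=10, fp=5, tn=45)
averaged = average_metrics([example, example, example])
```

Averaging the per-fold dictionaries mirrors the way the final classification results are obtained from the three sample sets.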

| Noise reduction | Feature combination | ACC | F-score | TPR | TNR |
|---|---|---|---|---|---|
| × | × | 76.3% | 76.2% | 77.5% | 75.2% |
| √ | × | 78.6% | 77.4% | 74.9% | 82.1% |
| √ | √ 1 | 81.0% | 78.5% | 76.4% | 84.9% |

| CBAM | LSTM | ACC | F-score | TPR | TNR |
|---|---|---|---|---|---|
| √ | × | 82.3% | 80.4% | 79.9% | 84.3% |
| × | √ | 69.6% | 65.5% | 63.2% | 75.0% |
| √ | √ | 83.9% | 82.1% | 81.3% | 86.0% |
| × (baseline) | × 1 (baseline) | 81.0% | 78.5% | 76.4% | 84.9% |

| Category | Model | ACC | F-score | TPR | TNR |
|---|---|---|---|---|---|
| Based on classic CNN | LeNet-5 | 80.7% | 78.3% | 76.4% | 84.3% |
| Based on classic CNN | AlexNet | 79.8% | 78.1% | 79.2% | 80.2% |
| Based on classic CNN | VGG16 | 79.8% | 76.5% | 72.2% | 86.0% |
| Based on classic CNN | GoogLeNet | 80.1% | 76.8% | 72.2% | 86.6% |
| Based on classic CNN | ResNet18 | 80.7% | 79.0% | 79.9% | 81.4% |
| Classification CNN based on infrasound | Improved CNN | 81.0% | 78.5% | 76.4% | 84.9% |
| Classification CNN based on infrasound | Improved LeNet-5 | 80.4% | 77.9% | 75.7% | 84.3% |
| Classification CNN based on infrasound | Improved AlexNet | 80.7% | 78.4% | 77.1% | 83.7% |
| Classification CNN based on infrasound | PCMLN | 83.9% | 82.1% | 81.3% | 86.0% |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tan, X.; Li, X.; Li, H.; Zeng, X.; Liu, T.; Luo, S. A Deep Learning Approach for Distant Infrasound Signals Classification. Sensors 2025, 25, 2058. https://doi.org/10.3390/s25072058