The Reliability of 20 m Sprint Time Using a Novel Assessment Technique

Abstract

1. Introduction

2. Methods

2.1. Experimental Approach to the Problem

2.2. Subjects

2.3. Procedures

2.4. Statistical Analysis

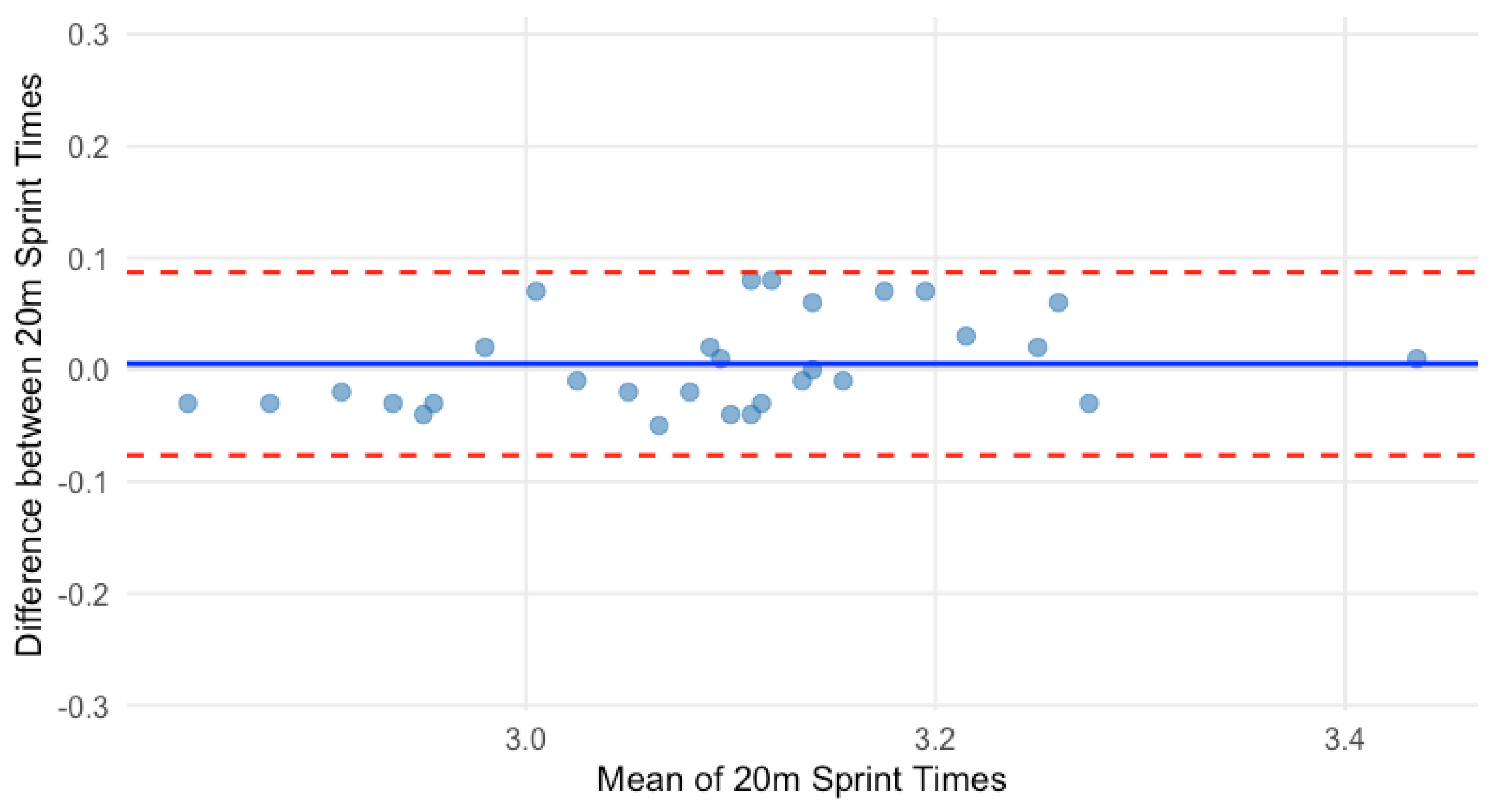

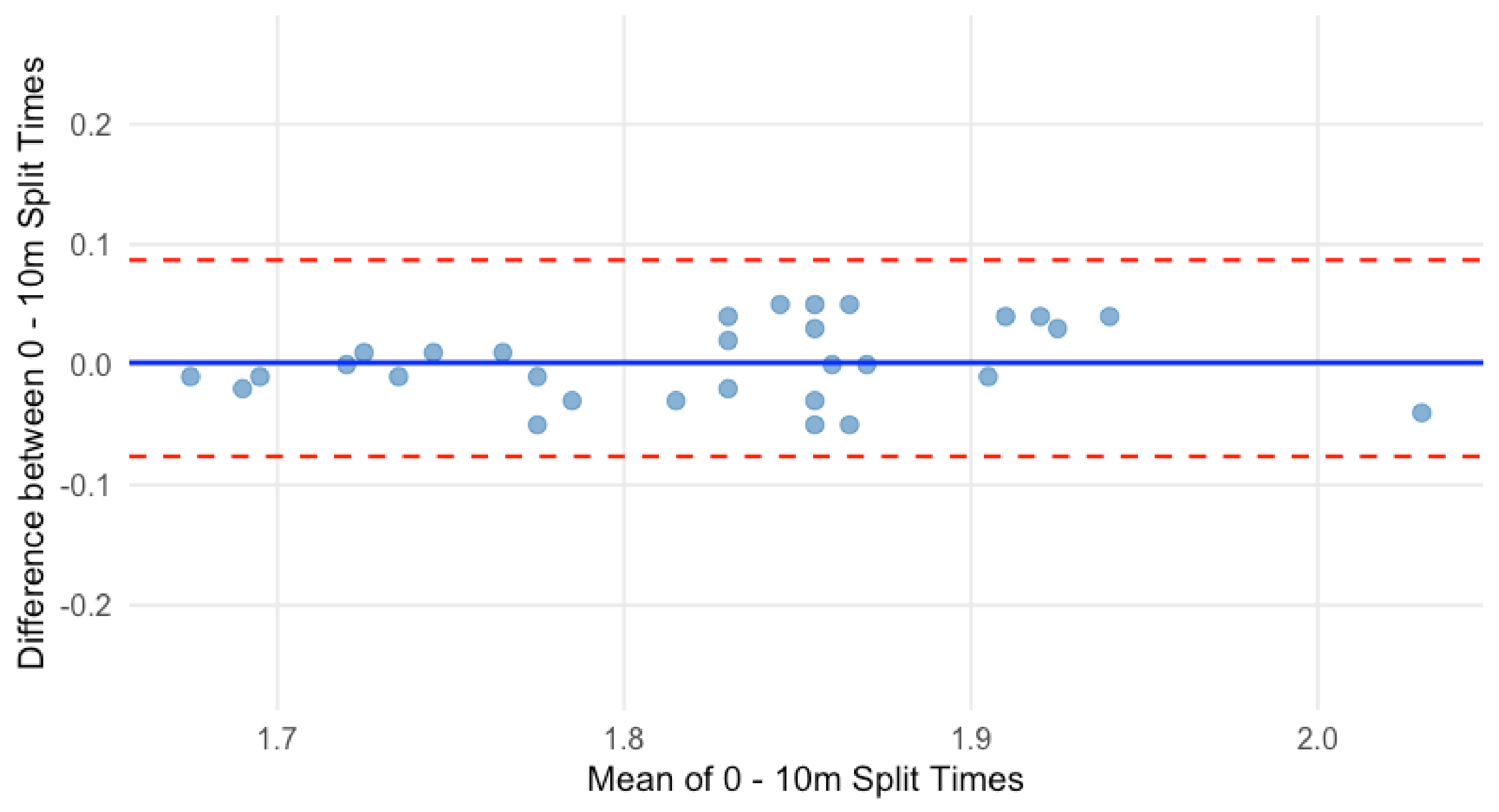

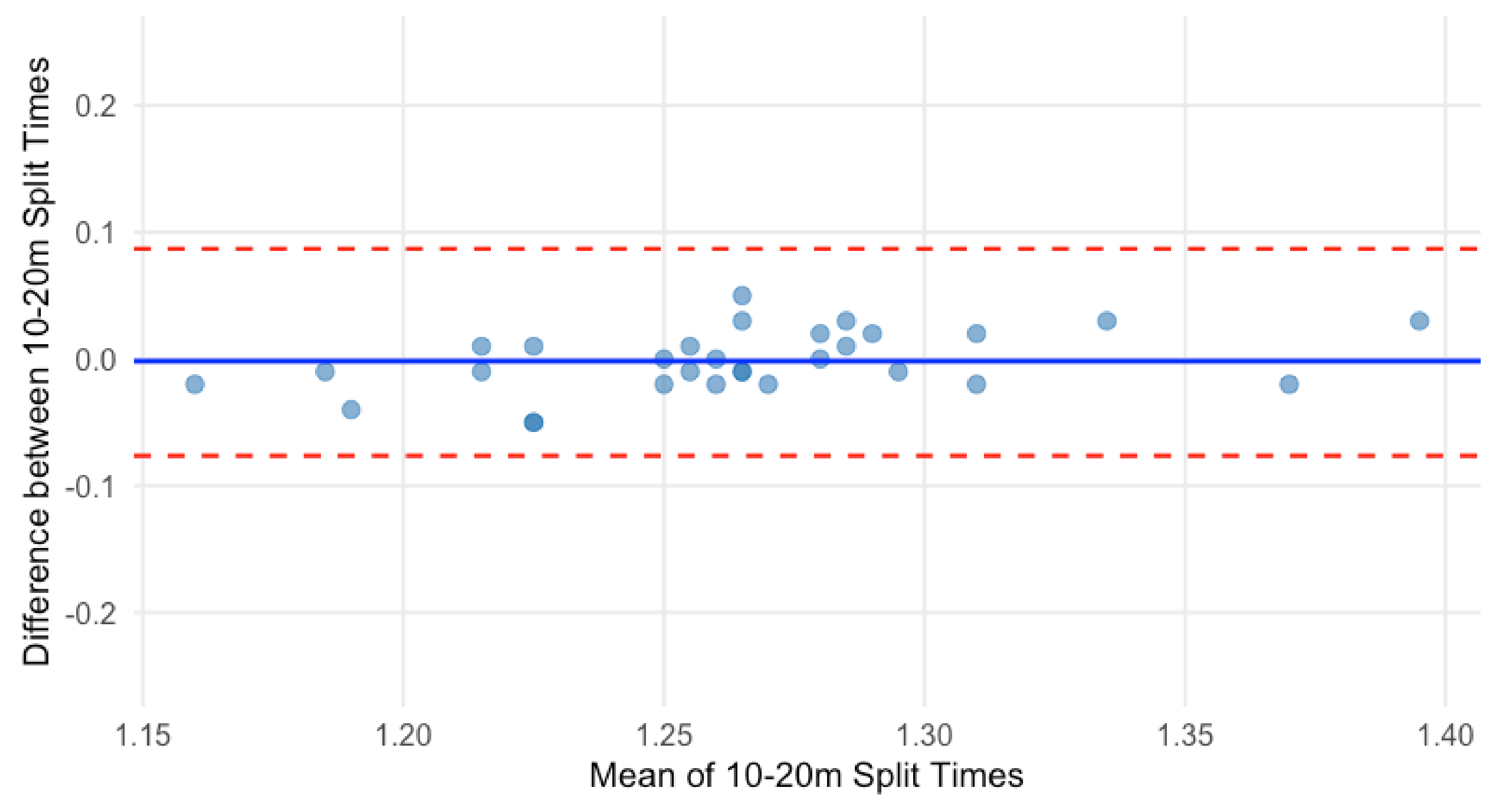

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


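| Sprint Times | Mean ± SD | CV (%) | ICC (95% CI) | TE (90% CI) | SEM | SWC (0.2 × SD) | SWC ≥ TE | MDC95 |
|---|---|---|---|---|---|---|---|---|
| 0–20 m (s) | 3.09 ± 0.13 | 0.52 | 0.95 (0.90, 0.98) | 0.016 (0.012, 0.021) | 0.029 | 0.025 | Yes | 0.057 |
| 0–10 m (s) | 1.82 ± 0.08 | 0.67 | 0.93 (0.86, 0.97) | 0.012 (0.009, 0.016) | 0.022 | 0.016 | Yes | 0.044 |
| 10–20 m (s) | 1.26 ± 0.05 | 1.36 | 0.89 (0.78, 0.95) | 0.017 (0.013, 0.023) | 0.017 | 0.010 | No | 0.033 |

CV, coefficient of variation; ICC, intraclass correlation coefficient; CI, confidence interval; TE, typical error; SEM, standard error of measurement; SWC, smallest worthwhile change (0.2 × between-subject SD); MDC95, minimal detectable change at the 95% confidence level. TE, SEM, SWC and MDC95 are expressed in seconds.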
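The derived columns in the table above can be cross-checked from the reported means, SDs, ICCs and typical errors. The sketch below assumes formulas that were inferred from the reported numbers rather than stated in this text: CV approximated as TE relative to the mean, SEM = SD × √(1 − ICC), SWC = 0.2 × between-subject SD, and MDC95 = 1.96 × SEM. Many sources instead define MDC95 as 1.96 × √2 × SEM, which would give larger values, so treat these formulas as assumptions. Because the inputs are rounded, recomputed values match the table only to within rounding (for example, the 0–10 m MDC95 comes out near 0.041 s rather than 0.044 s). The names `REPORTED` and `derived_stats` are illustrative.

```python
import math

# Values transcribed from the reliability table (all times in seconds).
# split -> (mean, between-subject SD, ICC, typical error TE)
REPORTED = {
    "0-20 m": (3.09, 0.13, 0.95, 0.016),
    "0-10 m": (1.82, 0.08, 0.93, 0.012),
    "10-20 m": (1.26, 0.05, 0.89, 0.017),
}


def derived_stats(mean, sd, icc, te):
    """Recompute the derived columns under ASSUMED formulas (inferred, not stated)."""
    cv = 100 * te / mean            # CV (%) approximated as TE relative to the mean
    sem = sd * math.sqrt(1 - icc)   # SEM = SD * sqrt(1 - ICC)
    swc = 0.2 * sd                  # SWC = 0.2 x between-subject SD
    mdc95 = 1.96 * sem              # MDC95 = 1.96 x SEM (no sqrt(2) term)
    return {"cv": cv, "sem": sem, "swc": swc, "mdc95": mdc95, "swc_ge_te": swc >= te}


for split, values in REPORTED.items():
    s = derived_stats(*values)
    print(f"{split}: CV={s['cv']:.2f}% SEM={s['sem']:.3f} SWC={s['swc']:.3f} "
          f"MDC95={s['mdc95']:.3f} SWC>=TE={s['swc_ge_te']}")
```

The `SWC>=TE` flag reproduces the table's Yes/Yes/No pattern: only the 10–20 m split has a typical error larger than the smallest worthwhile change, so a single test on that split cannot reliably detect a change of that size.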

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Holmberg, P.M.; Olivier, M.H.; Kelly, V.G. The Reliability of 20 m Sprint Time Using a Novel Assessment Technique. Sensors 2025, 25, 2077. https://doi.org/10.3390/s25072077
