Abstract

Few-shot learning has demonstrated remarkable performance in medical image segmentation. However, existing few-shot medical image segmentation (FSMIS) models often struggle to fully utilize query image information, leading to prototype bias and limited generalization ability. To address these issues, we propose the dual-filter cross attention and onion pooling network (DCOP-Net) for FSMIS. DCOP-Net consists of a prototype learning stage and a segmentation stage. During the prototype learning stage, we introduce a dual-filter cross attention (DFCA) module to avoid entanglement between query background features and support foreground features, effectively integrating query foreground features into support prototypes. Additionally, we design an onion pooling (OP) module that combines eroding mask operations with masked average pooling to generate multiple prototypes, preserving contextual information and mitigating prototype bias. In the segmentation stage, we present a parallel threshold perception (PTP) module to generate robust thresholds for foreground and background differentiation and a query self-reference regularization (QSR) strategy to enhance model accuracy and consistency. Extensive experiments on three publicly available medical image datasets demonstrate that DCOP-Net outperforms state-of-the-art methods, exhibiting superior segmentation and generalization capabilities.

1. Introduction

Automatic medical image segmentation [1] has demonstrated significant advantages in terms of efficiency, flexibility, and reliability compared to manual segmentation with the evolution of artificial intelligence [2]. It provides a more scientific and accurate basis for physicians’ clinical decision making. Currently, most existing medical image segmentation models are built on deep learning methods [3,4,5]. An essential prerequisite for these models to achieve excellent performance is to have a large amount of annotated data during training. However, due to the necessity to protect patient privacy and the professionalism required to label medical images, constructing medical image datasets with dense annotations takes time and effort [6]. Therefore, medical image segmentation evolves into an immensely challenging endeavor.

Fortunately, few-shot learning (FSL) [7,8,9] offers a potential solution to address the issue of insufficient medical image data. The key to this method is to fully utilize the similarity between the support set and the query set to guide segmentation and effectively enhance the model’s “learn how to learn” capability, enabling it to quickly generalize to new categories even in the case of scarce samples. Since ALP-Net [10] introduces few-shot learning into medical image segmentation, few-shot medical image segmentation (FSMIS) models have developed rapidly. The current FSMIS models are mainly divided into two categories: one is based on prototype networks [11,12,13,14,15] and the other is based on interaction-based models [16,17,18,19,20]. Nowadays, in the FSMIS field, models based on prototype networks have become the mainstream architecture.

However, traditional prototype-based models [21,22] may fail to effectively learn from the query foreground (FG) features, which can amplify the prototype bias issue. To address this issue, the recent CRAP-Net [20] employs a multi-layer cycle-resemblance module to capture pixel-level relationships between support features and query features. Moreover, to eliminate irrelevant pixel information in support images, CAT-Net [23] adopts a cross masked attention mechanism to enable effective interaction between support FG features and query features. Regrettably, such approaches tend to intermingle the background (BG) features of the query image with the FG features of the support images, resulting in BG mismatches. Furthermore, some models rely solely on a masked average pooling (MAP) [24] method during prototype extraction after feature interactions. This process may cause prototypes to struggle to represent fine-grained support FG features. The combination of these two factors magnifies the prototype bias issue.

Meanwhile, existing FSMIS models [16,25,26] suffer from poor generalization performance and lack of in-depth learning from the query image. This limitation restricts their ability to effectively adapt to unseen categories. To address these issues, PA-Net [27] introduces a potential solution by incorporating a prototype alignment regularization task, which allows query image information to flow back to the support image, thereby enhancing the segmentation ability of prototype learning-based models. Nevertheless, its improvement in model generalization performance is limited. In addition, some existing methods [25,26] attempt to use the query image to generate learnable thresholds to delineate FG and BG regions when performing segmentation on the query image. However, these methods only take the query image as input, making the threshold vulnerable to noise. Meanwhile, due to the insufficient feature extraction of the query image, the generated thresholds are challenging to complete the delineation task effectively. Consequently, it is essential for elevating FSMIS models’ generalization and segmentation capabilities to design a regularization task and a practical threshold generation module.

To tackle the issues above, we propose a dual-filter cross attention and onion pooling network (DCOP-net) for FSMIS. Compared to other FSMIS methods that suffer from prototype bias and lack generalization ability, the innovation of DCOP-Net lies in the effective interaction between support and query features, allowing contextual information from support images to be better retained as prototypes, thereby addressing the BG mismatch problem and mitigating prototype bias. Furthermore, DCOP-Net is capable of generating robust thresholds for anomaly score discretization and incorporates a novel feedback mechanism, enabling superior segmentation and generalization performance compared to other FSMIS methods. Specifically, we design dual-filter cross attention (DFCA) and onion pooling (OP) modules in the prototype learning stage to alleviate the prototype bias problem. Firstly, the DFCA module performs a dual filter on the query BG by a prior mask and an adaptive value to avoid the problem of BG mismatch. Subsequently, the DFCA module reduces the intra-class gap of FG categories by feature fusion. It captures the pixel-wise relation between query FG features and support FG features using the cross attention mechanism to obtain enhanced support and query features. Inspired by real-life Russian nesting dolls, we construct the OP module which generates onion layer masks by eroding query masks layer by layer through the proposed erosion pooling operation. Then, the OP module utilizes MAP to extract the prototypes and a fully connected layer-based approach to enable the prototypes to learn from each other, thus reducing the loss of critical details of the medical images when obtaining the prototypes. In this way, the quality of the prototype is significantly improved.

In the segmentation stage, we propose a parallel threshold perception (PTP) module and implement query self-reference regularization (QSR) to improve the model’s generalization and segmentation capabilities. The PTP module learns segmentation thresholds based on a parallel architecture, which effectively improves the nonlinearity of the module and reduces the impact of noise in medical images on threshold generation. The QSR module improves the model’s self-correction and generalization ability by self-guiding the query prediction information flow back to the query image, forming a feedback mechanism between the prediction mask and itself.

In summary, our contributions can be summarized as follows:

- In the prototype learning stage, we propose the DFCA and OP modules to mitigate the issue of prototype bias in FSMIS. The DFCA module employs a dual-filter mechanism to prevent BG mismatches, enabling prototypes to learn query FG information more efficiently. Meanwhile, the OP module preserves the prototype’s contextual information when extracting the contextual information of prototypes during extraction and enhances their ability to represent FG features in medical images.

- In the segmentation stage, we develop the PTP and QSR modules to improve the accuracy and generalization capabilities of the FSMIS models when segmenting query images. PTP module acquires valid thresholds through a dual-path architecture. Meanwhile, QSR improves the model’s ability to adapt to unseen categories by forming a suitable feedback mechanism.

- We propose an effective FSMIS model DCOP-Net. Extensive experiments on three publicly available medical image datasets show that DCOP-Net outperforms other state-of-the-art methods. The results of the comparative experiments and ablation studies demonstrate the effectiveness and superiority of our method.

The rest of this article is organized as follows. Section 2 presents the related work. Section 3 describes the proposed methods in detail. Section 4 evaluates the proposed model on three public datasets and provides experimental results. Finally, Section 5 summarizes the entire article and suggests directions for future work.

2. Related Work

2.1. Few-Shot Medical Image Segmentation

The automatic medical image segmentation models usually adopt visual transformers [28], multi-scale fusion [29], and other methods [30,31] to achieve more accurate, flexible, and reliable results than manual segmentation. However, if large annotated medical image datasets are not available during training, it becomes challenging for large-scale image segmentation models to achieve the best possible results. Unlike natural images, acquiring medical images is more costly, and the annotation process is complex. Few-shot image segmentation provides an effective solution to these issues. Current FSMIS models are mainly divided into methods based on prototype networks [11,12,13,14,15] and interaction-based models [16,17,18,19,20].

As a pioneer in attention-based interactive models for FSMIS, SE-Net [16] achieves effective interactions between the conditioner arm and segmenter arm [32] by introducing channel squeezing and spatial excitation blocks. SSL-ALPNet [13], proposed by Ouyang et al., is the first prototype-based FSMIS method, which can adaptively generate local prototypes according to organ size and predict the FG or BG regions in the query image by distinguishing between FG and BG prototypes. Ouyang et al. [13] also proposed a superpixel-based self-supervised method to meet the demand for labeled data during training. ADNet [25] builds upon this by extending superpixels to supervoxels, fully utilizing the 3D information of the image, and introducing a fixed threshold to segment the query mask. ADNet++ [33] further increases the measurement of predictive uncertainty and proposes a one-step multi-class medical image segmentation framework. CRAP-Net [20] designs a recurrent similarity attention module to preserve the spatial connections between support and query images. CAT-Net [23] limits the attention range while mining the relevance between support and query images.

However, the above works either do not learn the FG information of the query image, or there is a BG mismatch issue at the time of learning. These reasons amplify the prototype bias problem, resulting in inaccurate segmentation of the query images by the model. Taking AD-Net [25] as the baseline network, we propose a DFCA module, which ensures effective interactions between support and query FG features by filtering on BG elements, thereby addressing the BG mismatch issue. It consists of a prior mask generation (PMG) module and a filter cross attention (FCA) module. The PMG module creates a prior mask for the query image, serving as the first barrier to prevent interactions between the query BG and support FG; the FCA module, on the other hand, acts as the second barrier by limiting the impact of low-quality attention scores. This design not only enhances the representation ability of the support prototypes, but also avoids entanglement between the query BG and support FG features.

2.2. Attention Mechanism

Some recent attention-based works [34,35] have proved that the attention mechanism can achieve significant improvements in computer vision tasks. Therefore, many few-shot segmentation models [20,23,36,37,38,39] employ cross attention to solve the problem of some features in the query image not appearing in the support features in few-shot segmentation tasks. Particularly, CRAP-Net [20] introduces a cycle-resemblance attention network, facilitating the reciprocal interaction between support and query feature sets. This interaction enables the generation of enhanced support and query features, which are then utilized to refine and adjust their respective informational content.

Although these works have shown promising results, the query BG features are inevitably fused with the unmatched supporting FG features, resulting in feature deviations. Therefore, in this work, we design a dual-filter cross attention (DFCA) module to reduce the entanglement between FG and BG features, thereby improving the quality of the prototype.

2.3. Prototype Extraction Method

In FSMIS models, utilizing the similarity between prototypes and query features for segmentation is a mainstream approach. Therefore, enhancing the quality of prototypes becomes a crucial step in improving model performance. Current prototype-based methods may have prototype bias issues [24], which researchers typically address by enhancing the representation ability of individual prototypes or generating multiple prototypes. For instance, PA-Net [27] enhances the representation ability of prototypes through a prototype alignment regularization task. Although single-prototype methods have strong interpretability, they still lack representative details; multi-prototype methods, on the other hand, make up for this deficiency, such as ALPNet [13], which adaptively generates multiple local prototypes based on organ size to supplement the details in the prototype set. Despite this, while multi-prototype methods can provide rich and detailed information, they may also lead to feature fragmentation, making it difficult for a single prototype to express complete organ features.

Inspired by the layer-wrapping of onions in nature, this work proposes the OP module. In order to combine the merits of multiple prototypes and single prototype approaches, we utilize the OP module to retain support image context information to generate multiple prototypes. Subsequently, we aggregate these prototypes into one, enhancing the prototype’s representational capacity and interpretability.

2.4. Regularization

Regularization techniques are crucial in improving few-shot segmentation models’ segmentation and generalization capabilities. Previous works [14,15] typically follow this process: utilizing support images and masks to obtain support prototypes and then utilizing these prototypes to segment query images. However, this workflow only partially leverages support images, query images, and masks. Thus, PA-Net [27] proposes a prototype alignment regularization that enhances the model’s generalization ability by segmenting the support images in reverse using the query and predicted masks as new support images and masks to obtain the loss function. In addition, Wang et al. [40] noticed that existing methods neglected supervision of the support images and proposed self-reference regularization, which enhances the support FG prototype’s representational capacity for the FG by segmenting the support images using the support prototypes to obtain the loss function.

However, the above methods still lack in-depth learning and understanding of query images. Therefore, we design QSR, a loss function whose influence gradually increases with the number of training rounds. It can assist in enhancing the model’s comprehension and segmentation of query images.

3. Proposed Method

3.1. Problem Definition

The FSMIS task aims to use techniques such as meta-learning [41] to train a model, which is trained from the dataset containing visible categories , to quickly generalize to the segmentation of unseen categories . This means that . During the training stage, we follow the episode training method [27], which is commonly used in FSMIS tasks. Specifically, in each N-way K-shot task, we adopt a random sampling strategy to divide the dataset with novel categories into a support set and a query set , where has N categories and superscripts K and are the number of image–mask pairs for the support and query sets, respectively. Moreover, each image–mask pair in the task forms an episode, where and denote the i-th support image and its corresponding mask. The subscripts s and q represent that the categories of images are support or query images, respectively. In general, the inputs to the model are the given support image with its corresponding mask and the query image, while the output is the predicted mask for the query image.

3.2. Architecture Overview

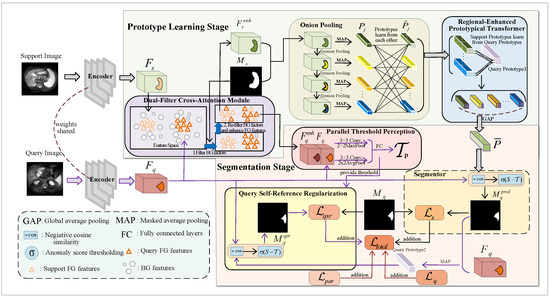

As shown in Figure 1, the proposed DCOP-Net’s workflow is divided into two main stages: prototype learning and segmentation. The prototype learning stage, composed of the DFCA, OP, and Regional-enhanced Prototypical Transformer (RPT) modules [42], has the core task of learning high-quality support prototypes. Meanwhile, the segmentation stage, which consists of the PTP, Segmentor, and QSR modules, is primarily responsible for segmenting the query images.

Figure 1.

Overview of the proposed DCOP-Net architecture. This model consists of two stages, i.e., the prototype learning stage and the segmentation stage, which are used to complete the prototype learning and the segmentation of the query image, respectively.

Specifically, we first input the support and query images into a weight-sharing feature encoder to extract feature maps. Then, in the prototype learning stage, we propose a novel strategy for learning prototypes. In the first step, we input support features and query features into the DFCA module to obtain the enhanced support features and the enhanced query features. The DFCA module filters background features and employs a cross-attention-based approach, enabling the support FG features to learn and integrate the query FG features, effectively. In the second step, we input the enhanced support features from above and their ground truth masks into the OP and RPT modules to obtain the support prototype. The innovative OP module can generate onion-layer masks by eroding support mask boundaries layer by layer and then generate multiple prototypes using MAP. Afterward, the OP and RPT modules enable the prototypes to learn from each other, thus enhancing their representation ability for FG features. Finally, we employ global average pooling (GAP) to aggregate multiple prototypes into one.

During the segmentation stage, we utilize the segmentation method based on anomaly scores [25] to segment query image and design the QSR module to enhance the model’s generalization ability. Specifically, we concatenate the query features output by the DFCA module with the original query features and then input the result into the parallel-structured PTP module for feature processing, generating parameters for thresholding the anomaly scores in the subsequent context. In the subsequent Segmentor module, we utilize the query features and the prototype extracted from the prototype learning stage to calculate the negative cosine similarity (i.e., anomaly scores) [25]. Then, we employ the parameters output by the PTP module to binarize calculation results, thereby obtaining the predicted mask. Significantly, after completing segmentation, we propose a QSR module that resegments the query image using the query image and the predicted mask. Thus, QSR creates a feedback mechanism regarding the query image, enhancing the accuracy and consistency of the model when processing query images.

3.3. Prototype Learning Stages

Before the prototype learning stage, we employ a parameter-sharing feature encoder [43] to extract feature vectors from support image and query image . This process can be represented as follows:

where and are the support and query features, respectively.

3.3.1. Dual-Filter Cross Attention Module

During the prototype learning stage, many FSMIS models leverage cross-attention-based approaches [22,23] to achieve the interactions between support and query features, enhancing support FG features’ ability to represent FG classes. However, feature interactions that fail to filter BG features may have the query BG incorrectly fused with the support FG features, which can directly lead to lower prototype quality and indirectly lower segmentation accuracy.

To address this problem, we restrict the interaction between query FG features and support FG features by purposefully filtering BG features with the proposed DFCA module. This design allows for the stable fusion of FG features, thereby significantly enhancing the representation of the support FG features while avoiding BG mismatching issue [44].

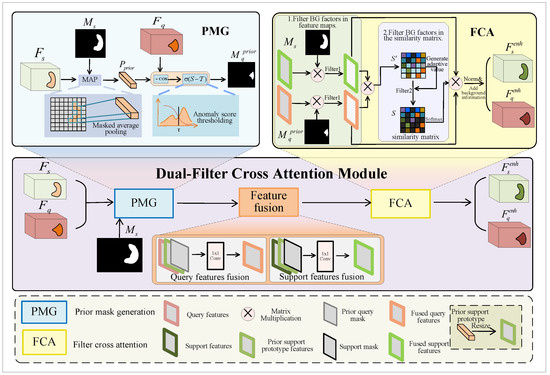

The entire process is illustrated in Figure 2. Firstly, we input the support features, query features, and support mask into the prior mask generation (PMG) block to obtain a prior query mask. Then, to reduce the disparity between the FG features of support and query images, we fuse the support features , support mask (or query features , prior query mask ), and prior support prototype map using 1 × 1 convolution, outputting the fused support features (or fused query features ). Finally, we employ the proposed filter cross attention (FCA) block to facilitate mutual learning among the FG features while filtering out the influence of BG factors as much as possible, resulting in enhanced support features (or enhanced query features ).

Figure 2.

Dual-filter cross attention module. This module takes support features, query features, and the support mask as inputs and outputs enhanced support features and enhanced query features after three blocks of PMG, feature fusion, and FCA.

(1) Prior mask generation

The PMG module applies MAP to the support image and support mask to compute the prior support prototype. The prior support prototype is then used to calculate the cosine similarity with the query image, which is subsequently thresholded to obtain the prior query mask. Using the generated prior query mask, we can initially separate the FG and BG regions of the query image.

Specifically, as shown in Figure 2, we conduct MAP [24] to obtain a prior support prototype by leveraging the support features and corresponding mask . The mathematical form of this process is expressed as follows:

where ⊙ represents the Hadamard product, denotes the pixel position in the original mask, and represent the support features and the corresponding binary FG mask, respectively, and represents the support prototype in the process of generating the prior mask.

Subsequently, we utilize to compute the negative cosine similarity (i.e., anomaly scores) with each location in . This can be denoted as:

where is the anomaly score map for each position in , represents the query features, is the scaling factor introduced by Oreshkin et al. [45] to facilitate backpropagation, which is generally set to 20, and represents the norm of a matrix.

After that, to make the process of thresholding the anomaly scores differentiable, we employ a shifted Sigmoid function [25], which ensures that regions with anomaly scores below the prior threshold obtain a higher FG probability, thereby obtaining the final prior query mask . is typically set to [25]. The entire process is illustrated in the following formula:

where is the Sigmoid function with a steepness parameter .

(2) Feature fusion

Before the support and query FG features interact, it is important to recognize that they may not be similar. In order to close their gaps and improve the quality of interaction, we fuse the query features , prior support prototype features , and prior query mask . Moreover, we fuse the support features , prior support prototype features , and support mask . This aligns the query features with the support features in the same feature space, reducing the distribution discrepancy between them.

Specifically, as shown in Figure 2, we process to match the size of , thereby obtaining prior support prototype features . Subsequently, we conduct channel concatenation on , , and and use convolution for dimensionality reduction to achieve feature fusion. Similarly, will be concatenated with and to achieve feature fusion. The fused query features and fused support features are calculated as:

where denotes channel concatenation and is convolution operation.

(3) Filter cross attention

As shown in Figure 2, after feature fusion, FCA takes the fused support features , fused query features , and both corresponding masks as inputs and then outputs the enhanced support features and enhanced query features . We use masks as the first filter to filter BG factors in feature maps, which involves performing a Hadamard product between masks and features. This process is shown in the following formulas:

where and are the FG parts of fused support features and fused query features, respectively.

Then, we perform a cross-attention-based approach [20,23] to obtain and . We take obtaining as an example to illustrate the whole process. FCA first projects support FG features into a sequence and then projects the query FG features into sequences .

where and are weight matrices and bias terms for generating , and are weight matrices and bias terms for generating , and and are weight matrices and bias terms for generating . After that, FCA conducts matrix multiplication to calculate the similarity between and to obtain the similarity matrix :

where is the dimension of .

However, considering the inaccuracy of the prior query mask, the first filter may not completely filter BG factors. We design an filtering function in cross attention as the second filter to filter BG factors in :

where is the filtering function, is the filtered similarity matrix, is the adaptive value for filtering BG factors, and and represent the operations of obtaining the maximum value and the average value, respectively.

The filtering function adaptively filters low-quality similarity scores that may exist in . Then, we use the softmax function to normalize and fuse the result with , which can be denoted as:

where is the FG regions of enhanced support features.

After that, FCA adds the corresponding BG information to , yielding the enhanced support features :

where represents the operation of adding BG information.

Similarly, we can obtain the enhanced query features . The whole process is as follows:

where sequence is the query FG features being projected and sequences are the support FG features being projected.

3.3.2. Onion Pooling

During the prototype extraction, prototype bias represents a significant challenge that every prototype learning method must confront. In addition, due to intra-class diversity, there will be significant differences between support and query features, so the intra-class bias problem is also a significant challenge.

To address the above challenges, we propose the OP module and introduce RPT module. We use the erosion pooling (EP) operation to help prototypes acquire richer contextual information, thus enhancing the ability of prototypes to represent FG features and alleviating the problem of prototype bias. Furthermore, we alleviate the intra-class bias by using the self-attention mechanism and the RPT module to reduce the inconsistency between prototypes.

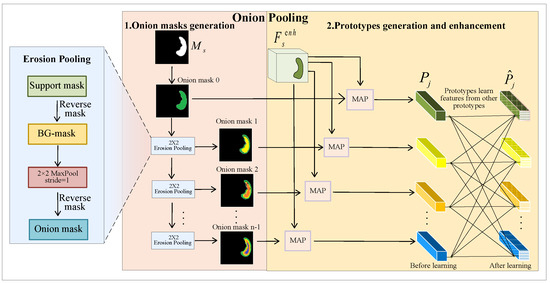

Specifically, we use the proposed EP operation to erode the support mask, creating layer-by-layer onion masks that prepare for extracting prototypes. Then, we conduct the MAP method to generate prototypes. Finally, we employ a self-attention-based method to enable mutual learning within the prototypes. This process is illustrated in Figure 3.

Figure 3.

Onion pooling module. This module extracts multiple support prototypes by conducting erosion pooling (EP), masked average pooling (MAP), and a self-attention-based approach on the support mask and the enhanced support features.

(1) Onion mask generation

Here, we refer to the eroded support masks as onion masks. We conduct several EP operations to shrink the FG region of the support mask progressively, resulting in multiple onion masks.

In the beginning, we obtain the BG mask by reversing the support mask. Subsequently, we perform a max-pooling operation on the BG mask to reduce the size of the FG region. These two steps simultaneously expand the background region of the mask while reducing the foreground region. Finally, we reverse the pooled BG mask again to obtain the FG mask, which is the onion mask. This process can be expressed as follows:

where represents the onion mask obtained after j-th () erosion pooling. It is worth noting that is support mask when . represents the EP operation using a window.

Finally, we obtain n onion masks by executing EP n — 1 times. Empirically, the upper limit of the number of onion layers is typically set to 4.

(2) Prototypes generation and enhancement

After obtaining onion masks, we conduct MAP to extract support prototypes by leveraging enhanced support features and onion masks:

where represents the support prototype extracted using the j-th onion mask. It is worth mentioning that we obtain n support prototypes from the n generated onion masks.

To alleviate intra-class bias, we employ a self-attention mechanism [46] to enable prototypes to learn from each other internally. Precisely, we concatenate the prototypes into one, then project the result into the sequences , , and :

where , , are weight matrices for generating , , , respectively. , , and are bias terms for generating , , and , respectively. Subsequently, we compute the dot product similarity between and , then normalize it using a softmax function to obtain the attention scores. We employ the attention scores to weight and then split the result into n prototypes. The entire process is illustrated as follows:

where represents the support prototypes generated by mutual learning through the self-attention method (where ) and represents splitting the concatenated prototype into n prototypes.

3.3.3. Regional-Enhanced Prototypical Transformer Module

After extracting the prototypes using the OP module, we introduce the RPT module to mitigate the effects of intra-class bias. As shown in Figure 1, the RPT module employs the methods presented in PRNet [47] to extract a coarse query prototype from . Subsequently, it utilizes this query prototype to refine support prototypes. In the end, GAP operation is used to regenerate the optimal global prototype . The whole process is shown below.

where is the global average pooling operation and is the operation using the RPT module.

In the next segmentation stage, is used to segment the query image.

3.4. Segmentation Stages

3.4.1. Parallel Threshold Perception Module

Few-shot medical image segmentation models typically make predictions by measuring the similarity between support and query images, followed by thresholding the prediction results with a parameter to generate the final segmentation. In AD-Net [25], Hansen et al. proposed a learnable threshold during training, combined with a Sigmoid function with a steepness parameter of 0.5, to assign higher foreground probabilities to regions with values below the threshold and lower foreground probabilities to regions above .

In the standard segmentation setting, test-class slices are accessible during training, enabling the learned threshold for anomaly detection to be optimized on the segmentation targets. However, if test-class slices are excluded during training, the test classes become entirely unseen categories for the model. Consequently, the threshold, not being optimized on these segmentation targets, fails to adapt directly to such categories. This limitation may explain the suboptimal performance of AD-Net in the Setting 2. Therefore, it is essential to dynamically generate thresholds based on the content of the query image, rather than relying solely on the loss between prediction results and ground truth to learn the threshold.

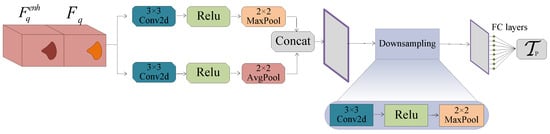

Accordingly, to address this issue, we propose the PTP module to enhance the robustness and efficacy of the threshold. This module processes and through a dual-path structure to fully extract features and reduce the effect of noise. Then, after downsampling, the module outputs the threshold through the fully connected layer.

In detail, Figure 4 shows the dual-path structure. One branch retains the salient features of the original image through convolution and max-pooling operations, and the other retains the image’s overall features by combining convolution and avg-pooling operations. These two complementary branches help the module extract both salient and global features effectively, reducing noise interference.

where and represent the feature maps obtained by the first branch and the second one, respectively. is a commonly used activation function in few-shot learning. is a convolution operation.

Figure 4.

Parallel threshold perception module. This module inputs query features and enhanced query features and outputs a robust and effective threshold after dual-path feature processing and establishing a fully connected layer.

Then, PTP concatenates the outputs of the two branches. To resize the concatenated features to match the fully connected layer, we downsample them using convolution and max-pooling. Finally, the threshold is predicted through the fully connected layer:

where is the operation of using fully connected layer and is the downsampling operation, which means performing convolution, , and max-pooling in sequence.

3.4.2. Segmentor

After obtaining , we employ the anomaly-score-based segmentation method [25] to obtain the predicted mask for query image . As shown in Figure 1, Segmentor calculates the negative cosine similarity between the prototype and the query features , yielding anomaly scores for each position of the query features:

where is the predicted anomaly score map for and is the same scaling factor as in Equation (4).

Then, we perform soft thresholding using , thereby generating the predicted query mask . The process is depicted as follows:

where is the predicted mask for query image and is the same Sigmoid function as in Equation (5).

3.4.3. Query Self-Reference Regularization

Existing FSMIS segmentation models [13,14] usually use the similarity between the support and query images to obtain the predicted mask. It is evident that existing models primarily focus on the information stream from support features to query features, overlooking the supervisory role of the query image itself during the segmentation process.

To enhance the model’s ability to generalize and refine its comprehension and segmentation capabilities for query images, we propose query self-reference regularization (QSR). It allows the information from the query image to be streamed back into itself, effectively reinforcing the model’s generalization performance. QSR is inspired by the assumption that the more accurately a mask labels an image, the better the model can learn and segment FG features. Suppose that the model generates a more accurate predicted query mask as training rounds increase. In this case, we use the predicted query mask as a ‘new support mask’ and the query image as a ‘new support image’ to resegment the query image. After that, we can use cross-entropy loss to measure the prediction mask and the ground truth mask.

Specifically, QSR initially conducts MAP operation, utilizing and as inputs to extract the query prototype. Subsequently, QSR calculates the negative cosine similarity between the query prototype and the query features, then thresholds the calculation results using to finalize another predicted query mask . We can perform cross-entropy loss on the prediction result and the query mask. In addition, we introduce a growth factor for QSR loss, which can increase with the number of training times to gradually amplify the influence of QSR loss. The QSR loss is represented as follows:

where is the query self-reference regularization loss, is the total number of points in the spatial location, is the growth factor, which is initially set to 0.02 and increases by 0.02 with each epoch, and is the query prediction mask generated during the query self-reference regularization process.

3.4.4. Loss Function

The loss function can guide FSMIS models’ learning and enhance training efficiency. Here, we adopt the cross-entropy loss function to measure the dissimilarity between the predicted mask and the ground truth mask for the query image. This process is mathematically shown below:

where is the binary cross-entropy segmentation loss and is the query ground truth mask.

Additionally, as is common with other FSMIS models [27,42], we also add query loss and prototype alignment regularization loss to the overall loss function, as depicted in the subsequent formula:

where is the query loss, is the predicted mask for the query image generated during the calculation of the query loss, is the prototype alignment regularization loss, and is a predicted mask for the support image generated during the calculation of the prototype alignment regularization loss.

In summary, the overall loss function is given by the following formula:

where is the overall loss function.

4. Experiment

4.1. Datasets

We evaluate the proposed method on three publicly available datasets:

(1) CHAOS-MRI [48] is an abdominal MRI dataset from the ISBI 2019 Combined Healthy Abdominal Organ Segmentation Challenge. The dataset comprises 20 3D T2-SPIR MRI scans containing approximately 36 slices. Among them, we selected the left kidney (LK), right kidney (RK), liver, and spleen for the assessment.

(2) Synapse-CT [49] is an abdominal CT dataset obtained from the MICCAI 2015 Multi-Atlas Abdomen Labeling Challenge. It consists of 30 3D abdominal CT scans, and we likewise selected the left kidney, right kidney, liver, and spleen.

(3) CMR [50] is a cardiac MRI dataset from the MICCAI 2019 Multi-Sequence Cardiac MRI Segmentation Challenge. It contains 35 3D cardiac MRI scans, each divided into approximately 13 slices. We selected the blood pool (LV-BP), left ventricle myocardium (LV-MYO), and right ventricle myocardium (RV).

4.2. Experimental Settings and Evaluation Metric

The current evaluation methods for FSMIS task are generally aligned with ALPNet [13]. In order to make a fair comparison, we also adopt the same experimental settings.

(1) Setting 1 allows FG category organs in the input image to appear in the background. This situation means that the new category used for evaluation is not unseen.

(2) Setting 2 does not allow FG category organs in the input image to appear in any form. This situation means that the new category used for evaluation is unseen. It is worth stating that Setting 2 cannot be applied to CMR dataset due to the difficulty of excluding slices containing the target class from the cardiac dataset. In contrast, there is no such difficulty in MRI and CT datasets.

Before training, we process the training dataset employing the same data preprocessing technique used in ALP-Net [10], where 2D slices of 3D scans are formatted as and replicated three times along the channel dimensions to fit the model’s input. For model training, we adopt the self-supervision method based on supervoxels used in AD-Net [25] to train the model.

For evaluating the model output, we adopt the Dice score, which is commonly used in FSMIS tasks, as an evaluation metric. The Dice score [10] measures the similarity between the predicted mask and the ground truth based on the pixel-level overlap of the images. For ground truth A and predicted mask B, their Dice score can be expressed as:

where the value of ranges between 0 and 1. When the value equals 1, it means the segmentation result matches precisely. When the value equals 0, it means a complete disjunction of the segmentation result and the actual annotation. Thus, the higher the value of the Dice score, the better the segmentation result.

4.3. Implementation Details

In DCOP-Net, the encoder is a pretrained ResNet-101 network [43] on the MS-COCO dataset [51]. For the training of our model, we set the total number of required iterations to 15K, comprising 5K iterations per epoch. Moreover, the initial learning rate is set to 0.001, the batch size to 1, and the decay rate to 0.98. Training takes 2.25 h on an Nvidia RTX 4060 GPU. In order to simulate the scarcity of data in healthcare scenarios, the experiments follow the 1-way 1-shot task. In order to avoid the influence of the dataset on the experimental results, we adopt the five-fold cross-validation [25] to record the results.

4.4. Results and Comparison with SOTA Methods

In order to determine the effectiveness and superiority of DCOP-Net, we compare our model with FSMIS models, including ALP-Net [10], SE-Net [16], AAS-DCL [19], CRAP-Net [20], CAT-Net [23], AD-Net [25], Q-Net [26], PA-Net [27], SR&CL [40], RPT-Net [42], DSP-Net [52], GMRD [14], and PAMI [53]. Table 1 presents the experimental results of the currently proposed FSMIS models on the Synapse-CT and CHAOS-MRI datasets under Setting 1 and Setting 2. Table 2 presents the experimental results on the CMR dataset under Setting 1.

Table 1.

Comparison of different methods under Setting 1 and Setting 2 on CHAOS-MRI and Synapse-CT. Best values are in bold, second best are underlined. ‘–’ indicates not reported.

Table 2.

Qualitative comparison of different methods under Setting 1 on the CMR-MRI. The best value is shown in bold font and the second-best value is underlined.

As shown in Table 1, the average Dice scores of DCOP-Net in the three scenarios are generally better than the other methods. Specifically, under Setting 1, our model’s average Dice score on the CHAOS-MRI dataset is 82.64%, which exceeds the highest current result (RPT-Net) by 0.2%. Especially for the segmentation of spleen organs, our method outperforms the highest current result (RPT-Net) by 4.15%. Our method achieves an average Dics score of 74.59% on the Synapse-CT dataset. Under Setting 2, DCOP-Net achieves average Dice score of 71.72% and 80.74% on the Synapse-CT and CHAOS-MRI datasets, respectively. In particular, DCOP-Net’s average Dice score on the CHAOS-MRI dataset exceeds the second-best method (PAMI) by 1.21%. Notably, DCOP-Net achieves 4.95%, outperforming the suboptimal method PAMI, and 1.94%, outperforming the suboptimal method RPT-Net, in the segmentation of spleen and LK, respectively, on the CHAOS-MRI dataset. Moreover, comparing the segmentation results of our model on the Synapse-CT dataset under Setting 1, our model outperforms RPT-Net, demonstrating our model’s ability to generalize under more stringent conditions. In addition, compared to processing MRI-modality medical images, few-shot segmentation models exhibit a decrease in overall segmentation accuracy when dealing with lower-contrast CT-modality datasets. Addressing this challenge remains a crucial task for current few-shot medical image segmentation models.

As shown in Table 2, although the average Dice score of our model does not exceed the current best method, it achieves satisfactory results in the segmentation of LV-BP and RV. There are possible reasons for this situation. The poor sample quality of the CMR dataset makes it difficult for the model to adequately learn enough features to segment accurately. Moreover, the shape and structure of LV-MYO are relatively complex, making it difficult to accurately distinguish between the tissues surrounding the LV wall and the myocardial cell wall itself.

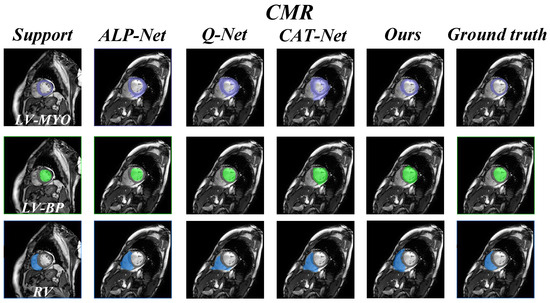

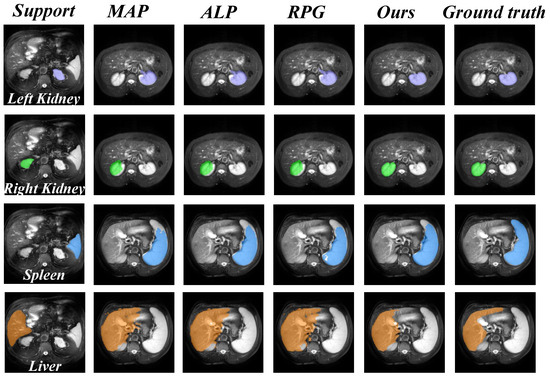

In addition, we also present a visual comparison of the prediction results of existing models on the three datasets in Figure 5 and Figure 6. It can be seen from Figure 5 that our model exhibits fewer over-segmentation and obtains more accurate segmentation results in CHAOS-MRI and Synapse-CT datasets. As shown in Figure 6, our model achieves good results in segmenting LV-BP and RV organs in the CMR dataset.

Figure 5.

Comparison of qualitative results between our model and other methods. The upper row illustrates the results on the CHAOS-MRI dataset, while the lower row shows the results on the Synapse-CT dataset.

Figure 6.

Comparison of qualitative results between our model and other methods on the CMR dataset.

4.5. Ablation Studies

We conduct ablation studies on the CHAOS-MRI dataset under Setting 1 or Setting 2. Specifically, we examine component performance and the validity of the generation of prior masks, extraction of prototypes, and generation of thresholds.

4.5.1. Effect of Each Component

Notably, we utilize AD-Net as Baseline in this part. As shown in Table 3, we conduct experiments with several combinations of the proposed models. For example, “Baseline + DFCA” indicates that the DFCA module is embedded in the Baseline network, while “Baseline + DFCA + OP + PTP + QSR” indicates that DFCA, OP, PTP, and QSR modules are embedded in the Baseline network.

Table 3.

Ablation experiments with different module combinations on the CHAOS-MRI dataset. Best values are in bold, second best are underlined. ‘–’ indicates not reported.

It can be seen from Table 3 that the overall segmentation performance of the model shows a steady improvement as our proposed modules are progressively embedded into the Baseline. Specifically, the overall segmentation performance of the model is improved by 2.06% with the addition of the DFCA module to the Baseline. On this basis, adding OP further improves the overall performance of the Baseline by 1.14% by fusing contextual information in the prototype. The overall performance of the Baseline is improved by 3.2% with the combination of the DFCA module and the OP module, which also shows that the prototype bias problem is effectively mitigated.

Furthermore, to validate the effectiveness of the QSR strategy in enhancing the model’s generalization ability, we conduct additional ablation experiments under Setting 2. As clearly shown in Table 4, when the QSR module is not integrated, the model achieves an average Dice score of 79.96% on the CHAOS-MRI dataset. After incorporating the QSR strategy, the overall segmentation accuracy improves to 80.74%, with noticeable improvements in the segmentation accuracy of the spleen, liver, LK, and RK. Moreover, when evaluated on the Synapse-CT dataset, the model with the QSR strategy achieves an overall segmentation accuracy of 71.86%, surpassing the version without QSR by 0.62%. These results demonstrate that integrating the QSR strategy consistently enhances the model’s segmentation performance on both CHAOS-MRI and Synapse-CT datasets, thereby improving its generalization ability for segmenting unseen categories. Overall, with the gradual integration of the modules, the model’s segmentation performance continues to improve, which validates the effectiveness of our work.

Table 4.

Qualitative comparison of the validity of the QSR strategy on the CHAOS-MRI and Synapse-CT datasets. Best values are in bold.

4.5.2. Validity of Different Prior Mask Generation Methods

In order to evaluate the role of PMG, we conduct an ablation study in DCOP-Net. The results in Table 5 clearly and unambiguously show that our model achieves a 0.49% higher average Dice score when applying PMG than when applying mask incorporate feature extraction (MIFE) [23]. This improvement is mainly attributed to the fact that MIFE directly employs the normalized value of cosine distance as the prediction probability, which may introduce more noise. In contrast, we use the shifted Sigmoid function processing to discretize the anomaly score results more obviously, which enhances the model’s ability to recognize image boundaries.

Table 5.

Qualitative comparison of the validity of different prior mask generation methods on the CHAOS-MRI dataset. Best values are in bold.

4.5.3. Validity of Different Prototype Extraction Methods

In order to verify the validity of OP in extracting prototypes, we compare it with the single prototype method MAP [24], the multiple prototype methods adaptive local prototype pooling (ALP) [10], and regional prototype generation (RPG) [42] for the ablation study.

The comparison results are summarized in Table 6 and Figure 7. It can be seen from the results that the overall segmentation of the model is less effective than the ALP, RPG, and OP methods when the single prototype method MAP is used. The reason for this is that in a few-shot environment where it is already difficult to describe the FG categories, MAP may further blur the detailed features, leading to a lower model segmentation accuracy. In contrast, the model achieves 0.52% better results when applying the RPG method than the ALP method. However, RPG divides FG features into dozens of fragmented prototypes while ignoring the collaborative relationships between support prototypes.

Table 6.

Qualitativecomparison of the validity of different prototype extraction methods on the CHAOS-MRI dataset. Best values are in bold, second best are underlined.

Figure 7.

Comparison of validity of prototype extraction methods between our method and other methods on the CHAOS-MRI dataset.

In our OP module, we exploit the self-attention mechanism to make support prototypes learn from each other, enhancing the representation ability of support prototypes. Thus, our method achieves better results in segmenting the three organs RK, LK, and spleen than the RPG method and achieves a 0.96% higher overall segmentation accuracy.

4.5.4. Ablation Study on the Maximum Number of Onion Layers

The number of onion layers depends on the size of the target organ, but there is an upper limit . This is a hyperparameter determined experimentally. We conduct experiments using DCOP-Net under Setting 1 on the CHAOS-MRI dataset, and the experimental results are presented in Table 7. As shown in the table, the model achieves the best segmentation performance when , reaching an average Dice score of 82.64%. Therefore, this result is 0.67% higher than the suboptimal case with . This demonstrates that the optimal value for the upper limit of onion layers is 4.

Table 7.

Ablation study on the maximum number of onion layers. Best values are in bold, second best are underlined.

4.5.5. Validity of Different Threshold Generation Methods

In order to verify the effectiveness of PTP, we compare it with the query-informed threshold adaptation (TA) method in Q-net [26]. Table 8 illustrates the comparison results. As can be seen from the table, there is an overall improvement of 0.47% for our method compared to the TA method. This indicates that thresholds can be obtained more efficiently using multiple inputs and multiple paths, thus positively affecting model segmentation.

Table 8.

Qualitative comparison of the validity of different threshold generation modules on the CHAOS-MRI dataset. Best values are in bold.

5. Conclusions and Future Work

In this paper, we propose the DCOP-Net for few-shot medical image segmentation. To address the issues of prototype bias and low generalization ability in few-shot environments, we design the DFCA and OP modules in the prototype learning stage. These modules enable the support FG features to efficiently learn and integrate new features from the query FG features and enable the extracted prototype to retain crucial contextual information, effectively mitigating the prototype bias problem. In the subsequent segmentation stage, we input the query features into the PTP module to obtain more effective and robust thresholds to delineate the FG and BG regions of the query image. In addition, we propose a query self-reference regularization strategy to optimize the model training process and speed up the model convergence. We validate the effectiveness of DCOP-Net through comprehensive experiments on CHAOS-MRI, Synapse-CT, and CMR datasets. The experimental results show that the proposed model outperforms other state-of-the-art methods on the CHAOS-MRI and Synapse-CT evaluation metrics, demonstrating superior segmentation and generalization capabilities.

Although DCOP-Net achieves strong performance on CHAOS-MRI and Synapse-CT datasets, it does not attain optimal segmentation across all evaluation metrics. For example, it did not outperform the best available method in segmenting LV-MYO on the CMR dataset. This problem is mainly attributed to two factors: (1) the cardiac dataset is difficult to label, resulting in a training dataset with a lower quality than the abdominal dataset, from which the model is difficult to learn high-quality prototypes, and (2) the cardiac dataset has a complex structure, which increases the difficulty of recognition. In addition, the proposed dual-filter cross attention module enhances the utilization of query image foreground features, allowing the model to leverage information from the query image to improve the quality of support prototypes and mitigate prototype bias. However, while the proposed filter cross attention strategy effectively eliminates background mismatches, it also restricts the model’s ability to utilize potentially useful auxiliary information present in the background. Therefore, an important future research direction would be to enhance the utilization of background features by improving intra-class cohesion and inter-class separation in feature space, further optimizing the performance of few-shot segmentation networks. Moreover, in future work, we plan to improve the model’s performance in weakly annotated environments and enhance its ability to handle the segmentation of complex organs for a variety of FSMIS tasks.

Author Contributions

L.N.: project administration, supervision. Y.L. (Yang Liu): conceptualization, writing—original draft, Software. Z.Z.: methodology, writing—review and editing. Y.L. (Yongtao Li): methodology, validation, writing—reviewing and editing. J.Z.: project administration, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation of Shandong Province under the Grant ZR2022MF338 and ZR2023LZH018, Humanity and Social Science Fund of the Ministry of Education under Grant 20YJAZH078 and 20YJAZH127, and Open Project of Tongji University Embedded System and Service Computing of Ministry of Education of China under Grant ESSCKF2022-02.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Guo, Y.; Liu, Y.; Georgiou, T.; Lew, M.S. A review of semantic segmentation using deep neural networks. Int. J. Multimed. Inf. Retr. 2018, 7, 87–93. [Google Scholar]

- Waisberg, E.; Ong, J.; Masalkhi, M.; Kamran, S.A.; Zaman, N.; Sarker, P.; Lee, A.G.; Tavakkoli, A. GPT-4: A new era of artificial intelligence in medicine. Ir. J. Med. Sci. 2023, 192, 3197–3200. [Google Scholar] [PubMed]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imaging 2019, 39, 1856–1867. [Google Scholar] [CrossRef]

- Ruan, J.; Xie, M.; Gao, J.; Liu, T.; Fu, Y. EGE-UNet: An efficient group enhanced unet for skin lesion segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Springer: Cham, Switzerland, 2023; pp. 481–490. [Google Scholar]

- Zhou, Y.; Kang, X.; Ren, F.; Lu, H.; Nakagawa, S.; Shan, X. A multi-attention and depthwise separable convolution network for medical image segmentation. Neurocomputing 2024, 564, 126970. [Google Scholar]

- Liu, Q.; Yu, L.; Luo, L.; Dou, Q.; Heng, P.A. Semi-supervised medical image classification with relation-driven self-ensembling model. IEEE Trans. Med. Imaging 2020, 39, 3429–3440. [Google Scholar] [CrossRef] [PubMed]

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135. [Google Scholar]

- Lang, C.; Cheng, G.; Tu, B.; Li, C.; Han, J. Base and Meta: A New Perspective on Few-Shot Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10669–10686. [Google Scholar]

- Tang, H.; Yuan, C.; Li, Z.; Tang, J. Learning attention-guided pyramidal features for few-shot fine-grained recognition. Pattern Recognit. 2022, 130, 108792. [Google Scholar] [CrossRef]

- Ouyang, C.; Biffi, C.; Chen, C.; Kart, T.; Qiu, H.; Rueckert, D. Self-supervision with superpixels: Training few-shot medical image segmentation without annotation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XXIX; Springer: Cham, Switzerland, 2020; pp. 762–780. [Google Scholar]

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. Adv. Neural Inf. Process. Syst. 2017, 30, 4077–4087. [Google Scholar]

- Tang, H.; Liu, X.; Sun, S.; Yan, X.; Xie, X. Recurrent mask refinement for few-shot medical image segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 10–17 October 2021; pp. 3918–3928. [Google Scholar]

- Ouyang, C.; Biffi, C.; Chen, C.; Kart, T.; Qiu, H.; Rueckert, D. Self-supervised learning for few-shot medical image segmentation. IEEE Trans. Med. Imaging 2022, 41, 1837–1848. [Google Scholar]

- Cheng, Z.; Wang, S.; Xin, T.; Zhou, T.; Zhang, H.; Shao, L. Few-shot medical image segmentation via generating multiple representative descriptors. IEEE Trans. Med. Imaging 2024, 43, 2202–2214. [Google Scholar] [CrossRef]

- Teng, P.; Liu, W.; Wang, X.; Wu, D.; Yuan, C.; Cheng, Y.; Huang, D.S. Beyond singular prototype: A prototype splitting strategy for few-shot medical image segmentation. Neurocomputing 2024, 597, 127990. [Google Scholar] [CrossRef]

- Roy, A.G.; Siddiqui, S.; Pölsterl, S.; Navab, N.; Wachinger, C. ‘Squeeze & excite’guided few-shot segmentation of volumetric images. Med. Image Anal. 2020, 59, 101587. [Google Scholar]

- Feng, R.; Zheng, X.; Gao, T.; Chen, J.; Wang, W.; Chen, D.Z.; Wu, J. Interactive few-shot learning: Limited supervision, better medical image segmentation. IEEE Trans. Med. Imaging 2021, 40, 2575–2588. [Google Scholar] [PubMed]

- Sun, L.; Li, C.; Ding, X.; Huang, Y.; Chen, Z.; Wang, G.; Yu, Y.; Paisley, J. Few-shot medical image segmentation using a global correlation network with discriminative embedding. Comput. Biol. Med. 2022, 140, 105067. [Google Scholar] [CrossRef] [PubMed]

- Wu, H.; Xiao, F.; Liang, C. Dual contrastive learning with anatomical auxiliary supervision for few-shot medical image segmentation. In Computer Vision—ECCV 2022; Springer: Cham, Switzerland, 2022; pp. 417–434. [Google Scholar]

- Ding, H.; Sun, C.; Tang, H.; Cai, D.; Yan, Y. Few-shot medical image segmentation with cycle-resemblance attention. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 2488–2497. [Google Scholar]

- Li, G.; Jampani, V.; Sevilla-Lara, L.; Sun, D.; Kim, J.; Kim, J. Adaptive prototype learning and allocation for few-shot segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8334–8343. [Google Scholar]

- Zhang, B.; Xiao, J.; Qin, T. Self-guided and cross-guided learning for few-shot segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8312–8321. [Google Scholar]

- Lin, Y.; Chen, Y.; Cheng, K.T.; Chen, H. Few shot medical image segmentation with cross attention transformer. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Springer: Cham, Switzerland, 2023; pp. 233–243. [Google Scholar]

- Zhang, X.; Wei, Y.; Yang, Y.; Huang, T.S. SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation. IEEE Trans. Cybern. 2020, 50, 3855–3865. [Google Scholar]

- Hansen, S.; Gautam, S.; Jenssen, R.; Kampffmeyer, M. Anomaly detection-inspired few-shot medical image segmentation through self-supervision with supervoxels. Med. Image Anal. 2022, 78, 102385. [Google Scholar]

- Shen, Q.; Li, Y.; Jin, J.; Liu, B. Q-net: Query-informed few-shot medical image segmentation. In Intelligent Systems and Applications; Springer: Cham, Switzerland, 2023; pp. 610–628. [Google Scholar]

- Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. Panet: Few-shot image semantic segmentation with prototype alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9197–9206. [Google Scholar]

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Computer Vision—ECCV 2022 Workshops; Springer: Cham, Switzerland, 2022; pp. 205–218. [Google Scholar]

- Wu, Y.; Xia, Y.; Song, Y.; Zhang, D.; Liu, D.; Zhang, C.; Cai, W. Vessel-Net: Retinal vessel segmentation under multi-path supervision. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019: 22nd International Conference, Shenzhen, China, 13–17 October 2019, Proceedings, Part I; Springer: Cham, Switzerland, 2019; pp. 264–272. [Google Scholar]

- Chen, L.; Bentley, P.; Mori, K.; Misawa, K.; Fujiwara, M.; Rueckert, D. DRINet for medical image segmentation. IEEE Trans. Med. Imaging 2018, 37, 2453–2462. [Google Scholar]

- Gu, R.; Wang, G.; Song, T.; Huang, R.; Aertsen, M.; Deprest, J.; Ourselin, S.; Vercauteren, T.; Zhang, S. CA-Net: Comprehensive attention convolutional neural networks for explainable medical image segmentation. IEEE Trans. Med. Imaging 2020, 40, 699–711. [Google Scholar]

- Dong, N.; Xing, E.P. Few-Shot Semantic Segmentation with Prototype Learning. In Proceedings of the British Machine Vision Conference 2018, BMVC 2018, Newcastle, UK, 3–6 September 2018; Volune 3; p. 4. [Google Scholar]

- Hansen, S.; Gautam, S.; Salahuddin, S.A.; Kampffmeyer, M.; Jenssen, R. ADNet++: A few-shot learning framework for multi-class medical image volume segmentation with uncertainty-guided feature refinement. Med. Image Anal. 2023, 89, 102870. [Google Scholar]

- Petit, O.; Thome, N.; Rambour, C.; Themyr, L.; Collins, T.; Soler, L. U-net transformer: Self and cross attention for medical image segmentation. In Machine Learning in Medical Imaging: 12th International Workshop, MLMI 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, 27 September 2021, Proceedings; Springer: Cham, Switzerland, 2021; pp. 267–276. [Google Scholar]

- Nie, D.; Gao, Y.; Wang, L.; Shen, D. ASDNet: Attention based semi-supervised deep networks for medical image segmentation. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018, Proceedings, Part IV; Springer: Cham, Switzerland, 2018; pp. 370–378. [Google Scholar]

- Wang, H.; Zhang, X.; Hu, Y.; Yang, Y.; Cao, X.; Zhen, X. Few-shot semantic segmentation with democratic attention networks. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XIII; Springer: Cham, Switzerland, 2020; pp. 730–746. [Google Scholar]

- Hu, T.; Yang, P.; Zhang, C.; Yu, G.; Mu, Y.; Snoek, C.G. Attention-based multi-context guiding for few-shot semantic segmentation. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8441–8448. [Google Scholar]

- Wang, Y.; Sun, R.; Zhang, Z.; Zhang, T. Adaptive agent transformer for few-shot segmentation. In Computer Vision—ECCV 2022; Springer: Cham, Switzerland, 2022; pp. 36–52. [Google Scholar]

- Xie, G.S.; Liu, J.; Xiong, H.; Shao, L. Scale-aware graph neural network for few-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5475–5484. [Google Scholar]

- Wang, R.; Zhou, Q.; Zheng, G. Few-shot medical image segmentation regularized with self-reference and contrastive learning. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2022; Springer: Cham, Switzerland, 2022; pp. 514–523. [Google Scholar]

- Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169. [Google Scholar] [CrossRef]

- Zhu, Y.; Wang, S.; Xin, T.; Zhang, H. Few-shot medical image segmentation via a region-enhanced prototypical transformer. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Springer: Cham, Switzerland, 2023; pp. 271–280. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Xu, Q.; Zhao, W.; Lin, G.; Long, C. Self-calibrated cross attention network for few-shot segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 655–665. [Google Scholar]

- Oreshkin, B.; Rodríguez López, P.; Lacoste, A. Tadam: Task dependent adaptive metric for improved few-shot learning. Adv. Neural Inf. Process. Syst. 2018, 31, 719–729. [Google Scholar]

- Karimijafarbigloo, S.; Azad, R.; Merhof, D. Self-supervised few-shot learning for semantic segmentation: An annotation-free approach. In Predictive Intelligence in Medicine; Springer: Cham, Switzerland, 2023; pp. 159–171. [Google Scholar]

- Liu, J.; Qin, Y. Prototype refinement network for few-shot segmentation. arXiv 2020, arXiv:2002.03579. [Google Scholar]

- Kavur, A.E.; Gezer, N.S.; Barış, M.; Aslan, S.; Conze, P.H.; Groza, V.; Pham, D.D.; Chatterjee, S.; Ernst, P.; Özkan, S.; et al. CHAOS challenge-combined (CT-MR) healthy abdominal organ segmentation. Med. Image Anal. 2021, 69, 101950. [Google Scholar]

- Landman, B.; Xu, Z.; Igelsias, J.; Styner, M.; Langerak, T.; Klein, A. Miccai multi-atlas labeling beyond the cranial vault—Workshop and challenge. In Proceedings of the MICCAI 2015, Munich, Germany, 5–9 October 2015. [Google Scholar]

- Zhuang, X. Multivariate mixture model for myocardial segmentation combining multi-source images. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2933–2946. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V; Springer: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Tang, S.; Yan, S.; Qi, X.; Gao, J.; Ye, M.; Zhang, J.; Zhu, X. Few-shot medical image segmentation with high-fidelity prototypes. Med. Image Anal. 2025, 100, 103412. [Google Scholar]

- Zhu, Y.; Wang, S.; Xin, T.; Zhang, Z.; Zhang, H. Partition-a-medical-image: Extracting multiple representative sub-regions for few-shot medical image segmentation. IEEE Trans. Instrum. Meas. 2024, 73, 5016312. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).