Technological Advancements in Human Navigation for the Visually Impaired: A Systematic Review

Abstract

1. Introduction

2. State of the Art

3. Methodology

3.1. Search Strategy

3.2. PRISMA Methodology

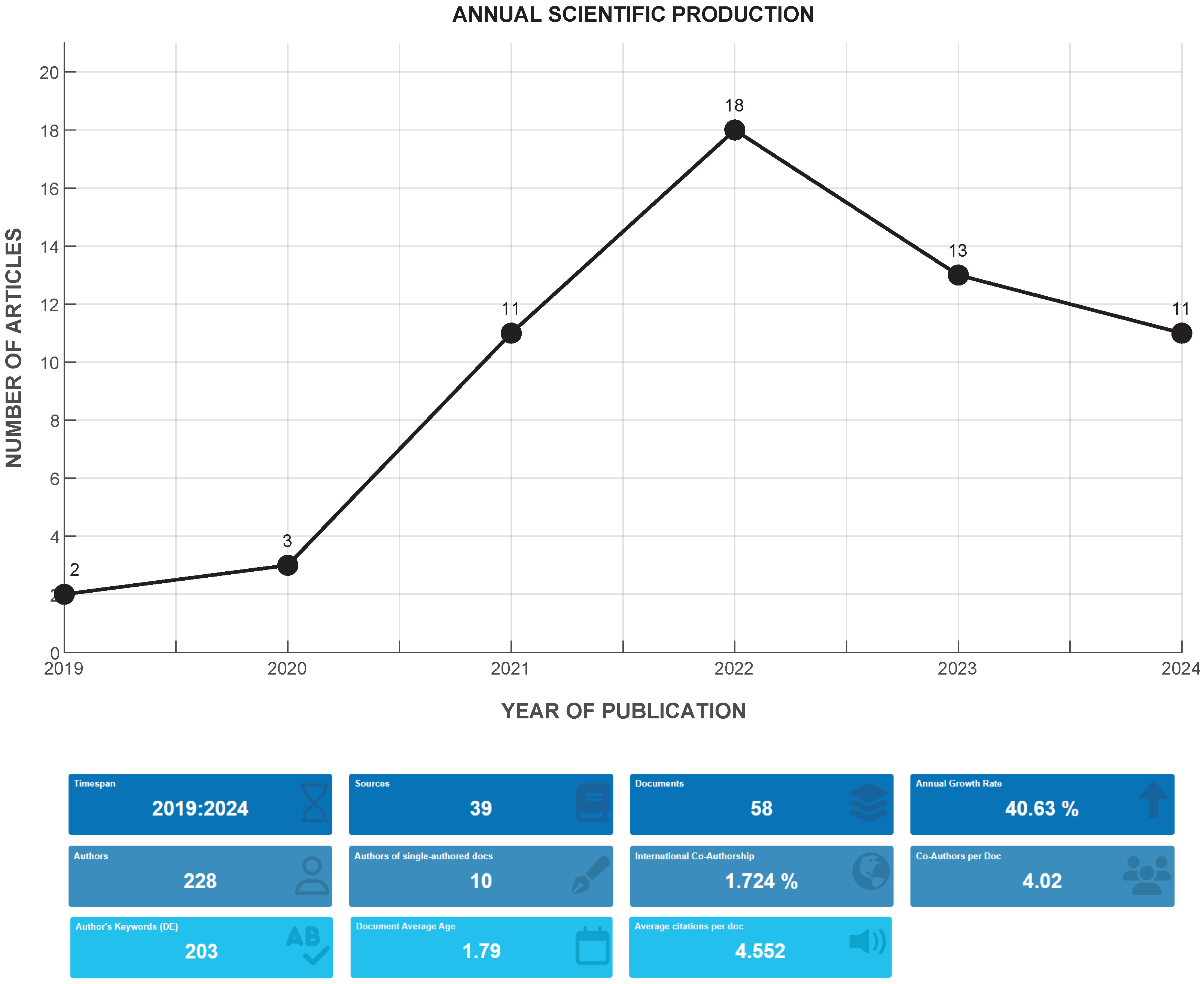

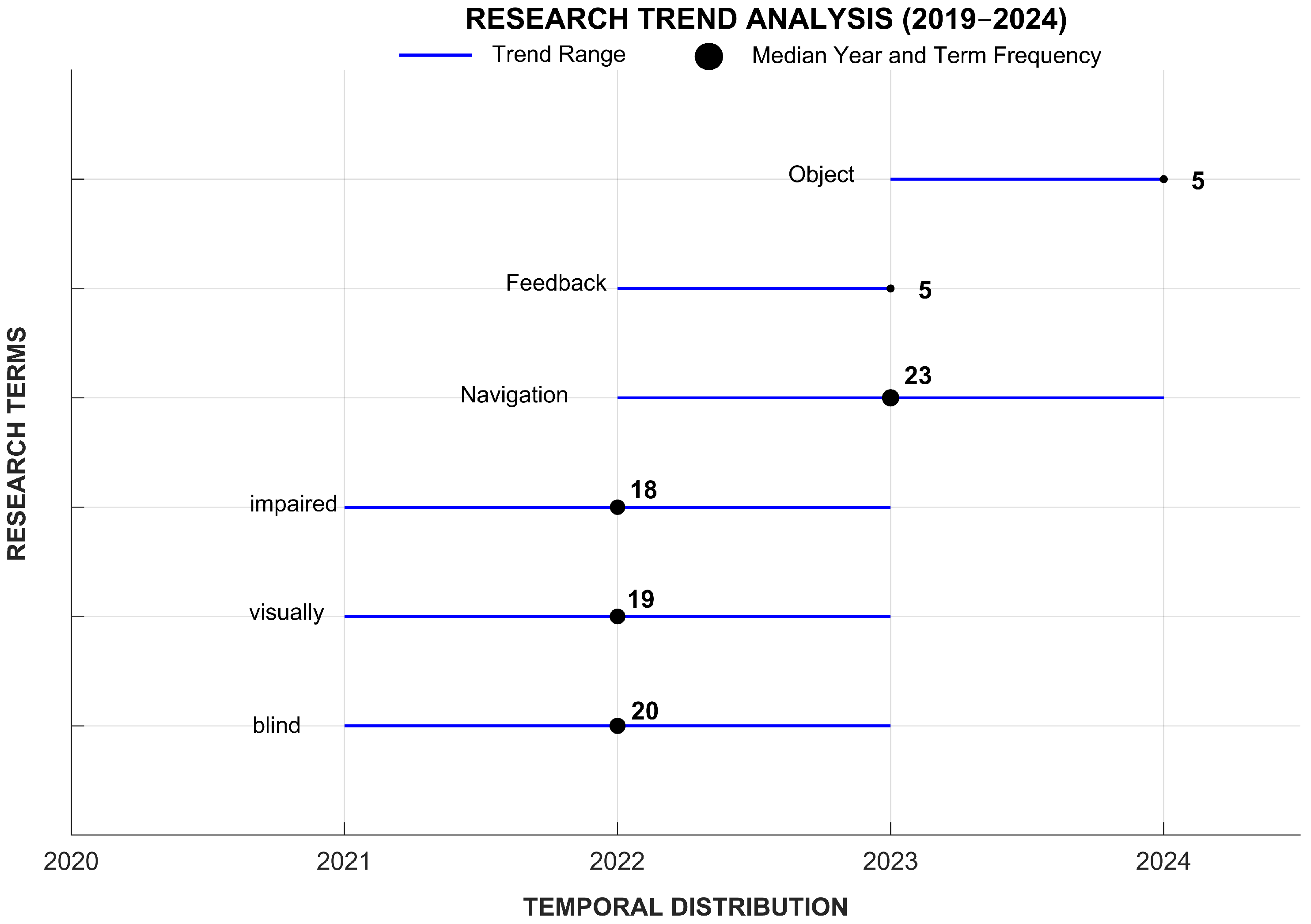

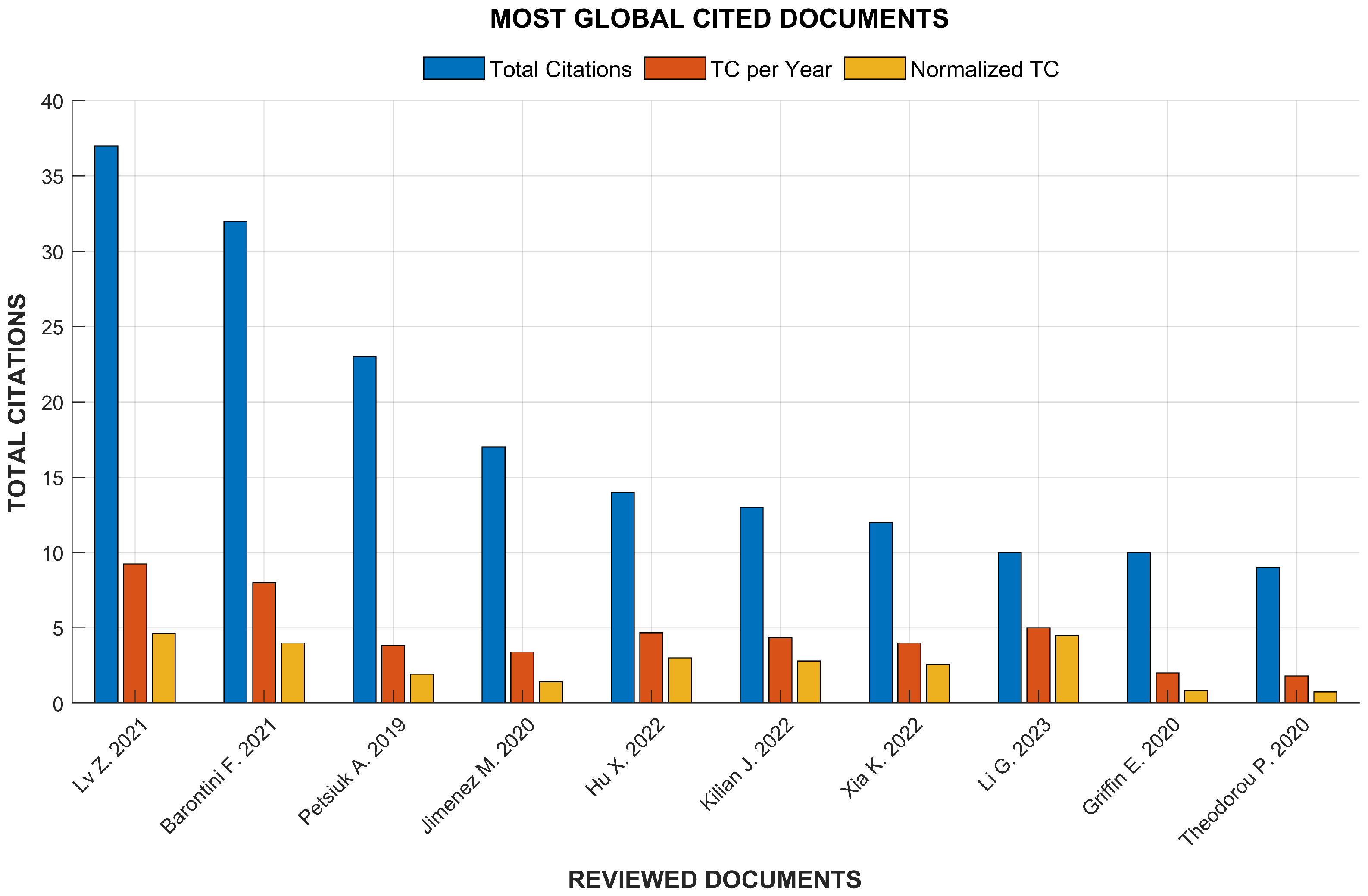

3.3. Bibliometric Analysis

4. Results

4.1. Smartphone Technologies

4.2. Haptic Systems

4.3. Navigation Algorithms in Systems for the Blind

4.4. Systems with Artificial Intelligence (AI)

5. Discussion

Limitations and Recommendations

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Sudha, L.K.; Ajay, R.; Manu Gowda, S.H.; Poornima, V.B.; Vaibhav, S. Smart Stick for Visually Impaired on Streets Using Arduino UNO. Int. J. Eng. Technol. Manag. Sci. 2023, 7, 309–316.

- Vella, D.; Porter, C. Remapping the Document Object Model using Geometric and Hierarchical Data Structures for Efficient Eye Control. Proc. ACM Hum.-Comput. Interact. 2024, 8, 234.

- Isaksson-Daun, J.; Jansson, T.; Nilsson, J. Using Portable Virtual Reality to Assess Mobility of Blind and Low-Vision Individuals With the Audomni Sensory Supplementation Feedback. IEEE Access 2024, 12, 26222–26241.

- Alejandro Beltran-Iza, E.; Oswaldo Norona-Meza, C.; Alfredo Robayo-Nieto, A.; Padilla, O.; Toulkeridis, T. Creation of a Mobile Application for Navigation for a Potential Use of People with Visual Impairment Exercising the NTRIP Protocol. Sustainability 2022, 14, 17027.

- Busaeed, S.; Katib, I.; Albeshri, A.; Corchado, J.M.; Yigitcanlar, T.; Mehmood, R. LidSonic V2.0: A LiDAR and Deep-Learning-Based Green Assistive Edge Device to Enhance Mobility for the Visually Impaired. Sensors 2022, 22, 7435.

- Sethi, D.; Bharti, S.; Prakash, C. A comprehensive survey on gait analysis: History, parameters, approaches, pose estimation, and future work. Artif. Intell. Med. 2022, 129, 102314.

- Xu, P.; Kennedy, G.A.; Zhao, F.Y.; Zhang, W.J.; Van Schyndel, R. Wearable Obstacle Avoidance Electronic Travel Aids for Blind and Visually Impaired Individuals: A Systematic Review. IEEE Access 2023, 11, 66587–66613.

- Lian, Y.; Liu, D.E.; Ji, W.Z. Survey and analysis of the current status of research in the field of outdoor navigation for the blind. Disabil. Rehabil. Assist. Technol. 2024, 19, 1657–1675.

- Abidi, M.H.; Noor Siddiquee, A.; Alkhalefah, H.; Srivastava, V. A comprehensive review of navigation systems for visually impaired individuals. Heliyon 2024, 10, e31825.

- Madake, J.; Bhatlawande, S.; Solanke, A.; Shilaskar, S. A Qualitative and Quantitative Analysis of Research in Mobility Technologies for Visually Impaired People. IEEE Access 2023, 11, 82496–82520.

- Santos, A.D.P.D.; Suzuki, A.H.G.; Medola, F.O.; Vaezipour, A. A Systematic Review of Wearable Devices for Orientation and Mobility of Adults with Visual Impairment and Blindness. IEEE Access 2021, 9, 162306–162324.

- Lv, Z.; Li, J.; Li, H.; Xu, Z.; Wang, Y. Blind Travel Prediction Based on Obstacle Avoidance in Indoor Scene. Wirel. Commun. Mob. Comput. 2021, 2021, 5536386.

- Barontini, F.; Catalano, M.G.; Pallottino, L.; Leporini, B.; Bianchi, M. Integrating Wearable Haptics and Obstacle Avoidance for the Visually Impaired in Indoor Navigation: A User-Centered Approach. IEEE Trans. Haptics 2021, 14, 109–122.

- Petsiuk, A.L.; Pearce, J.M. Low-cost open source ultrasound-sensing based navigational support for the visually impaired. Sensors 2019, 19, 3783.

- Jimenez, M.F.; Mello, R.C.; Bastos, T.; Frizera, A. Assistive locomotion device with haptic feedback for guiding visually impaired people. Med. Eng. Phys. 2020, 80, 18–25.

- Hu, X.; Song, A.; Wei, Z.; Zeng, H. StereoPilot: A Wearable Target Location System for Blind and Visually Impaired Using Spatial Audio Rendering. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 1621–1630.

- Kilian, J.; Neugebauer, A.; Scherffig, L.; Wahl, S. The Unfolding Space Glove: A Wearable Spatio-Visual to Haptic Sensory Substitution Device for Blind People. Sensors 2022, 22, 1859.

- Xia, K.; Li, X.; Liu, H.; Zhou, M.; Zhu, K. IBGS: A Wearable Smart System to Assist Visually Challenged. IEEE Access 2022, 10, 77810–77825.

- Li, G.; Xu, J.; Li, Z.; Chen, C.; Kan, Z. Sensing and Navigation of Wearable Assistance Cognitive Systems for the Visually Impaired. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 122–133.

- Griffin, E.; Picinali, L.; Scase, M. The effectiveness of an interactive audio-tactile map for the process of cognitive mapping and recall among people with visual impairments. Brain Behav. 2020, 10, e01650.

- Theodorou, P.; Meliones, A. Towards a training framework for improved assistive mobile app acceptance and use rates by blind and visually impaired people. Educ. Sci. 2020, 10, 58.

- Theodorou, P.; Tsiligkos, K.; Meliones, A.; Filios, C. An Extended Usability and UX Evaluation of a Mobile Application for the Navigation of Individuals with Blindness and Visual Impairments Outdoors—An Evaluation Framework Based on Training. Sensors 2022, 22, 4538.

- Kamilla, S.K. Assistance for Visually Impaired People based on Ultrasonic Navigation. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 3716–3720.

- Muradyan, S. Assistive technology for students with visual impairments. Armen. J. Spec. Educ. 2023, 7, 77–88.

- Siddesh, G.M.; Srinivasa, K.G. IoT Solution for Enhancing the Quality of Life of Visually Impaired People. Int. J. Grid High Perform. Comput. 2021, 13, 1–23.

- Schwartz, B.S.; King, S.; Bell, T. EchoSee: An Assistive Mobile Application for Real-Time 3D Environment Reconstruction and Sonification Supporting Enhanced Navigation for People with Vision Impairments. Bioengineering 2024, 11, 831.

- Yerkewar, D.; Vaidya, M.B.; Hekar, M.; Jadhao, M.; Nile, M. Implementation of Smart Stick for Blind People. Int. J. Adv. Res. Sci. Commun. Technol. 2022, 2, 239–242.

- Yuan, Z.; Azzino, T.; Hao, Y.; Lyu, Y.; Pei, H.; Boldini, A.; Mezzavilla, M.; Beheshti, M.; Porfiri, M.; Hudson, T.E.; et al. Network-Aware 5G Edge Computing for Object Detection: Augmenting Wearables to ’See’ More, Farther and Faster. IEEE Access 2022, 10, 29612–29632.

- Lakshmi, D.S. Smart Blind Walking Stick. Int. J. Res. Appl. Sci. Eng. Technol. 2023, 11, 4954–4964.

- Velázquez-Guerrero, R.; Pissaloux, E.; Del-Valle-Soto, C.; Carrasco-Zambrano, M.Á.; Mendoza-Andrade, A.; Varona-Salazar, J. Mobility of blind people using the smartphone’s GPS and a wearable tactile display; [Movilidad para invidentes utilizando el GPS del teléfono inteligente y un dispositivo táctil vestible]. Dyna 2021, 96, 98–104.

- Jeevitha, S.; Kavitha, P.; Keerthana, C. Smart Blind Stick Using IoT. Int. J. Adv. Res. Sci. Commun. Technol. 2022, 2, 535–538.

- Luo, Y.; Sun, J.; Zeng, Q.; Zhang, X.; Tan, L.; Chen, A.; Guo, H.; Wang, X. Programmable tactile feedback system for blindness assistance based on triboelectric nanogenerator and self-excited electrostatic actuator. Nano Energy 2023, 111, 108425.

- Chinchai, P.; Inthanon, R.; Wantanajittikul, K.; Chinchai, S. A white cane modified with ultrasonic detectors for people with visual impairment. J. Assoc. Med. Sci. 2022, 55, 11–18.

- Kappers, A.M.L.; Oen, M.F.S.; Junggeburth, T.J.W.; Plaisier, M.A. Hand-Held Haptic Navigation Devices for Actual Walking. IEEE Trans. Haptics 2022, 15, 655–666.

- Pati, V.R.; Kotha, P.; Kumar M, C.L. Navigating the Unseen: Ultrasonic Technology for Blind Navigation. Int. J. Mod. Trends Sci. Technol. 2024, 10, 25–31.

- Rehan, S.A.; Mande, U.; Kumar, M.R.; Manindhar, P.; Ganesh, G.; Gupta, H.P. Smart Glasses for Blind People. Int. J. Sci. Res. Eng. Manag. 2022, 6, 1–5.

- Felix, F.; Swai, R.; Dida, D.A.; Sinde, D. Development of Navigation System for Blind People based on Light Detection and Ranging Technology (LiDAR). Int. J. Adv. Sci. Res. Eng. 2022, 8, 47–55.

- Gayathri, N. Smart Glove for Blind. Int. J. Res. Appl. Sci. Eng. Technol. 2021, 9, 3309–3315.

- Bouteraa, Y. Smart real time wearable navigation support system for BVIP. Alex. Eng. J. 2023, 62, 223–235.

- Marzec, P.; Kos, A. Thermal navigation for blind people. Bull. Pol. Acad. Sci. Tech. Sci. 2021, 69, e136038.

- Balakrishnan, A.; Ramana, K.; Ashok, G.; Viriyasitavat, W.; Ahmad, S.; Gadekallu, T.R. Sonar glass—Artificial vision: Comprehensive design aspects of a synchronization protocol for vision based sensors. Meas. J. Int. Meas. Confed. 2023, 211, 112636.

- Ranjan, A.; Kumar, S.; Kumar, P. Design and Analysis of Smart Shoe. Int. J. Adv. Res. Sci. Commun. Technol. 2023, 3, 119–125.

- Tarik, H.; Hassan, S.; Naqvi, R.A.; Rubab, S.; Tariq, U.; Hamdi, M.; Elmannai, H.; Kim, Y.J.; Cha, J.H. Empowering and conquering infirmity of visually impaired using AI-technology equipped with object detection and real-time voice feedback system in healthcare application. CAAI Trans. Intell. Technol. 2023, 1–14.

- Syahrudin, E.; Utami, E.; Hartanto, A.D. Enhanced Yolov8 with OpenCV for Blind-Friendly Object Detection and Distance Estimation. J. RESTI (Rekayasa Sist. Dan Teknol. Informasi) 2024, 8, 199–207.

- Adamu, S.A.; Adamu, A.; Anas, M.S. Design, Construction and Testing of a Smart Static and Dynamic Obstacle Walking Stick With Emf Detector for the Blinds Using Mcu and Controlling Software. Fudma J. Sci. 2021, 5, 334–343.

- Brandebusemeyer, C.; Luther, A.R.; König, S.U.; König, P.; Kärcher, S.M. Impact of a vibrotactile belt on emotionally challenging everyday situations of the blind. Sensors 2021, 21, 7384.

- Gill, S.; Pawluk, D.T.V. Design of a “Cobot Tactile Display” for Accessing Virtual Diagrams by Blind and Visually Impaired Users. Sensors 2022, 22, 4468.

- Lei, Y.; Phung, S.L.; Bouzerdoum, A.; Thanh Le, H.; Luu, K. Pedestrian Lane Detection for Assistive Navigation of Vision-Impaired People: Survey and Experimental Evaluation. IEEE Access 2022, 10, 101071–101089.

- Ramadan, B.; Fink, W.; Nuncio Zuniga, A.; Kay, K.; Powers, N.; Fuhrman, C.; Hong, S. VISTA™: Visual Impairment Subtle Touch Aid™–a range detection and feedback system for sightless navigation. J. Med. Eng. Technol. 2022, 46, 59–68.

- Pilski, M. Technologies supporting independent moving inside buildings for people with visual impairment. Stud. Inform. 2021, 1, 85–97.

- Siddhi, S.; Roy, S.; Pandit, S. Bli-Fi: Li-Fi-Based Home Navigation for Blind. Int. J. Sens. Wirel. Commun. Control 2024, 14, 173–183.

- Wakhare, P.; Tavar, H.; Jagtap, P.; Rane, M.; Waghmare, H. Elder Assist—An IoT Based Fall Detection System. Int. J. Adv. Res. Sci. Commun. Technol. 2022, 558–563.

- Bakouri, M.; Alyami, N.; Alassaf, A.; Waly, M.; Alqahtani, T.; AlMohimeed, I.; Alqahtani, A.; Samsuzzaman, M.; Ismail, H.F.; Alharbi, Y. Sound-Based Localization Using LSTM Networks for Visually Impaired Navigation. Sensors 2023, 23, 4033.

- Apprey, M.W.; Agbevanu, K.T.; Gasper, G.K.; Akoi, P.O. Design and implementation of a solar powered navigation technology for the visually impaired. Sens. Int. 2022, 3, 100181.

- Patil, P.S. Trinal Optics for Blind People Using Smart Object Recognition for Navigation Through Voice Assistant. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–5.

- Bamdad, M.; Scaramuzza, D.; Darvishy, A. SLAM for Visually Impaired People: A Survey. IEEE Access 2024, 12, 130165–130211.

- Karthik, S.; Satish, N. Smart Gloves used for Blind Visually Impaired using Wearable Technology. Eng. Sci. Int. J. 2021, 8, 143–146.

- Mohan, B. A Review on Voice Navigation System for the Visually Impaired. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–5.

- Scalvini, F.; Bordeau, C.; Ambard, M.; Migniot, C.; Dubois, J. Outdoor Navigation Assistive System Based on Robust and Real-Time Visual—Auditory Substitution Approach. Sensors 2024, 24, 166.

- Mohan, N.; Ravi, K.L.; Sharath, K.D.A.; Sreeja, L.; Dhisley, S.S. Navigation Tool for Visually Impaired Hurdle Recognition using Image Processing and IoT. J. IoT Mach. Learn. 2023, 1, 12–17.

- Pedzisai, E.; Charamba, S. A novel framework to redefine societal disability as technologically-enabled ability: A case of multi-disciplinary innovations for safe autonomous spatial navigation for persons with visual impairment. Transp. Res. Interdiscip. Perspect. 2023, 22, 100952.

- Bleau, M.; Paré, S.; Djerourou, I.; Chebat, D.R.; Kupers, R.; Ptito, M. Blindness and the reliability of downwards sensors to avoid obstacles: A study with the eyecane. Sensors 2021, 21, 2700.

- Chen, H.; Li, X.; Zhang, Z.; Zhao, R. Research Advanced in Blind Navigation based on YOLO-SLAM. Theor. Nat. Sci. 2023, 5, 163–171.

- Hong, B.; Guo, Y.; Chen, M.; Nie, Y.; Feng, C.; Li, F. Collaborative route map and navigation of the guide dog robot based on optimum energy consumption. AI Soc. 2024, 1–7.

| Citation | Year of Review | Technologies Reviewed | Publication Year Range of Studied Articles | Number of Studies Included | Type of Navigation | Differences with Respect to Our Paper |
|---|---|---|---|---|---|---|
| [7] | 2023 | Ultrasonic sensors, infrared, laser, computer vision | 2020–2023 | 89 | Wearable | Our study includes more recent papers (2019–2024) and focuses on newer advancements in sensors and algorithms. |
| [8] | 2021 | Ultrasonic sensors, cameras, LiDAR, RFID, RGB, RGB-D, computer vision | 2020–2021 | 179 | Outdoor | Our study includes papers up to 2024, highlighting the latest advancements in computer vision and mobile devices. |
| [9] | 2024 | Ultrasonic sensors, LiDAR, artificial intelligence, RGB-D sensors, tactile feedback | 2014–2023 | 102 | General | Our review updates technological advancements with a focus on the latest research and developments in AI and mobile navigation systems. |
| [10] | 2022 | Optical and sonic sensors, cloud computing, AI, multisensory fusion, robotics | 1946–2022 | 140 | Mobility | We provide a more recent and focused analysis of technological advances, emphasizing current sensor integration and the latest AI developments. |
| [11] | 2021 | Ultrasonic sensors, computer vision, RGB-D cameras | 2010–2020 | 61 | Portable | Our paper includes recent studies from 2019 to 2024 and provides a broader perspective on the integration of newer sensors and navigation algorithms. |

| Database | Search Formula |
|---|---|
| Scopus | TITLE-ABS-KEY ("navigation*") AND (TITLE-ABS-KEY ("technolog*") OR TITLE-ABS-KEY ("device*")) AND TITLE-ABS-KEY ("blind*") AND PUBYEAR > 2019 AND PUBYEAR < 2025 AND (LIMIT-TO (DOCTYPE, "ar")) |
| Web of Science | TS = ("navigation*") AND TS = ("technolog*" OR "device*") AND TS = ("blind*") AND PY = (2020-2024) AND DT = (Article) |
| Dimensions | ("navigation") AND ("technologies" OR "devices") AND ("blind people") |
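The following Python sketch makes the semantics of these formulas concrete by approximating how the Scopus and Web of Science queries select a record. A trailing `*` acts as a right-truncation wildcard, so `blind*` also matches "blindness". The three concept groups are combined with AND. The Scopus filter `PUBYEAR > 2019 AND PUBYEAR < 2025` covers the same years as the Web of Science range 2020-2024. The record fields (`title`, `abstract`, `keywords`, `year`, `doc_type`) and the function names are hypothetical. Real database engines apply their own tokenization and phrase rules, so this illustrates the logic and does not reproduce either engine.

```python
import re


def term_matches(term: str, text: str) -> bool:
    """Case-insensitive match of a search term, treating a trailing '*' as truncation.

    'blind*' matches 'blind', 'blindness', 'blinded' but not 'colorblind',
    because the match is anchored at the start of a word (a simplification).
    """
    stem = term.rstrip("*")
    suffix = r"\w*" if term.endswith("*") else r"\b"
    pattern = r"\b" + re.escape(stem) + suffix
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def matches_review_query(record: dict) -> bool:
    """Approximate the Scopus / Web of Science formulas for one record (hypothetical fields)."""
    text = " ".join((record["title"], record["abstract"], record["keywords"]))
    return (
        term_matches("navigation*", text)
        and (term_matches("technolog*", text) or term_matches("device*", text))
        and term_matches("blind*", text)
        and 2019 < record["year"] < 2025      # PUBYEAR > 2019 AND PUBYEAR < 2025, i.e. 2020-2024
        and record["doc_type"] == "article"  # LIMIT-TO (DOCTYPE, "ar") / DT = (Article)
    )
```

For example, a 2022 article titled "Navigational aid for blind users" whose abstract mentions "a wearable device" is selected. The same record dated 2019, or one whose text mentions neither a technology nor a device, is not.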

| Criteria | Details |
|---|---|
| Inclusion criteria | Articles describing navigation technologies (e.g., GPS systems, assistive devices, portable technologies). |
| | The topic is focused on mobility technologies for blind people. |
| Exclusion criteria | Review articles not identified during initial database filtering. |
| | Articles with a structure that is too general or lacks specific details. |
| | Studies focused on medicine, unrelated to technological navigation systems. |
| | Articles that do not address technologies for human navigation. |
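The same article is often indexed by Scopus, Web of Science and Dimensions. For this reason, PRISMA flow diagrams report duplicate removal before records are screened against the criteria above. The sketch below shows one minimal way to deduplicate. It assumes each exported record carries a `title` and an optional `doi`; these field names and the helper functions are hypothetical. Two records are treated as duplicates when either their DOIs (compared case-insensitively) or their normalized titles coincide.

```python
import re


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace runs into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each record across all exported databases."""
    seen: set[str] = set()
    unique: list[dict] = []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add("doi:" + doi)
        if keys & seen:
            continue  # same DOI or same normalized title already retrieved
        seen |= keys
        unique.append(rec)
    return unique
```

Matching on normalized titles catches records exported without a DOI. Titles that differ in wording would still need a manual check.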

| Citation | Main Topic | Technology Used | Smartphone Integration | Does the System Have an Experimental Phase? |
|---|---|---|---|---|
| [22] | Usability and UX Evaluation of BlindRouteVision App | Android app, high-precision GPS, ultrasonic sensor | Smartphone app for navigation, emergency alerts | Yes, with 30 visually impaired users in a pilot study |
| [23] | Ultrasonic Navigation Assistance | Ultrasonic transceivers, water sensor, GPS, Bluetooth | Android phone with Bluetooth voice feedback | Yes, in both obstacle and fixed-route modes |
| [24] | Assistive Technology for Education | Computer and smartphone interfaces | Smartphone apps for user experience improvement | No experimental phase reported |
| [25] | IoT-Based Quality of Life Enhancement for VI People | Arduino UNO, ultrasonic and proximity sensors | Android app with IoT integration | Yes, tested for efficiency with IoT and apps |
| [26] | EchoSee for Real-Time 3D Environment Navigation | 3D scanning, spatialized audio, real-time updates | Mobile device generating 3D soundscapes | Yes, feasibility study with user testing |
| [27] | Smart Stick for Obstacle Detection | Ultrasonic sensors, GSM, GPS, Arduino | Not directly smartphone-integrated; sends GSM notifications | No direct experimental phase mentioned |
| [4] | Navigation App Using NTRIP Protocol | GNSS, RTCM corrections, Android Studio | Screen reader and early obstacle alerts | Yes, tested using NSSDA for precision comparison |
| [28] | 5G Edge Computing for Object Detection in Wearables (VIS4ION) | High-res cameras, 5G networks, wearable device | Smartphone-connected processing via 5G | Yes, simulation-based study with navigation routes |
| [29] | Smart Blind Walking Stick | Ultrasonic sensor, GPS, audio feedback | Integration through live GPS location | No experimental phase detailed |
| [30] | GPS and Wearable Tactile Display for Navigation | Smartphone GPS, tactile feedback device in shoes | GPS-based positioning with haptic instructions | Yes, with two user experiments |
| [18] | Intelligent Blind Guidance System (IBGS) | WiFi, cloud database, speech recognition (ConvT-T) | Not reliant on smartphones; cloud-connected | Yes, with outdoor and speech recognition tests |
| [31] | IoT-Based Blind Smart Stick | Ultrasonic sensors, SPO2 sensor, Node MCU | GPS-enabled location alerts with user-defined audio input | No detailed experimental phase |
| [17] | Unfolding Space Glove for Spatial Exploration | Haptic feedback, sensory substitution | Not reliant on smartphones | Yes, with structured training sessions and testing |
| [21] | Training Framework for Assistive App Acceptance | Interviews, training methodologies | Training-focused smartphone app development | No formal experimental results |
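Reference [4] compares positioning precision using the NSSDA (the FGDC National Standard for Spatial Data Accuracy). The sketch below works through that metric. It computes the horizontal accuracy at the 95% confidence level, 1.7308 × RMSE_r, where RMSE_r = √(RMSE_x² + RMSE_y²). The inputs are the easting and northing errors of the tested positions (for example, NTRIP-corrected fixes) measured against independent check points. The sketch assumes RMSE_x ≈ RMSE_y, the case for which the 1.7308 factor is defined. The function names and example values are hypothetical and do not reproduce the data of [4].

```python
import math


def rmse(errors: list[float]) -> float:
    """Root-mean-square of coordinate errors (tested minus check-point value, in metres)."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def nssda_horizontal_accuracy(dx: list[float], dy: list[float]) -> float:
    """Horizontal accuracy at 95% confidence following the NSSDA formula.

    Assumes RMSE_x and RMSE_y are approximately equal, the case for which
    Accuracy_r = 1.7308 * RMSE_r is defined.
    """
    rmse_r = math.sqrt(rmse(dx) ** 2 + rmse(dy) ** 2)
    return 1.7308 * rmse_r
```

For example, easting errors of [0.3, -0.4] m and northing errors of [0.4, -0.3] m give RMSE_r = 0.5 m. The resulting horizontal accuracy is about 0.865 m.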

| Citation | Main Topic | Technology Used | Type of Haptic System | Does the System Have an Experimental Phase? |
|---|---|---|---|---|
| [32] | Programmable tactile feedback system for blindness assistance | Triboelectric nanogenerator (TENG), self-excited electrostatic actuator (SEEA) | Vibrotactile system with programmable matrix for navigation and Braille assistance. | Yes, evaluated with various applications including Braille and haptic navigation systems. |
| [33] | A white cane modified with ultrasonic detectors | Ultrasonic sensors integrated into a white cane | Vibrotactile and auditory feedback for obstacle detection at head and waist levels. | Yes, tested with 10 blindfolded participants across three obstacle stations. |
| [34] | Hand-held haptic navigation devices for actual walking | GPS for outdoor and various tracking systems for indoor navigation | Portable vibrotactile devices for indoor and outdoor navigation. | Yes, high success rates reported, though training was limited. |
| [35] | Navigating the unseen: Ultrasonic technology for blind navigation | Ultrasonic sensors, microcontroller, and buzzer | Vibrotactile and auditory feedback for obstacle detection and object localization. | Yes, demonstrated through scenarios including cane localization and obstacle avoidance. |
| [1] | Smart Stick for Visually Impaired on Streets Using Arduino UNO | Arduino UNO, ultrasonic sensors, buzzer | Vibrotactile and auditory system providing artificial vision and obstacle alerts. | Yes, focused on real-time obstacle detection and navigation for urban scenarios. |
| [36] | Smart Glasses for Blind People | Ultrasonic sensors, Raspberry Pi, vibrators, and audio output | Mixed audio and vibrotactile feedback for navigation and obstacle avoidance. | Yes, tested with real-world obstacle avoidance tasks. |
| [37] | Navigation system for blind people using LiDAR | LiDAR, microcontroller, vibration motor, and buzzer | Vibrotactile and auditory system for real-time obstacle detection. | Yes, verified through field tests for usability and efficiency. |
| [38] | Smart Glove for Blind | Ultrasonic sensors, GPS, color sensor, and vibration motor | Glove-based vibrotactile feedback with multiple sensors for enhanced mobility. | Yes, tested for obstacle detection, color identification, and fall alerts. |
| [39] | Real-time wearable navigation support system for BVIP | Fuzzy logic decision-making, Raspberry Pi 4, and haptic voice interface | Vibrotactile signals for safe navigation, enhanced with human safety evaluations. | Yes, validated through tests in different environments with visually impaired participants. |
| [40] | Thermal navigation for blind people | GPS, infrared sensor array, and vibration bracelet | Vibrotactile feedback for thermal-based navigation and orientation. | Yes, tested for precise indoor navigation and orientation. |
| [41] | Sonar Glass | Sonar sensors, log-polar mapping, and SIFT algorithm | Vibrotactile and auditory alerts for spatial awareness using synchronized visual-like perception. | Yes, simulations and real-world tests conducted in indoor and outdoor environments. |
| [42] | Smart Shoe for visually impaired | Piezoelectric materials, obstacle sensors, and SOS button | Vibrotactile feedback integrated into footwear for enhanced safety and navigation. | Yes, tested for obstacle detection and emergency assistance. |
| [43] | AI-equipped assistive devices for blind individuals | Internet of Things (IoT), AI-based object detection, and GPS | Vibrotactile and auditory feedback for integrated obstacle detection and navigation. | Yes, tested for cost-efficiency and multi-functional use. |
| [20] | Audio–tactile map for visually impaired individuals | Interactive audio–tactile mapping system | Haptic map with mixed vibrotactile and audio feedback for cognitive spatial mapping. | Yes, evaluated with 14 participants for spatial recall and navigation learning. |
| [44] | Enhanced YOLOv8 with OpenCV for object detection | YOLOv8 object detection, OpenCV for distance measurement | Haptic-enhanced detection for precise obstacle awareness and feedback. | Yes, achieved a 3.15% error rate with efficient response times. |
| [45] | Smart cane with EMF detector for the blind | Ultrasonic sensors, EMF detector, and vibration motor | Vibrotactile and auditory feedback for static and dynamic obstacle detection. | Yes, validated with optimized operational results. |
| [46] | Vibrotactile belt for challenging situations | Magnetic north-based vibrotactile orientation | Belt with vibrotactile stimuli for spatial awareness and emotional comfort. | Yes, tested over 7 weeks with blind users in various outdoor situations. |
| [15] | Assistive locomotion device for the visually impaired | Smart walker with haptic controllers | Vibrotactile guidance through physical interaction with the walker. | Yes, tested with blindfolded and sighted participants. |
| [13] | Wearable haptics for indoor navigation | RGB-D camera, haptic portable device, and force feedback | Haptic wearable devices for force-guided indoor navigation. | Yes, tested with visually impaired and blindfolded participants. |
| [19] | Cognitive systems for visually impaired individuals | RGB-D camera, embedded computer, and haptic modules | Wearable vibrotactile system with modular task support. | Yes, pilot study evaluated navigation and object recognition tasks. |
| [14] | Open-source haptic navigation system | Ultrasound-based haptic feedback with vibration patterns | Low-cost vibrotactile system for accessible navigation. | Yes, effective in real-world navigation tasks. |
| [47] | Cobot tactile display for virtual diagram exploration | Omnidirectional robot base, optical mouse sensor, and admittance control | Haptic robotic assistance for tactile diagram exploration. | Yes, found intuitive and useful by visually impaired participants. |
| [48] | Pedestrian lane detection for vision-impaired individuals | Deep learning methods for lane detection | Vibrotactile and visual feedback for safe pedestrian lane guidance. | Yes, evaluated on a large dataset of pedestrian lane images. |
| [49] | VISTA™: Touch aid for sightless navigation | Ultrasonic range detection and vibrotactile feedback | Vibrotactile belt providing proportional distance feedback. | Yes, validated in real-world navigation scenarios. |
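Several of the haptic systems listed above ([1], [33], [35], [45], [49]) estimate obstacle distance from ultrasonic echo timing and convey it through vibration or sound. Reference [49], for instance, reports proportional distance feedback. The sketch below shows the underlying arithmetic under stated assumptions. Distance is half the round-trip echo time multiplied by the speed of sound, taken as 343 m/s (its approximate value in air at 20 °C). Vibration intensity then rises linearly as the obstacle approaches. The near/far thresholds and the linear mapping are hypothetical choices, not parameters reported by any of the reviewed devices.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 °C; varies with temperature


def echo_to_distance(echo_time_s: float) -> float:
    """Convert a round-trip ultrasonic echo time into a one-way distance in metres."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def vibration_intensity(distance_m: float, near_m: float = 0.3, far_m: float = 2.0) -> float:
    """Map obstacle distance to a motor duty cycle in [0, 1]; closer means stronger.

    near_m and far_m are hypothetical tuning thresholds, not values from any reviewed device.
    """
    if distance_m >= far_m:
        return 0.0  # nothing within range: motor off
    if distance_m <= near_m:
        return 1.0  # imminent obstacle: full intensity
    return (far_m - distance_m) / (far_m - near_m)  # linear ramp between the thresholds
```

For example, a 10 ms echo corresponds to 343 × 0.01 / 2 = 1.715 m. With the default thresholds, that distance yields a duty cycle of roughly 0.17.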

| Citation | Main Topic | Technology Used | Algorithm/Method Used | Does the System Have an Experimental Phase? |
|---|---|---|---|---|
| [50] | SDGs and Visual Impairment | Smartphone cameras, laser rangefinders, Wi-Fi, BLE beacons, smart lighting, barometric sensors, and magnetic fields. | Obstacle detection | No |
| [51] | Bli-Fi Navigation | Li-Fi, LEDs as transmitters, LDRs as receivers, microcontrollers. | Obstacle detection | No |
| [52] | Smart Cane for the Elderly and Visually Impaired | Ultrasonic sensors, IoT, vibration motor, buzzer. | Obstacle detection | Yes, tested with elderly users to detect obstacles and provide feedback. |
| [53] | Sound-Based Prototype for Localization | Ultrasonic sensor networks, GPS, digital compass. | Dijkstra’s algorithm, LSTM | Yes, 45 indoor and outdoor tests with navigation accuracy measurements. |
| [54] | Safe Navigation with Arduino and 1Sheeld | Arduino Uno, 1Sheeld, ultrasonic and water sensors, buzzer, vibration motor, mini solar panel. | Navigation through obstacle detection | Yes, tested with volunteers to verify navigation safety and system accuracy. |
| [55] | Trinal Optics | Arduino Uno, camera, Bluetooth headsets, ultrasonic sensors, voice assistant. | Object detection and environment analysis | No |
| [16] | StereoPilot | RGB-D camera, spatial audio representation (SAR). | Fitts’ Law experiments | Yes, compared spatial navigation performance with SAR and other auditory feedback methods. |
| [56] | Systematic Review of SLAM | SLAM, various sensors (not specified), machine learning. | Various SLAM-based approaches | No |
| [57] | Smart Glove for Navigation | Ultrasonic sensors, GPS, vibrators, microcontrollers (PIC). | Environmental data processing | No |
| [2] | Web Content Accessibility Guidelines (WCAG) for Eye Tracking Navigation | Eye-tracking technology, web navigation. | Quadtree-based goal selection, hierarchical menu representation | Yes, tested with 30 participants to evaluate usability and performance. |
| [12] | Indoor Navigation for the Blind | Obstacle avoidance algorithms, spatiotemporal trajectory prediction model. | Trajectory prediction | Yes, experimental evaluation of the trajectory model in a multi-floor mall. |
| [3] | Evaluation of ETAs with Parrot-VR and Audomni | Audomni, Parrot-VR, various sensors. | VR-based evaluation of navigation tools | Yes, 19 BLV participants tested navigation in large urban environments using Audomni. |
| [5] | LidSonic V2.0 | LidSonic V2.0, LiDAR with servo motor, ultrasonic sensor, Arduino Uno. | Deep learning for object classification | Yes, prototype tested in real-world environments to detect and classify obstacles. |
| [58] | Voice Navigation System | Computer vision, deep learning, GPS, smartphone-based platform. | Real-time obstacle recognition and classification | Yes, experimental tests with users in various environments to guide navigation. |
| [59] | Navigation Aid Using Spatialized Sound | Inertial sensors, GPS, camera, deep learning. | Neural networks for spatialized navigation | Yes, real-time navigation tasks using 3D spatialized sound and obstacle feedback. |
| [60] | Smart Cane with IoT and Image Processing | Camera, ultrasonic sensor, image processing, IoT. | Obstacle detection through image analysis | No |
| [61] | Architecture for Autonomous Navigation for the Visually Impaired | ADAS, supercomputing, artificial intelligence. | Advanced Driver Assistance for spatial navigation | No |
| [62] | EyeCane | EyeCane, 2-meter obstacle detection sensor. | Obstacle detection and navigation | Yes, users performed obstacle detection tasks in a real-world environment. |
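Reference [53] combines Dijkstra's algorithm with an LSTM network. The route-planning part can be illustrated with a standard Dijkstra search over a weighted graph of waypoints, as in the sketch below. The sketch covers only the shortest-path step and omits the LSTM-based sound localization that [53] pairs it with. The waypoint names and edge lengths in the usage example are hypothetical.

```python
import heapq


def dijkstra(graph: dict[str, dict[str, float]], start: str, goal: str) -> tuple[float, list[str]]:
    """Shortest path on a weighted waypoint graph (edge weights in metres).

    Returns (total_distance, path). Raises ValueError if goal is unreachable.
    """
    dist = {start: 0.0}
    prev: dict[str, str] = {}
    queue = [(0.0, start)]
    visited: set[str] = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry; a shorter path was already settled
        visited.add(node)
        if node == goal:
            break
        for neighbour, weight in graph.get(node, {}).items():
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                prev[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if goal not in visited:
        raise ValueError(f"{goal!r} is not reachable from {start!r}")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])  # walk predecessors back to the start
    return dist[goal], path[::-1]
```

As a usage example, take a hypothetical indoor map with edges entrance→corridor (10 m), entrance→lobby (4 m), lobby→corridor (3 m), lobby→exit (12 m) and corridor→exit (5 m). The search returns the 12 m route entrance, lobby, corridor, exit. A binary heap keeps each extraction logarithmic in the queue size, which suits small waypoint graphs on embedded hardware.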

| Citation | Main Topic | Technology Used | Algorithm/Method Used | Does the System Have an Experimental Phase? |
|---|---|---|---|---|
| [63] | Blind Navigation with YOLO-SLAM | YOLO for object detection, SLAM for simultaneous localization and mapping, human–machine interface. | Combination of YOLO for object detection and SLAM for localization and mapping. | Yes, evaluated recent studies, focusing on test sessions, sensor optimization, and interface improvements. |
| [64] | Collaborative Route Planning for Guide Dog Robot | Low-speed guide dog robot (GDR), energy optimization, virtual–real collaborative scenarios. | Energy consumption integral equation to optimize navigation efficiency. | Yes, experiments showed energy savings of 6.91% in straight motion and 10.60% in curved motion. |
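The YOLO-SLAM approaches covered in [63] pair an object detector with a SLAM back end. One common way to couple the two is assumed here as a general pattern, not as the method of any specific study in [63]. Feature points that fall inside bounding boxes of potentially moving objects are discarded before pose estimation, so that pedestrians or vehicles do not corrupt the map. The sketch below shows only that filtering step. The `Detection` type and the set of dynamic classes are hypothetical; in practice the detections would come from a YOLO model and the keypoints from the SLAM front end.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """One detector output: class label and box corners in pixel coordinates."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float


# Hypothetical set of classes treated as potentially moving.
DYNAMIC_CLASSES = frozenset({"person", "car", "bicycle", "dog"})


def _inside(point: tuple[float, float], box: Detection) -> bool:
    """True if the point lies within the box (edges inclusive)."""
    x, y = point
    return box.x1 <= x <= box.x2 and box.y1 <= y <= box.y2


def filter_static_keypoints(
    keypoints: list[tuple[float, float]],
    detections: list[Detection],
    dynamic: frozenset[str] = DYNAMIC_CLASSES,
) -> list[tuple[float, float]]:
    """Keep only keypoints outside every box whose label is in `dynamic`."""
    moving = [d for d in detections if d.label in dynamic]
    return [p for p in keypoints if not any(_inside(p, box) for box in moving)]
```

Boxes of static classes (for example, a door) leave their keypoints untouched, so landmarks useful for localization are preserved.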

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Casanova, E.; Guffanti, D.; Hidalgo, L. Technological Advancements in Human Navigation for the Visually Impaired: A Systematic Review. Sensors 2025, 25, 2213. https://doi.org/10.3390/s25072213